I’ll keep this month’s comment brief. I’m no travel expert, but it would appear that the demise of Thomas Cook is largely due to the fact that the business failed to modernise quickly enough. By not taking sufficient notice of the digital world, the travel company joins a growing list of household names, in the UK at least, that have all failed to grasp the need to embrace technology and its potential.

Much of the content of Digitalisation World might seem a world away from the business that you run right now. However, as I’ve stated in the past, very few companies in very few industries can afford to continue doing things the way that they have always done.

At the most basic level, you might be lucky enough to have a unique business model that means your customers can’t go anywhere else. Don’t be complacent, though. You could have a rival quicker than you think – thanks to the ability to start a company almost overnight and buy in the technology required to run it.

Then again, however loyal your customers, if they find your lack of technology (a poor website, continued reliance on post rather than email etc.) a major obstacle, they could well look around for another supplier.

Cost is often cited as the major obstacle to modernising/digitalising a business. Thanks to the rise of the cloud and managed services, this should no longer be an issue. In any case, the cost of doing nothing demands you pay the ultimate price: extinction.

All a bit gloomy, maybe, but the good news is that you can revolutionise your business and your wider industry by selective use of digital technology. It’s not too late.

Whilst few would dispute that technology contributes to making our lives easier, new global research from Lenovo has found that a large proportion of those surveyed feel that technology has the power to make us more understanding, tolerant, charitable and open-minded.

The survey polled more than 15,000 people from the US, Mexico, Brazil, China, India, Japan, UK, Germany, France and Italy and revealed that eight out of ten respondents (84 per cent) in the UK think that technology plays a large role in their day-to-day lives. Meanwhile, 78 per cent of the UK said that technology improves their lives.

Although we might presume the main way in which technology impacts our lives is by helping us achieve our daily tasks – such as emails, streaming and so on – Lenovo’s research has found that in many cases, technology is actually having a strong impact on our human values. For example, 30 per cent of UK respondents believe smart devices such as PCs, tablets, smartphones and VR are making people more open-minded and tolerant.

It is likely that the rise of social media and video sharing platforms is key to this, allowing people to connect with those from other countries and cultures, gaining an insight into their lives through social posts, blogs, vlogs, video and other content. The window into the world of other people’s lives through technology is also a key contributor for the 66 per cent of UK respondents who believe technology makes us more ‘curious’.

The study also found that nearly a third (30 per cent) of people in the UK are of the opinion that technology makes us more charitable. This is likely the result of the increased prevalence of charitable ‘giving’ platforms which allow people to make donations online, as well as enabling people to share their charitable endeavours via social platforms.

Psychologist, Jocelyn Brewer, comments:

“Technology is often blamed for eroding empathy, the innate ability most humans are born with to identify and understand each other’s emotions and experiences. However, when we harness technological advances for positive purposes, it can help promote richer experiences that develop empathetic concern and leverage people into action on causes that matter to them.

“Developing the ability to imagine and connect with the experiences and perspectives of a broad range of diverse people can help build mental wealth and foster deeper, more meaningful relationships. Technology can be used to supplement our connections, not necessarily serve as the basis of them.”

Respondents to the survey also believe emerging technologies could have an even more significant impact on our values in the future. Indeed, more than half (58 per cent) in the UK say that VR has the potential to cultivate empathy and understanding, and help people be more emotionally connected with people across the globe by allowing them to see the world through their eyes.

The global nature of this survey has meant that interesting differences can be found around the world. In particular, the developing economies of the BRIC countries (Brazil, Russia, India and China) appear to have the most faith in the ability of technology to positively impact our values. For example, 90 per cent of respondents in China think VR has the ability to increase human understanding, whilst that figure is 88 per cent in India and 81 per cent in Brazil. By comparison, just 51 per cent believe this to be true in Japan, a country that is rather technologically advanced, whilst the lowest figure is in Germany (48 per cent).

One respondent in the study remarked: “VR would give those who think the world is perfect an insight into other people’s world and make them realise the pain and suffering some people have to endure in their daily lives.”

There are of course two sides to every coin, and some respondents do feel that technology can instead divide people. For example, 62 per cent of UK respondents in the survey agreed that tech makes people more judgmental of others, especially through the lens of social media.

The ‘immediate’ nature of the internet also has some side-effects. For example, 48 per cent of UK respondents believe that technology is making us less ‘patient’, whilst 65 per cent said it can make us ‘lazy’ and 54 per cent said that it can make us ‘selfish’.

As a global technology company, Lenovo believes it is important to lead by example with such innovative uses of technology for good, actively promoting qualities like empathy and tolerance as products are developed for widespread adoption.

Dilip Bhatia, Vice President of User and Customer Experience, Lenovo, comments:

“In many ways society is becoming more polarised as many of us are surrounded by those who share similar views and opinions. This can reinforce both rightly and wrongly held views and lead to living in somewhat of an echo chamber. We believe smarter technology has the power to intelligently transform people’s world view, putting them in the shoes of others and allowing them to experience life through their eyes – leading to a greater understanding of the world and the human experience.

“This could be through using smart technology to connect people from diverse backgrounds or allowing you to literally see their world in VR. The more open we are to diversity in the world around us, the more empathetic we can be as human beings – and that is a good thing.”

Companies of all sizes have trouble competing and disrupting in a software-driven world due to a persistent shortage of developers, as well as IT funding that falls short of supporting strategic goals.

Mendix has published key findings on the readiness of companies to successfully implement digital transformation initiatives. The survey shines a spotlight on the rise of “shadow IT” — when business teams pursue tech solutions on their own, independently of IT — in an effort to deal with the persistent shortage of software developers and inadequate budgeting to meaningfully advance a digital agenda. The research, conducted by California-based Dimensional Research, surveyed more than a thousand business and IT stakeholders in medium and large companies in the United States and Europe.

Clear communication between business and IT is often highlighted by leading analysts as imperative for successfully launching innovative technology solutions and user experiences. The survey, “Digital Disconnect: A Study of Business and IT Alignment in 2019,” did find a few strong areas of agreement, including a broad foundation of organizational respect for IT. There was near-unanimous agreement (95%) by all respondents that IT’s involvement in strategic initiatives is beneficial. Additionally, 70% of those surveyed give IT high marks as a value driver versus cost center for the enterprise; for example, 66% believe in IT’s potential to enable rapid, competitive responses to market changes and to increase employee productivity (65%). However, survey respondents identified significant hurdles to realizing that potential.

Where Business-IT Communication Breaks Down

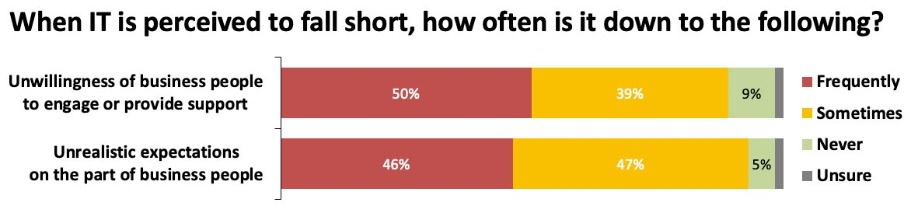

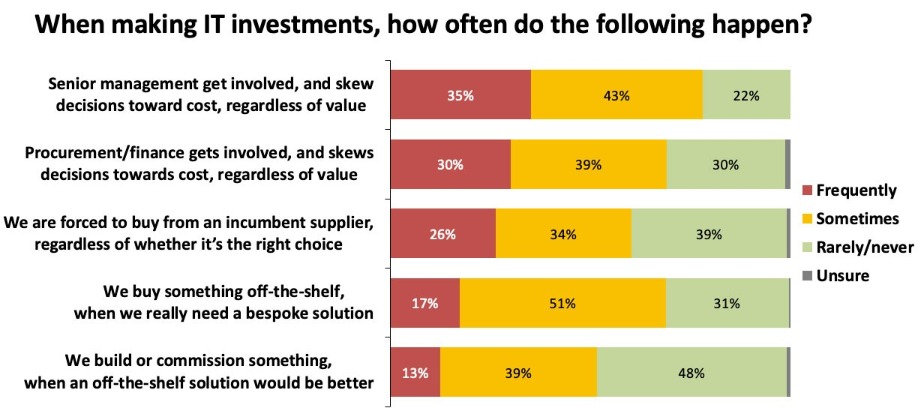

The alignment between business and IT fades quickly when the topic turns to budgeting and operational priorities. In the survey, 50% of IT professionals believe IT budgets are insufficient to deliver solutions at scale. Conversely, 68% of business respondents do not see any challenges with the level of funding. Almost three in five IT respondents (59%) cite the need to support legacy systems as a drain on resources and an impediment to innovation. Nearly half of IT (49%) reports difficulties in achieving stakeholder agreement on important business initiatives.

This impasse is clearly reflected in the shared belief that a huge pipeline exists of unmet requests for IT solutions (77% IT and 71% business), requiring many months or even years for completion. Nearly two-thirds of business stakeholders (61%) say that less than half of their requests rise to the surface for IT implementation. Not surprisingly, both sides strongly agree (78%) that business efforts to “go it alone” or undertake projects in the realm of “shadow IT” — without official IT support or even knowledge — have greatly increased over the last five years.

“While business and IT users agree on the urgent need to advance the enterprise’s digital agenda, they are worlds apart on how to eliminate the backlog and take proactive steps to develop critically important solutions at scale,” says Jon Scolamiero, manager, architecture & governance, at Mendix. “For many years, IT has been budgeted and managed like a cost center, leading to a tremendous increase in shadow IT. Business leaders say they want IT’s help in achieving strategic goals and ROI, but only a small percentage (32%) grasp that current IT budgets are insufficient and inflexible. This disconnect is difficult for enterprises to surface, yet resolving it is a necessary first step in changing the calculus. The research findings point out the barriers that are impeding successful, cross-functional collaboration.”

Drilling Down into the Disconnect

The survey data highlights a number of nuanced differences in perceptions. For example, nearly all respondents (96%) agree there is business impact when IT doesn’t deliver on solutions. But IT staff feels that impact largely as frustration and a loss of staff morale. Business staff, on the other hand, believes these delays lead to missed key strategic targets and revenue reductions, loss of competitive advantage, and other ROI impacts.

“We also see a disconnect on the wish list of digitalization priorities from business, centering on innovations and new technologies that impact ROI,” says Scolamiero. “However, IT feels its hands are tied because the lion’s share of its resources go to ongoing support issues, including having to support legacy systems, back-end integrations, maintenance, and governance.”

The study’s sharpest contrast is around business users’ preferred solution of undertaking digital projects without IT’s help — going it alone as “citizen developers” or “shadow IT.” Business respondents overwhelmingly (69%) believe such action to be mostly good, while an almost identical number of IT professionals (70%) believe it to be mostly bad.

IT is strongly united in its fear that business professionals tackling application development on their own will create new support issues that IT will have to clean up (78%), and open core systems to security vulnerabilities (73%).

To underscore their concerns, 91% of IT respondents say it is dangerous to build applications without understanding the guardrails of governance, data security, and infrastructure compatibility. However, there is virtually unanimous agreement (99%) by all respondents that capabilities such as easy integrations, fast deployment, easy collaboration — which are inherent in low-code application development — would benefit their organizations.

Scolamiero said, “IT can be expensive, and there’s a reason that people believe most IT projects fail. IT involves the reasoned application of science, logic, and math, and requires a high level of expertise to get things done right. This level of expertise is viewed as extremely costly. But when the business side sees IT as a revenue driver instead of a cost center, and empowers IT to come up with creative ideas for solving problems, important and positive changes ensue. These changes drive an ROI that can dwarf the perceived cost. Low-code, when done right, solves much of the shadow IT problem by enabling and empowering intelligent and motivated people on the IT side and the business side to come together and make valuable revenue-generating solutions faster than ever before.”

Mending the Gap with Low-Code Platforms

While business experts are still not widely familiar with the term “low-code,” business users who are familiar with low-code’s capabilities have aggressive plans for adoption, with 90% citing plans to adopt low-code. Interestingly, more business executives (55%) than IT executives (38%) believe their organization would achieve significant business value by adopting a low-code framework.

“Enabling meaningful collaboration to bridge the gap between business and IT is one of the main reasons we founded Mendix and developed our unified low-code and no-code platform,” says Derek Roos, Mendix CEO. “Years of budgeting and managing IT as a cost center has led to a crisis in business. Business and IT leaders must redefine teams and empower new makers to drive value and creativity and become top-performing organizations. Every iteration of Mendix’s application development platform has advanced the tools needed for successful collaboration between business users and IT experts, enabling greater participation by all members of the team to produce creative, value-delivering, transformative solutions that advance their enterprise’s digital agenda. Because that’s absolutely required to succeed in the marketplace today. This research identifies for leaders the pain points and communication gaps they must address in order to break out of the bonds of legacy systems and thinking, and chart a meaningful digital future.”

Despite rising optimism, 86 percent of organizations prevented from pursuing new digital services or transformation projects.

Despite rising optimism in digital transformation, the vast majority of organizations are still suffering failure, delays or scaled-back expectations from digital transformation projects, research from Couchbase has found. In the survey of 450 heads of digital transformation in enterprises across the U.S., U.K., France and Germany, 73 percent of organizations have made “significant” or better improvements to the end-user experience in their organization through digital innovation. Twenty-two percent say they have transformed or completely “revolutionized” end-user experience, representing a marked increase over Couchbase’s 2017 survey (15 percent). However, organizations are still experiencing issues meeting their digital ambitions, including:

At the same time, transformation is not slowing down. Ninety-one percent of respondents said that disruption in their industry has accelerated over the last 12 months, 40 percent “rapidly.” And organizations plan to spend $30 million on digital transformation projects in the next 12 months, compared to $27 million in the previous 12.

“Digital transformation has reached an inflection point,” said Matt Cain, CEO, Couchbase. “At this pivotal time, it is critical for enterprises to overcome the challenges that have been holding them back for years. Organizations that put the right people and technology in place, and truly drive their digital transformation initiatives, will benefit from market advantages and business returns.”

Organizations are well aware of the risks of failing to digitally innovate. Forty-six percent fear becoming less relevant in the market if they do not innovate, while 42 percent say they will lose IT staff to more innovative competitors, in turn making it harder to innovate in the future. As a result, organizations are pressing forward with projects, perhaps recklessly. Seventy-one percent agree that businesses are fixated on the promise of digital transformation, to the extent that IT teams risk working on projects that may not actually deliver tangible benefits.

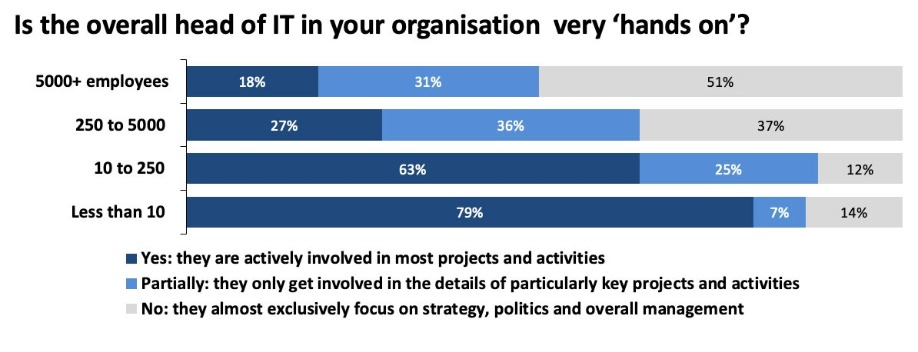

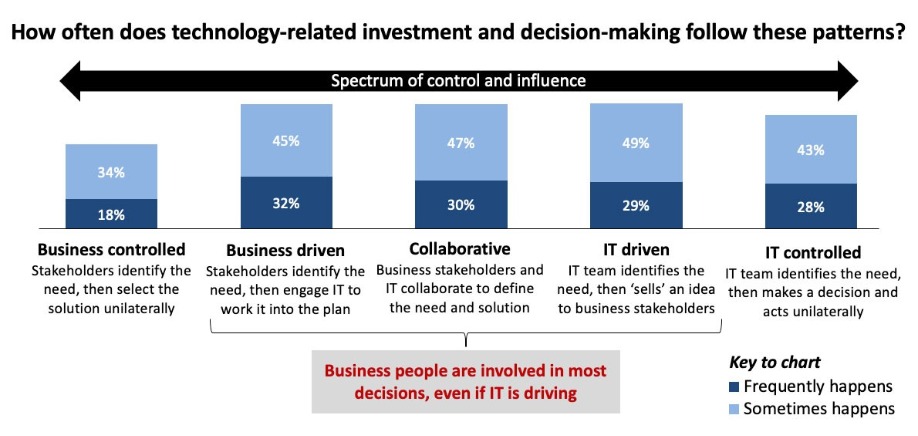

One key to delivering tangible benefits is ensuring that digital transformation strategy is set with the needs of the business in mind. The majority of organizations (52 percent) still have digital transformation strategy set by the IT team, meaning the C-suite is not guiding projects and strategy that should have a major impact on the business. At the same time, the primary drivers for transformation are almost all reactive – responding to competitors’ advances, pressure from customers for new services and responding to changes in legislation were each reported by 23 percent of respondents. Conversely, original ideas from within the business only drive eight percent of organizations’ transformations.

“In order for companies to succeed with their digital projects and overcome the inherent challenges with these new approaches, they have to attack the projects in a comprehensive and systemic way,” continued Matt Cain. “Transformation is ultimately achieved when the right combination of organizational commitment and next-generation technology is driven across the entire enterprise as a true strategic imperative, not left in the sole hands of the IT team. The best technology will then help companies enable the customer outcomes they desire.”

Digital transformation, migration to the enterprise cloud and increasing customer demands have tech leaders looking to AI for the answer.

Software intelligence company, Dynatrace, has published the findings of an independent global survey of 800 CIOs, which reveals that digital transformation, migration to the enterprise cloud and increasing customer demands are creating a surge in IT complexity and the associated costs of managing it. Technical leaders around the world are concerned about the effect this has on IT performance and ultimately, their business. The findings are published in the 2019 global report “Top Challenges for CIOs in a Software-Driven, Hybrid, Multi-Cloud World”.

CIO responses captured in the 2019 research indicate that lost revenue (49%) and reputational damage (52%) are among the biggest concerns as businesses transform into software businesses and move to the cloud. And, as CIOs struggle to prevent these concerns from becoming reality, IT teams now spend 33% of their time dealing with digital performance problems, costing businesses an average of $3.3 million annually, compared to $2.5 million in 2018; an increase of 34%. To combat this, 88% of CIOs say AI will be critical to IT’s ability to master increasing complexity.

Findings of the 2019 global report include:

Software is transforming every business

Every company, in every industry, is transforming into a software business. The way enterprises interact with customers, assure quality experiences and optimize revenues is driven by applications and the hybrid, multi-cloud environments underpinning them. Success or failure comes down to the software supporting these efforts. The pressure of this “run-the-business” software performing properly has significant ramifications for IT professionals.

Enterprise “cloud-first” strategies increase complexity

Underpinning this software revolution is the enterprise cloud, allowing companies to innovate faster and better meet the needs of customers. The enterprise cloud is dynamic, hybrid, multi-cloud, and web-scale, containing hundreds of technologies, millions of lines of code and billions of dependencies. However, this transformation isn’t simply about lifting and shifting apps to the cloud; it’s a fundamental shift in how applications are built, deployed and operated.

The age of the customer increases pressure to deliver great experiences

We are squarely in the age of the customer, where high quality service is paramount due to the ease with which customers will try competitive offerings and share their experiences instantly via social media.

The research highlights the extent to which businesses are struggling to combat IT complexity that threatens the customer experience, with CIOs revealing:

IT teams are feeling the strain

Digital transformation, migration to the enterprise cloud and increasing customer demands are collectively putting pressure on IT teams, who continue to feel the strain, especially as it relates to performance. Revealing the extent of this dilemma, key findings of the research also show that:

CIOs look to AI for the answer

Exploring the potential antidote to these challenges, the research further reveals that 88% of CIOs say that they believe AI will be critical to IT’s ability to master increasing complexity.

“As complexity grows beyond IT teams’ capabilities, the economics of throwing more manpower at the problem no longer works,” said Bernd Greifeneder, founder and CTO, Dynatrace. “Organizations need a radically different AI approach. That’s why we reinvented from the ground up, creating an all-in-one platform with a deterministic AI at the core, which provides true causation, not just correlation. And, unlike machine learning approaches, Dynatrace® does not require a lengthy learning period. The Dynatrace® Software Intelligence Platform automatically discovers and captures high fidelity data from applications, containers, services, processes, and infrastructure. It then automatically maps the billions of dependencies and interconnections in these complex environments. Its AI engine, Davis™, analyzes this data and its dependencies in real-time to instantly provide precise answers – not just more data on glass. It’s this level of automation and intelligence that overcomes the challenges presented by the enterprise cloud and enables teams to develop better software faster, automate operations and deliver better business results.”

Harvard Business Review Analytic Services, in association with Cloudera, reveals enterprise IT mandate to protect data and minimize risk at odds with business needs for speed and agility.

Cloudera has published the findings of a global research report, created in association with Harvard Business Review Analytic Services, which examines the trends, pain points, and opportunities surrounding Enterprise IT challenges in data analytics and management in a multicloud environment.

The report, “Critical Success Factors to Achieve a Better Enterprise Data Strategy in Multicloud Environment,” is based on insights from over 150 global business executives representing a wide range of industries, with almost half being organizations with $1 billion or more in revenue. The study found that the majority (69%) of organizations recognize a comprehensive data strategy as a requirement for meeting business objectives, but only 35% believe that their current analytics and data management capabilities are sufficient in doing so.

“This report reveals specific obstacles modern enterprises must overcome to realize the true potential of their data and validates the need for a new approach to enterprise data strategy,” said Arun Murthy, Chief Product Officer of Cloudera. “Cloudera is committed to helping our customers with the data analytics their people need to quickly and easily derive insights from data anywhere their business operates, with built-in enterprise security and governance and powered by the innovation of 100% open source. We call that an enterprise data cloud.”

The future is hybrid and multicloud

The report confirms that the future of analytics and Enterprise IT data management is multicloud, with businesses managing data across private, hybrid and public cloud environments — but there is still progress to be made. Over half (54%) of the organizations surveyed have plans to increase the amount of data they store in the public cloud over the next year, but the majority still manage much of their data on-premises. As enterprises create cloud strategies that are customized to their needs, the ability to securely access data no matter where it resides and to seamlessly migrate workloads has never been more imperative.

Functions are diversifying

Most organizations are leveraging their data to support traditional functions like business intelligence (80%) and data warehousing (70%). Newer functions are less common but on the rise, with half of the organizations surveyed planning to implement artificial intelligence and machine learning in the next three years. To fully extract the business value embedded in data, an enterprise data strategy must support a full buffet of functions, from real-time analytics at the Edge to artificial intelligence.

A deeper understanding of regulatory compliance and security

The introduction of new regulations and increasingly complex processes around governance means that every single person in an organization must understand the importance of keeping data secure and compliant. Ten percent of those surveyed did not know whether they were required to secure data within a regulatory framework, a small gap in knowledge that could result in serious risk.

An open foundation

A specific approach to open source is essential for an effective enterprise data strategy, but half of those surveyed agreed that current cloud service providers are unable to meet their need for access to open-source software. Open compute, open storage, and open integration are baseline capabilities that a comprehensive enterprise data platform must provide.

More than two thirds (69%) of business decision-makers in senior management positions say that AI speeds up the resolution of customer queries, according to a recent survey commissioned by software provider, Enghouse Interactive.

Further underlining support for AI-driven customer service, more than a quarter (27%) of the sample claim that implementation of new technologies such as robots and automation would be the single thing that would help them serve customers the most during 2019, while a further 27% said better use and business-wide visibility of customer data (again often driven by intelligent technology) would be the top thing.

Jeremy Payne, International VP Marketing, Enghouse Interactive, said: “We are living in a digital age and the use of AI and robotics are on the increase in the customer service environment. This ongoing drive to automation has the potential to bring far-reaching benefits to organisations and the customers they serve. Yet, in making the journey to digital, businesses must guard against leaving their own employees behind and failing to allay their concerns about the digital future and their role in it.”

Three-quarters (75%) of respondents said they have had a positive experience of technological change within a contact centre or customer service environment, while just 6% said their experience had been negative. Moreover, 85% of the sample agree with the statement: ‘my employer gives me the tools and technologies to deliver the best possible service to customers’.

However, the survey results do highlight some scepticism among customer service teams as to the motives their business has for implementing solutions. When asked to name what they thought their company was hoping to do by moving to robotics and automation technology, more than a third of respondents (37%) thought ‘cutting costs’ was among their company’s top two priorities and 34% said ‘deliver a competitive edge’. ‘Empower employees and add value to their jobs’ was lower down the list (referenced by 31%).

“That’s an area organisations will need to look at,” said Payne. “After all, the implementation of bots and AI typically helps them deal more efficiently with routine enquiries, thereby relieving contact centre employees from having to deal with standard questioning all the time and adding greater value to the interactions with which they are involved.”

Some enterprises lack understanding and mature data infrastructures to make Artificial Intelligence (AI) a success.

Mindtree has revealed the findings of its recent survey of AI usage across enterprises. The study found most businesses are well underway with AI experimentation, but many still lack an understanding of the use cases to deliver business value and the data infrastructures for making AI a success across the enterprise on a sustainable basis.

The survey, which gathered data from 650 global IT leaders from key business markets, found 85% of organisations have a data strategy and 77% have implemented some AI-related technologies in the workplace, with 31% already seeing major business value from their AI efforts. Organisations are achieving their vision to industrialize AI, but many can do more to gain real business value.

Greater focus is needed on use cases that deliver business value

When implementing an AI strategy, there’s a pressing need for use cases to demonstrate business value. The survey revealed that 16% of enterprises globally focus on a pain point and then define a use case, with smaller organisations (13%) being less likely to focus on the business impact compared with their larger counterparts (18%). With all the pressure to harness AI, many organisations are experimenting but not all have found the formula to deploy at scale and add significant value.

The survey found there are certain business functions such as sales (35%) and marketing (32%) gaining the most value from AI, as it accelerates the delivery of improved customer experiences. The most popular technologies deployed by global organisations are machine learning (34%), chatbots (34%), and robotics (28%).

Success with AI means merging rapid experimentation, organisational agility and skills

AI is already delivering measurable business benefits, but the majority of enterprises have yet to find a formula for repeatable success. An important requirement for enterprises to successfully start their AI journey is to experiment with different use cases and technologies with agile and rapid innovation methodologies. Just over a quarter (29%) of the enterprises surveyed said they are agile enough to rapidly experiment with AI, with large organisations (39%) having an edge compared to their smaller counterparts at 19%.

Progressive enterprises are spending 25% of their IT budget on digital innovations with a focus on use case definition, experimentation, and operationalization for scale. Businesses also understand the need to upgrade and refresh their skills to capitalize on AI. 44% say they will hire the best talent available externally, 30% have partnerships with academia, and 22% run hackathons to solve data challenges and identify potential candidates.

The AI-led enterprise – it’s all about data

Finding the right use cases and building alignment and support for AI initiatives are critical, but data is the make-or-break variable when it comes to scaling AI across the enterprise. Businesses need to modernize their data infrastructure, architectures and systems, along with an overarching data strategy and robust data governance processes. The survey revealed more than half (51%) of large enterprises say they don’t fully understand the data infrastructure needed to implement AI at scale, while 6 out of 10 organisations believe their data infrastructure and architectures are immature and not well positioned to deliver business value.

“The potential of AI to disrupt, transform and rebuild businesses is clearly felt in the C-Suite, even if it is not yet fully understood,” said Suman Nambiar, Head of Strategy, Partners and Offering for Digital at Mindtree. “Business and technology leaders are increasingly expected to prove business value, unlock the power of their data, and define their AI strategy and roadmap. To thrive in the Digital Age, businesses must be agile and unafraid of failure. They must also constantly refine their understanding of how AI will give them a competitive edge and deliver real and measurable business value to maximize their investment in these disruptive and powerful technologies.”

New survey reveals only 12% of today’s enterprises have fully transitioned to modern tools.

A new IT Operations Management survey has found that enterprises depending exclusively on legacy monitoring tools are falling behind in business agility and operational efficiency. The commissioned study, conducted by Forrester Consulting, uncovered that organizations with disjointed and outdated IT offerings that utilize legacy tools and strategies are trapped in a perpetual survival mode and unable to innovate. Alarmingly, only 12% of respondents report having fully transitioned to modern monitoring tools, with 37% still relying exclusively on legacy tools, keeping them stuck in a digital deadlock.

Respondents revealed that legacy toolsets remain prevalent in their IT ecosystem, further relaying the negative implications of legacy IT vendors and tools that undermine enterprises’ service resilience, fast mean-time-to-resolution, and the ability to automate at scale. A full 86% said they are still using at least one legacy tool, which is actively exposing their business to negative consequences, primarily high costs of IT support, service degradation, and increased security risks.

Top findings from the ITOM study include:

The Opportunity Ahead

Mature enterprises are attempting to match their digital-native counterparts by adopting cloud-based architectures, but continue to fall short, as many modern tools are unable to manage outdated legacy systems. To address IT visibility and remediation challenges, over two-thirds (68%) of companies surveyed have plans to invest in AIOps-enabled monitoring solutions over the next 12 months. These solutions apply AI/ML-driven analytics to business and operations data to make correlations and provide real-time insights that allow IT operations teams to resolve incidents faster–and avoid incidents altogether. IT decision-makers reported that the major benefits of AIOps solutions include increased operational efficiency and business agility, as well as reduced cost of downtime.

“Enterprises that operate on dozens of legacy vendor tools are siloing the view of their IT environment, leading to prolonged service disruptions, issues with incident resolution, and ultimately, providing for a poor customer experience. These ‘survival mode enterprises’ have little chance of getting ahead of the agility curve and are in real danger of being left behind,” said Dave Link, founder and CEO of ScienceLogic. “As the adoption of newer technologies like containers and microservices continues to rise, forward-thinking companies will drive extensive automation with artificial intelligence and machine learning algorithms. This study shows that companies will need to adopt innovations like AIOps to ensure a successful modernization and automation journey.”

“These enterprises are starting to take the leap to modernize their IT environment, however, survival will require a cultural shift in how people and organizations understand the flow and impact of clean data as part of a broader strategy towards automation,” said Link. “The reality is that those who have not started are already behind, but it is not too late to future-proof your IT systems and teams so they may focus on innovative advancements to propel your enterprise to market success.”

Veracode has released results of a global survey on software vulnerability disclosure, “Exploring Coordinated Disclosure,” that examines the attitudes, policies and expectations associated with how organisations and external security researchers work together when vulnerabilities are identified.

The study reveals that, today, software companies and security researchers are near universal in their belief that disclosing vulnerabilities to improve software security is good for everyone. The report found 90% of respondents confirmed disclosing vulnerabilities “publicly serves a broader purpose of improving how software is developed, used and fixed.” This represents an inflection point in the software industry – recognition that unaddressed vulnerabilities create enormous risk of negative consequences to business interests, consumers, and even global economic stability.

“The alignment that the study reveals is very positive,” said Veracode Chief Technology Officer and co-founder Chris Wysopal. “The challenge, however, is that vulnerability disclosure policies are wildly inconsistent. If researchers are unsure how to proceed when they find a vulnerability it leaves organisations exposed to security threats giving criminals a chance to exploit these vulnerabilities. Today, we have both tools and processes to find and reduce bugs in software during the development process. But even with these tools, new vulnerabilities are found every day. A strong disclosure policy is a necessary part of an organisation’s security strategy and allows researchers to work with an organisation to reduce its exposure. A good vulnerability disclosure policy will have established procedures to work with outside security researchers, set expectations on fix timelines and outcomes, and test for defects and fix software before it is shipped.”

Key findings of the research include:

·Unsolicited vulnerability disclosures happen regularly. The report found more than one-third of companies received an unsolicited vulnerability disclosure report in the past 12 months, representing an opportunity to work together with the reporting party to fix the vulnerability and then disclose it, thus improving overall security. For those organisations that received an unsolicited vulnerability report, 90% of vulnerabilities were disclosed in a coordinated fashion between security researchers and the organisation. This is evidence of a significant shift in mindset that working collaboratively is the most effective approach toward transparency and improved security.

·Security researchers are motivated by the greater good. The study shows security researchers are generally reasonable and motivated by a desire to improve security for the greater good. Fifty-seven percent of researchers expect to be told when a vulnerability is fixed, 47% expect regular updates on the correction, and 37% expect to validate the fix. Only 18% of respondents expect to be paid and just 16% expect recognition for their finding.

·Organisations will collaborate to solve issues. Promisingly, three in four companies report having an established method for receiving a report from a security researcher and 71% of developers feel that security researchers should be able to do unsolicited testing. This may seem counterintuitive since developers would be most impacted in having their workflow interrupted to make an emergency fix, yet the data show developers view coordinated disclosure as part of their secure development process, expect to have their work tested outside the organisation, and are ready to respond to problems that are identified.

·Security researchers’ expectations for remediation time aren’t always realistic. After reporting a vulnerability, the data shows 65% of security researchers expect a fix in less than 60 days. That timeline might be too aggressive and even unrealistic when weighed against the most recent Veracode State of Software Security Volume 9 report, which found more than 70% of all flaws remain one month after discovery and nearly 55% remain three months after discovery. The research shows that organisations are dedicated to fixing and responsibly disclosing flaws and they must be given reasonable time to do so.

·Bug bounties aren’t a panacea. Bug bounty programs get the lion’s share of attention related to disclosure but the lure of a payday actually is not driving most disclosures, according to the research. Nearly half (47%) of organisations have implemented bug bounty programs but just 19% of vulnerability reports come via these programs. While they can be part of an overarching security strategy, bug bounties often prove inefficient and expensive. Given that the majority of security researchers are primarily motivated by seeing a vulnerability fixed rather than money, organisations should consider focusing their finite resources on secure software development that finds vulnerabilities within the software development lifecycle.

Half of professionals also admitted concerns around their current cloud providers.

An overwhelming number of cybersecurity professionals (89%) have expressed concerns about the third-party managed service providers (MSPs) they partner with being hacked, according to new research from the Neustar International Security Council.

While most organisations reported working with an average of two to three MSPs, less than a quarter (24%) admitted to feeling very confident in the safety barriers they have in place to prevent third-party contractors from violating security protocols. Along with this concern, security professionals expressed a desire to switch cloud providers, with over half (53%) claiming they would if they could.

These threat levels were also apparent in Neustar’s International Cyber Benchmarks Index, which NISC began mapping in 2017. The most recent index revealed an 18-point increase over the two-year period.

Aside from third-party threats, security professionals ranked distributed denial of service (DDoS) attacks as their greatest concern (22%), closely followed by system compromise (20%) and ransomware (19%). Insider threat remained bottom of the list, with 29% seeing it as the least concerning.

In light of continued fears around DDoS attacks, organisations outlined their most recent security focus to be on increasing their ability to respond to DDoS threats, with 57% admitting to having been on the receiving end of an attack in the past. In the most recent data set collected and analysed by NISC, enterprises were most likely to take between 60 seconds and 5 minutes to initiate DDoS mitigation.

“Regardless of size or sector, every organisation relies on third-party service providers to support and enable their digital transformation efforts. Whether it’s a business intelligence tool, cloud platform or automation solution, the number of MSPs businesses work with is only set to increase, as enterprises continue to chase agility and find new ways to attract customers,” said Rodney Joffe, Chairman of NISC, Senior Vice President, Security CTO and Fellow at Neustar. “However, by multiplying the number of digital links to an organisation, you also increase the potential for risk, with malicious actors finding alternative ways to infiltrate your networks.”

“While businesses should adopt their own, always-on approach to security, it’s essential that they are also questioning the security of their whole digital network, including the third parties they work with. Missed connections or weak links can cause lasting damage to an organisation’s bottom line, leaving no room for error,” Joffe added.

The smart money is very interested in software at present, and this is driven by a booming SaaS sector; it means more to think about for the managed services sector as it wrestles with the rate of mergers and acquisitions among its suppliers. It also pushes consolidation among the MSPs themselves as they find themselves alongside similar businesses using the same limited resources and think M&A is a solution.

Overall, the M&A landscape in enterprise software is thriving, with volumes, value and valuations currently peaking as target firms continue to receive interest from established trade buyers and investors. This comes from new research by Hampleton Partners, experts in tech M&A, who will be speaking at the Managed Services Summit North on October 30 in Manchester.

In its latest analysis of global Mergers & Acquisitions activity in Enterprise Software, Hampleton Partners reports the highest deal count ever recorded, with 651 transactions inked over the first half of 2019. Multiples were also sky-high; the trailing 30-month median EV/EBITDA multiple peaked at 17.5x, inching its way up from last year’s levels.

And this boom in demand shows no signs of slowing, it says. Miro Parizek, founder and principal partner, Hampleton Partners, says: “In spite of any economic slowdown and public market volatility, companies are sprinting towards SaaS and software targets to cover all areas of digitalisation and digital transformation. Financial buyers, with access to cheap debt, are eager to capitalise on this drive for digital transformation.”

“Although we expect the sector’s record-breaking metrics to ease away from their current peak, the sector is set to remain stable and strong over the long-term. The need to improve business insights and resource allocation will remain imperative for every business and be spurred by further advances in machine learning and artificial intelligence for SaaS and cloud-based software.”

All of which is good news for managed service providers, although it does mean more technology-based changes to be considered at a strategic level. The fear of being left behind which has driven processes of change by customers in recent years is now finding its way to their suppliers and the MSPs, who find themselves in a race to keep up with advances.

And it does not mean that the MSPs themselves can rely on M&A action to drive their businesses forward or give them access to the limited resources they need. As a veteran of nine M&A deals, MSP IT Lab CEO Peter Sweetbaum told the London Managed Services Summit on September 18: “M&A is not a proxy for a poor sales and marketing strategy; if you cannot grow organically and don’t have that sales and marketing strategy fueling your growth, you need to rethink. M&A is additive, not a solution. We’ve seen buy-and-builds in this space that have been crushed by the weight of debt – there was no vision and they paid the price.”

And don’t underestimate the costs of growth, he added: “What we are seeing and what we all need to realise, is that vendors are changing the model. We [at IT Lab] have to keep up with Azure, Dynamics and Office365. Our clients do not have the skills and capacity to keep up with the likes of Microsoft and its monthly, weekly, sometimes daily, release cycles. At IT Lab we have to keep up with that - it is a challenge for us and we have to invest to keep up.”

Not every MSP can do that, he concluded. The rapid change in the enterprise software market and the application of large sums to its valuations may create even more pressure on MSP strategies.

Peter Sweetbaum will be presenting again at the Managed Services Summit North, in Manchester on October 30, as will Jonathan Simnett from Hampleton Partners.

Worldwide shipments of devices — PCs, tablets and mobile phones — will decline 3.7% in 2019, according to the latest forecast from Gartner, Inc.

Gartner estimates there are more than 5 billion mobile phones used around the world. After years of growth, the worldwide smartphone market has reached a tipping point. Sales of smartphones will decline by 3.2% in 2019, which would be the worst decline the category has seen (see Table 1).

“This is due to consumers holding onto their phones longer, given the limited attraction of new technology,” said Ranjit Atwal, senior research director at Gartner.

The lifetimes of premium phones — for example, Android and iOS phones — continue to extend through 2019. Their quality and technology features have improved significantly and have reached a level today where users see high value in their device beyond a two-year time frame.

Consumers have reached a threshold for new technology and applications: “Unless the devices provide significant new utility, efficiency or experiences, users do not necessarily want to upgrade their phones,” said Mr. Atwal.

Table 1

Worldwide Device Shipments by Device Type, 2018-2021 (Millions of Units)

| Device Type | 2018 | 2019 | 2020 | 2021 |
| --- | --- | --- | --- | --- |
| Traditional PCs (Desk-Based and Notebook) | 195.3 | 188.4 | 177.9 | 169.2 |
| Ultramobiles (Premium) | 64.4 | 67.3 | 71.8 | 76.4 |
| Total PC Market | 259.7 | 255.7 | 249.7 | 245.6 |
| Ultramobiles (Basic and Utility) | 149.6 | 140.9 | 137.3 | 135.7 |
| Computing Device Market | 409.3 | 396.6 | 387.0 | 381.3 |
| Mobile Phones | 1,813.4 | 1,743.1 | 1,768.8 | 1,775.5 |
| Total Device Market | 2,222.7 | 2,139.7 | 2,155.8 | 2,156.8 |

Source: Gartner (September 2019)
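The year-over-year changes implied by Table 1 can be rechecked with a few lines of arithmetic. The following is a minimal Python sketch and not part of Gartner's forecast: the names `SHIPMENTS` and `yoy_change` are illustrative assumptions, and the figures are copied from three rows of the table.

```python
# Shipment figures (millions of units) copied from Table 1.
SHIPMENTS = {
    "Total PC Market": {2018: 259.7, 2019: 255.7, 2020: 249.7, 2021: 245.6},
    "Mobile Phones": {2018: 1813.4, 2019: 1743.1, 2020: 1768.8, 2021: 1775.5},
    "Total Device Market": {2018: 2222.7, 2019: 2139.7, 2020: 2155.8, 2021: 2156.8},
}


def yoy_change(series, year):
    """Return the percentage change from the previous year to `year`."""
    previous, current = series[year - 1], series[year]
    return (current - previous) / previous * 100


# Print the 2019 change for each row, rounded to one decimal place.
for name, series in SHIPMENTS.items():
    print(f"{name}: {yoy_change(series, 2019):+.1f}% in 2019")
```

Run against these rows, the total device market works out to the 3.7% decline quoted in the article. The Mobile Phones row covers all mobile phones, so its change need not match the separate 3.2% figure given for smartphones.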

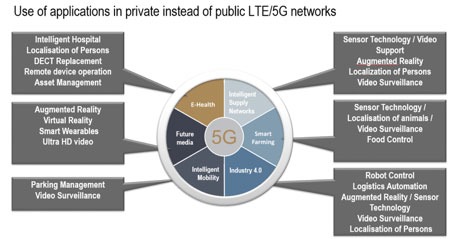

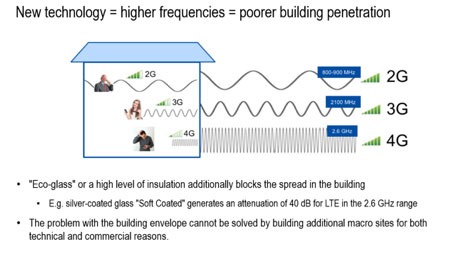

5G-Capable Phones Continue to Be On The Rise

The share of 5G-capable phones will increase from 10% in 2020 to 56% by 2023.

“The major players in the mobile phone market will look for 5G connectivity technology to boost replacements of existing 4G phones,” said Mr. Atwal. “Still, less than half of communications service providers (CSPs) globally will have launched a commercial 5G network in the next five years.

“More than a dozen service providers have launched commercial 5G services in a handful of markets so far,” said Mr. Atwal. “To ensure smartphone sales pick up again, mobile providers are starting to emphasize 5G performance features, like faster speeds, improved network availability and enhanced security. As soon as providers better align their early performance claims for 5G with concrete plans, we expect to see 5G phones account for more than half of phone sales in 2023. As a result of the impact of 5G, the smartphone market is expected to return to growth at 2.9% in 2020.”

5G will impact more than phones. The recent Gartner IoT forecast showed that the 5G endpoint installed base will grow 14-fold between 2020 and 2023, from 3.5 million units to 48.6 million units. By 2028, the installed base will reach 324.1 million units, although 5G will make up only 2.1% of the overall Internet of Things (IoT) endpoints.

“The inclusion of 5G technology may even be incorporated into premium ultramobile devices in 2020 to make them more marketable to customers,” said Mr. Atwal.

PC Device Trends in 2019

While worldwide PC shipments totaled 63 million units and grew 1.5% in the second quarter of 2019, unclear external economic issues still cast uncertainty over PC demand this year. PC shipments are estimated to total 256 million units in 2019, a 1.5% decline from 2018.

The consumer PC market will decline by 9.8% in 2019, reducing its share of the total market to less than 40%. The collective increase in consumer PC lifetimes will result in 10 million fewer device replacements through 2023. With the Windows 10 migration peaking, business PCs will decline by 3.9% in 2020 after three years of growth.

“There is no doubt the PC landscape is changing,” said Mr. Atwal. “The consumer PC market requires high-value products that can meet specific consumer tasks, such as gaming. Likewise, PC vendors are having to cope with uncertainty from potential tariffs and Brexit disruptions. Ultimately, they need to change their business models to one based on annual service income, rather than the peaks and troughs of capital spending.”

The blockchain effect – five to ten years away?

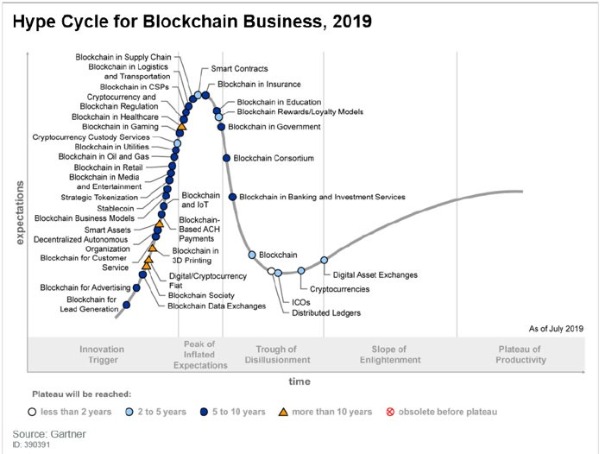

The 2019 Gartner, Inc. Hype Cycle for Blockchain Business shows that the business impact of blockchain will be transformational across most industries within five to 10 years.

“Even though they are still uncertain of the impact blockchain will have on their businesses, 60% of CIOs in the Gartner 2019 CIO Agenda Survey said that they expected some level of adoption of blockchain technologies in the next three years,” said David Furlonger, distinguished research vice-president at Gartner. “However, the existing digital infrastructure of organizations and the lack of clear blockchain governance are limiting CIOs from getting full value with blockchain.”

The Hype Cycle provides an overview of how blockchain capabilities are evolving from a business perspective and maturity across different industries (see Figure 1).

Figure 1. Gartner’s Hype Cycle for Blockchain Business, 2019

Source: Gartner (September 2019)

Key Industries

Banking and investment services industries continue to experience significant levels of interest from innovators seeking to improve decades-old operations and processes. However, only 7.6% of respondents to the CIO Survey suggested that blockchain is a game-changing technology. That said, nearly 18% of banking and investment services CIOs said they have adopted or will adopt some form of blockchain technology within the next 12 months and nearly another 15% within two years.

“We see blockchain in several key areas in banking and investment services, primarily focused on permissioned ledgers,” said Mr. Furlonger. “We also expect continued developments in the creation and acceptance of digital tokens. However, considerable work needs to be completed in nontechnology-related activities such as standards, regulatory frameworks and organization structures for blockchain capabilities to reach the Plateau of Productivity – the point at which mainstream adoption takes off – in this industry.”

Blockchain in gaming. In the fast-growing esports industry, blockchain natives are launching solutions that allow users to create their own tokens to support the design of competitions as well as to enable trading of virtual goods. The tokens provide gamers with more control over their in-game items, making them more portable across gaming platforms.

“High user volumes and rapid innovation make the gaming sector a testing ground for innovative application of blockchain. It is the perfect place to monitor how users push the adaptability of the most critical components of blockchain: decentralization and tokenization,” said Christophe Uzureau, research vice president at Gartner. “Gaming startups provide appealing alternatives to the ecosystem approaches of Amazon, Google or Apple, and serve as a model for companies in other industries to develop digital strategies.”

In retail, blockchain is being considered for “track and trace” services, counterfeit prevention, inventory management and auditing, any of which could be used to improve product quality or food safety, for example. Whilst these examples have value, the real impact of blockchain for the retail industry will depend on supporting new ideas, such as using blockchain to transform or augment loyalty programs. Once it has been combined with the Internet of Things (IoT) and artificial intelligence (AI), blockchain has the potential to change retail business models forever, impacting both data and monetary flows and avoiding centralization of market power.

As a result, Gartner believes that blockchain has the potential to transform business models across all industries — but these opportunities demand that enterprises adopt complete blockchain ecosystems. Without tokenization and decentralization, most industries will not see real business value.

Self-service isn’t serving the customer

Only 9% of customers report resolving their issues completely via self-service, according to Gartner, Inc. Many companies create more channels for customer service, but this creates complex customer resolution journeys, as customers switch frequently between channels.

“The idea behind providing customers with more channels in order to give them what they ‘want’ and in an attempt to offer more choice in their service experience sounds like a great idea, but in fact, it has unintentionally made things worse for customers,” said Rick DeLisi, vice president in Gartner’s Customer Service & Support practice. “This approach of ‘more and better channels’ isn’t living up to the promise of reduced live call volume and is only leading to more complex and costly customer interactions to manage. That becomes a ‘lose-lose’ for customers and the companies that are trying to serve them.”

When customers can’t solve their issues via self-service, they resort to live customer service calls, which drives up operating costs. Gartner’s 2019 Customer Service and Support Leader poll identified that live channels such as phone, live chat and email cost an average of $8.01 per contact, while self-service channels such as company-run websites and mobile apps cost about $0.10 per contact.
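To give a sense of what that cost gap means in practice, here is a minimal Python sketch of a blended cost calculation. It is an illustration only: the function name `blended_cost`, the 100,000-contact volume and the 50% scenario are hypothetical, and the model assumes each contact is handled in exactly one channel, which understates the cost when a customer tries self-service first and then calls anyway. Only the two per-contact costs come from the Gartner poll.

```python
LIVE_COST = 8.01  # average cost per live contact (phone, live chat, email), per the poll
SELF_COST = 0.10  # approximate cost per self-service contact, per the poll


def blended_cost(contacts, self_service_share):
    """Total cost when `self_service_share` of contacts need no live agent.

    Simplifying assumption: every contact is handled in exactly one channel.
    """
    self_service = contacts * self_service_share
    live = contacts - self_service
    return self_service * SELF_COST + live * LIVE_COST


# Hypothetical volume of 100,000 contacts at two self-service resolution rates.
for share in (0.09, 0.50):
    print(f"{share:.0%} self-service: ${blended_cost(100_000, share):,.0f}")
```

Under these assumptions, every contact that moves from a live channel to self-service saves $7.91, which is why the share of issues resolved entirely in self-service matters more than the number of channels on offer.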

“As customer behavior in self-service continues to evolve, we are learning that most people have become used to the idea of using more than one channel (e.g., phone, chat, text/SMS, online videos, review sites, in-store visits) during the resolution of one problem or issue with a given company,” added Mr. DeLisi. “The bad news is that it is definitely forcing a higher cost-to-serve for companies with no significant increase in the overall quality of the customer experience.”

In a survey of 8,398 customers, the top five preferred channels for issue resolution were phone (44%), chat (17%), email (15%), company website (12%) and search engine (4%). Gartner research shows that neither customer effort nor customer satisfaction differs statistically between channels, and customer loyalty is not affected by use or availability of a preferred channel.

Yet, many service organizations continue to add more and more channels even though access to these channels does not produce the expected customer experience benefits. The addition of digital channels often results in varying levels of maturity and an inconsistent experience. To make matters worse, live call volume and associated costs for issue resolution aren’t decreasing.

Given the varying costs associated with each channel and customers’ willingness to use any channels available to them, service organizations must rethink their overall service strategy to move toward a more self-service dominant approach. This requires a thoughtful approach to channel offerings — one where channels can no longer be “bolted on” after the fact. Instead, customer service and support leaders must consider the following four imperatives to move to a more self-service dominant approach:

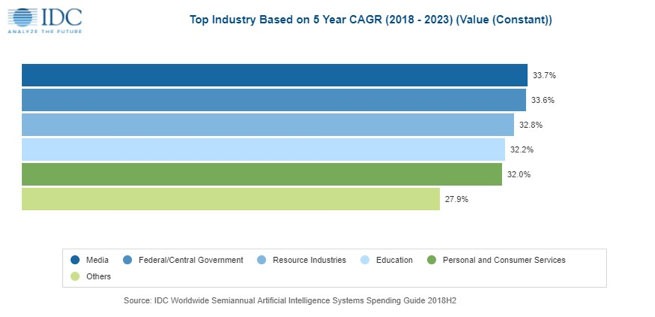

Global spending on artificial intelligence (AI) systems is expected to maintain its strong growth trajectory as businesses continue to invest in projects that utilize the capabilities of AI software and platforms. According to the recently updated International Data Corporation (IDC) Worldwide Artificial Intelligence Systems Spending Guide, spending on AI systems will reach $97.9 billion in 2023, more than two and one half times the $37.5 billion that will be spent in 2019. The compound annual growth rate (CAGR) for the 2018-2023 forecast period will be 28.4%.
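For readers who want to check growth figures like these, a compound annual growth rate is the constant yearly rate that turns a starting value into an ending value over a given number of years. The following is a minimal Python sketch, not IDC's methodology; the function name `cagr` is an illustrative assumption, and the two spending figures are the ones quoted above.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


# IDC figures quoted above, in billions of US dollars.
spend_2019 = 37.5
spend_2023 = 97.9

# Four annual steps separate 2019 and 2023.
implied = cagr(spend_2019, spend_2023, years=4)
print(f"Implied 2019-2023 CAGR: {implied:.1%}")
```

The rate implied by the 2019 and 2023 figures comes out at roughly 27%, slightly below the quoted 28.4%. The two are not directly comparable, because the quoted rate covers the 2018-2023 period and so also includes the growth from 2018 to 2019.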

"The AI market continues to grow at a steady rate in 2019 and we expect this momentum to carry forward," said David Schubmehl, research director, Cognitive/Artificial Intelligence Systems at IDC. "The use of artificial intelligence and machine learning (ML) is occurring in a wide range of solutions and applications from ERP and manufacturing software to content management, collaboration, and user productivity. Artificial intelligence and machine learning are top of mind for most organizations today, and IDC expects that AI will be the disrupting influence changing entire industries over the next decade."

Spending on AI systems will be led by the retail and banking industries, each of which will invest more than $5 billion in 2019. Nearly half of the retail spending will go toward automated customer service agents and expert shopping advisors & product recommendation systems. The banking industry will focus its investments on automated threat intelligence and prevention systems and fraud analysis and investigation. Other industries that will make significant investments in AI systems throughout the forecast include discrete manufacturing, process manufacturing, healthcare, and professional services. The fastest spending growth will come from the media industry and federal/central governments with five-year CAGRs of 33.7% and 33.6% respectively.

"Artificial Intelligence (AI) has moved well beyond prototyping and into the phase of execution and implementation," said Marianne D'Aquila, research manager, IDC Customer Insights & Analysis. "Strategic decision makers across all industries are now grappling with the question of how to effectively proceed with their AI journey. Some have been more successful than others, as evidenced by banking, retail, manufacturing, healthcare, and professional services firms making up more than half of the AI spend. Despite the learning curve, IDC sees higher than average five-year annual compounded growth in government, media, telecommunications, and personal and consumer services."

Investments in AI systems continue to be driven by a wide range of use cases. The three largest use cases – automated customer service agents, automated threat intelligence and prevention systems, and sales process recommendation and automation – will deliver 25% of all spending in 2019. The next six use cases will provide an additional 35% of overall spending this year. The use cases that will see the fastest spending growth over the 2018-2023 forecast period are automated human resources (43.3% CAGR) and pharmaceutical research and development (36.7% CAGR). However, eight other use cases will have spending growth with five-year CAGRs greater than 30%.

The largest share of technology spending in 2019 will go toward services, primarily IT services, as firms seek outside expertise to design and implement their AI projects. Hardware spending will be somewhat larger than software spending in 2019 as firms build out their AI infrastructure, but purchases of AI software and AI software platforms will overtake hardware by the end of the forecast period with software spending seeing a 36.7% CAGR.

On a geographic basis, the United States will deliver more than 50% of all AI spending throughout the forecast, led by the retail and banking industries. Western Europe will be the second largest geographic region, led by banking and discrete manufacturing. China will be the third largest region for AI spending with retail, state/local government, and professional services vying for the top position. The strongest spending growth over the five-year forecast will be in Japan (45.3% CAGR) and China (44.9% CAGR).

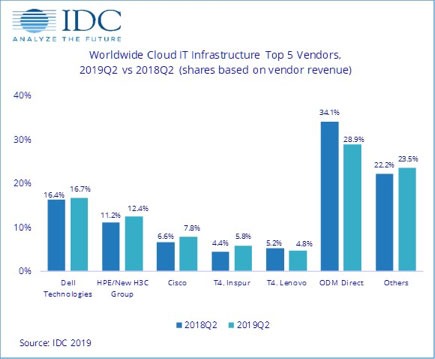

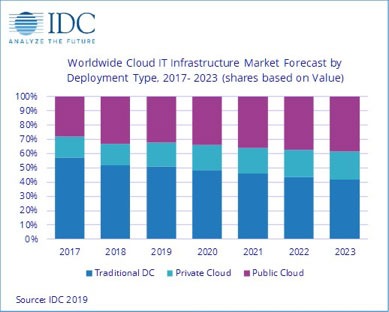

Cloud IT infrastructure revenues decline

According to the International Data Corporation (IDC) Worldwide Quarterly Cloud IT Infrastructure Tracker, vendor revenue from sales of IT infrastructure products (server, enterprise storage, and Ethernet switch) for cloud environments, including public and private cloud, declined 10.2% year over year in the second quarter of 2019 (2Q19), reaching $14.1 billion. IDC also lowered its forecast for total spending on cloud IT infrastructure in 2019 to $63.6 billion, down 4.9% from last quarter's forecast and changing from expected growth to a year-over-year decline of 2.1%.

Vendor revenue from hardware infrastructure sales to public cloud environments in 2Q19 was down 0.9% compared to the previous quarter (1Q19) and down 15.1% year over year to $9.4 billion. This segment of the market continues to be highly impacted by demand from a handful of hyperscale service providers, whose spending on IT infrastructure tends to have visible up and down swings. After a strong performance in 2018, IDC expects the public cloud IT infrastructure segment to cool down in 2019 with spend dropping to $42.0 billion, a 6.7% decrease from 2018. Although it will continue to account for most of the spending on cloud IT environments, its share will decrease from 69.4% in 2018 to 66.1% in 2019. In contrast, spending on private cloud IT infrastructure has shown more stable growth since IDC started tracking sales of IT infrastructure products in various deployment environments. In the second quarter of 2019, vendor revenues from private cloud environments increased 1.5% year over year, reaching $4.6 billion. IDC expects spending in this segment to grow 8.4% year over year in 2019.

Overall, the IT infrastructure industry is at a crossing point in terms of product sales to cloud vs. traditional IT environments. In 3Q18, vendor revenues from cloud IT environments climbed over the 50% mark for the first time but have remained below this important tipping point since then. In 2Q19, cloud IT environments accounted for 48.4% of vendor revenues. For the full year 2019, spending on cloud IT infrastructure will remain just below the 50% mark at 49.0%. Longer-term, however, IDC expects that spending on cloud IT infrastructure will grow steadily and will sustainably exceed the level of spending on traditional IT infrastructure in 2020 and beyond.

Among the three technology segments in cloud IT environments, spending on Ethernet switches is forecast to grow in 2019, while spending on compute platforms and storage platforms is expected to decline. Ethernet switches are expected to grow by 13.1%, while spending on storage platforms will decline by 6.8% and spending on compute platforms by 2.4%. Compute will remain the largest category of spending on cloud IT infrastructure at $33.8 billion.

Sales of IT infrastructure products into traditional (non-cloud) IT environments declined 6.6% from a year ago in 2Q19. For the full year 2019, worldwide spending on traditional non-cloud IT infrastructure is expected to decline by 5.8%, as the technology refresh cycle that drove market growth in 2018 winds down this year. By 2023, IDC expects that traditional non-cloud IT infrastructure will only represent 41.8% of total worldwide IT infrastructure spending (down from 52.0% in 2018). This share loss and the growing share of cloud environments in overall spending on IT infrastructure are common across all regions.

Most regions grew their cloud IT infrastructure revenues in 2Q19. Middle East & Africa was the fastest growing at 29.3% year over year, followed by Canada at 15.6% year-over-year growth. Other growing regions in 2Q19 included Central & Eastern Europe (6.5%), Japan (5.9%), and Western Europe (3.1%). Cloud IT infrastructure revenues were down year over year in Asia/Pacific (excluding Japan) (APeJ) by 7.7%, Latin America by 14.2%, China by 6.9%, and the USA by 16.3%.

Top Companies, Worldwide Cloud IT Infrastructure Vendor Revenue, Market Share, and Year-Over-Year Growth, Q2 2019 (Revenues are in Millions)

| Company | 2Q19 Revenue (US$M) | 2Q19 Market Share | 2Q18 Revenue (US$M) | 2Q18 Market Share | 2Q19/2Q18 Revenue Growth |
| --- | --- | --- | --- | --- | --- |
| 1. Dell Technologies | $2,355 | 16.7% | $2,565 | 16.4% | -8.2% |
| 2. HPE/New H3C Group** | $1,749 | 12.4% | $1,748 | 11.2% | 0.1% |
| 3. Cisco | $1,101 | 7.8% | $1,029 | 6.6% | 7.0% |
| 4. Inspur/Inspur Power Systems* *** | $820 | 5.8% | $690 | 4.4% | 18.9% |
| 4. Lenovo* | $670 | 4.8% | $813 | 5.2% | -17.5% |
| ODM Direct | $4,059 | 28.9% | $5,349 | 34.1% | -24.1% |
| Others | $3,307 | 23.5% | $3,473 | 22.2% | -4.8% |
| Total | $14,061 | 100.0% | $15,655 | 100.0% | -10.2% |

Source: IDC's Quarterly Cloud IT Infrastructure Tracker, Q2 2019

Notes:

* IDC declares a statistical tie in the worldwide cloud IT infrastructure market when there is a difference of one percent or less in the vendor revenue shares among two or more vendors.

** Due to the existing joint venture between HPE and the New H3C Group, IDC reports external market share on a global level for HPE as "HPE/New H3C Group" starting from Q2 2016 and going forward.

*** Due to the existing joint venture between IBM and Inspur, IDC will be reporting external market share on a global level for Inspur and Inspur Power Systems as "Inspur/Inspur Power Systems" starting from 3Q 2018.
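The share and growth columns in the table above follow directly from the revenue columns, and the tie rule in the first note is a simple threshold on the difference between two shares. The following is a minimal sketch of that arithmetic, not IDC's methodology: the function names are illustrative, and applying the tie rule to the published, rounded shares is an assumption.

```python
from decimal import Decimal

# 2Q19 and 2Q18 vendor revenue (US$M), copied from the table above.
REVENUE = {
    "Dell Technologies": (2355, 2565),
    "HPE/New H3C Group": (1749, 1748),
    "Cisco": (1101, 1029),
}
TOTAL_2Q19, TOTAL_2Q18 = 14061, 15655


def share_pct(revenue, total):
    """Market share as a percentage, rounded to one decimal place."""
    return round(100 * revenue / total, 1)


def growth_pct(current, previous):
    """Year-over-year growth as a percentage, rounded to one decimal place."""
    return round(100 * (current - previous) / previous, 1)


def is_statistical_tie(share_a, share_b):
    """Tie rule from the first note: shares differ by one point or less.

    Shares are passed as strings (e.g. "5.8") so Decimal compares them exactly.
    Using the published, rounded shares here is an assumption.
    """
    return abs(Decimal(share_a) - Decimal(share_b)) <= Decimal("1.0")


for name, (current, previous) in REVENUE.items():
    print(name, share_pct(current, TOTAL_2Q19), growth_pct(current, previous))

print("Total growth:", growth_pct(TOTAL_2Q19, TOTAL_2Q18))
print("Inspur vs Lenovo tie:", is_statistical_tie("5.8", "4.8"))
```

Because the published revenues are themselves rounded, a figure recomputed this way can differ from the table by a tenth of a percentage point.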

Long-term, IDC expects spending on cloud IT infrastructure to grow at a five-year compound annual growth rate (CAGR) of 6.9%, reaching $90.9 billion in 2023 and accounting for 58.2% of total IT infrastructure spend. Public cloud datacenters will account for 66.0% of this amount, growing at a 5.9% CAGR. Spending on private cloud infrastructure will grow at a CAGR of 9.2%.
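For readers who want to check how a compound annual growth rate relates to the end-point figure, here is a short sketch. It assumes the five-year window runs from 2018 to 2023, which the text does not state explicitly; the function names are illustrative. Under that assumption, the 6.9% CAGR and the $90.9 billion 2023 figure imply a 2018 base of roughly $65 billion, which is in line with the 2019 forecast of $63.6 billion being a 2.1% year-over-year decline.

```python
def implied_base(end_value, rate, years):
    """Starting value that grows to end_value at a constant annual rate."""
    return end_value / (1 + rate) ** years


def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the forecast: $90.9 billion in 2023 at a 6.9% five-year CAGR.
# Assumption: the five-year window runs from 2018 to 2023.
base_2018 = implied_base(90.9, 0.069, 5)
print(f"Implied 2018 base: ${base_2018:.1f} billion")
print(f"Round-trip CAGR: {cagr(base_2018, 90.9, 5):.1%}")
```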

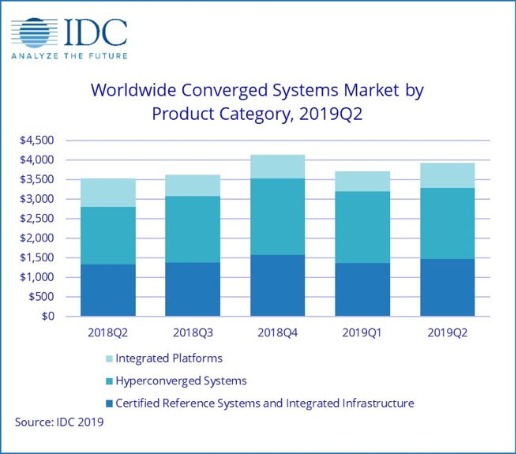

Converged Systems market grows 10.9%

According to the International Data Corporation (IDC) Worldwide Quarterly Converged Systems Tracker, worldwide converged systems market revenue increased 10.9% year over year to $3.9 billion during the second quarter of 2019 (2Q19).

"The value proposition of converged infrastructure solutions has evolved to align with the needs of a hybrid cloud world," said Eric Sheppard, research vice president, Infrastructure Platforms and Technologies at IDC. "Modern converged solutions are driving growth because they allow organizations to leverage standardized, software-defined, and highly automated datacenter infrastructure that is increasingly the on-premises backbone of a seamless multi-cloud world."

Converged Systems Segments

IDC's converged systems market view offers three segments: certified reference systems & integrated infrastructure, integrated platforms, and hyperconverged systems. The certified reference systems & integrated infrastructure market generated nearly $1.5 billion in revenue during the second quarter, which represents 10.5% year-over-year growth and 37.5% of total converged systems revenue. Integrated platforms sales declined 14.4% year over year during the second quarter of 2019, generating $626 million worth of sales. This amounted to 16.0% of the total converged systems market revenue. Revenue from hyperconverged systems sales grew 23.7% year over year during 2Q19, generating $1.8 billion worth of sales. This amounted to 46.6% of the total converged systems market.

IDC offers two ways to rank technology suppliers within the hyperconverged systems market: by the brand of the hyperconverged solution or by the owner of the software providing the core hyperconverged capabilities. Rankings based on a branded view of the market can be found in the first table of this press release and rankings based on the owner of the hyperconverged software can be found in the second table within this press release. Both tables include all the same software and hardware, summing to the same market size.

As it relates to the branded view of the hyperconverged systems market, Dell Technologies was the largest supplier with $533.2 million in revenue and a 29.2% share. Nutanix generated $258.8 million in branded revenue, which represented 14.2% of the total HCI market during the quarter. Cisco was the third largest branded HCI vendor with $114.0 million in revenue representing a 6.2% market share.

Top 3 Companies, Worldwide Hyperconverged Systems as Branded, Q2 2019 ($M)

| Company | 2Q19 | 2Q19 Market Share | 2Q18 | 2Q18 Market Share | 2Q19/2Q18 Revenue Growth |
| --- | --- | --- | --- | --- | --- |
| 1. Dell Technologies (a) | $533.2 | 29.2% | $418.7 | 28.4% | 27.4% |
| 2. Nutanix | $258.8 | 14.2% | $275.3 | 18.7% | -6.0% |
| 3. Cisco | $114.0 | 6.2% | $77.7 | 5.3% | 46.8% |
| Rest of Market | $919.1 | 50.4% | $703.8 | 47.7% | 30.6% |
| Total | $1,825.2 | 100.0% | $1,475.4 | 100.0% | 23.7% |

Source: IDC Worldwide Quarterly Converged Systems Tracker, September 24, 2019

Notes:

a – Dell Technologies represents the combined revenues for Dell and EMC sales for all quarters shown.

From the software ownership view of the market, systems running VMware hyperconverged software represented $694.1 million in total 2Q19 vendor revenue, or 38.0% of the total market. Systems running Nutanix hyperconverged software represented $522.0 million in second quarter vendor revenue or 28.6% of the total market. Both amounts represent the value of all HCI hardware, HCI software and system infrastructure software, regardless of how it was branded.

Top 3 Companies, Worldwide Hyperconverged Systems Based on Owner of HCI Software, Q2 2019 ($M)

| Company | 2Q19 | 2Q19 Market Share | 2Q18 | 2Q18 Market Share | 2Q19/2Q18 Revenue Growth |
| --- | --- | --- | --- | --- | --- |
| 1. VMware | $694.1 | 38.0% | $497.7 | 33.7% | 39.5% |
| 2. Nutanix | $522.0 | 28.6% | $497.7 | 33.7% | 4.9% |
| 3. Cisco | $114.0 | 6.2% | $77.7 | 5.3% | 46.8% |
| Rest of Market | $495.1 | 27.1% | $402.4 | 27.3% | 23.0% |
| Total | $1,825.2 | 100.0% | $1,475.4 | 100.0% | 23.7% |

Source: IDC Worldwide Quarterly Converged Systems Tracker, September 24, 2019

Taxonomy Notes

Beginning with the release of 2019 results, IDC has expanded its definition of the hyperconverged systems market segment to include a new breed of systems called Disaggregated HCI (hyperconverged infrastructure). Such systems are designed from the ground up to support only distinct, separate compute and storage nodes. An example of such a system in the market today is NetApp's HCI solution. These systems offer non-linear scaling of the hyperconverged cluster, which makes it easier to scale compute and storage resources independently of each other while offering crucial functions such as quality of service. For these disaggregated HCI solutions, the storage nodes may not have a hypervisor at all, since they don't have to run VMs or applications.

IDC defines converged systems as pre-integrated, vendor-certified systems containing server hardware, disk storage systems, networking equipment, and basic element/systems management software. Systems not sold with all four of these components are not counted within this tracker. Specific to management software, IDC includes embedded or integrated management and control software optimized for the auto discovery, provisioning and pooling of physical and virtual compute, storage and networking resources shipped as part of the core, standard integrated system. Numbers in this press release may not sum due to rounding.

Certified reference systems & integrated infrastructure are pre-integrated, vendor-certified systems containing server hardware, disk storage systems, networking equipment, and basic element/systems management software. Integrated platforms are integrated systems that are sold with additional pre-integrated packaged software and customized system engineering optimized to enable such functions as application development software, databases, testing, and integration tools. Hyperconverged systems collapse core storage and compute functionality into a single, highly virtualized solution. A key characteristic of hyperconverged systems that differentiates these solutions from other integrated systems is their scale-out architecture and their ability to provide all compute and storage functions through the same x86 server-based resources. Market values for all three segments include hardware and software but exclude services and support.

IDC considers a unit to be a full system including server, storage, and networking. Individual server, storage, or networking "nodes" are not counted as units. Hyperconverged system units are counted at the appliance (aka chassis) level. Many hyperconverged appliances are deployed on multinode servers. IDC will count each appliance, not each node, as a single system.
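The counting rule can be illustrated with a small sketch. The `Appliance` record and its fields are hypothetical, chosen only to show that a multinode chassis counts as one unit; they do not reflect IDC's actual data model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Appliance:
    """Hypothetical record for one hyperconverged appliance (chassis)."""
    chassis_id: str
    node_count: int  # server nodes housed in this chassis


def count_units(appliances):
    """Count each appliance once, however many nodes it contains."""
    return len({a.chassis_id for a in appliances})


def count_nodes(appliances):
    """Total node count, shown only for contrast with the unit count."""
    return sum(a.node_count for a in appliances)


deployment = [
    Appliance("chassis-01", node_count=4),
    Appliance("chassis-02", node_count=2),
]
print("Units:", count_units(deployment))
print("Nodes:", count_nodes(deployment))
```

Under this rule the example deployment contributes two units to the tracker, even though it contains six nodes.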

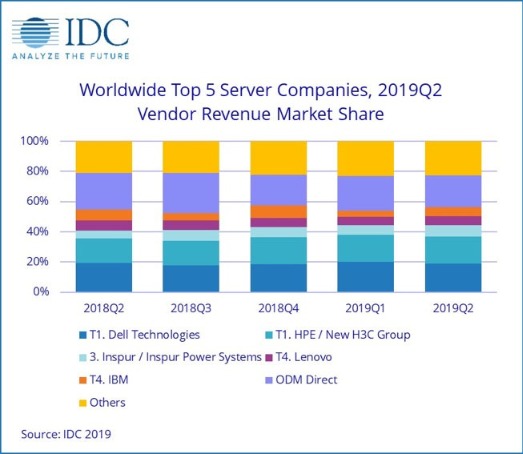

Server revenue declines 11.6%

According to the International Data Corporation (IDC) Worldwide Quarterly Server Tracker, vendor revenue in the worldwide server market declined 11.6% year over year to just over $20.0 billion during the second quarter of 2019 (2Q19). Worldwide server shipments declined 9.3% year over year to just under 2.7 million units in 2Q19.

After a torrid stretch of prolonged market growth that drove the server market to historic heights, the global server market declined for the first time since the fourth quarter of 2016. All classes of servers were impacted, with volume server revenue down 11.7% to $16.3 billion, while midrange server revenue declined 4.6% to $2.4 billion and high-end systems contracted by 20.8% to $1.3 billion.

"The second quarter saw the server market's first contraction in nine quarters, albeit against a very difficult compare from one year ago when the server market realized unprecedented growth," said Sebastian Lagana, research manager, Infrastructure Platforms and Technologies. "Irrespective of the difficult compare, factors impacting the market include a slowdown in purchasing from cloud providers and hyperscale customers, an off-cycle in the cyclical non-x86 market, as well as a slowdown from enterprises due to existing capacity slack and macroeconomic uncertainty."

Overall Server Market Standings, by Company

The number one position in the worldwide server market during 2Q19 was shared* by Dell Technologies and the combined HPE/New H3C Group, with revenue shares of 19.0% and 18.0% respectively. Dell Technologies declined 13.0% year over year, while HPE/New H3C Group was down 3.6% year over year. The third position went to Inspur/Inspur Power Systems, which increased its revenue by 32.3% year over year. Lenovo and IBM tied* for the fourth position, with revenue shares of 6.1% and 5.9% respectively. Lenovo saw revenue decline by 21.8% year over year, while IBM saw its revenue contract 27.4% year over year. The ODM Direct group of vendors accounted for 21.1% of total revenue and declined 22.9% year over year to $4.23 billion. Dell Technologies led the worldwide server market in terms of unit shipments, accounting for 17.8% of all units shipped during the quarter.

Top 5 Companies, Worldwide Server Vendor Revenue, Market Share, and Year-Over-Year Growth, Second Quarter of 2019 (Revenues are in US$ millions)

| Company | 2Q19 Revenue | 2Q19 Market Share | 2Q18 Revenue | 2Q18 Market Share | 2Q19/2Q18 Revenue Growth |
| --- | --- | --- | --- | --- | --- |
| T1. Dell Technologies* | $3,809.0 | 19.0% | $4,375.8 | 19.3% | -13.0% |
| T1. HPE/New H3C Group (a)* | $3,607.4 | 18.0% | $3,743.5 | 16.5% | -3.6% |
| 3. Inspur/Inspur Power Systems (b) | $1,438.8 | 7.2% | $1,087.9 | 4.8% | 32.3% |
| T4. Lenovo* | $1,212.0 | 6.1% | $1,549.1 | 6.8% | -21.8% |
| T4. IBM* | $1,188.6 | 5.9% | $1,637.5 | 7.2% | -27.4% |
| ODM Direct | $4,232.7 | 21.1% | $5,488.2 | 24.2% | -22.9% |
| Rest of Market | $4,536.1 | 22.7% | $4,764.5 | 21.0% | -4.8% |
| Total | $20,024.6 | 100% | $22,646.3 | 100% | -11.6% |

Source: IDC Worldwide Quarterly Server Tracker, September 4, 2019

Notes: