This issue of Digitalisation World includes significant coverage of the first anniversary of GDPR, open source technology and collaboration. If there’s one theme that links these topics together, it must be that of trust. When it comes to handing over data, private individuals and corporations need to be able to trust that this information will not be misappropriated nor used for purposes for which it was never intended. Despite many examples of data breaches and data misuse, most citizens still seem happy to hand over data concerning their lifestyles.

When it comes to collaboration and open source, both practices rely on the idea that every individual and every company, from huge great corporations right down to the most insignificant of individuals, has a role to play in working together and sharing information, hopefully for the good of all. As above, the key element is the trust required to work with others to develop solutions – solutions that are freely available to all, and not ‘hijacked’ along the way by proprietary technology.

And while we’re on the subject of trust, the ongoing furore over whether or not Huawei is fit to supply 5G technology to the UK is just one more example of an issue of trust. Is the Chinese government spying on us all, as Mr Trump suggests? Is he just indulging in yet more fake news to harm Huawei’s business prospects, and bolster those of US tech companies? And do we trust Facebook and Google any more, or less, than we do Huawei? And does any of it really matter anyway – after all, many citizens believe that, if a government wants to find out something about any individual, corporation, or other country, it will find a way to do so – enter Big Brother.

As intelligent automation develops, and data becomes the new oil (apologies for using this expression, but it does make the point!), levels of trust will have to increase and/or be stretched to breaking point if we are to benefit from all that AI and the like has to offer.

Or will there be a backlash at some point? Will the time come when the majority says ‘enough is enough’ and individual privacy regains its importance? I suspect not if the millennials have anything to do with it!

Study tracks the progress of European businesses towards empowering their workforce with the latest technological innovations.

Dell Technologies and VMware have unveiled the findings of a survey published in the IDC Executive Summary Becoming “Future of Work” Ready: Follow the Leaders, which the two companies sponsored. The study focuses on the adoption of the latest technological innovations in European businesses. The results reveal that only 29% of European organisations have successfully established a Future of Work strategy - a holistic and integrated approach to empowering a company and its workers with the latest innovations and concepts.

The study surveyed full-time employees from small, medium and large businesses across the Czech Republic, France, Germany, Italy, Poland, Spain and the United Kingdom. In the businesses identified as “Future of Work determined organisations” (FDOs – companies that have established a Future of Work strategy), the top initiative currently underway (50%) is the implementation of training programs to bring employees up to speed with the latest digital skill requirements. Almost half of FDOs consider employee productivity a critical driver in the transformation of their workplace.

However, building the workplace of the future isn’t just about improving digital skills; it also means improving the working environment itself. 46% agreed that redesigning the office space for smarter working is an integral programme presently taking place, and France is leading the way with well over half of organisations in the country driving renovations.

Working styles have continued to evolve, and European FDOs have acknowledged this and are adapting. 48% of European businesses have created security policies that benefit contemporary working styles such as flexible and remote working. The UK is leading the charge with two-thirds of Britain-based companies demonstrating a strong resolve to adapt to the ever-changing needs of the European workforce.

“There are great examples of companies who have adopted a holistic approach to the Future of Work and their success highlights the importance of this approach to today’s workforce. More European companies need to consider this enterprise-wide strategy,” says Therese Cooney, Senior Vice President, Client Solutions Group, Dell Technologies. “The future workplace shouldn’t be created to solely fit the needs of the company, but also the people who drive it. We need to equip employees with the right digital skills, technology and security safeguards in an environment which helps them grow and succeed with improved collaboration, productivity and flexibility.”

“Prospective employees today are more selective than ever when it comes to deciding where they want to work, which means companies need to transform their workplaces to attract, retain and empower top talent,” says Kristine Dahl Steidel, Vice President, End User Computing, VMware EMEA. “Employees are at the heart of the digital transformation that is changing the future of work and companies that provide an employee experience that boosts flexibility, mobility and productivity are managing to increase their performance and overall success.”

Study highlights

While this isn’t necessarily a damaging issue, companies should still make sure that digital transformation doesn’t fall by the wayside and is instead built into their overall business plans for success.

Cloud-based platforms can help simplify device management and improve scalability for an evolving business.

Eight in ten senior business and government leaders say digital competencies are either very or extremely important to achieving their organisational goals according to a new Economist Intelligence Unit (EIU) survey, commissioned by Riverbed Technology.

Digital competencies have become vital to achieving business goals, according to new research by the Economist Intelligence Unit (EIU). In Benchmarking competencies for digital performance, commissioned by Riverbed, eight in ten respondents see digital competencies as being either very or extremely important in achieving revenue growth, service quality, mission delivery, profit growth/cost reduction, and customer satisfaction.

The study is based on a survey of more than 500 senior business and government leaders across the world, including the UK, focused on assessing nine behaviours, skills and abilities that help organisations improve their digital performance and, ultimately, achieve their objectives. Accompanying the study is a digital competency assessment tool, which enables users to benchmark their organisation’s competencies and performance against all survey respondents.

The survey uncovers a shared awareness among businesses that digital transformation is necessary to achieve their goals and remain competitive. Yet, more than half of organisations say they are struggling to achieve these important goals because they lack digital competencies. In particular, 65% of respondents say that their digital-competency gaps have negatively affected user experience, which explains why almost half of respondents say they need to improve digital experience management.

The central importance that companies place on improved digital competency comes despite the fact that some firms are yet to achieve meaningful results. About a third of organisations surveyed report only neutral or no measurable benefits from their digital strategies. The issues appear especially problematic in the public sector, with 60% of private-sector respondents describing their IT modernisation/transformation as advanced, compared with only 45% in the public sector.

In terms of overcoming this capability gap, the IT function plays a pivotal role. Organisations are aware that IT must be agile, as 78% of high performers globally cite IT infrastructure modernisation and transformation as their top digital competency for achieving their goals. In addition, enabling greater communication and collaboration between IT and the rest of the organisation (where digital competencies may be scarce) can significantly enhance digital performance and user experience.

Robert Powell, Editorial Director of EIU Thought Leadership (Americas), says: “The study shows a clear consensus among respondents that improving digital competency is vital for boosting organisational performance, even if some are not yet witnessing the results. Nevertheless, among the highest performing, the lessons are clear—do not hesitate, encourage internal collaboration, and, even if you feel ahead of your competition, never stop looking over your shoulder.”

Paul Higley, Vice President, Northern Europe, at Riverbed Technology comments, “The survey results support what we’re hearing from businesses and government leaders across the UK region. It’s time to start addressing the digital-skills gaps in order to fully deliver on digital transformation and build a workforce that will drive creativity, innovation and growth. The findings also highlight that forward-thinking organisations must prioritise investments in tools to measure, monitor, and improve the end-user experience if they are to stay ahead of their competition. Further to this, developing digital skills programs and modernising IT infrastructure are key areas of development to maximise digital performance.”

Digital transformation often fails because of an inability to onboard suppliers and poor user adoption.

Ivalua has published the findings of a worldwide survey of supply chain, procurement and finance business leaders, on the status of their digital transformations, the obstacles encountered and keys to success.

The research, conducted by Forrester Consulting and commissioned by Ivalua, used a digital maturity index to assess organizations’ structure, strategy, process, measurement and technology to determine the true level of digital maturity. It found that most organizations are significantly overestimating their maturity. Only 16% of businesses had an advanced level of digital maturity in procurement, giving them a source of competitive advantage over rivals, though 65% assessed themselves as advanced.

“Procurement leaders have the opportunity to deliver a true competitive advantage for their organizations,” said David Khuat-Duy, Corporate CEO of Ivalua. “Digital transformation is critical to success, but requires a realistic assessment of current maturity, a clear vision for each stage of the journey and the right technology.”

Obstacles differ significantly based on the stage of transformation, indicating a need to assess technology based on both current needs and future ones. Early in the journey, lack of budget and executive support are primary obstacles, while more advanced organizations struggled with poor integration across their source-to-pay systems. As a result, advanced organizations were most likely (60%) to be planning to implement a full ePurchasing suite.

The study revealed that organizations frequently make poor choices with regard to technology, which impedes digital transformation. More than four in five (82%) switched or are considering switching technology providers. The primary reasons for switching are poor levels of supplier onboarding (30%) and poor user adoption (27%). Onboarding suppliers quickly is critical for any technology adoption, yet just 17% of organizations are able to onboard new suppliers in less than one month, with 59% taking 1-3 months per supplier.

“To ensure that technology empowers procurement transformation, rather than constrains it, leaders must consider their current and future requirements when evaluating options,” added David Khuat-Duy. “Doing so ensures a steady progression along their journey and the ability to gain an edge on competitors.”

Research finds inadequate access to skilled talent, technology, and data is holding AI initiatives back.

Most organisations are fully invested in AI but more than half don’t have the required in-house skilled talent to execute their strategy, according to new research from SnapLogic. The study found that 93% of US and UK organisations consider AI to be a business priority and have projects planned or already in production. However, more than half of them (51%) acknowledge that they don’t have the right mix of skilled AI talent in-house to bring their strategies to life. Indeed, a lack of skilled talent was cited as the number one barrier to progressing their AI initiatives, followed by, in order, lack of budget, lack of access to the right technology and tools, and lack of access to useful data.

The new research, conducted by Vanson Bourne on behalf of SnapLogic, studied the views and perspectives of IT decision makers (ITDMs) across several industries, asking key questions such as: where is your organisation in its AI/ML journey, what are the top barriers your organisation is facing when executing your AI initiatives, does your organisation have employees in-house with the required skillset to execute your strategy, and what are the top skills and attributes you are looking for in your AI team?

Where are organisations in their AI/ML journey?

When asked where their organisations are in their AI/ML journey, most (93%) ITDMs claim to be fully invested in AI. Nearly three-quarters (74%) of organisations in the US and UK have initiated an AI project during the past three years, with the US ahead of the UK (78% compared with 66%).

Looking at specific industry sectors, the financial services industry is most progressive with 80% having current AI projects in place, followed closely by the retail, distribution and transport sector (76%) and the business and professional services sector (72%). Surprisingly, the IT industry was found to be among the least progressive in AI uptake with 70% having projects actively in place.

Key barriers holding AI initiatives back

Despite strong levels of AI uptake, organisations are being held back by significant barriers. More than half (51%) of ITDMs in the US and UK do not have the right in-house AI talent to execute their strategy. In the UK, this in-house skill shortage is considerably more acute, with 73% lacking the needed talent compared to 41% in the US.

In both the US and UK, the manufacturing and IT sectors are hit hardest by this in-house talent shortage. In the UK, 69% of manufacturing organisations and 56% of those in the IT sector cite lack of in-house talent as the top barrier. Likewise, in the US, those two sectors face similar challenges, with 50% in manufacturing and 41% in the IT industry citing lack of in-house talent as the primary barrier.

Behind lack of access to skilled talent, ITDMs in the US and UK also consider a lack of budget (32%) to be a key issue holding them back, followed by a lack of access to the right technologies and tools (28%), as well as access to useful data (26%).

Building the right AI team

Interestingly, the priority skills and attributes that organisations are looking for in their AI team are coding, programming and software development (35%), with data visualisation and analytics considered to be a priority by 33% of ITDMs. An understanding of governance, security and ethics is also considered a necessary skill (34%). Just over a quarter of ITDMs (27%) are looking for talent with an advanced degree in a field closely related to AI/ML.

To build the right AI team, an impressive 68% said they are investing in retraining and upskilling existing employees. Nearly 58% of ITDMs indicated they are identifying and recruiting skilled talent from other companies and organisations, while almost half (49%) believe that recruiting from universities is important to getting an effective AI team in place.

Gaurav Dhillon, CEO at SnapLogic, commented: “The AI uptake figures are very encouraging, but key barriers to execution remain in both the US and UK. For organisations to accelerate their AI initiatives, they must upskill and recruit the right talent and invest in new technology and tools. Today’s self-service and low-code technologies can help bridge the gap, effectively democratising AI and machine learning by getting these transformative capabilities into the hands of more workers at every skill level and thus moving the modern enterprise into the age of automation.”

LogMeIn has published the results of a new study conducted by Forrester Consulting to determine how customer experience strategies affect overall business success.

The study, Build Competitive Advantage Through Customer Engagement and AI, surveyed 479 global customer engagement decision makers and found that organisations with a more mature strategy – including those who make Customer Experience (CX) an organisational priority and leverage omni-channel and Artificial Intelligence (AI) technologies – see an increase in revenue and conversion at double the rate of other companies. The results also show that as the maturity gap continues to widen, organisations that are falling behind may never be able to catch up to their more mature competitors.

“Exceptional customer experience is a cornerstone of business success. Better customer engagement leads not only to higher customer satisfaction, but also to greater top-line revenue growth and more satisfied customer-facing employees,” according to the study. “Organisations with greater engagement maturity reap benefits, not only more often, but of greater value, than less mature companies.”

The Impact of Technology

Emerging technologies like artificial intelligence are also widening the divide. Companies with a more mature engagement approach can adapt more quickly and incorporate powerful AI use cases that propel them forward. 36% of the least mature respondents use AI, but only in proofs of concept. Meanwhile, 58% of CX “experts” have implemented a holistic AI strategy and roadmap. This gap between the long-term strategies of the most mature organisations and the short-term experiments of the least mature represents a significant setback for the less mature companies, which have not been able to capitalise on the business intelligence that AI-powered technology can provide.

Challenges Facing Less Mature Organisations

The study showed that 37% of less mature organisations rely too much on obsolete technology – especially in the area of digital channel support. Furthermore, poor self-service and automation capabilities are leading to frustrated customers and increased call centre volume – which conversely is not a typical challenge for the most mature of the group who are successfully leveraging these capabilities to create better overall customer experiences. Additional challenges that less mature organisations run into include lack of visibility into both customer data (37%) and the performance of engagement channels (42%). These limited views inhibit a company’s ability to quickly address weaknesses and understand how to best serve their customers.

Measurable Impact

For the most mature of those surveyed, 63% saw an increase in NPS as a result of their current customer engagement strategies, and their NPS averaged 8 points higher than that of their less mature counterparts. Further, half of these organisations saw an increase in conversion rates, 56% reported an increase in revenue and 40% saw an increase in order size. Even agent satisfaction rose at the more mature organisations, with nearly 50% reporting an increase in overall job happiness.

“With all of the hype around AI’s place in customer experience, it can be hard for companies to separate fact from fiction,” said Ryan Lester, Senior Director of Customer Engagement Technologies at LogMeIn. “The results of this study helped provide some clarity around the importance of continuing to evolve customer engagement strategies. Technologies like AI are creating a significant competitive advantage for leaders and leaving the rest falling irreparably behind.”

Splunk has released research that shows organisations are ignoring potentially valuable data and don’t have the resources they need to take advantage of it. The research reveals that although business executives recognise the value of using all of their data, more than half (55 percent) of an organisation’s total data is “dark data,” meaning they either don’t know it exists or don’t know how to find, prepare, analyse or use it.

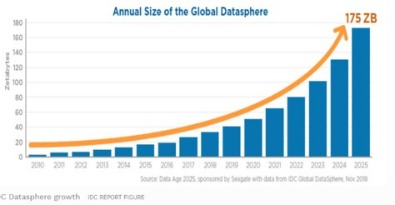

The State of Dark Data Report, built using research conducted by TRUE Global Intelligence and directed by Splunk, surveyed more than 1,300 global business managers and IT leaders about how their organizations collect, manage and use data. In an era where data is connecting devices, systems and people at unprecedented growth rates, the results show that while data is top of mind, action is often far behind.

·76 percent of respondents surveyed across the U.S., U.K., France, Germany, China, Japan, and Australia agree “the organization that has the most data is going to win.”

·60 percent of respondents said that more than half of their organizations’ data is dark, and one-third of respondents say more than 75 percent of their organization’s data is dark.

·Business leaders say their top three obstacles to recovering dark data are the volume of data, followed by the lack of necessary skill sets and resources.

·More than half (56 percent) admit that “data-driven” is just a slogan in their organization.

·82 percent say humans are and will always be at the heart of AI.

“Data is hard to work with because it’s growing at an alarming rate and is hard to structure and organise. So, it’s easy for organisations to feel helpless in this chaotic landscape,” says Tim Tully, chief technology officer, Splunk. “I was pleased to see the opportunity people around the world attach to dark data, even though fewer than a third of those surveyed say they have the skills to turn data into action. This presents a tremendous opportunity for motivated leaders, professionals and employers to learn new skills and reach a new level of results. Splunk can help those organizations feel empowered to take control of identifying and using dark data.”

Respondents are Slow to Seize Career and Leadership Opportunities

While respondents understand the value of dark data, they admit they don’t have the tools, expertise or staff to take advantage of it. Plus, the majority of senior leaders say they are close enough to retirement that they aren’t motivated to become data-literate. Data is the future of work, but only a small percentage of professionals seem to be taking it seriously. Respondents agree there is no single answer, though the most promising solutions included training more employees in data science and analytics, increasing funding for data wrangling, and deploying software to enable less technical employees to analyze the data for themselves.

·92 percent say they are “willing” to learn new data skills but only 57 percent are “extremely” or “very” enthusiastic to work more with data.

·69 percent said they were content to keep doing what they’re doing, regardless of the impact on the business or their career.

·When asked what they were doing to educate themselves and their teams, more than half of respondents (53 percent) said they are too old to learn new data skills.

·66 percent cite lack of support from senior leaders as a challenge in gathering data and roughly one-in-five respondents (21 percent) cite lack of interest from organization leaders as a challenge.

AI is Believed to Be The Next Frontier for Data-Savvy Organizations

Globally, respondents believe AI will generally augment opportunities, rather than replace people. While the survey revealed that few organizations are using AI right now, a majority see its vast potential. For example, in a series of use cases including operational efficiency, strategic decision making, HR and customer experience, only 10 to 15 percent say their organisations are deploying AI for these use cases while roughly two-thirds see the potential value.

·A majority of respondents (71 percent) saw potential in employing AI to analyze data.

·73 percent think AI can make up for the skills gaps in IT.

·82 percent say humans are and will always be at the heart of AI and 72 percent say that AI is just a tool to solve business problems.

·Only 12 percent are using AI to guide business strategy and 61 percent expect their organisation to increase its use of AI this way over the next five years.

Regional Differences

There are some key differences in the UK specific results. For example, 39 percent of people in the United Kingdom believe AI can make up for the skills gap versus only 27 percent globally. UK employees are also the most likely in the world to say they need to learn more data skills in order to get promoted again, 83 percent compared to the global figure of 76 percent. Additional UK specific results include:

·The UK often comes second only to China in its enthusiasm for data and AI, and its belief in the importance of data skills

·67 percent of UK companies agree “data-driven” is just a slogan at their organization, compared with only 56 percent globally

·The majority of respondents in the UK market (61 percent) report understanding AI extremely or very well — one of only two markets in which a majority make that claim (the other is China, at 77 percent). The global average is 48 percent

The European Managed Services Summit in May revealed a sector full of optimism, facing up to its challenges as a mature industry. A wealth of new research emerged at the same time, reinforcing the ideas from the events in London and Amsterdam last year that providers needed to specialise, and also work harder with their customers.

First out of the gate was Datto, whose annual managed services report is an eclectic mix of revelations as to the work-life pressures on a sector which faces continuous engagement with its customers, plus the news that half the MSPs have now been in business more than sixteen years. So this is now a mature industry with a mature industry’s outlook; but such problems as it faces are those of progress and growth. In the study, nearly 100% of the MSPs surveyed state that now is as good a time as ever to be in their industry.

At the Amsterdam Summit, Jason Howells, director of the international MSP business at Barracuda MSP, presented a session based on his MSPDay research and also had some insights into the state of the market. One of the things that came out quite strongly was how fast things are changing: “Yes, I think this is a mature industry, but at the same time we've got a lot of new entrants - a lot of them coming out of customers and coming out of resellers,” he says.

“I think it's extremely fast-paced at the moment - not that the industry has never not been fast-paced. The MSP space in particular now is getting so much attention and investment it means that things are probably moving faster.”

“What we are seeing is demand from the end-user. That means that whether you are a reseller or a managed service provider, if you're not evolving your own businesses to adapt, then you're probably not going to survive. It will continue into a situation where most, if not all of the IT channel, will be providing monthly services.”

Managed Service Providers are facing an increasingly complex and competitive customer set, advised Mark Paine from researcher Gartner in the first keynote in Amsterdam. The complexity of the customer buying process, with many individuals involved at various levels, has increased the length of the sales cycle to double what customers expect, though recent evidence shows it shortening again as MSPs get more sophisticated, he told the event.

The trust that both MSPs and customers look to achieve is possible, but IT service suppliers need to work on their authenticity. “Find out who your prospects trust and make sure they know about you,” he says, “while you work to reposition your company and create authentic stories.”

The issue of trust also came up in the Barracuda MSPDay report referred to earlier, which asked what MSPs thought about customer relationships. This showed that customer misconception was identified as a barrier by the largest group of channel partners (89% of the sample). This seems to result from a view among customers that managed services provision removes their responsibilities for their networks, security and disaster recovery. “MSPs need to spell out the limits of their responsibilities, and the industry as a whole should work to educate its customer base on what is possible,” says Jason Howells.

He reported that network management had risen to the top of the list of services offered by MSPs, with email second, again based on security and reliability issues. And in a change from recent reports of high levels of competition in the market, the MSPs were positive: 73 percent agree there are “still plenty of opportunities out there.”

Which is all good news for MSPs, though in such a fast-changing industry, they need to be on top of trends and new offerings. The next snapshot of the industry will be revealed at the London Managed Services & Hosting Summit 2019 on 18th September (https://mshsummit.com/), and at the similar Manchester event on 30 October (https://mshsummit.com/north/).

The Managed Services & Hosting Summits are firmly established as the leading Managed Services event for the channel. Now in its ninth year, the London Managed Services & Hosting Summit 2019 aims to provide insights into how managed services continues to grow and change as customer demands expand suppliers into a strategic advisory role, and the pressures for compliance and resilience impact the business model at a time of limited resources. Managed Service Providers, other channels and their suppliers can evolve new business models and relationships but are looking for advice and support as well as strategic business guidance.

The Managed Services & Hosting Summits feature conference session presentations by major industry speakers and a range of sessions exploring both technical and sales/business issues.

More than half of organisations enforce encryption of data on all mobile devices and removable media.

Apricorn has published findings from a survey highlighting the rise in encryption technology post GDPR enforcement. Two thirds (66%) of respondents now hardware encrypt all information as standard, which is a positive step considering over a quarter (27%) noted the lack of encryption as being one of the main causes of a data breach within their organisation.

This is in contrast with last year’s survey where only half enforced encryption of data, or were completely confident in their encrypted data, in transit (52%), in the cloud (52%) and at rest (51%), showing a discernible increase in the use of, and need for, encryption as a key component of the data security process.

Forty-one percent of respondents have also noticed an increase in the implementation of encryption in their organisation since GDPR was enforced, and their organisation now requires all data to be encrypted as standard, whether it's at rest or in transit. This underlines the significance of encryption for GDPR compliance and the protection of sensitive data. The trend is likely driven by encryption being specifically recommended in Article 32 of GDPR as a method to protect personal data, and by Article 34, under which obligations towards affected data subjects are reduced when the breached data is encrypted.

GDPR is clearly making security a board-level topic, with the C-suite now owning the security budget in eighty-six percent of the companies surveyed. Organisations are allocating just under a third (30%) of their IT budget to GDPR compliance, which is a huge increase when considered against research commissioned by IBM in 2018 that set the ideal spend on cyber security, in general, at 9.8 to 13.7% of the IT budget.

However, although last year’s survey found that ninety-eight percent of those who knew GDPR applied to them forecast a need to assign further budget and resources after achieving compliance, almost a quarter (24%) of this year’s respondents who claim to be compliant believe they do not need to assign any further budget or resources.

Jon Fielding, Managing Director, EMEA Apricorn commented: “With the one-year anniversary of GDPR, it’s clear that organisations are getting their houses in order, but there still seems to be a long way to go in terms of education and awareness. Organisations need to be mindful that GDPR is an ongoing process and not just a tick box exercise. The most common ways to maintain compliance are to continue to enforce and update all policies and invest in employee awareness on a regular basis. Additionally, encryption is a key component within the compliance ‘kit’, helping to lessen the probability of a breach and mitigate any financial penalties and obligations that would apply in the unfortunate event of a breach.”

61% of IT organizations have little to modest confidence in their ability to mitigate access security threats, despite a majority significantly increasing their near-term budget.

Pulse Secure has published its “2019 State of Enterprise Secure Access” report, available for download at https://www.pulsesecure.net/SESA19/. The findings quantify threats, gaps and investment as organizations face growing hybrid IT access challenges. The survey of large enterprises in the US, UK and DACH uncovers business risks and impacts that are driving a pivot towards extending Zero Trust capabilities, to enable productivity while limiting the exposure of multi-cloud resources, applications and sensitive data.

While enterprises are taking advantage of cloud computing, the survey data showed that all enterprises have ongoing data center dependencies. One fifth of respondents anticipate lowering their data center investment, while more than 40% indicated a material increase in private and public cloud investment. According to the report, “the shift in how organizations deliver Hybrid IT services to enable digital transformation must also take into consideration empowering a mobile workforce, supporting consumer and IoT devices in the workplace and meeting data privacy compliance obligations – all make for a challenging environment to ensure, monitor and audit access security.”

"What was consistent across enterprise sizes, sectors, or location was that secure access for hybrid IT is a current and growing concern with cyberthreats, requirements and issues emerging from many sources. The reporting findings and insights should empower corporate leadership and IT security professionals to re-think how their organizations are protecting resources and sensitive data as they migrate to the cloud," said Martin Veitch, editorial director at IDG Connect.

Key Findings

The survey found that the most impactful incidents were attributed to a lack of user and device access visibility and to lax endpoint, authentication and authorization access controls. Over the last 18 months, half of all companies dealt with malware, unauthorized or vulnerable endpoint use, and mobile or web app exposures. Nearly half experienced unauthorized access to data and resources due to insecure endpoints and privileged users, as well as unauthorized application access due to poor authentication or encryption controls.

While a third expressed significant confidence, 61% of respondents indicated modest confidence in their security processes, human resources, intelligence and tools to mitigate access security threats. The survey revealed the top access threat mitigation deficiencies:

When survey participants were asked what they perceive as their largest operational gaps for access security, the majority identified hybrid IT application availability; user, device and mobile discovery and exposures; weak device configuration compliance; and inconsistent or incomplete enforcement. Correspondingly, the participants stated that their organizations are stepping up their access security initiatives:

The cited incidents, threat mitigation deficiencies and operational gaps are among the reasons for interest in a Zero Trust approach to access security. A Zero Trust model authenticates, authorizes and verifies users, devices, applications and resources no matter where they reside. It encompasses proving identity, device and security state before and during a transaction; applying least-privilege access as close as possible to the entities, applications and data; and extending intelligence so that policies can adapt to changing requirements and conditions.
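To make the three Zero Trust ingredients above more concrete, here is a minimal Python sketch of a per-request access decision. It is an illustration under stated assumptions, not how Pulse Secure or any other vendor implements Zero Trust: the `AccessRequest` fields, the `POLICY` table and the `authorize` function are all hypothetical, and real products weigh many more signals (location, behaviour, risk scores).

```python
from dataclasses import dataclass

# Hypothetical request shape: the fields stand in for the "identity, device and
# security state" checks described above; real products collect far more signals.
@dataclass(frozen=True)
class AccessRequest:
    user_id: str
    mfa_verified: bool       # identity proven, e.g. by multi-factor authentication
    device_compliant: bool   # e.g. patched OS, disk encryption on, endpoint agent running
    resource: str
    action: str

# Hypothetical least-privilege policy: each user holds only explicitly granted
# (resource, action) pairs; anything not listed is denied.
POLICY = {
    "alice": {("payroll-db", "read")},
    "bob": {("crm-app", "read"), ("crm-app", "write")},
}

def authorize(req: AccessRequest, policy: dict = POLICY) -> bool:
    """Decide a single request. Network location grants no trust."""
    if not req.mfa_verified:          # 1. prove identity
        return False
    if not req.device_compliant:      # 2. prove device and security state
        return False
    # 3. least privilege: allow only an explicitly granted pair
    return (req.resource, req.action) in policy.get(req.user_id, set())

# Evaluated on every request rather than once per session, so a device that
# drops out of compliance mid-session loses access on its next call.
print(authorize(AccessRequest("alice", True, True, "payroll-db", "read")))   # True
print(authorize(AccessRequest("alice", True, True, "payroll-db", "write")))  # False
print(authorize(AccessRequest("alice", True, False, "payroll-db", "read")))  # False
```

The deny-by-default design mirrors the "least privilege" point: a user who is absent from the policy, or who asks for an action not explicitly granted, is refused even with a verified identity and a healthy device.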

Adding to management complexity, the report also found that organizations employ three or more secure access tools for each of the 13 solutions presented in the survey. Larger companies have about 30% more tools than smaller enterprises. Correspondingly, nearly half of respondents were open to exploring the benefits of consolidating their security tools into suites. With the migration to cloud, one tool that respondents cited as implemented or planned over the next 18 months is the Software Defined Perimeter (SDP).

Research Highlights

The independent research for the report, which offers key insights into the current access security landscape and the maturity of defenses, was conducted by IDG Connect. Survey respondents included more than 300 information security decision makers in enterprises with more than 1,000 employees across the U.S., U.K. and DACH regions, and covered key verticals including financial services, healthcare, manufacturing and services.

“We are pleased to sponsor the 2019 State of Enterprise Secure Access Report. The independent research provides a useful litmus test for the level of exposure, controls and investment regarding hybrid IT access,” said Scott Gordon, chief marketing officer at Pulse Secure. “The key takeaway from this report is hybrid IT delivery has expanded security risks and necessitates more stringent access requirements. As such, organizations should re-assess their secure access priorities, capabilities and technology as part of their Zero Trust strategy.”

The hyperscale data center market, valued at over USD 20 billion in 2018, is set to grow to USD 65 billion by 2025, according to a new research report by Global Market Insights, Inc.
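As a rough check on what those two figures imply, the sketch below computes the compound annual growth rate (CAGR) needed to go from USD 20 billion in 2018 to USD 65 billion in 2025. This is a back-of-the-envelope calculation, not a figure quoted in the report; because the 2018 value is given as "over" USD 20 billion, the true implied rate is slightly lower. The function name `implied_cagr` is illustrative.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that takes start_value to end_value over `years` years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1

# Market sizes quoted above, in USD billions; 2018 -> 2025 is seven years of growth.
rate = implied_cagr(20.0, 65.0, 2025 - 2018)
print(f"Implied CAGR: {rate:.1%}")  # Implied CAGR: 18.3%
```

In other words, the forecast assumes the market grows by a little under a fifth every year for seven consecutive years.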

Rising demand for big data and cloud computing solutions in distributed computing environments is expected to drive the hyperscale data center market. The rapid increase in data traffic makes storing, managing and retrieving massively growing volumes of data a major challenge for organizations. Companies are adopting cloud computing solutions for benefits such as flexibility, reduced IT expenses, scalability, collaboration efficiency, automatic updates and storage for large volumes of data.

The rapid increase in business data is encouraging large enterprises to invest heavily in IT expansion. For instance, in August 2018, Google announced plans to expand its data center in Singapore to scale up capacity and meet increasing demand for services. In addition, the company will invest USD 600 million to expand its South Carolina facility. The growing popularity of cloud-based infrastructure and investments to expand product portfolios are contributing to hyperscale data center market growth.

Demand for rack-based Power Distribution Units (PDUs) is growing rapidly in the hyperscale data center market due to their high availability and high power ratings. These PDUs can be integrated with all types of rackmount equipment without interrupting the power supply. They help organizations reduce power consumption, thereby improving the efficiency of an IT facility, and also help reduce an organization's carbon footprint. As a result, they are being widely adopted by businesses to improve efficiency.

Increasing adoption of cloud-based services and rapid growth in smartphone and social media users in Asia Pacific are expected to drive the market. The number of smartphone users is expected to cross 6 billion by 2025, with countries such as India, China, South Korea, Taiwan and Indonesia being the major contributors. Businesses in the region are adopting data-intensive applications such as IoT, data analytics and AI services, which require large amounts of data and large capital investments. Several companies are constructing hyperscale facilities to reduce their capital and operational expenses.

In the hyperscale data center market, the IT & telecom sector accounted for over 45% of the industry share and is witnessing high adoption of large-scale infrastructure facilities owing to increasing data generation and storage requirements. Telecom operators are offering flexible internet or data plans, which is driving data traffic. The rapid increase in data generation is encouraging businesses to implement highly scalable and efficient IT environments with high computing power, thereby accelerating market growth. Global telecommunication companies are building large-scale infrastructure to serve their widespread customer bases.

The Western European and Nordic markets are experiencing high demand for hyperscale data centers owing to the easy availability of renewable energy sources and land for development, tax incentives, strengthening fiber connectivity and falling electricity costs. These factors are encouraging cloud service providers to construct more hyperscale data centers in Western Europe. For instance, in June 2018, Google announced plans to launch a hyperscale data center in the Netherlands, citing the availability of sustainable energy sources, which will help lower energy costs for data centers.

Key players operating in the hyperscale data center market include Broadcom Ltd., Cavium, Inc., Cisco Systems, Inc., Dell, Inc., Huawei Technologies Co., Ltd., IBM Corporation, Intel Corporation, Lenovo Group Ltd., Microsoft Corporation, NVIDIA Corporation, Sandisk LLC, and Schneider Electric SE, among others. Players in the market are manufacturing business-specific solutions that enable customers to customize solutions based on enterprise requirements.

Over 84% of organisations in Europe use or plan to use digitally transformative technologies, but only a little more than half (55%) claim these deployments are very or extremely secure.

Thales has revealed a growing security gap among European businesses, with almost a third (29%) of surveyed enterprises experiencing a breach last year and only a little more than half (55%) believing their digital transformation deployments are very or extremely secure. These findings are detailed in the 2019 Thales Data Threat Report – Europe Edition, with research and analysis from IDC.

Across Europe, more than 84% of organisations are using or planning to use digitally transformative technologies including cloud, big data, mobile payments, social media, containers, blockchain and Internet of Things (IoT). Sensitive data is highly exposed in these environments: in the UK, almost all (97%) of these organisations state they are using this type of data with digital transformation technologies.

“Across Europe, organizations are embracing digital transformative technologies – while advancing their business objectives, this is also leaving sensitive data exposed,” said Sebastien Cano, senior vice president of cloud protection and licensing activity at Thales. “European enterprises surveyed still do not rank data breach prevention as a top IT security spending priority – focusing more broadly on security best practice and brand reputation issues. Yet, data breaches continue to become more prevalent. These organisations need to take a hard look at their encryption and access management strategies in order to secure their digital transformation journey, especially as they transition to the cloud and strive to meet regulatory and compliance mandates.”

Security confidence challenged in digitally transformative environments

However, not everyone is confident of the security of these environments. Across Europe, only a little more than half (55%) claim their digital deployments are very or extremely secure. The UK is the most confident in its levels of security with two thirds (66%) saying they are very or extremely secure. In Germany, confidence is much lower at 49%.

Multi-cloud security remains top challenge

The most common use of sensitive data within digital transformation is in the cloud. Across Europe, 90% of organisations are using, or will use, all three cloud environments this year (Software as a Service, Platform as a Service and Infrastructure as a Service). These deployments do not come without concerns, however. The top three security issues for organisations using cloud were ranked as:

- 38%: security of data if the cloud provider is acquired or fails;
- 37%: lack of visibility into security practices; and
- 36%: vulnerabilities from shared infrastructure, and security breaches or attacks at the cloud provider.

Businesses are working hard to alleviate these concerns. Over a third (37%) of organisations named three changes, tied as the most important needed to address security issues in the cloud: encryption of data with service provider managed encryption keys; detailed architecture and security information for IT and physical security; and SLAs in case of a data breach.

Compliance is not a security priority

Despite more than 100 new data privacy regulations, including GDPR, affecting almost all (91%) organisations across Europe, only 40% of UK businesses see compliance as a top priority for security spending. Interestingly, 20% of UK businesses failed a compliance audit in the last year because of data security issues. When it comes to meeting data privacy regulations, the top two methods named by respondents are encrypting personal data (47%) and tokenising personal data (23%).

“Clearly there is a significant shift to digital transformation technologies and the issues around data held within these cannot be taken lightly,” said Frank Dickson, program vice president for security products research, IDC. “Data privacy regulations have been hot on the agenda over the past 18 months, with so many coming into force. Organisations are now finding themselves considering the cost of becoming compliant against the risk of potential breaches and the subsequent fines.”

Attack levels are high

One of the most jarring findings of the report is that almost two thirds of organisations across Europe (61%) have encountered a data breach at some stage. The UK fares slightly better than the European average, with just over half (54%) of organisations saying they have encountered a breach. However, across Europe, 29% of organisations faced a data breach in the last year, and a shocking one in 10 have suffered a data breach both in the last year and at another time.

Companies are obstructed by a lack of technology, processes, training and support from leadership in getting the most from their technology spend.

Global organizations are demanding more from their data management investments, despite most estimating that they achieve more than double the amount they invest, finds research from Veritas Technologies, a worldwide leader in enterprise data protection and software-defined storage.

The Value of Data study, conducted by Vanson Bourne for Veritas, surveyed 1,500 IT decision makers and data managers across 15 countries. It reveals that although companies see an average return of $2.18 for every $1 they invest in improving data management, an overwhelming majority (82 percent) of businesses expect to see an even higher return.

Just 15 percent achieved the ROI they expected to receive, while only 1 percent said the ROI they achieved exceeded expectations.

Businesses admit the key factors preventing them from improving their ROI are a lack of the right technology to support data management (40 percent), a lack of internal processes (36 percent) and inadequate employee engagement or training (57 percent). A third (33 percent) also cited an absence of support from senior management as a barrier to achieving a higher return on data management investment.

“Mismanaging data can cost businesses millions in security vulnerabilities, lost revenues and missed opportunities, but those that invest wisely are seeing the incredible potential of their data estates. Unfortunately, too many are being held back by technological or people-related challenges,” said Jyothi Swaroop, vice president, Product & Solutions, Veritas.

“Organizations must arm themselves with the ability to access, protect and derive insights from their data. By promoting a cultural shift in the way data is managed, which includes buy-in from leadership as well as tools, processes and training, companies can empower employees with full visibility and control of data.”

Take care of your data, and it will take care of you

Organizations that are investing in the proper management of their data say they are already benefiting from their investment and are achieving the objectives they set out to achieve. Respondents ranked increased data compliance, reduced security risks, cost savings, and the ability to drive new revenue streams and market opportunities, as the most attractive benefits of improving data management.

Of the organizations that are investing in looking after their data, four in five (81 percent) say they are already experiencing increased data compliance and reduced data security risks, while 70 percent are seeing reduced costs. Nearly three-quarters (72 percent) are driving new revenue streams or market opportunities as a result of investing in data management.

“As cases of high-profile data breaches and threats of hefty fines for regulatory non-compliance continue to plague the headlines, one of the biggest drivers for investing in data management is to protect their data. But many are also benefitting greatly from the ability to use their data more intelligently. Those that invest in overcoming the barriers to effective data management will reap significant rewards in today’s digital economy,” added Swaroop.

Vertiv, together with technology analyst firm 451 Research, has released a report on the state of 5G, “Telco Study Reveals Industry Hopes and Fears: From Energy Costs to Edge Computing Transformation”. The report captures the results of an in-depth survey of more than 100 global telecom decision makers with visibility into 5G and edge strategies and plans. The research covers 5G deployment plans, services supported by early deployments, and the most important technical enablers for 5G success.

Survey participants were overwhelmingly optimistic about the 5G business outlook and are moving forward aggressively with deployment plans. Twelve percent of operators expect to roll out 5G services in 2019, and an additional 86 percent expect to be delivering 5G services by 2021.

According to the survey, those initial services will be focused on supporting existing data services (96 percent) and new consumer services (36 percent). About one-third of respondents (32 percent) expect to support existing enterprise services with 18 percent saying they expect to deliver new enterprise services.

As networks continue to evolve and coverage expands, 5G itself will become a key enabler of emerging edge use cases that require high-bandwidth, low latency data transmission, such as virtual and augmented reality, digital healthcare, and smart homes, buildings, factories and cities.

However, illustrating the scale of the challenge, the majority of respondents (68 percent) do not expect to achieve total 5G coverage until 2028 or later. Twenty-eight percent expect to have total coverage by 2027 while only 4 percent expect to have total coverage by 2025.

“While telcos recognise the opportunity 5G presents, they also understand the network transformation required to support 5G services,” said Martin Olsen, vice president of global edge and integrated solutions at Vertiv. “This report brings clarity to the challenges they face and reinforces the role innovative, energy-efficient network infrastructure will play in enabling 5G to realise its potential.”

To support 5G services, telcos are ramping up the deployment of multi-access edge computing (MEC) sites, which bring the capabilities of the cloud directly to the radio access network. Thirty-seven percent of respondents said they are already deploying MEC infrastructure ahead of 5G deployments while an additional 47 percent intend to deploy MECs.

As these new computing locations supporting 5G come online, the ability to remotely monitor and manage increasingly dense networks becomes more critical to maintaining profitability. In the area of remote management, data centre infrastructure management (DCIM) was identified as the most important enabler (55 percent), followed by energy management (49 percent). Remote management will be critical, as the report suggests the network densification required for 5G could require operators to double the number of radio access locations around the globe in the next 10-15 years.

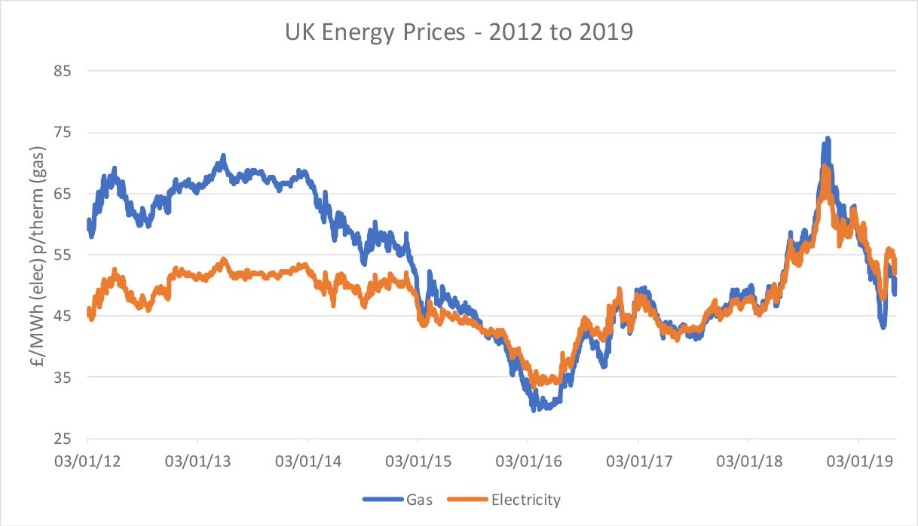

The survey also asked respondents to identify their plans for dealing with energy issues today and five years from now, when large portions of the network will be supporting 5G; 94 percent of participants expect 5G to increase network energy consumption. Among the key findings:

“5G represents the most impactful and difficult network upgrade ever faced by the telecom industry,” said Brian Partridge, research vice president for 451 Research. “In general, the industry recognises the scale of this challenge and the need for enabling technologies and services to help it maintain profitability by more efficiently managing increasingly distributed networks and mitigating the impact of higher energy costs.”

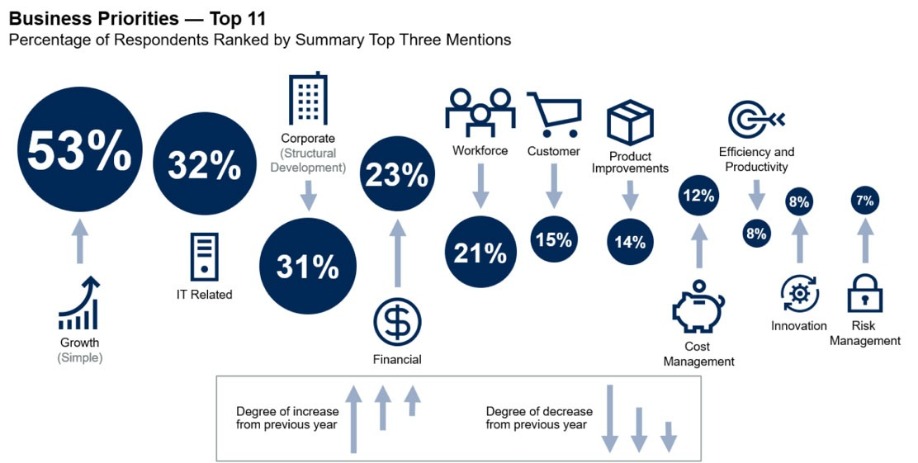

Growth continues to top the list of CEO business priorities in 2019 and 2020, according to a recent survey of CEOs and senior executives by Gartner, Inc. The most notable change in comparison to last year’s results is that a growing number of CEOs also deem financial priorities important, especially profitability improvement.

The annual Gartner 2019 CEO and Senior Business Executive Survey, conducted in the fourth quarter of 2018, examined the business issues of 473 CEOs and senior business executives, as well as some areas of technology agenda impact. All 473 business leaders surveyed were from companies with $50 million or more in annual revenue, and 60% were from companies with $1 billion or more.

“After a significant fall last year, mentions of growth increased this year to 53%, up from 40% in 2018,” said Mark Raskino, vice president and distinguished analyst at Gartner. “This suggests that CEOs have switched their focus back to tactical performance as clouds gather on the horizon.”

At the same time, mentions of financial priorities, cost and risk management also increased (see Figure 1). “However, we did not see CEOs intending to significantly cut costs in various business areas. They are aware of the rising economic challenges and proceeding with more caution — they are not preparing for recession,” said Mr. Raskino.

Figure 1: Top 11 Business Priorities of CEOs

Source: Gartner (May 2019)

New Opportunities for Growth

The survey results showed that a popular solution when growth is challenged is to look to other geographic locations for growth. Responses mentioned other cities, states, countries and regions, as well as “new markets”, which would also include some geographic reach (though a new market can also be industry-related, or virtual).

“It is natural to use location hunting for growth when traditional and home markets are saturated or fading,” said Mr. Raskino. “However, this year the international part of such reach is complicated and compounded by a shift in the geopolitical landscape. Twenty-three percent of CEOs see significant impacts to their own businesses arising from recent developments in tariffs, quotas and other forms of trade controls. Another 58% of CEOs have general concerns about this issue, suggesting that more CEOs anticipate it might impact their businesses in future.”

Another way that CEOs seem to be confronting softening growth prospects and weakening margins is to seek diversification — which increasingly means the application of digital business to offer new products and revenue-producing channels. Eighty-two percent of respondents agreed that they had a management initiative or transformation program underway to make their companies more digital — up from 62% in 2018.

High Hopes for Technology

Cost management has risen in CEO priorities, from No. 10 in 2018 to No. 8 today. When asked about their cost-control methods, 27% of respondents cited technology enablement, securing the third spot after measures around people and organization, such as bonuses and expense and budget management. However, when asked to consider productivity and efficiency actions, CEOs were much more inclined to think of technology as a tool. Forty-seven percent of respondents mentioned technology as one of their top two ways to improve productivity.

Digital Skills for All Executives

Digital business is something the whole executive committee must be engaged in. However, the survey results showed that CEOs are concerned that some of the executive roles do not possess strong or even sufficient digital skills to face the future. On average, CEOs think that sales, risk, supply chain and HR officers are most in need of more digital savvy.

Once all executive leaders are more comfortable with the digital sphere, new capabilities to execute on their business strategies will need to be developed. When asked which organizational competencies their company needs to develop the most, 18% of CEOs named talent management, closely followed by technology enablement and digitalization (17%) and data centricity or data management (15%).

“Datacentric decision-making is a key culture and capability change in a management system that hopes to thrive in the digital age. Executive leaders must be a role model to encourage and foster data centricity and data literacy in their business units and the organization as a whole,” Mr. Raskino said.

Supply chain to suffer blockchain ‘fatigue’

Blockchain remains a popular topic, but supply chain leaders are failing to find suitable use cases. By 2023, 90% of blockchain-based supply chain initiatives will suffer ‘blockchain fatigue’ due to a lack of strong use cases, according to Gartner, Inc.

A Gartner supply chain technology survey of user wants and needs found that only 19% of respondents ranked blockchain as a very important technology for their business, and only 9% have invested in it. This is mainly because supply chain blockchain projects are very limited and do not match the initial enthusiasm for the technology’s application in supply chain management.

“Supply chain blockchain projects have mostly focused on verifying authenticity, improving traceability and visibility, and improving transactional trust,” said Alex Pradhan, senior principal research analyst at Gartner. “However, most have remained pilot projects due to a combination of technology immaturity, lack of standards, overly ambitious scope and a misunderstanding of how blockchain could, or should, actually help the supply chain. Inevitably, this is causing the market to experience blockchain fatigue.”

The budding nature of blockchain makes it almost impossible for organizations to identify and target specific high-value use cases. Instead, companies are forced to run multiple development pilots using trial and error to find ones that might provide value. Additionally, the vendor ecosystem has not fully formed and is struggling to establish market dominance. Another challenge is that supply chain organizations cannot buy an off-the-shelf, complete, packaged blockchain solution.

“Without a vibrant market for commercial blockchain applications, the majority of companies do not know how to evaluate, assess and benchmark solutions, especially as the market landscape rapidly evolves,” said Ms. Pradhan. “Furthermore, current creations offered by solution providers are complicated hybrids of conventional blockchain technologies. This adds more complexity and confusion, making it that much harder for companies to identify appropriate supply chain use cases.”

As blockchain continues to develop in supply chains, Gartner recommends that organizations remain cautious about early adoption and not rush into making blockchain work in their supply chain until there is a clear distinction between hype and the core capability of blockchain. “The emphasis should be on proof of concept, experimentation and limited-scope initiatives that deliver lessons, rather than high-cost, high-risk, strategic business value,” said Ms. Pradhan.

Global grocers will use blockchain

Meanwhile, Gartner, Inc. predicts that, by 2025, 20% of the top 10 global grocers by revenue will be using blockchain for food safety and traceability to create visibility into production, quality and freshness.

Annual grocery sales are rising in all regions worldwide, with an emphasis on fast, fresh prepared foods. Additionally, customers increasingly want to understand the source of their food, the provider’s sustainability initiatives and overall freshness. Grocery retailers who provide visibility and can certify their products according to certain standards will win the trust and loyalty of consumers.

“Blockchain can help deliver confidence to grocer’s customers, and build and retain trust and loyalty,” said Joanne Joliet, senior research director at Gartner. “Grocery retailers are trialing and looking to adopt blockchain technology to provide transparency for their products. Additionally, understanding and pinpointing the product source quickly may be used internally, for example to identify products included in a recall.”

Blockchain appears to be an ideal technology to foster transparency and visibility along the food supply chain. Encryption capabilities applied to data on the food source, quality, transit temperature and freshness can be used to ensure that the data is accurate, giving confidence to both consumers and retailers.

Some grocers have already been experimenting with blockchain and are developing best practices. For example, Walmart is now requiring suppliers of leafy greens to implement a farm-to-store tracking system based on blockchain. Food manufacturers such as Unilever and Nestlé are also using blockchain to trace food contamination.

“As grocers are being held to higher standards of visibility and traceability they will lead the way with the development of blockchain, but we expect it will extend to all areas of retail,” Ms. Joliet said. “Similar to how the financial services industry has used blockchain, grocers will evolve best practices as they apply blockchain capabilities to their ecosystem. Grocers also have the opportunity to be part of the advancement of blockchain as they develop new use cases for important causes for health, safety and sustainability.”

Digital failure?

By 2021, only one-quarter of midsize and large organizations will successfully target new ways of working in 80% of their initiatives, according to Gartner, Inc. New ways of working include distributed decision making, virtual and remote work, and redesigned physical workspaces.

“Digital workplace initiatives cannot be treated exclusively as an IT initiative,” said Carol Rozwell, distinguished research vice president at Gartner. “When initiatives are executed as a series of technology rollouts, employee engagement and addressing the associated cultural change are left behind. Digital workplace success is impossible without such.”

Emerging Change Leadership

A new approach for coping with the shifting demands of digital business is emerging for digital workplace leaders — change leadership.

“Digital workplace leaders must realize that their role as the orchestrator of change is fundamentally moving away from previously ingrained leadership practices that view employees as a group resistant to change rather than involving them in co-creating the path forward,” said Ms. Rozwell.

Digital Workplace A-Team

As digital workplace leaders shift their thoughts and actions toward people-oriented designs, they can inspire and engage a cross-disciplinary “A Team” to help strategize new ways of working. This “A Team”, drawn from IT, facilities management, human resources and business stakeholders, envisions how new technologies, processes and work styles will enhance the overall employee experience and enable employees to perform mission-critical work more effectively. In the end, organizations that take time to invest in the employee experience will net a 10-percentage-point improvement in employee engagement scores.

Role of the Business Unit

Successful digital workplace programs are less about technology and more about understanding what affects the employee experience and making necessary changes to the work environment.

“The business unit leader is the champion of a new way of working in the digital workplace. This is the person who identifies the desired business outcomes, develops the business case and establishes the measures by which success is determined. Without engaging business unit leaders, it will be impossible to successfully grapple with the scope of changes needed,” said Ms. Rozwell.

In Europe we are witnessing a shift in technology spending from IT to the line of business (LOB). In a new update to the Worldwide Semiannual IT Spending Guide: Line of Business, International Data Corporation (IDC) forecasts that European technology spending by LOB decision makers will steadily grow and will increase faster than spending funded by IT organizations through 2022.

European companies are forecast to spend $399 billion on IT (hardware, software, and services) in 2019. More than half of that spending (58.9%) will come from the IT budget, while the remainder (41.1%) will come from the budgets of technology buyers outside of IT. Nonetheless, LOB technology spending will grow at a faster rate than IT spending in the years ahead. The compound annual growth rate (CAGR) for LOB spending over the 2017–2022 forecast period is expected to be 5.9%, compared with a 2.9% CAGR for IT spending.
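The shares and growth rates above translate into concrete amounts with simple arithmetic. This Python sketch applies the quoted 58.9%/41.1% split to the $399 billion total and compounds each CAGR over the five years from 2017 to 2022. It assumes constant annual growth and is only an illustration, not IDC’s forecasting model; the helper name `growth_multiplier` is hypothetical.

```python
# Figures quoted by IDC for European IT spending (2019 forecast).
total_2019_bn = 399.0
it_share, lob_share = 0.589, 0.411

it_bn = total_2019_bn * it_share    # spending funded by IT budgets
lob_bn = total_2019_bn * lob_share  # spending funded by line-of-business budgets


def growth_multiplier(cagr: float, years: int) -> float:
    """Cumulative growth factor implied by a constant annual rate."""
    return (1 + cagr) ** years


# 2017 -> 2022 spans five compounding years (assumption: constant rates).
lob_mult = growth_multiplier(0.059, 5)
it_mult = growth_multiplier(0.029, 5)

print(f"IT budgets:  ${it_bn:.0f}bn")   # IT budgets:  $235bn
print(f"LOB budgets: ${lob_bn:.0f}bn")  # LOB budgets: $164bn
print(f"LOB x{lob_mult:.2f} vs IT x{it_mult:.2f} over 2017-2022")  # LOB x1.33 vs IT x1.15
```

The gap between the two multipliers shows why a 3-point difference in CAGR steadily shifts the balance of spending towards the line of business.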

In 2019, banking, discrete manufacturing, and process manufacturing will have the largest spend coming from LOBs. Retail, professional services, and discrete manufacturing will see the fastest growth in LOB spending compared with 2018.

"In Europe, business managers are raising their voice in the IT decision-making process," said Andrea Minonne, senior research analyst at IDC Customer Insights & Analysis. "This trend is revolutionizing and disrupting how companies make technology investments, with LOBs more often claiming control over IT budgets. The consumerization of applications, especially those to access content and improve collaboration, together with the more mainstream use of cloud solutions, the uptake of software as a service, and the BYOD area, are driving LOBs to make technology investments independently. This results in a greater tendency to skip IT department approval, which can sometimes take a long time and delay workloads."

Consumer technology spending to reach $1.3 trillion

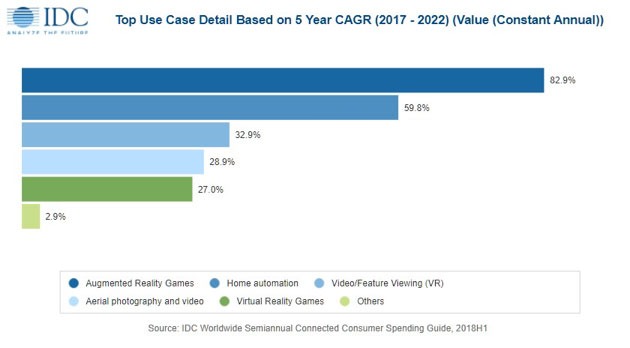

Consumer spending on technology is forecast to reach $1.32 trillion in 2019, an increase of 3.5% over 2018. According to the inaugural Worldwide Semiannual Connected Consumer Spending Guide from International Data Corporation (IDC), consumer purchases of traditional and emerging technologies will remain strong over the 2018-2022 forecast period, reaching $1.43 trillion in 2022 with a five-year compound annual growth rate (CAGR) of 3.0%.

"The new Connected Consumer Spending Guide leverages IDC's long history of capturing consumer device shipments, combined with valuable insights from regular consumer surveys and channel discussions, to tell a comprehensive story about consumer spending," said Tom Mainelli, IDC's group vice president for Devices and Consumer Research. "The Connected Consumer Spending Guide team has built out an initial set of consumer-focused use cases designed to deliver insights about spending across a wide range of device types, from smartphones to tablets, PCs to drones, and smart speakers to wearables. Over time, the team will continue to develop an ever-widening array of use cases, adding additional data about software and services, and eventually demographic-focused insights."

Traditional technologies – personal computing devices, mobile phones, and mobile telecom services – will account for more than 96% of all consumer spending in 2019. Mobile telecom services will represent more than half of this amount throughout the forecast, followed by mobile phones. Spending growth for traditional technologies will be relatively slow with a CAGR of 2.4% over the forecast period.

In contrast, emerging technologies, including AR/VR headsets, drones, robotic systems, smart home devices, and wearables, will deliver strong growth with a five-year CAGR of 20.6%. By 2022, IDC expects more than 5% of all consumer spending will be for these emerging technologies. Smart home devices and smart wearables will account for nearly 70% of the overall spending on emerging technologies in 2019. Smart home devices will also be the fastest growing technology category with a five-year CAGR of 38.0%.

"Connected technologies are transforming consumers' activities and habits, becoming more and more integrated into their daily lives. This is fueling the consumer's unquenchable thirst for content and immersive experiences delivered anytime, anywhere, via multiple formats and across a myriad of channels. As a result, we see the balance of power shifting in consumer-facing industries. Whereas once upon a time, the enterprise called the shots, more and more consumer demands and expectations are propelling innovation," said Jessica Goepfert, program vice president, Customer Insights & Analysis at IDC. "What's the next wave of consumer transformation? Even more widely adopted and mature activities such as listening to music and shopping are being disrupted by new technologies such as smart speakers. And disruption presents opportunity."

Communication will be the largest category of use cases for consumer technology, representing nearly half of all spending in 2019 and throughout the forecast. Most of this will go toward traditional voice and messaging services, joined by social networking and video chat as notable use cases within this category. Entertainment will be the second largest category, accounting for nearly a quarter of all spending as consumers listen to music, edit and share photos and videos, download and play online games, and watch TV, videos, and movies. The use cases that will see the fastest spending growth over the forecast period are augmented reality games (82.9% CAGR) and home automation (59.8% CAGR).

"There's an expectation among today's consumers for a seamless consumer experience. The connected consumer is no longer a passive one; the connected business buyer is in control and it's essential for technology providers to understand this if they want to continue to grow and gain market share in this digital age. As technology becomes more affordable and accessible, the connected consumer is expected to spend more as they leverage these platforms for entertainment, education, social networking, commerce, and other purposes. IDC's Worldwide Semiannual Connected Consumer Spending Guide presents a comprehensive view of the consumer ecosystem and serves as a framework for how IDC organizes its consumer research and forecasts," said Stacey Soohoo, research manager with IDC's Customer Insights & Analysis group.

Connected vehicle shipments to reach 76 million by 2023

In its inaugural connected vehicle forecast, International Data Corporation (IDC) estimates that worldwide shipments of connected vehicles, which include vehicles with embedded and aftermarket cellular connectivity, will reach 51.1 million units in 2019, an increase of 45.4% over 2018. By 2023, IDC expects worldwide shipments to reach 76.3 million units with a five-year compound annual growth rate of 16.8%.
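As a quick consistency check on these figures, the Python sketch below backs out the implied 2018 base from the 2019 growth rate and recomputes the CAGR. It assumes that the five-year period runs from 2018 to 2023 and that the 45.4% growth is measured against 2018 shipments; neither assumption is stated explicitly by IDC, and the function name `cagr` is hypothetical.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1


units_2019 = 51.1    # million units (IDC forecast)
growth_2019 = 0.454  # +45.4% over 2018
units_2023 = 76.3    # million units (IDC forecast)

# Assumption: back out the implied 2018 base from the 2019 growth figure.
units_2018 = units_2019 / (1 + growth_2019)

rate = cagr(units_2018, units_2023, years=5)  # 2018 -> 2023
print(f"Implied 2018 shipments: {units_2018:.1f}M")  # Implied 2018 shipments: 35.1M
print(f"Implied 2018-2023 CAGR: {rate:.1%}")         # Implied 2018-2023 CAGR: 16.8%
```

Under these assumptions the result agrees with IDC’s quoted 16.8% to rounding, which suggests the published figures are internally consistent.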

IDC defines a connected vehicle as a light-duty vehicle or truck that contains a dedicated cellular wide area network connection that interfaces with the vehicle’s data (e.g., via gateways, software, or sensors). Recent model-year vehicles are shipped with an embedded, factory-installed connected vehicle system. Older vehicles typically connect via an aftermarket device: a self-contained hardware and software unit installed in the vehicle's OBD-II port.

The commitment of mass market automotive brands to make embedded cellular standard equipment in key markets was a major development in the arrival of connected vehicles. By 2023, IDC predicts that nearly 70% of worldwide new light-duty vehicles and trucks will be shipped with embedded connectivity. Likewise, IDC expects that nearly 90% of new vehicles in the United States will be shipped with embedded connectivity by 2023.

The sustained growth of the connected vehicles market is being driven by a multitude of factors, including consumer demand for a more immersive vehicle experience, the ability of auto manufacturers to better utilize connected vehicles for cost avoidance and revenue generation, evolving government regulations, and mobile network operator investments in new connections and services.

"The automotive ecosystem is positioning the vehicle as the next, emerging digital platform," said Matt Arcaro, research manager, Next-Generation Automotive Research at IDC. "Deploying embedded or aftermarket connectivity at scale will be key to unlocking its potential."

Telecoms market prepares for 5G impact

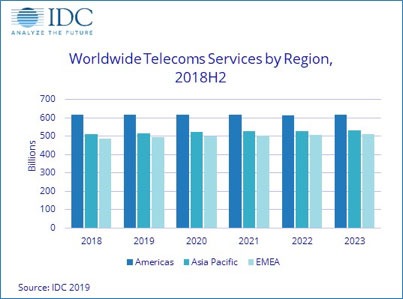

Worldwide spending on telecom services and pay TV services totaled $1.6 trillion in 2018, reflecting an increase of 0.8% year on year, according to the International Data Corporation (IDC) Worldwide Telecom Services Database. IDC expects worldwide spending on telecom and pay TV services to reach nearly $1.7 trillion in 2023.

Mobile services will continue to dominate the industry in terms of spending, with mobile data still expanding, driven by booming smartphone markets. At the same time, spending on mobile voice is slowly declining due to fierce competition and market maturity. The mobile segment, which represented 53.1% of the total market in 2018, is expected to post a compound annual growth rate (CAGR) of 1.4% over the 2019-2023 period, driven by growth in mobile data usage and the Internet of Things (IoT), which are offsetting declines in spending on mobile voice and messaging services.

Fixed data, especially broadband internet access, is still expanding in most geographies, supported by the increasing importance of content services for consumers and IP-based services for businesses. Fixed data service spending represented 20.5% of the total market in 2018 with an expected CAGR of 2.6% through 2023, driven by the need for higher-bandwidth services. Spending on fixed voice services will record a negative CAGR of 5.3% over the forecast period and will represent only 8.5% of the total market through 2023. Rapidly declining TDM voice revenues are not being offset by the increase in IP voice.

The pay TV market, which consists of cable, satellite, IP, and digital terrestrial TV services, will remain flat over the forecast period; however, these services are an increasingly important part of the multi-play offerings of telecom providers across the world. Spending on multi-play services increased by 7.1% in 2018 and is expected to post a CAGR of 3.7% through the end of 2023.

On a geographic basis, the Americas was the largest services market, with revenues of $616 billion in 2018, driven by the large North American sector. Asia/Pacific was the second largest region, followed by Europe, the Middle East, and Africa (EMEA). The market with the fastest year-on-year growth in 2018 was EMEA (driven mainly by its emerging markets), followed by Asia/Pacific.

Global Regional Services 2018 Revenue and Year-on-Year Growth

| Global Region | 2018 Revenue ($B) | CAGR 2018-2023 (%) |
|---|---|---|
| Americas | 616 | 0.0 |
| Asia/Pacific | 512 | 0.8 |
| EMEA | 487 | 0.9 |
| Grand Total | 1,615 | 0.5 |

Source: IDC Worldwide Semiannual Services Tracker 2H 2018
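The regional figures in the table above can be cross-checked with a few lines of Python: the three regions sum to the grand total, and a revenue-weighted average of their growth rates lands close to the overall 0.5%. The weighting is an approximation assumed here for illustration only; IDC’s aggregate figure comes from its own underlying data.

```python
# 2018 revenue ($B) and 2018-2023 CAGR (%) from the IDC table above.
regions = {
    "Americas": (616, 0.0),
    "Asia/Pacific": (512, 0.8),
    "EMEA": (487, 0.9),
}

total = sum(rev for rev, _ in regions.values())
print(f"Total: ${total:,}B")  # Total: $1,615B

# Each region's share of 2018 revenue.
for name, (rev, _) in regions.items():
    print(f"{name}: {rev / total:.1%} of 2018 revenue")

# Revenue-weighted average of regional growth rates (an approximation
# of the aggregate CAGR, assumed for illustration).
weighted = sum(rev * g for rev, g in regions.values()) / total
print(f"Weighted growth: {weighted:.1f}%")  # Weighted growth: 0.5%
```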

The calm stability that currently marks the telecom services market will not last for long. The advent of 5G is the focus of massive media attention as it represents new architectures, speeds, and services that will remake the mobile landscape.

"5G will unlock new and existing opportunities for most operators as early use cases such as enhanced mobile broadband and fixed wireless access will be gaining traction rapidly in most geographies, while massive machine-type communications and ultra-reliable low-latency communications will debut in more developed countries," says Kresimir Alic, research director with IDC's Worldwide Telecom Services. "Additionally, the worldwide transition to all-IP and new-generation access (NGA) broadband will help offset the fixed and mobile voice decline. We are witnessing a global digital transformation revolution and carrier service providers (CSPs) will play a crucial role in it by innovating and educating and by supporting the massive roll-out of software and services."

The DCS Awards, organised by the publisher of Digitalisation World, Angel Business Communications, go from strength to strength. This year’s event, sponsored by Kohler Uninterruptible Power, was MC’d by Paul Trowbridge, the MD of Angel’s sister event management business, EVITO. Following the excellent dinner, there was a short industry-focused speech from the Data Centre Alliance’s Steve Hone, and then the first half of the night’s entertainment – all kindly sponsored by Starline – with Zoe Lyons’s brilliant observational comedy. The DCS Awards were then handed out before an after-hours casino, with music from Ruby and the Rhythms. Here we focus on the winners.

Data Centre Energy Efficiency Project of the Year:

Sponsored by: Kohler Uninterruptible Power

WINNER: Aqua with 4D (Gatwick Facility)