The following observations may not be earth-shattering, but, to your correspondent, at least, it seems worthy of note that the topic of collaboration is cropping up again and again right across business at the present time. We have the internal collaboration between various, previously separate, business departments, who are now coming together to develop new ideas, products and services in a much more flexible, agile and quicker fashion. We have the collaboration between different vendors to bring to the market joint solutions. And we have the collaboration between vendors, the Channel, and end users, with the objective of ensuring that customers really do get the best possible solutions and services from their suppliers, whoever and wherever they may be.

The trigger for this observation was a recent trip to the US with Schneider Electric – with the focus very much being on The Edge. One particular presentation introduced edge solutions that couple APC by Schneider Electric physical infrastructure with Cisco’s HyperFlex Edge hyperconverged solutions, designed for quick and efficient deployment in edge environments.

It was a joint presentation given by individuals from both Schneider and Cisco. The two companies, building on their history of partnering, have worked together to develop the edge-focused hyperconverged solutions, with reference designs for HyperFlex deployments that have been pre-engineered to join APC and Cisco equipment for solutions that are pre-integrated, remotely monitorable and physically secure. And Schneider also works with other major IT vendors, including HP Enterprise and Lenovo.

Where once data centre and IT vendors saw fit to want to own ‘everything’, it seems that there is a growing realisation that plans for world domination can never take into account the customer’s desire for best-of-breed technology. No one organisation can be the best at everything it does. By recognising this, and working with partners who are the best at what they do, it’s possible to develop and/or be a part of a wider ecosystem that does offer customers the very best solutions across a range of IT and data centre disciplines. Furthermore, where once multi-vendor solutions were the prelude to an unholy mess when it came to after-sales service and support (it’s not our fault, it’s theirs, etc.), individual vendors and, increasingly, the channel, which may well supply the heterogeneous technology solutions, are happy to stand behind their offerings, rather than indulge in finger pointing.

What might be termed end-to-end collaboration – vendors, channel, end users – is developing because of the emergence of these multi-vendor solutions. The channel is supplying them to the customer, and everyone needs to be able to trust everyone else in the supply chain – there’s a lot at stake if this trust is broken. Channel companies are, if you like, putting their necks on the line by telling their customers that a particular vendor collaboration solution is much better than that provided by any single vendor, and they need to believe that they will receive the right level of support from the vendors behind the solution. End users need to trust both their supplier (a channel company) and the vendors – mainly in terms of receiving seamless ongoing support and maintenance. And the vendors need to trust that the Channel will do a good job of representing their joint solutions – providing the right level of professionalism and support to customers. Vendors probably also need to trust that customers do not have totally unrealistic expectations as to what the collaboration solutions will deliver.

However, if everyone talks, and listens, to everyone else in the supply chain, then there’s a very real chance that all parties will benefit from this multi-faceted collaboration.

Finally, internal collaboration is already taking off, thanks in the main to the development of DevOps and DevSecOps. Serial or over-the-wall development – where Department A hands on its work to Department B, who hands it to Department C, before it goes back down and up and down the line endless times before a finished product or solution emerges – is a very old-fashioned, clunky and time-consuming way to develop new ideas. Much better that all interested parties – design, IT, marketing, sales, even finance – sit down together, agree objectives and plans and then all stay interested and provide near-real-time feedback as the project progresses. The result? New applications can be developed in, if not days, weeks, rather than months or even years.

Key to the success of all these different types of collaboration is an openness to change, an openness to share and an openness to trust. And key to this is accepting that, no matter how good you think your company is, it is almost certainly going to be even better if it works with partners who can contribute a level of knowledge and expertise about certain topics that is just not available inside your organisation. Sharing information and trusting the people with whom you share it might seem like a big step to take, but if everyone is trying to grow the market within which your organisation works, does it matter? Success for you and your partners means that the market will grow and you’ll be in a great position to take advantage of this growth.

Which brings me back to where I started. Schneider Electric has a whole department devoted to developing new ideas, helpful tools and white papers that are publicly available. For example, anyone planning a data centre build or refresh can access and use Schneider’s data centre optimisation tool, whereby a whole host of parameters can be input and amended until a final, optimised design is arrived at, or the exercise makes it clear that going the colo route, rather than staying on-premises, makes sense. Why make such information freely available to competitors? Well, they are also in the business of selling products and services into the data centre space and the better they do it, the more likely it is that the overall market will grow, and Schneider itself will benefit from such growth. In other words, if you’re confident that you are doing something well, why be afraid of telling the market?

Yes, intellectual property and technology developments might need to be kept under wraps, but the more open and collaborative any industry is, the faster it will grow and the faster the suppliers will grow with it.

For many, collaboration is a word inextricably linked with some of the murkier goings-on of various 20th century conflicts. In the digital world, collaboration has nothing but positive connotations.

PA Consulting launches global survey of 500 senior business leaders.

Organisational agility is a major factor in driving financial performance, according to new research from PA Consulting, the global innovation and transformation consultancy.

PA’s research found that organisational agility is the single most important factor in responding to rapid technology, customer and societal change. The top 10 per cent of businesses by financial performance are almost 30 per cent more likely to display characteristics of agility. Organisational agility enables large-scale companies to thrive, delivering higher all-round performance, with positive benefits for customers, shareholders and employees.

However, real and lasting organisational agility remains elusive for many. Three in five business leaders know they need to change yet are struggling to act. PA’s research found that:

Conrad Thompson, a business transformation expert at PA Consulting, says: “Businesses around the world face unprecedented disruption but also unprecedented opportunities. As radical change transforms the world we live in, organisations must evolve at pace. One in six of the companies we surveyed acknowledged that unless they evolve, they risk failure within five years. Yet we see organisational agility efforts transforming well-established financial institutions, household names, and industry stalwarts, establishing the conditions that have made them fit for the future. This research, and our experience, proves that embracing organisational agility is the most effective way to get ahead of the competition, and ensure your organisation thrives, today and tomorrow.”

What it takes to achieve organisational agility

PA has identified five attributes key to organisational agility and common to the high performing companies we surveyed:

Sam Bunting, an organisational agility expert at PA Consulting says: “At PA we believe in the power of ingenuity to build a positive human future in a technology-driven world. As a business leader right now, we’ve never been afforded a better opportunity to adapt and transform – to truly understand our customers’ motivations, to harness the power of technology to create ingenious products and services and to find creative and effective ways to engage and empower our people. We’ve seen first-hand how organisations can beat their numbers and deliver higher all-round performance as a result of taking these five perfectly achievable steps.”

Eight out of ten executives admit they do not review internal processes before setting transformation goals.

New research released by Celonis has found that despite significant pressure to embark on transformation initiatives that enable greater productivity, improve customer service and reduce costs, most businesses remain unclear on what they should focus on. The results showed that almost half of C-suite executives (45%) admit they do not know where to start when developing their transformation strategy. The Celonis study explores how businesses are approaching transformation programmes, as well as the disconnect between leadership and those on the frontline.

The survey of over 1,000 C-suite executives and over 1,000 business analysts found that many organisations have wasted significant resources on business transformation initiatives that have been poorly planned. In fact, almost half (44%) of senior leaders believe that their business transformation has been a waste of time. With a quarter (25%) of businesses having spent over £500,000 on transformation strategies in the last 12 months, many organisations run the risk of incurring huge costs with no return.

Top-down strategies are stunting results

It’s no wonder that transformation initiatives aren’t hitting the mark, because business leaders are not using the expertise of those closest to running the organisation’s operations. The results highlighted a disconnect between those developing transformation strategies and those carrying them out:

Businesses are skipping square one

In addition, the study demonstrated that most organisations are struggling with transformation initiatives because they are diving into execution before understanding what to change first. In fact, more than eight out of 10 (82%) C-suite executives admit they do not review their internal business processes to understand what needs to be prioritised when setting initial goals and KPIs for a transformation programme. Perhaps this is because they don’t know how to gain this visibility; almost two-thirds (65%) of leadership state they would feel more confident deploying their transformation strategy if they had a better picture of how their business is being run.

And this trend has trickled down to the entire organisation. The research shows that almost two-fifths (39%) of analysts are not basing their work on internal processes when executing the transformation strategy given to them by senior personnel. Ultimately, this highlights that business leaders are investing in transformation initiatives because they think they should and not because they have identified a specific problem.

Look too far ahead and stumble in the present

Despite acknowledgement that an understanding of the here and now would be beneficial to inform transformation strategy, businesses are still jumping straight into tactics. For example, almost three quarters of C-suite executives cite AI/machine learning (73%) and automation (73%) as areas they want to maintain or increase investment in. In contrast, less than a third (33%) of senior leaders state that they plan to invest more in getting better visibility of their processes. But for those organisations that want to increase their investment in AI and innovation, understanding their current processes first could help them to work out which technologies would be most beneficial to their business.

“Transformation strategies will inevitably be part of every organisation’s operations, because no business can avoid adapting to the latest industry and technological trends,” commented Alexander Rinke, co-founder and co-CEO, Celonis. “However, they should be founded in concrete insights derived from processes that are actually happening within a company. Our research shows that too many businesses are rushing into costly initiatives that they do not necessarily even need to embark on. They are falling at the first hurdle; having a better understanding of inefficiencies in underlying business processes can help organisations invest wisely to provide the best possible service for their customers.”

“From early stages in digital transformation to post transformation, organisations must understand how internal processes can shape their business strategy,” added Jeremy Cox, Principal Analyst, Ovum. “Quantifying the business impact of existing or newly adapted processes, can help optimise the environment for customers. Ovum's annual global ICT Enterprise Insights research based on around 5000 enterprises reveals a consistent picture of struggle, as industry by industry around 80% have made little progress. While there are many reasons for this difficulty, a forensic examination on how work gets done, aided by intelligent process mining technology, would help quantify the consequences and drive consensus on what must change.”

Ethical and responsible AI development is a top concern for IT Decision Makers (ITDMs), according to new research from SnapLogic, which found that 94% of ITDMs across the US and UK believe more attention needs to be paid to corporate responsibility and ethics in AI development. A further 87% of ITDMs believe AI development should be regulated to ensure it serves the best interests of business, governments, and citizens alike.

The new research, conducted by Vanson Bourne on behalf of SnapLogic, studied the views and perspectives of ITDMs across industries, asking key questions such as: who bears primary responsibility to ensure AI is developed ethically and responsibly, will global expert consortiums impact the future development of AI, and should AI be regulated and if so by whom?

Who Bears Responsibility?

When asked where the ultimate responsibility lies to ensure AI systems are developed ethically and responsibly, more than half (53%) of ITDMs point to the organisations developing the AI systems, regardless of whether that organisation is a commercial or academic entity. However, 17% place responsibility with the specific individuals working on AI projects, with respondents in the US more than twice as likely as those in the UK to assign responsibility to individual workers (21% vs. 9%).

A similar number (16%) see an independent global consortium, comprised of representatives from government, academia, research institutions, and businesses, as the only way to establish fair rules and protocol to ensure the ethical and responsible development of AI. A further 11% believe responsibility should fall to the governments in the countries where the AI systems are developed.

Independent Guidance and Expertise

Some independent regional initiatives providing AI support, guidance, and oversight are already taking shape, with the European Commission High-Level Expert Group on Artificial Intelligence being one such example. For ITDMs, expert groups like this are seen as a positive step in addressing the ethical issues around AI. Half of ITDMs (50%) believe organisations developing AI will take guidance and adhere to recommendations from expert groups like this as they develop their AI systems. Additionally, 55% believe these groups will foster better collaboration between organisations developing AI.

However, Brits are more sceptical of the impact these groups will have. 15% of ITDMs in the UK stated that they expect organisations will continue to push the limits on AI development without regard for the guidance expert groups provide, compared with 9% of their American counterparts. Furthermore, 5% of UK ITDMs indicated that guidance or advice from oversight groups would be effectively useless to drive ethical AI development unless it becomes enforceable by law.

A Call for Regulation

Many believe that ensuring ethical and responsible AI development will require regulation. In fact, 87% of ITDMs believe AI should be regulated, with 32% noting that this should come from a combination of government and industry, while 25% believe regulation should be the responsibility of an independent industry consortium.

However, some industries are more open to regulation than others. Almost a fifth (18%) of ITDMs in manufacturing are against the regulation of AI, followed by 13% of those in the Technology sector, and 13% of those in the Retail, Distribution and Transport sector. In giving reasons for the rejection of regulation, respondents were nearly evenly split between the belief that regulation would slow down AI innovation, and that AI development should be at the discretion of the organisations creating AI programs.

Championing AI Innovation, Responsibly

Gaurav Dhillon, CEO at SnapLogic, commented: “AI is the future, and it’s already having a significant impact on business and society. However, as with many fast-moving developments of this magnitude, there is the potential for it to be appropriated for immoral, malicious, or simply unintended purposes. We should all want AI innovation to flourish, but we must manage the potential risks and do our part to ensure AI advances in a responsible way.”

Dhillon continued: “Data quality, security and privacy concerns are real, and the regulation debate will continue. But AI runs on data — it requires continuous, ready access to large volumes of data that flows freely between disparate systems to effectively train and execute the AI system. Regulation has its merits and may well be needed, but it should be implemented thoughtfully such that data access and information flow are retained. Absent that, AI systems will be working from incomplete or erroneous data, thwarting the advancement of future AI innovation.”

Egress has published the results of its first Insider Data Breach survey, examining the root causes of employee-driven data breaches, their frequency and impact. The research highlights a fundamental gulf between IT leaders and employees over data security and ownership that is undermining attempts to stem the growing tide of insider breach incidents.

The research was carried out by independent research organisation Opinion Matters and incorporated the views of over 250 U.S. and U.K.-based IT leaders (CIOs, CTOs, CISOs and IT directors), and over 2,000 U.S. and U.K.-based employees. The survey also explored how employees and executives differ in their views of what constitutes a data breach and what is acceptable behaviour when sharing data.

Key research findings include:

- 79% of IT leaders believe that employees have put company data at risk accidentally in the last 12 months. 61% believe they have done so maliciously.

- 30% of IT leaders believe that data is being leaked to harm the organisation. 28% believe that employees leak data for financial gain.

- 92% of employees say they haven’t accidentally broken company data sharing policy in the last 12 months; 91% say they haven’t done so intentionally.

- 60% of IT leaders believe that they will suffer an accidental insider breach in the next 12 months; 46% believe they will suffer a malicious insider breach.

- 23% of employees who intentionally shared company data took it with them to a new job.

- 29% of employees believe they have ownership of the data they have worked on.

- 55% of employees that intentionally shared data against company rules said their organisation didn’t provide them with the tools needed to share sensitive information securely.

The survey results highlight a perception gap between IT leaders and employees over the likelihood of insider breaches. This is a major challenge for businesses: insider data breaches are viewed as frequent and damaging occurrences, of concern to 95% of IT leaders, yet the vectors for those breaches – employees – are either unaware of, or unwilling to admit, their responsibility.

Carelessness and a lack of awareness are root causes of insider breaches

Asked to identify what they believe to be the leading causes of data breaches, IT leaders were most likely to say that employee carelessness through rushing and making mistakes was the reason (60%). A general lack of awareness was the second-most cited reason (44%), while 36% indicated that breaches were caused by a lack of training on the company’s security tools.

However, 30% believe that data is being leaked to harm the organisation and 28% say that employees leak data for financial gain.

From the employee perspective, of those who had accidentally shared data, almost half (48%) said they had been rushing, 30% blamed a high-pressure working environment and 29% said it happened because they were tired.

The most frequently cited employee error was accidentally sending data to the wrong person (45%), while 27% had been caught out by phishing emails. Concerningly, over one-third of employees (35%) were simply unaware that information should not be shared, proving that IT leaders are right to blame a lack of awareness and pointing to an urgent need for employee education around responsibilities for data protection.

Tony Pepper, CEO and Co-founder, Egress, comments: “The results of the survey emphasise a growing disconnect between IT leaders and staff on data security, which ultimately puts everyone at risk. While IT leaders seem to expect employees to put data at risk – they’re not providing the tools and training required to stop the data breach from happening. Technology needs to be part of the solution. By implementing security solutions that are easy to use and work within the daily flow of how data is shared, combined with advanced AI that prevents data from being leaked, IT leaders can move from minimising data breaches to stopping them from happening in the first place.”

Confusion over data ownership and ethics

The Egress Insider Data Breach survey found confusion among employees over data ownership. 29% believed that the data they work on belongs to them. Moreover, 60% of employee respondents didn’t recognise that the organisation is the exclusive owner of company data, instead ascribing ownership to departments or individuals. This was underlined by the fact that, of those who admitted to sharing data intentionally, one in five (20%) said they did so because they felt it was theirs to share.

23% of employees who shared data intentionally did so when they took it with them to a new job, while 13% did so because they were upset with their organisation. However, the majority (55%) said they shared data insecurely because they hadn’t been given the tools necessary to share it safely.

The survey also found that attitudes towards data ownership vary between generations, with younger employees less aware of their responsibilities to protect company data.

Tony Pepper adds: “As the quantity of unstructured data and variety of ways to share it continue to grow exponentially, the number of insider breaches will keep rising unless the gulf between IT leaders and employee perceptions of data protection is closed. Employees don’t understand what constitutes acceptable behaviour around data sharing and are not confident that they have the tools to work effectively with sensitive information. The results of this research show that reducing the risk of insider breaches requires a multi-faceted approach combining user education, policies and technology to support users to work safely and responsibly with company data.”

86% of IT executives surveyed believe human work, AI systems, and robotic automation must be well-integrated by 2020, but only 12% said their companies do this really well today.

Appian has published the third set of findings from its Future of Work international survey, conducted by IDG and LTM Research. Part three of the series looks specifically at “intelligent automation,” defined as the integration of emerging cognitive and robotic computing technologies into human-driven business processes and customer interactions. These technologies include artificial intelligence (AI), machine learning (ML), and robotic process automation (RPA). The data shows an enormous disconnect between the expected business benefits of intelligent automation and a typical organization’s ability to realize those benefits.

Business Urgency

Less than half (46%) of organizations have deployed intelligent automation. This is despite the fact that a large majority of IT leaders agree that effective intelligent automation holds enormous potential for their businesses:

IT leaders in enterprise organizations across North America and Europe feel tremendous urgency to take advantage of intelligent automation. However, the vast majority admit their companies can’t currently do it: 86% of executives interviewed indicated that human work, AI systems, and robotic automation “must be well-integrated by 2020,” yet only 12% of executives said their companies “do this really well today.”

Challenges: Complexity & Lack of Strategy

While there are deployments of individual emerging automation technologies, a lack of strategy and clear alignment to business goals is resulting in siloed deployments and overwhelmed internal application development teams. Less than half of surveyed companies have deployed any form of intelligent automation. Fully half of those companies boast IT staffs in excess of 20,000 employees. Specific pain-points include:

Survey of 500 IT and business decision makers examines perspectives on systematic automation of applications management.

A survey of business leaders by DXC Technology and Vanson Bourne, an independent research firm, reveals that 86 percent of IT and business decision makers believe that being able to predict and prevent future challenges with applications could be a “game changer” for their organizations. This could be achieved via automation, artificial intelligence (AI) and lean processes.

Respondents said investing in automation of applications management would have numerous benefits, including improved customer experience (49 percent), higher customer retention (46 percent) and greater customer satisfaction (46 percent). And 78 percent of respondents agree that without systematic automation, achieving zero application downtime would be a “distant dream.”

The findings provide a view of the attitudes, experiences and expectations of 500 IT and business decision makers on the impact of application performance in the digital economy. The survey was completed in four markets — the United Kingdom, Germany, Japan and the United States — and respondents represented sectors including banking, insurance, healthcare, and travel and transportation.

Despite the digital transformation opportunities, only 36 percent of senior managers surveyed said their peers are completely accepting of new technologies such as AI and automation to improve applications quality. Additionally, respondents said that the top barriers to investing in automation were security risks (44 percent) and legacy technology hurdles (36 percent).

“Because legacy technologies can require so much of an organization’s resources, it is essential for organizations to establish a plan to simplify their existing IT structures and free up funds for automation and other digital technologies,” said Rick Sullivan, vice president of digital applications and testing, Application Services, DXC Technology. “We are committed to helping our clients optimize applications performance through AI, lean processes and automation to enable great customer experiences today and future growth opportunities.”

While 82 percent of respondents agree that companywide strategies to invest in new AI-driven technologies to transform applications management would provide significant competitive advantages for their organizations, only 29 percent said their organizations actually have such strategies.

The survey also revealed several key findings for specific industries:

A new report by the Capgemini Research Institute has found that financial services firms are lagging behind in digital transformation compared to other industry sectors. Financial services firms report falling confidence in their digital capabilities, and a shortage of the skills, leadership and collective vision needed to shape the digital future.

The report, part of Capgemini’s Global Digital Mastery Series, examines sentiment on digital and leadership capabilities among bank and insurance executives, comparing it to an equivalent study from 2012. Over 360 executives were surveyed from 213 companies whose combined 2017 revenue represents approximately $1.67 trillion.

Key findings include:

Confidence in digital and leadership capabilities has sunk since 2012

Compared to 2012, a smaller proportion of financial services executives said their organizations had the necessary digital capabilities to succeed – with the confident few falling from 41 percent to 37 percent. Breaking this down, although more executives felt they had the required digital capabilities in customer experience (40 percent compared to 35 percent), confidence in operations saw a significant drop. Only 33 percent of executives said they had the necessary operations capabilities, compared to 46 percent from six years ago.

A shortfall in leadership was also cited, with only 41 percent of executives saying their organizations have the necessary leadership capabilities, down from 51 percent in 2012. In some specific areas, confidence in leadership fell significantly, including governance (45 percent to 32 percent), engagement (54 percent to 33 percent) and IT-business relationships (63 percent to 35 percent).

Digital Mastery proves to be elusive

In Capgemini’s digital mastery framework presented in the report, just 31 percent of banks and 27 percent of insurers are deemed to be digital masters, while 50 percent and 56 percent respectively are classified as beginners.

Executives also criticized the lack of a compelling vision for digital transformation across their organizations. Only 34 percent of banking and 24 percent of insurance respondents agreed with the statement that ‘our digital transformation vision crosses internal organizational units’, with just 40 percent and 26 percent respectively saying that ‘there is a high-level roadmap for digital transformation’.

Banking transformation has taken center stage, while insurance places focus on automation

Although banks’ digital transformation journeys are well underway, the industry has reached a crossroads, says the report, as it attempts to meet the rising digital expectations of customers, manage cost pressures, and compete with technology upstarts. Fewer than half of banks (38 percent) say they have the necessary digital and leadership capabilities required for transformation. Insurance is catching up, with only 30 percent claiming to have the digital capabilities required and 28 percent the leadership capabilities necessary.

The banking sector does, however, outpace non-financial services sectors on capabilities such as customer experience, workforce enablement and technology and business alignment. Fifty-six percent of banking firms said they use analytics for more effective target marketing (compared with 34 percent of insurance firms and 44 percent in the non-financial services sector). More than half (53 percent) of banking organizations also said that upskilling and reskilling on digital skills is a top priority for them (32 percent for insurance and 44 percent for the non-financial services sector).

One area of advantage for insurers was operational automation, with 42 percent of executives saying they used robotic process automation, against 41 percent of bankers, and 34 percent reporting the use of artificial intelligence in operations (compared to 31 percent of bank executives).

More challenges are ahead

On the other hand, business model innovation, defining a clear vision and purpose, and culture and engagement are areas that are challenging for both banking and insurance. Only 33 percent of insurance and 39 percent of banking organizations have launched new businesses based on digital technologies (41 percent in non-financial services sector). While banking is in line with the non-financial services average, only around a third (34 percent) of banks had a digital vision that crossed organizational units. Insurance lags even further behind, with just around a quarter (24 percent) having an all-encompassing vision. On culture, too, only 33 percent of banking and 25 percent of insurance organizations thought their leaders were adopting new behaviors required for transformation, as compared with 37 percent in non-financial services organizations.

“This research shows that a reality check has taken place across the financial services industry, as incumbents now understand the true extent of the digital transformation challenge. In an environment of growing competition and consumer expectation, the view is very different from a few years ago, and it’s unsurprising that large organizations have become more realistic about their capabilities,” said Anirban Bose, Chief Executive Officer of Capgemini’s Financial Services and member of the Group Executive Board.

“At the same time, this is a wake-up call for banks and insurers to re-examine their business models. Tomorrow’s operating model is collaborative, innovative and agile. The digital masters we looked at are working with an ecosystem of third-party partners, developing and testing ideas more quickly under an MVP model, and nurturing a culture of bottom-up innovation and experimentation. The majority of financial services firms need to learn from the small pool of genuine innovators in their field,” Bose concluded.

Heightened investment in disruptive technologies and enterprise-class devices will empower the majority of front-line workers by 2023.

Zebra Technologies Corporation has published the results of its latest vision study on the Future of Field Operations. The study reveals mobile technology investment is a top priority for 36 percent of organizations and a growing priority for an additional 58 percent to keep up with rapidly evolving and increasing customer demand. The findings indicate investments will be made in disruptive technologies and enterprise mobile devices to enhance front-line worker productivity and customer satisfaction in field operations including fleet management, field services, proof of delivery and direct store delivery workflows.

“Driven by the acceleration of e-commerce along with customers’ heightened expectations and more focus within companies on differentiating service levels, the field operations industry is rapidly adapting the way it looks at its mobile technology investments,” said Jim Hilton, Director of Vertical Marketing Strategy, Manufacturing, Transportation & Logistics, Zebra Technologies. “Our study shows how growing challenges related to the on-demand economy drive organizations to adopt transformative, disruptive technologies such as augmented reality and intelligent labels to provide visibility and integrate business intelligence for a performance edge.”

KEY SURVEY FINDINGS

Equipping front-line workers with enterprise mobile devices remains a priority to stay competitive.

·The survey shows today only one-fifth of organizations have a majority of their field-based operations using enterprise mobile devices. This is estimated to reach 50 percent in five years.

·Respondents indicate most organizations intend to invest in handheld mobile computers, mobile printers and rugged tablets. From 2018 to 2023, usage of handheld mobile computers with built-in barcode scanners is forecast to grow by 45 percent, mobile printers by 53 percent and rugged tablets by 54 percent. The higher levels of inventory, shipment and asset accuracy provided by using these devices are expected to increase business revenues.

·A key driver of productivity, efficiency and cost-savings in field operations is ensuring ruggedized enterprise devices replace traditional consumer ones. Nearly 80 percent of respondents usually or always conduct a total cost of ownership (TCO) analysis of business devices prior to making a capital expenditure. Only 32 percent of respondents believe that consumer smartphones have better TCO than rugged devices.

Tertiary concerns and post-sale factors are important for organizations when evaluating front-line worker enterprise mobile devices.

·The survey reveals these TCO considerations when investing in new front-line enterprise technology: replacement (47 percent), initial device (44 percent), application development (44 percent) and programming/IT (40 percent).

·Almost 40 percent of respondents say device management and support costs are important as well as customer service (37 percent), device lifecycle cadence (36 percent) and repair costs (35 percent). Such factors increasingly influence the purchase cycle, showing that those who do not provide clear value or cannot control these costs will quickly be overtaken by those who do.

Emerging technologies and faster networks are disrupting field operations.

·The survey shows seven in ten organizations agree faster mobile networks will be a key driver for field operations investment to enable the use of disruptive technology.

·Significant industry game-changers will be droids and drones, with over a third of decision makers citing them as the biggest disruptors.

·The use of smart technologies such as sensors, RFID, and intelligent labels also plays a role in transforming the industry. More than a quarter of respondents continue to view augmented/virtual reality (29 percent), sensors (28 percent), RFID and intelligent labels (28 percent) as well as truck loading automation (28 percent) as disruptive factors.

KEY REGIONAL FINDINGS

·Asia Pacific: 44 percent of respondents consider that truck loading automation will be among the most disruptive technologies, compared with 28 percent globally.

·Europe, Middle East and Africa: 70 percent of respondents agree e-commerce is driving the need for faster field operations.

·Latin America: 83 percent agree that faster wireless networks (4G/5G) are driving greater investment in new field operations technologies, compared with 70 percent of the global sample.

·North America: 36 percent of respondents plan to implement rugged tablets in the next year.

Research reveals fewer than 20% of IT professionals have complete and timely access to critical data in public clouds.

Keysight has released the results of a survey sponsored by Ixia, on ‘The State of Cloud Monitoring’. The report highlights the security and monitoring challenges faced by enterprise IT staff responsible for managing public and private cloud deployments.

The survey, conducted by Dimensional Research and polling 338 IT professionals at organizations from a range of sizes and industries globally, revealed that companies have low visibility into their public cloud environments, and the tools and data supplied by cloud providers are insufficient.

Lack of visibility can result in a variety of problems including the inability to track or diagnose application performance issues, inability to monitor and deliver against service-level agreements, and delays in detecting and resolving security vulnerabilities and exploits. Key findings include:

“This survey makes it clear that those responsible for hybrid IT environments are concerned about their inability to fully see and react to what is happening in their networks, especially as business-critical applications migrate to a virtualized infrastructure,” said Recep Ozdag, general manager and vice president, product management in Keysight’s Ixia Solutions Group. “This lack of visibility can result in poor application performance, customer data loss, and undetected security threats, all of which can have serious consequences to an organization’s overall business success.”

Public and hybrid cloud monitoring maturity trails traditional data centers

The survey focused on challenges faced when monitoring public and private clouds, as well as on-premises data centers. IT professionals indicated that cloud providers are not providing the level of visibility they need:

Visibility solutions enhance monitoring, network performance management, and security

Nearly all respondents (99%) identified a direct link between comprehensive network visibility and business value. The top three visibility benefits cited were:

The survey also revealed that visibility is critical for monitoring cloud performance, as well as validating application performance prior to cloud deployment:

nCipher Security says that, as organizations embrace the cloud and new digital initiatives such as the internet of things (IoT), blockchain and digital payments, the use of trusted cryptography to protect their applications and sensitive information is at an all-time high, according to the 2019 Global Encryption Trends Study from the Ponemon Institute.

With corporate data breaches making the headlines on an almost daily basis, the deployment of an overall encryption strategy by organizations around the world has steadily increased. This year, 45% of respondents say their organization has an overall encryption plan applied consistently across the entire enterprise with a further 42% having a limited encryption plan or strategy that is applied to certain applications and data types.

Threats, drivers and priorities

Employee mistakes continue to be the most significant threat to sensitive data (54%), more than external hackers (30%) and malicious insiders (21%) combined. In contrast, the least significant threats to the exposure of sensitive or confidential data include government eavesdropping (12%) and lawful data requests (11%).

The main drivers for encryption are protection of an enterprise’s intellectual property and protection of customers’ personal information, each cited by 54% of respondents.

With more data to encrypt and close to 2/3 of respondents deploying 6 or more separate products to encrypt it, policy enforcement (73%) was selected as the most important feature for encryption solutions. In previous years, performance consistently ranked as the most important feature.

Cloud data protection requirements continue to drive encryption use, with encryption across both public and private cloud use cases growing over 2018 levels, and organizations prioritizing solutions that operate across both enterprise and cloud environments (68%).

Data discovery the number one challenge

With the explosion and proliferation of data that comes from digital initiatives, cloud use, mobility and IoT devices, data discovery continues to be the biggest challenge in planning and executing a data encryption strategy with 69% of respondents citing this as their number one challenge.

Trust, integrity, control

The use of hardware security modules (HSMs) grew at a record year-over-year level from 41% in 2018 to 47%, indicating a requirement for a hardened, tamper-resistant environment with higher levels of trust, integrity and control for both data and applications. HSM usage is no longer limited to traditional use cases such as public key infrastructure (PKI), databases, application and network encryption (TLS/SSL); the demand for trusted encryption for new digital initiatives has driven significant HSM growth over 2018 for code signing (up 13%), big data encryption (up 12%), IoT root of trust (up 10%) and document signing (up 8%). Additionally, 53% of respondents report using on-premises HSMs to secure access to public cloud applications.

Dr. Larry Ponemon, chairman and founder of the Ponemon Institute, says:

“The use of encryption is at an all-time high, driven by the need to address compliance requirements such as the EU General Data Protection Regulation (GDPR), California Data Breach Notification Law and Australia Privacy Amendment Act 2017, and the need to protect sensitive information from both internal and external threats as well as accidental disclosure. Encryption usage is a clear indicator of a strong security posture with organizations that deploy encryption being more aware of threats to sensitive and confidential information and making a greater investment in IT security.”

John Grimm, senior director of strategy and business development at nCipher Security, says:

“Organizations are under relentless pressure to protect their business critical information and applications and meet regulatory compliance, but the proliferation of data, concerns around data discovery and policy enforcement, together with lack of cybersecurity skills makes this a challenging environment. nCipher empowers customers by providing a high assurance security foundation that ensures the integrity and trustworthiness of their data, applications and intellectual property.”

Other key trends include:

·The highest prevalence of an enterprise encryption strategy is reported in Germany (67%) followed by the United States (65%), Australia (51%), and the United Kingdom (50%).

·Payment-related data (55% of respondents) and financial records (54% of respondents) are most likely to be encrypted. Financial records had the largest increase on this list over last year, up 4%.

·The least likely data type to be encrypted is health-related information (24% of respondents), which is a surprising result given the sensitivity of health information and the recent high-profile healthcare data breaches.

·61% of respondents classify key management as having a high level of associated “pain” (a rating of 7+ on a scale of 10). This figure is almost identical to the 63% of organizations that use six or more separate encryption products, suggesting there is a clear correlation between the two findings.

·Support for both cloud and on-premises deployment of encryption has risen in importance as organizations have increasingly embraced cloud computing and look for consistency across computing styles.

Although the market for integration platform as a service (iPaaS) shows strong growth, the first signs of market consolidation are starting to emerge. Gartner, Inc. predicts that by 2023, up to two-thirds of existing iPaaS vendors will merge, be acquired or exit the market.

“The challenge for most iPaaS vendors is that their business is simply not profitable,” said Bindi Bhullar, senior research director at Gartner. “Revenue growth and increasing customer acceptance can’t keep up with the costs for running the platform and the heavy spending in sales and marketing.”

Megavendors such as Oracle, Microsoft and IBM are better-equipped to handle those challenges as they offer more-competitive offerings with more-aggressive pricing and packaging options than smaller players in the market. Gartner expects that this trend will continue, further diminishing the market share of specialist iPaaS players.

“For organizations looking to purchase an iPaaS solution, this is good news,” said Mr. Bhullar. “They can capitalize on the evolving market dynamics by solving short-term/immediate problems today, while preparing to adopt another iPaaS offering from an alternative vendor as the expected market consolidation accelerates through 2023.”

However, market consolidation means an increased risk that platform services will be discontinued due to the vendor exiting the market or being acquired. “Buyers should minimize exposure to vendor risk by adopting platforms that can deliver short-term payoffs, so that the cost of any eventual replacement can be more easily justified,” Mr. Bhullar added.

RPA Spend to Reach Over $2 Billion in 2022

Gartner estimates that global spending on robotic process automation (RPA) software will total $2.4 billion in 2022, up from $680 million in 2018. This increase in spending is primarily driven by the necessity for organizations to rapidly digitize and automate their legacy processes as well as enable access to legacy applications through RPA. “Organizations are adopting RPA when they have a lot of manual data integration tasks between applications and are looking for cost-effective integration methods,” said Saikat Ray, senior research director at Gartner.

Gartner predicts that by the end of 2022, 85 percent of large and very large organizations will have deployed some form of RPA. Mr. Ray added that 80 percent of organizations that completed proofs of concept and pilots in 2018 will aim to scale RPA implementations and increase RPA spending in 2019.

This shows that the technology is viable and has the desired effects. However, application leaders who are new to the technology should start with a simple RPA use case and work with internal stakeholders to identify more applicable processes.

Moving forward, Gartner expects more organizations to slowly discover that RPA offers benefits beyond cost optimization. RPA technology can support productivity and increase client satisfaction when combined with other artificial intelligence (AI) technologies such as chatbots, machine learning and applications based on natural language processing (NLP).

Consider the example of a client complaining that their invoice is showing the wrong amount. A chatbot engages with the client to understand the initial issue and delegates to an RPA bot to reconcile the invoice against the actual order entry record at the back-end. The RPA bot performs the matching transaction and sends the result back to the chatbot. The chatbot processes the RPA response and intelligently answers the client.
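The hand-off described above can be illustrated with a short sketch. It is not taken from Gartner or from any RPA product: the `Invoice` class, the `ORDER_RECORDS` lookup and both function names are hypothetical stand-ins for the chatbot front end and the back-end reconciliation step.

```python
from dataclasses import dataclass


@dataclass
class Invoice:
    invoice_id: str
    billed_amount: float


# Hypothetical back-end order entry records, keyed by invoice ID.
ORDER_RECORDS = {"INV-001": 120.00, "INV-002": 75.50}


def rpa_reconcile(invoice: Invoice) -> dict:
    """RPA bot step: match the invoice against the order entry record."""
    ordered = ORDER_RECORDS.get(invoice.invoice_id)
    if ordered is None:
        return {"status": "not_found"}
    # Treat amounts within half a cent of each other as equal.
    if abs(ordered - invoice.billed_amount) < 0.005:
        return {"status": "match", "amount": ordered}
    return {
        "status": "mismatch",
        "expected": ordered,
        "billed": invoice.billed_amount,
    }


def chatbot_handle_complaint(invoice: Invoice) -> str:
    """Chatbot step: delegate to the RPA bot, then phrase a reply."""
    result = rpa_reconcile(invoice)
    if result["status"] == "match":
        return (
            f"Invoice {invoice.invoice_id} matches your order "
            f"({result['amount']:.2f})."
        )
    if result["status"] == "mismatch":
        return (
            f"Invoice {invoice.invoice_id} shows {result['billed']:.2f}, "
            f"but the order record shows {result['expected']:.2f}."
        )
    return f"No order record was found for invoice {invoice.invoice_id}."


if __name__ == "__main__":
    # A client disputes an invoice billed at 150.00 for a 120.00 order.
    print(chatbot_handle_complaint(Invoice("INV-001", 150.00)))
```

In a real deployment the reconciliation step would call whatever order system the organization runs; the point of the sketch is only the division of labour between the conversational layer and the automation layer.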

Retailers to use real-time in-store pricing

Gartner, Inc. predicts that by 2025, the top 10 global retailers by revenue will leverage contextualized real-time pricing through mobile applications to manage and adjust in-store prices for customers.

“Digital sales continue to grow, but it’s no longer a competition between online and offline. Today, many retailers find that half of their online sales are supported by their stores,” said Robert Hetu, vice president research analyst at Gartner. “As customers share more data and information from various sources, they expect more personalized and meaningful offers from retailers. Retailers should assess personal data and product preferences, and translate those inputs into immediate and contextualized offers.”

To offer consistent, relevant and personalized prices for customers, retailers need to understand customer behaviors, especially as the path to their purchase decisions becomes erratic across touchpoints. Digital twins (virtual representations of processes, things, environments or people) can simulate behavior and predict outcomes, including customer behavior and preferences. Examples include Adidas’ Speedfactory, which is intended to improve the quality, speed and overall efficiency of the company’s entire sporting goods product chain. The city of Singapore also has a full-scale digital twin of itself that can analyze future household energy storage.

Adoption of mobile payments and retailer mobile apps also supports the predicted move toward mainstream adoption of real-time in-store pricing. “Many consumers who have downloaded a retailer’s app use it for online purchases; others use it to obtain a coupon or discount offer that they can use in a physical store,” Mr. Hetu added.

However, retailers face some customer experience and technology challenges in ensuring that the correct price is accessible in real time. “Retailers need to educate customers in understanding the dynamic nature of pricing, which means that prices can rise or fall unexpectedly. Retailers also need to be better at managing delays in updating over-inventory and under-inventory levels,” observed Mr. Hetu.

To manage pricing signage, some retailers are using electronic shelf labels or digital shelf edge technologies. However, for the many that don’t use digital labels, associates must change price labels manually. This is a high-risk source of mistakes and limits how frequently a retailer can adjust prices.

“Retailers must focus on enabling technologies such as a unified retail commerce platform, which uses centralized data for inventory, pricing, loyalty and other information to facilitate a continuous and cohesive experience,” said Mr. Hetu.

By 2030, 80 percent of the work of today’s project management (PM) discipline will be eliminated as artificial intelligence (AI) takes on traditional PM functions such as data collection, tracking and reporting, according to Gartner, Inc.

“AI is going to revolutionize how program and portfolio management (PPM) leaders leverage technology to support their business goals,” said Daniel Stang, research vice president at Gartner. “Right now, the tools available to them do not meet the requirements of digital business.”

Evolution of PPM Market

Providers in today’s PPM software market are behind in enabling a fully digital program management office (PMO), but Gartner predicts AI-enabled PPM will begin to surface in the market sometime this year. The market will focus first on providing incremental user experience benefits to individual PM professionals, and later will help them to become better planners and managers. In fact, by 2023, technology providers focused on AI, virtual reality (VR) and digital platforms will disrupt the PPM market and cause a clear response by traditional providers.

PPM as an AI-Enabled Discipline

Data collection, analysis and reporting are a large proportion of the PPM discipline. AI will improve the outcomes of these tasks, including by analyzing data faster than humans can and using those results to improve overall performance. As these standard tasks start to get replaced, PPM leaders will look to staff their teams with those who can manage the demands of AI and smart machines as new stakeholders.

“Using conversational AI and chatbots, PPM and PMO leaders can begin to use their voices to query a PPM software system and issue commands, rather than using their keyboard and mouse,” said Mr. Stang. “As AI begins to take root in the PPM software market, those PMOs that choose to embrace the technology will see a reduction in the occurrence of unforeseen project issues and risks associated with human error.”

Server revenue grows and shipments increase

The worldwide server market continued to grow through 2018 as worldwide server revenue increased 17.8 percent in the fourth quarter of 2018, while shipments grew 8.5 percent year over year, according to Gartner, Inc. In all of 2018, worldwide server shipments grew 13.1 percent and server revenue increased 30.1 percent compared with full-year 2017.

“Hyperscale and service providers continued to increase their investments in their data centers (albeit at lower levels than at the start of 2017) to meet customers’ rising service demand, as well as enterprises’ services purchases from cloud providers,” said Kiyomi Yamada, senior principal analyst at Gartner. “To exploit data center infrastructure market disruption, technology product managers for server providers should prepare for continued increases in server demand through 2019, although growth will be at a slower pace than in 2018.”

“Although DRAM prices started to come down, increasing demand for memory-rich configurations to support emerging workloads such as artificial intelligence (AI) and analytics kept buoying server prices. Product managers should market higher memory content servers to take advantage of DRAM oversupplies.”

Dell EMC secured the top spot in the worldwide server market based on revenue in the fourth quarter of 2018 (see Table 1). Dell EMC ended the year with 20.2 percent market share, followed by Hewlett Packard Enterprise (HPE) with 17.7 percent of the market. Huawei experienced the strongest growth in the quarter, growing 45.9 percent.

Table 1

Worldwide: Server Vendor Revenue Estimates, 4Q18 (U.S. Dollars)

| Company | 4Q18 Revenue | 4Q18 Market Share (%) | 4Q17 Revenue | 4Q17 Market Share (%) | 4Q18-4Q17 Growth (%) |
| --- | ---: | ---: | ---: | ---: | ---: |
| Dell EMC | 4,426,376,116 | 20.2 | 3,606,976,178 | 19.4 | 22.7 |
| HPE | 3,876,819,483 | 17.7 | 3,578,005,770 | 19.3 | 8.4 |
| Huawei | 1,815,071,726 | 8.3 | 1,244,382,075 | 6.7 | 45.9 |
| Inspur Electronics | 1,801,622,141 | 8.2 | 1,260,671,411 | 6.8 | 42.9 |
| IBM | 1,783,691,221 | 8.2 | 2,623,501,533 | 14.1 | -32.0 |
| Others | 8,158,910,239 | 37.3 | 6,243,556,262 | 33.6 | 30.7 |
| Total | 21,862,491,037 | 100.0 | 18,556,994,228 | 100.0 | 17.8 |

Source: Gartner (March 2019)
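For readers who want to check the share and growth columns in Table 1, the following minimal sketch recomputes them from the revenue columns. The figures are copied from the table; the function names and the one-decimal rounding are assumptions for illustration, not Gartner's published method. The stated totals are used as given, since the individual rows do not sum to them exactly.

```python
# Revenue in U.S. dollars, copied from Table 1 as (4Q18, 4Q17).
REVENUE = {
    "Dell EMC": (4_426_376_116, 3_606_976_178),
    "HPE": (3_876_819_483, 3_578_005_770),
    "Huawei": (1_815_071_726, 1_244_382_075),
    "Inspur Electronics": (1_801_622_141, 1_260_671_411),
    "IBM": (1_783_691_221, 2_623_501_533),
    "Others": (8_158_910_239, 6_243_556_262),
}

# Totals as stated in the table.
TOTAL_4Q18 = 21_862_491_037
TOTAL_4Q17 = 18_556_994_228


def yoy_growth(current: float, prior: float) -> float:
    """Year-over-year growth, in percent."""
    return (current - prior) / prior * 100


def market_share(revenue: float, total: float) -> float:
    """Share of the stated market total, in percent."""
    return revenue / total * 100


if __name__ == "__main__":
    for company, (q4_18, q4_17) in REVENUE.items():
        share = round(market_share(q4_18, TOTAL_4Q18), 1)
        growth = round(yoy_growth(q4_18, q4_17), 1)
        print(f"{company}: share {share}%, growth {growth}%")
    total_growth = round(yoy_growth(TOTAL_4Q18, TOTAL_4Q17), 1)
    print(f"Total market growth: {total_growth}%")
```

The same two functions apply unchanged to the shipment figures in Table 2.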

In server shipments, Dell EMC maintained the No. 1 position in the fourth quarter of 2018 with 16.7 percent market share (see Table 2). HPE secured the second spot with 12.2 percent of the market. Both Dell EMC and HPE experienced declines in server shipments, while Inspur Electronics experienced the strongest growth with a 24.6 percent increase in shipments in the fourth quarter of 2018.

Table 2

Worldwide: Server Vendor Shipments Estimates, 4Q18 (Units)

| Company | 4Q18 Shipments | 4Q18 Market Share (%) | 4Q17 Shipments | 4Q17 Market Share (%) | 4Q18-4Q17 Growth (%) |
| --- | ---: | ---: | ---: | ---: | ---: |
| Dell EMC | 580,580 | 16.7 | 582,720 | 18.3 | -0.4 |
| HPE | 424,347 | 12.2 | 443,854 | 13.9 | -4.4 |
| Inspur Electronics | 293,702 | 8.5 | 235,658 | 7.4 | 24.6 |
| Huawei | 260,193 | 7.5 | 257,916 | 8.1 | 0.9 |
| Lenovo | 191,032 | 5.5 | 181,523 | 5.7 | 5.2 |
| Others | 1,723,032 | 49.6 | 1,499,593 | 46.8 | 14.9 |
| Total | 3,472,886 | 100.0 | 3,201,264 | 100.0 | 8.5 |

Source: Gartner (March 2019)

The x86 server market increased in revenue by 27.1 percent, and shipments were up 8.7 percent in the fourth quarter of 2018.

Full-Year 2018 Server Market Results

In terms of regional results, in 2018, Asia/Pacific and North America posted strong growth in revenue with 38.3 percent and 34 percent, respectively. In terms of shipments, Asia/Pacific grew 17.6 percent and North America grew 15.9 percent year over year.

EMEA grew 3.1 percent in shipments and 20.4 percent in revenue. Latin America grew 20.9 percent in revenue, but declined 4.4 percent in shipments. Japan grew 3.3 percent in revenue, 2.1 percent in shipments.

Despite a slow start, Europe is now one of the fastest-growing regions worldwide for blockchain spending. This is due to a number of factors, such as enterprises moving blockchain to production and a wave of local start-ups driving marketing and sales activities.

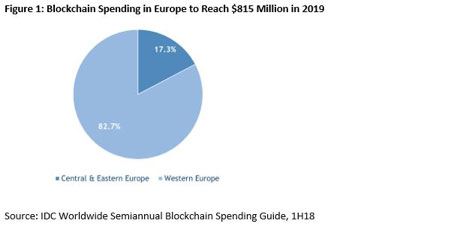

According to IDC's Worldwide Semiannual Blockchain Spending Guide, 1H18, published in February 2019, blockchain spending in Europe will reach more than $800 million in 2019, with Western Europe accounting for 83% of spending and Central and Eastern Europe 17%. Total spending in Europe will reach $3.6 billion in 2022, with a 2018–2022 five-year compound annual growth rate (CAGR) of 73.2%.

"In terms of technologies, IT services, such as consulting, outsourcing, deployment and support, and education and training, will drive spending, accounting for nearly 63% of European spending in 2019, growing at a 2018–2022 CAGR of 76.6%," said Carla La Croce, senior research analyst, Customer Insights and Analysis, IDC. "This is because blockchain needs to step up and demonstrate its production-readiness, and businesses need to ensure they take a long-term strategic view of their overarching blockchain initiatives."

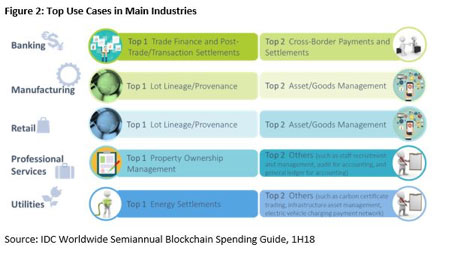

Blockchain emerged out of the financial sector and the technology is now well established there, whether as a POC or a real deployment in production, with banking the leading industry in terms of blockchain spending in Europe. Finance will account for a third of total spending in 2019, with widespread uses, from trade finance and post-trade/transaction settlements to cross-border payments and settlements, as well as regulatory compliance. Insurance is expected to be the fastest-growing industry over the 2018–2022 forecast period, with a CAGR of 81.3%.

Blockchain is also growing in other industries, with ongoing experimentation bringing to light new use cases in areas such as manufacturing and resources (accounting for 19% of total spending) and other supply chain related industries such as retail, wholesale, and transport (accounting for nearly 15%).

"Interest in blockchain among supply chain industries is seen in the increasing number of use cases for tracking products, such as lot lineage provenance and asset/goods management, from food to luxury goods," said La Croce. "The aim is to reduce paperwork, make processes more efficient, prevent counterfeiting, and improve trust and transparency with trading partners and consequently with their customers as well."

"IDC also sees strong competition between cloud giants to host, manage, and service the emerging blockchain ecosystems, especially from IBM and Microsoft, along with Amazon, Oracle, Google, and SAP, with Alibaba and Huawei expected to play an increasing role in the East," said Mohamed Hefny, program manager, Systems and Infrastructure Solutions, IDC. "Building consortiums and recruiting the leading enterprises in various segments is becoming a race in blockchain now, and as a result we are witnessing a growing number of large pilot projects."

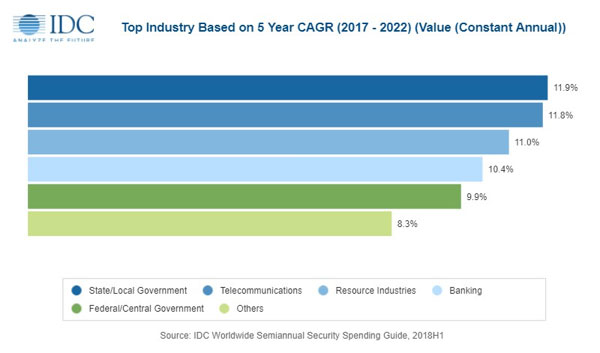

Security spending to reach $103 billion

Worldwide spending on security-related hardware, software, and services is forecast to reach $103.1 billion in 2019, an increase of 9.4% over 2018. This pace of growth is expected to continue for the next several years as industries invest heavily in security solutions to meet a wide range of threats and requirements. According to the Worldwide Semiannual Security Spending Guide from International Data Corporation (IDC), worldwide spending on security solutions will achieve a compound annual growth rate (CAGR) of 9.2% over the 2018-2022 forecast period and total $133.8 billion in 2022.
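The relationship between these figures can be checked with a short calculation. The sketch below assumes that the 2018-2022 forecast period means four compounding years from a 2018 base, and it derives that base from the stated 2019 figure and growth rate; neither assumption comes from IDC, and the function and variable names are illustrative only.

```python
def cagr(start: float, end: float, periods: int) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / periods) - 1


# Figures quoted above, in billions of U.S. dollars.
spend_2019 = 103.1
growth_2019 = 0.094  # stated increase of 2019 over 2018
spend_2022 = 133.8

# Assumption: back out the 2018 base from the 2019 figure and its growth.
spend_2018 = spend_2019 / (1 + growth_2019)

# Assumption: 2018 to 2022 spans four compounding periods.
implied_cagr = cagr(spend_2018, spend_2022, periods=4)

if __name__ == "__main__":
    print(f"Implied 2018 spend: ${spend_2018:.1f} billion")
    print(f"Implied 2018-2022 CAGR: {implied_cagr:.1%}")
```

Under those assumptions the implied rate rounds to the 9.2% quoted by IDC, which suggests the 2019 and 2022 figures and the CAGR are mutually consistent.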

The three industries that will spend the most on security solutions in 2019 – banking, discrete manufacturing, and federal/central government – will invest more than $30 billion combined. Three other industries (process manufacturing, professional services, and telecommunications) will each see spending greater than $6.0 billion this year. The industries that will experience the fastest spending growth over the forecast period will be state/local government (11.9% CAGR), telecommunications (11.8% CAGR), and the resource industries (11.3% CAGR). This spending growth will make telecommunications the fourth largest industry for security spending in 2022 while state/local government will move into the sixth position ahead of professional services.

"When examining the largest and fastest growing segments for security, we see a mix of industries – such as banking and government – that are charged with guarding highly sensitive information in regulated environments. In addition, information-based organizations like professional services firms and telcos are ramping up spending. But regardless of industry, these technologies remain an investment priority in virtually all enterprises, as delivering a sense of security is everyone's business," said Jessica Goepfert, program vice president, Customer Insights and Analysis.

Managed security services will be the largest technology category in 2019 with firms spending more than $21 billion for around-the-clock monitoring and management of security operations centers. Managed security services will also be the largest category of spending for each of the top five industries this year. The second largest technology category in 2019 will be network security hardware, which includes unified threat management, firewalls, and intrusion detection and prevention technologies. The third and fourth largest investment categories will be integration services and endpoint security software. The technology categories that will see the fastest spending growth over the forecast will be managed security services (14.2% CAGR), security analytics, intelligence, response and orchestration software (10.6% CAGR), and network security software (9.3% CAGR).

"The security landscape is changing rapidly, and organizations continue to struggle to maintain their own in-house security solutions and staff. As a result, organizations are turning to managed security service providers (MSSPs) to deliver a wide span of security capabilities and consulting services, which include predicative threat intelligence and advanced detection and analysis expertise that are necessary to overcome the security challenges happening today as well as prepare organizations against future attacks," said Martha Vazquez, senior research analyst, Infrastructure Services.

From a geographic perspective, the United States will be the single largest market for security solutions with spending forecast to reach $44.7 billion in 2019. Two industries – discrete manufacturing and the federal government – will account for nearly 20% of the U.S. total. The second largest market will be China where security purchases by three industries – state/local government, telecommunications, and central government – will comprise 45% of the national total. Japan and the UK are the next two largest markets with security spending led by the consumer sector and the banking industry respectively.

"While the U.S. and Western Europe will deliver two-thirds of the total security spend this year, the largest growth in security spend will be seen in China, Asia/Pacific (excluding Japan and China), and Latin America, each with double-digit CAGRs over the five-year forecast period," said Karen Massey, research manager, Customer Insights & Analysis.

Large (500-1000 employees) and very large businesses (more than 1000 employees) will be responsible for roughly two thirds of all security-related spending in 2019. These two segments will also see the strongest spending growth over the forecast with CAGRs of 11.1% for large businesses and 9.4% for very large businesses. Medium (100-499 employees) and small businesses (10-99 employees) will spend nearly $26 billion combined on security solutions in 2019. Consumers are forecast to spend nearly $5.7 billion on security this year.

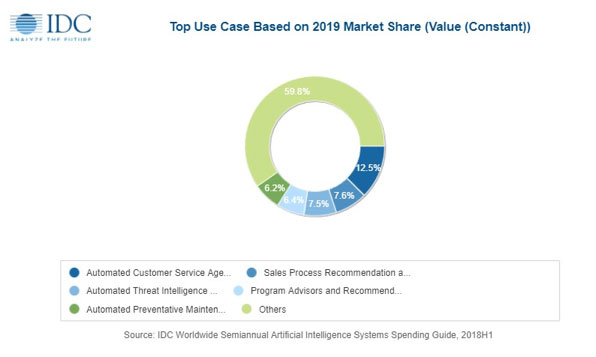

AI spending to grow to $35 billion

Worldwide spending on artificial intelligence (AI) systems is forecast to reach $35.8 billion in 2019, an increase of 44.0% over the amount spent in 2018. With industries investing aggressively in projects that utilize AI software capabilities, the International Data Corporation (IDC) Worldwide Semiannual Artificial Intelligence Systems Spending Guide expects spending on AI systems will more than double to $79.2 billion in 2022 with a compound annual growth rate (CAGR) of 38.0% over the 2018-2022 forecast period.
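As a rough check on the headline figures, the short sketch below derives the 2018 base implied by the 44.0% increase and the multiple between the 2019 and 2022 forecasts. Only the three input numbers come from the text above; the variable names are illustrative, and the derived values are back-of-envelope arithmetic rather than figures published by IDC.

```python
# Inputs taken from the paragraph above (US$ billions).
spend_2019 = 35.8      # forecast AI systems spending in 2019
growth_2019 = 0.44     # stated increase over 2018
spend_2022 = 79.2      # forecast AI systems spending in 2022

# Derived values (our own arithmetic, not IDC figures).
implied_2018 = spend_2019 / (1 + growth_2019)   # spending implied for 2018
multiple_2019_to_2022 = spend_2022 / spend_2019 # how many times 2019 spending

print(f"Implied 2018 spending: ${implied_2018:.1f} billion")
print(f"2022 forecast as a multiple of 2019: {multiple_2019_to_2022:.2f}x")
```

The multiple comes out above 2, which is consistent with the statement that spending will more than double by 2022.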

Global spending on AI systems will be led by the retail industry where companies will invest $5.9 billion this year on solutions such as automated customer service agents and expert shopping advisors & product recommendations. Banking will be the second largest industry with $5.6 billion going toward AI-enabled solutions including automated threat intelligence & prevention systems and fraud analysis & investigation systems. Discrete manufacturing, healthcare providers, and process manufacturing will complete the top 5 industries for AI systems spending this year. The industries that will experience the fastest growth in AI systems spending over the 2018-2022 forecast are federal/central government (44.3% CAGR), personal and consumer services (43.3% CAGR), and education (42.9% CAGR).

"Significant worldwide artificial intelligence systems spend can now be seen within every industry as AI initiatives continue to optimize operations, transform the customer experience, and create new products and services", said Marianne Daquila, research manager, Customer Insights & Analysis at IDC. "This is evidenced by use cases, such as intelligent process automation, expert shopping advisors & product recommendations, and pharmaceutical research and discovery exceeding the average five-year compound annual growth of 38%. The continued advancement of AI-related technologies will drive double-digit year-over-year spend into the next decade."

The AI use cases that will see the most investment this year are automated customer service agents ($4.5 billion worldwide), sales process recommendation and automation ($2.7 billion), and automated threat intelligence and prevention systems ($2.7 billion). Five other use cases will see spending levels greater than $2 billion in 2019: automated preventative maintenance, diagnosis and treatment systems, fraud analysis and investigation, intelligent process automation, and program advisors and recommendation systems.

Software will be the largest area of AI systems spending in 2019 with nearly $13.5 billion going toward AI applications and AI software platforms. AI applications will be the fastest growing category of AI spending with a five-year CAGR of 47.3%. Hardware spending, dominated by servers, will be $12.7 billion this year as companies continue to build out the infrastructure necessary to support AI systems. Companies will also invest in IT services to help with the development and implementation of their AI systems and business services such as consulting and horizontal business process outsourcing related to these systems. By the end of the forecast, AI-related services spending will nearly equal hardware spending.

"IDC is seeing that spending on both AI software platforms and AI applications are continuing to trend upwards and the types and varieties of use cases are also expanding," said David Schubmehl, research director, Cognitive/Artificial Intelligence Systems at IDC. "While organizations see continuing challenges with staffing, data, and other issues deploying AI solutions, they are finding that they can help to significantly improve the bottom line of their enterprises by reducing costs, improving revenue, and providing better, faster access to information thereby improving decision making."

On a geographic basis, the United States will deliver nearly two thirds of all spending on AI systems in 2019, led by the retail and banking industries. Western Europe will be the second largest region in 2019, led by banking, retail, and discrete manufacturing. The strongest spending growth over the five-year forecast will be in Japan (58.9% CAGR) and Asia/Pacific (excluding Japan and China) (51.4% CAGR). China will also experience strong spending growth throughout the forecast (49.6% CAGR).

"AI is a big topic in Europe, it's here and it's set to stay. Both AI adoption and spending are picking up fast. European businesses are hands-on AI and have moved from an explorative phase to the implementation stage. AI is the game changer in a highly competitive environment, especially across customer-facing industries such as retail and finance, where AI has the power to push customer experience to the next level with virtual assistants, product recommendations, or visual searches. Many European retailers such as Sephora, ASOS, and Zara or banks such as NatWest and HSBC are already experiencing the benefits of AI, including increased store visits, higher revenues, reduced costs, and more pleasant and personalized customer journeys. Industry-specific use cases related to automation of processes are becoming mainstream and the focus is set to shift towards next-generation use of AI for personalization or predictive purposes," said Andrea Minonne, senior research analyst, IDC Customer Insight & Analysis in Europe.

Cloud IT infrastructure revenues fall below ‘traditional’ infrastructure revenues

According to the International Data Corporation (IDC) Worldwide Quarterly Cloud IT Infrastructure Tracker, vendor revenue from sales of IT infrastructure products (server, enterprise storage, and Ethernet switch) for cloud environments, including public and private cloud, grew 28.0% year over year in the fourth quarter of 2018 (4Q18), reaching $16.8 billion. For 2018, annual spending (vendor revenue plus channel mark-up) on public and private cloud IT infrastructure totaled $66.1 billion, slightly higher (1.3%) than forecast in Q3 2018. IDC also raised its forecast for total spending on cloud IT infrastructure in 2019 to $70.1 billion – up 4.5% from last quarter's forecast – with year-over-year growth of 6.0%.

Quarterly spending on public cloud IT infrastructure was down 6.9% in 4Q18 compared to the previous quarter, but it has still almost doubled in the past two years, reaching $11.9 billion in 4Q18 and growing 33.0% year over year. Spending on private cloud infrastructure grew 19.6%, reaching $5.75 billion. Since 2013, when IDC started tracking IT infrastructure deployments in different environments, public cloud has represented the majority of spending on cloud IT infrastructure and in 2018 – as IDC expected – this share peaked at 69.6% with spending on public cloud infrastructure growing at an annual rate of 50.2%. Spending on private cloud grew 24.8% year over year in 2018.

In 4Q18, quarterly vendor revenues from IT infrastructure product sales into cloud environments fell and once again were lower than revenues from sales into traditional IT environments, accounting for 48.3% of the total worldwide IT infrastructure vendor revenues, up from 42.4% a year ago but down from 50.9% last quarter. For the full year 2018, spending on cloud IT infrastructure remained just below the 50% mark at 48.4%. Spending on all three technology segments in cloud IT environments is forecast to deliver slower growth in 2019 than in previous years. Ethernet switches will be the fastest growing at 23.8%, while spending on storage platforms will grow 9.1%. Spending on compute platforms will stay at $35.0 billion, still slightly higher than expected in IDC's previous forecast.

The rate of annual growth for the traditional (non-cloud) IT infrastructure segment slowed down from 3Q18 to below 1% but the segment grew 11.1% quarter over quarter. For the full year, worldwide spending on traditional non-cloud IT infrastructure grew by 12.2%, exactly as forecast, as the market has started going through a technology refresh cycle, which will wind down by 2019. By 2023, we expect that traditional non-cloud IT infrastructure will only represent 40.5% of total worldwide IT infrastructure spending (down from 51.6% in 2018). This share loss and the growing share of cloud environments in overall spending on IT infrastructure is common across all regions.

"The unprecedented growth of the infrastructure systems market in 2018 was shared across both cloud and non-cloud segments," said Kuba Stolarski, research director, Infrastructure Systems, Platforms and Technologies at IDC. "As market participants prepare for a very difficult growth comparison in 2019, compounded by strong, cyclical, macroeconomic headwinds, cloud IT infrastructure will be the primary growth engine supporting overall market performance until the next cyclical refresh. With new on-premises public cloud stacks entering the picture, there is a distinct possibility of a significant surge in private cloud deployments over the next five years."

All regions grew their cloud IT infrastructure revenues by double digits in 4Q18. Revenue growth was the fastest in Canada at 67.2% year over year, with China growing at a rate of 54.4%. Other regions among the fastest growing in 4Q18 included Western Europe (39.7%), Latin America (37.9%), Japan (34.9%), Central & Eastern Europe and Middle East & Africa (30.9% and 30.2%, respectively), Asia/Pacific (excluding Japan) (APeJ) (28.5%), and the United States (15.5%).

Top Companies, Worldwide Cloud IT Infrastructure Vendor Revenue, Market Share, and Year-Over-Year Growth, Q4 2018 (Revenues are in US$ Millions)

| Company | 4Q18 Revenue (US$M) | 4Q18 Market Share | 4Q17 Revenue (US$M) | 4Q17 Market Share | 4Q18/4Q17 Revenue Growth |
| --- | ---: | ---: | ---: | ---: | ---: |
| 1. Dell Inc | $2,820 | 16.8% | $1,991 | 15.2% | 41.6% |
| 2. HPE/New H3C Group** | $2,047 | 12.2% | $1,558 | 11.9% | 31.4% |
| 3. Cisco | $1,143 | 6.8% | $1,024 | 7.8% | 11.6% |
| 4. Inspur* | $950 | 5.7% | $504 | 3.8% | 88.4% |
| 4. Huawei* | $910 | 5.4% | $555 | 4.2% | 63.9% |
| ODM Direct | $4,631 | 27.6% | $4,176 | 31.9% | 10.9% |
| Others | $4,268 | 25.5% | $3,292 | 25.1% | 29.6% |
| Total | $16,768 | 100.0% | $13,101 | 100.0% | 28.0% |

Source: IDC's Quarterly Cloud IT Infrastructure Tracker, Q4 2018
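For readers who want to see how the share and growth columns in the table above relate to the revenue columns, here is a minimal sketch that recomputes them. The revenue figures and totals are copied from the table; the function and variable names are illustrative only. Because the published revenues are rounded to the nearest US$ million, a recomputed value can differ from the published one by about 0.1 percentage point.

```python
# Revenue in US$ millions, copied from the table: (4Q18, 4Q17).
REVENUE = {
    "Dell Inc": (2820, 1991),
    "HPE/New H3C Group": (2047, 1558),
    "Cisco": (1143, 1024),
    "Inspur": (950, 504),
    "Huawei": (910, 555),
    "ODM Direct": (4631, 4176),
    "Others": (4268, 3292),
}

# Published totals from the table's "Total" row (the rounded rows
# sum to within US$1 million of these).
TOTAL_4Q18 = 16768
TOTAL_4Q17 = 13101


def share_and_growth(rows, total_now):
    """Return {company: (4Q18 share %, year-over-year growth %)}."""
    result = {}
    for name, (now, prev) in rows.items():
        share = 100 * now / total_now        # slice of the 4Q18 total
        growth = 100 * (now / prev - 1)      # change versus 4Q17
        result[name] = (round(share, 1), round(growth, 1))
    return result


metrics = share_and_growth(REVENUE, TOTAL_4Q18)
total_growth = round(100 * (TOTAL_4Q18 / TOTAL_4Q17 - 1), 1)

for company, (share, growth) in metrics.items():
    print(f"{company:20s} share {share:5.1f}%  growth {growth:5.1f}%")
print(f"{'Total':20s} growth {total_growth:5.1f}%")
```

Market share is each vendor's 4Q18 revenue divided by the 4Q18 total, and growth is the ratio of 4Q18 to 4Q17 revenue minus one.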

The MSP market is in flux. It has recently been characterised by consolidation and by merger and acquisition activity, along with considerable new business formation, driven by the low cost of entry and by traditional VARs moving into more profitable areas.

IT Europa says companies have moved sharply up and down its list of the top 500 MSPs as they have won or lost big deals, so there is inherent volatility in the sector. There are many new entrants: it has recorded several instances of new MSPs being born out of individuals and groups in customer IT departments, especially in financial services.

There has been a steady process of consolidation, with mergers and acquisitions reported weekly. The UK still has the largest number of MSPs in Europe, leading the German and Netherlands markets by some way. But other countries are showing signs of stronger engagement.

One of the issues is that of definition. Estimates range from 18,000 to 25,000 managed services providers in Europe, and from 5,000 to 12,000 in the UK, depending on how an MSP is defined: some provide very basic backup or just Office 365 to local SMBs, while others are part of large IT partners and supply a range of services. There are few pure MSP businesses, and they tend to focus on specialist areas.