For as long as I’ve been involved in the world of IT, there’s always been a bottleneck (or three) that has slowed down the overall performance of the infrastructure designed to connect applications and users. Many moons ago, the very first storage service providers, with not a cloud on the horizon, found that their great idea had one major snag – latency. Yes, encouraging end users to offload their storage to a professional third-party organisation made a great deal of sense, but not if it took days to back it up or to retrieve it. Of course, there are many other examples where either the compute, network or storage element of an IT infrastructure could not keep up with the other disciplines. And, right now, it seems that even the applications themselves are coming under scrutiny in terms of how they are written and how they perform on different devices – speed, flexibility and agility being the watchwords.

In this issue of Digitalisation World, we turn our attention to another important, if not the most important, component of the overall IT infrastructure – the data centre. With data centre managers finally realising that their marvellous facilities do not exist in splendid isolation, but actually to house and empower or facilitate IT hardware and software, data centre design, location, operations and management are making a significant, positive contribution to the digital world. As the articles in this digital publication demonstrate, whether it’s hyperscale, the edge, outsourcing, hybrid, 5G and IoT, AR and VR, blockchain or AI, the data centre is central to the successful implementation of virtually any IT project.

Alongside the data centre predictions articles, you’ll find the final tranche of more general 2019 predictions. DW received over 160 responses to our request for a little bit of crystal ball gazing – thanks to all those who responded – and we think that they make for very interesting reading.

84% of business leaders think artificial intelligence (AI) will be critical to their business in three years, but only 15% are aware of AI projects being fully implemented in their organisations.

Executives have high expectations for the impact that AI will have on their businesses, yet according to Cognizant’s new report, ‘Making AI Responsible - and Effective’, only half of companies have policies and procedures in place to identify and address the ethical considerations of its applications and implementations. The study analyses the responses of almost 1,000 executives across the financial services, technology, healthcare, retail, manufacturing, insurance and media & entertainment industries in Europe and the US.

Whilst executives are enthusiastic about the importance and potential benefits of AI to their business, many lack the strategic focus and consideration for the non-technical aspects that are critical to the success of AI, such as trust, transparency and ethics.

Optimism and enthusiasm

The research shows that business leaders are positive about the importance and potential benefits of AI. Roughly two-thirds (63%) say that AI is extremely or very important to their companies today, and 84% expect this will be the case three years from now. Lower costs, increased revenues and the ability to introduce new products or services, or to diversify, were cited as the key advantages for the future.

Companies that are growing much faster than the average business in their industry, in particular, expect major benefits in coming years, with 86% of executives of these fast-growing companies stating AI is extremely or very important to their company’s success, compared with 57% of those at their competitors with slower growth. These industry leaders say they plan to use AI to drive further growth, solidifying their leading positions and pulling even further away from the pack. This is reflected in their greater investment in key AI technologies, including computer vision (64% vs 47%), smart robotics/autonomous vehicles (63% vs 43%) and analysis of natural language (67% vs 42%).

Almost half of those companies (44%) undertaking at least one AI project expect to increase their employee headcount over the next three years as a result of the impact of AI projects. Retail and financial services industry executives were most likely to expect a boost to employment (56% and 49% respectively).

Disconnect between optimism and actual implementation

However, business leaders’ optimism is disconnected from the actual implementation in many companies. While two-thirds of executives said they knew about an AI project at their company, only 24% of that group – just 15% of all respondents – were aware of projects that were fully implemented.

Some 40% of respondents said that securing senior management commitment, buy-in by the business and even adequate budget were extremely or very challenging, indicating that many companies are not yet fully committed to AI’s central role in advancing business objectives.

Neglect of ethical considerations

Only half of companies have policies and procedures in place to identify and address ethical considerations – either in the initial design of AI applications or in their behaviour after the system is launched.

Sanjiv Gossain, European Head, Cognizant Digital Business, commented: ‘The challenge today is less about understanding technical questions and technology capabilities, and more about crafting a strategy, determining the governance structures and practices needed for responsible AI’.

According to Gossain, ‘companies need to pay more attention to the non-technical considerations of AI deployments, many of which are more critical and complex than those related to developing and running the technology itself. AI operates in the real world and this will not only help companies determine which technologies can be used to advance business objectives, but also which have the potential to irritate customers, alienate employees, drive up R&D and deployment costs, and undermine brand reputation’.

The report provides three key recommendations to help companies take action and achieve the significant business benefits of AI:

Emerging markets are the most digitally mature, based on the latest Digital Transformation Index with 4,600 business leaders from 40+ countries.

Despite the relentless pace of disruption, the latest Dell Technologies Digital Transformation (DT) Index shows many businesses’ digital transformation programs are still in their infancy. This is evidenced by the 78 percent of business leaders who admit digital transformation should be more widespread throughout their organisation (UK: 71 percent). More than half of businesses (51 percent) believe they’ll struggle to meet changing customer demands within five years (UK: 22 percent), and almost one in three (30 percent) still worry their organisation will be left behind (UK: 19 percent).

Dell Technologies, in collaboration with Intel and Vanson Bourne, surveyed 4,600 business leaders (director to C-suite) from mid- to large-sized companies across the globe to score their organisations’ transformation efforts.

The study revealed that emerging markets are the most digitally mature, with India, Brazil and Thailand topping the global ranking. In contrast, developed markets are slipping behind: Japan, Denmark and France received the lowest digital maturity scores. The UK came in 19th place, in front of Germany (24th) but behind Russia (17th) and Spain (14th). What’s more, emerging markets are more confident in their ability to “disrupt rather than be disrupted” (53 percent), compared to just 40 percent in developed nations.

Behind the curve

The DT Index II builds on the first ever DT Index launched in 2016. The two-year comparison highlights that progress has been slow, with organisations struggling to keep up with the blistering pace of change. While the percentage of Digital Adopters has increased, there’s been no progress at the top. Almost four in 10 (39 percent) businesses are still spread across the two least digitally mature groups on the benchmark (Digital Laggards and Digital Followers).

“In the near future, every organisation will need to be a digital organisation, but our research indicates that the majority still have a long way to go,” says Michael Dell, chairman and CEO of Dell Technologies. “Organisations need to modernise their technology to participate in the unprecedented opportunity of digital transformation. The time to act is now.”

Barriers to transformation and confidence

The findings also suggest business leaders are on the verge of a confidence crisis, with 91 percent held back by persistent barriers.

Globally, the top five barriers to digital transformation success:

Plans to realise their digital future

Leaders around the world have reported common priorities and investments to aid future transformation, including an increased focus on workforce, security and IT. Forty-six percent are developing in-house digital skills and talent, by teaching all employees how to code for instance, up from 27 percent in 2016. For the UK this has increased from 27 percent in 2016 to 49 percent in 2018.

The top technology investments around the world for the next one to three years will be in:

How organisations fare in the future will depend on the steps they take today. For instance, Draper, a Dell Technologies customer, was traditionally focused on department of defence research but it’s starting to move into more commercial areas such as biomedical science.

“Technology enables us to keep solving the world’s toughest problems; from the infrastructure and services that underpin our innovation, to the experimental technologies that we wield to prevent disease for instance,” says Mike Crones, CIO, Draper. “We couldn’t push boundaries, and call ourselves an engineering and research firm, without being a fully transformed and modern company from the inside out.”

Only three in ten organisations make technology decisions in the boardroom, despite almost a third utilising strategic IT change to deliver increased revenues.

Coeus Consulting has published new research revealing that although the fate of many organisations depends on their ability to implement strategic change and to adopt disruptive technologies, a reported lack of business and IT alignment, coupled with a corporate fear of risk, means they risk losing out on crucial revenues and market share.

Just twenty-one percent of those surveyed stated they seek to implement new technology as soon as possible, with some of the main barriers to adoption being: fear of disruption to core business (30%), lack of budget to adopt new technology (21%), and poorly planned adoption strategies (19%).

“While it is reassuring that organisations are at least attempting to keep up with disruptive technologies, it is somewhat concerning that they are not doing more. Monitoring advancements is the first step on the road, but only three in ten organisations make technology decisions in the boardroom. With technology now playing a vital role in every industry, organisations need to increase their understanding of technology and be prepared to take more calculated risks in order to reap the benefits and execute successful strategic change”, Keith Thomas, Head of IT Strategy Practice, Coeus Consulting commented.

Successful implementation rates are low among respondents which could explain these fears, with only seven percent noting that all of their organisation’s strategic IT change projects have met initial objectives over the past two years. The good news is that, of those from organisations that have a test and learn culture, and also set objective success or failure criteria for initiatives in advance, almost sixty percent report that their organisation investigates or adopts a different approach when initiatives don’t meet objective success criteria. “Organisations are blinkered to the market and must be willing to tread the fine line between adopting technologies quickly and rushing the process by investing in the wrong technology, otherwise they risk being overtaken by their competitors and will see declining revenues”, commented Ben Barry, Director, Coeus Consulting.

Aligned and informed organisational leadership is clearly an issue within organisations where at least some strategic IT change projects have not met initial objectives, with just over seventy percent admitting one of: business plans changing, senior management not buying into the change, or not taking enough risks as a reason for failure. “This is disconcerting, if those at board level are failing to see the benefits of strategic IT change, then implementation, adoption and deployment of new technologies is destined to fail. Businesses need to ensure board-level understanding of the importance of IT, as well as building stronger strategic IT change capabilities”, added Thomas.

“Consumer demand for new and improved offerings, paired with demand for digitalisation from the business, means that organisations not only need to increase the speed at which they are doing things, but must also match, or stay ahead of the offerings from disruptive and agile competitors”, Thomas noted.

On the next wave of disruptive technology, almost a third (29%) of respondents believe artificial intelligence represents the most significant innovation set to impact their industry in the next two years, with data and analytics (18%) next in line. Despite their predictions on the next generation of technology, only 38% of respondents say they operate with dedicated teams monitoring the latest advancements. This suggests that more than sixty percent (62%) of organisations could be operating with little knowledge of innovations taking place outside their four walls.

Despite the current economic climate, funding seems to be a secondary issue. Last year’s research found that just over six in ten (62%) respondents predicted an increase in the size of their budget for the coming year. In actual fact, only fifty percent of respondents from the survey this year reported an increase.

However, just over fifty percent of respondents reported that digital services are being funded from the IT budget in their company, and additional funding is also allocated from elsewhere. Indeed, approaching six in ten (57%) are anticipating an increase in their budget for the financial year 2019 to 2020. This indicates that business leaders appreciate the need for IT in their current and future operations to the point of allocating funding, but not always to the point of consistently aligning with their IT counterparts.

Increasing operational efficiency (49%), customer satisfaction (32%) and increasing revenues/sales (31%) top the list of drivers of strategic IT change projects, demonstrating the expectations around the business value of IT change are not being effectively driven.

Businesses need to recognise the consequences that slowing IT spend, and ultimately, stagnating progress, could have on their business prospects. Taking unnecessary risks could lead to the downfall of an organisation, but in reality, spending on technology and taking a fail-fast, calculated approach to IT risk is now a necessity.

Benefits of digital technology are well-known, but a distinct lack of cohesion around how to effectively adopt it remains.

A new research report produced by SoftwareONE, a global leader in software and cloud portfolio management, has revealed that the majority of organisations (58 per cent) do not have a clearly defined strategy in place when it comes to adopting and integrating digital workspace technology. This indicates how, in many organisations, implementing and making use of such technology is still being carried out in something of a haphazard manner, meaning that they will struggle to truly maximise its potential unless they take steps to overhaul their strategic approach.

The findings of the research are summarised in SoftwareONE’s Building a Lean, Mean, Digital Machine report, which has been released today. The report also found that, despite the fact that almost all organisations (99 per cent) employ some form of digital workspace technology, respondents have encountered a host of challenges when it comes to using it. These include higher security risks (cited by 47 per cent) and a lack of employee knowledge in how best to use the solutions (45 per cent).

For Zak Virdi, UK Managing Director at SoftwareONE, these figures should serve as a wake-up call to businesses who want to make the most of digital workspace technologies, but have not given enough thought to how to implement them in a way that maximises productivity while minimising any potential issues.

Virdi said: “It’s clear that our working lives have been made easier in many ways by digital technology – cloud apps like Office 365 and Dropbox have become very much the norm, and online collaboration tools like Smartsheet are being gratefully adopted by workers the world over.

“But bringing new digital solutions into the business without a unified, cohesive strategy in place is likely to lead to problems in the long run. These tools are designed to connect employees more effectively, but they can have the opposite effect if, for instance, one department is using a particular tool but another one is completely unaware of its existence. Moreover, introducing new technologies that are not sanctioned for use by senior leaders – frequently known as Shadow IT – can lead to security issues that can be difficult to remedy.”

This need for more clearly defined strategies is supported by the fact that digitalisation is being pushed not just by senior management, but by rank-and-file employees too. Almost two-thirds of respondents (63 per cent) believe that digital evolution is being promoted by the most senior personnel, while 30 per cent said that it is being driven by regular employees. With so many different needs to meet, a well-functioning digital workspace can only be created if there is a structured plan put in place by senior management.

Virdi added: “Employees are pushing hard for change, and it’s not just those at the top who are demanding it. There’s clear evidence that this appetite for new tech is present throughout the business, which means organisations have to work out how to cater to a very large cross-section of the workforce. With this in mind, it’s paramount that the drive to digital is built on the bedrock of a well-planned strategy.

“This should take into account the requirements of everyone at the business: senior managers and board members might seem to be the ones pushing hard for digitalisation, but this is often due to the pressure they are getting from their employees anyway.”

He concluded: “Building the digital workspace is not simply a process of introducing technologies and hoping that they take hold; it’s about having a specific lifecycle plan for every new tool that is introduced. If businesses adopt and maintain this mindset, the long-term benefits will be significant.”

Thales says that the rush to digital transformation is putting sensitive data at risk for organizations worldwide, according to its 2019 Thales Data Threat Report – Global Edition, with research and analysis from IDC. As organizations embrace new technologies, such as multi-cloud deployments, they are struggling to implement proper data security.

Ninety-seven percent of the survey respondents reported their organization was already underway with some level of digital transformation and, with that, confirmed they are using and exposing sensitive data within these environments. Aggressive digital transformers are most at risk for data breaches, but alarmingly, the study finds that fewer than a third of respondents (only 30%) are using encryption within these environments. The study also found a few key areas where encryption adoption and usage are above average: IoT (42%), containers (47%) and big data (45%).

As companies move to the cloud or multi-cloud environments as part of their digital transformation journey, protecting their sensitive data is becoming increasingly complex. Nine out of 10 respondents are using, or will be using, some type of cloud environment, and 44% rated complexity of that environment as a perceived barrier to implementing proper data security measures. In fact, this complexity is ahead of staff needs, budget restraints and securing organizational buy-in.

Globally, 60% of organizations say they have been breached at some point in their history, with 30% experiencing a breach within the past year alone. In a year where breaches regularly appear in headlines, the U.S. had the highest number of breaches in the last three years (65%) as well as in the last year (36%).

The bottom line is that whatever technologies an organization deploys to make digital transformation happen, the easy and timely access to data puts this data at risk internally and externally. The majority of organizations, 86%, feel vulnerable to data threats. Unfortunately, this does not always translate into security best practices as evidenced by the less than 30% of respondents using encryption as part of their digital transformation strategy.

Tina Stewart, vice president of market strategy at Thales eSecurity says:

“Data security is vitally important. Organizations need to take a fresh look at how they implement a data security and encryption strategy in support of their transition to the cloud and meeting regulatory and compliance mandates. As our 2019 Thales Data Threat Report shows, we have now reached a point where almost every organization has been breached. As data breaches continue to be widespread and commonplace, enterprises around the globe can rely on Thales to secure their digital transformation in the face of these ongoing threats.”

When it comes to innovation, a focus on customers, talent and data is key for success.

Only one in seven businesses (14 percent) is able to realise the full potential of their innovation investments, according to research from Accenture, while the majority are missing out on significant opportunities to grow profits and increase market value.

Over the last five years, approximately £2.5tn was spent globally on innovation. Yet, the study shows it is not how much you spend that matters, it is how you spend it. The companies bucking the trend and seeing the biggest returns are investing in bold, watershed moves rather than incremental shifts.

The survey of C-suite executives found that:

Arabel Bailey, Managing Director UKI and Innovation Lead for Accenture, said:

“Fortune favours the bold when it comes to investing in innovation. The companies reaping the biggest rewards show a “go big or go home” mentality by investing in truly disruptive innovation projects. They don’t just tinker around the edges.

“The fact that return on investment overall is dropping is a worrying trend. Businesses are spending more than ever, but their inability to see proper returns is shocking. One of the reasons for this could be that many organisations still see innovation as a peripheral activity separate to the core business; an “ad-hoc creative process” rather than a set of practices that will fundamentally change their way of doing business. It’s like going jogging once a month and then expecting to be able to run a marathon.

“Equally some companies chase the latest tech trends without thinking about how to connect what they’re spending to the biggest problems or opportunities in their business. We have developed a more formalised approach to helping companies make more of their investment. It means thinking hard about your company, your market, your customer, your workforce, and placing your innovation bets on the things that can help to address your biggest business challenges.”

The research revealed that there are seven key characteristics that can help companies to make more of their innovation spend. Companies need to be:

Use of blockchain technology to help secure IoT data, services and devices doubles in a year.

Gemalto reveals that only around half (48%) of businesses can detect if any of their IoT devices suffers a breach. This comes despite companies having an increased focus on IoT security:

With the number of connected devices set to top 20 billion by 2023, businesses must act quickly to ensure their IoT breach detection is as effective as possible.

Surveying 950 IT and business decision makers globally, Gemalto found that companies are calling on governments to intervene, with 79% asking for more robust guidelines on IoT security, and 59% seeking clarification on who is responsible for protecting IoT. Despite the fact that many governments have already enacted or announced the introduction of regulations specific to IoT security, most (95%) businesses believe there should be uniform regulations in place, a finding that is echoed by consumers: 95% expect IoT devices to be governed by security regulations.

“Given the increase in the number of IoT-enabled devices, it’s extremely worrying to see that businesses still can’t detect if they have been breached,” said Jason Hart, CTO, Data Protection at Gemalto. “With no consistent regulation guiding the industry, it’s no surprise the threats – and, in turn, vulnerability of businesses – are increasing. This will only continue unless governments step in now to help industry avoid losing control.”

Security remains a big challenge

With such a big task in hand, businesses are calling for governmental intervention because of the challenges they see in securing connected devices and IoT services. This is particularly mentioned for data privacy (38%) and the collection of large amounts of data (34%). Protecting an increasing amount of data is proving an issue, with only three in five (59%) of those using IoT and spending on IoT security admitting they encrypt all of their data.

Consumers are clearly not impressed with the efforts of the IoT industry, with 62% believing security needs to improve. When it comes to the biggest areas of concern, 54% fear a lack of privacy because of connected devices, followed closely by unauthorised parties like hackers controlling devices (51%) and lack of control over personal data (50%).

Blockchain gains pace as an IoT security tool

While the industry awaits regulation, it is seeking ways to address the issues itself, with blockchain emerging as a potential technology; adoption of blockchain has doubled from 9% to 19% in the last 12 months. What’s more, almost a quarter (23%) of respondents believe that blockchain technology would be an ideal solution to use for securing IoT devices, and 91% of organisations that don’t currently use the technology are likely to consider it in the future.

As blockchain technology finds its place in securing IoT devices, businesses continue to employ other methods to protect themselves against cybercriminals. The majority (71%) encrypt their data, while password protection (66%) and two factor authentication (38%) remain prominent.

Hart continues, “Businesses are clearly feeling the pressure of protecting the growing amount of data they collect and store. But while it’s positive they are attempting to address that by investing in more security, such as blockchain, they need direct guidance to ensure they’re not leaving themselves exposed. In order to get this, businesses need to be putting more pressure on the government to act, as it is them that will be hit if they suffer a breach.”

It appears that many organisations will begin the New Year by reviewing their security infrastructure and taking a ‘back to basics’ approach to information security. This is according to the latest in a series of social media polls conducted by Europe’s number one information security event, Infosecurity Europe 2019.

Asked what their ‘security mantra’ is for 2019, more than half (55 per cent) of respondents say they plan to ‘go back to basics’ while 45 per cent reveal they will invest in more technology. According to Gartner, worldwide spending on information security products and services is forecast to grow 8.7 per cent to $124 billion in 2019.

When it comes to complexity, two-thirds believe that securing devices and personal data will become more (rather than less) complicated over the next 12 months. With Forrester predicting that 85 per cent of businesses will implement or plan to implement IoT solutions in 2019, this level of complexity is only set to increase with more connected devices and systems coming online.

However, many organisations will be looking to reduce complexity in their security architecture this year by maximising what they already have in place. According to Infosecurity Europe’s poll, 60 per cent of respondents say that maximising existing technologies is more important than using fewer vendors (40 per cent).

Victoria Windsor, Group Content Manager at Infosecurity Group, admits: “CISOs are managing increasingly complex security architectures and looking to streamline operations and technology in the wake of a growing skills crisis, rising costs and a myriad of compliance requirements. With many of us starting the New Year with well-intended ‘new year, new you’ resolutions, it seems that many security professionals are doing the same.”

Attracting 8,500 responses, the Infosecurity Europe Twitter poll was conducted during the week of 7 January, the first week back for many workers, and a time when many take stock of both their personal and professional goals for the year.

Infosecurity Europe also asked its community of CISOs about their focus for 2019 and discovered that complexity is a major headache regardless of industry or size of operations.

Stephen Bonner, cyber risk partner, Deloitte highlights new and impactful challenges and advises security leaders to see the ‘big picture’. “It's often said that complexity is the enemy of security, and this remains as true today as it was twenty years ago. The difference today is that, in addition to technical complexity, companies now have to grapple with overlapping cyber security regulations, legacy technology, and intricate supply chains that stretch around the globe.

“These challenges can no longer be managed with point solutions. Security and IT leaders must consider how their technology fits into – and interacts with – the wider business and beyond. In other words, they must integrate ‘systems thinking’ into business as usual. Cyber security is now a core operational risk for many organisations, and an ability to see the big picture has rarely been so valuable.”

Nigel Stanley, Chief Technology Officer - Global OT and Industrial Cyber Security CoE at TÜV Rheinland Group, points to the challenges in the complex world of operational technology (OT), which covers everything from manufacturing plants through autonomous vehicles and power stations, and where control equipment is often old in terms of IT and often overlooked when it comes to corporate cybersecurity. “The good news is that having a New Year stock take and further considering these security systems will help you understand the key areas of business risk and help to formulate a plan to address it. In my experience the uncomplicated process of changing default passwords, screen locking the engineering workstation and educating a workforce will be time well spent in 2019. My OT security world is getting more complicated each day as fresh challenges arise. As we run fast it seems the bad guys run even faster. I plan to get some new running shoes for 2019!”

For Paul Watts, CISO at Domino’s Pizza UK & Ireland, the speed of IoT development will become increasingly challenging: “Accrediting the security posture of IoT devices is challenging for enterprises, particularly in the absence of any regulatory landscape. I welcome the voluntary code of practice issued by the Department of Culture, Media and Sport late last year. However whilst the market remains deregulated and global manufacturers not compelled to comply, it will not go far enough given the speed these products are coming onto the market coupled with the insatiable appetite of consumers to adopt them at breakneck speed – usually without any due consideration for the safety, security and interoperability in so doing.”

Staff shortages have escalated in the last three months to become the top emerging risk organizations face globally, according to Gartner, Inc.’s latest Emerging Risks Survey.

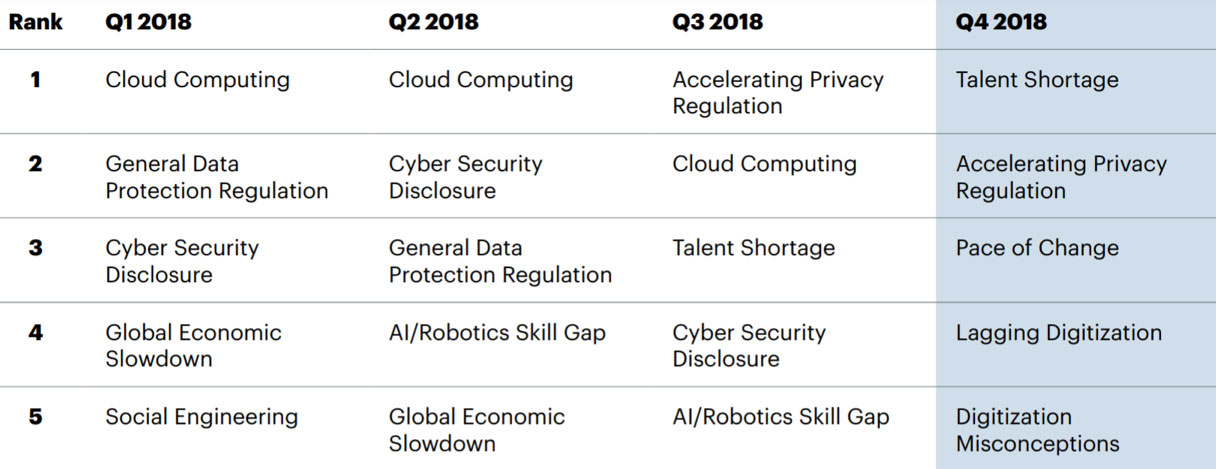

The survey of 137 senior executives in 4Q18 showed that concerns about “talent shortages” now outweigh those around “accelerating privacy regulation” and “cloud computing”, which were the top two risks in the 3Q18 Emerging Risk Monitor (see Figure 1).

“Organizations face huge challenges from the pace of business change, accelerating privacy regulations and the digitalization of their industries,” said Matt Shinkman, managing vice president and risk practice leader at Gartner. “A common denominator here is that addressing these top business challenges involves hiring new talent that is in incredibly short supply.”

Figure 1. Top Five Risks by Overall Risk Score: 1Q18, 2Q18, 3Q18, 4Q18

AI = Artificial Intelligence

Source: Gartner (January 2019)

Sixty-three percent of respondents indicated that a talent shortage was a key concern for their organization. The financial services, industrial and manufacturing, consumer services, government and nonprofit, and retail and hospitality sectors showed particularly high levels of concern in this area, with more than two-thirds of respondents in each industry signaling this as one of their top five risks.

Gartner research indicates that companies need to shift from external hiring strategies towards training their current workforces and applying risk mitigation strategies for critical talent shortages.

“Organizations face this talent crunch at a time when they are already challenged by risks that are exacerbated by a lack of appropriate expertise,” said Mr. Shinkman. “Previous hiring strategies for coping with talent disruptions are insufficient in this environment, and risk managers have a key role to play in collaborating with HR in developing new approaches.”

Talent Shortage May Exacerbate Other Key Risks

Beyond a global talent shortage, organizational leaders are grappling with a series of interrelated risks from a rapidly transforming business environment. Accelerating privacy regulation remained a key concern, dropping into second place in this quarter’s survey. Respondents indicated that the pace of change facing their organizations had emerged as the third most prominent risk, while factors related to the pace and execution of digitalization rounded out the top five emerging risks in this quarter’s survey.

Mitigation strategies to address this set of risks often come at least partially through a sound talent strategy. For example, a key Gartner recommendation in more adequately managing data privacy regulations is the appointment of a data protection officer, while both GDPR regulations and digitalization bring with them a host of specialized talent needs impacting nearly every organizational function.

“Unfortunately for most organizations, the most critical talent needs are also the most rare and expensive to hire for,” said Mr. Shinkman. “Adding to this challenge is the fact that ongoing disruption will keep business strategies highly dynamic, adding complexity to ongoing talent needs. Most organizations would benefit from investing in their current workforce’s skill velocity and employability, while actively developing risk mitigation plans for their most critical areas.”

Gartner recommends that enterprise risk teams and HR leaders collaborate to clearly define ownership of key talent risk areas that their organization is facing. “Different parts of the organization often have different pieces of information about what is actually going on from a talent risk perspective,” according to Brian Kropp, group vice president of Gartner’s HR Practice. “The best organizations are moving away from traditional engagement surveys to understand their talent risks. Building robust talent data collection and analysis techniques to better listen to their employees and identify real-time risks is a key part of this process.”

Global IT spending to reach $3.8 trillion in 2019

Worldwide IT spending is projected to total $3.76 trillion in 2019, an increase of 3.2 percent from 2018, according to the latest forecast by Gartner, Inc.

“Despite uncertainty fueled by recession rumors, Brexit, and trade wars and tariffs, the likely scenario for IT spending in 2019 is growth,” said John-David Lovelock, research vice president at Gartner. “However, there are a lot of dynamic changes happening in regards to which segments will be driving growth in the future. Spending is moving from saturated segments such as mobile phones, PCs and on-premises data center infrastructure to cloud services and Internet of Things (IoT) devices. IoT devices, in particular, are starting to pick up the slack from devices. Where the devices segment is saturated, IoT is not.

“IT is no longer just a platform that enables organizations to run their business on. It is becoming the engine that moves the business,” added Mr. Lovelock. “As digital business and digital business ecosystems move forward, IT will be the thing that binds the business together.”

With the shift to cloud, a key driver of IT spending, enterprise software will continue to exhibit strong growth, with worldwide software spending projected to grow 8.5 percent in 2019. It will grow another 8.2 percent in 2020 to total $466 billion (see Table 1). Organizations are expected to increase spending on enterprise application software in 2019, with more of the budget shifting to software as a service (SaaS).

Table 1. Worldwide IT Spending Forecast (Billions of U.S. Dollars)

| Segment | 2018 Spending | 2018 Growth (%) | 2019 Spending | 2019 Growth (%) | 2020 Spending | 2020 Growth (%) |
| --- | --- | --- | --- | --- | --- | --- |
| Data Center Systems | 202 | 11.3 | 210 | 4.2 | 202 | -3.9 |
| Enterprise Software | 397 | 9.3 | 431 | 8.5 | 466 | 8.2 |
| Devices | 669 | 0.5 | 679 | 1.6 | 689 | 1.4 |
| IT Services | 983 | 5.6 | 1,030 | 4.7 | 1,079 | 4.8 |
| Communications Services | 1,399 | 1.9 | 1,417 | 1.3 | 1,439 | 1.5 |
| Overall IT | 3,650 | 3.9 | 3,767 | 3.2 | 3,875 | 2.8 |

Source: Gartner (January 2019)
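As a rough cross-check of Table 1, the growth percentages can be recomputed from the spending columns. The sketch below is illustrative only: the dictionary and function names are assumptions, and because the published spending figures are rounded to whole billions, the recomputed rates can differ from Gartner's by a tenth or two of a percentage point.

```python
# Spending in billions of U.S. dollars for 2018, 2019 and 2020, copied from Table 1.
SPENDING = {
    "Data Center Systems": (202, 210, 202),
    "Enterprise Software": (397, 431, 466),
    "Devices": (669, 679, 689),
    "IT Services": (983, 1030, 1079),
    "Communications Services": (1399, 1417, 1439),
    "Overall IT": (3650, 3767, 3875),
}


def growth_pct(previous: float, current: float) -> float:
    """Year-on-year growth as a percentage of the previous year's spending."""
    return (current - previous) / previous * 100.0


def growth_table(spending: dict) -> dict:
    """Return {segment: (2019 growth %, 2020 growth %)}, rounded to one decimal."""
    return {
        segment: (round(growth_pct(y18, y19), 1), round(growth_pct(y19, y20), 1))
        for segment, (y18, y19, y20) in spending.items()
    }


recomputed = growth_table(SPENDING)
for segment, (g2019, g2020) in recomputed.items():
    print(f"{segment}: 2019 {g2019:+.1f}%, 2020 {g2020:+.1f}%")
```

The five segment rows also add up to the Overall IT row in each year, which is a quick way to confirm the table was transcribed consistently.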

Despite a slowdown in the mobile phone market, the devices segment is expected to grow 1.6 percent in 2019. The largest and most highly saturated smartphone markets, such as China, the United States and Western Europe, are driven by replacement cycles. With Samsung facing challenges bringing well-differentiated premium smartphones to market and Apple’s high price-to-value benefits for its flagship smartphones, consumers kept their current phones and drove the mobile phone market down 1.2 percent in 2018.

“In addition to buying behavior changes, we are also seeing skills of internal staff beginning to lag as organizations adopt new technologies, such as IoT devices, to drive digital business,” said Mr. Lovelock. “Nearly half of the IT workforce is in urgent need of developing skills or competencies to support their digital business initiatives. Skill requirements to keep up, such as artificial intelligence (AI), machine learning, API and services platform design and data science, are changing faster than we’ve ever seen before.”

Artificial intelligence (AI) is increasingly making its way into the workplace, with virtual personal assistants (VPAs) and other forms of chatbots now augmenting human performance in many organizations. Gartner, Inc. predicts that, by 2021, 70 percent of organizations will assist their employees’ productivity by integrating AI in the workplace. This development will prompt 10 percent of organizations to add a digital harassment policy to workplace regulation.

“Digital workplace leaders will proactively implement AI-based technologies such as virtual assistants or other NLP-based conversational agents and robots to support and augment employees’ tasks and productivity,” said Helen Poitevin, senior research director at Gartner. “However, the AI agents must be properly monitored to prevent digital harassment and frustrating user experiences.”

Past incidents have shown that poorly designed assistants cause frustration among employees, sometimes prompting bad behavior and abusive language toward the VPA. “This can create a toxic work environment, as the bad habits will eventually leak into interactions with co-workers,” said Ms. Poitevin.

Recent experiments have also shown that people’s abusive behavior toward AI technologies can translate into how they treat the humans around them. Organizations should consider this when establishing VPAs in the workplace and train the assistants to respond appropriately to aggressive language.

Ms. Poitevin added: “They should also clearly state that AI-enabled conversational agents should be treated with respect, and give them a personality to fuel likability and respect. Finally, digital workplace leaders should allow employees to report observed cases of policy violation.”

Back-Office Bank Employees Will Rely on AI for Nonroutine Work

Gartner predicts that, by 2020, 20 percent of operational bank staff engaged in back-office activities will rely on AI to do nonroutine work.

“Nonroutine tasks in the back offices of financial institutions are things like financial contract review or deal origination,” said Moutusi Sau, senior research director at Gartner. “While those tasks are complex and require manual intervention by human staff, AI technology can assist and augment the work of the staff by reducing errors and providing recommendations on the next best step.”

AI and automation have already been applied successfully to routine work across banks and their value chain. “In some cases, we witnessed layoffs to reduce unneeded head count, and understandably back-office staff are worried their jobs will be replaced by machines,” said Ms. Sau.

However, AI has a bigger value-add than pure automation, which is augmentation. “The outlook for AI in banking is in favor of proactively controlling AI tools as helpers, and those can be used for reviewing documents or interpreting commercial-loan agreements. Digital workplace leaders and CIOs should also reassure workers that IT and business leaders will ‘deploy AI for good’,” concluded Ms. Sau.

Technology seen as the greatest opportunity for organizations despite concerns about Artificial Intelligence.

Sword GRC, a supplier of specialist risk management software and services, has published the latest findings from its annual survey of global risk managers. Almost 150 Risk Managers from highly risk-aware organizations worldwide were canvassed for their opinions. Overall, cybersecurity was seen as the biggest risk to business by a quarter of organizations. In the UK, Brexit and the resulting potential economic fall-out was cited as the biggest risk to business by 14% of Risk Managers. The most notable regional variation was in the US where 40% of organizations see cybersecurity as the most threatening risk. The most lucrative opportunities for business were the benefits and efficiencies achieved by harnessing technology followed by expansion into new markets or sectors.

The Risk Managers were also asked about their awareness of, and preparations for, Black Swans (events that are highly unlikely to materialize but would have a substantial impact if they did). In both the US and UK, a major terrorist attack on the business is seen as the most likely Black Swan (UK 29% and US 35%); in Australia/New Zealand, however, only 13% of Risk Managers thought that one was likely. The next most likely Black Swan in the US is a natural disaster, with 48% of Risk Managers thinking it was likely or highly likely. This figure was 33% in Australia and New Zealand, and in the UK, where there are fewer adverse weather events and no major fault lines in the earth’s crust, it was just 27%.

In the UK, Risk Managers were far more wary of Artificial Intelligence (AI) with 23% thinking it likely or highly likely that AI could get out of control. In the US this figure was 15%, and in Australia/New Zealand they clearly take a far more sanguine view with no one surveyed thinking AI was a risk.

Keith Ricketts, VP of Marketing at Sword GRC, commented: “We are delighted to see the Active Risk Manager Survey going from strength to strength with a record number of responses in 2018. As Risk continues to grow in importance and influence in the Boardroom, we have this year focused on the biggest threats and most lucrative opportunities facing business. That cybersecurity is now recognised as the single biggest risk for many organizations is no surprise to us, as it supports the anecdotal evidence we have seen working with our clients in some of the most risk aware industries globally.

“Technology is a great enabler and that has never been more true. The feedback we have from our Risk Managers is that information technology is the key to almost every opportunity for business going forward, whether that is supporting expansion into new markets and geographies, streamlining processes to gain efficiency or harnessing big data and artificial intelligence to power product development and business performance.”

New data from Synergy Research Group shows that the number of large data centers operated by hyperscale providers rose by 11% in 2018 to reach 430 by year end.

In 2018 the Asia-Pac and EMEA regions featured most prominently in terms of new data centers that were opened, but despite that the US still accounts for 40% of the major cloud and internet data center sites. The next most popular locations are China, Japan, the UK, Australia and Germany, which collectively account for another 30% of the total. During 2018 new data centers were opened in 17 different countries with the US and Hong Kong having the largest number of additions. Among the hyperscale operators, Amazon and Google opened the most new data centers in 2018, together accounting for over half of the total. The research is based on an analysis of the data center footprint of 20 of the world’s major cloud and internet service firms, including the largest operators in SaaS, IaaS, PaaS, search, social networking, e-commerce and gaming.

On average each of the 20 firms had 22 data center sites. The companies with the broadest data center footprint are the leading cloud providers – Amazon, Microsoft, Google and IBM. Each has 55 or more data center locations with at least three in each of the four regions – North America, APAC, EMEA and Latin America. Alibaba and Oracle also have a notably broad data center presence. The remaining firms tend to have their data centers focused primarily in either the US (Apple, Facebook, Twitter, eBay, Yahoo) or China (Baidu, Tencent).

“Hyperscale growth goes on unabated, with company revenues growing by an average 24% per year and their capex growing by over 40% - much of which is going into building and equipping data centers,” said John Dinsdale, a Chief Analyst and Research Director at Synergy Research Group. “In addition to the 430 current hyperscale data centers we have visibility of a further 132 that are at various stages of planning or building. There is no end in sight to the data center building boom.”

Customers face uncertain times – the pace of change, digital transformation, security, Brexit, compliance and of course, being able to concentrate on their main line of work. They are turning to MSPs in particular for help, and those MSPs in turn need to be in a position to advise and provide a strategy. Standing still is not an option; managed services is a natural way to meet those extra customer needs without requiring them to commit to major capital spending and so is expected to continue to grow in 2019.

Worldwide IT spending is projected to total $3.76tn in 2019, an increase of 3.2% on 2018, according to the latest forecast by Gartner, with enterprise software up 8% in 2019 and up by a similar amount in 2020. The limiting factor, however, is resource to implement these changes, and this is where managed services plays its part.

Skills are in short supply. Customers’ management may have its heart set on using data analytics, on ensuring compliance with regulatory requirements and on planning for the future, but it can’t do all this on its own.

Managed services suppliers and providers are being asked to do much more than offer technology. Increasingly, they are being asked to talk customers through managing change for staff, drawing up a management plan as well as an IT strategy, spotting the gaps and matching resources to meet new demands. Gartner’s forecast in January 2019 says this is one of the key issues: half of the IT workforce is underskilled and cannot support digital initiatives. When it takes six months to hire externally and nine months to retrain, outsourcing to managed services looks more and more attractive. The key is presenting a skills and business alignment process.

“Despite uncertainty fuelled by recession rumours, Brexit, and trade wars and tariffs, the likely scenario for IT spending in 2019 is growth,” said John-David Lovelock, research vice president at Gartner. “However, there are a lot of dynamic changes happening with regards to which segments will be driving growth in the future. Spending is moving from saturated segments such as mobile phones, PCs and on-premises data centre infrastructure to cloud services and Internet of Things (IoT) devices.”

Organisations are expected to increase spending on enterprise application software in 2019, with more of the budget shifting to software as a service (SaaS) and managed services. With the shift to cloud, a key driver of IT spending, enterprise software will continue to exhibit strong growth, with worldwide software spending projected to grow 8.5% in 2019, Gartner says. It will grow another 8.2% in 2020 to total $466bn.

“In addition to buying behaviour changes, we are also seeing skills of internal staff beginning to lag as organisations adopt new technologies to drive digital business,” he says. “Nearly half of the IT workforce is in urgent need of developing skills or competencies to support their digital business initiatives. Skill requirements to keep up with technologies such as artificial intelligence (AI), machine learning, API and services platform design and data science, are changing faster than we’ve ever seen before.”

The agenda for the 2019 European Managed Services and Hosting Summit, in Amsterdam on 23 May, aims to reflect these new pressures and build the skills of the managed services industry in addressing the wider issues of engagement with customers at a strategic level. Experts from all parts of the industry, plus thought leaders with ideas from other businesses and organisations will share experiences and help identify the trends in a rapidly-changing market.

Gartner’s VP of research Mark Paine will deliver a vital keynote at the MSH summit in Amsterdam entitled “Working with customers and their chaotic buying processes”. This will be a view on how the changed customer buying process has become hard to monitor and hard to follow, and can be abruptly foreshortened. He will also ask who the real customers are anyway.

Angel Business Communications is seeking nominations for the 2019 Datacentre Solutions Awards (DCS Awards).

The DCS Awards are designed to reward the product designers, manufacturers, suppliers and providers operating in the data centre arena and are updated each year to reflect this fast-moving industry. The Awards recognise the achievements of the vendors and their business partners alike. This year they encompass a wider range of project, facilities and information technology award categories, together with two Individual categories, and are designed to address all the main areas of the datacentre market in Europe.

The DCS Awards team is delighted to announce Kohler Uninterruptible Power as the Headline Sponsor for this year’s event. Previously known as Uninterruptible Power Supplies Ltd (UPSL), a subsidiary of Kohler Co, and the exclusive supplier of PowerWAVE UPS, generator and emergency lighting products, UPSL is changing its name to Kohler Uninterruptible Power (KUP), effective March 4th, 2019.

UPSL’s name change is designed to ensure the company’s name reflects the true breadth of the business’ current offer, which now extends to UPS systems, generators, emergency lighting inverters, and class-leading 24/7 service, as well as highlighting its membership of Kohler Co. This is especially timely as next year Kohler will celebrate 100 years of supplying products for power generation and protection. Kohler Uninterruptible Power Ltd prides itself on delivering industry-leading power protection solutions and services.

The 2019 DCS Awards feature 26 categories across four groups. The Project Awards categories are open to end use implementations and services that have been available before 31st December 2018. The Innovation Awards categories are open to products and solutions that have been available and shipping in EMEA between 1st January and 31st December 2018. The Company nominees must have been present in the EMEA market prior to 1st June 2018. Individuals must have been employed in the EMEA region prior to 31st December 2018.

The editorial panel at Angel Business Communications will validate entries and announce the final short list to be forwarded for voting by the readership of the Digitalisation World stable of publications during April. The winners will be announced at a gala evening on 16th May at London’s Grange St Paul’s Hotel.

Nomination is free of charge and each entry can include up to four supporting documents to enhance the submission. The deadline for entries is 1st March 2019.

Please visit www.dcsawards.com for rules and entry criteria for each of the following categories:

DCS PROJECT AWARDS

Data Centre Energy Efficiency Project of the Year

New Design/Build Data Centre Project of the Year

Data Centre Consolidation/Upgrade/Refresh Project of the Year

Cloud Project of the Year

Managed Services Project of the Year

GDPR compliance Project of the Year

DCS INNOVATION AWARDS

Data Centre Facilities Innovation Awards

Data Centre Power Innovation of the Year

Data Centre PDU Innovation of the Year

Data Centre Cooling Innovation of the Year

Data Centre Intelligent Automation and Management Innovation of the Year

Data Centre Safety, Security & Fire Suppression Innovation of the Year

Data Centre Physical Connectivity Innovation of the Year

Data Centre ICT Innovation Awards

Data Centre ICT Storage Product of the Year

Data Centre ICT Security Product of the Year

Data Centre ICT Management Product of the Year

Data Centre ICT Networking Product of the Year

Data Centre ICT Automation Innovation of the Year

Open Source Innovation of the Year

Data Centre Managed Services Innovation of the Year

DCS Company Awards

Data Centre Hosting/co-location Supplier of the Year

Data Centre Cloud Vendor of the Year

Data Centre Facilities Vendor of the Year

Data Centre ICT Systems Vendor of the Year

Excellence in Data Centre Services Award

DCS Individual Awards

Data Centre Manager of the Year

Data Centre Engineer of the Year


Energy Supply, Power and the Challenges Caused by a Bunch of Zeros and Ones.

By Steve Hone, CEO and Founder of DCA Global, the Data Centre Trade Association

As a Global Event Partner for Data Centre World, I have been doing a great deal of research on behalf of the team for the 6th Generation Data Centre Zone, which will be unveiled for the first time at DCW London in March. During this research I came across many interesting articles and stats; some new, and some a little older but still very relevant today. The inspiration for this month’s foreword comes from this research, along with the “Powering Data Centres” theme for this month’s journal.

Although most of our focus remains firmly on energy usage within the data centre itself, there is equally growing consideration given to the way the energy is generated in the first place and the losses incurred over the network before it even reaches our data centres.

What can you do as a large power consumer to improve energy sustainability and lessen the carbon impact of these losses? As a business this largely depends on the country you reside in. Assuming your data centre draws power directly from a national power grid, you can look to procure your supply from a power generator with less carbon impact (e.g. hydro, nuclear and geothermal). In many cases, where these are available, such contracts encourage further investment in non-fossil fuels and continue the move towards more sustainable systems. Transmission and distribution losses will tend to be the same, but by trying to eliminate fossil fuels you can lower the overall carbon impact of powering your data centre.

Increased Power requirements and the resultant thermal challenges

Escalating compute requirements are continuing to create power and thermal challenges for today’s data centre managers. Although there are still many 2-4 kW racks in circulation, the introduction of high-density racks and blade servers meant that data centre design had to change and evolve. These architectures are inherently more scalable, adaptable and manageable than traditional platforms, and they deliver much-needed relief in complex and crowded data centres. They also introduce power and thermal loads that are substantially higher than those of the systems they replace. In many cases, they have already pushed the cooling infrastructures of older design facilities beyond their limits.

The long-term solution to these challenges requires broad industry innovation and collaboration. This needs to come not only from the organisations whose job it is to cool things down when they get hot, but also from the guys who are generating the heat in the first place: the server manufacturers themselves.

PUE is like Marmite: love it or hate it, what can’t be denied is that it has helped focus us on improving the way we design and operate our data centres. Although there is always more we could do, most of the data centres that follow the best practice guidelines of the EU Code of Conduct have already harvested the low-hanging fruit, and the only thing left to improve is the compute itself, which is often outside their control. As a result, I fear we may have reached the point where only a concerted effort on both sides of the “1” will return any further major dents in overall performance or energy savings. With the support of the DCA, progress is being made on this front; we have already seen the development of many liquid-based alternatives to traditional air cooling and continued R&D into the next generation of processors designed to run both faster and hopefully cooler.
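To make the “both sides of the 1” point concrete: PUE is the ratio of total facility energy to the energy used by the IT equipment, so a PUE of 1.2 means 0.2 units of overhead for every unit of IT load. The sketch below uses made-up figures (1,000 kWh of IT load and 200 kWh of overhead are assumptions for illustration, as are the function names) to show why facility-side improvements alone have a ceiling.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy divided by IT energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def max_saving_from_overhead(pue_value: float) -> float:
    """Fraction of total energy saved if all non-IT overhead vanished (PUE of 1.0)."""
    return (pue_value - 1.0) / pue_value


# Hypothetical site: 1,000 kWh of IT load plus 200 kWh of cooling and power overhead.
example_pue = pue(total_facility_kwh=1200.0, it_equipment_kwh=1000.0)
ceiling = max_saving_from_overhead(example_pue)

print(f"PUE = {example_pue:.2f}")
print(f"Best case from removing all overhead: {ceiling:.1%} of total energy")
```

In this hypothetical case, even perfect cooling and power distribution would trim only about a sixth of the total. Any saving beyond that has to come from the IT load itself, which is the other side of the “1”.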

How can zeros and ones cause so much trouble?

Forbes reports that collectively we are creating 2.5 quintillion bytes of new data every day. If you consider that managing and transporting this much data consumed more energy worldwide than the whole of the United Kingdom did last year, you quickly see how all those little zeros and ones soon add up. The shift to cloud is helping to relieve the pressure locally for many businesses as they migrate applications over to the hyperscalers to reduce costs, risk, IT complexity and their own carbon footprint. However, although these cloud providers are far better equipped to handle your data, it’s important to recognise that the issue does not go away by simply outsourcing the problem, as technically you are just moving it from your back yard to someone else’s.

This exponential rise in the amount of data we are generating and storing is equally being driven by all of us on a personal level as well. A rapid increase in the popularity of streaming video, use of social media platforms and a complete reluctance to delete anything we think has intrinsic or sentimental value are all adding to the massive mountain of data we are generating. This apparently is also just the tip of the iceberg, and we haven’t even considered the additional processing power required for Artificial Intelligence (AI).

Experts forecast that an explosion of Artificial Intelligence and internet-connected devices is on its way, with IoT projected to exceed 20 billion devices/sensors by 2020. Although I don’t necessarily subscribe to the analysts’ timeline here, you can’t ignore the potential impact this could have. We have roughly 10 billion internet-connected devices today, and doubling that to 20 billion will require a massive increase in our data centre infrastructure and the reservation of even more power to maintain service availability to a hungry and rapidly growing consumer base.

Thank you again for all the contributions made by DCA members this month. The theme for the next edition of the DCA Journal focuses on “workforce sustainability”, already a big problem for our sector, which has no quick fixes and needs to be collectively addressed. Joe Kava, VP of data centres for Google, summed it up nicely in a keynote address at last year’s Data Centre World conference when he said, “The greatest threat we’re facing is the race for talent.” So please take advantage of this opportunity to publish your thoughts on this subject by contacting amandam@dca-global.org for copy details and deadlines.

Energy storage at Johan Cruyff Arena in Amsterdam shows the way to Data Centres

By Robbert Hoeffnagel, Green IT Amsterdam

The European EV-Energy project is working hard to map and promote legislation and regulations of local and provincial governments that can accelerate what is officially called 'decarbonisation of the energy and mobility sector'. This also affects the integration of data centres and smart grids. A project on battery storage at the Johan Cruyff Arena in Amsterdam shows how this can be achieved in practice and the benefits that this can bring.

Last summer, the Johan Cruyff Arena in Amsterdam officially launched a battery system for storing electrical energy. This opening followed an earlier project of the stadium where a large part of the roof was filled with solar panels. Generating energy through solar panels is interesting - especially if this energy can also be used immediately. For the Arena, however, many of the activities that take place here are planned in the evening hours. Storage of the energy generated by solar panels in batteries was therefore an important next step.

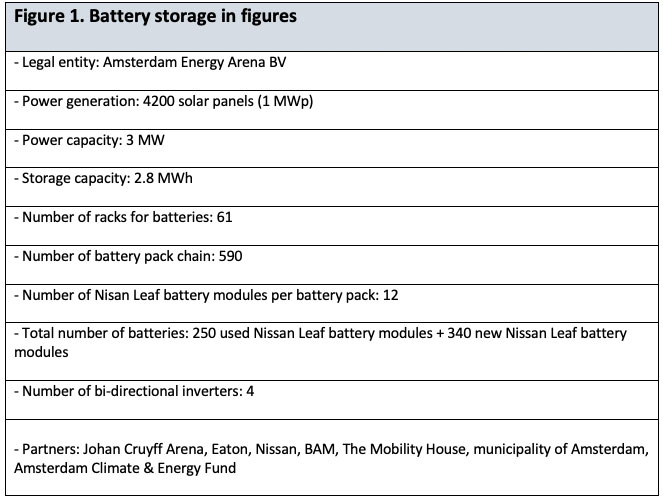

61 racks of batteries

It is therefore logical that last year's opening of a hall with 61 racks full of batteries has already received significant attention. More than six months on, it is becoming increasingly clear how important this project is - especially for the data centre industry. As Figure 1 shows, this project is not only about storing energy in batteries. In order to justify the relatively high costs of batteries, we need to develop a business case that is as broadly defined as possible. In other words: the batteries should be used in as many ways as possible so that the investments can be recouped. That is precisely what makes this project very relevant for data centre operators, who are now also discussing the possibilities that arise from integrating batteries and UPS systems with the energy networks of grid operators.

As shown in Figure 1, a subsidiary of the Johan Cruyff Arena - called Amsterdam Energy Arena BV - has invested in a room filled with 61 racks full of batteries. These come from Nissan's electric car, the Leaf. After a number of years, the capacity of the batteries of these cars drops from 100% to 80%. This decline means that the batteries are no longer suitable for use in an electric car and therefore need to be replaced. What to do with so many ‘useless’ car batteries? It turns out that these batteries are still perfectly suitable for storing electrical energy in, for example, an energy storage system linked to solar panels. The Amsterdam Arena has now installed 61 racks with 590 battery packs, good for 3 MW of power and 2.8 MWh of storage.

Generating and using

What exactly does the Arena use the stored energy for? First of all (see figure 2), to compensate for the mismatch between the moment of generation and the time of use. The 4200 solar panels on the roof of the stadium generate electrical energy during the day, while many sports matches and concerts, for example, require energy in the evening hours. These are serious amounts of energy. If the Arena is running at full load in the evening, the energy stored in the batteries is sufficient to meet the energy requirements for an hour. If not all systems are switched on, the Arena can extend this period to 3 hours. Outside this period, energy will have to be taken from the grid.
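The run times quoted here can be checked with simple arithmetic. The sketch below assumes that "full" operation corresponds to the storage system's 3 MW rating and that the whole 2.8 MWh is usable; it ignores conversion losses and depth-of-discharge limits, and the function names are illustrative only.

```python
def discharge_hours(energy_mwh: float, load_mw: float) -> float:
    """Hours a store of energy_mwh lasts at a constant load of load_mw."""
    if load_mw <= 0:
        raise ValueError("load_mw must be positive")
    return energy_mwh / load_mw


def average_load_mw(energy_mwh: float, hours: float) -> float:
    """Constant load (MW) that drains energy_mwh in exactly `hours`."""
    if hours <= 0:
        raise ValueError("hours must be positive")
    return energy_mwh / hours


# Figures taken from the article.
ENERGY_MWH = 2.8
RATED_POWER_MW = 3.0

# Assumption: the Arena's full evening load equals the rated power.
full_load_hours = discharge_hours(ENERGY_MWH, RATED_POWER_MW)

# Average load that would stretch the same energy over three hours.
three_hour_load_mw = average_load_mw(ENERGY_MWH, 3.0)

print(f"Run time at rated power: {full_load_hours * 60:.0f} minutes")
print(f"Average load for 3 hours: {three_hour_load_mw:.2f} MW")
```

Under these assumptions the store lasts a little under an hour at rated power, and a three-hour evening corresponds to an average draw of just over 0.9 MW.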

It is interesting to note that it is of course not necessary to draw maximum electrical energy from the batteries every evening. At times when there are no events planned Amsterdam Energy Arena BV can use the storage capacity in other ways. This is shown in figure 2. Think of energy services that are delivered to the grid. This will give the local grid operator more and better opportunities to keep the grid in balance. This can be done by temporarily storing energy from the grid in the batteries of the Arena or by drawing energy from them and transferring that electrical energy to the network.

Peak shaving

However, the Amsterdam Energy Arena also provides other services; for example, electric or hybrid cars can be charged via bi-directional charging stations in the stadium. But the other way around is also possible: temporary energy storage in the batteries of these cars. Peak shaving is also possible. Depending on supply and demand, peaks and troughs in energy consumption can be absorbed by using energy from the batteries.
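A minimal sketch of peak shaving follows, assuming a fixed grid-import threshold: the battery discharges when demand exceeds the threshold and recharges when demand is below it. The hourly load profile and the 2,500 kW threshold are hypothetical; only the 2,800 kWh capacity and 3,000 kW power rating come from the article. Charging and discharging losses are ignored.

```python
def peak_shave(load_kw, threshold_kw, capacity_kwh, max_power_kw,
               stored_kwh, step_h=1.0):
    """Return (grid import per step in kW, energy left in the battery in kWh).

    Above the threshold the battery discharges to cap grid import;
    below it the battery recharges without pushing import over the threshold.
    """
    grid_kw = []
    for load in load_kw:
        if load > threshold_kw:
            # Discharge: limited by the excess, the power rating and the stored energy.
            power = min(load - threshold_kw, max_power_kw, stored_kwh / step_h)
            stored_kwh -= power * step_h
            grid_kw.append(load - power)
        else:
            # Charge: limited by the headroom, the power rating and the free capacity.
            power = min(threshold_kw - load, max_power_kw,
                        (capacity_kwh - stored_kwh) / step_h)
            stored_kwh += power * step_h
            grid_kw.append(load + power)
    return grid_kw, stored_kwh


# Hypothetical hourly demand in kW, with an evening peak.
hourly_load_kw = [1000, 1500, 4000, 3500, 1200]

grid_import_kw, remaining_kwh = peak_shave(
    hourly_load_kw,
    threshold_kw=2500,     # hypothetical import limit
    capacity_kwh=2800,     # 2.8 MWh, from the article
    max_power_kw=3000,     # 3 MW, from the article
    stored_kwh=2800.0,     # assumption: battery starts full
)
print(grid_import_kw, remaining_kwh)
```

In this example the grid never sees more than the threshold, while the battery absorbs the peak and refills during the final, quieter hour.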

Another remarkable application: backup power during events. Many major artists who give concerts in venues such as the Amsterdam Arena generally do not rely on the backup energy supply in the venues where they perform. In too many places there are problems with the quality and robustness of the network, in their experience. They prefer to bring their own diesel generators to ensure uninterrupted power supply during their events. With all the extra costs that entails, of course. In the case of the Arena, this is no longer necessary as these artists can now call on the battery storage.

Future for data centres

With this energy storage system the Johan Cruyff Arena in Amsterdam is an interesting example of what might be the future of many data centres. European projects such as EV Energy and CATALYST are working hard to enable the integration of data centres and smart grids. Batteries and UPS systems at the data centre are connected to the grid via smart management software. The advantages for grid operators are then, of course, clear. As with the Amsterdam Arena, they can use the storage capacity of a data centre - the batteries installed there - to help keep the network stable. Because data centres may invest more in renewable energy generation, they may also be able to supply energy to the network. Peak shaving and a better organised form of backup power are also possible.

Of course, this also creates interesting opportunities for data centres. Until now, they have operated on the basis of a business model that has only one financial pillar: selling space for processing data. Especially in many commercial data centres we see that the margins on projects of this kind tend to decline: the projects are getting bigger, but the margins are getting smaller. However, integrating the data centre with the smart grid makes it possible to put what we will simply call 'grid services' as a second financial pillar under the business model of a data centre. Provided this is done on the basis of sound agreements, new turnover will be generated: initially modest in size, of course, but with a relatively high margin.

Financial possibilities

The same applies, of course, to data centres that in the future want to supply residual heat to customers for a fee. These transactions will also have a relatively high margin and can therefore make an interesting financial contribution to the operation of data centres.

The battery storage project in the Johan Cruyff Arena (figure 2) could very well serve as an example to the data centre industry. Although the storage capacity at the stadium is not yet sufficient to supply energy to external customers, this project does show that developing and delivering energy services offers interesting opportunities to data centres. The energy transition facing the data centre and ICT sector could thus offer unexpectedly great opportunities - not least financially.

Why do we always blame the battery when the lights go out?

The preservation of power is crucial to the operation of a datacentre and every facility will have many battery back-up systems to prevent a mains failure from being a catastrophic disaster.

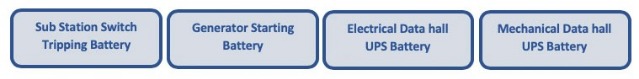

These systems can be identified under two categories.

1) Critical Infrastructure Systems, such as:

2) Essential Facilities Systems, such as:

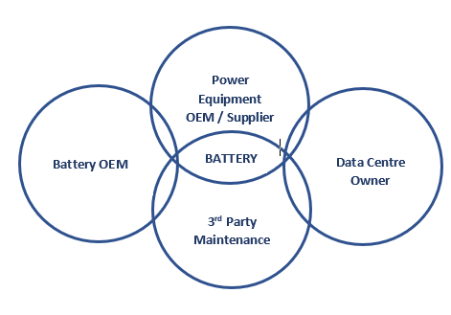

Clearly the battery underpins each standby power system. Because it is by definition a sub-component, however, the battery manufacturer is not directly part of the datacentre supply chain, either in the design and build phase or during operation of the facility.

With such an array of different types of systems that use batteries, accountability for battery maintenance therefore falls either within the equipment vendors’ Service Level Agreements (SLAs) or to the Facility Management maintenance team.

Where SLAs make provision for vendor-neutral battery supply, generic battery warranty compliance is managed by the battery maintenance experts.

Where systems are not supported by SLAs, the risk of failure becomes the direct responsibility of the Facility Management team.

The complexities of successful battery maintenance are therefore key to preventing a power failure being blamed on the battery.

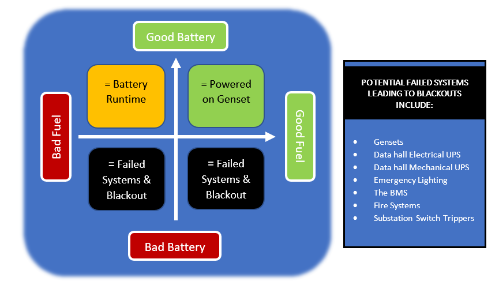

Thankfully, datacentres are designed with redundant power paths, which prevents any one battery string from being a single point of failure. Nevertheless, the battery remains the primary frontline alternative source of power in a mains outage.

As shown in the diagram, a good battery will be needed in all power outages and, while bad fuel is a single point of failure for the datacentre, the battery will protect against the majority of power outages.

This frontline reliance on the battery to cover short-duration outages makes preventative battery maintenance and the integrity of fuel quality equally important. Together they are essential for the reliability of the datacentre.

The importance of maintenance

The battery is an indirect cost within any datacentre CAPEX budget, being a component of the emergency power system equipment. This component status prevents any direct relationship between the datacentre owner and the battery manufacturer, so protecting the battery investment (battery maintenance) is left to others in the supply chain.

The OPEX budgets are issued alongside vendors’ SLAs for the supplied emergency power system equipment. For commercial reasons this allows the battery to be a non-brand-specific component, so the SLA will not be specific to the battery manufacturer’s warranty conditions.

This creates the need for battery experts to deliver specific preventative maintenance in line with the manufacturer’s warranty conditions. The correct interpretation of those conditions is crucial to ensure the battery is not blamed when the lights go out.

The IT server equipment in datacentres cannot operate on the raw utility power supplied by the grid. Datacentres therefore depend on UPS systems to provide the quality filtered mains required by IT equipment.

In addition, under the direction of Tier certifications, the redundancy and size of the UPS systems are dimensioned on the total IT load of the datahall. A Tier 3 datacentre with a 1000 kW datahall will therefore have, as a minimum, a dual path UPS design which gives, say, 15 minutes of run time protection.
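As a rough illustration of this dimensioning, the sketch below estimates the battery energy behind the 1000 kW, 15 minute example. It assumes that each of the two paths is sized to carry the full IT load on its own, which the text does not state, and it leaves out ageing margins and temperature derating. The `inverter_efficiency` parameter is a hypothetical knob for UPS conversion losses.

```python
def ups_battery_energy_kwh(it_load_kw: float, runtime_min: float,
                           paths: int = 2,
                           inverter_efficiency: float = 1.0):
    """Return (energy per path, total energy) in kWh.

    Assumption: every path must support the full IT load for the
    whole run time, so the total scales with the number of paths.
    """
    if not 0 < inverter_efficiency <= 1:
        raise ValueError("inverter_efficiency must be in (0, 1]")
    per_path = it_load_kw * (runtime_min / 60.0) / inverter_efficiency
    return per_path, per_path * paths


# Figures from the article: 1000 kW datahall, 15 minutes, dual path.
per_path_kwh, total_kwh = ups_battery_energy_kwh(1000, 15, paths=2)
print(f"Per path: {per_path_kwh:.0f} kWh, total: {total_kwh:.0f} kWh")

# Same case with a hypothetical 95% UPS conversion efficiency.
lossy_per_path_kwh, _ = ups_battery_energy_kwh(1000, 15, inverter_efficiency=0.95)
print(f"Per path at 95% efficiency: {lossy_per_path_kwh:.0f} kWh")
```

Even this simplified view shows why the battery is a significant investment: the dual path design doubles the stored energy that has to be bought, maintained and eventually replaced.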

The investment in the UPS system may be for 10 years, but the battery may only be a 5 year product because of cost, and even a 10 year product may be changed during its life. Add in the scenario of battery replacement with a different brand, and managing the battery maintenance becomes complex enough to require specialist knowledge if we are going to keep the lights on.

The battery manufacturer will determine the end of life in terms of the design life capacity value of the battery, with the recommendation to perform a 3 hour constant current or constant power discharge test (with a DC load bank) to determine the battery capacity. This is normal for industries such as oil & gas, petrochemicals and power generation, where emergency back-up systems are predominantly batteries and chargers.
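One common way to express the result of such a discharge test is the ratio of the time actually achieved before the end voltage is reached to the rated test time. The sketch below uses that time-ratio approach as an assumption; the manufacturer's own procedure, including any temperature correction, takes precedence. The 80% replacement threshold is an illustrative default, not a figure from this article, and the measured time is hypothetical.

```python
def capacity_percent(actual_minutes: float, rated_minutes: float) -> float:
    """Capacity as a percentage of rated, from a constant-load discharge test."""
    if rated_minutes <= 0:
        raise ValueError("rated_minutes must be positive")
    return 100.0 * actual_minutes / rated_minutes


def below_threshold(capacity_pct: float, threshold_pct: float = 80.0) -> bool:
    """True if the capacity is under the end-of-life threshold.

    The 80% default is an assumption for illustration only; use the
    manufacturer's stated end-of-life criterion in practice.
    """
    return capacity_pct < threshold_pct


RATED_MINUTES = 180.0      # the 3 hour test mentioned in the text
measured_minutes = 153.0   # hypothetical test result

measured_pct = capacity_percent(measured_minutes, RATED_MINUTES)
print(f"Measured capacity: {measured_pct:.1f}% of rated")
print("Replace" if below_threshold(measured_pct) else "Keep in service")
```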

Battery performance will be verified as part of the UPS autonomy run time test, which is usually performed annually with an AC load bank. Careful year-on-year analysis of battery performance can help determine the end of life of the battery.
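Year-on-year analysis can be as simple as fitting a trend to the annual autonomy results and estimating when the run time will fall below the design requirement. The sketch below uses a straight-line least-squares fit, which is a simplifying assumption: real batteries tend to lose capacity faster towards the end of their life, so a linear estimate is optimistic. The annual readings are hypothetical.

```python
def linear_fit(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def year_runtime_falls_below(years, runtimes_min, required_min):
    """Year at which the fitted trend crosses required_min, or None if not declining."""
    slope, intercept = linear_fit(years, runtimes_min)
    if slope >= 0:
        return None
    return (required_min - intercept) / slope


# Hypothetical annual autonomy test results (minutes of run time).
test_years = [1, 2, 3, 4]
runtimes_min = [20.0, 19.0, 18.0, 17.0]

# Design requirement of 15 minutes, as in the example earlier in the article.
crossing_year = year_runtime_falls_below(test_years, runtimes_min, required_min=15.0)
print(f"Trend reaches 15 minutes around year {crossing_year:.1f}")
```

A forecast like this is only a prompt for planning a replacement budget; the block-level measurements described later give earlier warning of individual failures.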

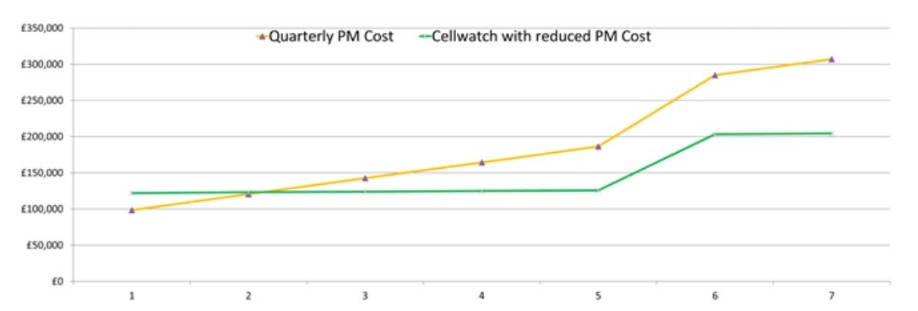

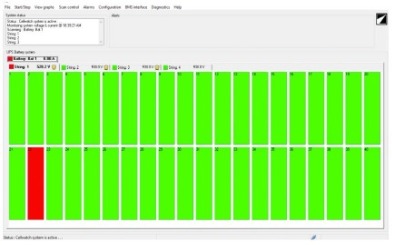

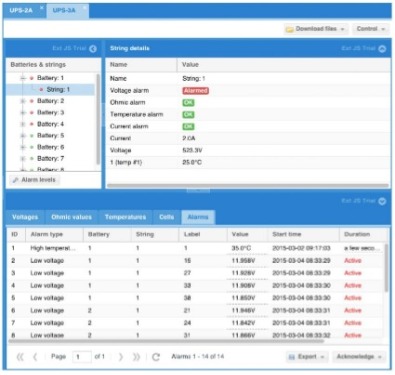

However, because the run time is short, the test engineer cannot measure the individual battery blocks during an autonomy test. There is therefore also a need to deploy specialist 3rd party battery experts to carry out more frequent maintenance work on the battery system.

Such maintenance will include taking impedance, conductance or resistance measurements on the individual battery blocks. Which type of reading is taken depends on the preference of the 3rd party expert.

These readings can also be taken with permanent battery monitoring systems, and collating all this data helps identify problems with the batteries.
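One simple way to use collated block readings is to compare each block against the rest of its string and flag the outliers. The sketch below flags any block whose internal resistance is more than 30% above the string median. Both the 30% limit and the readings are hypothetical; in practice the limits would come from the manufacturer's guidance or from each block's own baseline at commissioning.

```python
from statistics import median


def flag_suspect_blocks(readings_mohm: dict, max_rise: float = 0.30) -> dict:
    """Return the blocks whose reading exceeds the string median by more than max_rise.

    readings_mohm maps a block label to its internal resistance in milliohms.
    max_rise is a fraction (0.30 = 30%) and is an illustrative assumption.
    """
    reference = median(readings_mohm.values())
    limit = reference * (1.0 + max_rise)
    return {block: value for block, value in readings_mohm.items() if value > limit}


# Hypothetical readings for one battery string.
string_readings = {
    "B01": 3.1,
    "B02": 3.0,
    "B03": 3.2,
    "B04": 4.6,   # noticeably higher than its neighbours
    "B05": 3.1,
}

suspects = flag_suspect_blocks(string_readings)
print(suspects)
```

The same comparison works for impedance readings; for conductance the logic is inverted, since a failing block shows a falling rather than a rising value.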

Another important and somewhat overlooked part of maintenance is visual inspection. In North America such inspections fall under NERC and FERC regulation, and they form part of good practice, making a valuable additional contribution to battery maintenance.

Conclusions

Ensuring the integrity of power to the datacentre requires both a robust preventative battery maintenance system and transparent reporting for warranty compliance and end of life replacement.

Commercially, battery suppliers will want closer relationships to manage replacements and warranty claims and so safeguard potential future sales. It therefore makes sense to create relationships between facility owners, vendors and battery suppliers to increase reliability of operation.

Battery failures in the datacentre originate from the exclusion of the battery manufacturer during the design and build phase, from the battery being encompassed within generic SLAs for emergency power system equipment, and from the differences in battery maintenance recommendations from 3rd party experts.

Creating a holistic maintenance program that combines historical data, present readings and future predictions for the performance of every battery system will avoid the blame being placed on the battery when the lights go out.

Paul is presently running a small team developing a vendor neutral low cost annual subscription battery maintenance software platform called BattLife.

BattLife addresses all the maintenance issues described in this article and is due for release Qtr1 2019.

Contact: Tel: +44 161 763 3100 Web: https://hillstone.co.uk

By the IEC - International Electrotechnical Commission

A number of low voltage direct current (LVDC) trials are preparing the ground for a wider use of the technology, including for powering data centres.

Low voltage direct current (LVDC) is increasingly seen as an energy efficient method of delivering energy, as well as a way of reaching the millions of people without any access to electricity. It is fully in line with the UN’s Sustainable Development Goal of providing universal access to affordable, reliable and modern energy services by 2030.

In direct contrast to the conventional centralized model of electricity distribution via alternating current (AC), LVDC is a distributed way of transmitting and delivering power. Today, electricity is generated mostly in large utility plants and then transported through a network of high voltage overhead lines to substations. It is then converted into lower voltages before being distributed to individual households. With LVDC, power is produced very close to where it is consumed.

Using DC systems makes a lot of sense because most of the electrical loads in today’s homes and buildings – for instance computers, mobile phones and LED lighting - already consume DC power. In addition, renewable energy sources, such as wind and solar, yield DC current. There is no need to convert from DC to AC and back to DC, sometimes several times, as a top-down AC transmission and distribution set-up requires. This makes DC more energy efficient and less costly to use.
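The efficiency argument comes down to multiplication: each conversion stage passes on only a fraction of its input, so the fractions compound along the chain. The sketch below compares a path with several conversions against one with a single stage. All the stage efficiencies are hypothetical round numbers chosen for illustration; they are not measurements from any of the pilots mentioned in this article.

```python
from math import prod


def chain_efficiency(stage_efficiencies) -> float:
    """Overall efficiency of conversion stages connected in series."""
    return prod(stage_efficiencies)


# Hypothetical AC path: DC source -> inverter -> rectifier -> DC/DC regulator.
ac_path = [0.97, 0.96, 0.95]

# Hypothetical DC path: DC source -> a single DC/DC stage.
dc_path = [0.97]

ac_efficiency = chain_efficiency(ac_path)
dc_efficiency = chain_efficiency(dc_path)

print(f"AC path delivers {ac_efficiency:.1%} of the generated energy")
print(f"DC path delivers {dc_efficiency:.1%} of the generated energy")
```

With these illustrative figures, every extra conversion stage costs a few percentage points, which is the effect the LVDC approach aims to avoid.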

LVDC used for powering data centres

The environmental gains from using a more energy-efficient system supplied from renewable sources make LVDC a viable alternative for use in developed countries as well as in remote and rural locations where there is little or no access to electricity.

“The potential benefits of LVDC already have been demonstrated by a number of pilot projects and niche studies in developed nations. For example, a pilot data centre run by ABB in Switzerland running on low direct current power has shown a 15% improvement on energy efficiency and 10% savings in capital costs compared to a typical AC set-up. This is interesting because data centres consume so much power,” comments Dr Abdullah Emhemed from the Institute of Energy and Environment at Strathclyde University in the UK. Dr Emhemed leads the University’s international activities on LVDC systems. He is also a member of a systems committee on LVDC and LVDC access inside the global standard-setting organization for the electro-technical industry, the IEC.