Sensor systems are one of the fastest-growing segments of the semiconductor market. Sensors have a vast array of applications in electronics, industrial automation, avionics and military, mobile devices, consumer electronics, building and infrastructure, medical equipment, security, transportation, IT infrastructure and communication, among others.

Sensor technology is evolving consistently and relies on numerous leading-edge technologies to deliver improved functionality required by industries worldwide.

Sensor technology is driven by key factors such as low cost, chip-level integration, low power and wireless connectivity. Advances in technology and the miniaturization of devices have driven market growth in recent times. High demand from the consumer electronics and automotive industries has also boosted the market, owing to the numerous applications of sensors in these sectors.

Time to market and price sensitivity pose challenges, and fluctuating global economic conditions could also hinder the sensor market.

In 8 weeks, we will welcome 30 leading industry speakers to the second sensor event, Sensor Solutions International (26-27 March, Brussels). These industry innovators will speak on themes covering applications and developments in Transportation, Flight, Health, Imaging and Energy, to name a few.

To support the event, the first issue of our quarterly title Sensor Solutions will address key issues in the industry and report on the ever-changing dynamics of this growth market.

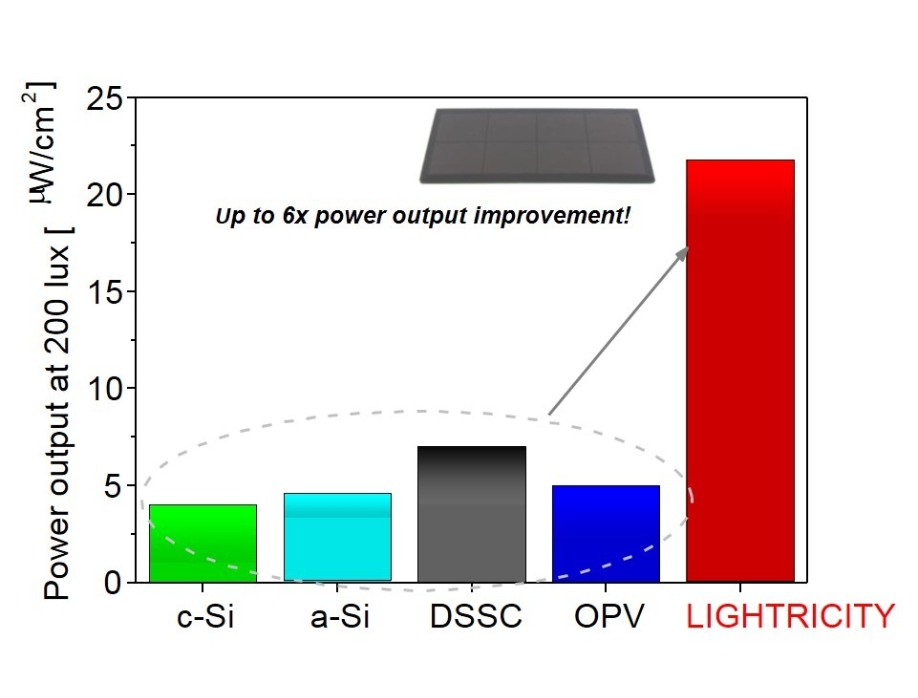

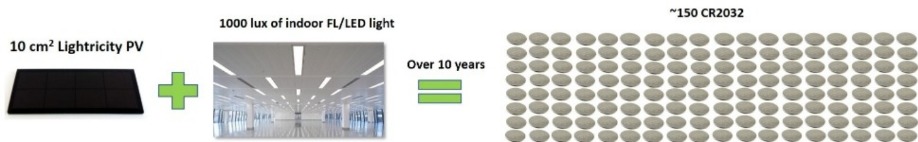

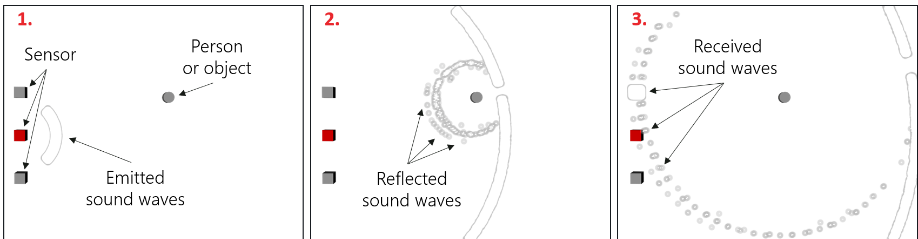

This issue is packed with features, with contributions from companies such as Toposens, which describes a “Next-level 3D Ultrasound Sensor Based on Echolocation”; Lightricity, on a “PV Energy Harvesting solution”; and InvenSense, which looks at “The Growing Role of Sensors in the IoT/IIoT”.

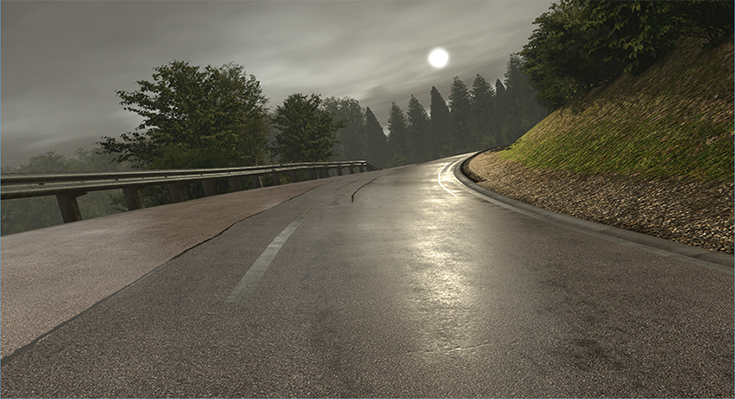

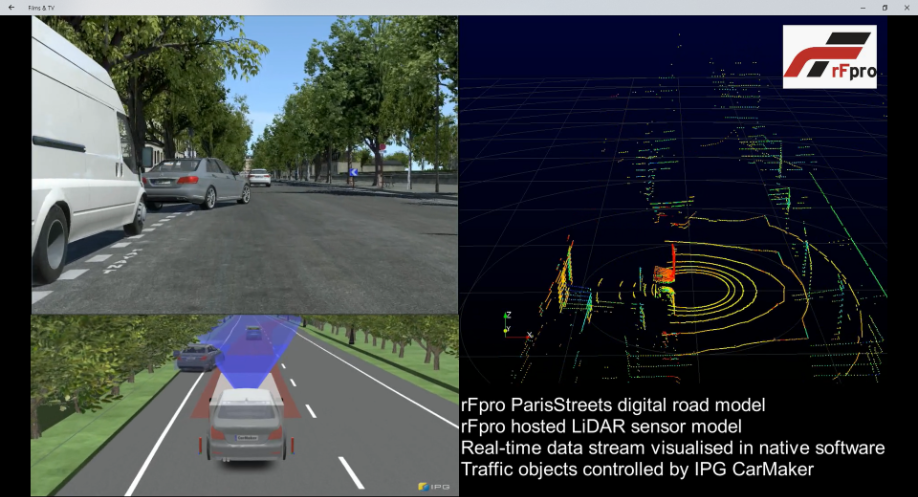

Bosch discusses “Sensors and artificial intelligence” and Analog Devices explains the demands on sensors for future servicing. We would also like to thank Euroicc for their piece on “Smart thermostats”; Claytex for “Sensor models for virtual testing of autonomous vehicles”; and ams AG for “AI in Sensors for IoT”.

If you would like to be considered for the next issue, please contact me at Jackie.cannon@angelbc.com

Jackie Cannon

In the H2020 project MIREGAS, teams from VTT Technical Research Centre of Finland Ltd and Tampere University of Technology, together with European partners, developed novel components for miniaturized gas sensors exploiting the principle of Mid-IR absorption spectroscopy. In particular, the main advances concerned the development of novel superluminescent LEDs for the 2.65 µm wavelength, Photonic Integrated Circuits (PICs) with 1 nm bandwidth for spectral filtering at Mid-IR, hot-embossed Mid-IR lenses for beam forming and photodetectors for 2 to 3 µm wavelengths. Silicon photonics PIC technology, which was originally developed for optical communication applications, allows for the miniaturization of the sensor. The components entail important benefits in terms of cost, volume production and reliability.

The market impact is expected to be disruptive, since the devices currently on the market are typically complicated, expensive and heavy instruments. The components developed by the consortium enable miniaturized integrated sensors with important benefits in terms of cost, volume production and reliability, which are instrumental features for the wide penetration of gas sensing applications.

IR spectroscopy is a powerful tool for multigas analysis. Conventional sensors are based on filters, spectrometers or tuneable lasers. The MIREGAS project has introduced breakthrough components that enable multigas analysis using an integrated solution. Moreover, with the new technology, the wavelengths of light can be filtered more precisely and interfering gas components can be excluded.
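As a rough illustration of how an absorption measurement maps to a gas concentration, the sketch below applies the Beer-Lambert law, a standard relation that the article itself does not spell out. The absorption coefficient, path length and intensities are hypothetical values chosen for illustration and are not MIREGAS data.

```python
import math


def transmitted_intensity(i0, alpha, concentration, path_length_cm):
    """Beer-Lambert law: I = I0 * exp(-alpha * c * L)."""
    return i0 * math.exp(-alpha * concentration * path_length_cm)


def concentration_from_intensity(i, i0, alpha, path_length_cm):
    """Invert the Beer-Lambert law to recover the concentration c."""
    if i <= 0 or i0 <= 0:
        raise ValueError("intensities must be positive")
    absorbance = -math.log(i / i0)
    return absorbance / (alpha * path_length_cm)


# Hypothetical numbers: alpha in 1/(ppm*cm), a 10 cm optical path, 500 ppm of gas.
ALPHA = 2e-6
PATH_CM = 10.0
measured = transmitted_intensity(1.0, ALPHA, 500.0, PATH_CM)
recovered_ppm = concentration_from_intensity(measured, 1.0, ALPHA, PATH_CM)
```

In a multigas setting, one such measurement would be taken per filtered wavelength band, which is where narrow spectral filtering helps to keep interfering gases out of each band.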

The H2020 European consortium MIREGAS brought together world-leading European institutes and multinational companies. On the technology side, VTT Technical Research Centre of Finland Ltd coordinated the programme and provided Si PICs as well as photonics packaging and integration technologies. The Optoelectronics Research Centre at the Tampere University of Technology in Finland was responsible for developing innovative superluminescent LEDs, ITME (PL) for mouldable Mid-IR lenses and VIGO (PL) for Mid-IR detectors. Industrial partners Vaisala (FI), Airoptic (PL) and GasSecure (NO) brought their competences in the areas of gas sensing and Mid-IR sensor fabrication and, at the same time, validated the technologies developed by the consortium.

"The project will enable us to extend our gas sensing technology into new market areas. The MIREGAS technology offers a unique mix of sensitivity, selectivity and competitive pricing that will be a complement to our present high-performance, laser-based sensing technology. Airoptic plans to release a first product for hydrocarbon detection based on the technology developed within the MIREGAS project in 2019," says CEO Pawel Kluczynski from Airoptic.

"Bearing in mind the potential market for devices developed in the MIREGAS project, VIGO System SA has started to implement a development plan that assumes investments in increasing technological and production capacities - which will allow for the future, high volume production of sensors based on SLED sources. The main actions of the plan are the purchase of a high-performance epitaxial machine, the expansion of the assembly capabilities and the acquisition of specialists in the field of epitaxial materials for the production of IR sources," stated Chief Sales Officer Przemysław Kalinowski from VIGO.

"Within this project, ITME was able to establish technology for low-cost Mid-IR optics development using a moulding approach. We have developed several novel glasses that are transparent from the visible up to the Mid-IR range. We established a moulding process that allows us to produce free-form optical components with optical surface quality for the Mid-IR without further polishing. This reduces production cost, and it also makes lens production more environmentally friendly, since the amount of glass waste is dramatically reduced. The grinding and polishing process, which requires large amounts of water and polishing powders, also becomes entirely redundant. We also found new materials for mould development that are low cost and can be processed with standard CNC machines. This makes our technology cost-effective for prototyping and short series of components as well. This breakthrough gives even SMEs access to custom-made free-form glass optics," says Prof. Ryszard Buczynski, head of the Department of Glass, ITME. "We are now verifying the market potential of our technology and offering access to it as a custom service through ITME. After market validation, we plan to transfer this technology to existing companies or establish a spin-off company. Some venture funds and world-recognized companies have already expressed interest in our innovations."

"At the start of the project, Mid-IR SLED technology targeting high-brightness and broadband operation simply did not exist. MIREGAS enabled an important scientific breakthrough; in fact, the project results represent the state of the art both in terms of power and wavelength coverage. Yet, perhaps most importantly, it contributed to creating a new European ecosystem based on combined expertise in Mid-IR optoelectronics and Si-photonics. We are just at the start of many other applications we will target with this powerful combination of technologies," adds Prof. Mircea Guina, the head of the ORC team at Tampere University.

"VTT's Silicon-on-Insulator (SOI) based Photonic Integrated Circuit (PIC) technology has two unique features: firstly, in addition to the conventional optical communications wavelengths at 1550 nm, it is applicable for Mid-IR wavelengths; secondly, it allows for the integration of active devices, such as laser diodes or photodetectors, directly on the PIC chip. Therefore, the SOI PIC technology is very attractive for gas sensing and sensor integration in general. In the MIREGAS project, we were able to take this offering to the next level together with the beneficiaries," stated Pentti Karioja, VTT, project coordinator.


A new sensor fusion technique based on X-ray and 3D imaging promises improvements to the 3D modelling of mineral resources and more efficient sorting of precious metals. VTT Technical Research Centre of Finland Ltd (VTT) is coordinating the sensor development process through an EU project called X-Mine in collaboration with businesses, international research institutions and mining lobbies.

The European Commission has granted EUR 9.3 million to a three-year H2020 project coordinated by VTT called X-Mine, which develops new sensor technologies for mining companies' drill core analyses and for efficient sorting of precious minerals and metals in ore. The X-Mine project also promotes more efficient 3D modelling of mining companies' mineral resources, and thus improved recovery of precious minerals. The project combines products developed by various sensor manufacturers with commercial ore dressing equipment and aims to achieve sensor fusion that allows rock with low levels of minerals to be separated from the ore. The project reached its midway point at the turn of the year and is progressing gradually to the piloting of both drill core analysers and ore dressing equipment at mines during the spring of 2019. Two drill core analysers were adopted at mines in Greece and Sweden towards the end of 2018, and members of the project consortium are currently testing new sensors for ore dressing equipment and designing ore dressing algorithms. The project consortium consists of 15 research partners and businesses from around the

Figure 1. a) Project consortium, b) Members of the consortium on a visit to Kęty in Poland to learn about the development of ore dressing equipment at a demonstration event hosted by Comex

The project gives the participating research institutions and businesses an opportunity to develop sensor-based solutions for the mining industry together with experts specialising in the exploitation of ore resources, mineral processing and geological mapping. The consortium's aim is to develop solutions for efficient ore extraction and for reducing the amount of waste generated by the ore extraction process. All in all, the project is expected to reduce the harmful environmental impacts of mining by reducing the need for ore processing and chemical processing in mineral recovery. The project consortium is also looking to lower mining companies' production costs.

The X-Mine project involves developing and piloting two prototypes to meet mining companies' needs. Mining companies extract material from the bedrock in order to analyse the location of the ore and the volume of minerals and to estimate their mineral resources. The project consortium is hoping to increase the efficiency of ore exploration by developing equipment that can be used to scan drill core samples on site using new, highly sensitive layered imaging technology based on X-ray fluorescence as well as composition analyses. Analysing and scanning drill core samples on site speeds up the evaluation of ore resources.

Figure 2. a) A drill core analyser developed by Orexplore b) Prototype of ore dressing equipment to be built in Comex's research environment

The project also expects to improve the operation of automated mineral selectivity systems at the extraction stage by establishing a sensor fusion technique. The technique combines X-ray transmission scanning along a line of highly sensitive sensors developed in the course of the project, X-ray fluorescence technology and 3D vision technology, and uses efficient algorithms to analyse the data rapidly. More efficient ore dressing increases resource efficiency and mining companies' profitability while reducing the harmful environmental impacts of mining.

The project was granted funding through the Horizon2020 instrument – the EU's research and development programme that has EUR 80 billion to award to European research initiatives over a seven-year period (between 2014 and 2020). The EU's H2020 funding programme promises more breakthroughs, discoveries and world-firsts by taking great ideas from the lab to the market. The total budget for the three-year project is EUR 11.9 million, of which EUR 9.3 million comes from the European Union.

The X-Mine project is based on international cooperation between research institutions from Finland (VTT), Sweden (Uppsala University, Geological Survey of Sweden) and Romania (Geological Institute of Romania) as well as sensor and equipment manufacturers from Finland (Advacam Oy), Poland (Antmicro Sp. z o. o. and Comex Polska Sp. z o. o), the Czech Republic (Advacam s.r.o.) and Sweden (Orexplore AB). End users involved in the project include mining companies in Bulgaria (Assarel Medet Jsc.), Greece (Hellas Gold S.A.), Cyprus (Hellenic Copper Mines Ltd) and Sweden (Lovisagruvan AB) as well as mining lobbies in Sweden (Bergskraft) and Australia (Swick Mining Services Ltd).

Sensirion is a manufacturer of environmental as well as gas and liquid flow sensors. The cost-effective LD20 single-use liquid flow sensor, which has been used for this concept study, is compact and measures flow rates in the micro and milliliter per hour range with outstanding precision and reliability thanks to the patented CMOSens Technology. Additionally, it features sensitive failure detection mechanisms to help counteract, for example, occlusions and air-in-line. Quantex is an innovative leader in single-use disposable pump technology. The pump’s design is based on a rotary, fixed-displacement principle and is thus much less sensitive to variables such as line pressure, fluid viscosity and flow rate.

The new wearable drug delivery IoT platform “Quantex 4C” demonstrates an innovative approach to infusion therapies. It integrates connectivity with a modular drug delivery platform and enables continuous monitoring of the therapy with the help of a single-use liquid flow sensor for safe ambulatory treatments. “Real time bidirectional flow verification at the low flow rates that are typical for the targeted application and the sensor’s compact form factor made the Sensirion LD20 single-use liquid flow sensor the ideal choice,” said Paul Pankhurst, Founder and CEO of Quantex. The objective of the presented study is to demonstrate the possibilities of a comprehensive drug delivery system to the medical technology industry. The compactness, low power consumption and cost-effectiveness of both sensor and pump allow the design of a wearable device which controls and monitors the drug delivery therapy simultaneously. Such a connected drug delivery platform opens up entirely new possibilities for all involved stakeholders. Patients and clinicians benefit from increased ease of use and confidence in the therapy, low maintenance and less training effort, while healthcare and pharma companies can capture vital adherence data and simplify patient compliance.

Reliable failure detection is a central requirement for next generation drug delivery devices. Ambulatory treatments and wearable applications will only be successful if patients as well as clinicians trust the reliability of those next generation devices.

Redsense Medical announces that the company has started the development of a prototype with fully functional optical measuring based on the innovative, smart wound technology that was presented in November 2018. The prototype is expected to be ready in Q2 2019.

The company's smart wound care technology makes it possible to develop thin sensor layers for optical measuring of several physiological and biological parameters such as blood and exudate. The layer of sensors can be used separately or integrated directly into smart bandages or adhesive plasters. As the technology enables very cost- and resource-effective individual wound care, it has the potential to become revolutionary for wound care.

"We have now decided to go from TRL 2 to TRL 3 on NASA's readiness level indicator for development projects, and it will be very exciting to present a working prototype before the start of the summer," says Redsense Medical's CEO Patrik Byhmer.

As announced earlier, Redsense has already initiated discussions on potential collaborations or out-licensing of the technology with large, global wound care companies. The discussions are expected to intensify and become more concrete when a working prototype can be presented and evaluated.

Redsense will present ongoing project updates during the coming months as substantial progress is expected. Based on this outlook, the smart wound care technology has the potential to build substantial value for Redsense Medical's shareholders.

Strong outlook for the wound care market

The wound care market was valued at approximately USD 18 billion in 2016, and it is expected to grow at a CAGR of 5.3 % until 2023. The primary drivers of market growth are an ageing global population, a larger number of diabetics and increasing investments in research and development, which is also expected to lead to new research discoveries in this area. Chronic wounds are expected to constitute most of the wound care market up until 2022.
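To make the growth figure concrete, the following sketch compounds the stated 2016 valuation at the stated CAGR. It assumes seven full years of compounding from 2016 to 2023, which is one reading of the sentence above rather than something the source specifies.

```python
def compound(value, annual_rate, years):
    """Project a value forward at a constant compound annual growth rate."""
    return value * (1.0 + annual_rate) ** years


BASE_2016_USD_BN = 18.0  # approximate valuation quoted in the text
CAGR = 0.053             # 5.3 % per year, as quoted
YEARS = 2023 - 2016      # assumption: compounding over seven full years

projected_2023_usd_bn = compound(BASE_2016_USD_BN, CAGR, YEARS)
print(f"Projected 2023 market size: about USD {projected_2023_usd_bn:.1f} billion")
```

Under these assumptions the market would be a little under USD 26 billion in 2023.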

The Internet of Things (IoT) is creating many exciting new opportunities to build smart environments where sensors monitor for changes so that the appropriate actions can be taken. The fastest growing examples of this are HVAC (Heating, Ventilation and Air Conditioning), IAQ (Indoor Air Quality), smart homes and smart offices, where a network of sensors monitors temperature and carbon dioxide (CO2) levels to ensure that optimal conditions are maintained with the minimum of energy expenditure. A challenge for such systems is that the CO2 sensors need mains power to operate, incurring costs for cabling and, in the case of installation in existing buildings, redecoration.

Gas Sensing Solutions (GSS) has solved this problem with its low power, LED-based sensor technology. The sensor’s power requirements are so low that wireless monitors can be built that measure CO2 levels as well as temperature and humidity with a battery life of over ten years. Being wireless means that they can be placed wherever they are required with no need for cabling or disruption, and simply relocated as building usage changes.

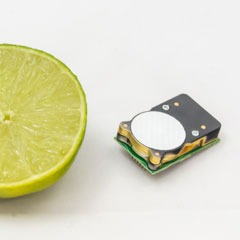

CO2 Detector with Lime

To make the design of these monitors even easier, GSS has added an I2C interface to its very low power CO2 sensor, the CozIR-LP. Having the widely used I2C interface makes integrating the sensor into a design very easy. The CozIR-LP is the lowest power CO2 sensor available, requiring only 3 mW, which is up to 50 times lower than typical NDIR CO2 sensors. The GSS patented LED technology also means that the solid-state sensor is very robust. This keeps maintenance costs to a minimum, as the expected lifetime is greater than 15 years, making the sensors the perfect choice for fit-and-forget applications that measure low (ambient) levels of CO2 from 0-1%.
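The sketch below shows how the quoted 3 mW figure might translate into an energy budget. The 150 mW comparison value is simply 50 times 3 mW, taken from the "up to 50 times lower" claim. The battery capacity and duty cycle are hypothetical parameters for illustration, not GSS specifications.

```python
HOURS_PER_YEAR = 24 * 365


def energy_wh(power_mw, hours):
    """Energy in watt-hours drawn by a constant load over a number of hours."""
    return power_mw / 1000.0 * hours


def battery_life_years(capacity_wh, power_mw, duty_cycle=1.0):
    """Years of operation for a battery of the given capacity.

    duty_cycle is the fraction of time the sensor is powered (assumption:
    negligible draw while asleep, and no battery self-discharge).
    """
    average_w = power_mw / 1000.0 * duty_cycle
    return capacity_wh / (average_w * HOURS_PER_YEAR)


daily_low_power = energy_wh(3, 24)      # sensor at the quoted 3 mW
daily_typical = energy_wh(3 * 50, 24)   # 50x higher draw, per the claim above
```

With the same battery and duty cycle, the lower-power sensor lasts 50 times longer, which is what makes battery-only wireless monitors practical.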

“Although HVAC and IAQ are major application areas,” explained Calum MacGregor, CEO at GSS, “the lightweight, miniature size of the CozIR-LP also opens up other new possibilities for CO2 monitoring such as portable and wearable devices. The power requirements are so low that energy harvesting designs, such as solar, are now easily achievable. Here again, the new feature of an I2C interface will simplify the design process of integrating the sensor with other sensors and devices all on the I2C bus.”

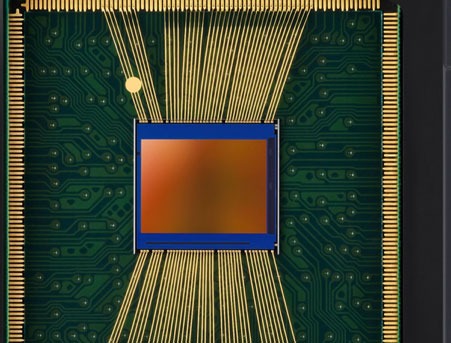

Samsung introduces its smallest high-resolution image sensor, the ISOCELL Slim 3T2, said to be the industry’s most compact 20MP image sensor at 1/3.4 inches. The 0.8μm-pixel ISOCELL Slim 3T2 is aimed at both front and back cameras in mid-range smartphones. The 1/3.4-inch 3T2 fits into a tiny module, making more space in ‘hole-in or notch display’ designs.

“The ISOCELL Slim 3T2 is our smallest and most versatile 20MP image sensor that helps mobile device manufacturers bring differentiated consumer value not only in camera performance but also in features including hardware design,” said Jinhyun Kwon, VP of System LSI sensor marketing at Samsung Electronics. “As the demand for advanced imaging capabilities in mobile devices continues to grow, we will keep pushing the limits in image sensor technologies for richer user experiences.”

When applied in rear-facing multi-camera settings for telephoto solutions, the 3T2 adopts an RGB color filter array instead of Tetracell CFA. The small size of the image sensor also reduces the height of the tele-camera module by around 7% when compared to Samsung’s 1/3-inch 20MP sensor. Compared to a 13MP sensor with the same module height, the 20MP 3T2 retains 60% higher effective resolution at 10x digital zoom.

The Samsung ISOCELL Slim 3T2 is expected to be in mass production in Q1 2019.

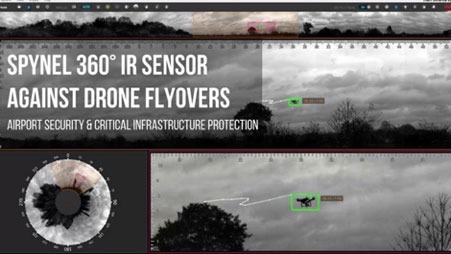

The incident at London Gatwick airport in the UK caused major travel disruption for multiple days after drones were spotted flying over this sensitive area. This incident highlighted the need for anti-drone technologies to address this evolving threat and secure the safety of flight. Following the episode, the US Federal Aviation Administration was instructed to develop a strategy to allow wider use of counterdrone technologies across airports. Detecting drones, and any UAV threat, is a real challenge for many reasons. HGH Infrared Systems, with its family of renowned SPYNEL thermal sensors, offers a unique set of solutions to address this evolving threat and ensure true, real-time airport security.

In these times of heightened UAV threats, the SPYNEL IR imaging camera provides an innovative solution which guarantees the ability to detect, track and classify any type of drone.

Drone technology is constantly evolving, bringing many different types of drones to the market, including fixed-wing and multi-rotor drones, drones with GPS, autopilot and camera, and autonomous drones emitting little or no electromagnetic signature. The SPYNEL thermal imaging technology nevertheless makes it impossible for a UAV to go unnoticed: any object, hot or cold, will be detected by the 360° thermal sensor, day and night. Driven by the CYCLOPE intrusion detection software, the panoramic thermal imaging system tracks an unlimited number of targets to ensure that no event is missed over a long-range, wide surrounding area. SPYNEL is thus fully adapted to multi-target airborne threats such as UAV swarming. SPYNEL is a versatile, multi-function sensor with a large field of view, enabling real-time surveillance of both airborne and terrestrial threats at the same time.

The CYCLOPE automatic detection software provides advanced features to monitor and analyse the 360° high resolution images captured by SPYNEL sensors. The ADS-B plugin enables aerial target identification, and the aircraft ADS-B data can be fused with thermal tracks to differentiate an airplane from a drone. With the forensics analysis offering a timeline, sequence storage and playback possibilities, it is also possible to go back in time to analyse the behaviour of the threat since its first appearance on the CYCLOPE interface. Moreover, the latest CYCLOPE feature makes 3D passive detection by triangulation available when using several SPYNEL sensors at the same time. The feature analyses the distance and altitude of multiple targets, creating a kind of "protective bubble" around the airport.
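To illustrate the general idea of passive triangulation, here is a minimal sketch that estimates a target position from the bearings reported by two sensors at known locations. It is not the CYCLOPE implementation, whose internals are not described here. The function names, the east/north/up coordinate frame and the azimuth convention (clockwise from north) are all assumptions made for this example.

```python
import math


def direction(azimuth_deg, elevation_deg):
    """Unit vector (east, north, up); azimuth is measured clockwise from north."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (math.sin(az) * math.cos(el), math.cos(az) * math.cos(el), math.sin(el))


def _dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def triangulate(p1, bearing1, p2, bearing2):
    """Midpoint of the shortest segment between two bearing rays."""
    d1, d2 = direction(*bearing1), direction(*bearing2)
    w = tuple(a - b for a, b in zip(p1, p2))
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w), _dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("bearings are parallel; no unique position fix")
    t1 = (b * e - c * d) / denom  # distance along ray 1 to its closest point
    t2 = (a * e - b * d) / denom  # distance along ray 2 to its closest point
    q1 = tuple(p + t1 * u for p, u in zip(p1, d1))
    q2 = tuple(p + t2 * u for p, u in zip(p2, d2))
    return tuple((x + y) / 2.0 for x, y in zip(q1, q2))


def bearing_to(sensor, target):
    """Azimuth and elevation (degrees) from a sensor to a known point (for testing)."""
    dx, dy, dz = (t - s for s, t in zip(sensor, target))
    azimuth = math.degrees(math.atan2(dx, dy))
    elevation = math.degrees(math.atan2(dz, math.hypot(dx, dy)))
    return azimuth, elevation


# Two hypothetical sensors 1 km apart and a drone at 120 m altitude.
sensor_a, sensor_b = (0.0, 0.0, 0.0), (1000.0, 0.0, 0.0)
drone = (400.0, 700.0, 120.0)
estimate = triangulate(sensor_a, bearing_to(sensor_a, drone),
                       sensor_b, bearing_to(sensor_b, drone))
```

Because each thermal sensor only measures angles, a single unit cannot give range. Combining two or more bearings is what yields distance and altitude without emitting any signal.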

Edouard Campana, Sales Director at HGH Infrared Systems, said: "Spynel 360° panoramic thermal camera and its Cyclope software are frequently used against drones to ensure the security of national and international events, critical infrastructures, airports and more. The real-time visualization and detection of multiple targets makes it a unique sensor for ultimate situational awareness. This solution is rapidly deployable and offers HD playback capabilities, very useful for events clarification."

A key advantage of the SPYNEL detection system for airport applications is that it is a fully passive technology, meaning it will not be a source of disturbance in the electromagnetic environment of the airport, unlike radars. Indeed, a concern often raised by air-safety regulators is that anti-drone systems designed to jam radio communications could interfere with legitimate airport equipment.

As part of an airport's complete surveillance equipment, the SPYNEL thermal imaging sensor is the must-have security equipment for such a high-risk infrastructure, operating alongside complementary detection sensors. Military facilities, correctional institutions, stadiums and other critical infrastructures have already chosen to integrate the SPYNEL sensor with their other security and facility systems, such as radars, PTZ cameras, Video Management Systems and more. SPYNEL can also be rapidly deployed as a standalone solution for temporary surveillance in urgent cases. With its 24/7 panoramic area surveillance capabilities, the SPYNEL thermal camera provides an early warning and an opportunity for rapid and accurate detection over large areas, to support proactive decisions.

The MLX90340 is an absolute position sensor based on the Melexis Triaxis Hall technology, targeted at various applications in consumer and industrial markets. With a key set of core parameters, the MLX90340 focuses on the essentials: simple and robust position sensing. It offers the flexibility to measure 360-degree rotational movement (end-of-shaft or through-shaft) and linear magnet movement of up to +/- 20 mm.

This new device consists of a Triaxis® Hall magnetic front-end, an analog to digital signal conditioner, a DSP for advanced signal processing and one output stage driver. Due to the Integrated Magneto Concentrator (IMC), it is sensitive to magnetic flux in three planes (X, Y & Z) enabling the design of non-contact position sensors with an Analog or PWM output.

The MLX90340 is available in both a single die (SOIC-8) and fully redundant dual-die (TSSOP-16) package for safety-critical applications. It will complement the successful automotive grade MLX90365 by adding three different temperature ranges for cost-effective applications.

Additionally, Melexis offers four pre-programmed versions customized for various rotation ranges. These provide an analog voltage from 10% to 90% of the supply voltage over an angle span of 90, 180, 270, or 360 degrees, avoiding the need for additional programming at the customers’ side and enabling embedded designs where the power ground and output pins are not accessible.
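As a sketch of how a host might interpret the ratiometric analog output of one of these pre-programmed versions, the function below maps the 10% to 90% output range onto the configured angle span. It assumes a linear transfer curve with 0 degrees at 10% of supply, which is an assumption for illustration. The actual transfer characteristic should be taken from the device datasheet.

```python
VALID_SPANS_DEG = (90, 180, 270, 360)  # the four pre-programmed angle spans


def angle_from_output(v_out, v_supply, span_deg):
    """Convert a ratiometric output voltage to an angle in degrees.

    Assumes a linear mapping: 10 % of supply -> 0 deg, 90 % -> span_deg.
    """
    if span_deg not in VALID_SPANS_DEG:
        raise ValueError("span must be 90, 180, 270 or 360 degrees")
    ratio = v_out / v_supply
    ratio = min(max(ratio, 0.10), 0.90)  # clamp to the specified output range
    return (ratio - 0.10) / 0.80 * span_deg


mid_scale = angle_from_output(2.5, 5.0, 360)  # half of supply on the 360-degree version
```

Working with the ratio of output to supply, rather than the absolute voltage, keeps the reading independent of supply variations when the ADC shares the same reference.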

See why Edge use single point loadcells for converting retail space into sales

By Kim Paulussen - Marketing Specialist, Zemic Europe B.V.

Single points used for realtime retail analysis on the spot

EdgeNPD offers innovative SaaS (Software as a Service) solutions with state-of-the-art tools to help retailers and manufacturers increase sales and ROI from retail space with minimum cost and risk. theStore2 Retail Lab software is a VR-enabled 3D application for optimising planograms, POSM and space management, allowing for prototyping and testing future trade, marketing or in-store communication strategies. The solution facilitates faster and better decisions driven by advanced data analytics. The full-stack model allows clients to outsource the entire process necessary to manage the category efficiently, from market research, through analytics and decision making, to implementation and training of sales teams.

Edge NPD’s underStand is an Internet of Things hardware and software solution for both retailers and manufacturers. It is a weight sensor-based, cloud-connected device which collects and processes data from POS in real time. UnderStand reports and analyses rotation and out-of-stock data from your promotional activities, allowing you to separate regular and promotional sales and gain full control over your secondary placement. UnderStand can be used for pre-testing future promotions or monitoring current ones, allowing you to optimize replenishments and sales rep visits.

This goal of Edge NPD solutions matches Zemic’s vision very well, as we strongly believe that our focus on creating value for our customers will help them to differentiate themselves in their market. Zemic Europe's slogan is therefore "We believe we make you stronger!".

Zemic & Edge NPD

How Zemic consulted EDGE NPD

For the "Edge IoT underStand" concept developed by EDGE NPD, monitoring sales is the most important feature: not only detecting when a product is bought by a consumer but, more importantly, monitoring the product and turnover flow in order to optimize the retail store. All monitoring features of the "Edge IoT underStand" concept are meant to create the most efficient retail store possible. This is how space is converted into sales.

To obtain accurate and reliable results, EDGE NPD searched for a reliable partner that could supply a large number of single point loadcells in a relatively short time. With its European warehouse holding 40,000 products in stock, Zemic Europe offered the right combination of fast delivery and the right quality/price/service ratio. This enabled EDGE NPD to act quickly and professionally in meeting its customers' needs within the fast-moving consumer goods sector.
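One simple way a weight-based stand could infer product pickups is to convert each load cell reading into a unit count and watch for changes. The sketch below illustrates that idea only. It is not EDGE NPD's algorithm, and the tare weight, unit weight and readings are hypothetical.

```python
def units_on_stand(gross_kg, tare_kg, unit_kg):
    """Estimate how many identical products are on the stand from its weight."""
    return round((gross_kg - tare_kg) / unit_kg)


def stock_changes(readings_kg, tare_kg, unit_kg):
    """Return the non-zero changes in unit count between consecutive readings.

    Negative values are pickups, positive values are replenishments.
    Rounding to whole units absorbs small amounts of sensor noise.
    """
    counts = [units_on_stand(r, tare_kg, unit_kg) for r in readings_kg]
    return [after - before for before, after in zip(counts, counts[1:]) if after != before]


# Hypothetical stand: 2.5 kg empty, products weighing 0.5 kg each.
readings = [12.5, 12.49, 12.01, 11.0, 11.5]
changes = stock_changes(readings, tare_kg=2.5, unit_kg=0.5)
print(changes)
```

A real deployment would also need filtering for vibration and for mixed product weights, which this sketch deliberately leaves out.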

About underStand from EDGE NPD

EDGE NPD is the laureate of the 2017 edition of the New Europe 100 list. This is a listing of outstanding innovators in Central and Eastern Europe.

Edge underStand has the following benefits for retailers:

1. underStand requires no involvement from the store – it can be installed on a variety of stands and can provide both stand-based or shelf-based monitoring

2. underStand makes it easy to organize and monitor promotions in real time so you can estimate future profits instantly

3. the unique architecture of underStand allows you to monitor parameters such as the effectiveness of shelf product distribution

4. underStand is integrated in all Edge systems: theStore, Geo, ProPOSe, VideoAnalysis, and Trade Planner

Zemic Europe L6E single point loadcell for retail concept.

Single point load cells are installed in most small to medium-sized platform scales. The L6E aluminium load cell is used for the "underStand" concept of Edge NPD. This is an aluminium alloy IP65 single point loadcell suitable for platforms up to 400 x 400 mm. The L6E family is available in OIML C3, C4 and C5 accuracies in capacities from 50 kg up to 300 kg.

For more information:

Zemic Europe is a leading manufacturer and designer of loadcells, strain gages and force sensors. We are here to help our European customers with all enquiries and to assist them with their challenges. With 34 years of activity in the field of weighing, we offer professional technical support for your application and can advise you on the “best fit” load cell, mounting hardware, strain gage or pressure and torque transducer.

Our Head office in Europe is based in The Netherlands from where we stock thousands of products which can be delivered within 1 day to anywhere in Europe. Zemic Europe can also offer you your own private label with or without OIML approvals. If our wide range of standard products does not meet your requirements our engineering staff of over 225 engineers is ready to design a special product according to your specifications.

The Internet of Things (IoT) is already a reality in multiple application areas. Smart sensors used in smart cities, autonomous driving, home and building automation (HABA), industrial applications and other fields face challenging requirements in large interconnected networks.

A significant increase in data transmission and required bandwidth, leading to an overload of the communication infrastructure, can be expected.

To mitigate this, the use of artificial intelligence (AI) in smart sensors can significantly reduce the amount of data exchange within the networks. Philipp Jantscher from ams AG explains.

Artificial Intelligence (AI) is not a new topic at all. In fact, the idea and the name AI, already appeared in the 1950s. People started to develop computer programs to play simple games like Checkers. One milestone known to many people was the launch of the computer program ELIZA, built in 1966 by Joseph Weizenbaum. The program was able to run a dialog in written English. It created questions, the user provided an answer and ELIZA continued with another question related to the response provided by the user.

Figure 1: Example screenshot of the ELIZA terminal screen

The main AI technique is the neural network. Neural networks were first used in 1957, when Frank Rosenblatt invented the perceptron. Today’s neurons in neural networks still use a very similar principle. However, the capabilities of single neurons were rather limited. It took until the early 1970s before scientists realized that multiple layers of such perceptrons could overcome these limitations. The final breakthrough for the multi-layer perceptron was the application of the backpropagation algorithm to learn the weights of multi-layer networks. An article in Nature in 1986 by Rumelhart et al.[Rum] established backpropagation as the breakthrough of neural networks. From this moment, many scientists and engineers were drawn into the neural network hype.

In the 1990s and early 2000s, the method was applied to almost any kind of problem. The number of research publications on AI, and particularly on neural networks, increased significantly. Nevertheless, once the magic behind neural networks had been understood, they became just one of many classification techniques. Because of their very demanding training effort, neural networks attracted significantly less interest in the second half of the 2000s.

Reinvestigating neural networks with respect to their operating principles caused the second hype, which is still ongoing. With more computational power at hand and the involvement of a large number of people, Google demonstrated that a trained neural network can beat the best Go players.

Types of AI

Over the last decades, different AI techniques have emerged. In fact, it is not black and white whether a certain technique belongs to AI. Many simple classification techniques like principal component analysis (PCA) also use training data, yet they are not classified as AI. Four very prominent techniques are outlined in the subsequent sections. Each of them has many variants, and the overview given in this article does not claim to be complete.

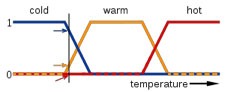

Fuzzy Logic

Fuzzy Logic extends classic logic based on false/true or 0/1 by introducing states that are in between true and false, like “a little bit” or “mostly”. Based on such fuzzy attributes it is possible to define rules for problems. For example, one of the rules to control a heater could be the following: “If it is a little bit cold, increase the water temperature a little bit”. Such rules seem to express the way humans think very well. That is why Fuzzy Logic often is considered an AI technique.

In real applications, large sets of such fuzzy rules are applied, for instance to control problems. Fuzzy Logic provides algorithms for all the classical operators like AND, OR and NOT to work on fuzzy attributes. With such algorithms, it is possible to infer a decision from a set of fuzzy rules.

A set of fuzzy rules has the advantage of being easily read, interpreted and maintained by humans.

Figure 2: Example for fuzzy states of a heater control. The arrows denote the state values at the indicated temperature

Figure 2 illustrates the fuzzy states of a heater control. The states are “Cold”, “Warm”, and “Hot”. As can be seen in the figure, the three states overlap, and some temperatures belong to two states at the same time. In fact, each temperature belongs to each state with a defined degree of membership between 0 and 1.
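The overlapping states can be sketched with simple piecewise-linear membership functions. This is only an illustration: the breakpoints (15, 25, 35 and 45 °C), the function names and the 5 °C maximum step are assumptions and are not taken from Figure 2. The AND, OR and NOT operators shown are the common minimum, maximum and complement definitions.

```python
def falling(x, start, end):
    """Membership that is 1 below `start` and falls linearly to 0 at `end`."""
    if x <= start:
        return 1.0
    if x >= end:
        return 0.0
    return (end - x) / (end - start)


def rising(x, start, end):
    """Membership that is 0 below `start` and rises linearly to 1 at `end`."""
    return 1.0 - falling(x, start, end)


def memberships(temp_c):
    """Degree to which a temperature belongs to each fuzzy state (assumed breakpoints)."""
    return {
        "cold": falling(temp_c, 15.0, 25.0),
        "warm": min(rising(temp_c, 15.0, 25.0), falling(temp_c, 35.0, 45.0)),
        "hot": rising(temp_c, 35.0, 45.0),
    }


# Common fuzzy counterparts of the classical logic operators.
def fuzzy_and(a, b):
    return min(a, b)


def fuzzy_or(a, b):
    return max(a, b)


def fuzzy_not(a):
    return 1.0 - a


def heater_increase(temp_c, max_step_c=5.0):
    """Rule: the colder it is, the more the water temperature is increased."""
    return memberships(temp_c)["cold"] * max_step_c


print(memberships(20.0))      # 20 °C is half "cold" and half "warm"
print(heater_increase(20.0))  # half of the maximum step
```

With these assumed breakpoints, 20 °C belongs to “Cold” and “Warm” with a degree of 0.5 each, which mirrors the overlap between neighboring states.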

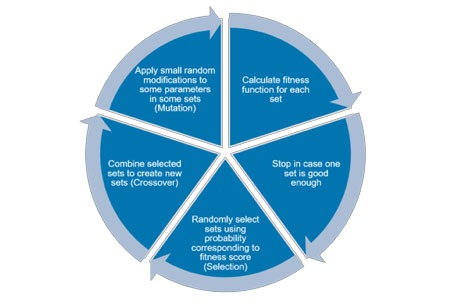

Genetic Algorithm

Genetic Algorithms apply the basic principles of biological evolution to optimization problems in engineering. The principles of combination, mutation and selection are applied to find an optimum set of parameters for a high dimensional problem.

Assuming a large set of parameters (more than 20) with a given fitness function, it is generally not feasible to determine analytically the optimum set of parameters that maximizes the fitness function.

Genetic Algorithms tackle this problem in the following way. First, a population of random parameter sets is generated. For each set in this population, the fitness function is calculated. Then the next generation is derived from the previous generation by applying the principles of selection, combination and mutation.

Each set of the next generation is then evaluated using the fitness function. If one set appears to be good enough, the genetic algorithm stops; otherwise a new generation is created as described above.

Figure 3: Description of generating algorithm cycle

It has been shown that for many high-dimensional optimization problems a Genetic Algorithm is able to find a global optimum, whereas conventional optimization algorithms fail because they get stuck in a local optimum.
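The generation cycle can be sketched in a few lines. This is a minimal illustration, not the procedure behind Figure 3: the population size, the mutation rate, keeping the fitter half as parents, carrying the best set over unchanged and the toy fitness function `sphere` are all assumptions made for the example.

```python
import random


def sphere(params):
    """Toy fitness function (assumption): best value 0 when all parameters are 0."""
    return -sum(p * p for p in params)


def genetic_search(fitness, n_params, pop_size=40, generations=200,
                   mutation_rate=0.1, target_fitness=-1e-3, seed=0):
    rng = random.Random(seed)
    # First generation: random parameter sets.
    population = [[rng.uniform(-5.0, 5.0) for _ in range(n_params)]
                  for _ in range(pop_size)]
    best = max(population, key=fitness)
    for _ in range(generations):
        if fitness(best) >= target_fitness:
            break  # one set is good enough, stop
        # Selection: the fitter half of the population become parents.
        ranked = sorted(population, key=fitness, reverse=True)
        parents = ranked[:pop_size // 2]
        children = [ranked[0][:]]  # keep the best set unchanged
        while len(children) < pop_size:
            mother, father = rng.sample(parents, 2)
            # Combination: each parameter comes from one of the two parents.
            child = [rng.choice(pair) for pair in zip(mother, father)]
            # Mutation: occasionally perturb a parameter at random.
            child = [g + rng.gauss(0.0, 0.3) if rng.random() < mutation_rate else g
                     for g in child]
            children.append(child)
        population = children
        best = max(population, key=fitness)
    return best


best = genetic_search(sphere, n_params=5)
print(best, sphere(best))
```

Because the best set is always carried over, the best fitness can never get worse from one generation to the next; real applications usually tune the selection and mutation strategy to the problem.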

Genetic Programming

Genetic Programming takes Genetic Algorithms a step further by applying the same principles to actual source code of programs. The sets are replaced by sequences of program code and the fitness function is the result of executing the actual code.

Very often, the generated program code does not execute at all. It has been demonstrated however that such a procedure can indeed generate working source code for problems like finding an exit in a maze.

Neural Networks

Neural networks mimic the behavior of human brains by implementing neurons. Each neuron takes input from many other neurons and computes a weighted sum. Finally, the output is limited to a defined range. The impact of a specific input depends on the weight associated with this input. These weights resemble the function of synapses in the human brain to a certain extent.

The weights of the connections are determined by applying inputs and desired outputs. This again is very similar to the way humans teach their kids how to determine the difference between a dog and a cat.

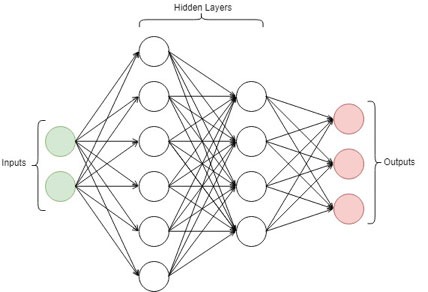

Figure 4: Example of a neural network architecture

The first components of a neural network architecture are the input nodes, where the input data is applied. The second set of components are the hidden layers, which process their inputs by applying weights to them. The weighted inputs are then transferred to the inputs of the next layer. Finally, the outputs assign a certain weight to each possible classification of the input set as a result.
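The data flow from the input nodes through a hidden layer to the outputs can be sketched as follows. The layer sizes, the weight values and the choice of tanh as the range-limiting function are assumptions made for illustration only.

```python
import math


def neuron(inputs, weights, bias):
    """Weighted sum of the inputs, limited to the range (-1, 1) by tanh."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return math.tanh(weighted_sum)


def layer(inputs, weight_rows, biases):
    """One layer: every neuron sees all outputs of the previous layer."""
    return [neuron(inputs, weights, bias)
            for weights, bias in zip(weight_rows, biases)]


def forward(inputs, layers):
    """Pass the input data through all layers in order."""
    activation = inputs
    for weight_rows, biases in layers:
        activation = layer(activation, weight_rows, biases)
    return activation


# Hypothetical network: 2 inputs, 3 hidden neurons, 1 output.
example_layers = [
    ([[0.1, 0.2], [0.3, -0.4], [0.0, 0.5]], [0.0, 0.1, -0.1]),
    ([[0.2, -0.1, 0.4]], [0.05]),
]
print(forward([0.5, -0.5], example_layers))
```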

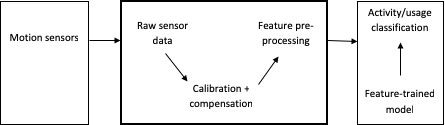

Local Intelligence

IoT sensor solutions today are mostly only responsible for data acquisition. The raw-data needs to be extracted from the sensor and transmitted to another, more computationally capable device within the network. Depending on the use-case, this device could be either an embedded system or a server within the cloud. The receiving end collects the raw-data and performs pre-processing in order to present relevant results. Frequently the raw-data of the IoT device needs to be processed using artificial intelligence, as in speech recognition for example. The number of IoT devices, and especially the demand for artificial intelligence, is expected to increase dramatically over the next years as sensor solutions become more complex.

However, the growing number of connected IoT devices that rely on cloud solutions to compute meaningful results leads to problems in several areas. The first issue is the latency between acquiring the raw-data and receiving the response with the evaluated information. It is not possible to build a real time system, since the data needs to be sent over the network, processed on a server and then interpreted again by the local device. This leads to the second problem, which is the increasing network traffic and the resulting reduction in the reliability of network connections. Servers need to handle more and more requests from IoT devices and thus could be overwhelmed in the future.

A major advantage of neural networks is their ability to extract and store the essential knowledge of a large set of data in a fixed, typically much smaller set of weights. The amount of data used to train a neural network can be vast. In particular, for high dimensional problems the data set needs to scale exponentially to maintain a certain case coverage. The training algorithm extracts from the data the features that will efficiently classify unseen input data. As the number of weights is fixed, the amount of storage does not correlate with the size of the training data set. Of course, if the network is too small it will not deliver good accuracy, but once a proper size has been found the amount of training data no longer affects the size of the network. Nor does it affect the execution speed of the network. This is another reason why in IoT applications a local network can outperform a cloud solution. The cloud solution may of course store vast amounts of reference data, but then the response time of the cloud degrades quickly with the amount of reference data stored in the cloud.

By definition, IoT nodes are connected to a network, and very likely to the Internet. However, it can be very desirable to have a local intelligence. Then, processing of raw data can happen on the sensor or in the IoT node instead of requiring communication with the network. The most important reason for such a strategy is the reduction of energy consumption of network communication traffic.

Major companies such as embedded microprocessor manufacturers have already realized that cloud-based services have to be adapted. One of the consequences is the introduction of new embedded microprocessor cores capable of machine learning tasks. In the future, the trend of processing data within the cloud will shift further back to local on-device processing. This allows more complex sensor solutions, which involve sensor fusion or pattern recognition. For these applications, local intelligence of the IoT device is needed. Sensor solutions will become truly smart, as they already deliver finalized meaningful data.

Figure 5 represents this paradigm shift from cloud-based solutions, to local intelligence.

However, computing elaborate AI solutions within an IoT device requires new solutions which meet power, speed and size constraints. In order to achieve this, the trend is shifting to integrated circuits optimized for machine learning. This type of processing is commonly referred to as edge AI.

Constraints

In sensors for IoT applications, which are very frequently mobile or at least service free, the most prominent constraint is power consumption.

This leads to a system design which minimizes the amount of data to be transferred via a communication channel, as sending and receiving data, in particular in wireless mode, is always very expensive in terms of power budget. Thus, the goal is to process all raw data locally and only transmit meaningful data to the network.

For local processing, neural networks are a great option, as their power consumption can be well controlled. First, the right architecture (recurrent versus non-recurrent) and the right topology (number of layers and neurons per layer) must be chosen. This is far from trivial and requires experience in the field. Second, the bit-resolution of the weights becomes important. Whether a standard float type is used or whether an optimized solution using just 4 bits per weight can be found contributes significantly to memory size and therefore to power consumption.
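The effect of topology and bit-resolution on memory size is simple arithmetic. The sketch below assumes a fully connected, non-recurrent network with one bias per neuron; the topology of 8 inputs, 32 hidden neurons and 4 outputs is a hypothetical example.

```python
def weight_count(layer_sizes):
    """Weights plus biases of a fully connected feed-forward network."""
    return sum((n_in + 1) * n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


def weight_memory_bytes(layer_sizes, bits_per_weight):
    """Memory needed to store all weights, rounded up to whole bytes."""
    bits = weight_count(layer_sizes) * bits_per_weight
    return (bits + 7) // 8


topology = [8, 32, 4]  # hypothetical: inputs, hidden neurons, outputs
print(weight_count(topology))             # 420 weights and biases
print(weight_memory_bytes(topology, 32))  # 1680 bytes with 32-bit floats
print(weight_memory_bytes(topology, 4))   # 210 bytes with 4-bit weights
```

Going from 32-bit floats to 4-bit weights shrinks the weight memory by a factor of eight for the same topology.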

Gas Sensor Physics

The sensor system used as a test case for AI in sensors is a metal-oxide (MOX) based gas sensor. The sensor works on the principle of a chemiresistor[Kor]. When exposed to reducing gases (e.g. CO, H2, CH4) and/or oxidizing gases (e.g. O3, NOx, Cl2), the detector layer changes its resistivity. This can in turn be detected via a metal electrode sitting underneath the detector layer. The main problem of such a configuration is the indiscriminate response to all sorts of gases. Therefore, the sensor is thermally cycled (through a microhotplate). This causes the sensor to react with a resistance change that has a unique signature, and thus significantly increases the selectivity of gas detection.

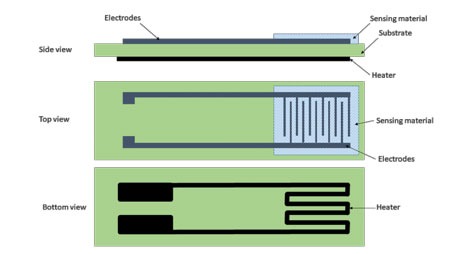

Figure 6: Structural concept of a chemiresistive gas sensor

Another approach is to combine different MOX sensor layers to discriminate further between the different gas types.

A closed physical model explaining the behavior of chemiresistors would depend on too many parameters. A non-exhaustive list of these parameters includes thickness, grain size, porosity, grain faceting, agglomeration, film texture, surface geometry, sensor geometry, surface disordering, bulk stoichiometry, grain network, active surface area and the size of the necks of the sensor layer. Together with the thermal cycling profile, the model would be too complex and is currently simply not available.

Therefore, such systems form an ideal case to apply modern AI methods.

Supervised Learning

Gas sensing is an especially potent application for AI. The problem to be solved is the prediction of the concentration of gases, with the resistance of multiple MOX pastes as the inputs.

To solve the task, the behavior of the MOX pastes when exposed to various gases at different concentrations has been recorded. From this data, a dataset consisting of features (the temporal resistance trend of each paste) and labels (the gas that was present) has been created.

This kind of data is especially well suited for the supervised learning method. In supervised learning, the neural network is given many samples, each consisting of features and a label. The network then learns to associate features with labels in an iterative learning process. It is exposed to every sample multiple times. Its prediction is nudged in the direction of the correct label every iteration by adjusting its weights.
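The iterative process can be illustrated with a single trainable neuron. This is a deliberately small sketch and not the network used for the gas sensor: the two-value feature vectors, the labels (1 = gas present, 0 = no gas), the learning rate and the number of passes are all made up for the example.

```python
import math


def predict(features, weights, bias):
    """Output between 0 and 1: the estimated likelihood of label 1."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def train(samples, epochs=200, learning_rate=0.5):
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):  # the network sees every sample multiple times
        for features, label in samples:
            error = predict(features, weights, bias) - label
            # Nudge the weights so the prediction moves toward the label.
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


# Hypothetical normalized resistance features with their labels.
samples = [([0.9, 0.8], 1), ([0.8, 0.9], 1), ([0.1, 0.2], 0), ([0.2, 0.1], 0)]
weights, bias = train(samples)
print([round(predict(f, weights, bias), 2) for f, _ in samples])
```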

Architecture and Solution Approach

A neural network is defined by its architecture. The architecture is usually influenced by the dataset at hand. In our case, the dataset has a temporal structure, so a recurrent neural network is a good fit. Recurrent neural networks process the features in multiple steps and keep information about previous steps in an internal state.
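How an internal state carries information from one step to the next can be sketched with the simplest recurrent layer. The article does not state which recurrent cell is used, so the Elman-style update, the tanh limiter and the weight values below are assumptions for illustration.

```python
import math


def rnn_step(x, state, w_in, w_state, bias):
    """One time step: the new state depends on the input and the old state."""
    new_state = []
    for j in range(len(state)):
        s = bias[j]
        s += sum(w_in[j][i] * x[i] for i in range(len(x)))
        s += sum(w_state[j][k] * state[k] for k in range(len(state)))
        new_state.append(math.tanh(s))
    return new_state


def run_sequence(sequence, w_in, w_state, bias):
    """Feed a temporal sequence step by step, starting from a zero state."""
    state = [0.0] * len(bias)
    for x in sequence:
        state = rnn_step(x, state, w_in, w_state, bias)
    return state


# Hypothetical layer with one input and one state neuron.
w_in, w_state, bias = [[1.0]], [[0.5]], [0.0]
print(run_sequence([[1.0], [0.0]], w_in, w_state, bias))
print(run_sequence([[0.0], [1.0]], w_in, w_state, bias))
```

The two sequences contain the same values in a different order and end in different states, which is what allows a recurrent network to make use of the temporal resistance trend.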

The architecture also has to be adapted to the IoT constraints mentioned above. The neural network should be as small as possible to minimize power consumption. We use one hidden layer with 47 neurons. The weights are quantized to 4 bits, which further reduces power consumption. On top of this, the network is implemented in analog circuitry to make it even more efficient.
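Quantizing weights to 4 bits means that each weight may take only one of 16 values. The article does not describe the quantization scheme, so the following is just one common option (symmetric, uniform quantization with a shared scale factor), shown on made-up weights.

```python
def quantize_4bit(weights):
    """Map float weights to signed 4-bit codes (-8..7) and a shared scale."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid a zero scale
    scale = max_abs / 7.0
    codes = [max(-8, min(7, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float weights from the 4-bit codes."""
    return [c * scale for c in codes]


codes, scale = quantize_4bit([0.7, -0.3, 0.0, 0.12])
print(codes)                     # small integers that fit into 4 bits each
print(dequantize(codes, scale))  # approximation of the original weights
```

The price of the smaller memory footprint is a rounding error of up to half a quantization step per weight, which is why the network has to be checked for accuracy after quantization.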

The network was first tested in a pure software environment using TensorFlow (https://www.tensorflow.org/). This allowed rapid adjustment of the architecture to make sure it is able to solve the task properly before actually building it.

Results

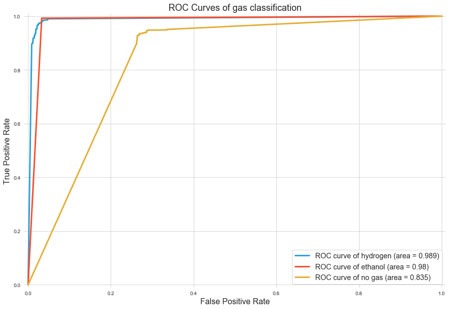

It is not trivial to evaluate a machine learning classifier. There are multiple ways to measure performance. One of the most popular is the Receiver Operating Characteristic (ROC). The ROC is a curve with the false positive rate on one axis and the true positive rate on the other. The area under this curve (AUC) should be as high as possible. It measures how well the classifier can separate the positive and negative samples.
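The construction of the ROC and its AUC can be sketched as follows. The scores and labels are invented for the example, and the sketch assumes that all scores are distinct and that both classes are present.

```python
def roc_points(scores, labels):
    """ROC points as (false positive rate, true positive rate) pairs."""
    positives = sum(labels)
    negatives = len(labels) - positives
    points = [(0.0, 0.0)]
    true_pos = false_pos = 0
    # Lower the decision threshold step by step, highest score first.
    for _, label in sorted(zip(scores, labels), reverse=True):
        if label == 1:
            true_pos += 1
        else:
            false_pos += 1
        points.append((false_pos / negatives, true_pos / positives))
    return points


def area_under_curve(points):
    """Area under the ROC by the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area


scores = [0.9, 0.8, 0.7, 0.6]  # hypothetical classifier outputs
print(area_under_curve(roc_points(scores, [1, 1, 0, 0])))  # 1.0, perfect separation
print(area_under_curve(roc_points(scores, [1, 0, 1, 0])))  # 0.75, one pair misordered
```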

Figure 7: Correlation of false positives to good positives for the example of ROC classified gas data

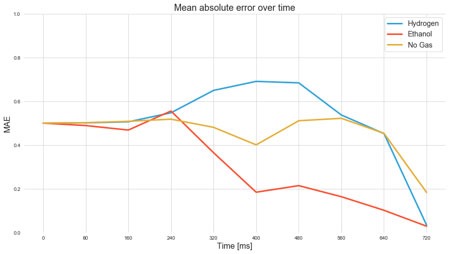

Another interesting metric is the mean absolute error (MAE). The MAE averages the absolute error of the prediction over all samples. We have been looking at this metric over time to get a sense of how many time steps the network needs to achieve a good prediction.
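Tracking the MAE over time can be sketched as below. The data layout, with one prediction per sample after each time step, and the numbers are assumptions for illustration.

```python
def mean_absolute_error(predictions, targets):
    """Average absolute difference between predictions and true values."""
    return sum(abs(p - t) for p, t in zip(predictions, targets)) / len(targets)


def mae_per_time_step(predictions, targets):
    """predictions[s][t] is the prediction for sample s after time step t."""
    n_steps = len(predictions[0])
    return [mean_absolute_error([sample[t] for sample in predictions], targets)
            for t in range(n_steps)]


# Two hypothetical samples, three time steps each.
predictions = [[0.0, 0.5, 1.0], [2.0, 2.0, 2.0]]
targets = [1.0, 2.0]
print(mae_per_time_step(predictions, targets))  # [0.5, 0.25, 0.0]
```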

Figure 8: Development of MAE metric over time of a neural network trained on the gas sensor data

Systems where AI could be relevant

This section gives an overview of possible future sensor solutions. In general, it can be assumed that reading raw-data from sensors will no longer be sufficient, since the complexity and capability of the sensors themselves are increasing.

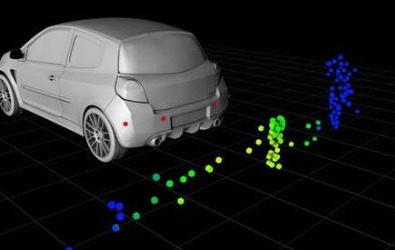

The raw-data needs to be heavily processed and/or combined with the raw-data of other sensors to deliver a meaningful result. This method is called sensor fusion. Often, all sensors required for the task are already included in one solution together with an embedded system. Using AI, sensor fusion can be done much more easily and more accurately than with classical algorithms. Neural networks can cope with unknown situations much better. In addition, they can learn compensation techniques from the training data, which potentially increases the value of the delivered result to the customer.

For our example, classical algorithms would require physics based models, which are not available.

Another example, where the usage of AI is the state-of-the-art solution is object recognition within a camera image. This area has huge potential within the sensor industry.

It allows for small wirelessly connected IoT devices, which are reporting detected objects immediately, anywhere throughout the building without the need of cloud processing.

This could spawn sophisticated surveillance solutions, presence detection or even enable things like smart fridges, which recognize the type of grocery inserted into it.

In addition, facial recognition in embedded devices and smartphones is a trending topic. Here, the facial recognition could be performed directly within the IoT device, creating a plug-and-play solution for customers.

Another area is sensor hubs, which are IoT devices collecting information from other sensors and cameras within the network. Using AI, these devices are capable of identifying patterns and routines and of taking pro-active steps in support of the customer.

This allows new applications within home automation, which are currently not possible.

Privacy and Data Protection

Typical IoT devices provide no protection against reverse engineering. Therefore, any data, which is stored as a standard firmware on a micro controller in a sensor node, must be regarded as public. Very often, the algorithm to infer meaningful information from sensor raw data is an important asset of sensor providers.

Neural networks do not store the data that has been used for training. Instead, they extract the relevant features of the data and store these features in the set of weights. It is not possible to reverse this data extraction and storage operation. This means that, even given all the weights of a network, it is not possible to derive even parts of the original data that was used for training.

This is an important property of neural networks, in particular in the area of biometry, where training data is always a large set of very personal data like face images or fingerprints. Neural network based systems can use such data without any problems of privacy and data protection after the training phase has been finalized.

Summary & Conclusions

This article has shown the increasing importance of AI within sensors in IoT systems. The history of AI and its types was introduced, which led to the use cases within sensor solutions. It was shown why AI has strong potential for sensor solutions in the future, and this was demonstrated using the example of gas sensing applications. A prototype implementation for this example, including the results, was presented. Finally, possible future applications were discussed.

Privacy through protection of the data is very important and cannot be ignored in future systems. Shifting the intelligence back to the IoT device instead of the cloud allows for better selection of the data that needs to be processed by third parties.

[Rum] Rumelhart, David E.; Hinton, Geoffrey E.; Williams, Ronald J. (1986-10-09). "Learning representations by back-propagating errors". Nature. 323 (6088): 533–536.

[Kor] Korotcenkov, Ghenadii “Handbook of Gas Sensor Materials – Properties, Advantages and Shortcomings for Applications Volume 1: Conventional Approaches”, Springer 2013, ISBN 978-1-4614-7164-6

http://northstream.se/insights/blog/the-convergence-of-ai-and-iot-are-we-there-yet/

https://developer.arm.com/products/processors/machine-learning

We already live in the age of smart devices, yet the future seems to be even more “smart”.

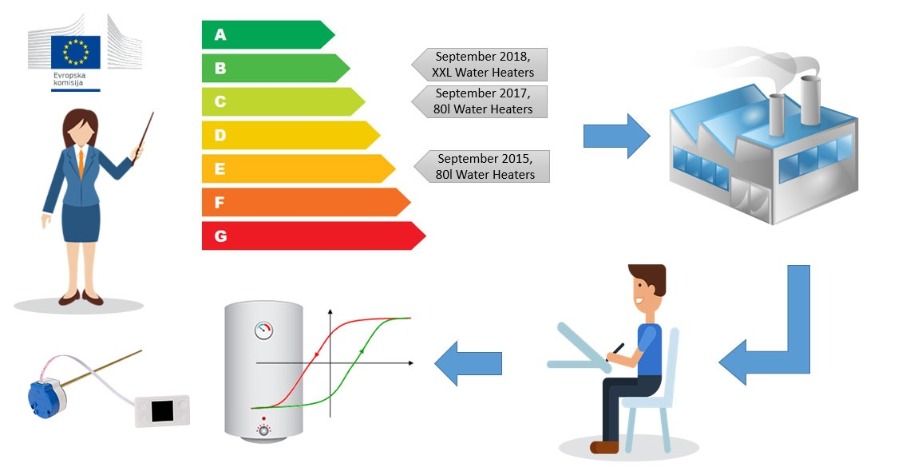

The future of the water heater industry lies in energy-efficient, smart and connected devices. The water heater will be an integral part of the smart home. The market is already familiar with the smart water heater concept, but this product category is still at an early stage.

What is a smart water heater?

There are several answers to this question. We will try to provide the most commonly used definitions.

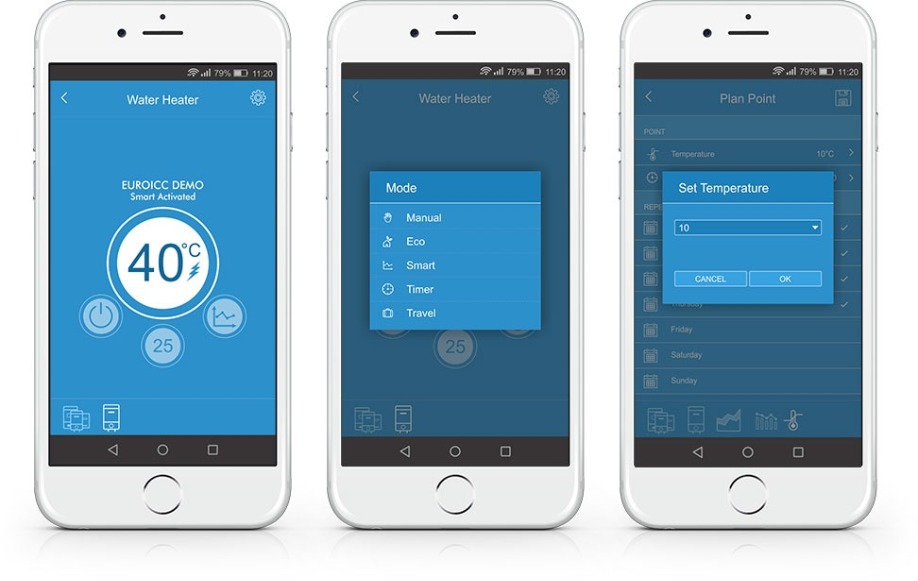

The first one is related to the concept of “smart devices” and the Internet of Things (IoT), meaning that the devices are connected to the internet and remotely controlled via mobile devices (e.g. a mobile phone or tablet). This image of the smart water heater is found worldwide, especially in the USA.

The other is related to the implementation of the Artificial Intelligence (AI) concept in the HVAC industry. This means that, besides being easily programmable, the water heater can even learn the user’s behavior and prepare hot water when needed, based on user preferences. This optimizes energy usage and leads to cost reduction. This definition of the smart water heater is commonly used in Europe thanks to the EU’s very clear regulations regarding home appliances. The European Commission has issued several directives and introduced the phrase “smart control”, which means “that device can automatically adapt the water heating process to individual usage conditions with the aim of reducing energy consumption”[1].

Probably the best answer is a combination of the two definitions and concepts above. The smart water heater is a connected, self-learning, user-adaptable and energy-efficient device.

How can a conventional domestic water heater (DWH) become a smart one?

Definitely, the answer to this question lies in high-tech electronics. Most of the solutions represent some kind of smart thermostat or PCBs which can replace the conventional mechanical thermostats.

EST-100 smart thermostat for electric storage water heaters, introduced by Euroicc, combines reliable mechanics and high-tech electronics in a single device. It’s a rod or stem type electronic thermostat with smart control, LCD display and Wi-Fi module that can be controlled via Android and iOS mobile apps.

[1] https://eur-lex.europa.eu/legal-content/EN/TXT/HTML/?uri=CELEX:32013R0812#ntr1-L_2013239EN.01008301-E0001

The EST-100 solution consists of 2 elements, connected by a quick faston connection cable. The first element is the electromechanical thermostat, which controls the heater and collects data about the water temperature. The second element is the electronics with a 1.44'' TFT LCD display, 4 buttons, user interface and Wi-Fi module. Mobile applications, as a part of the EST-100 solution, provide complete product functionality and enable remote control of the electric storage water heater. The water heater’s end-user is provided with 5 selectable operating modes and 2 background operating functions.

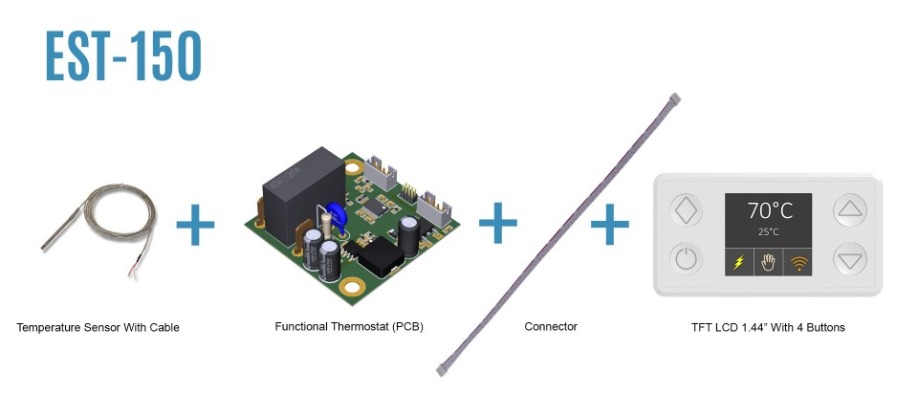

Is the rod or stem type of thermostat the only one?

Some electric storage water heaters use a different type of thermostat, mostly the capillary version. The capillary solution usually consists of two units, where the first is a functional thermostat and the other is a safety thermostat. There are also solutions where one device comprises both kinds of thermostats.

In order to fulfill the needs of DWH manufacturers who use conventional capillary thermostats, Euroicc is developing a capillary version of the smart thermostat. This solution is called the EST-150 smart thermostat. The PCB replaces the functional thermostat, while the conventional mechanical safety thermostat should be kept in the water heater as an integral part of the solution. Instead of being placed in the stem, the two temperature sensors are located on the cable. Besides the safety component, this is the only difference between the EST-100 rod and EST-150 capillary solutions.

What are the core values of the smart water heater concept?

Every smart water heater has to be safe, energy efficient and connected, and has to provide exceptional comfort to its users. These are the core values and principles of Euroicc’s smart thermostats, which are applied in the development and manufacturing process.

How can a smart thermostat increase the water heater’s safety?

The water heater’s safety is provided by a bimetal disc, a bipolar safety cut-out and safety software. The safety software is responsible for the electronic functional cut-off and the bimetal disc for the safety cut-out. Euroicc has combined the new technology with traditional mechanical safety. This is considered to be the optimal solution since there are at least two levels of safety: if the electronics and safety software fail, the bimetal disc will cut off the electrical supply of the heater. At any moment, thanks to the mobile application, the user has complete remote control over the water heater.

The bipolar safety cut-out is very important since it disconnects all supply conductors, live and neutral, by a single initiating action. The bimetal disc and bipolar safety cut-out are well known and proven in decades of DWH practice. What sets electronic solutions like EST-100 and EST-150 apart is the software. The advanced software constantly monitors the water temperature for the user’s additional safety. Two active temperature sensors in the thermostat’s rod ensure accurate temperature measurement. The thermal hysteresis of EST-100 and EST-150 is 4 ± 2 °C (7.2 ± 3.6 °F, as a temperature difference converts by the factor 1.8 only). It can be even narrower, but detailed laboratory tests have shown that this is the optimal tradeoff between accuracy and relay durability.
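The role of the hysteresis can be illustrated with a simple two-point controller. This is a sketch, not the EST-100 firmware: the article gives only the width of the hysteresis, so placing the band directly below the set point, as well as the function and parameter names, are assumptions.

```python
def heater_command(temperature_c, setpoint_c, heater_on, hysteresis_c=4.0):
    """Decide whether the heating element should be on.

    Assumption: the heater switches off at the set point and switches on
    again only after the water has cooled by the full hysteresis width.
    """
    if temperature_c >= setpoint_c:
        return False
    if temperature_c <= setpoint_c - hysteresis_c:
        return True
    return heater_on  # inside the band: keep the current relay state


print(heater_command(50.0, 55.0, heater_on=False))  # True, water has cooled enough
print(heater_command(53.0, 55.0, heater_on=False))  # False, still inside the band
print(heater_command(55.0, 55.0, heater_on=True))   # False, set point reached
```

A narrower band keeps the water closer to the set point but makes the relay switch more often, which is the tradeoff between accuracy and relay durability mentioned above.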

Why is accurate and constant temperature measurement so important?

Besides the obvious answer - to prevent the possible overheating, there are less known but also important safety aspects. The first one is Anti-Legionella and the second is freeze protection function. Even the smart function and smart control would be impossible without the precise temperature measurement data.

When the water temperature does not reach a sufficiently high level for a longer period of time (e.g. 70 °C/158 °F for 2 weeks in a row), dangerous and harmful bacteria (Legionella) can flourish. If neglected, this can be a threat to the user's health. This is why the smart thermostat will automatically activate the Anti-Legionella cycle if the heater does not reach 71 °C/159.8 °F for 15 days in a row. In the Anti-Legionella cycle, the water is heated to 75 °C/167 °F for 15 minutes, and this treatment will remove all potentially harmful bacteria from the water.

Another part of the safety software, constantly operating in the background, is the freeze protection function. The smart thermostat prevents the water temperature from dropping below 10 °C/50 °F. If the temperature falls below this level, the heater will turn on automatically and heat the water to 15 °C/59 °F. The water temperature is thus constantly kept at or above 10 °C/50 °F in order to prevent freezing in winter periods. If there is a risk of frost and the appliance will not be used for several days or the power supply is disconnected, the water heater must be drained ahead of the cold season.
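The decision logic of the two background functions can be sketched with the thresholds quoted above. The function name, its arguments and the priority of Anti-Legionella over freeze protection are assumptions, and the 15-minute holding time of the Anti-Legionella cycle is not modeled.

```python
def safety_target(temperature_c, days_without_71c, freeze_heating_active):
    """Return (function, target temperature in °C), or (None, None) if idle.

    days_without_71c: consecutive days in which 71 °C was not reached.
    freeze_heating_active: True while a freeze protection heat-up is running.
    """
    if days_without_71c >= 15:
        return "anti_legionella", 75.0
    # Start below 10 °C and keep heating until 15 °C is reached.
    if temperature_c < 10.0 or (freeze_heating_active and temperature_c < 15.0):
        return "freeze_protection", 15.0
    return None, None


print(safety_target(40.0, 15, False))  # Anti-Legionella cycle is due
print(safety_target(8.0, 0, False))    # freeze protection starts
print(safety_target(12.0, 0, False))   # nothing to do
```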

How can a smart thermostat increase energy efficiency?

The electronic temperature measurement with two temperature sensors guarantees the accurate water heater operation. This is fundamental for energy saving modes and smart thermostat functionalities. EST-100 and EST-150 smart thermostats have 3 energy saving modes: Eco, Timer and Smart.

In Eco mode, the water temperature is kept at 55 °C/131 °F. This temperature level enables optimal long-term water heater operation in terms of energy savings, lower heat losses, hot water availability and heating element durability. This mode is recommended for traditional usage, when the water heater is left to operate continuously at one set point.

The programmable Timer mode allows users to program the water heater in line with their needs. The water temperature can be set hourly, daily and weekly and saved as a personalized plan. The programming is done via a mobile application. Major energy savings can be made by programming the water heater to operate only when really needed and in off-peak hours.

Smart mode activates the advanced software for heating optimization, reducing energy consumption by up to 15%. The smart function enables the water heater to learn shower habits and to adjust water heating to its user’s needs. This means that hot water is available when needed, but made with major energy savings. The European Commission demands at least 7% of energy savings to be gained due to smart control, under the prescribed conditions[1]. If prescribed conditions and smart control factor are fulfilled, the electric storage water heater is “smart control compliant”.

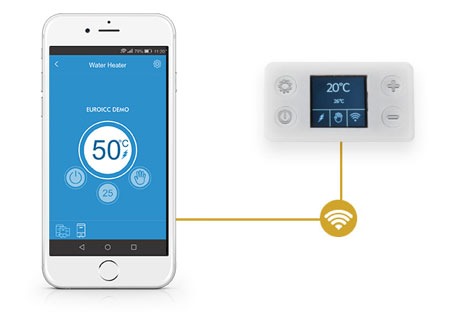

How can a water heater become a smart home device?

Any electric storage water heater with an EST-100 or EST-150 smart thermostat automatically becomes a smart home device. The integrated Wi-Fi module enables a wireless connection to the water heater. Remote control is provided via easy-to-use Android and iOS apps. With a cloud solution, the water heater can be connected and controlled via the internet too, providing the real Internet of Things (IoT) experience and benefits. Remote control from any location is perceived to be a major innovation in the HVAC industry and the highest added value for the end-user.

How can a smart water heater provide maximum comfort to its users?

The recent water heater industry trend is digital control with user-friendly HMI. One of the main features of EST-100 and EST-150 is an advanced color LCD display with 4 buttons for easy settings and water heater control. It’s important to create the innovations which are simple, intuitive and easy for everyday usage. Euroicc’s in-house research has shown that displays with more than 4 buttons are too complicated for the average user. In order to provide flexibility and customization options, the buttons and display plastics can be UV printed with different logos and icons.

Even though the displays are currently the cutting edge solutions, the future belongs to the remote control and intuitive Android and iOS mobile applications. Mobile apps provide a unique water heater user experience and add a new dimension of comfortable usage. You can turn on the water heater before leaving the office, and hot shower water will be waiting for you at home. With the branded mobile apps, DWH manufacturers can stand out from the competition and increase their brand awareness. Language localization in mobile apps is also really appreciated and popular among the users.

By implementing the Artificial Intelligence (AI) concept in their products, DWH manufacturers can create added value for the users. When the smart mode is activated, the software spends 2 weeks collecting data about the water temperature acquired from the temperature sensors. The collected data is then processed and applied in the heating process, which is optimized according to the user’s needs. Basically, the water heater is “learning” its users' shower routine and implementing it later on in its operation.
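The article does not disclose how the smart mode processes the collected data. Purely as an illustration of the idea, the sketch below derives a preheating schedule from hot-water draws observed during the learning period. The event format, the function name and the threshold of 7 days are hypothetical.

```python
from collections import Counter


def learn_schedule(draw_events, min_days=7):
    """Hours of the day in which hot water was used on at least `min_days` days.

    draw_events: (day_index, hour_of_day) pairs, one per detected hot-water
    draw during the learning period (hypothetical format).
    """
    # Count each hour at most once per day.
    distinct = {(day, hour) for day, hour in draw_events}
    days_per_hour = Counter(hour for _, hour in distinct)
    return sorted(hour for hour, days in days_per_hour.items()
                  if days >= min_days)


# Hypothetical 14-day log: a shower every morning at 7, every second
# evening at 19, and a single draw at noon.
events = ([(day, 7) for day in range(14)]
          + [(day, 19) for day in range(0, 14, 2)]
          + [(3, 12)])
print(learn_schedule(events))  # [7, 19]
```

A schedule like this would let the heater prepare hot water only ahead of the habitual usage hours instead of holding the set point all day.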

Conclusion

It's fascinating how technology changes our businesses and our lives. The new concepts like Artificial Intelligence (AI) and the Internet of Things (IoT) will shape the industries and be one of the most important growth drivers. Innovations, quick adaptation and creation of added and unique value to the user are the best ways to distinguish ourselves from the competition. The smart thermostats are changing the HVAC industry, especially the water heating segment. The only question is: are we ready to change?

About the Author

Lazar Ćurčija MSc is a Product Manager for Smart Thermostats at Euroicc. Euroicc is a high-tech electronics company, with in-house development and manufacturing. Euroicc has more than 20 years of experience in building automation, PLCs, microcontrollers and smart home devices. We can help water heater manufacturers in the creation of smart, energy efficient and connected products and solutions.

Thomas Brand from Analog Devices explains the demands on sensors for future servicing

Improving condition monitoring and diagnostics, as well as overall system optimization, are some of today’s core challenges in the use of mechanical facilities and technical systems. This topic is taking on an ever-greater role not only in the industrial sector, but wherever machines are used. Machines used to be serviced according to a plan, and late maintenance would mean a risk of production downtime. Today, process data from the machines is used for predicting the remaining service life. Especially critical parameters such as temperature, noise, and vibration are recorded to help determine the optimal operating state or even necessary maintenance times. This allows unnecessary wear to be avoided and possible faults and their causes to be detected early on. With the help of this monitoring, considerable optimization potential in terms of facility availability and effectiveness arises, bringing with it decisive advantages. For example, with it, ABB¹ could verifiably reduce downtimes by up to 70%, extend motor service life by up to 30%, and decrease the energy consumption of its facilities by up to 10% within a year.

The main element in this predictive maintenance (PM), as it is known in technical jargon, is condition-based monitoring (CBM), usually of rotating machines such as turbines, fans, pumps, and motors. With CBM, information about the operating state is recorded in real time. However, predictions about possible failure or wear are not made. They only come about through PM and thus mark a turning point: With the help of ever-smarter sensors and more powerful communications networks and computing platforms, it is possible to create models, detect changes, and perform detailed calculations on service life.

To create meaningful models, it is necessary to analyze vibrations, temperatures, currents, and magnetic fields. Modern wired and wireless communications methods already permit factory- or company-wide monitoring of facilities today. Additional analysis possibilities are yielded through cloud-based systems so that the data providing information about the condition of the machine can be made accessible to operators and service technicians in a simple way. However, local smart sensors and communications infrastructure on the machines are indispensable as a basis for all of these additional analysis possibilities. How these sensors should look, which requirements are imposed on them, and what the key characteristics are—these and other questions will be considered in this article.

Probably the most fundamental question in condition monitoring is: How long can I let the machine run before maintenance becomes necessary?

In general, the sooner maintenance is performed, the better. However, optimizing operating and maintenance costs or achieving maximum facility effectiveness requires the knowledge of experts who are familiar with the properties of the machines. In the analysis of motors, these experts predominantly come from the area of bearings/lubrication, which experience has shown to be the weakest link. The experts ultimately decide if a deviation from the normal state with respect to the actual life cycle (see Figure 1) should already lead to repair or even replacement.

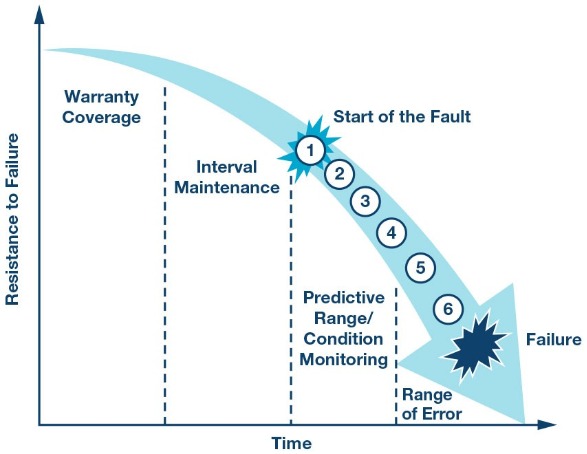

Figure 1. Life cycle of a machine.

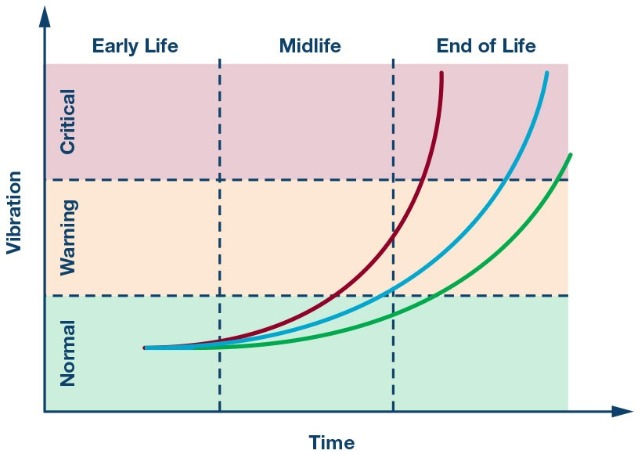

Thus, the still unused machine is initially in the so-called warranty phase. Failure at this early stage in the life cycle cannot be ruled out entirely, but it is relatively rare and can usually be traced back to production faults. Only in the subsequent phase of interval maintenance do targeted interventions by appropriately trained service personnel begin. They include routine maintenance performed independently of a machine’s condition at specified times or after specified periods of use, as is the case, for example, with an oil change. The probability of failure between the intervals is still very low here, too. With increasing machine age, the condition monitoring phase is reached. From this point on, faults should be expected. Figure 1 shows the following six changes, starting with changed levels in the ultrasonic range (1) and followed by vibrations (2). Through analysis of the lubricant (3) or through a slight increase in temperature (4), the first signs of pending failure can be detected before an actual fault occurs in the form of perceivable noise (5) or heat generation (6).

Vibration is often used to identify aging. The vibration patterns of three identical machines over their life cycles are shown in Figure 2. In the initial period, all are within the normal range. However, starting at middle age, the vibrations increase more or less rapidly according to the load before increasing exponentially to the critical range at the end of life. As soon as the machines reach the critical range, an immediate reaction is necessary.

Figure 2. Changes in vibration parameters over time.

Parameters such as the output speed, the gear ratio, and the number of bearing elements are of prime relevance for analysis of the machine vibration pattern. Normally, the vibrations caused by the gearbox are perceived in the frequency domain as a multiple of the shaft speed, whereas characteristic frequencies of bearings usually do not represent harmonic components. Vibrations due to turbulence and cavitation are also often detected. They are typically connected with air and/or liquid flows in fans and pumps and hence tend to be considered as random vibrations. They are usually stationary and exhibit no variance in their statistical properties. However, random vibrations can also be cyclostationary, that is, have statistical properties that are generated by the machine and vary periodically, as in an internal combustion engine in which ignition occurs once per cycle in each cylinder.
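To make the difference between gearbox and bearing frequencies concrete, the following sketch uses the classical kinematic bearing formulas, which are standard textbook relations and not taken from this article. It assumes a rotating inner ring, a stationary outer ring, and no slip; the function names and the example geometry are hypothetical.

```python
import math


def gear_mesh_frequency(shaft_hz, teeth):
    """Gear mesh frequency: an integer multiple (the tooth count) of the shaft speed."""
    return shaft_hz * teeth


def bearing_defect_frequencies(shaft_hz, n_elements, element_diameter,
                               pitch_diameter, contact_angle_deg=0.0):
    """Kinematic defect frequencies in Hz for a rolling bearing.

    Assumes a rotating inner ring, a stationary outer ring and no slip.
    Diameters may be in any unit as long as both use the same one.
    """
    ratio = (element_diameter / pitch_diameter) * math.cos(math.radians(contact_angle_deg))
    return {
        "outer_race": 0.5 * n_elements * shaft_hz * (1 - ratio),   # BPFO
        "inner_race": 0.5 * n_elements * shaft_hz * (1 + ratio),   # BPFI
        "rolling_element": 0.5 * (pitch_diameter / element_diameter)
                           * shaft_hz * (1 - ratio ** 2),          # BSF
        "cage": 0.5 * shaft_hz * (1 - ratio),                      # FTF
    }


# Hypothetical example: 1500 rpm shaft (25 Hz), 9 balls of 8 mm on a 40 mm pitch circle.
freqs = bearing_defect_frequencies(25.0, 9, 8.0, 40.0)
```

For this example geometry the outer and inner race frequencies come out at about 90 Hz and 135 Hz, that is, 3.6 and 5.4 times the shaft speed, whereas a 20-tooth gear on the same shaft meshes at exactly 20 times the shaft speed.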

The sensor orientation also plays a key role. If a primarily linear vibration is measured by a single-axis sensor, the sensor must be adjusted according to the direction of the vibration. There are also multiaxis sensors that can record vibrations in all directions, but single-axis sensors offer lower noise, a higher force measuring range, and a larger bandwidth due to their physical characteristics.

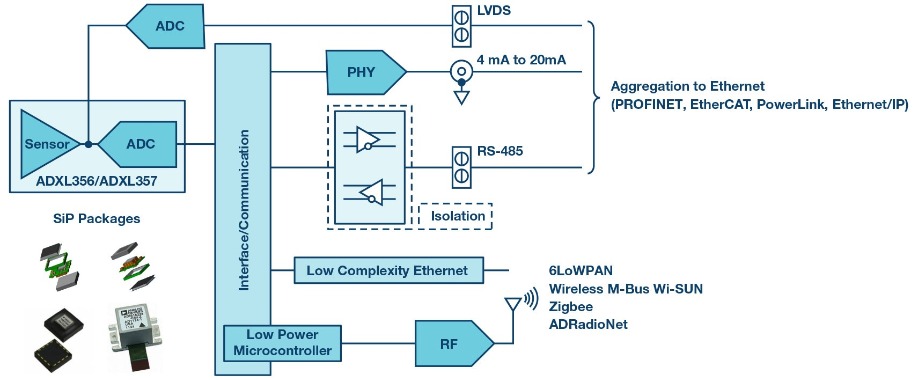

To enable widespread use of vibration sensors for condition monitoring, two factors are of great importance: low cost and small size. Where previously piezoelectric sensors were frequently used, MEMS-based accelerometers are increasingly being used today. They feature higher resolutions, excellent drift and sensitivity characteristics, and a better signal-to-noise ratio, and they enable detection of extremely low frequency vibrations nearly down to the dc range. They also are extremely power saving, which is why they are also ideal for battery-operated wireless monitoring systems. Another advantage over piezosensors is the possibility of integrating entire systems in a single housing (system in package). These so-called SiP solutions are growing to form smart systems through incorporation of additional important functions: analog-to-digital converters, microcontrollers with embedded firmware for application-specific preprocessing, communications protocols, and universal interfaces, while also including diverse protective functions.

Integrated protective functions are important because excessively high forces acting on the sensor element can often result in sensor damage or even destruction. The integrated detection of a possible overrange delivers a warning or, in a gyroscope, deactivates the sensor element by switching off its internal clock, thus protecting it. A SiP solution is shown in Figure 3.

Figure 3. MEMS-based system in package (left side).

As demands in the CBM field increase, so do the demands on the sensors. For useful CBM, the requirements regarding the sensor measuring range (full-scale range, or FSR for short) are already in part greater than ±50 g.

Because the acceleration is proportional to the square of the frequency, these high acceleration forces are reached relatively quickly. This is shown by Equation 1:

$$a = (2\pi f)^2 \cdot d \qquad (1)$$

Variable a stands for acceleration, f for frequency, and d for the amplitude of vibration. Thus, for example, for a 1 kHz vibration, an amplitude of 1 µm already yields an acceleration of 39.5 m/s², or roughly 4 g; an amplitude of 10 µm at the same frequency yields about 40 g.
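The relationship between a, f, and d can be checked numerically. The sketch below assumes a purely sinusoidal vibration, for which the peak acceleration is (2πf)² · d, and evaluates it in SI units, so the result is in m/s² and has to be divided by standard gravity to express it in g. The function name is hypothetical.

```python
import math

G0 = 9.80665  # standard gravity in m/s^2


def peak_acceleration(frequency_hz, amplitude_m):
    """Peak acceleration in m/s^2 of a sinusoidal vibration: a = (2*pi*f)^2 * d."""
    return (2 * math.pi * frequency_hz) ** 2 * amplitude_m


a = peak_acceleration(1_000, 1e-6)  # 1 kHz vibration with 1 um amplitude
print(f"{a:.1f} m/s^2 = {a / G0:.2f} g")  # prints "39.5 m/s^2 = 4.03 g"
```

Because of the squared frequency term, the same 1 µm amplitude at 10 kHz gives one hundred times the acceleration, which is why full-scale ranges of ±50 g and more are reached quickly.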

Noise should be very low over as wide a frequency range as possible, from nearly dc to the mid tens of kHz, so that, among other artifacts, bearing noise can be detected even at very low speeds. Yet it is precisely here that the manufacturers of vibration sensors are currently facing a huge challenge, especially for multiaxis sensors. Only a few manufacturers offer adequately low-noise sensors with bandwidths of greater than 2 kHz for more than one axis. Analog Devices, Inc. (ADI) has developed the ADXL356/ADXL357 three-axis sensor family especially for CBM applications. It offers very good noise performance and outstanding temperature stability. Despite their limited bandwidth of 1.5 kHz (resonant frequency = 5.5 kHz), these accelerometers still deliver important readings in condition monitoring of lower speed equipment such as wind turbines.

The single-axis sensors in the ADXL100x family are suitable for higher bandwidths. They offer bandwidths of up to 24 kHz (resonant frequency = 45 kHz) and g ranges of up to ±100 g at an extremely low noise level. Due to the high bandwidth, the majority of faults occurring in rotating machines (damaged plain bearings, imbalance, friction, loosening, gear tooth defects, bearing wear, and cavitation) can be detected with this sensor family.

The analysis of machine states in CBM can be accomplished using various methods. Probably the most common methods are analysis in the time domain, analysis in the frequency domain, and a mix of the two.

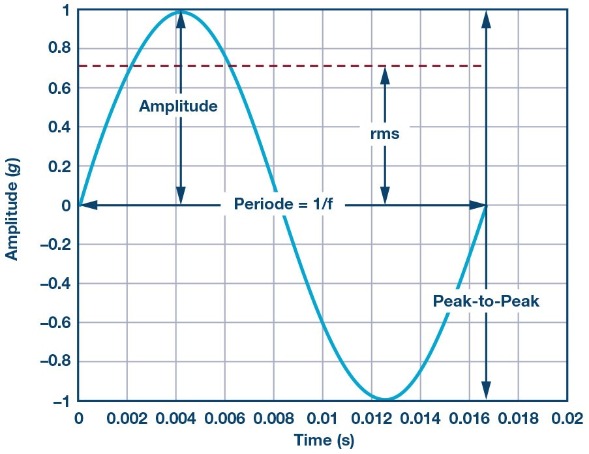

In vibration analysis in the time domain, the effective value (root mean square, or rms for short), the peak-to-peak value, and the amplitude of vibration are considered (see Figure 4).

Figure 4. Amplitude, effective value, and peak-to-peak value of a harmonic vibration signal.

The peak-to-peak value reflects the maximum deflection of the motor shaft and thus allows conclusions about its maximum loading to be made. The amplitude value, in contrast, describes the magnitude of the occurring vibration and identifies unusual shock events. However, the duration or the energy during the vibration event and hence the destructive capability are not considered. The effective value is thus usually the most meaningful because it considers both the vibration time history and the vibration amplitude value. A correlation for the statistical threshold for the rms vibration can be obtained through the dependencies of all of these parameters on the motor speed.

This type of analysis proves to be very simple because it requires neither fundamental system knowledge nor any type of spectral analysis.
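The three time-domain quantities can be computed directly from a block of samples. The following is a minimal sketch that assumes the block is a plain list of acceleration samples and removes any constant offset first; the function name is hypothetical.

```python
import math


def time_domain_features(samples):
    """Return amplitude (peak), peak-to-peak value and rms of a block of samples.

    The mean is subtracted first so that a constant sensor offset
    (for example gravity) does not distort the results.
    """
    mean = sum(samples) / len(samples)
    centered = [s - mean for s in samples]
    return {
        "amplitude": max(abs(s) for s in centered),
        "peak_to_peak": max(centered) - min(centered),
        "rms": math.sqrt(sum(s * s for s in centered) / len(centered)),
    }


# Example: ten full periods of a sine wave with amplitude 2.
signal = [2.0 * math.sin(2 * math.pi * k / 100) for k in range(1000)]
features = time_domain_features(signal)
```

For a harmonic signal such as this one, the rms value equals the amplitude divided by the square root of 2 and the peak-to-peak value is twice the amplitude.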

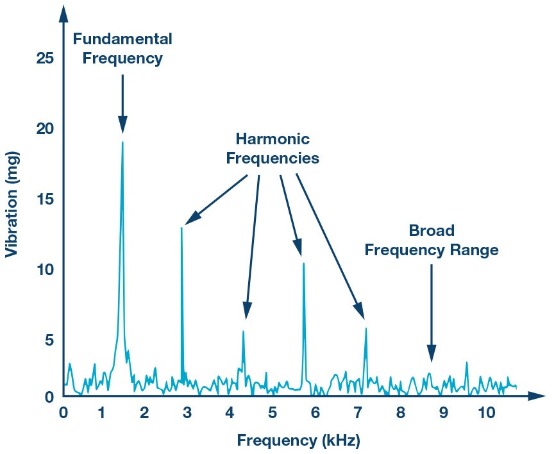

With frequency-based analysis, the temporally changing vibration signal is decomposed into its frequency components via a fast Fourier transform (FFT). The resulting spectrum plot of magnitude vs. frequency enables monitoring of specific frequency components as well as their harmonics and sidebands, as shown in Figure 5.

The FFT is a widespread method used in vibration analysis, especially for detecting bearing damage. With it, each frequency component can be assigned to a corresponding machine component. Through the FFT, the dominant frequency of the repetitive pulses of certain faults caused by contact between rolling elements and defective regions can be filtered out. Due to their different frequency components, different types of bearing damage can be differentiated (damage on the outer race, on the inner race, or on the rolling elements). However, precise information about the bearing, motor, and the complete system is needed for this.

Additionally, the FFT process requires that discrete time blocks of the vibration be repeatedly recorded and processed in a microcontroller. Although this requires slightly more computing power than time-based analysis does, it leads to more detailed analysis of the damage.
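The block-wise processing described here can be sketched in a few lines. The example below is a teaching-style recursive radix-2 FFT in pure Python, not the optimized routine a microcontroller library would provide. It assumes the block length is a power of two, and the helper names `magnitude_spectrum` and `dominant_frequency` are hypothetical.

```python
import cmath
import math


def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    if n % 2:
        raise ValueError("block length must be a power of two")
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def magnitude_spectrum(block, sample_rate_hz):
    """Single-sided amplitude spectrum as (frequency_hz, amplitude) pairs, dc excluded."""
    n = len(block)
    spectrum = fft(block)
    return [(k * sample_rate_hz / n, 2 * abs(spectrum[k]) / n) for k in range(1, n // 2)]


def dominant_frequency(block, sample_rate_hz):
    """Frequency in Hz of the largest spectral line in the block."""
    return max(magnitude_spectrum(block, sample_rate_hz), key=lambda pair: pair[1])[0]


# Example block: 1024 samples at 1024 Hz with a strong 50 Hz and a weaker 120 Hz component.
fs = 1024
block = [math.sin(2 * math.pi * 50 * t / fs) + 0.3 * math.sin(2 * math.pi * 120 * t / fs)
         for t in range(1024)]
```

For this block, `dominant_frequency(block, fs)` returns the 50 Hz line, while the weaker 120 Hz line appears in the spectrum with an amplitude of about 0.3. In a monitoring application, lines like these would be compared with the expected shaft, gear, and bearing frequencies.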

The tracking of the fundamental frequency is especially decisive because the effective values and other statistical parameters change with speed. If the statistical parameters change significantly from the last measurement, the fundamental frequency must be checked so that possible false alarms can be avoided.

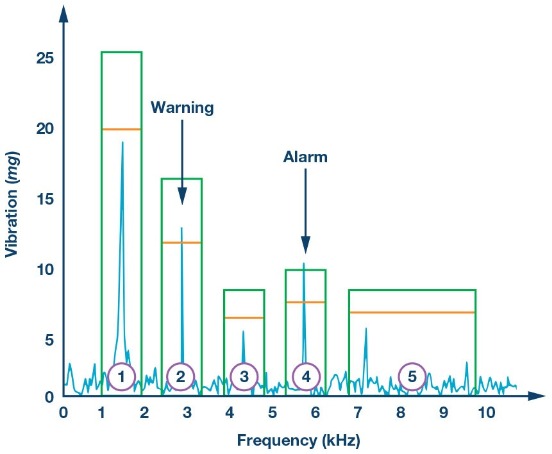

All three analytical methods rely on tracking how the measured values change over time. A possible method for monitoring the system can involve first recording the healthy condition, or generating a so-called fingerprint. It is then compared with the constantly recorded data. In the case of excessive deviations or when exceeding the corresponding threshold values, a reaction is necessary. As shown in Figure 6, possible reactions can be warnings (2) or alarms (4). Depending on the severity, the deviations may also require immediate intervention by service personnel.
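A fingerprint comparison of this kind can be sketched as follows. The example assumes that the fingerprint is simply the average rms value recorded in the healthy state and that warnings and alarms are raised at fixed multiples of it. The factors 2 and 4 and the function names are assumptions for illustration, not values from the article.

```python
from statistics import mean


def make_fingerprint(healthy_rms_values):
    """Reference level: the average rms vibration recorded while the machine is healthy."""
    return mean(healthy_rms_values)


def assess(rms_now, fingerprint, warn_factor=2.0, alarm_factor=4.0):
    """Compare a new rms reading with the fingerprint and return the reaction.

    warn_factor and alarm_factor are assumed thresholds; in practice they
    would be set by experts who know the machine.
    """
    ratio = rms_now / fingerprint
    if ratio >= alarm_factor:
        return "alarm"
    if ratio >= warn_factor:
        return "warning"
    return "ok"


fingerprint = make_fingerprint([0.9, 1.0, 1.1])   # healthy-state rms readings
status = assess(2.5, fingerprint)                 # a later reading
```

Here a reading of 2.5 against a fingerprint of about 1.0 exceeds the warning factor but not the alarm factor. Since rms values change with speed, readings should only be compared with a fingerprint taken at a comparable shaft speed.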

Due to the rapid development of integrated magnetometers, measurement of the stray magnetic field around a motor represents another promising approach to condition monitoring of rotating machines. Measurement is noncontact; that is, no direct connection between the machine and the sensor is required. As with vibration sensors, magnetic field sensors come in single- and multiaxis versions.