Ahead of 2019, DW requested predictions from organisations working in the IT industry. We received over 150 of these, some of which you will have read in December, some of which you’ll read in this issue of DW, and the rest of which will appear in February. They make for fascinating reading. However, not one of them sought to make any social or moral judgement as to whether the technologies discussed are a force for good, a force for bad, or neutral.

For example, the rise of the digital personal assistant seems to get everyone very excited but, in the UK at least, obesity is a growing issue (if you’ll excuse the pun) and any technology that allows people to spend even more time sat on their couch is not necessarily a good thing. Indeed, thanks to the rise of technology, it’s more than possible to live one’s life entirely without leaving one’s home. We can work from home, all the sustenance we need can be delivered to home and all our leisure hours can be spent at home. Of course, we can exercise at home as well.

And there’s the crux of the issue. Technology itself is definitely neutral; it’s how we choose to use it (or not) that gives it a good or bad association. Many cases are clear cut – technology used by cybercriminals to mess up individuals, companies and even governments is not a good idea; technology used to save people’s lives is.

Ah, but is it that simple? We live on a planet with finite resources and, the more people we keep alive for longer and longer, the more pressure that will be put on these resources. So, is keeping people alive until they are, say, 120, just so they can sit in their armchairs and watch television and sleep all day, a good thing? Especially when the resources required to do this could be better used to help individuals at the other end of the life cycle?

There are no easy answers, but there seems to be an urgent need for governments across the globe to come to grips with the digital world and what it means for their citizens moving forward. For example, there is growing unease that some of the tech giants appear to be able to play fast and loose with the tax rules as they currently exist in most, if not all, countries. So, how does one redress the balance, so that what most would deem a fair share of tax is paid by these organisations?

At a time when the UK seems hell-bent on withdrawing from an organisation whose primary aim (whatever else has followed) is to ensure cooperation between many European countries, it seems that more and more of such cooperation is required, rather than less. A pipe dream maybe, but imagine if countries across the planet had unified policies in many areas of life – not least technology. Right now, it’s all too easy for any organisation or individual to justify a policy or an action along the lines of: “Well, if we don’t do it then someone else, somewhere else will take advantage of the opportunity” (ie selling arms to various nations!). Imagine if there was a global policy for everything?!!

And in case anyone thinks that the DW editor has completely lost the plot, I would ask this question: “Why is so much time and effort being spent on developing autonomous vehicles, and, apparently, so little effort being spent on developing some kind of Robocop, when crime and anti-social behaviour levels seem to be rising on a daily basis?”

Governments have a chance to shape the digital future. Right now, they appear to be passengers.

In order to stay ahead of the competition, 50.4% of businesses reported having a proactive ‘opportunity-minded’ approach to new and emerging technologies.

Technology is often thought about in terms of physical devices that are electrical or digital. In fact, technology encompasses far more than simply tangible objects. New and emerging technologies often impact the value of existing models and services, resulting in digital disruption, which leads to many companies re-evaluating and transforming.

Technology disruption is defined as ‘technology that displaces an established technology and shakes up the industry or creates a completely new industry’.

There is currently a high-stakes global game of digital disruption, fuelled by the latest wave of technological advances spurred by AI and data analytics. As a result, business models within industry sectors are inevitably changing. Despite the fact that 19.3% of companies feel that the pace of technological change has made them significantly more competitive in the past three years, a large majority of companies are still struggling to keep up with this change.

As a result, SavoyStewart.co.uk sought to identify whether businesses view technology disruption as an opportunity or a threat, through an analysis of the latest research conducted by Futurum*.

Interestingly, it was discovered that 1 in 4 businesses still struggle to keep up with the times and thrive on digital disruption. Despite this, when weighing up the opportunity vs. threat of technological disruption, 39.6% of businesses feel that it provides them with new opportunities to improve and grow as a company.

Savoy Stewart determined this was down to companies’ approach to technology adaptation, with 24.4%, surprisingly, admitting to having no approach. Positively, 50.4% of businesses reported having a proactive ‘opportunity-minded’ approach, ensuring they remain competitive and up to date.

With 25.1% of businesses seemingly adopting a passive ‘wait and see’ approach, it is unsurprising that 30.7% of companies felt the impact of technological change over the past three years has made them less competitive.

The opportunity to gain competitive advantage generally falls inside a window of three years. It is, therefore, critical for business leaders to understand the value of technologically proactive leadership and operational agility. The faster a company can use technology disruption to its advantage, the more likely it is to surge ahead of its competitors.

Surprisingly, whilst 29.5% of companies stated they feel very excited about their ability to adapt over the next three years, only 18.3% rated themselves as ‘Digital Leaders’. These individuals are highly proactive and agile business leaders who are ahead in their strategic and operational anticipation of the technological change facing them and their organisation.

Thereafter, 35% of businesses feel somewhat optimistic about their ability to adapt, which is not far off the 36.3% of companies that rated themselves as ‘Digital Adopters’: easily adaptable and proactive in their approach to evolving with technology disruption.

Following suit, 23.4% are a little concerned about their ability to adapt over the next three years, indicating their company is adaptable but passive in its approach. This is once again close to the number of businesses that rated themselves as ‘Digital Followers’, at 22%.

Lastly, 12% of businesses stated they are very worried about their ability to adapt to technological change. This is interesting considering that almost double that share (23.4%) rated themselves as ‘Digital Laggards’.

Research from the Cloud Industry Forum and BT finds over half of enterprises expect their business models to be disrupted within two years.

New research, launched by the Cloud Industry Forum (CIF) and BT, has revealed that while large enterprise organisations are investing in new technologies in pursuit of digital transformation, skills shortages, and migration and integration challenges, are inhibiting the pace of change.

The research, which was conducted by Vanson Bourne and commissioned by CIF in association with BT, sought to understand the technology decisions being made by large enterprises, those with more than 1,000 employees, in the face of heightening levels of digital disruption. It found that 52% of enterprises expect their business models to be moderately or significantly disrupted by 2020 and that 74% either have a digital transformation strategy in place or are in the process of implementing one.

However, just 14% believe that they are significantly ahead of their competitors in terms of the adoption of next generation technologies, indicating that many are struggling to adapt to the digital revolution. The research suggests that skills shortages sit at the heart of this issue, with enterprises significantly more likely to report facing skills shortages than their smaller counterparts. Some 59% stated that they lack staff with integration and migration skills (compared to just 28% of SMEs), 64% need more security expertise, and 54% require more strategic digital transformation skills.

Commenting on the findings, David Simpkins, General Manager, Managed Services and Public Cloud at BT, said: “The research confirms that most enterprises have well developed strategies aimed at minimising digital disruption and enhancing competitiveness. Cloud is clearly an enabler, but many organisations are finding challenges in achieving the agility and flexibility they seek. Unlike small organisations, who can easily be more agile and nimbler in the face of market conditions, change within large enterprises, whose IT estates are infinitely more complex, is much more difficult to achieve.

“Increasingly we’re seeing enterprises managing a wide range of workloads, combining public and private cloud deployments with data centre infrastructure, while at the same time addressing a range of new security threats. This is changing the skillsets that enterprise IT departments need, and it is clear that many will need greater support to safely transition to the digital age,” he continued.

Alex Hilton, CEO of CIF, added: “Of all the parts of our economy, it is large enterprises that are the most vulnerable to digital disruption and that is clearly something that our respondents recognise. Many have invested heavily in their company assets and carry with them a significant amount of tech debt, often making change difficult, slow and expensive. This makes the customer and IT supplier relationship critical, and enterprise organisations must consider their choice of partner carefully to help them navigate this complexity and integrate existing legacy investments with newer cloud-based technologies. Those that do not will find themselves at a digital disadvantage.”

The internet is made up of thousands of public and private networks around the world. And since it came to life in 1984, more than 4.7 zettabytes of IP traffic have flowed across it. That’s the same as all the movies ever made crossing global IP networks in less than a minute. Yet the new Visual Networking Index (VNI) by Cisco predicts that is just the beginning.

By 2022, more IP traffic will cross global networks than in all prior ‘internet years’ combined up to the end of 2016. In other words, more traffic will be created in 2022 than in the 32 years since the internet started. Where will that traffic come from? All of us, our machines and the way we use the internet. By 2022, 60 percent of the global population will be internet users. More than 28 billion devices and connections will be online. And video will make up 82 percent of all IP traffic.

“The size and complexity of the internet continues to grow in ways that many could not have imagined. Since we first started the VNI Forecast in 2005, traffic has increased 56-fold, amassing a 36 percent CAGR with more people, devices and applications accessing IP networks,” said Jonathan Davidson, senior vice president and general manager, Service Provider Business, Cisco. “Global service providers are focused on transforming their networks to better manage and route traffic, while delivering premium experiences. Our ongoing research helps us gain and share valuable insights into technology and architectural transitions our customers must make to succeed.”
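As a rough sanity check on the figures quoted above, a 56-fold increase and a 36 percent compound annual growth rate (CAGR) together imply a period of about 13 years. The sketch below is illustrative only: the function names are hypothetical, and the 13-year span (2005 to 2018) is an assumption inferred from the quote, not a figure stated by Cisco.

```python
import math


def implied_years(growth_multiple: float, annual_rate: float) -> float:
    """Years needed to reach growth_multiple at a constant annual_rate."""
    return math.log(growth_multiple) / math.log(1.0 + annual_rate)


def cagr(growth_multiple: float, years: float) -> float:
    """Compound annual growth rate implied by growth_multiple over years."""
    return growth_multiple ** (1.0 / years) - 1.0


# Figures quoted in the article: 56-fold traffic growth at a 36 percent CAGR.
years = implied_years(56, 0.36)

# Assumption: a 13-year span, i.e. 2005 to 2018 (not stated in the article).
rate = cagr(56, 13)

print(f"Implied period: {years:.1f} years")
print(f"CAGR over 13 years: {rate:.1%}")
```

Run as written, the two results should agree with each other: roughly 13 years for the implied period, and roughly 36 percent for the growth rate over a 13-year span.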

Key predictions for 2022

Cisco’s VNI looks at the impact that users, devices and other trends will have on global IP networks over a five-year period. From 2017 to 2022, Cisco predicts:

1. Global IP traffic will more than triple

2. Global internet users will make up 60 percent of the world’s population

3. Global networked devices and connections will reach 28.5 billion

4. Global broadband, Wi-Fi and mobile speeds will double or more

5. Video, gaming and multimedia will make up more than 85 percent of all traffic

Regional IP traffic growth details (2017 – 2022)

More than 87 per cent of organisations are classified as having low business intelligence (BI) and analytics maturity, according to a survey by Gartner, Inc. This creates a big obstacle for organisations wanting to increase the value of their data assets and exploit emerging analytics technologies such as machine learning.

Organisations with low maturity fall into “basic” or “opportunistic” levels on Gartner’s IT Score for Data and Analytics. Organisations at the basic level have BI capabilities that are largely spreadsheet-based analyses and personal data extracts. Those at the opportunistic level find that individual business units pursue their own data and analytics initiatives as stand-alone projects, lacking leadership and central guidance.

“Low BI maturity severely constrains analytics leaders who are attempting to modernize BI,” said Melody Chien, senior director analyst at Gartner. “It also negatively affects every part of the analytics workflow. As a result, analytics leaders can struggle to accelerate and expand the use of modern BI capabilities and new technologies.”

According to Ms Chien, organisations with low maturity exhibit specific characteristics that slow down the spread of BI capabilities. These include primitive or aging IT infrastructure; limited collaboration between IT and business users; data rarely linked to a clearly improved business outcome; BI functionality mainly based on reporting; and bottlenecks caused by the central IT team handling content authoring and data model preparation.

“Low maturity organisations can learn from the success of more mature organisations,” said Ms Chien. “Without reinventing the wheel and making the same mistakes, analytics leaders in low BI maturity organisations can make the most of their current resources to speed up modern BI deployment and start the journey toward higher maturity.”

Gartner said there are four steps that data and analytics leaders can follow in the areas of strategy, people, governance and technology, to evolve their organisations’ capabilities for greater business impact.

1. Develop holistic data and analytics strategies with a clear vision

Organisations with low BI maturity often exhibit a lack of enterprisewide data and analytics strategies with clear vision. Business units undertake data or analytics projects individually, which results in data silos and inconsistent processes.

Data and analytics leaders should coordinate with IT and business leaders to develop a holistic BI strategy. They should also view the strategy as a continuous and dynamic process, so that any future business or environmental changes can be taken into account.

2. Create a flexible organisational structure, exploit analytics resources and implement ongoing analytics training

Enterprises must have people, skills and key structures in place to foster and secure skills and develop capabilities. They must anticipate upcoming needs and ensure the proper skills, roles and organisations exist, are developed, or can be sourced to support the work identified in the data and analytics strategy.

With limited analytics capabilities in-house, data and analytics leaders should strive for a flexible working model by building “virtual BI teams” that include business unit leaders and users.

3. Implement a data governance programme

Most organisations with low BI maturity do not have a formal data governance programme in place. They may have thought about it and understand the importance of it, but do not know where to start.

Analytics leaders can consider governance as the “rules of the game.” Those rules can support business objectives and also enable the organisation to balance out the opportunities and risks in the digital environment. Governance is also a framework that describes the decision rights and authority models that must be imposed on data and analytics.

4. Create integrated analytics platforms that can support a broad range of uses

Low-maturity organisations often have primitive IT infrastructures. Their BI platforms are more traditional and reporting-centric, embedded in enterprise resource planning systems, or simple disparate reporting tools that support limited uses.

To improve their analytics maturity, data and analytics leaders should consider integrated analytics platforms that extend their current infrastructure to include modern analytics technologies.

Sumo Logic has released new research at DockerCon Europe in Barcelona that reveals 63 percent of European organisations use machine data analytics for security, but lag in broader implementation across the business. The report, titled ‘Using Machine Data Analytics to Gain Advantage in the Analytics Economy,’ takes a comparative look at the adoption and usage of machine data across Europe and the US, further finding that only 40 percent of European companies had a “software-centric mindset” compared to 64 percent of US organisations.

The research, conducted by 451 Research and commissioned by Sumo Logic, surveyed 250 executives across the UK, Sweden, the Netherlands and Germany. This was also compared with data from a previous survey of US respondents who were asked the same questions. The results show that companies in the US are currently more likely to use and understand the value of machine data analytics than their European counterparts.

Key findings include:

Docker adoption continues to expand rapidly in AWS

Looking at its own research, which is derived from active and anonymised data from more than 1,600 customers and 50,000 users, Sumo Logic found in its 2018 “State of Modern Applications and DevSecOps in the Cloud” report that Docker adoption has grown rapidly for companies deploying on AWS. In the past year, the number of companies running Docker containers on AWS has grown from 24 percent of respondents to 28 percent, according to the findings. This represents a year-on-year growth in companies running Docker containers on AWS of 16 percent. Both Docker Engine, which provides a standardized packaging format for diverse applications, and Docker Enterprise, an enterprise-ready container platform for managing and securing applications, are available on the AWS Marketplace. Additionally, the Sumo Logic Logging Plugin for Docker Enterprise is available for download on the Docker Store.
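The difference between the four-percentage-point rise and the quoted 16 percent growth can be made explicit with a little arithmetic. This is a minimal sketch with hypothetical function names; it uses the rounded shares given in the text (24 and 28 percent), so it lands a little above 16 percent rather than exactly on it. The gap is presumably down to rounding in the published shares, which is an assumption rather than something the report states.

```python
def percentage_point_change(old_share: float, new_share: float) -> float:
    """Absolute change between two shares, in percentage points."""
    return new_share - old_share


def relative_growth(old_share: float, new_share: float) -> float:
    """Change expressed as a fraction of the starting share."""
    return (new_share - old_share) / old_share


# Rounded shares quoted in the article (percent of respondents).
old_share, new_share = 24.0, 28.0

points = percentage_point_change(old_share, new_share)
growth = relative_growth(old_share, new_share)

print(f"Change: {points:.0f} percentage points")
print(f"Relative growth: {growth:.1%}")
```

The distinction matters when comparing surveys: a small move in percentage points can correspond to a much larger relative growth figure when the starting share is low.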

“The move to microservices and container-based architectures from Docker Enterprise makes it easier to deploy at scale, but it can also make it harder to effectively monitor activities over time without the right approach to logs and metrics in place,” said Colin Fernandes, director of EMEA product marketing, Sumo Logic. “Conversely, getting effective oversight across systems and users with machine data makes delivering better services easier alongside improving security and operations. It’s gratifying to see that European organisations already understand the value in using machine data analytics for security purposes.”

International gaming and casino operations company Paf has deployed its critical applications infrastructure on AWS as part of a move to modernise its IT. The deployment included using Sumo Logic to get detailed machine data analytics and insight into performance levels.

“We chose Sumo Logic to provide us with insight into our new application deployments, which are far more complex than our previous applications, and to support our move to container-based deployments,” said Lars-Goran Hakamo, Security Architect at Paf. “Sumo Logic gives us the ability to identify and resolve issues faster and keep those applications performing at scale. The rich visibility and data insights we are able to glean from Sumo Logic from across our container estate, across our applications and our AWS infrastructure is simply unbelievable.”

Business barriers to data deployments

The 451 Research report also provided insight into the barriers preventing wider usage of machine data analytics:

“Europe is adopting modern tools and technologies at a slower rate than their U.S. counterparts, and fewer companies currently have that ‘software-led’ mindset in place. However, the desire for more continuous insights derived from machine data is there. What the data shows is that once European organisations start using machine data analytics to gain visibility into their security operations, they begin to see the value for other use cases across operations, development and the business,” said Fernandes. “It’s our goal to democratise machine data and make it easier to deploy this as part of modern application deployments running on the Docker Enterprise container platform.”

Findings demonstrate pressing need to build the right foundation for consolidating and managing cloud deployments.

New research commissioned by SoftwareONE, a global leader in software and cloud portfolio management, has revealed that more than six in ten organisations (62 per cent) believe that the actual costs of maintaining cloud technologies are higher than they expected. This indicates that, despite the evident benefits that the cloud brings to an organisation’s digital transformation efforts, businesses can run into difficulties if they do not have the technology, licensing agreements and ongoing monitoring procedures in place to effectively manage and optimise cloud-based software and applications within their wider IT estate.

The survey, conducted by market research firm Vanson Bourne, also found that 36 per cent of businesses feel that cloud-based “as-a-service” offerings – such as SaaS, PaaS or IaaS – have increased in complexity over the past two years, with an additional 33 per cent believing that the level of complexity has remained unchanged in this time. When viewed alongside the unexpected management costs associated with the cloud, it becomes clear that cloud deployments are fraught with challenges if businesses do not have the means to administer the various elements of their implementations effectively.

Zak Virdi, UK Managing Director at SoftwareONE, said: “The cloud has been instrumental in helping to bring greater agility and efficiency to IT, but it’s also important to recognise that making it a success takes time, as well as a commitment to proper, long-term integration and management. This means selecting the right technology, meeting the right licensing agreements, and putting frameworks in place to consistently and closely monitor the entire implementation.

“From our research, it’s clear that the rapid growth in cloud services and options – while providing an exceptional level of choice to businesses – is also leading to organisations struggling to fully maximise cloud investments while keeping expenditure as low as possible,” continued Virdi.

To illustrate this point further, the research also found that more than four in ten respondents (44 per cent) claimed that budget constraints often push their organisation towards choosing second- or third-choice technology options, rather than the option that they felt was ideal for them. Moreover, 38 per cent said that the management of licences and subscriptions for both cloud deployments and on-premise software poses a significant degree of complexity.

For Virdi, this provides additional evidence of a need for technology that can ease the management of so many different software-based requirements, whether they are based in the cloud or on-premise.

He added: “With so many plates to keep spinning and so many different responsibilities to fulfil – on-premise and cloud deployments, licensing requirements, keeping costs down and so on – it’s absolutely vital that businesses are able to cut out or automate much of the administrative burden, enabling companies to maximise ROI and make their digital transformation efforts a resounding success.”

Virdi concluded: “Amid the urge to adopt cloud, it’s about being able to step back and take stock of what needs to be done from a management perspective. If organisations embrace a platform that allows them to manage their entire software estate and cloud portfolio from a single location, they will be in a much better position to monitor, analyse and optimise the resources at their disposal.”

Businesses across the world still struggle to understand, optimise, and protect their rapidly expanding application environments, according to new research from F5 Labs.

The 2018 Application Protection Report reveals that as many as 38% of respondents have “no confidence” that they are aware of all the applications in use in their organisation.

Based on regional analysis conducted for the report by the Ponemon Institute, UK businesses know the least about their application situation – only 32% are “confident” or “very confident” that they have full oversight – whereas German businesses are the most confident, with 45% claiming to know the full story.

The Application Protection Report, which is the most extensive study of its kind yet, also identified grossly inadequate web application security practices, with 60% of businesses stating they don’t test for web application vulnerabilities, have no pre-set schedule for tests, are unsure if tests happen, or only test annually.

Furthermore, 46% of surveyed respondents disagreed or strongly disagreed that their organisation had adequate resources to detect application vulnerabilities. 49% said the same about their remediation capabilities.

“Many businesses fail to keep pace with technological developments and make unwitting and dangerous security compromises as they have a worrying lack of insight into their application environments. This is a big problem. The pressure has never been higher to deliver applications with unprecedented speed, adaptive functionality, and robust security — particularly against the backdrop of increasing European data protection legislation,” said David Warburton, Senior EMEA Threat Research Evangelist, F5 Networks.

Counting the cost

According to the Ponemon Institute’s regional review, the global average for web app frameworks and environments in use is 9.77. The US has the most (12.09). Germany (10.37) is also above average, while the UK (9.72) is marginally below it.

On average, global businesses consider 33.85% of all apps to be “mission critical”. In EMEA, the percentage is 35% and 33% for the UK and Germany, respectively. All regions identified the same top three critical apps: document management and collaboration; communication apps (such as email and texting); and Microsoft Office suites.

Global respondents were also unanimous that the three most devastating threats facing businesses today are credential theft, DDoS attacks, and web fraud.

In EMEA, 76% of German respondents are most concerned about credential theft, which is second only to Canada (81%). DDoS attacks (64%) and web fraud (49%) are German businesses’ next biggest concerns.

Interestingly, UK respondents are more concerned about web fraud than those in any other country (57%). Nevertheless, their biggest worries are credential theft (69%) and DDoS attacks (59%).

Unsurprisingly, web app attacks are a major operational blight in all countries. 90% of respondents in the US and Germany said it would be “very painful” if an attack resulted in the denial of access to data or apps. The UK is the next most potentially vulnerable country with 87% concurring.

The global average incident cost for app denial of service is $6.86m. The US endures the costliest range of attacks with losses of $10.64m on average, closely followed by Germany’s $9.17m. The UK is slightly below the global average at $6.57m per incident.

Regional differences are also apparent when estimating the incident cost of confidential or sensitive information leaks, such as intellectual property or trade secrets. Globally, the average cost stands at $8.63m. The US pays out the most, having to foot an average bill of $16.91m. Germany is second with typical losses of $11.30m. The UK fares better with average losses of $8.10m, which is almost half the US estimate.

Meanwhile, the global average estimated incident cost for leakage of personally identifiable information (customer, consumers or employees) stands at $6.29m. The US is once again hardest hit at an average of $9.37m, ahead of Germany ($8.48m), India ($6.63m), and the UK ($5.63m).

Tools and tactics

According to surveyed businesses, the three main tools for keeping apps safe are Web Application Firewalls (WAF), application scanning, and penetration testing.

WAF takes the top spot in the US (30%), Brazil (30%), UK (29%), Germany (29%), Canada (26%) and India (26%). Penetration testing is most prominent in India (24%), followed by China (20%), Germany (20%), Canada (20%), Brazil (19%), the UK (18%) and the US (18%). India is again in the lead for app scanning (24%), trailed by China (22%), Brazil (21%), Canada (19%), the US (18%), Germany (16%), and the UK (13%).

The business community’s growing appetite for WAF is further echoed in F5’s 2018 State of Application Delivery report, which revealed that 61% of surveyed global businesses currently use WAFs to protect applications – a trend largely driven by soaring multi-cloud usage.

The Ponemon Institute also reported that DDoS mitigation and backup technologies are the most widely used technologies to achieve high web application availability. German and Brazilian respondents were the strongest DDoS mitigation advocates (both 64%), edging out the US (62%), the UK (60%) and China (60%). Backup technologies are most popular in Canada (76%), the UK (74%), and Germany (73%).

Storage encryption is also seen as a critical defensive tool. Germany leads the way in this respect, with 50% of businesses using the technology “most of the time”, ahead of Canada (44%), the US (40%) and the UK (39%).

Safeguarding the future

“A company’s reputation depends on a comprehensive security architecture. Firms across the globe can no longer rely on traditional IT infrastructures. Technologies such as bot protection, application-layer encryption, API security, and behavior analytics, as we see in advanced WAFs, are now essential to defend against attacks. Thanks to automated tools with enhanced machine learning, businesses can start to detect and mitigate cybercrime with the highest level of accuracy yet,” said Warburton.

TIBCO Software Inc. has released the initial results of the 2018 TIBCO CXO Innovation Survey examining the leading trends in innovation strategy within the enterprise.

The research provides a deep dive into how and why companies are innovating, as well as the tactics and technologies needed to drive initiatives. The research results also examine the role of digital transformation in innovation across industries, which teams within an organisation are driving innovation the most aggressively, and the obstacles they are facing.

The 2018 TIBCO CXO Innovation Survey polled more than 600 respondents around the world including CXOs, senior vice presidents, vice presidents, senior directors, and directors from business and IT functional areas. Respondents represented industries that include retail/wholesale, manufacturing, financial services, healthcare, transportation, aerospace, energy, oil and gas, professional services, technology and software, and more.

Key findings include:

“Unlocking innovation potential is key for organisations that want to be at the cutting edge of their markets,” said Thomas Been, chief marketing officer, TIBCO. “People, data, and technology form the nucleus of innovation and executives across all industries need all three working together for success. This survey dives deep on these relationships and shows how more digitally mature companies are innovating faster and more often. We’re excited to bring forward compelling insights on the factors critical to innovation. These elements are what every executive should consider when determining strategies to stay competitive.”

Large organizations are running an average of 29 different pre-deployment initiatives to digitize the supply chain, but 86% have failed to scale any of them.

A new study from the Capgemini Research Institute, “The digital supply chain’s missing link: focus”, has identified a clear gap between expectations of what supply chain digitization can deliver and the reality of what companies are currently achieving. While exactly half of the organizations surveyed consider supply chain digitization to be one of their top three corporate priorities, most (86%) are still struggling to get projects beyond the testing stage.

Cost savings and new revenue opportunities are the top goals for supply chain digitization

Over three quarters (77%) of companies said their supply chain investments were driven by the desire for cost savings, with increasing revenues (56%) and supporting new business models (53%) also frequently cited. Organizations, especially in the UK (58%), Italy (56%), The Netherlands (54%) and Germany (53%) have supply chain digitization as one of their top priorities.

The broad enthusiasm for digital supply chain initiatives may be explained by the return on investment (ROI) that they offer. The research finds that ROI on automation in supply chain and procurement averaged 18%, compared to 15% for initiatives in Human Resources, 14% in Information Technology, 13% in Customer Service and 12% each in Finance and Accounting and in R&D. According to the report, the average payback period for supply chain automation is just twelve months.

Most organizations have spread their investments too thinly and are struggling to scale pilot initiatives

The organizations surveyed have an average of 29 digital supply chain projects at the ideation, proof-of-concept or pilot stage. Just 14% have succeeded in scaling even one of their initiatives to multi-site or full-scale deployment. However, for those that have achieved scale, 94% report that these efforts have led directly to an uplift in revenue.

The evidence from those who have moved to implementation suggests that companies are taking on too much and not focusing enough on strategic priorities. The organizations that successfully scaled initiatives had an average of 6 projects at proof-of-concept stage, while those that failed to scale averaged 11 projects.

There was also a clear gap in procedure and methodology between organizations that had and had not implemented digital supply chain initiatives at scale. The vast majority of companies that successfully scaled said they had a clear procedure in place to evaluate the success of pilot projects (87% vs. 24%) and clear guidelines for prioritizing those projects that needed investment (75% vs. 36%).

Dharmendra Patwardhan, Head of the Digital Supply Chain Practice for Business Services at Capgemini, added: “While most large organizations clearly grasp the importance of supply chain digitization, few appear to have implemented the necessary mechanisms and procedures to turn it into a reality. Companies are typically running too many projects, without enough infrastructure in place, and lack the kind of focused, long-term approach that has delivered success for market leaders in this area. Digitization of the supply chain will only be achieved by rationalizing current investments, progressing on those that can be shown to drive returns, and involving suppliers and distributors in the process of change.”

Steps to unlock the value in supply chain transformation

As well as learning from organizations that have successfully scaled supply chain initiatives, the report recommends that companies looking to make progress should focus on three key areas:

·Advocate and Align: Ensure transformation efforts are driven by C-suite leadership and senior management. Supply chain digitization is a complex process that spans planning, procurement, IT and HR and as such it cannot be led by any one business unit and must be driven from the top to succeed. Leadership needs to advocate for this transformation, and to provide strategic focus on objectives and what to prioritize. Supply chain digitization is integral to achieving business objectives and must also be aligned with wider efforts – for example to increase transparency and improve customer satisfaction – so it is not considered solely as a cost-cutting exercise.

·Build: For supply chain digitization to be successful, both upstream and downstream partners (suppliers and distributors/logistics providers) need to be onboarded and made part of the digitization efforts. Breaking the silos among the various supply chain functions as well as the technology teams is also critical to the success of supply chain initiatives.

·Enable: While the steps above help to start digitization, sustaining it requires organizations to invest in building a customer-centric mindset and developing a talent base. They need to devise approaches to attract, retain and upskill their employees.

Commenting on this approach, Rob Burnett, CIO of Global Supply Chain & Engineering at GE Transportation said: “Management buy-in is a huge part of identifying and investing in the digital supply chain projects that can really drive improvement. Rather than a cost center, the supply chain can be a source of innovation and efficiency for the whole organization, but it’s important to maintain a sharp focus on priority projects to get the ball rolling. There should be a wider appreciation that less is more.”

Robotic Process Automation (RPA) and Internet of Things (IoT) represent several viable use cases

In terms of specific use cases, Capgemini’s report reviews the 25 most popular use cases for supply chain digitization today, analyzing each in terms of how easy it is to implement and the benefits realized, to produce the top recommended use cases that can become strategic wins. Of these, RPA and IoT feature most often, in use cases such as order processing, smart sensors that monitor product conditions, and updating and maintaining connected products. Based on working examples from across today’s supply chain, these use cases have been shown to save time and money on supply chain processes.

New research reveals 75 percent of customers still favour live agent support for customer service vs 25 percent self-service and chatbots.

New research from NewVoiceMedia, a Vonage Company and leading global provider of cloud contact centre and inside sales solutions, reveals that three-quarters of consumers prefer to have their customer service inquiries handled by a live agent over self-service options or a chat bot.

Chat bots can provide customers with quick answers to frequently asked questions or issues, and the survey notes the benefit of chat bots for certain interactions, such as 24/7 service. When it comes to handling sensitive financial and personal information, however, most customers are more comfortable with a live agent, and just 13 percent say they’d be happy if all service interactions were replaced by bots in the future.

According to the survey¹, top concerns for using chat bots for service include: lack of understanding of the issue (65 percent); inability to solve complex issues (63 percent) or get answers to simple questions (49 percent); and lack of a personal service experience (45 percent). Though 48 percent of respondents indicated they would be willing to use chat bots for service – versus the 38 percent who wouldn’t – 46 percent also felt that bots keep them from reaching a live person.

When asked about transactions for which they would not feel comfortable using a chat bot, a significant majority of respondents said large banking (82 percent), medical inquiries (75 percent) and small banking (60 percent). For frequently asked questions or common issues, however, chat bots can add efficiencies to the live agent’s day, freeing them to provide the extra care and time to more complex issues and to the customers who really need it.

“When a situation becomes emotional or complex, people want to engage with people”, says Dennis Fois, President of NewVoiceMedia. “As businesses add more customer service channels, conversations are becoming more complex and higher value, and personal, emotive customer interactions play a critical role in bridging the gap for what digital innovation alone cannot solve. For this reason, companies must find the right balance between automation and human support to deliver the service that customers demand. Frontline contact centre teams will continue to be the difference-makers on the battlefield to win the hearts and minds of customers, and organisations deploying self-service solutions should ensure that there is always an option to reach a live agent”.

A cloud contact centre solution presents businesses with the opportunity to take advantage of emerging technologies such as bots and AI technology, without losing that personal touch, creating a better customer experience. The key is providing customers with the right balance of personalised, white glove service by a live agent when they have the need for deeper, more complex customer care, while also giving them the ability to get quick answers to basic questions provided by chat bots through a variety of communications channels – chat, voice, SMS, or social messaging.

Customers prefer live agents for technical support (91 percent); getting a quick response in an emergency (89 percent); making a complaint (86 percent); buying an expensive item (82 percent); purchase inquiries (79 percent); returns and cancellations (73 percent); booking appointments and reservations (59 percent); and paying a bill (54 percent). However, when asked about buying a basic item, 56 percent would choose a chat bot over a live interaction. The top benefit cited for dealing with chat bots was 24-hour service.

Younger respondents (aged 18-44) were more open to using chat bots overall and across the individual scenarios compared to older consumers (aged 45-60+). In fact, 52 percent of those aged 60 and older would be unwilling to use chat bots for service at all.

This research follows NewVoiceMedia’s Serial Switchers Swayed by Sentiment study, in which nearly half of respondents (48 percent) considered calls to be the quickest way of resolving an issue. The survey also found that the top reasons customers leave a business due to poor service are feeling unappreciated (36 percent) and not being able to speak to a person (26 percent), but that 63 percent would be more likely to return to a business if they felt they’d made a positive emotional connection with a customer service agent.

Angel Business Communications has announced the categories and entry criteria for the 2019 Datacentre Solutions Awards (DCS Awards). The DCS Awards are designed to reward the product designers, manufacturers, suppliers and providers operating in the data centre arena and are updated each year to reflect this fast-moving industry. The Awards recognise the achievements of the vendors and their business partners alike. This year they encompass a wider range of project, facilities and information technology award categories, together with two Individual categories, and are designed to address all the main areas of the datacentre market in Europe.

The DCS Awards categories provide a comprehensive range of options for organisations involved in the IT industry to participate, so you are encouraged to get your nominations made as soon as possible for the categories where you think you have achieved something outstanding or where you have a product that stands out from the rest, to be in with a chance to win one of the coveted crystal trophies.

The editorial staff at Angel Business Communications will validate entries and announce the final short list to be forwarded for voting by the readership of the Digitalisation World stable of publications during April. The winners will be announced at a gala evening on 16th May at London’s Grange St Paul’s Hotel.

The 2019 DCS Awards feature 26 categories across four groups. The Project Awards categories are open to end use implementations and services that have been available before 31st December 2018. The Innovation Awards categories are open to products and solutions that have been available and shipping in EMEA between 1st January and 31st December 2018. The Company nominees must have been present in the EMEA market prior to 1st June 2018. Individuals must have been employed in the EMEA region prior to 31st December 2018.

Nomination is free of charge and all entrants can submit up to four supporting documents to enhance the submission. The deadline for entries is 1st March 2019.

Please visit www.dcsawards.com for rules and entry criteria for each of the following categories:

DCS PROJECT AWARDS

Data Centre Energy Efficiency Project of the Year

New Design/Build Data Centre Project of the Year

Data Centre Consolidation/Upgrade/Refresh Project of the Year

Cloud Project of the Year

Managed Services Project of the Year

GDPR compliance Project of the Year

DCS INNOVATION AWARDS

Data Centre Facilities Innovation Awards

Data Centre Power Innovation of the Year

Data Centre PDU Innovation of the Year

Data Centre Cooling Innovation of the Year

Data Centre Intelligent Automation and Management Innovation of the Year

Data Centre Safety, Security & Fire Suppression Innovation of the Year

Data Centre Physical Connectivity Innovation of the Year

Data Centre ICT Innovation Awards

Data Centre ICT Storage Product of the Year

Data Centre ICT Security Product of the Year

Data Centre ICT Management Product of the Year

Data Centre ICT Networking Product of the Year

Data Centre ICT Automation Innovation of the Year

Open Source Innovation of the Year

Data Centre Managed Services Innovation of the Year

DCS COMPANY AWARDS

Data Centre Hosting/co-location Supplier of the Year

Data Centre Cloud Vendor of the Year

Data Centre Facilities Vendor of the Year

Data Centre ICT Systems Vendor of the Year

Excellence in Data Centre Services Award

DCS INDIVIDUAL AWARDS

Data Centre Manager of the Year

Data Centre Engineer of the Year

Nomination Deadline: 1st March 2019

As we look forward to another year of potentially great change and uncertainty, the managed services industry can take some comfort from its apparent resilience as a model. All the current thinking and forecasting around 2019 in the IT industry suggests a continuing pressure on customers who know they need to become more productive, but are not sure where to spend their IT investment and when. Managed services is uniquely placed to deliver IT solutions in an effective way.

Yet, if anything, the pressures on customers are rising. In a rising market, many stay profitable enough not to have to think about changing infrastructure, and IT mistakes and wrong decisions matter less; a year of great change, however, may eventually expose those poor deals and false savings. Hence the hesitant IT customer confidence, especially in areas with less history behind them, such as AI and IoT. The thinking is that it is better to hold on to existing technologies, which are at least better understood through use.

At the same time, however, senior management and those with a strategic view are pressing IT to do more with less. Squeezed resources will not deliver change, and IT departments themselves may have become more siloed in the last year.

Working with scarce resources should be something that managed services providers understand well: their industry is all about scale and efficient delivery. They should be able to talk to customers’ senior management about the inherent effectiveness of their IT model and how this can be shared with the customer and the customer’s customer.

Yet the promise of technological delivery of riches has not always lived up to the expectations, and research has shown that a half-hearted or lightweight approach to innovation does not yield such good results. The recent Capgemini Research Institute’s “Upskilling your people for the age of the machine” study found that automation has not improved productivity because it has not been a part of the full digital transformation of business processes.

Companies do need to do their homework in terms of change management, in many cases. The need to adopt technology that links to and supports the implementation of a strategy should be a no-brainer. But this is not about new technology, it’s about the basics of change management and communication from leaders, which have been discussed for decades.

The managed services industry may need to encourage new thinking along these lines even more in 2019. It should be pushing at an open door: Equinix research shows that almost three quarters (71%) of organisations are likely to move more of their business functions to the cloud in coming years. They may still need answers that address their security fears, however, and they need help managing that change process in their businesses.

It will be those managed services companies with expertise in particular vertical markets or with a particularly strong customer relationship who do best in this. They are able to talk in meaningful ways about real solutions and engage with realistic customer expectations. They will also hopefully not be afraid to say when a transformation is not going far enough and what real gains could be made by further moves.

For this to work properly they need a clear understanding of the nature of change management in customer organisations, coupled with the required changes in working practices that deliver a safe and secure environment.

The agenda for the 2019 European Managed Services and Hosting Summit, in Amsterdam on 23 May, aims to reflect these new pressures and build the skills of the managed services industry in addressing the wider issues of engagement with customers at a strategic level. Experts from all parts of the industry, plus thought leaders with ideas from other businesses and organisations will share experiences and help identify the trends in a rapidly-changing market.

This month’s journal theme is focused on ‘Significant Moments which helped shape 2018 together with some Predictions for 2019’.

With so much going on in 2018, I thought the best thing to do was list all the awards won and significant reports published over the year by supporting members.

You may also wish to visit the DCA news page where you can look at all the news items from 2018 - https://dca-global.org/news

In addition to supporting and representing the best interests of its members, the DCA has also had a busy year working behind the scenes with the launch of new branding and a new website and members’ portal. 2018 saw the DCA support over forty industry events, initiatives and projects, all designed to benefit both members and the sector as a whole. One such project was the 3-year EU Horizon 2020 project called EURECA, which received outstanding feedback and praise from the European Commission.

The DCA is extremely proud to have been a contributing partner. The EURECA project has subsequently been nominated for several awards, culminating in winning the DCD ‘Data Centre Initiative of the Year’ Award in December in London.

There is much work to do in 2019, which I am confident will yet again be busy and (without fear of mentioning the “B” word) I am sure it will be both an interesting and challenging year as well! One thing is for sure: it won’t be boring! On that very subject, the DCA will be working with collaborative partners in the coming months to see what can be done to increase the data centre sector’s appeal as a career destination of choice for school and college leavers keen to join the job market.

The trade association already has strong influence both nationally and regionally when it comes to regulation, policy and standards and this valuable work will continue in the year ahead, with new opportunities for members to contribute and participate in helping shape our sector for the benefit of all.

As global event partners for Data Centre World, we are again working with Closer Still Media on the next event at Excel in March, and we are already busy planning our flagship Data Centre Transformation Conference for this summer in Manchester (more on this to follow). All upcoming events can be found in the DCA Events Calendar, and the annual printed DCA wall calendar, showing all the DC-related events throughout the year, will again be available soon.

Over the past 12 months the DCA has published over 180 articles in publications which are received and read by a total of over 250,000 subscribers globally. Thank you again to all the members who contributed articles over the last year.

It is generally recognised that the DCA Trade Association has built a strong reputation as a trusted source of approved thought leadership content and, as one of its founders, this is an achievement I am particularly proud of. 2019 will see an increased focus on how this content is disseminated to further maximise exposure and impact.

The next publication theme is ‘Insight’, with a focus on new industry trends and innovations such as Open Compute, Edge DCs and Liquid Cooling, to name but a few. The call for papers is now open and the deadline for submitting articles is 25 January. To submit, email amandam@dca-global.org

We’d like to wish all our members, collaborative partners and those working in the Data Centre sector a very happy and prosperous 2019.

2018 Celebrated Another First For the Data Centre Sector

And another first for CNet Training… with the celebration of the very first graduation ceremony for the Masters Degree in Data Centre Leadership and Management program.

After an intense three years of study, learners from the class of 2018, including one of CNet’s technical instructors, Pat Drew, graduated at a ceremony in the famous university city of Cambridge, UK.

In addition to being the global leader in technical education for the data centre sector, CNet is an Associate College of Anglia Ruskin University in Cambridge, UK and is therefore approved to design and deliver the content of this prestigious Masters Degree program.

The graduates joined the Masters Degree program from some of the world’s most respected organizations, including Unilever, Capital One, IBM, Irish Life and Wirewerks. Each committed to the three-year distance learning program to unite their existing knowledge and skills with new learning centered around leadership and business management within a data centre environment.

For more information see www.cnet-training.com/masters-degree

Designed and written by the global leaders in data centre technical education, CNet Training, in close collaboration with leading data centre professionals from across the globe, the Certified Data Centre Sustainability Professional (CDCSP®) program has been officially launched.

The program has been created as a result of demand from data centre professionals for a sector focused, comprehensive program to provide knowledge and innovative approaches to planning, designing, implementing and monitoring a sustainability strategy for data centre facilities and operational capability.

A one-year distance learning program, the Certified Data Centre Sustainability Professional (CDCSP®) provides flexibility and convenience of learning for busy data centre professionals as they log in from across the globe. The learning, managed and supported by CNet’s dedicated in-house team, provides in-depth knowledge of all the stages of data centre sustainability from strategic vision and business drivers, operational analysis of power, cooling and IT hardware, operational processes and procedures, risk evaluation and mitigation, to design innovation and implementing initiatives, whilst appreciating the business and operational challenges that can be encountered. Maintenance strategies, continuous planning and critical analysis against identified targets and demonstrating ROI are also explored.

2018 has been an exciting year for EcoCooling. The Nordic market has seen some of the largest growth as operators exploit the cool climate and renewable electricity. Working within the fast-paced, innovative HPC sector has allowed us to continue developing our CloudCooler range into one of the lowest total-cost-of-ownership free cooling systems available. These innovative Plug & Play solutions have proved extremely popular, with over 2000 installed worldwide. They are engineered to be rapidly deployed to remote locations with minimal requirements for qualified labour, and the principles behind them underpin our latest product offering: a mobile, high performance computing containerised data centre. It has been developed to support the growing requirements of the low latency data processing infrastructure required for applications such as 5G, AI and smart cities.

What’s happening for 2019 – We are excited to have just moved into spacious new offices in Bury St Edmunds. We are looking to build on the principles of low total cost of ownership to simplify our product offerings and achieve optimum efficiencies for our end users. Watch this space for new products, exciting case studies, and a whole lot of energy saving!

Andrew Fray, Managing Director at European data centre experts Interxion

5G’s potential won’t be fully realised

The 5G tsunami is well on its way and it will hit our shores in 2019, with CCS Insight predicting that we could see 1 billion 5G users by 2023. Its rollout next year has the potential to completely transform every industry, from manufacturing and marketing to communications and entertainment. Fast data speeds, higher network bandwidth and lower latency mean smart cities, connected transport, smart healthcare and manufacturing are all becoming closer to a reality.

Despite the first deployments of 5G and the launch of the first 5G-compatible devices next year, we don’t expect the impact of widespread 5G implementation to be fully felt in 2019. Instead, for many businesses, 2019 will be full of continued investment in, and focus on, rearchitecting existing networks and infrastructure ready to host 5G networks.

“Multi-cloud” will be the new buzzword

Conversations this year have been full of the continued development and challenges of cloud adoption, and next year will be no different. A major learning from this year for many companies is that putting all of their eggs, in this case workloads, in one basket – whether it’s private cloud, public cloud or data centre – isn’t the best strategy. Instead, businesses are increasingly turning to multi-cloud adoption, consuming and mixing multiple cloud environments from several different cloud providers at once. In fact, multi-cloud adoption and deployments have doubled in the last year, and this will remain a major topic for 2019. Major cloud providers are also showing increased interest in multi-cloud, with public cloud providers such as Amazon and Alibaba offering private cloud options, as well as a number of acquisitions and partnerships that will allow the marrying of cloud environments.

In 2019, multi-cloud will become the new norm, allowing businesses to realise the full potential of the cloud, giving them increased flexibility and control over workloads and data, whilst avoiding vendor lock-in.

eSIM will take centre stage

It’s been suggested that, despite the build-up around 5G, it’s actually eSIMs that will be a game changer in the technology and telecoms sectors. Up until fairly recently, uptake of eSIM has been slow, as operators have been concerned with how the technology will impact their businesses. However, the eSIM market is estimated to grow to $978 million by 2023, with demand being driven by adoption of internet-enabled technology which requires built-in cellular connectivity. This year, we’ve already seen a shift in mindset in relation to the technology. New guidelines introduced by the GSMA have also contributed to increased awareness of the capabilities.

In 2019, we’ll see a large number of operators, service providers and vendors trial and launch new eSIM-based solutions. The impact of this growing emergence will be broad and will pave the way for significant developments in consumer experiences, in everything from entertainment and ecommerce to automotive.

Living on the edge in 2019

Edge computing has been on the horizon for a number of years now. However, it’s yet to be fully understood. In 2019, 5G deployments and the increasing proliferation of the IoT will be key drivers behind ‘the edge’ gaining significant awareness and traction. Business Insider Intelligence estimates that 5.6 billion enterprise-owned devices will utilise edge computing for data collection and processing by 2020.

As we move into next year, the edge will continue to be at the epicentre of innovation within enterprises, with the technology exerting its influence on a number of industries. Businesses will look to data centre providers to lead the charge when it comes to developing intelligent edge-focused systems. In terms of technological developments, a simplified, smarter version of the edge will emerge. The integration of artificial intelligence and machine learning will provide greater computing and storage capabilities.

Adoption of blockchain will start to accelerate, especially in financial services

Up until fairly recently, blockchain has remained a confusing topic for many businesses, especially those operating in highly-regulated industries such as the financial services sector. As a result, many financial institutions have been slow to embrace the technology. However, next year, more use cases for blockchain will be uncovered and will make an impact. According to PwC, three-quarters (77%) of financial sector incumbents will adopt blockchain as part of their systems or processes by 2020.

In particular, we’ll see an increasing number of fintech partnerships built over the course of the coming year as more financial companies look to harness the technology’s potential.

Cloud gaming will require a new approach to networking

According to the head of Microsoft’s gaming division, the future of gaming is cloud gaming. This new form of gaming promises new choices for players in when and where to play, frictionless experiences and direct playability, as well as new opportunities for game creators to reach new audiences. However, because cloud gaming is based on a subscription model, the highest quality of service is critical for subscriber retention. Delivering this superior user experience, often across multi-user networks and devices, is dependent on low latency. For gaming companies looking to keep up with demand for connectivity and bandwidth, it’s all about having the right infrastructure to deliver game content without delay or disruption.

Next year, we’ll see more gaming companies take a new approach to networking and harness the power of data centres to optimise performance, maintain low latency and provide the resiliency and scalability to cope with the volatile demands of today’s gamers.

University of East London was named on the UK Universities Best Breakthrough List

The Enterprise Computing Research Lab (EC Lab) at the University of East London (https://www.eclab.uel.ac.uk), which played a key role in driving energy efficiency in the design and operation of data centres and computer servers, has been named on the UK Universities top 100 Best Breakthrough list.

The Breakthrough list demonstrates how UK universities are at the forefront of some of the world’s most important discoveries, innovations and social initiatives.

To find out more about the MadeAtUni campaign, please visit: https://madeatuni.org.uk

EURECA wins the DCD Global Awards 2018 for the Industry Initiative of the Year

The EURECA project (https://www.dceureca.eu) has won the ‘Industry Initiative of the Year’ category at the 2018 Data Centre Dynamics (DCD) awards. EURECA beat off strong competition from US state government body Maryland Energy Administration; Host in Scotland; and Host in Ireland.

Speaking after collecting the award, EURECA project coordinator Dr Bashroush said, “It is such an honour to win this global award, but I have to admit it was such a close call given the three other great initiatives shortlisted.”

By Kevin Towers, Managing Director

Looking back over the last 12 months, it is hard to believe that so much has happened. Here is what we have celebrated in 2018 and hope to build on in 2019.

Techbuyer was honoured to be invited to contribute to the Parliamentary Review for the second year in a row this January. We had a lot to report in terms of business growth and workforce increases (28% this year according to the latest figures). It was also wonderful to report on the circular economy and show how data centre hardware can lead the way with sustainable solutions for servers, storage and networking to go on to second, third and more lives after initial use.

Techbuyer went live on the Telegraph Business website in February with a documentary spotlighting the benefits of refurbished IT equipment. The film was part of the Great British Business Campaign that featured on various television and media outlets throughout the year. The campaign focused on SMEs, which have traditionally been under-represented in the media despite making up over 99% of the private sector and being responsible for 60% of private sector employment in the UK. Natasha Kaplinsky was outstanding in getting the message out about refurbished IT.

March was the month Techbuyer first joined the Data Centre Alliance, a move that has led to a large number of opportunities. These include listing on the award-winning DC EURECA project’s directory, an introduction to working with the UK government’s cross party think-tank Policy Connect, research that led to a KTP with the University of East London and insights into the issues facing this fascinating sector. We also had the opportunity to publicise sustainable hardware solutions in a number of industry magazines.

Techbuyer allied itself with the likes of Barclays, Deloitte, Vodafone, PwC and the University of Liverpool when it joined the Northern Powerhouse partnership programme in June. With a strong belief in the economic potential of the North to develop skills, science and innovation, it is a fantastic opportunity to be part of something that strengthens our home base and has the potential to develop the data centre industry in new areas.

Innovate UK gave us the go-ahead this September for a groundbreaking joint project with the University of East London. The “Knowledge Transfer Project” (KTP) will embed the research expertise of Rabih Bashroush, Director of the Enterprise Computing Research Lab, and other academics to create a tool that optimises IT hardware refresh in data centres. The focus will be to drive the circular economy and green agenda.

October saw the opening of our first Asia Pacific office in Auckland, New Zealand. Together with our offices in the UK, Germany and the USA, this gives us a presence on three continents. The move is designed to better serve our clients in Asia Pacific and enables us to offer a round-the-clock service to our customers. Since 2018 saw us deliver to over 100 countries, our global offices are set to be increasingly important. A technical facility is due to open in Australia in early 2019 to partner the operation.

Following the work the DCA has done on the EN50600 standard, the Cyber and Physical Security Special Interest Group is in search of new challenges. We are delighted to be part of the process. Rich Kenny, our IT Security Manager, joined the group in October to offer insight into the cyber protections that are most suitable for the data centre environment. The group is currently working on a white paper to describe best practice. Work will progress in the new year.

Techbuyer joined the cross-party think-tank Policy Connect in December following an introductory talk given at the DCA Annual General Meeting. 2019 will see us discuss ways that other industries can learn from the server, storage and networking secondary market when it comes to economically viable solutions for reuse and product life extension. Techbuyer is also hoping to learn about developments in skills training and recycling technologies as we gear up to make our contribution to the UN Sustainable Development Goals in the new year.

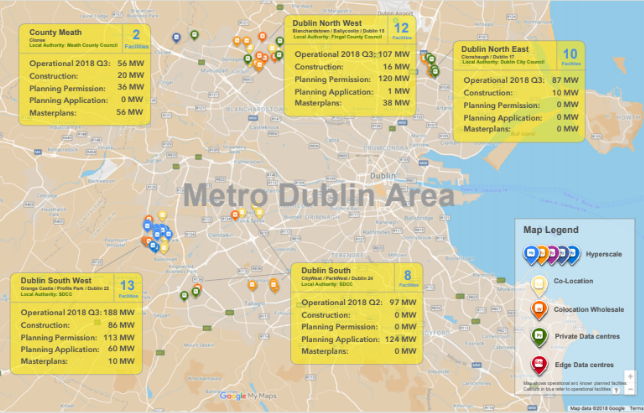

Since the release of Host in Ireland’s report ‘Ireland’s Data Hosting Industry 2017’ there has been a 120 MW increase in total connected data centre power capacity and seven new operational data centres in Ireland.

Most of Ireland’s 48 data centres, with a combined 540 MW of grid-connected power capacity, are located in the Dublin Metro area. The concentration of data centres combined with Dublin’s offerings of public, private and hybrid data clouds in close proximity to leading colocation, managed services and hyperscale facilities has helped the city earn the nickname “Home of the Hybrid Cloud”.

If predictions of continued investment in data centre construction are realized, numbers will continue to increase in 2019, with an estimated investment of €1.5 billion; by 2021, €9.3 billion will have been invested in Ireland.

A round-up of the latest Gartner research, covering IT infrastructure and 5G.

As more organizations embrace digital business, infrastructure and operations (I&O) leaders will need to evolve their strategies and skills to provide an agile infrastructure for their business. In fact, Gartner, Inc. said that 75 percent of I&O leaders are not prepared with the skills, behaviors or cultural presence needed over the next two to three years. These leaders will need to embrace emerging trends in edge computing, artificial intelligence (AI) and the ever-changing cloud marketplace, which will enable global reach, solve business issues and ensure the flexibility to enter new markets quickly, anywhere, anytime.

IT departments no longer just keep the lights on, but are also strategic deliverers of services, whether sourced internally or externally. They must position specific workloads based on business, regulatory and geopolitical impacts. As organizations’ customers and suppliers grow to span the globe, I&O leaders must deliver on the idea that “infrastructure is everywhere” and consider the following four factors:

Agility Thrives on Diversity

Bob Gill, vice president at Gartner, said the days of IT controlling everything are over. As options in technologies, partners and solutions rise, I&O leaders lose visibility, surety and control over their operations. “The cyclical, dictated factory approach in traditional IT cannot provide the agility required by today’s business,” Mr. Gill said. “The need for agility evolved faster than our ability to deliver.”

Despite agility placing among their top three priorities for 2019, I&O leaders are faced with a conundrum as a diverse range of products is available to them. “The ideal situation for I&O leaders would be to coordinate the unmanageable collection of options we face today — colocation, multicloud, platform as a service (PaaS) — and get ahead of the business needs tomorrow. We must reach into our digital toolbox of possibilities and apply it to customer intimacy, product leadership and operational excellence to establish guardrails around managing the diversity of options in the long term,” Mr. Gill said.

The need for agility will only increase, so the two key tasks of I&O leaders will be to manage the sprawl of diversity in the short term and become the product manager of services needed to build business driven, agile solutions in the long term. “I&O has the governance, security and experience to lead this new charge for the business,” Mr. Gill said.

Applications Enable Change

Infrastructure can save money and enable applications, but by itself it does not drive direct business value — applications do. Dennis Smith, vice president at Gartner, said that there is no better time to be an application developer. “Application development offers an opportunity to jump on the express train of change to satisfy customer needs and build solutions composed of a tapestry of software components enabled through APIs,” Mr. Smith said.

Gartner research found that by 2025, 70 percent of organizations not adopting a service/product orientation will be unable to support their business, so I&O engineers must engage with consumers and software developers; integrate people, processes and technology; and deliver services and products all to support a solid infrastructure on which applications reside.

Boundaries Are Shifting

Digital business blurs the lines between the physical and the digital, leveraging new interactions and more real-time business moments. As more things become connected, the data center will no longer be the center of data. “Digital business, IoT and immersive experiences will push more and more processing to the edge,” said Tom Bittman, distinguished vice president at Gartner.

By 2022, more than half of enterprise-generated data will be created and processed outside of data centers, and outside of cloud. Immersive technologies will help to light a fire of cultural and generational shift. People will expect more of their interactions to be immersive and real time, with fewer artificial boundaries between people and the digital world.

The need for low latency, the cost of bandwidth, privacy and regulatory changes as data becomes more intimate, and the requirement for autonomy when the internet connection goes down, are factors that will expand the boundary of enterprise infrastructures all the way to the edge.

People Are the Cornerstone

I&O leaders are struggling to deliver value faster in a complex, evolving environment, hindering the ability of organizations to learn quickly and share knowledge.

“The new I&O worker profile will embrace versatile skills and experiences rather than reward a narrow focus on one technical specialty,” said Kris van Riper, managing vice president at Gartner. “Leading companies are changing the way that they reward and develop employees to move away from rigid siloed career ladders toward more dynamic career diamonds. These new career paths may involve experiences and rotations across multiple technology domains and business units over time. Acquiring a broader understanding of the IT portfolio and business context will bring collective intelligence and thought diversity to prepare teams for the demands of digital business.”

Ultimately, preparing for I&O in the digital age comes down to encouraging different behaviors. Building competencies such as adaptability, business acumen, fusion collaboration and stakeholder partnership will allow I&O teams to better prepare for upcoming change and disruption.

Gartner, Inc. has highlighted the key technologies and trends that infrastructure and operations (I&O) leaders must start preparing for to support digital infrastructure in 2019.

“More than ever, I&O is becoming increasingly involved in unprecedented areas of the modern day enterprise. The focus of I&O leaders is no longer to solely deliver engineering and operations, but instead deliver products and services that support and enable an organization’s business strategy,” said Ross Winser, senior research director at Gartner. “The question is already becoming ‘How can we use capabilities like artificial intelligence (AI), network automation or edge computing to support rapidly growing infrastructures and accomplish business needs?’”

During his presentation, Mr. Winser encouraged I&O leaders to prepare for the impacts of 10 key technologies and trends to support digital infrastructure in 2019. They are:

Serverless Computing

Serverless computing is an emerging software architecture pattern that promises to eliminate the need for infrastructure provisioning and management. I&O leaders need to adopt an application-centric approach to serverless computing, managing APIs and SLAs, rather than physical infrastructures. “The phrase ‘serverless’ is somewhat of a misnomer,” noted Mr. Winser. “The truth is that servers still exist, but the service provider is responsible for all the underlying resources involved in provisioning and scaling a runtime environment, resulting in appealing agility.”

Serverless does not replace containers or virtual machines, so it’s critical to learn how best and where to use the technology. “Developing support and management capabilities within I&O teams must be a focus as more than 20 percent of global enterprises will have deployed serverless computing technologies by 2020, which is an increase from less than 5 percent today,” added Mr. Winser.

AI Impacts

AI is climbing up the ranks in terms of the value it will deliver to I&O leaders who need to manage growing infrastructures without being able to grow their staff. AI has the potential to be organizationally transformational and is at the core of digital business, the impacts of which are already being felt within organizations. According to Gartner, global AI-derived business value will reach nearly $3.9 trillion by 2022.

Network Agility (or Lack of?)

The network underpins everything IT does — cloud services, Internet of Things (IoT), edge services — and will continue to do so moving forward.

“Teams have been under pressure to ensure network availability is high and as such the team culture is often to limit change, yet all around the network team the demands for agility have increased,” said Mr. Winser. The focus for 2019 and beyond must move to how I&O leaders can help their teams increase the pace of network operations to meet demand. “Part of the answer is building network agility that relies on automation and analytics, and addressing the real skills shift needed to succeed,” said Mr. Winser.