The recent DCD London event was a great opportunity to take the pulse of not just the data centre industry but the wider IT community as well – as one vendor put it: ‘The lines between IT and data centre infrastructure are becoming increasingly blurred’. If there was one message to take away from the event, it would be that, no matter where you work, artificial intelligence is hurtling towards you like a barely controlled juggernaut full of Christmas goodies. There’s every chance that the vehicle and the goodies will arrive in one piece and provide you with hours, months and years of entertainment and rewards, but there’s just the slim possibility that, if the driver becomes too carefree and crashes before arrival, or the waiting customers don’t get out of the way when the lorry parks up, disaster could ensue.

Put it another way, the promise of artificial intelligence, machine learning, Internet of Things and other intelligent technologies is huge. But, don’t get carried away with the hype. Companies who have got to grips with this stuff are finding that there are levels of complexity (both human and technology ones) that need to be understood and addressed before meaningful and sustainable AI solutions can deliver major, ongoing benefits.

Folks who remember the virtualisation wave will recall that the hype was replaced by the slowly dawning reality that it was complicated to implement successfully, but worth the effort. Virtualisation took time to reach maturity.

Now, of course, virtualisation is the platform on which the software-defined era has been built, and a deeper foundation for the Cloud, and the enabler for the orchestration that is, perhaps, the crucial part of intelligent automation.

In the present digital, ‘want everything now’ world in which we live, the suggestion that intelligent automation requires patience and skill (and time) to implement properly might not be that welcome. Nevertheless, if my feedback from DCD is accurate, almost everyone who’s looked at using AI – both vendors and end users alike – is excited by the possibilities, then realises that an AI project throws up as many questions as answers, but still remains excited as overcoming these challenges will ensure that the end product is a robust, comprehensive AI solution, and not a half-baked one that will (as with early virtualisation projects) disappoint more than inspire.

The first question to ask is not: ‘How much money can I save with AI?’ but: ‘How can AI help improve my customer service?’ Along the way, you may well find that you do save money, but be open-minded as to where the AI journey might take you.

Workday, Inc. has released the results of a new European study, ‘Digital Leaders: Transforming Your Business,’ an IDC white paper sponsored by Workday, which reveals that there is a ‘digital disconnect’ emerging in many organisations between the expectations of digital leaders and the ability of core business systems to meet their needs. Challenged to drive digital transformation (DX) at scale, they see their finance and HR systems as merely ‘adequate’ for today’s business requirements and as lacking the flexibility and sophistication to deliver on the broader DX mandate being driven by many CEOs.

Leading IT market research and advisory firm, IDC, conducted the survey of 400 digital leaders in Europe across France, Germany, the Netherlands, Sweden and the UK, in 2018, sponsored by Workday. It focused on where the IDC-termed ‘digital deadlock’ was related to finance and HR systems, and the impact of DX on core business systems as organisations move beyond DX experiments and pilots to try and operationalise their DX strategy and embed it within the business.

The digital leaders surveyed identified the following three critical pain points, with digital leaders in the UK admitting that in their organisations:

Other key research findings included:

As part of the white paper, IDC studied a number of companies deemed ‘best-in-class’ in digital transformation in Europe. IDC suggested three areas for finance, HR and IT to work alongside digital leaders to help ensure this potential ‘digital disconnect’ does not materialise in the long run:

76% viewed this as very or extremely important. There is an understanding that to do this the ability to reconfigure finance and HR systems in a dynamic fashion is critical.

Best-in-class organisations are making significant investments in finance and HR systems. In fact, 88% of these organisations are investing in advanced finance, and 86% in advanced HR systems, to support the digital transformation journey.

“Through the research we have identified three areas of best practice that can drive successful digital transformation, with DX placing new and challenging requirements on finance and HR,” said Alexandros Stratis, co-author and senior research analyst, IDC. “Firstly, the importance of a digital ‘dream team’ that cuts across finance, IT, HR and the broader business; secondly, the need to be able to dynamically reconfigure finance and HR systems; and thirdly, that best-in-class organisations who are leading the way are already making significant investments to modernise their finance and HR systems.”

“The time to disrupt is now,” said Carolyn Horne, regional vice president of UK, Ireland and South Africa, Workday. “DX strategies are being rolled out by organisations as forward-thinking CEOs realise digital transformation across the business is critical if they are to survive and thrive. Technology is playing a critical role in this change, but this change is not just about how a company innovates around new products and services, or changes the customer experience, the whole organisation needs to adapt and modernise. This must include finance and HR, otherwise the agility, flexibility and insights needed to be successful in the modern business world cannot be achieved.”

DataCore Software has published the results of its 7th consecutive market survey, “The State of Software-Defined, Hyperconverged and Cloud Storage,” which explored the experiences of 400 IT professionals who are currently using or evaluating software-defined storage, hyperconverged and cloud storage to solve critical data storage challenges. The results yield surprising insights from a cross-section of industries over a range of workloads, including the fact that storage availability and avoiding vendor lock-in remain top concerns for IT professionals, and illustrate the status of the industry on its journey to a software-defined future.

The report reveals what respondents view as the primary business drivers for implementing software-defined, hyperconverged, public cloud and hybrid cloud storage. For example, the top results for software-defined storage include: automate frequent or complex storage operations; simplify management of different types of storage; and extend the life of existing storage assets. This portrays the market’s recognition of the economic advantages of software-defined storage and its power to maximise IT infrastructure performance, availability and utilisation.

The report also highlights the capabilities that users would like from their storage infrastructure. The top capabilities identified were business continuity/high availability (which can be achieved via metro clustering, synchronous data mirroring, and other architectures) at 74%; disaster recovery (from remote site or public cloud) at 73%; and enabling storage capacity expansion without disruption at 72%.

Business continuity was found to be a key storage concern, whether on-premise or in the cloud. It is first on the list for the primary capability that respondents would like from their storage infrastructure, and was also number one in the previous DataCore market survey. Additionally, business continuity is the top business driver for those deploying public and hybrid cloud storage (46% and 41%), and similarly ranks high in the complete results for software-defined and hyperconverged storage business drivers, coming in at 45% and 43% respectively.

Surprises, False Starts and Technology Disappointments

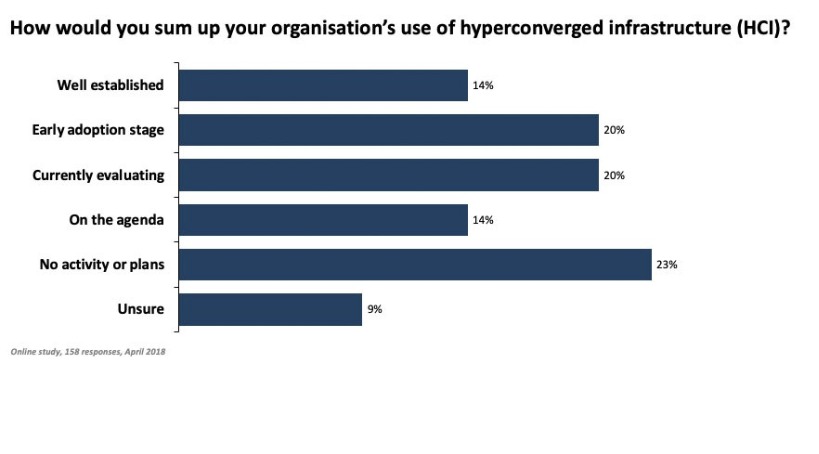

The biggest surprise reported was that there is still too much vendor lock-in within storage, with 42% of respondents noting this as their top concern. Software-defined storage is being used to solve this (management of heterogeneous environments) as well as for automation (lowering costs, fewer migrations and less work provisioning). Therefore, it should not be a surprise that the results also showed adoption of software-defined storage is about double that of hyperconverged (37% vs. 21%), with 56% of respondents also strongly considering or planning to consider software-defined storage in the next 12 months.

The survey further revealed the reality of hyperconverged deployments. While it continues to make inroads, in addition to the above, some respondents also said they are ruling out hyperconverged because it does not integrate with existing systems (creates silos), can’t scale compute and storage independently and is too expensive. Hybrid-converged technology is a good option for IT to consider in these cases.

Additionally, while all-flash arrays are often viewed as the simplest way to add performance, more than 17% of survey respondents found that adding flash failed to deliver on the performance promise—most likely as flash does not solve the I/O bottlenecks pervasive in most enterprises. Technologies such as Parallel I/O provide an effective solution for this.

With regard to emerging technologies, many enterprises are exploring containers; however, actual adoption is slow, primarily due to: lack of data management and storage tools; application performance slowdowns, especially for databases and other tier-1 applications; and lack of ways to deal with applications such as databases that need persistent storage. NVMe is also still struggling to become mainstream. About half of respondents have not adopted NVMe at all. Thirty percent of survey respondents report that 10% or more of their storage is NVMe, and more than 7% report that more than half of their storage is NVMe.

While adoption is still slow, enthusiasm for the technology does appear strong. Technologies such as software-defined storage with Gen6 HBA support and dynamic auto-tiering with NVMe on a DAS can help simplify and accelerate adoption.

“We see enterprise IT maturing in its use of software-defined technologies as the foundation for the modern data centre,” said Gerardo A. Dada, chief marketing officer at DataCore. “DataCore is delighted to be a catalyst that helps IT meet business expectations of availability and performance while reducing costs, as well as enabling users to enjoy architectural flexibility and vendor freedom.”

Additional highlights of “The State of Software-Defined, Hyperconverged and Cloud Storage” market survey include:

Worldwide spending on security-related hardware, software, and services is forecast to reach $133.7 billion in 2022, according to a new update to the Worldwide Semiannual Security Spending Guide from International Data Corporation (IDC). Although spending growth is expected to gradually slow over the 2017-2022 forecast period, the market will still deliver a compound annual growth rate (CAGR) of 9.9%. As a result, security spending in 2022 will be 45% greater than the $92.1 billion forecast for 2018.
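The relationship between the two totals quoted above can be checked with a few lines of arithmetic. The following is a minimal sketch in Python, not IDC's methodology: the function names are illustrative, and the four-year span (2018 to 2022) is an assumption chosen because the 2017 base figure is not given here. It therefore yields an implied 2018-2022 rate, which need not match the 9.9% CAGR that IDC quotes for the full 2017-2022 period.

```python
# Figures quoted in the text, in billions of US dollars.
SPEND_2018 = 92.1
SPEND_2022 = 133.7


def total_growth(start: float, end: float) -> float:
    """Overall fractional growth from start to end (0.25 means 25%)."""
    return end / start - 1.0


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate over the given number of yearly steps."""
    return (end / start) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # Overall increase from the 2018 forecast to the 2022 forecast.
    print(f"Total growth 2018-2022: {total_growth(SPEND_2018, SPEND_2022):.1%}")
    # Assumption: four compounding steps between 2018 and 2022.
    print(f"Implied annual rate:    {cagr(SPEND_2018, SPEND_2022, 4):.1%}")
```

Run as written, the first figure should come out at roughly 45%, in line with the text, and the second at a little under 10% a year.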

"Privacy has grabbed the attention of Boards of Directors as regions look to implement privacy regulation and compliance standards similar to GDPR. Frankly, privacy is the new buzz word and the potential impact is very real. The result is that demand to comply with such standards will continue to buoy security spending for the foreseeable future," said Frank Dickson, research vice president, Security Products.

Security-related services will be both the largest ($40.2 billion in 2018) and the fastest growing (11.9% CAGR) category of worldwide security spending. Managed security services will be the largest segment within the services category, delivering nearly 50% of the category total in 2022. Integration services and consulting services will be responsible for most of the remainder. Security software is the second-largest category with spending expected to total $34.4 billion in 2018. Endpoint security software will be the largest software segment throughout the forecast period, followed by identity and access management software and security and vulnerability management software. The latter will be the fastest growing software segment with a CAGR of 10.7%. Hardware spending will be led by unified threat management solutions, followed by firewall and content management.

Banking will be the industry making the largest investment in security solutions, growing from $10.5 billion in 2018 to $16.0 billion in 2022. Security-related services, led by managed security services, will account for more than half of the industry's spend throughout the forecast. The second and third largest industries, discrete manufacturing and federal/central government ($8.9 billion and $7.8 billion in 2018, respectively), will follow a similar pattern with services representing roughly half of each industry's total spending. The industries that will see the fastest growth in security spending will be telecommunications (13.1% CAGR), state/local government (12.3% CAGR), and the resource industry (11.8% CAGR).

"Security remains an investment priority in every industry as companies seek to protect themselves from large scale cyber attacks and to meet expanding regulatory requirements," said Eileen Smith, program director, Customer Insights and Analysis. "While security services are an important part of this investment strategy, companies are also investing in the infrastructure and applications needed to meet the challenges of a steadily evolving threat environment."

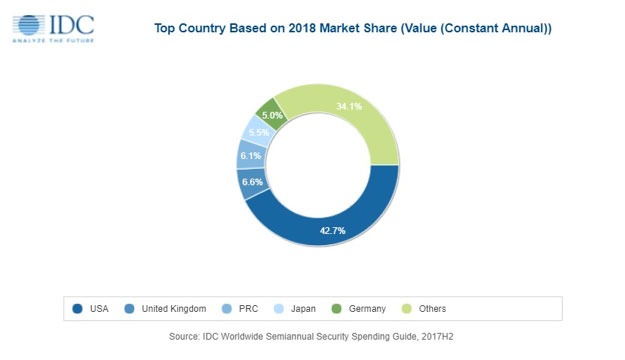

The United States will be the largest geographic market for security solutions with total spending of $39.3 billion this year. The United Kingdom will be the second largest geographic market in 2018 at $6.1 billion followed by China ($5.6 billion), Japan ($5.1 billion), and Germany ($4.6 billion). The leading industries for security spending in the U.S. will be discrete manufacturing and the federal/central government. In the UK, banking and discrete manufacturing will deliver the largest security spending while telecommunications and banking will be the leading industries in China. China will see the strongest spending growth with a five-year CAGR of 26.6%. Malaysia and Singapore will be the second and third fastest growing regions with CAGRs of 21.1% and 18.2%, respectively.

From a company size perspective, large and very large businesses (those with more than 500 employees) will be responsible for nearly two thirds of all security-related spending in 2018. Large (500-999 employees) and medium businesses (100-499 employees) will see the strongest spending growth over the forecast, with CAGRs of 11.8% and 10.0% respectively. However, very large businesses (more than 1,000 employees) will grow nearly as fast with a five-year CAGR of 10.1%. Small businesses (10-99 employees) will also experience solid growth (8.9% CAGR) with spending expected to be more than $8.0 billion in 2018.

CA Veracode has released the latest State of Software Security (SOSS) report. The study includes promising signs that DevSecOps is facilitating better security and efficiency, and provides the industry with the company’s first look at flaw persistence analysis, which measures the longevity of flaws after first discovery.

In every industry, organisations are dealing with a massive volume of open flaws to address, and they are showing improvement in taking action against what they find. According to the report, 69 percent of flaws discovered were closed through remediation or mitigation, an increase of nearly 12 percent since the previous report. This shows organisations are gaining prowess in closing newly discovered vulnerabilities, which hackers often seek to exploit.

Despite this progress, the new SOSS report also shows that the number of vulnerable apps remains staggeringly high, and open source components continue to present significant risks to businesses. More than 85 percent of all applications contain at least one vulnerability following the first scan, and more than 13 percent of applications contain at least one very high severity flaw. In addition, organisations’ latest scan results indicate that one in three applications were vulnerable to attack through high or very high severity flaws.

An examination of fix rates across 2 trillion lines of code shows that companies face extended application risk exposure due to persisting flaws:

“Security-minded organisations have recognised that embedding security design and testing directly into the continuous software delivery cycle is essential to achieving the DevSecOps principles of balance of speed, flexibility and risk management. Until now, it’s been challenging to pinpoint the benefits of this approach, but this latest State of Software Security report provides hard evidence that organisations with more frequent scans are fixing flaws more quickly,” said Chris Eng, Vice President of Research, CA Veracode. “These incremental improvements amount over time to a significant advantage in competitiveness in the market and a huge drop in risk associated with vulnerabilities.”

Regional Differences in Flaw Persistence

While data from U.S. organisations dominate the sample size, this year’s report offers insights into differences by region in how quickly vulnerabilities are being addressed.

The UK was among the strongest performing regions: businesses here closed the first 25 percent of their flaws in just 11 days, second fastest among all regions, closed 50 percent of flaws in 72 days and closed 75 percent of flaws in 304 days. These marks outpaced averages across regions. Companies in Asia Pacific (APAC) are the quickest to remediate, closing out 25 percent of their flaws in about 8 days, followed by 22 days for the Americas and 28 days for those in Europe and the Middle East (EMEA). However, companies in the U.S. and the Americas caught up, closing out 75 percent of flaws by 413 days, far ahead of those in APAC and EMEA. In fact, it took more than double the average time for EMEA organisations to close out three-quarters of their open vulnerabilities. Troublingly, 25 percent of vulnerabilities in organisations in EMEA persisted more than two-and-a-half years after discovery.

Data Supports DevSecOps Practices

In its third consecutive year documenting DevSecOps practices, the SOSS analysis shows a strong correlation between high rates of security scanning and lower long-term application risks, presenting significant evidence for the efficacy of DevSecOps. CA Veracode’s data on flaw persistence shows that organisations with established DevSecOps programs and practices greatly outperform their peers in how quickly they address flaws. The most active DevSecOps programs fix flaws more than 11.5 times faster than the typical organisation, due to ongoing security checks during continuous delivery of software builds, largely the result of increased code scanning. The data shows a very strong correlation between how many times a year an organisation scans and how quickly they address their vulnerabilities.

Open Source Components Continue to Thwart Enterprises

In prior SOSS reports, data has shown that vulnerable open source software components run rampant within most software. The current SOSS report found that most applications were still rife with flawed components, though there has been some improvement on the Java front. Whereas last year about 88 percent of Java applications had at least one vulnerability in a component, it fell to just over 77 percent in this report. As organisations tackle bug-ridden components, they should consider not just the open flaws within libraries and frameworks, but also how they are using those components. By understanding not just the status of the component, but whether or not a vulnerable method is being called, organisations can pinpoint their component risk and prioritise fixes based on the riskiest uses of components.

72 per cent recognise the benefits of analytics but only 39 per cent use it to inform strategy.

Nearly three-quarters of organisations (72 per cent) claim that analytics helps them generate valuable insight and 60 per cent say their analytics resources have made them more innovative, according to research commissioned by SAS, the leader in analytics.

That is despite only four in 10 (39 per cent) saying that analytics is core to their business strategy. A third of respondents (35 per cent) report that it is used for tactical projects only. Despite acknowledged value – and most (65 per cent) can quantify this – businesses are not getting the most out of their analytics investments.

However, they are now pursuing rapid analytical insight as a priority as they push into emerging technologies like artificial intelligence (AI) and the internet of things (IoT).

The research, “Here and Now: The need for an analytics platform”, surveyed analytics experts, and IT and line-of-business professionals in a wide range of industries around the world. It found that analytics is changing the way companies do business. This does not just apply to day-to-day operations as it’s also driving innovation – more than a quarter (27 per cent) say analytics has helped launch new business models. There are many identified benefits of an analytics platform, the most common being less time spent on data preparation (46 per cent), smarter and more confident decision-making (42 per cent) and faster time-to-insights (41 per cent).

“The findings show a strong desire in the business community to boost competitive insight and efficiency using analytics,” said Adrian Jones, Director of SAS’ Global Technology Practice. “The majority recognise that effective analytics could benefit their organisations, particularly as they develop their ability to deploy cutting-edge AI. But the number of those effectively using analytics strategically across the organisation could be much higher.”

The survey underscored a lack of alignment in the skills and leadership needed to maximise the potential of analytics. Many companies struggle to manage multiple analytics tools and data management processes.

“If they are to achieve success, organisations must put analytics at the heart of strategic planning and empower analytics resources to drive innovation using a unified analytics platform,” said Jones.

Views differ on the role of an analytics platform: most (61 per cent) believe it’s to extract insight and value from data, but many are split on its other purposes or benefits, such as better governance over data, predictive models and open source technology. Fifty-nine per cent believe another role of an analytics platform is to have an integrated or centralised data framework, while 43 per cent believe it’s to provide modelling and algorithms for AI and machine learning.

The responses suggest companies know analytics can help them, but they lack a clear and common understanding of the benefits of using a platform approach across the enterprise and the analytics lifecycle. It would explain why few organisations have a suitable platform in place according to results from SAS’ Enterprise AI Promise Study announced at Analytics Experience Amsterdam last year. This revealed only a quarter (24 per cent) of businesses felt they had the right infrastructure in place for AI, while the majority (53 per cent) felt they either needed to update and adapt their current platform or had no specific platform in place to address AI.

Despite the wide variety of uses for analytics, confidence in the end result is high. Respondents on average have 70 per cent confidence that they can derive business value from their data through analytics. Those that invest in data science talent are more likely to see ROI: confidence rises to 72 per cent for those in analytics roles but drops to 65 per cent for standard IT teams.

The same is true when considering the future. Analytics teams are more confident (66 per cent) of their ability to scale to meet future analytics workloads, compared to those in standard IT roles (59 per cent).

“When we speak with business leaders who are scaling up to use analytics and AI strategically, challenges they commonly identify are the need for an enterprise analytics platform and access to talent with data science and analytics skills,” said Randy Guard, Executive Vice President and Chief Marketing Officer at SAS.

“With AI now top-of-mind for many organisations, it’s more important than ever to have a powerful, streamlined analytics capability,” said Guard. “AI can only be as effective as the analytics behind it, and as analytical workloads increase, a comprehensive platform strategy is the best way to ensure success at scale.”

Today's announcement was made at the Analytics Experience conference in Milan, a business technology conference presented by SAS that brings together thousands of attendees on-site and online to share ideas on critical business issues.

As companies aggressively invest in a future driven by intelligence – rather than just more analytics – business and IT decision-makers are increasingly frustrated by the complexity, bottlenecks and uncertainty of today’s enterprise analytics, according to a survey of senior leaders at enterprise-sized organizations from around the world.

The survey, conducted by independent technology market research firm Vanson Bourne on behalf of Teradata, found significant roadblocks for enterprises looking to use intelligence across the organization. Many senior leaders agree that, while they are buying analytics, those investments aren’t necessarily resulting in the answers they are seeking. They cited three foundational challenges to making analytics more pervasive in their organization:

1) Analytics technology is too complex: Just under three quarters (74 percent) of senior leaders said their organization’s analytics technology is complex, with 42 percent of those saying analytics is not easy for their employees to use and understand.

2) Users don’t have access to all the data they need: 79 percent of respondents said they need access to more company data to do their job effectively.

3) “Unicorn” data scientists are a bottleneck: Only 25 percent said that, within their global enterprise, business decision-makers have the skills to access and use intelligence from analytics without the need for data scientists.

“The largest and most well-known companies in the world have collectively invested billions of dollars in analytics, but all that time and money spent has been met with mediocre results,” said Martyn Etherington, Teradata’s Chief Marketing Officer. “Companies want pervasive data intelligence, encompassing all the data, all the time, to find answers to their toughest challenges. They are not achieving this today, thus the frustration with analytics is palpable.”

Overly Complex Analytics Technology

The explosion of technologies for collecting, storing and analyzing data in recent years has added a significant level of often paralyzing complexity. The primary reason, cited in the survey, is that generally technology vendors don’t spend enough time making their products easy for all employees to use and understand; this problem is further exacerbated by the recent surge and adoption of open source tools.

- About three quarters (74 percent) of respondents whose organization currently invests in analytics said that the analytics technology is complex.
- Nearly one out of three (31 percent) say that not being able to use analytics across the whole business is a negative impact of this complexity.
- Nearly half (46 percent) say that analytics isn’t really driving the business because there are too many questions and not enough answers.
- Over half (53 percent) agree that their organization is actually overburdened by the complexity of analytics.
- One of the main drivers of this complexity is that the technology isn’t easy for all employees to use/understand (42 percent).

Limited Access to Data

The survey further found that users need access to more data to do their jobs effectively. Decision-makers and users understand that more data often leads to better decisions, but too often, a lack of access to all the necessary data is a significant limiting factor for analytics success. According to the survey, decision-makers are missing nearly a third of the information they need to make informed decisions, on average – an unacceptable gap that can mean the difference between market leadership and failure.

- 79 percent of senior leaders said they need access to more company data to do their job effectively.
  - On average, respondents said they are missing nearly a third (28 percent) of the data they need to do their job effectively.
- 81 percent agree that they would like analytics to be more pervasive in their organization.
- More than half (54 percent) of respondents said their organization’s IT department is using analytics, compared to under a quarter (23 percent) who said that C-suite and board level are doing so.

Not Enough Data Scientists

Finally, “unicorn” data scientists remain a bottleneck, preventing pervasive intelligence across the organization. Respondents see this as an issue and connect the problem to the challenge of using complex technologies. To combat it, the vast majority say that they are investing, or plan to invest, in easier-to-use technology, as well as in training to enhance the skills of users.

- Only 25 percent of companies said their business decision-makers have the skills to access and use intelligence from analytics without the need for data scientists.
- Nearly two thirds (63 percent) of respondents from organizations that currently invest in analytics agreed that it is difficult for non-analytics workers to consume analytics within their organization.
- In 75 percent of respondents’ companies, data scientists are needed to help business decision-makers extract intelligence from analytics.
- To reduce this over-reliance on data scientists, 94 percent of respondents’ businesses where data scientists are currently needed are investing, or plan to invest, in training to enhance the skills of users; while 91 percent are investing, or plan to invest, in easier-to-use technology.

Automation enables agility, increases speed, and drives top-line revenue while freeing up resources and budgets to focus on more strategic tasks

CA Technologies has published the results from its landmark research conducted by analyst firm Enterprise Management Associates (EMA), “The State of Automation”. The study reveals the global state of automation maturity across organisations, highlights the increasing adoption of automation to drive business success, and demonstrates that automation is becoming the backbone for modern business. Organisations who do not embrace modern business automation will flounder and struggle to survive.

Automation drives agility, speed & revenue

The report illustrates automation’s impact on businesses’ strategies. These results provide business decision makers with a real-world understanding of the rate at which their industries are becoming automated, and its overall effect on productivity and revenue growth.

According to the report:

The successful implementation of automation also varies widely across industries, with retail showing the highest level of maturity (70 percent) and manufacturing the least mature (35 percent).

Anticipating Automation’s Future with Artificial Intelligence and Machine Learning

Businesses believe that artificial intelligence (AI) will only further increase automation’s value to their processes:

“As companies continue to embrace AI and automation, it’s imperative that different industries and departments understand their respective priorities and bottlenecks in adopting and implementing these technologies,” said Dan Twing, president and COO at EMA.

Additional key findings from the report include:

The research also indicates that many organisations have struggled with a large number of task-specific tools, and many others have the benefit of the experience of those who have gone before them as they start down the path to automation. Participants stated that they are looking to broader, multipurpose automation tools, such as workload automation (WLA) on the IT side of the house, or robotic process automation (RPA) for business processes. Both approaches are broad, and while WLA is generally thought of as an IT operations tool, the research demonstrates that it is now increasingly being applied to business processes.

The RPA Conundrum

According to the research, many participants are using RPA on a limited basis for some IT process automation. While 88 percent of respondents have deployed RPA, or plan to do so, usage is very low, with most organizations having fewer than 100 bots. Thirty-five percent of respondents believe RPA will become more important as it becomes AI-enabled.

Responding to this, CA Technologies is the only vendor that supports clients as they work to make their bots smarter and keep up with the speed of the business, which requires continuous updates. With new integrations with leading RPA vendors, CA Continuous Delivery gives customers the business agility to deploy bots faster into production.

“This research shows that business automation is clearly recognised as a key enabler for business success. Organisations that fail to embrace modern automation solutions risk becoming industry laggards. Never has the phrase ‘Automate or Die’ become more relevant,” said Kurt Sand, general manager, CA Technologies. “CA offers enterprises the reliable, secure solutions needed to enable a business to become efficiently automated.”

European spending on mobility solutions is forecast to reach $293 billion in 2018, according to a new update to the Worldwide Semiannual Mobility Spending Guide from International Data Corporation (IDC). IDC expects the market to post a five-year compound annual growth rate (CAGR) of 2.4%, leading to spending in excess of $325 billion in 2022.
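For readers who want to check how a compound annual growth rate relates two spending figures, the following is a minimal sketch of the standard CAGR arithmetic applied to the 2018 and 2022 numbers quoted above. The function names are illustrative, and the assumption that four compounding periods separate the two years is ours, not IDC's.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Constant annual growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Compound start_value forward at a constant annual rate."""
    return start_value * (1 + rate) ** years


# Figures quoted in the text, in $ billion.
spend_2018 = 293.0
spend_2022 = 325.0

# Assumption: four compounding periods separate 2018 and 2022.
implied_rate = cagr(spend_2018, spend_2022, 4)
print(f"Implied 2018-2022 growth rate: {implied_rate:.1%}")

# Round trip: projecting forward at the implied rate recovers the end value.
projected = project(spend_2018, implied_rate, 4)
print(f"Projected 2022 spending: ${projected:.0f} billion")
```

The rate implied by these two endpoints need not equal the quoted five-year figure, since that figure presumably covers 2017 to 2022 and the 2022 value is given only as a lower bound.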

Western Europe (WE) accounted for 78% of total mobility spending in 2017 and will remain by far the largest contributor in the wider European region, with a CAGR of 2.7% for the 2017–2022 period. At the same time, mobility expenditure in Central and Eastern Europe (CEE) will grow at 1.2%.

Mobility plays a central role in enterprises' digital strategies for an agile business, increased competitiveness, and greater customer engagement. New mobility use cases and technology adoption among enterprises are driving growth in all market segments, from devices to software and services. Nevertheless, security and compliance are top issues for large mobility projects. According to Dusanka Radonicic, senior research analyst with the Telecoms & Networking Group in IDC Central and Eastern Europe, Middle East, and Africa (CEMA), increased data usage is driving growth in mobile business services spending, with business-specific applications dependent on high-speed data connectivity.

Consumers will account for around 65% of European mobility spending throughout the forecast, with slightly more than half of this amount going toward mobile connectivity services, and most of the remainder going toward devices, mainly smartphones.

Banking is forecast to be the European industry leader for mobility spending in 2018, followed by discrete manufacturing and professional services. More than half of mobility spending among businesses will go to mobile connectivity services and enterprise mobility services. Mobile devices will be the second-largest area of spending, followed by still considerable investments in mobile applications and mobile application development platforms. The industries that will see the largest mobility spending growth over the forecast period are utilities, state/local government and banking, with CAGRs above 6%.

From a technology perspective, mobility services will be the largest area of spending throughout the forecast period, surpassing $190 billion in 2022. Within the services component, mobile connectivity services will continue to represent the largest portion, albeit with relatively flat growth, while enterprise mobility services will post a CAGR exceeding 14%. Hardware will be the second largest technology category, with spending forecast to reach $129 billion in 2022. Despite being the smallest technology category, software will see strong spending growth over the forecast period (12.1% CAGR), driven by mobile enterprise applications. Businesses will also increase their development efforts with mobile application development platforms recording a five-year CAGR of 18.4%, making it the fastest-growing technology category overall. Enterprise mobility management and mobile enterprise security are the most mature segments in the software category, with expected CAGRs of approximately 8% reflecting that status.

Western Europe represents more than three-quarters of the European market and is forecast to account for approximately 79% of total value in 2022. "Western European companies now have a clear understanding of the benefit mobility technologies can deliver and are evolving their approach — focusing on applications integrated with enterprise systems," says Gabriele Roberti, research manager for IDC EMEA Customer Insights and Analysis. "In customer-centric industries, like financial services and retail, mobility is becoming a pivotal part of digital transformation." Among Western European countries, the United Kingdom, Germany, and France remain the top spenders on mobility technologies, accounting for more than half of the regional market.

“Central and Eastern European companies are adapting their business models to integrate mobility technologies, seeking more flexible company structures to foster innovation, improving the infrastructure and connectivity, and mitigating the security concerns,” says Petr Vojtisek, research analyst for IDC CEMA Customer Insights and Analysis. Among CEE countries, Russia remains the single biggest market, accounting for over 38% of overall CEE mobility spending, followed by the cluster of countries referred to as the Rest of CEE (Slovakia, Ukraine, and Croatia), and by Poland. The fastest-growing countries will jointly be the Czech Republic and Romania, each with a spending CAGR of around 1.8% throughout the forecast period.

Pan-EU survey results point to increased appetite to shift physical security systems to the cloud to access big data insights and meet business objectives more readily.

A new study of 1,500 IT decision makers across Europe into the attitudes towards and behaviours behind cloud adoption has provided some revelatory insight that will serve the physical security industry. The survey, conducted by Morphean, a Video Surveillance-as-a-Service (VSaaS) innovator, highlights not only a favourable shift towards cloud but also a need to adopt technology to extract the intelligent insights needed to accelerate business growth.

Respondents from organisations with 25 employees and above from the UK, France and Germany were asked to share their views on cloud technologies, cloud security, future cloud investment and new areas of cloud growth. The results showed that while nearly 9 out of 10 businesses surveyed are already using cloud-based software solutions, 89% of respondents would possibly or definitely move physical security technology such as video surveillance and access control to the cloud. Furthermore, 92% felt it to be important or very important that their physical security solutions meet their overall business objectives.

Key survey findings include:

International Data Corporation's (IDC) EMEA Server Tracker shows that in the second quarter of 2018, the EMEA server market reported a year-on-year (YoY) increase in vendor revenues of 28.9% to $4.1 billion, with a YoY decline of 3.7% in units shipped to 515,000.

From a euro standpoint, 2Q18 EMEA server revenues increased 18.7% YoY to €3.4 billion. The top 5 vendors in the region and their quarterly revenues are displayed in the table below.

Top 5 EMEA Vendor Revenues ($M)

| Vendor | 2Q17 Server Revenue | 2Q17 Market Share | 2Q18 Server Revenue | 2Q18 Market Share | 2Q17/2Q18 Revenue Growth |
|---|---|---|---|---|---|
| HPE | 1,031.5 | 32.5% | 1,162.6 | 28.4% | 12.7% |
| Dell EMC | 599.9 | 18.9% | 916.3 | 22.4% | 52.7% |
| ODM Direct | 381.5 | 12.0% | 442.8 | 10.8% | 16.1% |
| IBM | 195.9 | 6.2% | 387.3 | 9.5% | 97.7% |
| Lenovo | 226.8 | 7.1% | 330.2 | 8.1% | 45.6% |
| Others | 739.0 | 23.3% | 853.1 | 20.8% | 15.4% |
| Total | 3,174.5 | 100.0% | 4,092.3 | 100.0% | 28.9% |

Source: IDC Quarterly Server Tracker, 2Q18
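The market-share and growth columns in the table can be reproduced from the revenue columns alone. The sketch below shows the arithmetic; the dictionary layout and function names are our own, and only the revenue figures come from the table.

```python
# Vendor revenues in $M as (2Q17, 2Q18), copied from the table above.
revenue = {
    "HPE": (1031.5, 1162.6),
    "Dell EMC": (599.9, 916.3),
    "ODM Direct": (381.5, 442.8),
    "IBM": (195.9, 387.3),
    "Lenovo": (226.8, 330.2),
    "Others": (739.0, 853.1),
}


def yoy_growth(previous: float, current: float) -> float:
    """Year-on-year growth as a percentage."""
    return (current - previous) / previous * 100


def market_share(part: float, total: float) -> float:
    """Share of the total as a percentage."""
    return part / total * 100


total_2q17 = sum(prev for prev, _ in revenue.values())
total_2q18 = sum(curr for _, curr in revenue.values())

for vendor, (prev, curr) in revenue.items():
    print(
        f"{vendor:<11}"
        f"share {market_share(curr, total_2q18):5.1f}%  "
        f"growth {yoy_growth(prev, curr):5.1f}%"
    )

print(f"Total growth {yoy_growth(total_2q17, total_2q18):.1f}%")
```

Summing the rounded vendor figures can differ from the published total by a rounding step, so small discrepancies in the last decimal place are expected.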

Reviewing the quarter at a product level, standard rack optimized grew 29.0% YoY, led by a strong performance in the U.K. and Germany. Standard multinode shipments grew 251.4% YoY, driven largely by the U.K., Germany, and the Netherlands. Custom multinode revenues remained strong, increasing 87.3% YoY. Higher average selling prices across standard and custom servers have driven improved revenues over the quarter. Large systems also performed well, with shipments increasing 48.6% YoY due to major refreshes in Denmark, Italy, France, and Germany.

"The second quarter of 2018 saw the first shipments of EPYC processors in Western Europe. Expectations are that these processors will align more closely to Moore's Law, a factor that will only drive faster adoption in the future," said Eckhardt Fischer, senior research analyst, IDC Western Europe.

"ODM growth has been driven by datacenter buildout of several hyperscale public cloud providers — AWS, Microsoft, Google — in Western Europe. This growth has slowed down somewhat in recent quarters," said Kamil Gregor, senior research analyst, IDC Western Europe. "France is the major exception, with both AWS and Microsoft opening new datacenters around Paris and Marseille. The hyperscalers now focus on diversifying the portfolio of EMEA countries with datacenter presence, which will translate into significant ODM growth in new geographies such as Austria."

Regional Highlights

Segmenting market performance at a Western European level, many major countries experienced overall shipment declines accompanied by significant increases in average selling prices. As Western Europe's largest market during the quarter, Germany experienced a 14.0% decline in shipments with a 23.2% increase in revenues. The U.K. saw an 8.7% shipment decline and a 29.9% revenue increase, which helped propel overall Western European revenues over the second quarter. U.K. growth was driven by revenue increases for all top 3 vendors, particularly in standard rack optimized and blade performance. Ireland saw a significant revenue boost from a large hyperscale deal, which drove standard multinode revenues to $28.5 million. Spain was the only Western European country to see a revenue decline over the year, due to a major multinode deal in 2Q17 that significantly impacted the country's total spend.

"Central and Eastern Europe, the Middle East, and Africa server revenue increased by 23.3% year over year to $790.1 million in the second quarter of 2018," said Jiri Helebrand, research manager, IDC CEMA. "Strong server sales were primarily the result of undergoing refresh cycles and ODM server shipments. The Central and Eastern Europe subregion grew 33.7% year over year with revenue of $413.7 million. Romania, Poland, and Czech Republic recorded the strongest growth, supported by a solid economic situation in the region and interest in investing to update datacenter infrastructure. The Middle East and Africa subregion grew 13.6% year over year to $376.5 million in 2Q18, somewhat slower compared with WE and CEE, partly due to seasonality and challenges due to weakening local currencies. Qatar was the top performer, benefitting from a large public deal. South Africa and Egypt also recorded solid growth driven by investments in new IT infrastructure to support next-generation applications."

Taxonomy Changes

Modular server category: Server form factors have been amended to include the new "modular" category that encompasses today's blade servers and density-optimized servers (which are being renamed multinode servers). As the differentiation between these two types of servers continues to become blurred, IDC is moving forward with the "modular server" category as it better reflects the directions in which vendors and the entire market are moving when it comes to server design.

Multinode (density-optimized) servers: Modular platforms that do not meet IDC's definition of a blade are classified as multinode. This was formerly called density optimized in IDC's server research and server-related tracker products.

Analyst firm Gartner has been busy in recent weeks, producing a range of reports around its annual Gartner Symposium/ITxpo. Technology trends, possible digital disruptions and predictions all come under the spotlight.

Gartner has highlighted the top strategic technology trends that organizations need to explore in 2019. Gartner defines a strategic technology trend as one with substantial disruptive potential that is beginning to break out of an emerging state into broader impact and use, or one that is growing rapidly with a high degree of volatility and is expected to reach a tipping point over the next five years.

“The Intelligent Digital Mesh has been a consistent theme for the past two years and continues as a major driver through 2019. Trends under each of these three themes are a key ingredient in driving a continuous innovation process as part of a ContinuousNEXT strategy,” said David Cearley, vice president and Gartner Fellow. “For example, artificial intelligence (AI) in the form of automated things and augmented intelligence is being used together with IoT, edge computing and digital twins to deliver highly integrated smart spaces. This combinatorial effect of multiple trends coalescing to produce new opportunities and drive new disruption is a hallmark of the Gartner top 10 strategic technology trends for 2019.”

The top 10 strategic technology trends for 2019 are:

Autonomous Things

Autonomous things, such as robots, drones and autonomous vehicles, use AI to automate functions previously performed by humans. Their automation goes beyond the automation provided by rigid programming models and they exploit AI to deliver advanced behaviors that interact more naturally with their surroundings and with people.

“As autonomous things proliferate, we expect a shift from stand-alone intelligent things to a swarm of collaborative intelligent things, with multiple devices working together, either independently of people or with human input,” said Mr. Cearley. “For example, if a drone examined a large field and found that it was ready for harvesting, it could dispatch an ‘autonomous harvester.’ Or in the delivery market, the most effective solution may be to use an autonomous vehicle to move packages to the target area. Robots and drones on board the vehicle could then ensure final delivery of the package.”

Augmented Analytics

Augmented analytics focuses on a specific area of augmented intelligence, using machine learning (ML) to transform how analytics content is developed, consumed and shared. Augmented analytics capabilities will advance rapidly to mainstream adoption, as a key feature of data preparation, data management, modern analytics, business process management, process mining and data science platforms. Automated insights from augmented analytics will also be embedded in enterprise applications — for example, those of the HR, finance, sales, marketing, customer service, procurement and asset management departments — to optimize the decisions and actions of all employees within their context, not just those of analysts and data scientists. Augmented analytics automates the process of data preparation, insight generation and insight visualization, eliminating the need for professional data scientists in many situations.

“This will lead to citizen data science, an emerging set of capabilities and practices that enables users whose main job is outside the field of statistics and analytics to extract predictive and prescriptive insights from data,” said Mr. Cearley. “Through 2020, the number of citizen data scientists will grow five times faster than the number of expert data scientists. Organizations can use citizen data scientists to fill the data science and machine learning talent gap caused by the shortage and high cost of data scientists.”

AI-Driven Development

The market is rapidly shifting from an approach in which professional data scientists must partner with application developers to create most AI-enhanced solutions to a model in which the professional developer can operate alone using predefined models delivered as a service. This provides the developer with an ecosystem of AI algorithms and models, as well as development tools tailored to integrating AI capabilities and models into a solution. Another level of opportunity for professional application development arises as AI is applied to the development process itself to automate various data science, application development and testing functions. By 2022, at least 40 percent of new application development projects will have AI co-developers on their team.

“Ultimately, highly advanced AI-powered development environments automating both functional and nonfunctional aspects of applications will give rise to a new age of the ‘citizen application developer’ where nonprofessionals will be able to use AI-driven tools to automatically generate new solutions. Tools that enable nonprofessionals to generate applications without coding are not new, but we expect that AI-powered systems will drive a new level of flexibility,” said Mr. Cearley.

Digital Twins

A digital twin refers to the digital representation of a real-world entity or system. By 2020, Gartner estimates there will be more than 20 billion connected sensors and endpoints and digital twins will exist for potentially billions of things. Organizations will implement digital twins simply at first. They will evolve them over time, improving their ability to collect and visualize the right data, apply the right analytics and rules, and respond effectively to business objectives.

“One aspect of the digital twin evolution that moves beyond IoT will be enterprises implementing digital twins of their organizations (DTOs). A DTO is a dynamic software model that relies on operational or other data to understand how an organization operationalizes its business model, connects with its current state, deploys resources and responds to changes to deliver expected customer value,” said Mr. Cearley. “DTOs help drive efficiencies in business processes, as well as create more flexible, dynamic and responsive processes that can potentially react to changing conditions automatically.”

Empowered Edge

The edge refers to endpoint devices used by people or embedded in the world around us. Edge computing describes a computing topology in which information processing, and content collection and delivery, are placed closer to these endpoints. It tries to keep the traffic and processing local, with the goal being to reduce traffic and latency.

In the near term, edge is being driven by IoT and the need to keep the processing close to the end rather than on a centralized cloud server. However, rather than create a new architecture, cloud computing and edge computing will evolve as complementary models, with cloud services being managed as a centralized service executing not only on centralized servers, but also in distributed servers on-premises and on the edge devices themselves.

Over the next five years, specialized AI chips, along with greater processing power, storage and other advanced capabilities, will be added to a wider array of edge devices. The extreme heterogeneity of this embedded IoT world and the long life cycles of assets such as industrial systems will create significant management challenges. Longer term, as 5G matures, the expanding edge computing environment will have more robust communication back to centralized services. 5G provides lower latency, higher bandwidth, and (very importantly for edge) a dramatic increase in the number of nodes (edge endpoints) per square km.

Immersive Experience

Conversational platforms are changing the way in which people interact with the digital world. Virtual reality (VR), augmented reality (AR) and mixed reality (MR) are changing the way in which people perceive the digital world. This combined shift in perception and interaction models leads to the future immersive user experience.

“Over time, we will shift from thinking about individual devices and fragmented user interface (UI) technologies to a multichannel and multimodal experience. The multimodal experience will connect people with the digital world across hundreds of edge devices that surround them, including traditional computing devices, wearables, automobiles, environmental sensors and consumer appliances,” said Mr. Cearley. “The multichannel experience will use all human senses as well as advanced computer senses (such as heat, humidity and radar) across these multimodal devices. This multiexperience environment will create an ambient experience in which the spaces that surround us define ‘the computer’ rather than the individual devices. In effect, the environment is the computer.”

Blockchain

Blockchain, a type of distributed ledger, promises to reshape industries by enabling trust, providing transparency and reducing friction across business ecosystems, potentially lowering costs, reducing transaction settlement times and improving cash flow. Today, trust is placed in banks, clearinghouses, governments and many other institutions as central authorities with the “single version of the truth” maintained securely in their databases. The centralized trust model adds delays and friction costs (commissions, fees and the time value of money) to transactions. Blockchain provides an alternative trust model and removes the need for central authorities in arbitrating transactions.

“Current blockchain technologies and concepts are immature, poorly understood and unproven in mission-critical, at-scale business operations. This is particularly so with the complex elements that support more sophisticated scenarios,” said Mr. Cearley. “Despite the challenges, the significant potential for disruption means CIOs and IT leaders should begin evaluating blockchain, even if they don’t aggressively adopt the technologies in the next few years.”

Many blockchain initiatives today do not implement all of the attributes of blockchain (for example, a highly distributed database). These blockchain-inspired solutions are positioned as a means to achieve operational efficiency by automating business processes, or by digitizing records. They have the potential to enhance sharing of information among known entities, as well as improving opportunities for tracking and tracing physical and digital assets. However, these approaches miss the value of true blockchain disruption and may increase vendor lock-in. Organizations choosing this option should understand the limitations, be prepared to move to complete blockchain solutions over time, and recognize that the same outcomes may be achieved with more efficient and tuned use of existing nonblockchain technologies.

Smart Spaces

A smart space is a physical or digital environment in which humans and technology-enabled systems interact in increasingly open, connected, coordinated and intelligent ecosystems. Multiple elements — including people, processes, services and things — come together in a smart space to create a more immersive, interactive and automated experience for a target set of people and industry scenarios.

“This trend has been coalescing for some time around elements such as smart cities, digital workplaces, smart homes and connected factories. We believe the market is entering a period of accelerated delivery of robust smart spaces with technology becoming an integral part of our daily lives, whether as employees, customers, consumers, community members or citizens,” said Mr. Cearley.

Digital Ethics and Privacy

Digital ethics and privacy is a growing concern for individuals, organizations and governments. People are increasingly concerned about how their personal information is being used by organizations in both the public and private sector, and the backlash will only increase for organizations that are not proactively addressing these concerns.

“Any discussion on privacy must be grounded in the broader topic of digital ethics and the trust of your customers, constituents and employees. While privacy and security are foundational components in building trust, trust is actually about more than just these components,” said Mr. Cearley. “Trust is the acceptance of the truth of a statement without evidence or investigation. Ultimately an organization’s position on privacy must be driven by its broader position on ethics and trust. Shifting from privacy to ethics moves the conversation beyond ‘are we compliant’ toward ‘are we doing the right thing.’”

Quantum Computing

Quantum computing (QC) is a type of nonclassical computing that operates on the quantum state of subatomic particles (for example, electrons and ions) that represent information as elements denoted as quantum bits (qubits). The parallel execution and exponential scalability of quantum computers mean they excel with problems too complex for a traditional approach or where traditional algorithms would take too long to find a solution. Industries such as automotive, financial, insurance, pharmaceuticals, military and research organizations have the most to gain from the advancements in QC. In the pharmaceutical industry, for example, QC could be used to model molecular interactions at atomic levels to accelerate time to market for new cancer-treating drugs, or QC could accelerate and more accurately predict the interaction of proteins, leading to new pharmaceutical methodologies.

“CIOs and IT leaders should start planning for QC by increasing their understanding of it and how it can apply to real-world business problems. Learn while the technology is still in the emerging state. Identify real-world problems where QC has potential and consider the possible impact on security,” said Mr. Cearley. “But don’t believe the hype that it will revolutionize things in the next few years. Most organizations should learn about and monitor QC through 2022 and perhaps exploit it from 2023 or 2025.”

Digital disruptions

Gartner has also revealed seven digital disruptions that organizations may not be prepared for. These include several categories of disruption, each of which represents a significant potential for new disruptive companies and business models to emerge.

“The single largest challenge facing enterprises and technology providers today is digital disruption,” said Daryl Plummer, vice president and Gartner Fellow. “The virtual nature of digital disruptions makes them much more difficult to deal with than past technology-triggered disruptions. CIOs must work with their business peers to pre-empt digital disruption by becoming experts at recognizing, prioritizing and responding to early indicators.”

Gartner analysts presented seven key digital disruptions CIOs may not see coming during Gartner Symposium/ITxpo.

Quantum Computing

Quantum computing (QC) is a type of nonclassical computing that is based on the quantum state of subatomic particles. Classic computers operate using binary bits where the bit is either 0 or 1, true or false, positive or negative. However, in QC, the bit is referred to as a quantum bit or qubit. Unlike the strictly binary bits of classic computing, qubits can represent 1 or 0 or a superposition of both partly 0 and partly 1 at the same time.

Superposition is what gives quantum computers speed and parallelism, meaning that these computers could theoretically work on millions of computations at once. Further, qubits can be linked with other qubits in a process called entanglement. When combined with superposition, quantum computers could process a massive number of possible outcomes at the same time.
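To make the idea of superposition a little more concrete, here is a minimal sketch using the standard textbook picture of a qubit as a pair of amplitudes. It is an illustration only, not Gartner's model and not a simulation of real hardware, and the function names are hypothetical. It shows two things: an equal superposition gives a 50/50 chance of reading 0 or 1, and the number of amplitudes needed to describe a register doubles with every qubit added.

```python
import math


def measurement_probabilities(amp0: complex, amp1: complex) -> tuple[float, float]:
    """Probabilities of reading 0 or 1 from a qubit with the given amplitudes."""
    norm = abs(amp0) ** 2 + abs(amp1) ** 2  # normalise in case the inputs are not
    return abs(amp0) ** 2 / norm, abs(amp1) ** 2 / norm


def basis_state_count(num_qubits: int) -> int:
    """Number of amplitudes needed to describe a register of num_qubits qubits."""
    return 2 ** num_qubits


# Equal superposition: "partly 0 and partly 1" with the same weight.
equal = 1 / math.sqrt(2)
p0, p1 = measurement_probabilities(equal, equal)
print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")

# The description of the register grows exponentially with its size.
for n in (1, 10, 20):
    print(f"{n} qubits -> {basis_state_count(n)} basis states")
```

Note that a measurement still returns a single outcome, which is one reason quantum speed-ups apply only to particular classes of problem.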

“Today’s data scientists, focused on machine learning (ML), artificial intelligence (AI) and data and analytics, simply cannot address some difficult and complex problems because of the compute limitations of classic computer architectures. Some of these problems could take today’s fastest supercomputers months or even years to run through a series of permutations, making it impractical to attempt,” said Mr. Plummer. “Quantum computers have the potential to run massive amounts of calculations in parallel in seconds. This potential for compute acceleration, as well as the ability to address difficult and complex problems, is what is driving so much interest from CEOs and CIOs in a variety of industries. But we must always be conscious of the hype surrounding the quantum computing model. QC is good for a specific set of problem solutions, not all general-purpose computing.”

Real-Time Language Translation

Real-time language translation could, in effect, fundamentally change communication across the globe. Devices such as translation earbuds and voice and text translation services can perform translation in real time, breaking down language barriers with friends, family, clients and colleagues. This technology could disrupt not only intercultural language barriers but also the work of language translators, as this role may no longer be needed.

“To prepare for this disruption, CIOs should equip employees in international jobs with experimental real-time translators to pilot streamlined communication,” said Mr. Plummer. “This will help establish multilingual disciplines to help employees work more effectively across languages.”

Nanotechnology

Nanotechnology is science, engineering and technology conducted at the nanoscale (1 to 100 nanometers). The implication of this technology is that the creation of solutions involves individual atoms and molecules. Nanotech is used to create new effects in materials science, such as self-healing materials. Applications in medicine, electronics, security and manufacturing herald a world of small solutions that fill in the gaps in the macroverse in which we live.

“Nanotechnology is rapidly becoming as common a concept as many others, and yet still remains sparsely understood in its impact to the world at large,” said Mr. Plummer. “When we consider applications that begin to allow things like 3D printing at nanoscale, then it becomes possible to advance the cause of printed organic materials and even human tissue that is generated from individual stem cells. 3D bioprinting has shown promise and nanotech is helping deliver on it.”

Swarm Intelligence

Digital business will stretch conventional management methods past the breaking point. The enterprise will need to make decisions in real time about unpredictable events, based on information from many different sources (such as Internet of Things [IoT] devices) beyond the organization’s control. Humans move too slowly, stand-alone smart machines cost too much, and hyperscale architectures cannot deal with the variability. Swarm intelligence could tackle the mission at a low cost.

Swarm intelligence is the collective behavior of decentralized, self-organized systems, natural or artificial. A swarm consists of small computing elements (either physical entities or software agents) that follow simple rules for coordinating their activities. Such elements can be replicated quickly and inexpensively. Thus, a swarm can be scaled up and down easily as needs change. CIOs should start exploring the concept to scale management, especially in digital business scenarios.

Human-Machine Interfaces

Human-machine interface (HMI) offers solutions providers the opportunity to differentiate with innovative, multimodal experiences. In addition, people living with disabilities benefit from HMIs that are being adapted to their needs, including some already in use within organizations of all types. Technology will give some of these people “superabilities,” spurring people without disabilities to also employ the technology to keep up.

For example, electromyography (EMG) wearables use sensors that measure muscle activity to let people operate smartphones and computers who would otherwise be unable to do so. Muscular contraction generates electrical signals that can be measured from the skin surface. Sensors may be placed on a single part or multiple parts of the body, as appropriate to the individual. The resulting gestures are in turn interpreted by an HMI linked to another device, such as a PC or smartphone. Wearable devices using myoelectric signals have already hit the consumer market and will continue migrating to devices intended for people with disabilities.

Software Distribution Revolution

Software procurement and acquisition is undergoing a fundamental shift. The way in which software is located, bought and updated is now in the province of the software distribution marketplace. With the continued growth of cloud platforms from Amazon Web Services (AWS), Microsoft, Google, IBM and others, as well as the ever-increasing introduction of cloud-oriented products and services, the role of marketplaces for selling and buying is gathering steam. The cloud platform providers realize (to varying degrees) that they must remove as much friction as possible in the buying and owning processes for both their own offerings and the offerings of their partners, the independent software vendors (ISVs). ISVs and cloud technology service providers (TSPs) recognize the need to reach large and increasingly diverse buying audiences.

“Establishing one’s own marketplace or participating as a provider in a third-party marketplace is a route to market that is becoming increasingly popular. Distributors and other third parties also see the opportunity to create strong ecosystems (and customer bases) while driving efficiencies for partners and technology service providers,” said Mr. Plummer.

Smartphone Disintermediation

The use of other devices, such as virtual personal assistants (VPAs), smartwatches and other wearables, may mean a shift in how people continue to use the smartphone.

“Smartphones are, today, critical for connections and media consumption. However, over time they will become less visible as they stay in pockets and backpacks. Instead, consumers will use a combination of voice-input and VPA technologies and other wearable devices to navigate a store or public space such as an airport or stadium without walking down the street with their eyes glued to a smartphone screen,” said Mr. Plummer.

CIOs and IT leaders should use wearability of a technology as a guiding principle and investigate and pilot wearable solutions to improve worker effectiveness, increase safety, enhance customer experiences and improve employee satisfaction.

Top predictions for IT organisations and users for 2019 and beyond

Gartner has revealed its top predictions for 2019 and beyond. Gartner’s top predictions examine three fundamental effects of continued digital innovation: artificial intelligence (AI) and skills, cultural advancement, and processes becoming products that result from increased digital capabilities and the emergence of continuous conceptual change in technology.

“As the advance of technology concepts continues to outpace the ability of enterprises to keep up, organizations now face the possibility that so much change will increasingly seem chaotic. But chaos does not mean there is no order. The key is that CIOs will need to find their way to identifying practical actions that can be seen within the chaos,” said Daryl Plummer, vice president and Gartner Fellow, Distinguished.

“Continuous change can be made into an asset if an organization sharpens its vision in order to see the future coming ahead of the change that vision heralds. Failing that, there must be a focus on a greater effort to see the need to shift the mindset of the organization. With either of these two methods, practical actions can be found in even the seemingly unrelated predictions of the future.”

Gartner analysts presented the top 10 strategic predictions during Gartner Symposium/ITxpo, which is taking place here through Thursday.

Through 2020, 80 percent of AI projects will remain alchemy, run by wizards whose talents won’t scale widely in the organization.

In the last five years, the increasing popularity of AI techniques has facilitated the proliferation of projects across a wide number of organizations worldwide. However, change is still outpacing the production of competent AI professionals. When it comes to AI techniques, the needed talent is not only technically demanding; a broad range of professionals is required, from mathematically savvy data scientists to inventive data engineers, and from rigorous operations research professionals to shrewd logisticians.

“The large majority of existing AI techniques talents are skilled at cooking a few ingredients, but very few are competent enough to master a few recipes — let alone invent new dishes,” said Mr. Plummer. “Through 2020, a large majority of AI projects will remain craftily prepared in artisan IT kitchens. The premises of a more systematic and effective production will come when organizations stop treating AI as an exotic cuisine and start focusing on business value first.”

By 2023, there will be an 80 percent reduction in missing people in mature markets compared with 2018 due to AI face recognition.

Over the next few years, facial matching and 3D facial imaging will become important elective aspects of capturing data about vulnerable populations, such as children and the elderly or people who are otherwise impaired. Such measures will reduce the number of missing people without adding large numbers of dramatic discoveries in large public crowds, which is the popularly imagined environment. The most important advances will take place with more robust image capture, image library development, image analysis strategy and public acceptance. Additionally, with improved on-device/edge AI capability on cameras, public and private sectors will be able to prefilter necessary image data instead of sending all video streams to the cloud for processing.

By 2023, U.S. emergency department visits will be reduced by 20 million due to enrollment of chronically ill patients in AI-enhanced virtual care.

Clinician shortages, particularly in rural and some urban areas, are driving healthcare providers to look for new approaches to delivering care. In many cases, virtual care has shown it can offer care more conveniently and cost-effectively than conventional face-to-face care. Gartner research shows that successful use of virtual care helps control costs, improves quality of delivery and improves access to care. Without change, the traditionally rigid physical care delivery methods will increasingly render healthcare providers noncompetitive. This transition will not come easily, and will require modification of cultural attitudes and healthcare financial models.

By 2023, 25 percent of organizations will require employees to sign an affidavit to avoid cyberbullying, but 70 percent of these initiatives will fail.

To prevent actions that have a detrimental impact on the organization’s reputation, employers want to strengthen employee behavioral guidelines (such as anti-harassment and discrimination norms) when using social media. Signing an affidavit of agreement to refrain from cyberbullying is a logical next step. Alternatively, legacy code of conduct agreements should be updated to incorporate cyberbullying.

“However, cyberbullying isn’t stopped by signing an agreement; it’s stopped by changing culture,” said Mr. Plummer. “That culture change should include teaching employees how to recognize what cyberbullying is and provide a means of reporting it when they see it. Formulate realistic policies that balance deterrence measures and strict definition, regulation or behavior monitoring. Make sure employees understand why these measures are needed and how they benefit the organization and themselves.”

Through 2022, 75 percent of organizations with frontline decision-making teams reflecting diversity and an inclusive culture will exceed their financial targets.

Business leaders across all functions understand the positive business impact of diversity and inclusion (D&I). A key business requirement currently is the need for better decisions made fast at the lowest level possible, ideally at the frontline. To create inclusive teams, organizations need to move beyond obvious diversity cues such as gender and race, to seek out people with diverse work styles and thought patterns. A final key factor to ensure D&I initiatives directly contribute to business results is to manage scale and employee engagement with them. There are numerous technologies that can significantly enhance the scale and effectiveness of interventions to diagnose the current state of inclusion, develop leaders who foster inclusion and embed inclusion into daily business execution.

By 2021, 75 percent of public blockchains will suffer “privacy poisoning” — inserted personal data that renders the blockchain noncompliant with privacy laws.

Companies that implement blockchain systems without managing privacy issues by design run the risk of storing personal data that can’t be deleted without compromising chain integrity. A public blockchain is a pseudo-anarchic autonomous system, much like the internet. Nobody can sue the internet, or make it accountable for the data being transmitted. Similarly, a public blockchain can’t be made accountable for the content it bears.

Any business operating processes using a public blockchain must maintain a copy of the entire blockchain as part of its systems of record. A public blockchain poisoned with personal data can’t be replaced, anonymized and/or structurally deleted from the shared ledger. Therefore, the business will be unable to reconcile its need to keep records with its obligations to comply with privacy laws.
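To see why personal data can’t simply be deleted without compromising chain integrity, consider the minimal hash-chain sketch below. It is an illustrative toy under simplifying assumptions (no consensus, no signatures, no network), not the data format of any real blockchain; the function names and the sample payloads are hypothetical.

```python
import hashlib
import json

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, payload, prev_hash):
    """Hash a block's contents together with the previous block's hash."""
    body = json.dumps(
        {"index": index, "payload": payload, "prev": prev_hash},
        sort_keys=True,
    )
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def build_chain(payloads):
    """Link each block to its predecessor through the predecessor's hash."""
    chain = []
    prev = GENESIS_PREV
    for i, payload in enumerate(payloads):
        h = block_hash(i, payload, prev)
        chain.append({"index": i, "payload": payload, "prev": prev, "hash": h})
        prev = h
    return chain


def chain_is_valid(chain):
    """Recompute every hash and check every link."""
    prev = GENESIS_PREV
    for block in chain:
        if block["prev"] != prev:
            return False
        expected = block_hash(block["index"], block["payload"], block["prev"])
        if expected != block["hash"]:
            return False
        prev = block["hash"]
    return True


# Hypothetical ledger in which someone has inserted personal data.
chain = build_chain(["order 1001", "name=Jane Doe; passport=X123", "order 1002"])
intact = chain_is_valid(chain)

# Attempt to erase the personal data in the middle block.
chain[1]["payload"] = "[redacted]"
after_redaction = chain_is_valid(chain)

print(intact, after_redaction)
```

Redacting the payload changes the block's contents, so its stored hash no longer matches. Recomputing that hash would in turn break the link from every later block, which is why the record cannot be quietly removed from a shared ledger.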

By 2023, ePrivacy regulations will increase online costs by minimizing the use of “cookies” thus crippling the current internet ad revenue machine.

GDPR and upcoming regulations, including the California Consumer Privacy Act of 2018 and ePrivacy, continue to limit the use of cookies and put greater pressure on what constitutes informed consent. An individual may not be able to simply accept the use of cookies, as they do now, but will have to give explicit consent to what the cookie tracks and how that tracking will be used.

“None of the current nor future legislation will be a 100 percent prohibition on personalized ads. However, the legislation does cripple the current internet advertising infrastructure and the players within,” said Mr. Plummer. “The current ad revenue machine is an intricate overlapping of companies that are able to track individuals, compile personal data, analyze, predict and target advertisements. By interrupting the data flow, as well as causing some use to be illegal, the delicate balance of service and provision, that has been built-up over decades of free use of data, is at the very least, upset.”

Through 2022, a fast path to digital will be converting internal capabilities to external revenue-generating products using cloud economics and flexibility.

For years, internal IT organizations that developed unique capabilities wanted to take them to market to generate value for their organizations, but economic, technical and other issues did not allow this to happen. Cloud infrastructure and cloud services providers change all of these dynamics. Capacity for supporting scale of the application solution is the cloud provider’s responsibility. Market-dominant app stores take over distribution and aspects of marketing. Simpler, accessible cloud tools make the support and enhancement of applications as products easier. Cloud also shifts the impacts on internal financial statements from below the line to above the line areas. As more aggressive companies convert internal processes and data into marketable solutions and start to report digital revenue gains, other organizations will follow suit.

By 2022, companies leveraging the “gatekeeper” position of the digital giants will capture 40 percent global market share, on average, in their industry.

Global market share of the top four firms by industry fell by four percentage points between 2006 and 2014 as European firms, likely weakened and distracted by economic and monetary crises, lost market share to a rising group of emerging market firms, particularly those from China.

“We believe this is about to change as the powerful economics of scale and network effects assert themselves on a global basis. Innovations in digital technology are producing an ever greater density of connections, even more value from the intelligence captured across those connections (such as through machine learning and AI) and greater immediacy of exchange between connections (such as through faster data transmission speeds),” said Mr. Plummer.

But the path to achieving and sustaining a dominant market share position globally is likely to lead to and through one or more of the digital giants (Google, Apple, Facebook, Amazon, Baidu, Alibaba and Tencent) and their ecosystems. These giants already command massive consumer share and have begun to use their “gatekeeper” positions and infrastructure to enter the B2B space as well. They are keenly aware of the trend to connect, are leading the advance into capabilities such as AI, and have aggressively taken actions to invade the “physical world” and make it part of their digital world — a world they control.

Through 2021, social media scandals and security breaches will have effectively zero lasting consumer impact.

The core point of this prediction is that the benefits of using digital technologies outweigh potential, but unknown, future risks. Consumer adoption of digital technologies will continue to grow, and any backlash against organizations that take technology too far will be short term.