The recent Gatwick/Vodafone IT crash – for which I’ve heard conflicting views as to what and who exactly caused the problem (!) – put disaster recovery firmly back in the spotlight. In simple terms, disaster recovery is a glorified insurance policy and, as such, individual companies have to choose what level of cover they a) want and b) can afford, in the same way that we all choose whether to take out home and contents, life, health, car, redundancy, pet and various other insurance policies. Only the very wealthy – whether companies or individuals – can probably afford every type of insurance policy out there, or the very best, most resilient disaster recovery plan. So, choices have to be made as to the type and level of cover that is essential.

In the case of Gatwick, it could be argued that, despite the negative fallout from the IT failure, the airport is unlikely to lose much in the way of business – after all, the UK only has so many international airports, and very few which offer the quantity of flights and destinations which Gatwick does. So, in many cases, customers cannot choose to fly from another airport, and for the carriers, the capacity which Gatwick offers is just not available at virtually any other UK airport.

All of which leads me to suggest that, if the passenger information display boards fail and are replaced by written details, does it really matter?!

The alternative is that Gatwick spends millions (which, it seems, it originally chose not to) on ensuring the complete resilience of its IT infrastructure, such that there is no possibility of a single point of failure existing anywhere whatsoever.

In conclusion, as I’ve written before, there is a type and size of organisation which, although I am sure it does everything it can within reason to ensure a high level of customer service, doesn’t actually have to worry that much if customers feel let down, as these customers have very few, if any, alternatives.

Several years ago, I remember reading an interview with the head of one of the budget airlines where he, more or less, stated, ‘if you’re paying such a low price to fly to Nice and back, can you really complain that much if your luggage doesn’t make it?’! And he’s right. In some industries, where competition is high, customer service, underpinned by reliable, resilient IT, is massively important. In others, where true competition is thin on the ground, good customer service is a nice-to-have, but not an essential.

So, whisper it quietly, when it comes to (re-)assessing your disaster recovery plan, take a minute to understand what might go wrong, what impact this will have on your customers, and then decide how much it’s worth spending on what kind of a solution – and you might just find that you don’t need to spend that much money at all…
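One way to make that trade-off concrete, borrowed from standard risk-management practice rather than from this article, is to compare each option's yearly cost plus its annualised loss expectancy (the loss per outage multiplied by the expected number of outages a year). The sketch below assumes made-up figures; the `DrOption` class, the option names and every number are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DrOption:
    """A hypothetical disaster recovery option (all figures illustrative)."""
    name: str
    annual_cost: float       # what the option costs to run each year
    loss_per_outage: float   # business/customer impact of one outage with this option
    outages_per_year: float  # expected outage frequency with this option in place

    def expected_annual_loss(self) -> float:
        # Annualised loss expectancy: impact per outage x outages per year.
        return self.loss_per_outage * self.outages_per_year

    def total_annual_cost(self) -> float:
        # Spend on the option plus the loss it still leaves you exposed to.
        return self.annual_cost + self.expected_annual_loss()


def cheapest(options):
    """Return the option with the lowest combined spend and expected loss."""
    return min(options, key=lambda option: option.total_annual_cost())


options = [
    DrOption("do nothing", 0, 200_000, 0.5),
    DrOption("manual fallback", 10_000, 50_000, 0.5),
    DrOption("fully redundant", 2_000_000, 50_000, 0.05),
]
for option in options:
    print(f"{option.name:<16} {option.total_annual_cost():>12,.0f}")
print("cheapest:", cheapest(options).name)
```

With these invented numbers a cheap manual fallback wins, which is the point being made above; a business whose customers can easily go elsewhere would put a much higher figure on `loss_per_outage`, and the balance shifts.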

Survey unveils huge differences between knowing how to be digitally effective versus how UK businesses are approaching digital development.

New research has highlighted that, as businesses strive to meet the needs of today’s digitally empowered consumers, there is a gap between businesses knowing what drives digital effectiveness and their current digital practices.

The findings were part of a larger study exploring the opinions and digital practices of UK businesses. It also compared the views of the top performing businesses with those of their mainstream counterparts.1

In the report,

It was quite a different story when the survey asked the same respondents about their actual digital practices.

While 97% believe that user-centricity leads to better outcomes, overall only 65% said that they put user needs at the heart of their development. Comparing top-performing businesses with the mainstream, three-quarters of top performers say they put user needs at the heart of their development, but only half of the mainstream group say the same.

It was a similar story for ‘belief’ and ‘practice’ regarding cross-disciplinary teams, with just over 70% of all respondents implementing this approach (despite nearly all saying it is necessary for success). Again, top-performing companies are slightly more advanced than their mainstream counterparts in their use of multi-disciplinary teams (81% versus 66%).

While 96% of the company respondents agree team learning and reflection is a necessity for digital transformation, half (52%) of organisations don’t take time out to learn and reflect. A mere 40% of mainstream businesses said that their teams take time out to learn and reflect on what they are doing.

Looking at long-term versus short-term goals, while a massive 92% say businesses that concentrate on the long term are likely to be more successful, in practice 53% admit to focusing on short-term targets. Only 35% of the mainstream group said that they focus more on long-term goals than on short-term targets.

Agility is also cited as best practice (88% of all companies think agile approaches are more likely to succeed than traditional ones), but overall only 58% adopt agile methods, a figure that drops to less than half (46%) among mainstream companies. Likewise, only 58% focus on outcomes in development and are happy to change requirements if needed. By contrast, 70% of top-performing businesses adopt agile processes, and three-quarters of them say that they focus on outcomes in development and are happy to change requirements if needed.
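To see where the gap between belief and practice is widest, the overall figures quoted above can simply be subtracted. The sketch below is an illustration, not part of the published report: it uses only the three pairs for which exact overall percentages are given, and the 48% figure for team learning is inferred from the 52% who said they do not take time out.

```python
# Overall survey figures quoted in the article: (% who believe, % who practise).
FINDINGS = {
    "user-centricity": (97, 65),
    "team learning and reflection": (96, 100 - 52),  # 52% don't take time out
    "agile methods": (88, 58),
}


def belief_practice_gaps(findings):
    """Return (practice, gap in percentage points) pairs, largest gap first."""
    gaps = [(name, believe - practise) for name, (believe, practise) in findings.items()]
    return sorted(gaps, key=lambda item: item[1], reverse=True)


for name, gap in belief_practice_gaps(FINDINGS):
    print(f"{name:<30} {gap:>3} points")
```

On these figures, team learning and reflection shows the widest gap (48 points), ahead of user-centricity (32) and agile methods (30).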

Other key findings in the survey include:

The survey was commissioned by digital agency Code Computerlove. CEO of Code Computerlove, Tony Foggett says, “While our survey was primarily designed to show levels of awareness around Product Thinking, a mindset and approach to digital product development, it was striking to see the difference between what companies think will help them drive digital effectiveness and what is happening within their businesses.

“There is clear consensus that digital approaches need to be customer-centric, agile and data-driven, all principles that fall within the Product Thinking mindset that we implement. But all businesses, whether they are outperforming their competition or not, seem a way off truly embracing these techniques.

“The survey also highlighted that while most companies agree on the basic principles of a digitally transformed business, it is the EXECUTION that makes the difference between the best performers and the rest. The gap between thinking and doing is much smaller for top-performing companies than it is for their mainstream counterparts.

“So what’s stopping businesses from applying a Product Thinking approach as a means to get ahead? One theory is the degree to which they can, and have, changed their business culture. As businesses move from traditional ways of working to the more agile, adaptable approaches required for the 21st century, they come up against deeply ingrained structural and cultural barriers.

“While agreement with the principles of a digital-first organisation is all but unanimous, the majority of businesses still have cultures that can best be described as retrograde.”

Foggett added, “Ultimately ‘Product Thinking’ is a cultural model that pertains not only to a mindset (a collective drive for effectiveness by continually growing value for the customer and company) but also the methodologies, roles and organisational design required to see the approach through.

“While it’s impossible to prove that it’s the implementation of these Product Thinking ideas that is responsible for ‘success’ in the top-performing businesses, the correlation is extremely strong. In every area of business practice we looked at, including organisational culture, management approach and development philosophy, the firms who acted on the product philosophy were more likely to be successful.”

Based on interviews with 150 IT decision-makers across the UK, as part of an EMEA-wide study, the research gleaned insights into organisations’ approaches to mobility and digital transformation; the drivers and adoption of accessories; the impact of these within the workplace; and their approach to accessories going forward.

It found a huge 94 per cent of UK organisations are either planning, about to start or currently undergoing some form of digital transformation. A further 5 per cent claim to have already ‘completed’ digital transformation, which includes employers upgrading the devices provided to their workforce to enable greater levels of productivity, collaboration and agility, as well as improving talent attraction and retention.

While nearly every organisation across the globe is trying to digitally transform, many projects are stalling due to ‘digital deadlock’ – a term coined by IDC, which describes the blockers restricting employers from managing change within their business. Ensuring individuals are educated, engaged and know how to correctly deploy technologies is fundamental to the success of any digital transformation project, according to IDC.

Digital transformation projects are stalling because organisations are failing to manage change and the impact such change will have on their employees. Nathan Budd, Senior Consulting Manager at IDC, added, “For organisations serious about delivering transformed working environments, agility, productivity and innovation, it’s time to invest in the right tools for the job. This requires a fundamental shift in the way in which leaders introduce new technology, the way they define customer experience, as well as the way in which they engage employees and stakeholders.”

Tackling the productivity challenge

With organisations always looking for new ways to boost employee productivity levels – 58 per cent admitted to changing their working environment in an effort to do so – it is not surprising workplace accessories are becoming a key productivity driver. In fact, many found that inappropriate, or a lack of, accessories significantly harmed employee productivity.

There is also a stark contrast in the value that businesses that have completed digital transformation projects place on the role of accessories – such as premium bags and cases, docking stations, privacy screens and cable locks – in driving productivity, compared to those that have not yet undergone digital transformation.

Marcus Harvey, Regional Director of Commercial Business EMEA at Targus said, “The findings of this study showcase a clear relationship between getting the right workplace tools in place and improved productivity and employee engagement.

“Yet, getting to this point requires leadership and team consultation. Despite technology being the driver behind digital transformation, people are the true agents of change, so making sure they are engaged and bought into the vision behind such moves is critical to improving the working environment,” he added.

Alongside this, helpdesk data shows employees raising more inquiries and complaints about power requirements, peripherals, missing accessories, and devices damaged for lack of appropriate cases.

However, while four in five (77 per cent) IT managers admitted to receiving complaints about missing or unavailable accessories, fewer issues are reported to IT than to line managers. This suggests that IT departments, which may be responsible for deploying accessories, are to some extent detached from the experiences of the people using them.

Facilitating collaboration and new ways of working

Three in five (61 per cent) organisations claimed to be changing the accessories they offer in order to facilitate greater collaboration, while more than half (59 per cent) are doing so to adapt to new technology.

An increasing number of employees are now required to work remotely: three in five (61 per cent) respondents said their staff have roles that involve some form of travel, and around a quarter (23 per cent) are managing more flexible, non-deskbound roles. Mobility has changed the way staff achieve results. With many individuals now relying on accessories to recreate the experience they would get in the office when working remotely or from home, it is essential that employers provide the right tools and technologies to make this possible.

“Working outside the office has never been easier and the very concept of work is ever changing,” Harvey added. “Businesses must ensure their accessories support innovation and productivity wherever their teams are working – not only enhancing the quality of work but also attracting and retaining the best talent.”

Providing the best tools for the best people

With technology transcending geographical location, there have never been more opportunities for talented workers to jump ship and move onto alternative employers. Understanding the importance of retaining valuable members of staff, a third (33 per cent) of organisations are changing their working environments to retain talent, while the same number of respondents see the benefits of mobile working environments for talent retention.

A recent article by Steve Gillaspy of Intel outlined many of the challenges faced by those responsible for designing, operating, and sustaining the IT and physical support infrastructure found in today's data centers. This paper addresses four of the five macro trends discussed by Gillaspy, how they influence the decision-making processes of data center managers, and the role that power infrastructure plays in mitigating their effects.

Jul 19th, 2018


Research conducted at Infosecurity Europe by Lastline shines a light on security best practices, as well as shifting attitudes to cryptocurrency.

Lastline Inc has published the results of a survey conducted at Infosecurity Europe 2018, which suggests that 45 percent of infosec professionals reuse passwords across multiple user accounts, despite avoiding password reuse being a basic piece of online hygiene that the infosec community has spent the best part of a decade trying to teach the general public.

The research also suggested that 20 percent of security professionals surveyed had used unprotected public WiFi in the past, and 47 percent would be cautious about buying the latest gadgets due to security concerns. “The fact that elements of the security community are not listening to their own advice around security best practices and setting a good example is somewhat worrying,” said Andy Norton, director of threat intelligence at Lastline.

“Breaches are a fact of life for both businesses and individuals now, and reusing passwords across multiple accounts makes it much easier for malicious actors to compromise additional accounts, including access to corporate data, to steal confidential or personal information. The attendees at Infosecurity Europe should be significantly more aware of these issues than the average consumer.”

The survey also identified shifting attitudes to cryptocurrency within the security community. Echoing a similar study conducted at RSA, 20 percent of survey respondents suggested they would take their salary in cryptocurrency, while a whopping 92 percent indicated that they have used cryptocurrency to purchase gift cards.

“Cryptocurrencies are emerging from the murky waters they have occupied since conception, and are getting closer and closer to financial legitimacy,” continued Norton. “In the next few years we are likely to see some of the more mainstream currencies such as Bitcoin being accepted by large online retailers, and generally moving away from their current association with instability and criminality.”

Jul 26th, 2018


New research finds that IT leaders are divided on the future of ‘cloud’ as a term.

New research has revealed that the term ‘cloud’ could be obsolete as soon as 2025. The cloud has become so deeply embedded in business performance that the word itself may soon no longer be required, with a quarter (26 per cent) of IT decision makers in the UK believing that we won’t be talking about ‘cloud’ by the end of 2025.

Commissioned by Citrix and carried out by Censuswide, the research quizzed 750 IT decision makers in companies with 250 or more employees across the UK to pinpoint the current state of cloud adoption and the future of cloud in the enterprise. A separate survey of 1,000 young people aged 12-15 was run in conjunction with this research to identify the next generation’s perspective on cloud, outlining the future workforce’s awareness of and interactions with cloud technology.

The research offers a snapshot of current cloud strategies in the UK and the extent to which the term ‘cloud’ is already dying out as it becomes firmly embedded in business processes.

2025: The cloud dissipates

A quarter (26 per cent) of IT decision makers in the UK believe the term ‘cloud’ will be obsolete by 2025. Of those who don’t see a future for the term, more than half (56 per cent) believe that cloud technology will be so embedded in the enterprise that it will no longer be seen as something separate. One third (33 per cent) believe that, in future, employees will refer to cloud-native apps such as Salesforce by name, with no thought for where the data is hosted.

The possible extinction of the term ‘cloud’ is also mirrored in the next generation of workers due to start entering the workforce in 2021. Three in ten (30 per cent) 12-15 year olds in the UK don’t know what the term ‘cloud’ means while one third (33 per cent) never use the term outside of ICT classes at school. While they may not regularly use the term, the benefits of the cloud are already deeply embedded in teenagers’ lives. When asked what the cloud meant to them, 83 per cent of 12-15 year olds in the UK recognised that it was where they stored their photos and music while two fifths (42 per cent) confirmed that they used the cloud to share data, such as photos, music and documents for schoolwork, with friends.

Current state of cloud adoption

Nearly two fifths (38 per cent) of large businesses in the UK currently store more than half of their data in the cloud. Yet, almost three in five (59 per cent) still also access and manage data on premises. The majority (89 per cent) of large UK organisations agreed that cloud is important to their business. For most large UK companies (87 per cent), improving productivity is a key benefit of cloud adoption.

Further education about the benefits of the cloud is required in the enterprise, particularly at board level. Over half (52 per cent) of UK IT decision makers thought middle management within their organisation had a good understanding of cloud, compared with 39 per cent for board members. As cloud adoption continues, however, this lack of awareness at board level could also indicate that the term ‘cloud’ is dying out, and that neither middle management nor the C-suite now needs to know the ‘ins and outs’ of the technology.

Despite this relatively low level of understanding, businesses are serious about the cloud – 91 per cent have implemented a cloud strategy or plan to put one in place imminently. However, these strategies are in their infancy. Only 37 per cent said this plan was “incredibly detailed” and aligned to business objectives.

Security concerns

When it comes to public and hybrid cloud, security concerns still exist. Three in 10 (31 per cent) UK IT decision makers are not confident that a public cloud set-up is able to handle their organisation’s data securely. This figure stands at 19 per cent for hybrid cloud set-ups.

Large organisations in the UK are most confident when it comes to private cloud: 88 per cent are quite confident or highly confident that this cloud set-up can handle their data securely. In light of this, it is not surprising that private cloud is the most prevalent model, used by 61 per cent of large UK businesses. Around one third of UK-based organisations also use public cloud (36 per cent) and one quarter (25 per cent) have implemented a hybrid cloud model.

Darren Fields, Regional Director, UK & Ireland at Citrix, said:

“Much like BYOD before it, this research indicates that cloud as a term may soon have had its day and be relegated to the buzzword graveyard. This has nothing to do with its relevance in the IT industry but everything to do with the evolution of technology and the ubiquity of cloud services to underpin future ways of working.

“Most IT budget-holders agree that cloud can improve productivity, lower costs, ensure security and optimise performance, as part of a digital transformation agenda. However, there is still more education required to effectively communicate the benefits of cloud services – and there’s still a gap to be bridged between boardrooms and IT decision-makers in relation to this.

“Arguably, a level of mistrust and misunderstanding still holds back UK businesses. And it is clear a cultural and educational shake-up is needed for cloud and digital transformation to deliver on their potential. Once this awareness spreads from IT to the board and beyond, there should be fewer barriers to hold cloud adoption back.”

85% of businesses agree IT is restricting their potential.

A lack of choice and flexibility in IT infrastructure is holding back UK businesses according to a new study by Cogeco Peer 1, a global provider of enterprise IT products and services, with 85% of respondents believing that their organisation would see faster business growth if its IT vendors were less restrictive.

The study, which questioned 150 IT decision-makers across several industries including financial services, retail, higher education, business services and media, found that CIOs and IT Directors are frustrated by the restrictions they encounter, which manifest as a lack of flexibility (51%) and reliability (50%); 40% of respondents also cited too much choice from IT vendors, which their organisation finds restrictive. The vast majority (84%) of respondents stated that their organisation is not currently running the optimum IT system.

Almost seven in ten (69%) felt that their organisation’s growth/development has been restricted by its IT vendors’ contracts, with around two in ten (17%) reporting significant restrictions.

When an organisation adopts new technology, it is clear it doesn’t always go to plan. Most respondents (81%) said that an IT service or system they’ve adopted has not lived up to expectations and almost half (48%) stated this has happened on multiple occasions. The most common impacts of this have been reliability issues (65%), not getting the service required (57%) and higher costs (52%).

When it comes to upgrades, it is a similar story: 75% of respondents reported that an IT upgrade purchased by their organisation has not lived up to expectations, with 41% saying this has happened multiple times. The reasons given include the system not integrating well with existing systems (63%), the technology being too immature or unproven (45%) and the vendor not adding the expected value (24%).

Furthermore, business agility was highlighted as a key area where IT vendors could drastically improve the value of their service. Three in five (60%) respondents agreed that their organisation’s IT vendor could do more to help their business to be more agile, with just over a fifth (21%) stating that IT vendors do not help their business to be agile at all.

Susan Bowen, currently VP & GM, EMEA, and soon to become President of Cogeco Peer 1, said: “It is clear that the technology industry is key to helping businesses in every sector and specialism grow and to reach their full potential.

“Agility and flexibility are key tenets of this, so businesses should seek the right partners and services which enable them to scale up and down to match seamlessly with their needs.

“Far from being restrictive, properly scalable solutions can allow businesses to focus on what they do best, rather than being bogged down in their system requirements.”

There is plenty of scope for IT providers to adapt to their customers’ changing needs: almost all respondents (91%) felt that there are areas within their own organisations that are too complicated, most commonly integration (55%), security (41%) and data protection (37%).

The overlooked element of DevOps.

Tony Lock, Director of Engagement and Distinguished Analyst, Freeform Dynamics Ltd.

Many words have been written about the need for automation, for DevOps, for Continuous Delivery, and for all the other buzzwords that people use when they talk about evolving IT and managing change. Yet research suggests that few have paid enough attention to why change fails – and that's a failure to engage the people, rather than the technology.

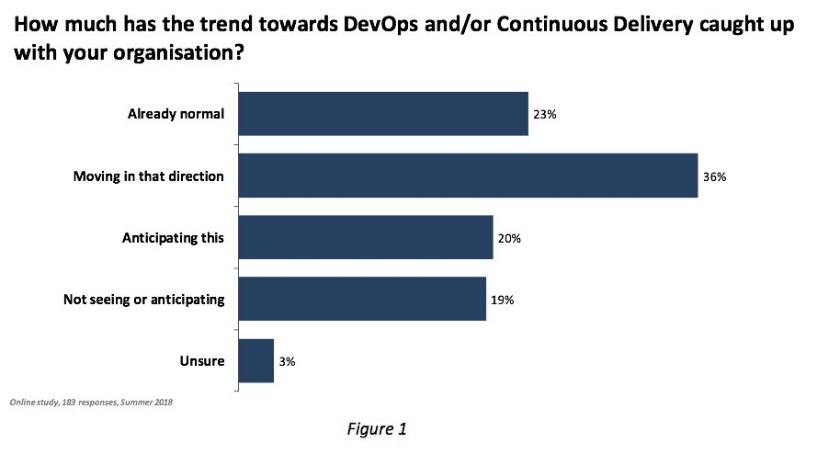

In some respects, IT infrastructure has changed rapidly over the last 5 years – in particular, virtualisation and Cloud have become widely accepted, almost standard approaches. Yet significant numbers of organisations are still struggling to get to grips with how these are changing their data centres. Another major change beginning to impact data centres is the adoption of DevOps. But while DevOps and Continuous Delivery garner considerable media attention, the results of a survey recently carried out by Freeform Dynamics (https://freeformdynamics.com/it-risk-management/managing-software-exposure/) show that their widespread adoption in the enterprise is still at a relatively early stage (Figure 1).

As DevOps usage grows and organisations seek greater flexibility from their IT systems, it brings with it the need to automate a considerable number of processes that were formerly labour intensive. These include provisioning infrastructure resources to services as they are deployed, potentially re-provisioning them far more frequently than in the past and, perhaps the most overlooked element of all, de-provisioning them, sometimes after just a few days or weeks of use. Of course, all of these processes must be managed with a strong focus on security.
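One common way to make de-provisioning automatic rather than an afterthought is to give every allocation a lease with an expiry date and to reap expired leases on a schedule. The sketch below shows that idea in Python under stated assumptions: `LeaseRegistry`, `provision`, `renew` and `reap_expired` are hypothetical names, not the API of any real provisioning tool, and the actual release of resources and revocation of credentials is left as a comment.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Lease:
    """A hypothetical record of infrastructure allocated to a service."""
    service: str
    expires_at: datetime


@dataclass
class LeaseRegistry:
    """Every allocation gets an expiry, so nothing outlives its intended use."""
    leases: dict = field(default_factory=dict)

    def provision(self, resource_id: str, service: str, now: datetime, lifetime: timedelta) -> None:
        self.leases[resource_id] = Lease(service, now + lifetime)

    def renew(self, resource_id: str, now: datetime, lifetime: timedelta) -> None:
        # Re-provisioning extends the lease instead of leaving it open-ended.
        self.leases[resource_id].expires_at = now + lifetime

    def reap_expired(self, now: datetime) -> list:
        """De-provision every resource whose lease has run out; return their IDs."""
        expired = sorted(rid for rid, lease in self.leases.items() if lease.expires_at <= now)
        for rid in expired:
            # A real system would release the resource and revoke its credentials here.
            del self.leases[rid]
        return expired


start = datetime(2018, 7, 1)
registry = LeaseRegistry()
registry.provision("vm-1", "checkout", start, timedelta(days=3))
registry.provision("vm-2", "search", start, timedelta(days=14))
print(registry.reap_expired(start + timedelta(days=7)))  # ['vm-1']
```

Because every resource is created with an expiry, forgetting to clean up stops being the default; a scheduler calling `reap_expired` regularly would carry out the de-provisioning.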

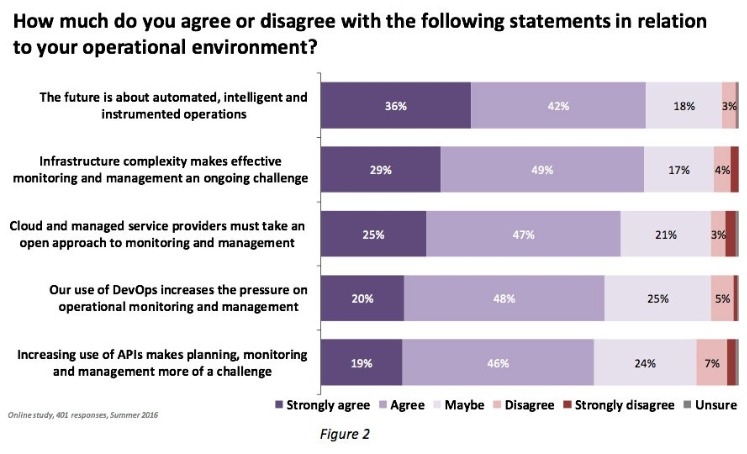

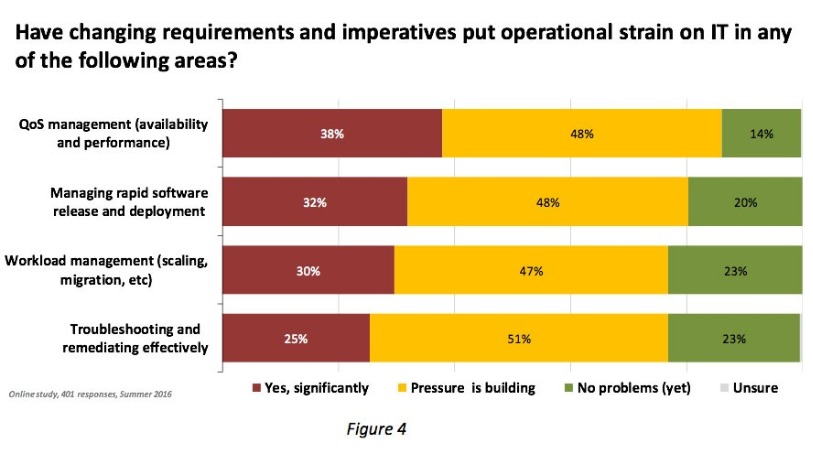

These new requirements are already presenting challenges in the data centre, as shown by the results of another survey (https://freeformdynamics.com/software-delivery/it-ops-as-a-digital-business-enabler/), where the flexibility required of IT resources is exposing weaknesses in many existing monitoring tools, especially when the impact of Cloud usage is considered (Figure 2).

With so much change happening, how can data centre managers and IT professionals ensure everything runs smoothly? Clearly attention, and investment, are required to bring monitoring up to scratch in the dynamic new world. The same can be said for the management and security tools used to control the data centre’s IT infrastructure.

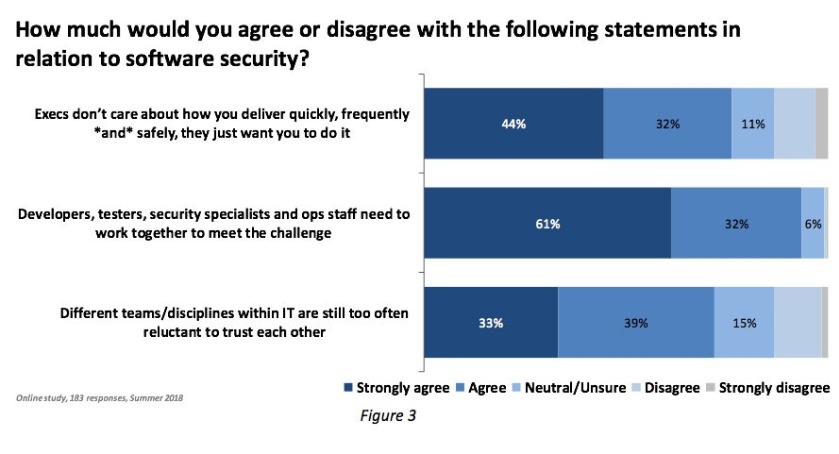

But most importantly of all, it is people and process matters that require immediate attention. In many, if not most, organisations with large IT teams, it has long been recognised that the days of narrow specialist teams looking after particular technology silos were coming to an end, to enable staff to handle workloads more flexibly. But the results of the survey behind Figure 1 tell us that getting different parts of IT to work well together is a challenge for many (Figure 3).

The survey returns show that a clear majority of IT teams or groups have experienced trust issues with other teams. This is despite almost every respondent acknowledging that it is critically important for different groups to work together to meet existing challenges, especially in terms of security. There are many possible reasons for the lack of trust; sometimes it might simply be down to history or personal issues. But it is also very likely that many organisations do not have good processes in place to enable people to work effectively.

It could be that Operations and Developers rarely speak or meet, that Operations and Security teams only get in touch with each other when something has ‘gone wrong’, or simply that Security professionals and Developers only communicate in terms of commands. In the dim and distant past when IT systems were relatively static, communications may not have needed to be open all of the time, but even then, there was a need to believe that what one group told another could be trusted as a base from which to act. In the far more dynamic environments that are being built today, good communications and trust are even more crucial if serious problems are to be avoided.

In essence, “feedback” must be at the heart of any dynamic system, and the DevOps approach to creating, modifying and running software and systems is a case in point. Operations needs to understand the type of infrastructure that developers require in order to deploy their systems, and has to put in place the monitoring tools to keep it functioning effectively and to highlight any issues that need to be addressed. Data Centre Ops staff must then be able to feed back the results of monitoring and managing the systems they run, allowing developers to fix bugs and optimise code that isn’t running well or that is consuming more physical resources than expected.
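The Ops-to-Dev half of that feedback loop can be as simple as comparing what monitoring actually observed with the footprint developers expected. This is a minimal sketch assuming each service has an agreed expected CPU and memory footprint; the `Footprint` class, the `overruns` function and the 20% tolerance are illustrative assumptions, not part of any tool mentioned in the article.

```python
from dataclasses import dataclass


@dataclass
class Footprint:
    """Resource use for one service (hypothetical units: cores and GB)."""
    cpu_cores: float
    memory_gb: float


def overruns(expected, observed, tolerance=0.2):
    """Compare Ops' measurements with Dev's expectations.

    Returns {service: [messages]} for every service whose observed usage
    exceeds its expected footprint by more than `tolerance` (20% by default).
    """
    report = {}
    for service, exp in expected.items():
        obs = observed.get(service)
        if obs is None:
            continue  # no monitoring data yet for this service
        issues = []
        if obs.cpu_cores > exp.cpu_cores * (1 + tolerance):
            issues.append(f"cpu {obs.cpu_cores} cores vs expected {exp.cpu_cores}")
        if obs.memory_gb > exp.memory_gb * (1 + tolerance):
            issues.append(f"memory {obs.memory_gb} GB vs expected {exp.memory_gb} GB")
        if issues:
            report[service] = issues
    return report


expected = {"billing": Footprint(2, 4), "search": Footprint(4, 8)}
observed = {"billing": Footprint(2.1, 7.5), "search": Footprint(3.5, 8)}
print(overruns(expected, observed))
```

Here only `billing` is reported, because its memory use is well above what its developers expected; the report is the kind of evidence developers would need in order to optimise code that is consuming more resources than planned.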

These people skills and processes are often overlooked but are becoming increasingly important. The benefits can be difficult to measure exactly, but the consequences of getting them wrong will become visible almost immediately. Until these people and process issues are tackled, problems will continue (Figure 4).

The Bottom Line

Complexity in the data centre continues to increase. Keeping everything operational requires not just good technology but processes that have been modernised to handle the challenges of today, not those of a decade ago. Communication, both verbal and electronic, is essential to improving IT service delivery and avoiding failures and outages. Improving how Ops speaks with Devs, how Devs speak with Ops and how Security speaks with everyone can also allow each team to have a positive influence. People and communications are easy to overlook. Don’t.

At present, data centres (DCs) are among the major energy consumers and sources of CO2 emissions globally.

By Professor Habin Lee, Brunel Business School, Brunel University London

The GREENDC project[1] (http://www.greendc.eu/) addresses the growing challenge of data centre energy use by developing and exploiting a novel approach to forecasting energy demands. The project brings together five leading academic and industrial partners with the overall aim of reducing energy consumption and CO2 emissions in specific national DCs. It involves a total of 163 person-months of staff and knowledge exchanges between the industry and academic partners. More specifically, knowledge of data centre operations is transferred from industry to the academic partners, while expertise in simulation-based optimization for best-practice energy demand control will be transferred from academia to industry through the knowledge transfer scheme.

In recent years, the use of information and communications technologies (ICT), comprising communication devices and/or applications, DCs, internet infrastructure, mobile devices, computer and network hardware and software and so on, has increased rapidly. Internet service providers such as Amazon, Google and Yahoo, the largest stakeholders in the IT sector, have built large numbers of geographically distributed internet data centers (IDCs) to satisfy growing demand and provide reliable, low-latency services to customers. These IDCs house large numbers of servers, large-scale storage units and networking equipment, together with the infrastructure to distribute power and provide cooling. The number of IDCs owned by the leading IT companies is increasing drastically every year, and as a result the number of servers required has grown enormously. According to the European Commission’s JRC (Joint Research Centre) report, IDCs are expected to consume more than 100 TWh by 2020.

Because of the large amount of energy involved and the associated cost, IDCs can make a significant contribution to energy efficiency through reduced energy consumption and better power management of IT ecosystems. This is why most researchers focus on reducing the power consumption of IDCs. Those efforts include designing innovative DC architectures that minimise the loss of cool air, remove heat from IT devices, protect against heat from outside and so on. Computer scientists have also developed energy-efficient workload algorithms that avoid overloading servers, minimising energy consumption and heat generation.

However, efforts to reduce energy consumption through efficient DC operation are still scarce. For example, DC managers have yet to find answers to questions such as: What is the optimal DC temperature for minimising energy consumption without affecting the performance of IT devices or breaching the service-level agreement? How many servers or virtual machines (VMs) need to be on for the next 24 hours, or the next week, given expected workloads? What are the optimal schedules of servers and VMs for handling workloads that vary over time? What are the best operational schedules for the cooling devices within the DC to achieve the maximum cooling effect?
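None of these questions has a one-line answer, but the second one (how many servers to keep on) can be illustrated with a deliberately naive capacity rule. The Python sketch below is not GREENDC's simulation-based method; the function name `servers_needed`, the per-server capacity, the 70% target utilisation, the two-server floor and the hourly forecast are all hypothetical values chosen for illustration.

```python
import math


def servers_needed(forecast_load, capacity_per_server, target_utilisation=0.7, min_servers=2):
    """Servers to keep powered on for each forecast period (hypothetical rule).

    Each server is planned to run at no more than target_utilisation of its
    capacity, leaving headroom for load spikes; min_servers keeps a floor
    for redundancy even when forecast demand is near zero.
    """
    effective_capacity = capacity_per_server * target_utilisation
    plan = []
    for load in forecast_load:
        n = math.ceil(load / effective_capacity)
        plan.append(max(n, min_servers))
    return plan


# Hypothetical hourly workload forecast in requests per second.
hourly_forecast = [1200, 900, 400, 3400]
print(servers_needed(hourly_forecast, capacity_per_server=500))  # [4, 3, 2, 10]
```

A real planner would also have to weigh cooling behaviour, switching costs and SLA risk against each other, which a fixed utilisation threshold cannot capture.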

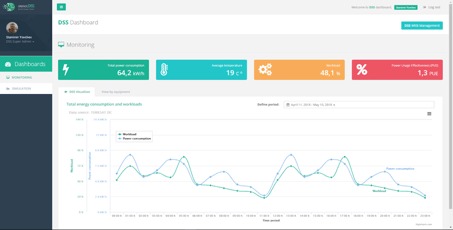

The GREENDC project tries to answer those questions for DC managers through a multi-disciplinary study. It takes a holistic view by considering the system as a whole, i.e. servers, cooling system, backup power and electrical distribution system. In particular, it is developing a decision support system (DSS) that integrates functionalities including workload and energy forecasting, generation of optimal operation schedules for cooling and IT devices, and simulation for impact analysis of DC operation strategies.

The GREENDC DSS adopts a layered architecture to guarantee the maximum level of independence between components in different layers. This allows the DSS to be easily customised to the different requirements of various types of DC in different regions. There are four layers: data, mathematical model, business logic and user interface.

The data layer contains components that collect energy, workload and meteorological data from target DCs. Collected data is processed by a data normalisation component that converts data in different formats into the GREENDC standard format for storage in a data warehouse. The normalised data is consumed by upper-layer components, including the mathematical model and business logic layers. The mathematical model layer contains utility components for the forecasting and optimisation functionalities of the GREENDC DSS and is mainly used by components in the business logic layer. Business logic layer components provide the main services of the DSS by using the components in lower layers. Those services include monitoring, estimation, optimisation and simulation of energy and workload data. The components in the business logic layer also receive requests from the user interface layer and respond to them.
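The four-layer arrangement can be sketched in Python to show how each layer depends only on the one below it. Every class, field name and the moving-average forecast here is hypothetical: the article does not describe GREENDC's actual schema, models or interfaces, and a naive moving average simply stands in for its forecasting components.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Reading:
    """Hypothetical standard record format held in the data warehouse."""
    timestamp: int          # hour index
    energy_kwh: float
    workload: float         # e.g. requests per second
    outdoor_temp_c: float   # meteorological input


class DataLayer:
    """Collects raw data and normalises it into the standard Reading format."""

    def __init__(self):
        self.warehouse: list[Reading] = []  # stands in for the data warehouse

    def ingest(self, raw: dict) -> None:
        # Normalisation example: sources may report energy in kWh or in Wh.
        energy = raw.get("energy_kwh", raw.get("energy_wh", 0.0) / 1000)
        self.warehouse.append(Reading(raw["t"], energy, raw["load"], raw["temp"]))


class MathModelLayer:
    """Utility forecasting components used by the business logic layer."""

    @staticmethod
    def moving_average_forecast(series: list[float], window: int = 3) -> float:
        # Naive stand-in for real forecasting models.
        return mean(series[-window:])


class BusinessLogicLayer:
    """Main DSS services, built only on the layers below."""

    def __init__(self, data: DataLayer, models: MathModelLayer):
        self.data, self.models = data, models

    def estimate_next_energy(self) -> float:
        history = [r.energy_kwh for r in self.data.warehouse]
        return self.models.moving_average_forecast(history)


class UserInterfaceLayer:
    """Dashboard front end: forwards requests to business logic and formats replies."""

    def __init__(self, logic: BusinessLogicLayer):
        self.logic = logic

    def handle(self, request: str) -> str:
        if request == "forecast_energy":
            return f"Next-hour energy estimate: {self.logic.estimate_next_energy():.1f} kWh"
        return "Unknown request"


data = DataLayer()
data.ingest({"t": 0, "energy_kwh": 120.0, "load": 800, "temp": 14})
data.ingest({"t": 1, "energy_wh": 126000, "load": 850, "temp": 15})  # different source format
data.ingest({"t": 2, "energy_kwh": 132.0, "load": 900, "temp": 15})
ui = UserInterfaceLayer(BusinessLogicLayer(data, MathModelLayer()))
print(ui.handle("forecast_energy"))  # Next-hour energy estimate: 126.0 kWh
```

Because the user interface only talks to the business logic layer, a site-specific forecasting model could replace the moving average without touching the dashboard code, which is the kind of independence the layered design aims for.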

As shown in Figure 1, the GREENDC DSS provides a dashboard-style user interface that makes the system easy for DC managers to use and is compliant with the majority of energy management tools.

[1] The GREENDC project has received funding from the European Union’s Horizon 2020 research and innovation programme under the Marie Sklodowska-Curie grant agreement No 734273.

The GREENDC project is implemented by five expert partners in the field: Brunel University London (UK), Gebze Technical University (Turkey), Turksat (Turkey), LKKE (UK) and David Holding (Bulgaria). In particular, the GREENDC DSS will be tested through two field trials, at Turksat and David Holding respectively. Turksat operates one of the largest data centres in Turkey, providing eGovernment services. It also hosts a large number of IT servers for municipalities in Turkey for public service provision. The GREENDC DSS will be tested using real data obtained from Turksat’s data centre.

Professor Habin Lee is chair in data analytics and operations management at Brunel Business School, Brunel University London. His research interests include energy data analytics, sustainable logistics and supply chain management, and cooperation mechanisms for operations management. He is currently coordinating the GREENDC project (Jan 2017 – Dec 2020).

By Steve Hone

DCA CEO and Cofounder, The DCA

The summer edition of the DCA Journal focuses on Research and Development. It’s been proven time and time again that research leads to increased knowledge, which in turn helps us to develop and innovate. There is no doubt that investment needs to be made to realise the benefits and competitive advantage this can deliver, both to businesses and to the sector as a whole. This continued investment was plain to see again in Manchester at the annual DCA conference in July.

The unique workshop format of the Data Centre Transformation conference allowed both DCA members and stakeholders the opportunity to come together and openly discuss the key issues affecting the health and sustainability of our growing sector.

The quality of content and healthy debate which took place in all sessions was testament to just how well run the workshops were. I would like to say a big thank you to all the chairs, workshop sponsors, the organising committee and Angel Business Communications all of whom worked hard to ensure the sessions were interactive, lively and educational.

The workshop topics covered subject matter from across the entire DC sector; however, research and development continued to feature strongly in many of the sessions, which is not surprising given the speed of change we are having to contend with as demand for digital services continues to grow.

All the delegates I spoke to gained a huge amount from the day, and it was clear that the insight gained from speaking with fellow colleagues together with the knowledge and experience shared during the workshops and keynotes was invaluable.

Although some of us will be slipping away to recharge our batteries, there is still time to submit articles for the DCA Journal. Every month the DCA provides members with the opportunity to contribute thought leadership content to major publications.

On the morning of 25 September, the DCA is hosting a series of Special Interest Group (SIG) meetings, followed by the DCA Annual Members Meeting in the afternoon, at Imperial College London; all are welcome! More details can be found in the DCA event calendar at www.dca-global.org/events should you wish to register your attendance.

Please forward all articles to Amanda McFarlane (amandam@dca-global.org) and please do call if you have any questions 0845 873 4587.

The need to deploy new IT resources quickly and cost-effectively, whether as upgrades to existing facilities or in newly built installations, is a continuing challenge for today’s data-centre operators. Among the trends developing to address it are convergence, hyperconvergence and prefabrication, all of which are assisted by an increasing focus on modular construction across all the products needed in a data centre.

The modular approach enables products from different vendors, and those performing different IT functions, to be racked and stacked according to compatible industry standards and deployed with the minimum of integration effort.

Modularity is not just confined to the component level. Larger data centres, including colocation and hyperscale facilities, will often deploy greater volumes of IT using groups, or even roomfuls, of racks at a time. Increasingly these are deployed as pods, or standardised units of IT racks in a row, or pair of rows, that share common infrastructure elements including UPS, power distribution units, network routers and cooling solutions in the form of air-handling or containment systems.

An iteration of the pod approach is the IT Pod Frame, which further streamlines and simplifies the task of deploying large amounts of IT quickly and cost-effectively. A Pod Frame is a free-standing support structure that acts as a mounting unit for pod-level deployments and as a docking point for the IT racks. It addresses some of the challenges of installing IT equipment, especially in providing the supporting services that typically require modifications to the building housing the data room.

A Pod Frame, for instance, greatly reduces the need to mount or install ducting for power lines and network cables on the ceiling, under a raised floor, or directly on the racks themselves. Analytical studies¹ show that using a Pod Frame can produce significant savings, both in capital expenditure (up to 15% in some cases) and in deployment time.

Air-containment systems can be assembled directly onto an IT Pod Frame. When a pod is deployed without such a frame, panels in the air-containment system have to be unscrewed and pulled away before a rack can be removed. Using an IT Pod Frame therefore makes the task of inserting and removing racks faster, easier and less prone to error.

In the case of a colocation facility, where the hosting company tends not to own its tenants’ IT, a Pod Frame allows all of the cooling infrastructure to be installed before the rack components arrive. It also enables tenants to rack and stack their IT gear before delivery and then place it inside the pod with the minimum of integration effort.

IT Pod Frames have overhead supports built into the frame, or the option to add such supports later, to hold power and network cabling, busway systems or cooling ducts. This eliminates most, if not all, of the construction otherwise required to build such facilities into the fabric of the building itself, greatly reducing the time taken to provide the necessary supporting infrastructure for IT equipment.

They also allow greater flexibility in the choice between a hard and a raised floor. For example, because ducting for cables and cooling can be mounted on the frame, a raised floor is not necessary. If, however, a raised floor is preferred for distributing cold air, the fact that network and power cables can be mounted on the frame, rather than obstructing the cooling ducts, makes under-floor cooling more efficient. It also removes the need for the floor cutouts and brush strips that are necessary when running cables under the floor, thereby saving both time and construction costs.

An example of an IT Pod Frame is Schneider Electric's new HyperPod solution, which is designed to offer flexibility to data centre operators. Its base frame is a freestanding steel structure that is easy to assemble and available in two different heights, whilst its aisle length is adjustable and can support multi-pod configurations.

It comes with mounting attachments to allow air containment to be assembled directly on to the frame, and has simple bi-parting doors, dropout roof options and rack height adapters to maintain containment for partially filled pods.

Several options are available for distributing power to racks inside the IT pod, including integrating panel boards, hanging busway or row-based power distribution units (PDUs). The HyperPod can also be used in hot or cold aisle cooling configurations and has an optional horizontal duct riser to allow a horizontal duct to be mounted on top of the pod. Vertical ducts can also be accommodated.

Analytical studies based on standard Schneider Electric reference designs give an overview of the savings in time and cost that can be achieved using a Pod Frame. Taking the example of a 1.3 MW IT load distributed across nine IT pods, each containing 24 racks, a comparison was made between rolling out the racks using an IT Pod Frame and a traditional deployment.

CAPEX was reduced by 15% when the IT Pod Frame was used. The savings were achieved in a number of ways. Ceiling construction costs were reduced by eliminating the need for a grid system to supply cabling to individual pods. All that was needed was a main data cabling trunk line down the centre of the room, with the IT Pod Frame used to distribute cables to the individual racks.

A shorter raised floor with no cutouts was possible with the IT Pod Frame. The power cables were distributed overhead on cantilevers attached to the frame, so no cables were needed under the floor. Further savings were achieved by using low-cost power panels attached directly to the frame instead of the traditional approach of PDUs or remote power panels (RPPs) located on the data centre floor. This not only saved material and labour costs but also reduced the footprint, freeing data centre space for more productive use.

The time to deployment using an IT Pod Frame was 21% less when compared with traditional methods. This was mainly achieved through the reduced requirement for building work, namely ceiling grid installations, under-floor cutouts and the installation of under-floor power cables. Assembly of the air containment system was also much faster using a Pod Frame due to the components being assembled directly on to the frame.
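The reference scenario implies a particular power density, which is worth working out before comparing it with one's own facility. The Python snippet below derives the rack count and average load per pod and per rack from the stated figures (1.3 MW, nine pods, 24 racks per pod), then applies the reported 15% CAPEX and 21% time reductions. The baseline cost and schedule are hypothetical placeholders, not figures from the white paper, and the per-rack figure is an average that assumes load is spread evenly.

```python
# Reference scenario described above: 1.3 MW of IT load across 9 pods of 24 racks.
IT_LOAD_KW = 1300
PODS = 9
RACKS_PER_POD = 24

total_racks = PODS * RACKS_PER_POD
kw_per_pod = IT_LOAD_KW / PODS
kw_per_rack = IT_LOAD_KW / total_racks  # average, assuming even distribution
print(f"{total_racks} racks, {kw_per_pod:.1f} kW per pod, {kw_per_rack:.2f} kW per rack")
# 216 racks, 144.4 kW per pod, 6.02 kW per rack


def apply_reduction(baseline: float, reduction_pct: float) -> float:
    """Return the value left after a percentage reduction."""
    return baseline * (1 - reduction_pct / 100)


# Hypothetical traditional-deployment baselines (NOT taken from the white paper).
baseline_capex = 10_000_000   # currency units
baseline_weeks = 20           # deployment time in weeks
print(f"Pod Frame CAPEX: {apply_reduction(baseline_capex, 15):,.0f}")
print(f"Pod Frame deployment: {apply_reduction(baseline_weeks, 21):.1f} weeks")
```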

In conclusion, using an IT Pod Frame such as Schneider Electric's HyperPod can produce significant cost savings when rolling out new IT resources in a data centre.

Building on the modular approach to assembly commonly found in modern data centre designs, the Frame simplifies the provision of power, cooling and networking infrastructure, thereby reducing materials and labour costs and making the deployment process far quicker, easier and less prone to human error.

White Paper #263, ‘Analysis of How Data Center Pod Frames Reduce Cost and Accelerate IT Rack Deployments’, can be downloaded by visiting http://www.apc.com/uk/en/prod_docs/results.cfm?DocType=White+Paper&query_type=99&keyword=&wpnum=263

1 Statistics from Schneider Electric White Paper #263, ‘Analysis of How Data Center Pod Frames Reduce Cost and Accelerate IT Rack Deployments’.

By Robbert Hoeffnagel, European Representative for Open Compute Project (OCP)

The modern enterprise, often called the “digital enterprise”, depends on a constant flow of new business propositions to keep up with the competition. These initiatives are increasingly based on a never-ending stream of technical innovations. Don’t expect traditional hardware suppliers to be able to keep up with the speed a modern enterprise wants and, to be honest, needs. Only open source hardware projects like the Open Compute Project (OCP), where hundreds of companies (vendors, system integrators and users alike) work together, can come up with the smart innovations that a modern data centre needs. Why? Here are three reasons why open source is the future of data centre hardware.

But first: what exactly is open source hardware? This is what we can learn from Wikipedia:

Open source hardware (OSH) consists of physical artifacts of technology designed and offered by the open design movement. Both free and open-source software (FOSS) and open source hardware are created by this open source culture movement and apply a like concept to a variety of components. It is sometimes, thus, referred to as FOSH (free and open source hardware). The term usually means that information about the hardware is easily discerned so that others can make it – coupling it closely to the maker movement. Hardware design (i.e. mechanical drawings, schematics, bills of material, PCB layout data, HDL source code and integrated circuit layout data), in addition to the software that drives the hardware, are all released under free/libre terms. The original sharer gains feedback and potentially improvements on the design from the FOSH community.

Working together

Although open source hardware may not be as well known as open source software and its most successful project, Linux, we can hardly call the open hardware movement new. In 1997 Bruce Perens was already working on a project he called the Open Hardware Certification Program (OHCP). And popular open source hardware projects like Arduino aren’t exactly brand-new either.

Although the OHCP itself was not very successful, it did give birth to the idea of an open hardware movement consisting of a variety of sometimes competing companies and other parties that were able to work closely together and come up with tools and methods to help guarantee that their products were interoperable. The Arduino board, originally designed by the Interaction Design Institute Ivrea in Italy, has proven that an open hardware product can be very disruptive and can even become one of the major building blocks of a completely new trend: in this case, the maker movement.

Open source in the data centre

In other words: open source hardware has already proven to be very important. But can it also be important to the data centre?

That is an interesting question. On the one hand, we see a lot of innovation and change happening in the data centre, mostly at the IT layer; on the other hand, there is also a sometimes very traditional approach. When we look at how enterprise data centres and traditional colocation facilities have been built, and in many cases are still being built, we do not see a lot of innovation. Yes, we try to squeeze more hardware capacity into a rack, but the way we design the overall data centre infrastructure is often very much the same as it was 5, 10 or even 20 years ago.

Facility and IT

No doubt one of the stumbling blocks here is the infamous gap between facility and IT. It is hardly an exaggeration to state that the facility guys come up with a building, put in power, cooling and some form of structured cabling, and order a certain number of racks. Then they call the IT department, tell them that new data centre capacity is available and ask them to please fill it up with the IT hardware they need to run their applications.

That approach worked well as long as organizations felt IT was just another service supporting the business. No one really gave any thought to squeezing the last bit of performance out of those systems. Nor did they try to reduce the energy bill, because that was not where IT could make a difference.

Hyperscalers

Things started to change with the hyperscalers and other large-scale users of data centres and IT hardware. All of a sudden, IT was no longer a support service, and thus no longer a cost centre. To them, digital processes, and consequently IT, are the business.

These companies were investing billions of dollars in data centre capacity, and they soon realized that the off-the-shelf hardware (and software as well, by the way) that they were buying from the same vendors as everyone else simply wasn’t good enough. It was never optimized for specific tasks, it used much more energy than necessary, it required a lot of IT personnel to keep it running; the list goes on.

Do your own R&D

Maybe they were not the first to realize that traditional IT hardware was not good enough for the services they were building. But because of their scale they were able to do their own R&D and innovate where the typical manager of an enterprise data centre or colo cannot. So these hyperscalers decided to design their own hardware, with a lot of success.

But then an idea came up: what would happen if they published those hardware designs, and invited other companies to help improve them and maybe even use them in their own product development and innovation projects? Would that help to increase the speed of innovation in the data centre space?

That, in a nutshell, is how one of the most successful open source hardware projects, the Open Compute Project, was born. And the answer to that question about the speed of innovation is: yes, very much so.

Three reasons why

So why is that? Why is an open source hardware movement like the Open Compute Project (OCP) able to increase the much-needed speed of innovation in the data centre?

I think there are three reasons:

OCP Summit in Amsterdam

One of the remarkable characteristics of an open source project like OCP is the incredible speed at which developments take place. Trying to keep up with all the technological innovations and other developments can sometimes be a challenge.

That is why the Open Compute Project is organizing its first regional summit, which will be held in Amsterdam on October 1-2. These two days will be packed with presentations by both users and vendors, and quite a few OCP projects will present updates on the R&D and innovations they are working on. Besides that, there will be an exhibition floor where you can meet the many companies that have developed products and services based on or inspired by OCP designs.

More info is available here: http://opencompute.org/events/regional-summit/.

Robbert Hoeffnagel is European Representative for Open Compute Project, robbert@opencompute.org

By Cindy Rose, Chief Executive of Microsoft UK

It is easy to take technology for granted. People can work from anywhere, collaborate like never before and share ideas in new and exciting ways. An entire generation is growing up with the world literally at their fingertips; every piece of information ever discovered is just a click away on their mobile phone.

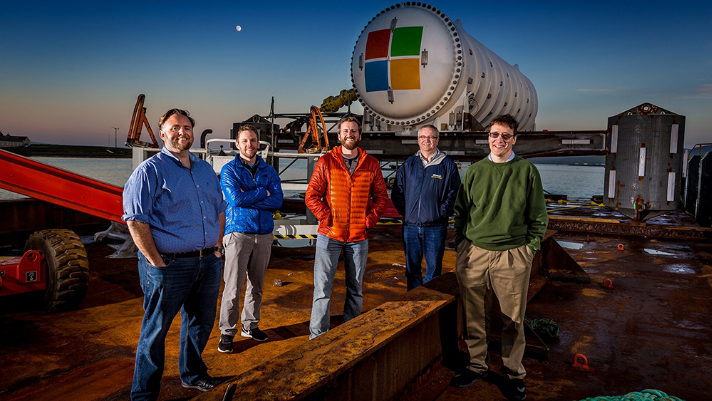

Spencer Fowers, senior member of technical staff for Microsoft’s special projects research group, prepares Project Natick’s Northern Isles datacenter for deployment off the coast of the Orkney Islands in Scotland. The datacenter is secured to a ballast-filled triangular base that rests on the seafloor. Photo by Scott Eklund/Red Box Pictures.

As our reliance on technology increases, so do the demands we place on it. This is why I am excited by the news today that a Microsoft research project is pushing the boundaries of what can be achieved, to ensure that we empower every person and organisation to achieve more. I am lucky to be able to work with some of the world’s brightest minds, and be surrounded by cutting-edge technology. However, I’m still amazed by what our staff’s passion and creativity can lead to.

Microsoft’s Project Natick team gathers on a barge tied up to a dock in Scotland’s Orkney Islands in preparation to deploy the Northern Isles datacenter on the seafloor. Pictured from left to right are Mike Shepperd, senior R&D engineer, Sam Ogden, senior software engineer, Spencer Fowers, senior member of technical staff, Eric Peterson, researcher, and Ben Cutler, project manager. Photo by Scott Eklund/Red Box Pictures.

Project Natick is one such example. Microsoft is exploring the idea that data centres – essentially the backbone of the internet – can be based on the sea floor. Phase 2 of this research project has just begun in the Orkney Islands, where a more eco-friendly data centre was lowered into the water. The shipping-container-sized prototype, which will be left in the sea for a set period of time before being recovered, can hold data and process information for up to five years without maintenance. Despite being as powerful as several thousand high-end consumer PCs, the data centre uses minimal energy, as it’s naturally cooled.

Windmills are part of the landscape in the Orkney Islands, where renewable energy technologies generate 100 percent of the electricity supplied to the islands’ 10,000 residents. A cable from the Orkney Island grid also supplies electricity to Microsoft’s Northern Isles datacenter deployed off the coast, where experimental tidal turbines and wave energy converters generate electricity from the movement of seawater. Photo by Scott Eklund/Red Box Pictures.

It is powered by renewable energy from the European Marine Energy Centre’s tidal turbines and wave energy converters, which generate electricity from the movement of the sea. Creating solutions that are sustainable is critical for Microsoft, and Project Natick is a step towards our vision of data centres with their own sustainable power supply. It builds on environmental promises Microsoft has made, including a $50m pledge to use AI to help protect the planet.

Almost half of the world’s population lives near large bodies of water. Having data centres closer to billions of people using the internet will ensure faster and smoother web browsing, video streaming and gaming, while businesses can enjoy AI-driven technologies.

Project Natick’s Northern Isles datacenter is partially submerged and cradled by winches and cranes between the pontoons of an industrial catamaran-like gantry barge. At the deployment site, a cable containing fiber optic and power wiring was attached to the Microsoft datacenter, and then the datacenter and cable lowered foot-by-foot 117 feet to the seafloor. Photo by Scott Eklund/Red Box Pictures.

I often hear of exciting research projects taking place at our headquarters in Redmond and other locations in the US, so I’m delighted this venture is taking place in the UK. It sends a message that Microsoft understands this country is at the cutting-edge of technology, a leader in cloud computing, artificial intelligence and machine learning. It’s a view I see reflected in every chief executive, consumer and politician I meet; the UK is ready for the Fourth Industrial Revolution and the benefits that it will bring.

The support from the Scottish government for Project Natick reflects this. Paul Wheelhouse, Energy Minister, said: “With our supportive policy environment, skilled supply chain, and our renewable energy resources and expertise, Scotland is the ideal place to invest in projects such as this. This development is, clearly, especially welcome news also for the local economy in Orkney and a boost to the low carbon cluster there. It helps to strengthen Scotland’s position as a champion of the new ideas and innovation that will shape the future.”

Engineers slide racks of Microsoft servers and associated cooling system infrastructure into Project Natick’s Northern Isles datacenter at a Naval Group facility in Brest, France. The datacenter has about the same dimensions as a 40-foot long ISO shipping container seen on ships, trains and trucks. Photo by Frank Betermin.

I’m proud that some of the first milestones achieved by Project Natick will occur in UK waters, and hope that the work being done in the Orkney Islands will be replicated in similar data centres in other locations in the future.

Peter Lee, corporate vice-president of Microsoft AI and Research, said Project Natick’s demands are “crazy”, but these are the lengths our company is going to in order to make potentially revolutionary ideas a reality.

Only by demanding more of ourselves as a technology company will we meet the demands of our customers.


Managed services providers (MSPs) must see the wider picture in order to provide the strategic vision that resonates with customers. That strategic partner status is a vital part of the relationship but needs nurturing as part of that all-encompassing vision.

It is clear from research by Gartner and others that MSPs globally are facing a multi-faceted struggle to grow their businesses. On the one hand, there is competition from public cloud players such as AWS, Google and Microsoft, who set pricing levels and have reach but are unable to customise or tailor their offerings to specific verticals. On the other hand, the bulk of MSPs are much smaller and specialised in the technologies and markets they cover; they need scale to build profitability but have limited resources.

There is a clear move to consolidate among larger players, with Gartner saying that, compared with 2017, the entry criteria have become much more stringent. The focus has shifted squarely to hyperscale infrastructure providers, it says, and this has resulted in it dropping more than 14 vendors from its top players list. According to Gartner, there are no more visionaries or challengers left in the market; only a handful of leaders and niche players are driving the momentum.

At the other end of the market, among smaller players, the pace of competition has stepped up and they are under major pressure to differentiate, whether on skills, markets covered, geographical coverage or customer relations.

This is a common feature of the MSP market on a global scale. The answer is always to build and then demonstrate expertise and understanding in the marketplace. As Gartner's research director Mark Paine told April’s European Managed Services Summit: “The key to a successful and differentiated business is to give customers what they want by helping them (the customer) buy”.

One way to win more customers is by showing them their place in the future, according to Jim Bowes, CEO and founder of digital agency Manifesto, and Robert Belgrave, chief executive of digital agency hosting specialist Wirehive. The two experts will be covering the marketing aspects at the UK Managed Services & Hosting Summit in London on September 29th. Agenda here: http://www.mshsummit.com/agenda.php

They draw on wider experience, arguing that the managed services and hosting industry isn’t the only one having to undergo rapid adjustments due to technological advances and changing customer expectations. Customers are experiencing the same disorientation, and need help figuring out how their IT infrastructure needs to evolve over the next five to ten years. This means it’s time to ditch the old marketing models built on email lists and dry whitepapers; it’s time to get agile, personalised and creative, they will say.

A key part of the event will also be hearing about the experiences of MSPs themselves and looking at established winning ideas. MSPs already confirmed will relate their business-building stories, including how they use managed security services, how they position security without terrifying the customer, and how they work with customers to keep their lights on.

Now in its eighth year, the UK Managed Services & Hosting Summit event will bring leading hardware and software vendors, hosting providers, telecommunications companies, mobile operators and web services providers involved in managed services and hosting together with Managed Service Providers (MSPs) and resellers, integrators and service providers migrating to, or developing their own managed services portfolio and sales of hosted solutions.

It is a management-level event designed to help channel organisations identify opportunities arising from the increasing demand for managed and hosted services and to develop and strengthen partnerships aimed at supporting sales. Building on the success of previous managed services and hosting events, the summit will feature a high-level conference programme exploring the impact of new business models and the changing role of information technology within modern businesses.

You can find further information at: www.mshsummit.com

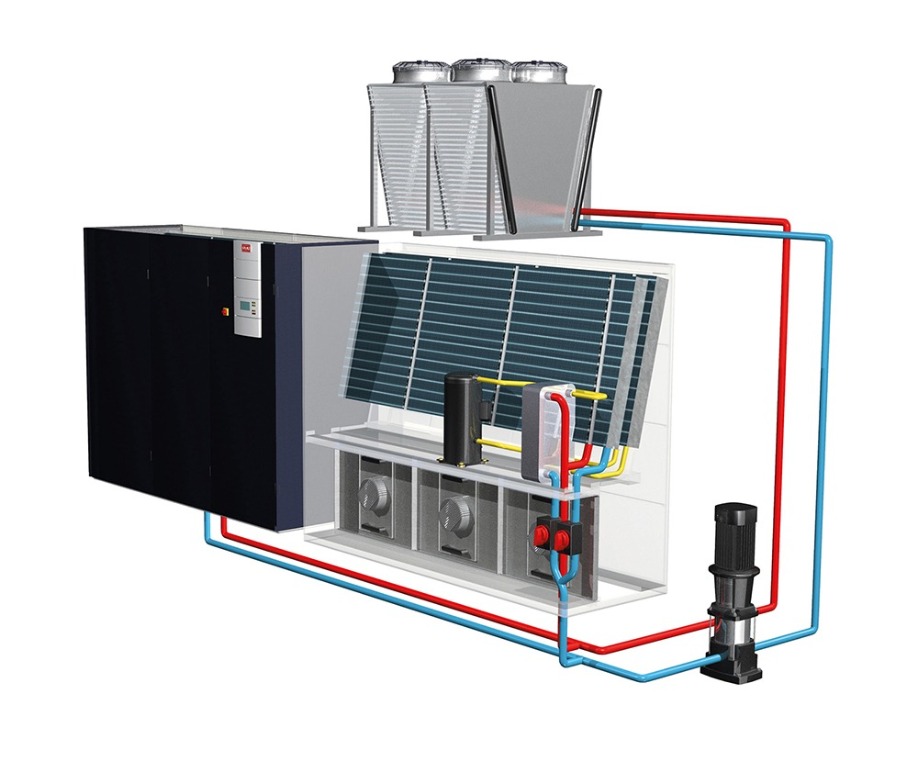

When data centre superpower Next Generation Data (NGD) began the 100,000 square foot, ground floor expansion of its high security data centre in Newport, they relied on trusted supply chain partners Stulz UK and Transtherm Cooling Industries to deliver a substantial package of temperature management and plant cooling technology.

Renowned for its industry-leading 16-week build-out programmes, NGD and funding partner Infravia Capital Partners demand not only quality systems in the construction of their sites, but also product design and logistical flexibility from their supply chain partners, in order to maintain such strict project timescales.

With an ultimate capacity of over 22,000 racks and 750,000 square feet, NGD’s Tier 3+ data centre is the biggest data centre in Europe and serves some of the world’s leading companies, including global telecommunications provider BT and computer manufacturer IBM.

NGD is known for providing large organisations with bespoke data halls constructed to the highest standards, and its South Wales campus is one of the most efficient data centres in Europe, with an impressively low Power Usage Effectiveness (PUE) rating. PUE is a data-centre-specific efficiency measure: the ratio of the facility's total power consumption to the power consumed by its IT equipment alone, so a lower figure, closer to 1.0, is better.
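As a worked illustration of the ratio, the sketch below computes PUE from two power readings. The function name and the 1,200 kW / 1,000 kW figures are hypothetical examples chosen for the arithmetic; they are not NGD's measurements.

```python
def power_usage_effectiveness(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Return PUE = total facility power / IT equipment power.

    A value of 1.0 would mean every watt reaches the IT load; anything above
    1.0 is overhead such as cooling, power distribution losses and lighting.
    """
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    if total_facility_kw < it_equipment_kw:
        raise ValueError("total facility power cannot be less than IT power")
    return total_facility_kw / it_equipment_kw


# Hypothetical site: 1,200 kW drawn in total, 1,000 kW of it by IT equipment.
pue = power_usage_effectiveness(1200.0, 1000.0)
overhead_kw = 1200.0 - 1000.0  # power spent on cooling, UPS losses, lighting, etc.
print(f"PUE = {pue:.2f}, non-IT overhead = {overhead_kw:.0f} kW")
```

Because cooling is usually the largest share of that non-IT overhead, efficient cooling plant such as the systems described below is one of the main levers for pulling PUE towards 1.0.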

Having secured additional contracts worth £125 million over the next five years with a number of Fortune 500 companies, NGD has developed the capacity to respond rapidly, delivering the private and shared campus space required to fulfil the exacting needs of its world-class customers.

For this particular expansion project, NGD specified 114 data centre specific GE Hybrid Cooling Systems from leading manufacturer Stulz UK, plus a combination of 26 high performance horizontal and VEE air blast coolers and pump sets from industrial cooling technology specialist, Transtherm, to manage the inside air temperature of the new campus expansion.

Phil Smith, NGD’s Construction Director, comments on the company’s industry-leading build-out timescales:

“Responding to global market opportunities is an important part of data centre best practice standards and our 16-week build-out programme allows us to lead from the helm when it comes to meeting demand, on time.

“Completing a build of such scale and complexity within just four months requires more than 500 construction workers to be permanently on site. To keep things moving at the right pace, suppliers are required to adjust the design and build of their products in accordance with the build schedule and deliver them just in time for installation to prevent costly delays to NGD and our local contracting firms. The solution provided by Stulz and Transtherm is a great example of how data centres can work with trusted, reliable and dedicated supply chain partners.”

A three-part delivery solution

As long-term suppliers to NGD, both Stulz UK and Transtherm understood the importance of just-in-time deliveries, so that the new air conditioning system would not slow the build on site. With a usual lead time of eight weeks for its GE Hybrid technology, Stulz UK set about devising suitable production alterations that would enable it to deliver its equipment within NGD’s rapid build programme.

Mark Vojkovic, Sales Manager for Stulz UK, explains:

“As the units were specified for installation into the floor of the new campus expansion, we altered the manufacturing process of our GE Hybrid units so that we could deliver the technology in two halves. First to be delivered were the fan bases, which were installed onto their stands during the earlier stages of the build, just in time for the construction of the suspended floor. Later in the build programme, between weeks 10 and 12, Stulz UK delivered the upper coil sections of the air conditioning units and Transtherm delivered, installed and commissioned their equipment on the outside of the building.”

Tim Bound, Director at Transtherm, added:

“Supplying a data centre superpower like NGD requires a reliable and creative supply chain solution which can not only work in tandem to deliver the most efficient product packages, but also communicate effectively to deliver products from multiple manufacturing sites ‘just-in-time’ in order to maintain their industry leading build-out times. It’s vital on projects of this size that manufacturing partners can see the bigger picture and adjust their own project parameters to suit.

“In this instance, NGD had 500 construction workers on site each day, working to an industry leading deadline. It was imperative that Stulz UK and Transtherm were appreciative of the on-site complexities so that we could deliver and install our plant with minimal disruption.

“This project is a real testament to how Stulz UK and Transtherm can combine their technologies, engineering know-how and logistical capacity to deliver a substantial project, within potentially restrictive time and installation constraints.”

The technology in focus

The Stulz GE system uses outdoor air for free cooling in cooler months, when the outside ambient air temperature is below 20°C. Heat is transferred indirectly via a water-glycol solution, so outside air is never introduced into the data centre and its vapour seal integrity is maintained.

The indoor unit has two cooling components: a direct expansion (DX) cooling coil and a free cooling coil. In warmer months, when the external ambient temperature is above 20°C, the system operates as a water-cooled DX system, and the refrigeration compressor rejects heat into the water via a plate heat exchanger (PHX) condenser. The water is then pumped to the Transtherm air blast cooler, where it is cooled and the heat is rejected to the outside air.

When the external ambient temperature falls below 20°C, the system automatically switches to free-cooling mode: the dry cooler fans run and cool the water to approximately 5°C above ambient temperature before it is pumped through the free cooling coil. During these cooler months, depending on water temperature and/or heat load demands, the water can also be used in “Mixed Mode”, in which it is directed through two proportionally controlled valves so that free cooling and water-cooled DX cooling work together in proportion.
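The switching behaviour described above can be sketched as a simple decision function. This is a simplified model under stated assumptions, not Stulz's actual control logic: the 20°C changeover and the roughly 5°C approach come from the text, while the idea of comparing an available free-cooling capacity (in kW) against the heat load, and all function names, are hypothetical.

```python
from typing import Tuple

SWITCHOVER_C = 20.0  # changeover ambient temperature quoted in the article
APPROACH_C = 5.0     # free cooling brings the water to roughly 5°C above ambient


def free_cooling_water_temp(ambient_c: float) -> float:
    """Approximate water temperature leaving the dry cooler in free-cooling mode."""
    return ambient_c + APPROACH_C


def select_mode(ambient_c: float, free_cooling_kw: float, heat_load_kw: float) -> Tuple[str, float]:
    """Return (mode, share of the heat load met by free cooling).

    Hypothetical simplification:
    - at or above the switchover temperature the unit runs as water-cooled DX
      (the article does not say which mode applies at exactly 20°C);
    - below it, free cooling runs alone if it can absorb the whole heat load;
    - otherwise "Mixed Mode" lets free cooling take what it can while the
      DX circuit makes up the remainder.
    """
    if heat_load_kw <= 0:
        raise ValueError("heat load must be positive")
    if ambient_c >= SWITCHOVER_C or free_cooling_kw <= 0:
        return ("DX", 0.0)
    if free_cooling_kw >= heat_load_kw:
        return ("FREE", 1.0)
    return ("MIXED", free_cooling_kw / heat_load_kw)


# Hypothetical readings: (ambient °C, free-cooling capacity kW) against a 100 kW load.
for ambient, capacity in [(25.0, 0.0), (12.0, 150.0), (15.0, 60.0)]:
    print(ambient, free_cooling_water_temp(ambient), select_mode(ambient, capacity, 100.0))
```

Returning the free-cooling share rather than just a mode name mirrors the proportional valve control in Mixed Mode: the compressor only has to cover the part of the load that the outdoor air cannot.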

Crucially, 25% ethylene glycol is added to the water purely as an antifreeze, to prevent the dry cooler from freezing when the outdoor ambient temperature falls below 0°C.

A partnership approach

Stulz UK has specified Transtherm’s leading air blast cooling technology as part of its packaged air-conditioning solution for around 10 years.

Mark Vojkovic continues: “Transtherm’s air blast coolers and pump sets complete our data centre offering by fulfilling our requirement for outside plant which is efficient, reliable and manufactured to the highest standard. Their engineering knowledge is unparalleled and the attention to detail and design flexibility they apply to every project matches the service we always aspire to give our customers.”

Situated around the periphery of the building and on its gantries, Transtherm’s 26 VEE air blast coolers are fitted with ErP Directive-ready fans and deliver significantly lower noise levels than other market-leading alternatives, measured in accordance with BS EN 13487:2003.

Tim Bound concludes:

“Stulz UK and Transtherm are proud to be part of NGD’s continued expansion plans as they move at speed to meet market opportunities within their sector. This most recent expansion showcases the many benefits of established supply chain relationships, especially on campus build-outs which need to deliver unrivalled quality on the tightest deadlines.”

For more information on Transtherm Cooling Industries visit www.transtherm.co.uk and for further details on Stulz UK visit www.stulz.co.uk. Details about NGD can be found at www.nextgenerationdata.co.uk.

About STULZ

Since it was founded in 1947, the STULZ company has evolved into one of the world’s leading suppliers of air conditioning technology. With the manufacture of precision air conditioning units and chillers, the sale of air conditioning and humidifying systems and service and facility management, this division of the STULZ Group achieved sales of around 420 million euros in 2016. Since 1974 the Group has seen continual international expansion of its air conditioning business, specializing in air conditioning for data centers and telecommunications installations. STULZ employs 2,300 workers at ten production sites (two in Germany, in Italy, the U.S., two in China, Brazil, and India) and nineteen sales companies (in Germany, France, Italy, the United Kingdom, the Netherlands, Mexico, Austria, Belgium, New Zealand, Poland, Brazil, Spain, China, India, Indonesia, Singapore, South Africa, Australia, and the U.S.). The company also cooperates with sales and service partners in over 135 other countries, and therefore boasts an international network of air conditioning specialists. The STULZ Group employs around 6,700 people worldwide. Current annual sales are around 1,200 million euros.

For more information visit www.stulz.co.uk

For further press information please contact:

Debby Freeman on: +44 (0) 7778 923331

Every organisation faces an imperative to transform its operations and business model in order to compete in highly dynamic, digital business environments. For many, this transformation must be underpinned by robust information systems that can adequately support future growth. However, many corporate networks cannot support additional capacity; indeed, IDG research suggests that upgrading existing wide-area networks (WANs) remains a priority for CIOs and IT professionals. By Paul Ruelas, Director of Product Management at Masergy.

More and more companies are embracing Software-Defined WAN (SD-WAN) and as the digital evolution of business exponentially accelerates, there have been profound impacts on innovation and the role of the CIO. As decision-makers look to improve both the efficiency and effectiveness of their overall IT environment, it’s important to explore changes in IT delivery and the benefits that SD-WAN can bring.

Changing requirements

Many CIOs face increasingly complex connectivity challenges, including growing numbers of devices, locations and users. This can lead to mounting costs, as well as a need for improved security for employees, whether they work in geographically dispersed offices or remotely. Combined, these challenges illustrate the need to move beyond legacy WAN administration and cost structures and to consider the potential benefits of adopting an SD-WAN managed services offering.

With many employees adopting new working practices, today’s workforce has become increasingly reliant on innovative applications that use more data and require robust application response times and service levels. As new applications and workloads continue to evolve and drive digital business, they also continue to strain the WAN. It is not feasible to treat every workload or application as high priority on the network, which leaves network managers, administrators and IT staff carefully balancing service levels against costs.

As overall IT budgets flatline, CIOs are increasingly expected to do more with less, all without dropping the ball on existing infrastructure and investments that must run until the end of their contracts. Harnessing managed services partners with agile and scalable solution platforms is key to focusing scarce IT resources on the things that really differentiate the company. Additionally, SD-WAN can make technology more accessible, instantly programmable, and linkable through powerful integration tools. Hybrid networking and software-defined platforms help CIOs build a blended approach, augmenting legacy systems with the advantages of modern capabilities. SD-WAN provides far greater agility, as well as the ability to match network capabilities to each application’s needs. This can be done by introducing features such as application-based routing, which builds intelligence into the network so that it understands the various applications and their particular bandwidth requirements. SD-WAN also offers the following benefits to CIOs looking to meet evolving business needs and support digital transformation efforts:

Meeting key challenges

The pressures on the corporate WAN are increasing dramatically as organisations deploy more demanding applications and workloads on their digital transformation journey. IT professionals recognise that the existing WAN cannot meet these requirements, and so are looking to solutions such as SD-WAN, which offers the agility, control and efficiency essential for delivering a platform that can support a dynamic organisation.

With the rate of change so rapid, CIOs are bearing the brunt of the impact. To succeed, they should continue to focus on their core competencies. Using technologies such as SD-WAN can help build extreme responsiveness and agility into the business’s infrastructure. For instance, software-defined networking (SDN), the approach on which SD-WAN is built, can be used to create experimental environments quickly, making it easy to test new disruptive technologies and driving down time-to-value and time-to-market.
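The application-based routing described earlier in this article can be illustrated with a small sketch: each application declares the bandwidth and latency it needs, and the network picks a path that satisfies them. This is a minimal, hypothetical model, not Masergy's or any vendor's SD-WAN API; the link names, figures and the "cheapest eligible path" rule are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Link:
    name: str              # hypothetical WAN path, e.g. "mpls" or "broadband"
    available_mbps: float  # spare bandwidth currently measured on the path
    latency_ms: float      # measured round-trip latency
    cost_per_mbps: float   # relative cost, used to choose among eligible paths


@dataclass
class AppPolicy:
    app: str
    min_mbps: float        # bandwidth the application needs
    max_latency_ms: float  # latency above which the application degrades


def choose_link(policy: AppPolicy, links: List[Link]) -> Optional[Link]:
    """Pick the cheapest link that meets the application's requirements.

    If no path meets every requirement, fall back to the lowest-latency link;
    return None only when no links are available at all.
    """
    if not links:
        return None
    eligible = [
        link for link in links
        if link.available_mbps >= policy.min_mbps
        and link.latency_ms <= policy.max_latency_ms
    ]
    if eligible:
        return min(eligible, key=lambda link: link.cost_per_mbps)
    return min(links, key=lambda link: link.latency_ms)


links = [
    Link("mpls", available_mbps=50, latency_ms=20, cost_per_mbps=10.0),
    Link("broadband", available_mbps=500, latency_ms=45, cost_per_mbps=1.0),
]
voice = AppPolicy("voice", min_mbps=1, max_latency_ms=30)
backup = AppPolicy("backup", min_mbps=200, max_latency_ms=200)
print(choose_link(voice, links).name)   # latency-sensitive traffic stays on the low-latency path
print(choose_link(backup, links).name)  # bulk traffic moves to the cheaper, higher-capacity path
```

Choosing the cheapest path that still meets each policy reflects the balance between service levels and costs discussed above: only the traffic that genuinely needs premium capacity is placed on it.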

Energy costs, reliability and air conditioning are all important criteria for choosing an external data center. But today’s key considerations also include efficient process implementation and, above all, fast and secure access to global cloud and application providers. Private, public and hybrid cloud solutions demand a connection that is protected against hackers and offers fast response times. Colocation offers the ideal platform for this, enabling providers to act as a digital marketplace for the implementation of IT concepts. By Volker Ludwig, Senior Vice President Sales at e-shelter in Frankfurt.

Due to the increasing digitisation of our lives, the amount of data stored in data centers will continue to increase dramatically. According to IDC’s 2017 study, data volumes driven by Big Data and the Internet of Things will reach approximately 163 zettabytes (ZB) by 2025. For comparison, in 2016 only around one-tenth of that volume (16 ZB) was produced. The same IDC study also predicted that the creation of this data will increasingly shift to the enterprise sector. But many enterprise data centers will not grow to the same extent, and data centers must evolve and grow in line with this data growth.
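The two IDC figures above imply a compound annual growth rate, which can be checked with a few lines of arithmetic. The growth rate printed here is a derived figure calculated from the 16 ZB and 163 ZB values, not a number quoted from the IDC study; it works out at just under 30% a year.

```python
START_YEAR, START_ZB = 2016, 16.0  # data produced in 2016, as quoted above
END_YEAR, END_ZB = 2025, 163.0     # IDC forecast for 2025, as quoted above

years = END_YEAR - START_YEAR              # nine years of growth
growth_multiple = END_ZB / START_ZB        # roughly tenfold, hence "one-tenth"
cagr = growth_multiple ** (1 / years) - 1  # compound annual growth rate

print(f"{growth_multiple:.1f}x over {years} years = {cagr:.1%} per year")
```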

From colocation to the marketplace

Colocation today is about much more than just putting racks together; the classic colocation model is changing. Activities range from optimising the physical footprint to providing redundant power supplies and connectivity services. The increasing pressure on companies to provide their users with modern applications, some of which run independently in public clouds, presents CIOs with completely new challenges. In many cases, an unplanned multi-cloud approach has already developed to meet users’ needs, but this can be complex and uneconomical, especially when departments go rogue and start purchasing services from third-party providers without involving the IT team.