The full report of the recent DCS/DCA Data Centre Transformation (DCT) conference can be found elsewhere in this issue, but I think it’s worthwhile using my editorial comment to re(emphasise) the key message from the event: transformation is happening and will continue to happen, at an ever increasing pace, and there is coming a time when many, if not all, of the traditional assumptions made around the data centre and the IT infrastructure it houses and enables are going to be replaced by new technologies and ideas.

For end users, the question is a relatively simple one: Do I want to embrace the change and shape the impact that it will have on my organisation, or do I want to carry on doing what I’ve always done and cross my fingers that everything will be okay?

The hyperscalers and the large-scale web disruptors might be viewed by many as ‘the enemy’ – destroying the high street and many long-standing household names as they leverage technology to undercut companies tied to physical premises and the paying of rent and rates – but their innovative use of existing technologies and their pioneering use of new ones show the way forward for virtually all other businesses, over time.

Of course, not everything will end up in the virtual world – no, we will have yet another hybrid to add to the list – hybrid Cloud, hybrid IT, hybrid data centres…and, now, hybrid business. Physical premises and shops will continue to exist. Some workforces will need to have an office or factory from which to work, but an increasing amount will not; some businesses will continue to need physical showrooms and shops, more and more will not. Towns and cities large enough to be ‘destinations’ when it comes to shopping, will survive, if not thrive; those that do not have a compelling draw to customers, will see more and more empty premises.

Add to this national and regional variations – loyalty to local suppliers and businesses seems to be much more prevalent on mainland Europe, as opposed to the UK and the US – and the world of work and leisure is going to become a real hybrid mixture of the physical and the virtual (and quite literally the virtual when technology will allow us to sight see, play golf and do many other things all over the world from the comfort of our own homes.

There’s no guide to how, when, where or what when it comes to digital transformation, but it’s already here, its momentum is accelerating, and the data centre of, say, 10 years’ time, could look nothing like that of today. More likely, we’ll have some data centres that do look the same from the outside at least – but look radically different when you look under the covers – and joining the current, large scale, consolidated data centres will be all manner of data centre shapes, sizes and locations that are optimised for the applications and data processing tasks which they are required to undertake.

Digital transformation – threat or opportunity? It’s up to each business to decide!

The recent Data Centre Transformation (DCT) conference may not have generated quite as much excitement as England’s victory over Columbia in the World Cup, but it’s safe to say that, if ignorance and uncertainty were the event’s opposition, then DCT played them off the park without a hint of extra time and penalties!

If there was one key take away from the highly successful DCT conference, it had to be the growing recognition that data centre transformation is happening, is here to stay and those who wish to deny its existence – both vendors and end users – would do well to learn the lesson of King Canute, who managed to demonstrate to his subjects that, king he may be, but there was nothing he could do to prevent the tide coming in.

The scene was set courtesy of the Simon Campbell-Whyte Lecture, presented by Rudi Nizinkiewicz. Cyber security was his topic, and Rudi managed to bring a fresh perspective to the world of hacking, phishing, malware and the daily reports of data breaches, as he suggested that, without proper risk assessment, any IT security strategy was doomed to failure. A company needs to understand its potential vulnerabilities – and not just the obvious ones related to outside attacks. No, equally important is the knowledge of how the data centre and its contents might be vulnerable from changes and mistakes, or malicious attacks, from within an organisation.

Rudi gave the recent example of the TSB IT meltdown, where it would seem that the company had not fully understood the inter-relation of its IT infrastructure, hence the problems that occurred when a planned migration took place. And here he touched on the major reason why cyber security and disaster recovery needs to be taken so seriously – the damage it causes to a company. Yes, there’s damage to the company’s day to day business as the mess needs to be sorted out, but there’s also damage to a company’s reputation. What is the true cost of this?

For the cyber security ‘world weary’, Rudi’s take on the subject was fresh and challenging, especially as he was reluctant to concede that the current received wisdom – security breaches will happen, it’s how you manage them that matters – is dangerous, as the focus really should be on preventing any such incidents from taking place. Yes, managing/minimising the impact of a breach is important, but no matter how well this is done, there will be some damage to an organisation, so it has to be better to prevent cyber attacks wherever possible.

And it was also refreshing to hear that, like it or not, bringing in a bank of consultants to advise on cyber security strategy is not the answer. After all, who knows your business best – you, or random third parties?! So, prepare to roll up your sleeves and get that risk assessment project under way.

So, fresh thinking required for cyber security.

And, if delegates were struggling to comprehend the message of Rudi’s lecture, well John Laban’s keynote presentation on open source left them in no doubt that change is coming, like it or not. Open software is a familiar part of the IT landscape, but open hardware has received less traction to date. However, the Open Compute Project (OCP) seeks to change this and promises major disruption to an industry already ‘reeling’ from the move to Cloud and managed services. That’s to say, many hardware vendors are already having to re-invent themselves in terms of how and where they obtain their revenues, becoming less and less reliant on selling expensive ‘boxes’ as more and more end users sign up to Cloud and managed services. The momentum behind the OCP project suggests that not only will the IT hardware folks continue to suffer, but that many of the data centre hardware vendors could be similarly disrupted.

The OCP server design promises to do away with much of the traditional data centre infrastructure required to support it. It’s early days, but when one saw John’s slide of a traditional data centre layout – crammed full of the power and cooling required to support the servers, storage and switches – and then the following slide of the almost empty data centre required to support OCP servers – well, a picture really did paint a thousand words. Perhaps most tangibly, John explained how, using OCP servers, a Scandinavian company was working on a modular data centre design which has two rows of servers – running along each side of the container, tight to the walls – doubling the capacity of the traditional one row design that exists now.

Of course, there’s so much legacy kit out there that open hardware may not make a major impact in the data centre just yet, but there’s no doubting that its benefits will prove hard to resist over time.

The trio of keynote presentations was completed by Jon Summers, who gave a brilliant insight into how the world of the edge computing and edge data centres was likely to develop over time. Jon spoke of the ‘pervasive impact’ of edge – all that remains is for organisations to obtain a proper understanding of where edge fits into their overall IT and data centre infrastructure. In other words, where data is created, is processed, is at rest, is retrieved, where it is re-processed, where it is stored – all this needs to be understood. Only then can an organisation hope to put together an edge strategy that provides the optimum experience for the customer and the most efficient use of IT and data centre resources for the organisation.

The rest of the DCT conference was given over to a mixture of technology and issue focused workshops - subjects discussed included digital business, automation, digital skills, energy and hybrid data centres – and selected vendor presentations.

Cyber security, open compute and edge might be seen as obvious business disruptors. However, as the day progressed, it became increasingly clear that few, if any, data centre ‘components’ are not experiencing something of a transformation as they have to evolve to address the requirements of the emerging information technologies that are underpinning the digital age.

Take just one example – cooling. Right now, air-cooled data centres are the norm. However, in the HPC space, liquid cooling is widely accepted, as it is seen to offer greater efficiency, greater potential for heat recovery and the ability to cool high density racks that are becoming a feature of more and more data centres. The hybrid data centre workshop debated the possibility that air cooling and liquid cooling could co-exist in the data centre well into the future, but is liquid cooling the only game in town in the longer term, when we’re led to believe that HPC-type applications will become the norm?

In summary, the Data Centre Transformation conference delivered a brilliant, balanced agenda that suggested how the industry is going to have to change over the coming years, always mindful that that great ‘enemy’ to progress – legacy infrastructure – is not going away any time soon.

For most organisations, the transformation challenge is knowing how and when to let go of traditional technologies and ideas, replacing them with faster, smarter, more efficient solutions. Doing nothing is not an option. Not only does every industry sector have one or more digital disruptor – a new company that is not hampered by the ‘shackles’ of legacy infrastructure – but there’s every chance that even rival organisations with the same transformation dilemmas as your own are starting to plan for a digital future.

The prospect of so much change might be daunting, but, if the England team can learn how to (finally) win a penalty shoot-out, there must be hope for us all, whatever the challenges. That was certainly the view of the DCT delegates who enjoyed watching the match at the evening buffet/dinner!

Worldwide spending on the technologies and services that enable the digital transformation (DX) of business practices, products, and organizations is forecast to be more than $1.1 trillion in 2018, an increase of 16.8% over the $958 billion spent in 2017.

DX spending will be led by the discrete and process manufacturing industries, which will not only spend the most on DX solutions but also set the agenda for many DX priorities, programs, and use cases. In the newly expanded Worldwide Semiannual Digital Transformation Spending Guide, International Data Corporation (IDC) examines current and future spending levels for more than 130 DX use cases across 19 industries in eight geographic regions. The results provide new insights into where DX funding is being spent as well as what DX priorities are being pursued.

Discrete manufacturing and process manufacturing are expected to spend more than $333 billion combined on DX solutions in 2018. This represents nearly 30% of all DX spending worldwide this year. From a technology perspective, the largest categories of spending will be applications, connectivity services, and IT services as manufacturers build out their digital platforms to compete in the digital economy. The main objective and top spending priority of DX in both industries is smart manufacturing, which includes programs that focus on material optimization, smart asset management, and autonomic operations. IDC expects the two industries to invest more than $115 billion in smart manufacturing initiatives this year. Both industries will also invest heavily in innovation acceleration ($33 billion) and digital supply chain optimization ($28 billion).

Driven in part by investments from the manufacturing industries, smart manufacturing ($161 billion) and digital supply chain optimization ($101 billion) are the DX strategic priorities that will see the most spending in 2018. Other strategic priorities that will receive significant funding this year include digital grid, omni-experience engagement, omnichannel commerce, and innovation acceleration. The strategic priorities that are forecast to see the fastest spending growth over the 2016-2021 forecast period are omni-experience engagement (38.1% compound annual growth rate (CAGR)), financial and clinical risk management (31.8% CAGR), and smart construction (25.4% CAGR).

"Some of the strategic priority areas with lower levels of spending this year include building cognitive capabilities, data-driven services and benefits, operationalizing data and information, and digital trust and stewardship," said Research Manager Craig Simpson, of IDC's Customer Insights & Analysis Group. "This suggests that many organizations are still in the early stages of their DX journey, internally focused on improving existing processes and efficiency. As they move into the later stages of development, we expect to see these priorities, and spending, shift toward the use of digital information to further improve operations and to create new products and services."

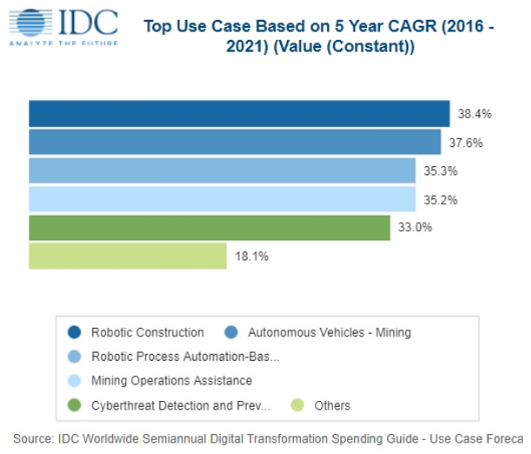

To achieve its DX strategic priorities, every business will develop programs that represent a long-term plan of action toward these goals. The DX programs that will receive the most funding in 2018 are digital supply chain and logistics automation ($93 billion) and smart asset management ($91 billion), followed by predictive grid and manufacturing operations (each more than $40 billion). The programs that IDC expects will see the most spending growth over the five-year forecast are construction operations (38.4% CAGR), connected automated vehicles (37.6% CAGR), and clinical outcomes management (30.7% CAGR).

Each strategic priority includes a number of programs which are then comprised of use cases. These use cases are discretely funded efforts that support a program objective, and the overall strategic goals of an organization.. Use cases can be thought of as specific projects that employ line-of-business and IT resources, including hardware, software, and IT services. The use cases that will receive the most funding this year include freight management ($56 billion), robotic manufacturing ($43 billion), asset instrumentation ($43 billion), and autonomic operations ($35 billion). The use cases that will see the fastest spending growth over the forecast period include robotic construction (38.4% CAGR), autonomous vehicles – mining (37.6% CAGR), and robotic process automation-based claims processing (35.5% CAGR) within the insurance industry.

"While the influence of the manufacturing industries is apparent in the program and use case spending, it's clear that other industries, such as retail and construction, will also be spending aggressively to meet their own DX objectives," said Eileen Smith, program director, Customer Insights & Analysis. "In the construction industry, DX spending is expected to grow at a compound annual rate of 31.4% while retail, the third largest industry overall, is forecast to grow its DX spending at a faster pace (20.2% CAGR) than overall DX spending (18.1% CAGR)."

Cloud migration remains a ‘critical’ or ‘high priority’ business initiative for most business leaders.

Businesses have big ambitions for the cloud to transform their agility and reduce overall business expenditure, but unrealistic cost estimates and unforeseen complexities are still dramatically increasing the time-to-value of cloud migration programmes. Only 28 percent of businesses say the cloud has been comprehensively embedded across their organisation.

A June 2018 study, Maintaining Momentum: Cloud Migration Learnings, commissioned by Rackspace and conducted by Forrester Consulting, describes the ongoing organisational focus on moving applications and data to the cloud. 71 percent of business and IT decision makers across the UK, France, Germany and the U.S. report that they are now more than two years into their cloud journey, with the migration of existing workloads into a public or private cloud environment remaining a ‘critical’ or ‘high priority’ business initiative for 81 percent of business leaders over the next 12 months.

Predicting cost

Half of business and IT decision makers (50 percent) identify significant cost reduction as their main driver for cloud adoption. However, several years in, 40 percent of businesses stated that their cloud migration costs were still higher than expected.

The biggest disparity uncovered was around upgrading, rationalising and/or replacing legacy business apps and systems, with 60 percent of respondents identifying costs as higher than expected.

Execution complexity

The survey also found that businesses massively underestimated the greater task at hand, encountering a number of both technical and non-technical internal barriers.

During the planning and executing stages, data issues around capture, cleaning, and governance; workload management in the cloud; and vision and strategy for the transformation were the most commonly cited challenges (40 percent, 34 percent and 31 percent respectively).

Post-migration, respondents highlighted a lack of adequate user training; cultural resistance to cloud migration; and inadequate change management programmes as their greatest challenges (44 percent, 37 percent and 36 percent respectively).

Delivering on the vision

Companies need a clear strategy that connects both business and IT to minimise cloud migration challenges and accelerate the realisation of benefits, with a lack of a strategic vision cited as an issue before and during migration to cloud by 58 percent of respondents.

‘Going it alone’ also increases the scale of the challenge. 78 percent of businesses already recognise the role of service partners in helping them reshape operating models to support their cloud adoption strategy. And when considering what they’d do differently, half (51 percent) of respondents said they would hire experienced cloud experts to help with migration projects, with 41 percent recognising the need to increase the assistance they get from advisory and consulting services.

Commenting on the findings Adam Evans, director of Professional Services at Rackspace said: “Cloud is the engine of digital transformation and a critical enablement factor for innovation, cost reduction and CX initiatives. But while most organisations we meet have started on their cloud journey, I would say the majority did not expect the scale of the ongoing challenge.”

“As a business generation, we are getting faster at new technology adoption, but we still seem to stumble when it comes to understanding the requirements (and limitations) of the business consuming it. Introducing new cloud-based operating practices across an entire organisation is rarely straightforward, as with anything involving people, processes and their relationship with technology. Managing the gap between expectation and reality plays a huge role in programme success, so it’s imperative that organisations start with an accurate perspective on their maturity, capability and mindset. Only then can we start to forecast cost and complexity reliably.”

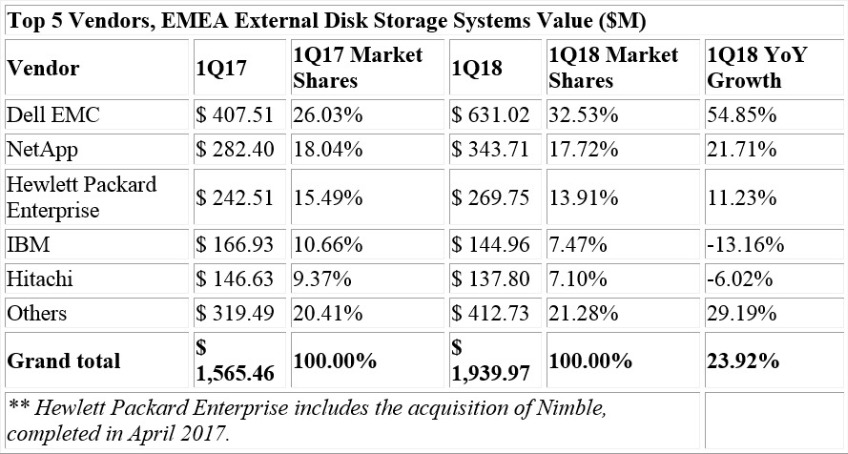

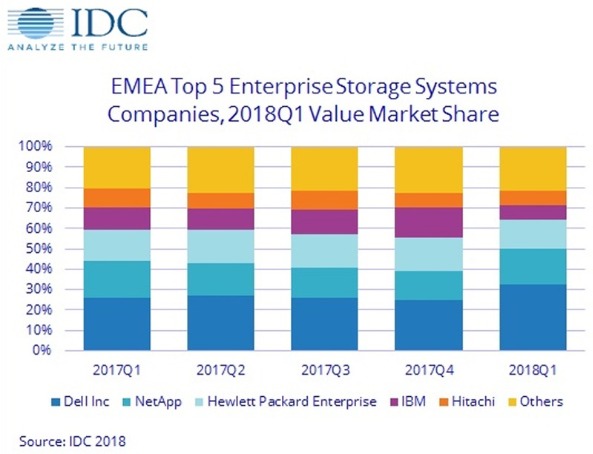

The total EMEA external storage systems value increased almost by 24% in dollars in the first quarter of 2018 (+7.4% in euros), according to International Data Corporation's (IDC) EMEA Quarterly Disk Storage Systems Tracker, 1Q18.

The all-flash-array (AFA) market value recorded high double-digit growth in dollars (58.7%), accounting for 32.7% of overall external storage sales in the region, with most gains recorded in the CEMA subregion. Hybrid arrays, in turn, came close to representing half of total external storage shipments (49.1%). The growth in flash-powered arrays came at the expense of HDD-only systems, which recorded yet another double-digit decline (-24.9%).

"The EMEA external storage market showed remarkable growth in 1Q18, aided by a favorable exchange rate and returning growth for some major players," said Silvia Cosso, research manager, European Storage and Datacenter Research, IDC. "Digital transformation (DX), alongside infrastructure optimization, is the main driver pushing investments in the region. As AFA penetration in the average EMEA datacenter is still low, and end-users have just started dipping their toes into IoT and AI-related projects, we expect further growth in the emerging segments of the datacenter infrastructure market, although with an increasing share of this being captured by public cloud deployments."

(For further research into the workload evolution in the Enterprise Storage market, please refer to IDC's Worldwide Semiannual Enterprise Storage Systems Tracker: Workload).

Western Europe

The Western European market grew 23.9% in U.S. dollar terms, and by 7.4% in euro terms. All flash arrays remained stable at a third of total shipments, with a year-on-year increase well exceeding 50%

The big news of this quarter was a U.K. market returning to growth after 14 consecutive quarters of decline or zero growth in U.S. dollar terms. An unfavorable exchange rate and difficult macroeconomic conditions reflected in stalling investments have been affecting the U.K. market, but DX is finally bringing some investment back.

Meanwhile, the German and French markets continued to show strong growth on the back of higher business confidence at the beginning of the year.

Central and Eastern Europe, the Middle East, and Africa

Alongside Western Europe, the external storage market in Central and Eastern Europe, Middle East and Africa (CEMA) reached 24.1% YoY growth ($423.2 million) triggered by a solid double-digit value boost in both subregions, CEE and MEA.

Both flash-based storage arrays (flash and hybrid) recorded sizeable growth. However, AFA gained 8% share increase compared to a year ago, to account for 30% of total market value, thanks to investments shifting from HFA to AFA, especially in the CEE region. Datacenter upgrades to AFA solutions were a major focus of most storage vendors.

As predicted by IDC, CEMA's positive performance was visible across most big countries, resulting from major verticals investing in datacenter preparation for 3rd platform workloads and technologies. Although emerging, projects related to digital transformation were increasingly present on end users' agendas. As a result, the high-end segment exploded with three-digit YoY growth and the midrange segment stabilized to reclaim 60% of the market.

"As long as the macroeconomic framework does not endure any major changes, the external storage market potential in the region will continue to be visible in the near future," said Marina Kostova, research manager, Storage Systems, IDC CEMA. "Vendors leveraging a regional strategy to address digital transformation efforts and changing consumption models of the market with initial datacenter investments from a growing number of smaller companies, are poised to be more successful."

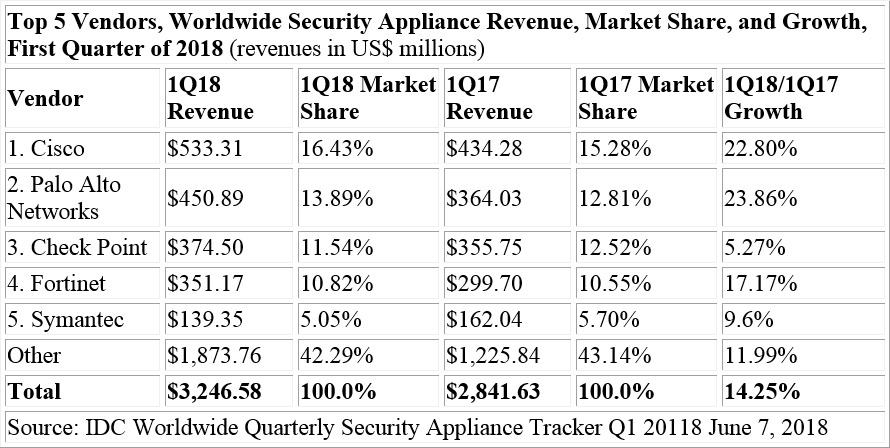

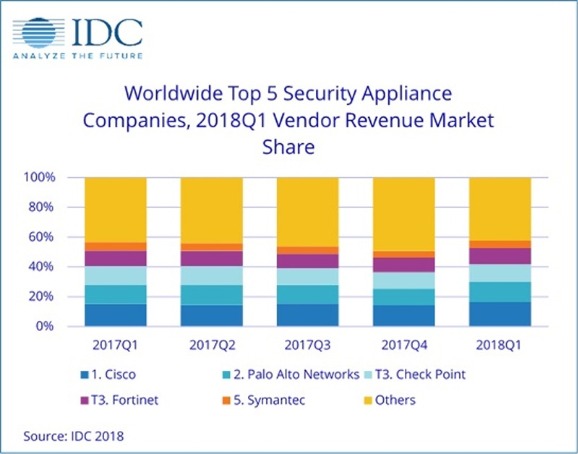

According to the International Data Corporation (IDC) Worldwide Quarterly Security Appliance Tracker, the total security appliance market saw positive growth in both vendor revenue and unit shipments for the first quarter of 2018 (1Q18). Worldwide vendor revenues in the first quarter increased 14.3% year over year to $3.3 billion and shipments grew 18.9% year over year to 838,098 units.

The trend for growth in the worldwide market driven by the Unified Threat Management (UTM) sub-market continues, with UTM reaching record-high revenues of $2.1 billion in 1Q18 and year-over-year growth of 16.1%, the highest growth among all sub-markets. The UTM market now represents more than 53% of worldwide revenues in the security appliance market. The Firewall and Content Management sub-markets also had positive year-over-year revenue growth in 1Q18 with gains of 17.4% and 7.5%, respectively. The Intrusion Detection and Prevention and Virtual Private Network (VPN) sub-markets experienced weakening revenues in the quarter with year-over-year declines of 13.0% and 3.0%, respectively.

Regional Highlights

The United States delivered 42.3% of the worldwide security appliance market revenue and was the major driver for spending in Q1 2018 with 16.7% year-over-year growth. Asia/Pacific (excluding Japan) (APeJ) had the strongest year-over-year revenue growth in 1Q18 at 15.9% and captured 21.0% revenue market share. The more mature regions of the world – the United States and Europe, the Middle East and Africa (EMEA) – combined to provide nearly two thirds of the global security appliance market revenue. Both regions had positive growth in the single-digit range. EMEA saw an annual increase of 11.6%.

Asia/Pacific (including Japan)(APJ) and the Americas (Canada, Latin America, and the U.S.) experienced year-over-year growth of 13.1% and 16.3%, respectively.

"The first quarter of 2018 exhibited strong growth for network security due to consistent double-digit growth across nearly every region and continued momentum from UTM as vendors reported $240.6 million more in revenue for 1Q18 than in 1Q17. Firewall and UTM are the strongest areas of growth as network refreshes drive perimeter security refreshes and as vendors add new features and improve performance across all product lines," said Robert Ayoub, program director, Security Products.

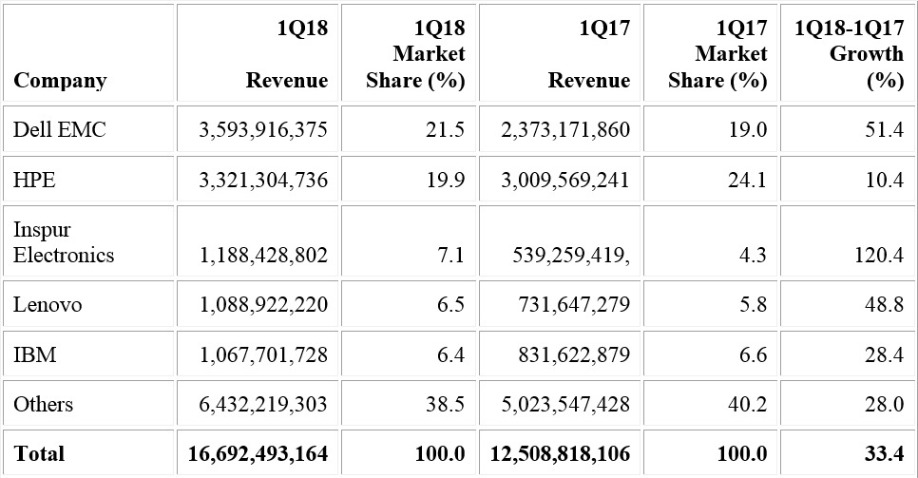

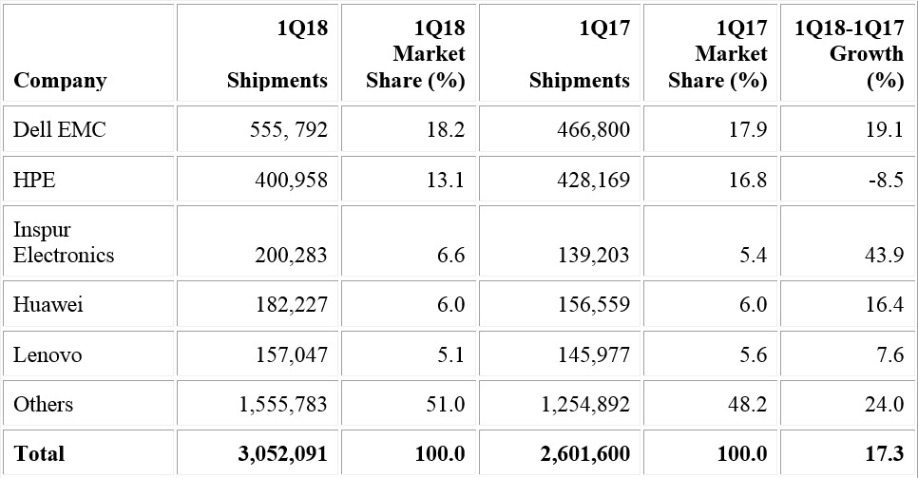

The growth at the end of 2017 continued for the worldwide server market in the first quarter of 2018 as worldwide server revenue increased 33.4 percent and shipments grew 17.3 percent year over year, according to Gartner, Inc.

"The server market was driven by increases in spending by hyperscale as well as enterprise and midsize data centers. Enterprises and midsize businesses are in the process of investing in their on-premises and colocation infrastructure to support server replacements and growth requirements even as they continue to invest in public cloud solutions," said Jeffrey Hewitt, research vice president at Gartner. "Additionally, when it came to server average selling prices (ASP) increases for the quarter, one driver was the fact that DRAM prices increased due to constrained supplies."

Regional results were mixed. North America and Asia/Pacific experienced particularly strong growth double-digit growth in revenue (34 percent and 47.8 percent, respectively). In terms of shipments, North America grew 24.3 percent and Asia/Pacific grew 21.9 percent. EMEA posted strong yearly revenue growth of 32.1 percent while shipments increased 2.7 percent. Japan experienced a decline in both shipments and revenue (-5.0 percent and -7.3 percent, respectively). Latin America experienced a decline in shipments (-1.8 percent), but growth in revenue (19.2 percent).

Dell EMC experienced 51.4 growth in the worldwide server market based on revenue in the first quarter of 2018 (see Table 1). This growth helped widen the gap a bit between Dell EMC and Hewlett Packard Enterprise (HPE) as Dell EMC ended the quarter in the No. 1 spot with 21.5 percent market share, followed closely by HPE with 19.9 percent of the market. Inspur Electronics experienced the strongest growth in the first quarter of 2018 with 120.4 percent growth.

Table 1 - Worldwide: Server Vendor Revenue Estimates, 1Q18 (U.S. Dollars) - Source: Gartner (June 2018)

In server shipments, Dell EMC maintained the No. 1 position in the first quarter of 2018 with 18.2 percent market share (see Table 2). Despite a decline of 8.5 percent in server shipments, HPE secured the second spot with 13.1 percent of the market.

Table 2 - Worldwide: Server Vendor Shipments Estimates, 1Q18 (Units) - Source: Gartner (June 2018)

The x86 server market increased in revenue by 35.7 percent, and shipments were up 17.5 percent in the first quarter of 2018. The RISC/Itanium UNIX market continued to struggle with shipments down 52.8 percent, while revenue declined 46.7 percent.

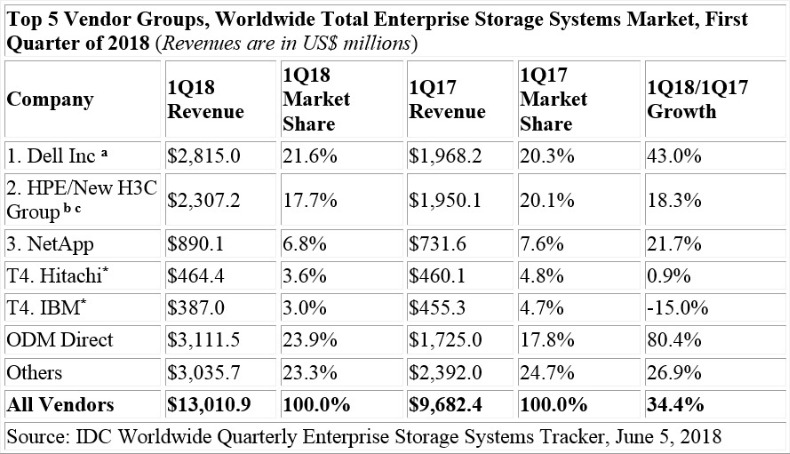

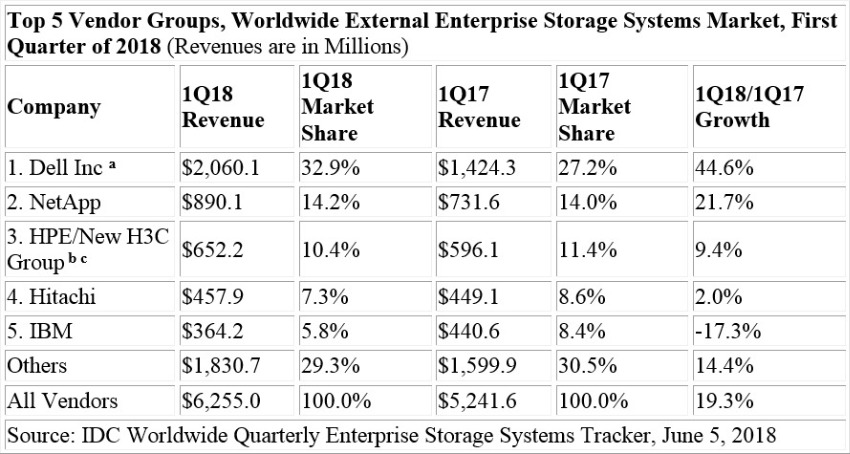

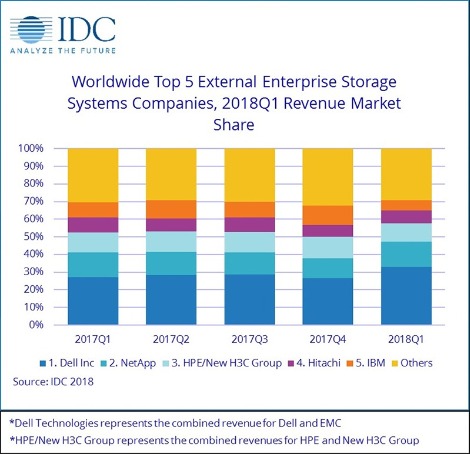

The total worldwide enterprise storage systems factory revenue grew 34.4% year over year during the first quarter of 2018 (1Q18) to $13.0 billion, according to the International Data Corporation (IDC) Worldwide Quarterly Enterprise Storage Systems Tracker. Total capacity shipments were up 79.1% year over year to 98.8 exabytes during the quarter.

Revenue generated by the group of original design manufacturers (ODMs) selling directly to hyperscale datacenters increased 80.4% year over year in 1Q18 to $3.1 billion. This represented 23.9% of total enterprise storage investments during the quarter. Sales of server-based storage increased 34.2% year over year, to $3.6 billion in revenue. This represented 28.0% of total enterprise storage investments. The external storage systems market was worth $6.3 billion during the quarter, up 19.3% from 1Q17.

"This was a quarter of exceptional growth that can be attributed to multiple factors," said Eric Sheppard, research vice president, Server and Storage Infrastructure. "Demand for public cloud resources and a global enterprise infrastructure refresh were two important drivers of new enterprise storage investments around the world. Solutions most commonly sought after in today's enterprise storage systems are those that help drive new levels of datacenter efficiency, operational simplicity, and comprehensive support for next generation workloads."

1Q18 Total Enterprise Storage Systems Market Results, by Company

Dell Inc was the largest supplier for the quarter, accounting for 21.6% of total worldwide enterprise storage systems revenue and growing 43.0% over 1Q17. HPE/New H3C Group was the second largest supplier with 17.7% share of revenue. This represented 18.3% growth over 1Q17. NetApp generated 6.8% of total revenue, making it the third largest vendor during the quarter. This represented 21.7% growth over 1Q17. Hitachi and IBM were statistically tied* as the fourth largest suppliers with 3.6% and 3.0% respective share of revenue during the quarter. As a single group, storage systems sales by original design manufacturers (ODMs) directly to hyperscale datacenter customers accounted for 23.9% of global spending during the quarter, up 80.4% over 1Q17.

Notes:

a – Dell Inc represents the combined revenues for Dell and EMC.

b – Due to the existing joint venture between HPE and the New H3C Group, IDC will be reporting market share on a global level for HPE as "HPE/New H3C Group" starting from 2Q 2016 and going forward.

c – HPE/New H3C Group includes the acquisition of Nimble, completed in April 2017.

* – IDC declares a statistical tie in the worldwide enterprise storage systems market when there is one percent difference or less in the revenue share of two or more vendors.

1Q18 External Enterprise Storage Systems Results, by Company

Dell Inc was the largest external enterprise storage systems supplier during the quarter, accounting for 32.9% of worldwide revenues. NetApp finished in the number 2 position with 14.2% share of revenue during the quarter. HPE/New H3C Group was the third largest with 10.4% share of revenue. Hitachi and IBM rounded out the top 5 with 7.3% and 5.8% market share, respectively.

Notes:

a – Dell Inc represents the combined revenues for Dell and EMC.

b – Due to the existing joint venture between HPE and the New H3C Group, IDC will be reporting external market share on a global level for HPE as "HPE/New H3C Group" starting from 2Q 2016 and going forward.

c – HPE/New H3C Group includes the acquisition of Nimble, completed in April 2017

Flash-Based Storage Systems Highlights

The total All Flash Array (AFA) market generated $2.1 billion in revenue during the quarter, up 54.7% year over year. The Hybrid Flash Array (HFA) market was worth $2.5 billion in revenue, up 23.8% from 1Q17.

By 2020, 92 percent of European companies will have deployed Robotic Process Automation (RPA) to streamline business processes.

Advanced business use of Robotic Process Automation (RPA) is expected to double in Europe by 2020, as companies seek to improve customer experience and streamline their finance operations, according to new research from Information Services Group (ISG), a leading global technology research and advisory firm.

ISG, in partnership with RPA software provider Automation Anywhere, surveyed more than 500 European business leaders to assess their adoption of RPA technology and services. The research reveals the percentage of European companies expecting to be at the advanced stage of RPA use will double by 2020, while fewer than 10 percent will not yet have started their RPA journey.

While Europe has been slower to adopt technologies like automation than other markets, RPA is moving into the mainstream, with 92 percent of respondents saying they anticipate using RPA by 2020, and 54 percent saying they will reach the advanced stage of adoption by then, up from 27 percent currently.

ISG’s research reveals RPA budgets in Europe increased on average by 9 percent in the last year, significantly ahead of the average increase for general IT spending. Of those companies that posted an increase, 25 percent saw a double-digit increase. Third parties, such as consultants and service providers, make up more than half of this budget.

Increasingly, improving the quality, speed and efficiency of client-facing and finance functions are becoming top priorities for corporate automation buyers. Over the next 24 months, respondents say RPA is expected to have the greatest impact on customer service and order-processing functions (43 percent), closely followed by finance, treasury and audit (42 percent); procurement, logistics and supply chain (40 percent), and sales and marketing (38 percent).

Adoption of RPA is in its initial stages in Europe, with three-quarters of respondents saying they are in either the early phase (up to pilot testing) or intermediate phase (automating fewer than 10 business processes). By 2020, however, nearly three-quarters expect to be in the intermediate to advanced phase (automating 10 or more processes).

Among Europe’s largest markets, 60 percent of German companies expect to be in the advanced stage of deployment by 2020 (versus 32 percent today), following by 50 percent in France (up from 22 percent) and 46 percent in the UK (up from 23 percent).

However, barriers to adoption remain. Security is a key concern, with 42 percent of businesses citing this as an obstacle to expanding their RPA use. Lack of budget and resistance to change were both cited by 33 percent, followed by concerns over governance and compliance (29 percent), lack of IT support (28 percent) and lack of executive commitment (27 percent).

Commenting on the findings, Andreas Lueth, partner at ISG, said: “Robotic Process Automation is delivering improved outcomes for enterprises across Europe and our research shows many more businesses will be taking advantage of the technology by 2020 as adoption accelerates. The increasing prominence of RPA in organizations is borne out in the fact that many businesses are now choosing to appoint Heads of Automation – a role that has appeared only in the past two years.

“This technology has the potential to revolutionize customer service and back-office functions alike, but organizations should be wary of falling into the RPA trap. The decision to deploy RPA should be treated as a strategic business initiative, with defined objectives and measures. Without this, the chance of failure is high.”

James Dening, vice president, Europe at Automation Anywhere adds: “European enterprises are at an exciting juncture with respect to RPA. If implemented properly, over the next few years RPA technologies will help deliver significant value for businesses across a range of European enterprises and industries, ensuring growth in productivity, efficiency and output, and helping these firms and industries stay competitive at a local, regional and global level.”

European Union legislation requires the phased reduction of refrigerants that have considerable greenhouse effects by 2030. Such refrigerants are widely used in water chillers and direct expansion (DX) air-conditioning systems used across industries, including datacenters. The current legislation took effect in 2015, but it wasn't until 2018 that the total available supply saw a great cut, by about one-third, according to the phaseout plan. By Daniel Bizo, Senior Analyst, Datacenters and Critical Infrastructure, 451 Group.

As a direct result of this, refrigerant prices have been rising rapidly since 2017, and now resellers are increasing quotes by 10-20% every month on already high prices as the market races to find a new equilibrium. Costs are being passed on to end users and becoming material to datacenter design and equipment sourcing decisions. The next phase takes effect in 2021, when another 30% of the supply will be cut. The HVAC-industry (heating, ventilation and air-conditioning) has started rolling out new chillers and developed new refrigerants in response. Those that build datacenters optimized for free-cooling and use mechanical refrigeration for trimming peaks are less affected, while those with more traditional cooling regimes, particularly around computer room air-conditioners, might reconsider their equipment choices.

The 451 Take

Investigating refrigerant options and how it might affect cooling design is an unlikely item on the task lists of datacenter engineers and managers. The last time such a change took place was over 20 years ago, when the production of ozone-depleting refrigerants was banned. But the picture today couldn't be more different – datacenter facilities have spread in numbers, and grown in size and importance. In reflection of all this, datacenters have become a major expenditure. On top of all that, environmental charities and pressure groups closely scrutinize major datacenter operators. Skyrocketing costs and looming hard shortages are inescapable and will demand the attention of datacenter owners for prudent reasons, but they also present an opportunity to speed up the shift toward more environmentally sustainable operations.

Some refrigerants are just not cool anymore Having eliminated virtually all sales of ozone-depleting gases (and equipment that makes use of them) ahead of its commitments made under the Montreal Protocol, the European Union turned its attention to leading the charge against substances with strong greenhouse effects, or global warming potential (GWP) in climate research and regulatory parlance. These are hydrofluorocarbons (HFC) – generally referred to as F-gases. European regulation of F-gases has been in place since 2007, but the EU adopted a new and much more stringent regulation in 2014 to limit emissions. Ambitiously, the new legislation has set out a plan that will reduce the sale of F-gases in the EU to one-fifth of the baseline (Fgas sales are measured by their carbon dioxide equivalence) by 2030, set at the average from 2009-2012. Only chemical processing, military applications and semiconductor manufacturing are exempt from the phase-down.

As a result, F-gas emissions in the European Union will be cut to one-third of 2014 levels by the end of the next decade. The EU, the world's largest trading bloc, adopted its accelerated HFC phase-down legislation ahead of the Kigali Amendment to the Montreal Protocol, a global agreement to cut HFC consumption by 80% in 30 years, which comes into force in 2019.

The first phase of cuts in the EU came into effect in 2016, yet at 7% (of total supply) it had marginal effect on the overall supply-demand picture. Come 2018, however, the total available supply was cut to 63% of the baseline. The next cut, due in 2021 will see it reduced to 45% of baseline, and 31% by 2024. Anyone producing or importing F-gases needs to apply for quota. Prices have been escalating quickly, with double-digit monthly adjustments by resellers as hard shortages of key refrigerants, such as R134a, which is the predominant choice for datacenter cooling systems, hit the market – supplies are going to the highest bidder. Another contributing factor may have been increases in production costs of key F-gases driven by price surges of key ingredients in 2017. The net effect today is a tenfold jump compared with just a couple of years ago (1,000%).

Vendors say the shortage-driven price of R134a is already becoming material to end users. In cases where large amounts of refrigerant are needed to charge the system, datacenter customers will see as much as a 5% cost increase on capital costs for cooling, up from a negligible amount. This is only the start. Even if markets find a new equilibrium, it will be short-lived with new phases coming into effect every three years. Anticipation of shortages, much like in 2017, will also likely bring demand ahead as many participants will attempt to build stockpiles, making shortages and price increases even more pronounced elsewhere. Vendors expect the issue of refrigerants (both the cost and environmental sustainability) to speed up the shift toward extended temperature ranges in the data hall, as well as systems that are optimized for maximized free cooling hours (time during which no mechanical refrigeration is employed) and rely on mechanical refrigeration for only part of the load. Prime examples are indirect evaporative air handlers and economized free cooling chillers.

Ultimately, the HVAC industry (heating, ventilation and air-conditioning) will shift away from F-gases to substances with much lower GWP – it has no other choice. There are multiple options being explored, some of which are ready for commercial application. Still, questions remain about their cost performance and, more importantly, long-term sustainability. While some alternative refrigerants offer low GWP, which makes them ideal for environmental sustainability, either cooling performance or safety properties make them less than ideal for application in existing cooling systems. Ammonia, for example, is a high-performance refrigerant that also occurs naturally and has a zero GWP. Yet, unlike R134a, which is an inert gas, it is flammable, toxic and (with moisture present) corrosive to some metals (such as copper). This makes it unattractive for many applications, such as computer room air-conditioning (CRAC), where it puts both people and potentially mission-critical IT infrastructure at risk.

Count to four, a new compound is at the door Fortunately, there are much more suitable compounds available. The most promising one for datacenter-type applications is R1234ze, a so-called fourth-generation refrigerant with zero ozone depletion and negligible global warming potential. As a hydrofluoroolefin (HFO) compound, however, it is classified as mildly flammable by climatic industry body ASHRAE (American Society of Heating, Refrigerating and Air-Conditioning Engineers). Even though it is difficult to ignite (lots of energy required), this will require some changes to the design of cooling and fire-suppression systems, as well as to the operational procedures around storing it and maintaining equipment that uses the gas, particularly in a closed environment such as a computer room.

Another issue is performance: R1234ze has lower cooling capacity relative to R134a, which means it can absorb less thermal energy for a given volume. This means it is not ideal as a drop-in replacement in existing cooling systems unless a drop-in cooling capacity is acceptable, alongside marginal fire risks, for the operator – for example, enterprise sites with low utilization. But changes required to support R1234ze are relatively minor, and chiller-manufacturers have already started rolling out new products that accept this environmentally sustainable refrigerant with a new compressor option. The cost of adoption is not negligible: peak cooling capacity is about 10-25% lower for a similar configuration (same frame size, number of compressors, number of fans, etc.). This means more expensively configured and possibly larger (or more) chiller units for a given IT load where R1234ze is used.

An intermediate option is to blend the currently established R134a and one of the new refrigerants in various mixes. Such cocktails (e.g., R450A, R513A) offer very similar performance to R134a in datacenter applications and lower greenhouse effects by about 50-70%, depending on the mix. Loss of cooling capacity and efficiency is marginal, so it should not be of concern unless the datacenter site is already running at design capacity.

It is for these features that the industry widely considers such blends as drop-in replacements to R134a to be a cost-effective measure to increase environmental sustainability while addressing the supply issues of pure R134a. These blends will give datacenter operators more time to weigh their options and plan for upgrading equipment. Long term, 451 Research expects these blends to give way to R1234ze and other substances with very low GWP.

By Steve Hone, CEO The DCA

Ever tried fishing and wondered why the guy next to you always seemed to catch not only more but catches the biggest fish, while your net remains woefully empty? Rather than struggling on alone, if you took the time to ask you would probably find it was a simple case of having a better strategy, better position and / or better bait.

Speak to any business owner irrespective of the sector and you will quickly discover you are not alone when it comes to the challenge of identifying the right people needed to help drive your businesses forward. Finding the right skills, at the right time and at the right price can be a real uphill battle. This is even more evident when you are working at the leading edge of technology such as those relating to the resources needed within the data centre sector.

Workshops and other events I have recently attended had an increased focus on the issue of gender diversity within the data centre space. There is no doubt that our sector is currently very male dominated, and the data centre trade association is working with its fellow strategic partners to address this issue.

For the DCA, the issue of gender diversity forms part of a far larger ͞Sustainability Initiative͟ which is equally addressing both the challenges we face with a looming labour shortage and the challenges of ensuring that this new labour force has the skill set required to hit the ground running.

With such growth forecasts and career opportunities on offer you may well be lulled into thinking this should be an easy task to solve. If only it were that simple!As previously mentioned we are not alone in this challenge, and the competition we face is fierce. Almost every sector is reporting the same challenges and concerns and there lies the issue. We face far stiffer competition from other industries who are all equally looking for the same sort of skill sets as us, and quite frankly they are doing a far better job at attracting the attention of those we desperately need in the DC Sector.

To attract the right people the DC Sector needs to not only do a better job promoting the data centre sector from an awareness perspective but also to ensure that students studying Mechanical Engineering, Electrical Engineering, Computer Science etc., are able to accessspecialist modules that specifically focus on the Data Centre sector.

Awareness and accessibility to qualifications go hand in hand if we are to stand any chance of fixing this growing issue. The data centre sector continues to flourish creating opportunities not just within the data centre itself but also the supply chain of manufacturers, installers and consultants which under pin it. This in turn creates job opportunities not just for graduates but equally students studying for A Levels, BTEC’s, and similar technical qualifications at UTC’s, Technical Colleges etc.

All of these students need to be made aware of the fantastic opportunities which exist within the Data Centre sector and to be given the tools to enable them to select our industry as their chosen career path. The Data Centre Trade Association continues to work with Industry leaders such as CNet Training, the Academic Community and Policy Makers to raise the profile of the Data Centre Sector and we would welcome your collaborative support.

In summary, it is vital that as a sector we work closely together to ensure we have the right strategy, the right story and the right bait to increase our chances of attracting the prize of new talent into our net rather than into everyone else’s.

Many thanks to those supporting members who summitted articles for this months Journal edition. Upcoming themes include Research & Development in September with a deadline of 14th August 2018 and Smartcities, Big Data, Cloud, IoT and AI coming up in October with a deadline of 12th September 2018. If you would like to submit content, please contact Amanda McFarlane –amandam@dca-global.org / 0845 873 4587.

By Steve Hone, CEO The DCA

By Dr Terri Simpkin Higher and Further Education Principal at CNet Training

Skills and labour shortages are an often cited and lamented data centre sector issue. The topic is examined, dissected and argued at events, workshops and meetings all over the globe. More often than not the usual suggested responses are trotted out and people nod in agreement and the lack of advancement of the topic is bemoaned.

Getting into schools, raising the profile as an employer and getting universities on board with specific education programs are all repeated "go-to" resolutions. And while these are clearly common sense suggestions there are some fundamental platform issues that make it more difficult than it appears at face value. If it were less complex, we (and many other sectors facing the same issues) would have the problem resolved by now.

One of the most obvious responses to lack of talent, too, is the lack of women in the data centre sector. It really is an obvious resolution to a highly observable and problematic lack of diversity. However, as with the other fundamental capability and talent concerns being faced by our sector, getting more women into our organisations is not quite as straightforward as putting out the welcome mat and inviting them to participate.

Why diversity matters

The business case for more inclusive workplaces has been made for decades. Organisations such as the World Bank, The World Trade Organisation and the United Nations1 for example, have all published robust arguments as to why getting more women into the workforce, to meet their potential for economic contribution, is good business. The World Economic Forum suggests that at current rates of improvement (using that word in a very loose fashion!) the gender gap in regard to parity of access to privileges such as pay and rewards, access to economic participation, political participation and education, will take 217 years to achieve2.

A raft of research, both empirical and industry based tells a story of greater innovation, better decision-making, greater efficiency and improved profitability where a diverse workforce and board representation is present3. As a business case, it's a bit of a "no-brainer" and one that is intuitively self-evident.

Despite the compelling arguments put forth, getting women into science, technology, engineering and mathematics (STEM) occupations, particularly at the leadership and senior management levels, is a key challenge. Organisations such as WISE, WES and POWERful Women are charged with raising the profile of women in STEM sectors and lobbying for greater representation in the STEM workforce.

Research recently delivered by WISE suggests that women make up 24 per cent of the STEM workforce in the UK. In terms of professional engineers, women represent 11 per cent with 27 per cent of the science and engineering technician roles occupied by women. In professional ICT the number has dropped by 1 per cent to 17 per cent and 19 per cent of the ICT technician population is women. In management roles in science, engineering and technology women represent 15 per cent. Looking at the audience at any data centre event and you'll find far fewer women than that.

So, what’s going on?

The reasons for a general lack of women in STEM are complex. Social and organisational barriers have long existed and are difficult to shift. The work of Angela Saini, for example, paints a picture of long held, but erroneous, assumptions about the capabilities of men and women. In her book Inferior4, she recounts a raft of scientific studies that have set up the "nature" over "nurture" argument for women being less capable in the sciences for example.

Take this comment from Charles Darwin who wrote in correspondence that states "I certainly think that women though generally superior to men [in] moral qualities are inferior intellectually... and there seems to me to be great difficulty... in their becoming the intellectual equals of man" (Saini, 2017:18).

Fast forward to more recent times and Saini tells of a raft of research that misinterprets social conditioning for "natural" ability in terms of the capacities of men and women. Essentially, we've been conditioned to believe that men are better at sciences and women are better at humanities. While this is still a strongly held belief, neuroscience is now debunking the myth. Social and workplace attitudes are a long way from being brought up to date, however. Even our schools still actively, if implicitly, push girls into social sciences, creative and humanities subjects rather than routinely offering a broader range of science based learning to all students. My own research into women in STEM illustrated a shocking statistic that 89 per cent of respondents experienced the impostor phenomenon; the unfounded feeling of being a fake in their own STEM occupation.

We know that girls are underrepresented in science at senior levels of secondary education, but do better in assessments. Girls represent about 35 per cent of all students enrolled in STEM related studies with some of the lowest representation in ICT and engineering. A disproportionate attrition of women from higher education STEM courses continues into early career and even in later career5. It's not so much a leaky pipeline as a leaky funnel.

Career decision making starts early

We know that when considering occupations, the first decision children unconsciously make in divesting options is made on the basis of gender. If girls see no women around them in engineering, IT or broader STEM occupations they'll consider them "men's" jobs and fail to consider them as suitable.This happens at early infant school age and the tragedy is, it's unlikely that they'll reconsider those options later in life. The data centre sector then, is well and truly off the agenda.

The dearth of visible women in the sector is perpetuating a lack of awareness and failing to paint the sector as an option for girls who will go on to consider other options more consistent with the gender roles they are aware of from their parental, social and educational contexts.

So, the upshot of all this is that there are fewer women to choose from, but suggesting that there are no women to hire is an easy out. Employers that want to find women will. And here's how...

Get critical

Organisations that actively examine their processes and procedures for implicit bias are likely to be more successful at attracting women. This includes recruitment and selection, reward and recognition and succession planning for example. It's known that a broader pool of talent can be tapped into by changing language, broadening out the "wish list" often used by hiring managers and creating more inclusive role descriptions.

This is not just to attract women.

If a critical view is taken in an attempt to rebuild traditionally oriented workplace structures to reflect a more diverse workforce, then it naturally expands the talent pool to those from different socio-economic and social backgrounds as well as women. This is far from being a process of "feminising" structures and processes, but of "humanising" them. It is not a zero sum proposition where the focus is on women at the expense of men, but a recognition that the workplace and demands of workers has changed irreversibly, but our workplace structures have not. The second machine age workplace is still running with industrial age structures. It's like trying to run a data centre with ENIAC.

The traditional mechanisms we use to manage organisations have been built for men by men6. This is not a criticism but a historical fact. It's known too, that hiring managers often hire in their own image. Challenge that and diversity is more likely. We also know that culturally embedded practices such as networking within a narrow social circle (read the traditional "boy's club" moniker here), excludes women and men who lie outside of that circle. Expand that out and the talent pool will open up.

But I’ve done some unconscious bias training, won’t that do?

In three words? No, no and a resounding no. Unconscious bias training is a good start to raise awareness but it won't change the culture. It may stop people from telling "blonde" jokes but it's known that in isolation it does not work7. Indeed, research suggests that it's more likely to infuriate men and fail women. Recall the Google diversity email manifesto scandal8 and the backlash against the "me too" movement.

Organisations that actively examine the "back of house" processes, policies, values and procedures in alignment with unconscious bias training over the long term are more likely to reap rewards.

Start now

Theres a broad ranging sector imperative at risk here if organisations continue to drag their feet on critically reviewing workplace practices. With a sectoral disadvantage associated with a lack of visibility in comparison to other sectors such as manufacturing and construction, the war for talent has never been so intense. It makes sense for data centre organisations to get wise about the reconstruction of outmoded structures and attitudes that are not serving the talent attraction agenda. The skills and labour shortage will not be resolved unless the recruitment, retention and advancement of women is actively advanced and perpetuated.

To inform a better approach to inclusion, as part of my role within CNet Training, I'm revising my global work on the experience of women in STEM to focus on women in data centres. This empirical research aims to better understand the structural barriers to entry, retention and advancement of women in the sector. Work such as this will provide a clearly articulated suite of evidence that the sector can use to formulate an evidence-based response to an all-pervasive and entrenched issue of lack of workforce diversity.

Dr Terri Simpkin

Higher and Further Education Principal

CNet Training

1 https://en.unesco.org/sites/default/files/usr15_is_the_gender_gap_narrowing_in_science_and_engineering.pdf

https://www.wto.org/english/tratop_e/womenandtrade_e/gendersdg_e.htm

http://www.worldbank.org/en/topic/gender

2 https://www.weforum.org/agenda/2017/11/women-leaders-key-to-workplace-equality

3 https://www.mckinsey.com/business-functions/organization/our-insights/delivering-through-diversity

4 Saini, A. 2017. Inferior. How Science Got Women Wrong... and the New Research That’s Rewriting the Story.London. 4th Estate.

5 http://unesdoc.unesco.org/images/0025/002534/253479e.pdf

6 https://theconversation.com/what-the-google-gender-manifesto-really-says-about-silicon-valley-82236

7 http://time.com/5118035/diversity-training-infuriates-men-fails-women/

By Dr Umaima Haider, Research Fellow at the University of East London

Over 800 stakeholders were trained globally through various events organised by the EURECA project

The EURECA Project

EURECA was a three-year (March 2015 – February 2018) project funded by the European Commission’s Horizon 2020 Research and Innovation programme. It was aimed at providing tailored solutions to help identify the cost saving opportunities represented by innovation procurement choices, as related to the environmental impact of these choices, for public sector data centres. One of the main objectives of the EURECA project was to:

"Provide tailored procurement practical training and awareness programmes covering environmental, legal, social, economic and technological aspect".

The main purpose of this objective was to bridge the knowledge gap between non-technical officers and technical stakeholders to improve solution viability and engagement in environmentally sound products and services.

For further information, please visit the EURECA project website at www.dceureca.eu

The EURECA Training Kit

During the project’s lifetime, a training curriculum (called the "EURECA Training Kit") was designed to help stakeholders identify opportunities for energy savings in data centres.

In relation to the EURECA objectives, the training kit has 9 modules divided into two categories, "Procurement" and "Technical". The procurement courses (6 courses) cover various different aspects of innovation procurement such as policy, strategy, tendering and business case development. The technical courses (3 courses) cover best practices, standards and frameworks related to data centre energy efficiency.

All the EURECA training modules are available electronically and accessible freely, and the majority of the modules come with video recordings. The modules can be accessed via this link: https://www.dceureca.eu/?page_id=2064

Training Approach

The EURECA training modules enable stakeholders to play a crucial role in delivering an effective efficiency and cost control strategy for their data centres. 815 stakeholders were trained across the world through various events organised by the EURECA project. More specifically, 10 EURECA face-to-face training events were held across Europe (UK, Netherland, Dublin and Paris, to name just a few). Two webinars and two bespoke training events were delivered at stakeholder premises. One of the webinars was dedicated to European cities while the two bespoke training sessions were tailored for senior stakeholders from the Irish and UK Governments.

Training Outcome

Overall, 46% of the EURECA training attendees came from the public sector, as compared to 54% from the private sector. 71% of the participants attended face-to-face events, whereas 29% joined the webinars. Finally, the vast majority of attendees (80%) came from EU member states. This is illustrated in Figure 1 below. There was a diverse set of stakeholders attending the EURECA training events. The following groups were represented: C level IT executives, ICT/Data Centre/Energy managers, policy makers at EU level, procurement experts and academic researchers.

Figure 1: EURECA Trainee Analysis

Finally, these numbers (and consequently, the analysis) do not include stakeholders whose capacities were increased via other modes of knowledge sharing. Similarly, these figures do not include the number of people who attended/downloaded the online training curriculum available on the EURECA website. This number is regularly increasing; However, given that we cannot be sure whether the people who download the files actually go through them, or how many unique users downloaded the files, we decided to leave this number out of our analysis. Yet the contribution of this online platform towards building the capacities and skills of stakeholders should not be underestimated.

Going Forward ...

Based on the lessons learned from the EURECA pilots and from other project activities, the bespoke training kit designed under EURECA can be considered a world first. It captures the knowledge hotspots identified as crucial for successfully implementing energy efficiency projects in the data centre industry (targeting the European public sector). Thus, it has helped to build a substantial pool of capacities and skills in the field of data centre energy efficiency.

Going forward, we anticipate the online platform playing a key role in the continued dissemination of knowledge. The training programme will be maintained by the EURECA partners to ensure its currency and alignment with the various developments in standardisation, legislation, and best practices. Additionally, EURECA members continue to deliver face-to-face training and awareness sessions, such as the ones done recently at Data Centre World London in March 2018, Uptime Institute Network EMEA Conference, Vienna, in April 2018 and Datacentres North, Manchester, in May 2018.

AUTHOR: Dr Umaima Haider

JOB TITLE: Research Fellow

ORGANISATION: University of East London

BIO: Dr Umaima Haider is a Research Fellow at the University of East London within the EC Lab (https://www.eclab.uel.ac.uk). She held a visiting research scholar position at Clemson University, South Carolina, USA. Her main research interest is in the design and implementation of energy efficient systems, especially in a data centre environment.

CONTACT: uhaider@clemson.edu

By Julie Chenadec, Project Manager, Green IT Amsterdam

The month of May marks the 4 years anniversary of my arrival in the Netherlands when I started my first internship at Green IT Amsterdam.

Upon my arrival, I discovered a, new for me, booming industry: Information and Technology. Digging into that world, I discovered new concepts and technologies such as distributed data centres, smart grids, cloud technology, server virtualisation and so on and so forth. Just like every layman, I knew how to use the Internet on my phone and laptop, but I knew nothing about what is behind the curtain. As my work evolved, I also came across another related industry challenge: the sense of urgency. Data grows so fast nowadays that sustainability is a necessity. Not only to scale-up but also as an enabler towards sustained IT critical infrastructures.

Take for example IT on its own: as data centres are the backbone of our digital economy, our use of digital services generates huge amount of data traffic every year and its rising every year. In Europe, data centres energy consumption is expected to reach 104 TWh by 2020, despite the use of more virtualisation and cloudification, (or even perhaps because of it?). Therefore, we need to think how to turn data centres into flexible multi-energy hubs, which can sustain investments in renewable energy sources and energy efficiency, which is the primary goal of the CATALYST project.

"Data centres can and should offer energy flexibility services to their smart grid and district heating networks", CATALYST project.

As a young professional woman at age 23, showing up in a world traditionally dominated by men was quite a stepping stone for me. Nevertheless, I’ve never doubted my choice to pursue a career on this sector. On the contrary it gave me the opportunity to bring in a fresh, and perhaps in some cases much needed, viewpoint.

4 years later, I can proudly say that "I work in green IT"! Although most of the people outside of this sector barely know it, my colleagues and I have a lot of ambition and vision because we all think that a need for cooperation and knowledge building is by far the most important element to support innovation and breakthrough technologies.

When it comes to Green IT, all stakeholders need to be innovative, technology-savvy, coming up with novel business model, and always find a revolutionary solution (take Asperitas who are in a quest to disrupt the data centre industry with their cleantech solution). IT is a sector that can adapt really fast and actually has the power to change and disrupt itself at its core.

In 4 years, the knowledge and responsibility I gathered is impressive. From analysing the transfer of IT good practices from different European regions, to now being the project leader of a European project, EV ENERGY where we aim to combine clean energy & electric mobility in cities, providing analysis to a number of regional and EU wide projects and launching with 3 other organisations a European multi-country alliance for Green IT (GRIG), I have accumulated both skills and experience.

One that I'm particularly proud of is sustainable leadership and awareness raising. I am confident enough to say I'm a positive and optimistic person in general, while always keen on sharing my vision, explaining the reasons of necessary changes in our society: I solely believe sustainable leadership is the key. It is not enough that we are working towards more energy efficiency with less greenhouse gases emissions, if we cannot reach the society, share our thoughts and take action, we won't succeed in tackling climate change. Ask yourself: "Is my activity providing a positive impact for the society?"

"No smart future without green clouds" Anwar Osseyran

Nevertheless, I envision myself only working in IT. Why? The impact with IT is tremendous. We have the opportunity to work on many different aspects of IT: electric vehicles, smart grids, data centres, energy flexibility, policy-making, etc. as well as working with a lot of different stakeholders. Something that I learned over the past 4 years, is that we need to engage with everyone. Everyone is of importance when we green the IT sector, collaboration is key.

For all these reasons, I particularly want to thank Jaak Vlasveld, Esther van Bergen, Vasiliki Georgiadou and Maikel Bouricious who gave me the opportunity in the first place and trusted me along this wonderful journey. But also to every single person I came across and still meet today, thank you too!

Green IT Amsterdam is a non-profit organization that supports the wider Amsterdam region in realizing its energy transition goals. Our mission is to scout, test and showcase innovative IT solutions for increasing energy efficiency and decreasing carbon emissions. We share knowledge, expertise and ambitions, for achieving these sustainability targets with our public and private Green IT Leaders. Follow us on twitter @GreenItAms; visit our website http://www.greenitamsterdam.nl/.

Article provided by STEM Learning

UK STEM businesses have warned of a growing skills shortage as they struggle to recruit qualified workers in science, technology, engineering and mathematical fields.

According to new findings from STEM Learning, the largest provider of STEM education and careers support in the UK, the shortage is costing businesses £1.5 billion a year in recruitment, temporary staffing, inflated salaries and additional training costs.

The STEM Skills Indicator1 reveals that nine in 10 (89%) STEM businesses have found it difficult to hire staff with the required skills in the last 12 months, leading to a current shortfall of over 173,000 workers - an average of 10 unfilled roles per business.

The findings come as the UK is entering the "Fourth Industrial Revolution"2, a time of significant technological, economic and societal change, along with a Brexit outcome that remains uncertain, and severe funding challenges in schools.

As a result, the recruitment process is taking much longer for the majority (89%) of STEM employers – an average of 31 days more than expected – forcing many to turn to expensive temporary staffing solutions (74%), hire at lower levels (65%) and train staff in-house (83%) or inflate salaries (76%) by as much as £8,500 in larger companies to attract the right talent.

Almost half (48%) of STEM businesses are looking abroad to find the right skills, while seven in 10 (70%) are hiring candidates without a STEM background or simply leaving positions empty (60%).

Businesses are concerned about the outlook too. Over half (56%) expect the shortage to worsen over the next 10 years, with expansion in the sector set to nearly double the number of new STEM roles required.

Employers are concerned that the UK could fall behind other countries in terms of technological advancement (54%) or lose its research and development credentials (43%), while others warn a lack of talent could put off foreign investment in the sector (50%).

Building the future pipeline of skills will therefore be key to maintaining the UK's standing in the STEM sector. Low awareness of the jobs available (31%) and a lack of meaningful work experience opportunities (35%) are identified by businesses facing recruitment challenges as key barriers to young people considering STEM careers.

In a rapidly changing technological environment, the UK government is planning to invest over £400 million in mathematics, digital and technical education3, but businesses will also need to start investing in a sustainable pipeline of talent now.

Nearly one in five STEM businesses (18%) that are finding it difficult to recruit admit that employers need to do more to attract talent to the sector. STEM Learning is therefore calling for businesses to join its efforts to inspire young people in local schools and colleges and help grow the future workforce.

Yvonne Baker, Chief Executive, STEM Learning said: ͞We are heading towards a perfect storm for STEM businesses in the UK - a very real skills crisis at a time of uncertainty for the economy and as schools are facing unprecedented challenges.

"The shortage is a problem for employers, society and the economy, and in this age of technological advancement the UK has to keep apace. We need to be in a better position to home grow our talent but it cannot be left to government or schools alone – businesses have a crucial role to play too.

"STEM Learning bridges the gap between businesses and schools. By working with us to invest in teachers in local schools and colleges, employers can help deliver a world-leading STEM education, inspiring young people and building the pipeline of talent in their area, making it a win-win for everyone."

The DCA are in discussion with STEM Learning regarding the introduction of the STEM Ambassadors Programme from the Data Centre Industry.

For further information contact info@dca-global.org

It was made clear in the presentation from Gartner at April’s European Managed Services Summit that customers were finding it hard to differentiate individual managed services providers and their services. Research director Mark Paine from Gartner told a packed event that while MSP Services were growing at over 35%, it might not last, and certainly the pace of competition was not going to let up. Even so, at the latest estimate, the global managed services market is expected to grow from €150bn last year to €250bn by 2022.

“The key to a successful and differentiated business is to give customers what they want by helping the customer buy,” he told the Amsterdam audience.

In a business where 65% of the buying process is over before the buyer contacts the seller, because of all the information gathered beforehand, the MSP is even less in charge of what happens than in the old VAR model. Without differentiation, the customer is more likely to buy from their usual sources, or at the least, ignore an MSP who does not offer anything different, he says.

But the rewards are waiting for those MSPs who can prove what problems they solve and what makes them special, particularly when the MSP can show how the deal will work and how customers get value, he says. Research shows that product success and aggressive selling carry no weight with the customer, compared to laying out a vision for the customer’s own growth and success.

So a major part of the message in the Amsterdam event, and in the forthcoming London summit on September 29th will be on marketing issues with the aim of getting MSPs up to speed on the current best techniques in building sales pipelines, leveraging available marketing resources, and best practice using social media.

Among the issues challenging the MSP marketing teams is that fact that buying teams are large and extended, containing a variety of influencers, with decision-making spread throughout the organisation. Getting a consistent message through in this environment is a problem which MSPs themselves will be discussing at the London event, which will have speakers who have successfully promoted their message at the highest levels.

One secret about which more will be revealed is that MSPs need to align their businesses with their customers, so that a win for either is a win for the other. Being able to demonstrate this is a good deal-closer. Being able to lay out a convincing vision to improve the customer’s business and to offer this as a unique and critical perspective will win business, says Gartner.

Now in its eighth year, the UK Managed Services & Hosting Summit event will bring leading hardware and software vendors, hosting providers, telecommunications companies, mobile operators and web services providers involved in managed services and hosting together with Managed Service Providers (MSPs) and resellers, integrators and service providers migrating to, or developing their own managed services portfolio and sales of hosted solutions.

It is a management-level event designed to help channel organisations identify opportunities arising from the increasing demand for managed and hosted services and to develop and strengthen partnerships aimed at supporting sales. Building on the success of previous managed services and hosting events, the summit will feature a high-level conference programme exploring the impact of new business models and the changing role of information technology within modern businesses.

The UK Managed Services and Hosting Summit 2018 on 19 September 2018 will offer a unique snapshot of this fast-moving industry. MSPs, resellers and integrators wishing to attend the convention and vendors, distributors or service providers interested in sponsorship opportunities can find further information at: www.mshsummit.com