The current excitement over digital transformation shows no signs of slowing down. Just when it seems everyone is comfortable with, and actually deploying, Cloud and managed services, along come AI, ML and RPA to bolster the rising profile of IoT. Rest assured, VR and AR are waiting just around the next bend in the technology road.

With the supply chain stacked with a whole portfolio of clever, elegant, cost-effective, useful technology solutions, and end users recognising that they have to embrace the future if they are not to go the way of the various high-profile businesses that have shut their doors in the past year or so, what could possibly go wrong with the digital transformation story?

Skills, or rather a lack of them, is the answer. Vendors and the Channel are struggling to identify individuals who combine the problem-solving ability and technical expertise needed to become the trusted advisors that customers need; and most industry sectors, with the possible exception of fintech, have a distinct lack of knowledge when it comes to specifying and deploying digital technology.

The starting point for all this is that end users do not really want to have conversations about IT, but about business solutions: ‘This is what I want to do; how can you help me achieve it?’

Traditionally, the supply chain has approached sales by trying to convince customers that the newest server or storage box is twice as fast as the previous one at half the price, so why wouldn’t you buy it? And, once upon a time, customers would have. Now, however, customers respond to this ‘compelling’ sales story with a ‘So what?’ What they want is someone who can help them improve the speed and reliability of their ecommerce website, boost the performance of their mobile devices and speed up the resolution of customer complaints: solutions, not technology for technology’s sake!

The sales skills required to do this are thin on the ground right now. So there is a very real danger that the headlong rush for digitalisation will slow down, as there aren’t enough people with the right combination of skills and knowledge to specify and/or supply business solutions.

It will be fascinating to see whether this gap can be addressed successfully and quickly so that digitalisation’s momentum is not checked. But it’s not immediately obvious how and when, with rather too many current Channel and IT specialists still trying to hold on to what they’ve always done and reluctant to embrace the change that their bosses are demanding.

Worldwide spending on cognitive and artificial intelligence (AI) systems will reach $19.1 billion in 2018, an increase of 54.2% over the amount spent in 2017. With industries investing aggressively in projects that utilize cognitive/AI software capabilities, the International Data Corporation (IDC) Worldwide Semiannual Cognitive Artificial Intelligence Systems Spending Guide forecasts cognitive and AI spending will grow to $52.2 billion in 2021 and achieve a compound annual growth rate (CAGR) of 46.2% over the 2016-2021 forecast period.
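The growth figures above can be related using the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The short sketch below is a back-of-envelope check, not IDC's methodology: it works backwards from the quoted rates to the 2016 and 2017 totals they imply. Those derived totals are our own arithmetic, not published IDC figures.

```python
# Back-of-envelope checks on the IDC cognitive/AI spending figures quoted above.
# Inputs come from the text; every derived value is our own arithmetic.

def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end / start) ** (1 / years) - 1

def implied_start(end: float, rate: float, years: int) -> float:
    """Starting value implied by an end value and a constant annual growth rate."""
    return end / (1 + rate) ** years

spend_2018 = 19.1        # $bn, IDC forecast for 2018
growth_2018 = 0.542      # 54.2% increase over 2017
spend_2021 = 52.2        # $bn, IDC forecast for 2021
cagr_2016_2021 = 0.462   # 46.2% CAGR over 2016-2021

spend_2017 = implied_start(spend_2018, growth_2018, 1)      # one year back from 2018
spend_2016 = implied_start(spend_2021, cagr_2016_2021, 5)   # five years back from 2021
cagr_2018_2021 = cagr(spend_2018, spend_2021, 3)            # growth between the two forecasts

print(f"Implied 2017 spend: ${spend_2017:.1f}bn")
print(f"Implied 2016 spend: ${spend_2016:.1f}bn")
print(f"Implied 2018-2021 CAGR: {cagr_2018_2021:.1%}")
```

The implied 2018-2021 rate (just under 40%) is lower than the 46.2% five-year CAGR, which suggests the forecast has growth slowing in the later years after very steep increases early in the period.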

"Interest and awareness of AI is at a fever pitch. Every industry and every organization should be evaluating AI to see how it will affect their business processes and go-to-market efficiencies," said David Schubmehl, research director, Cognitive/Artificial Intelligence Systems at IDC. "IDC has estimated that by 2019, 40% of digital transformation initiatives will use AI services and by 2021, 75% of enterprise applications will use AI. From predictions, recommendations, and advice to automated customer service agents and intelligent process automation, AI is changing the face of how we interact with computer systems."

Retail will overtake banking in 2018 to become the industry leader in terms of cognitive/AI spending. Retail firms will invest $3.4 billion this year on a range of AI use cases, including automated customer service agents, expert shopping advisors and product recommendations, and merchandising for omnichannel operations. Much of the $3.3 billion spent by the banking industry will go toward automated threat intelligence and prevention systems, fraud analysis and investigation, and program advisors and recommendation systems. Discrete manufacturing will be the third largest industry for AI spending with $2.0 billion going toward a range of use cases including automated preventative maintenance and quality management investigation and recommendation systems. The fourth largest industry, healthcare providers, will allocate most of its $1.7 billion investment to diagnosis and treatment systems.

"Enterprise digital transformation strategies are increasingly including multiple cognitive/artificial intelligence use cases," said Marianne Daquila, research manager, Customer Insights & Analysis at IDC. "Business transformation is occurring across all industries as successful companies embrace the array and potential impact of these solutions. Automated customer service agents, increased public safety, preventative maintenance, reduction of fraud, and improved healthcare diagnosis are just the tip of the iceberg driving spend today. With double-digit year-over-year spending growth forecast, IDC expects to see an increase in general use cases, as well as a refinement of industry-specific use cases."

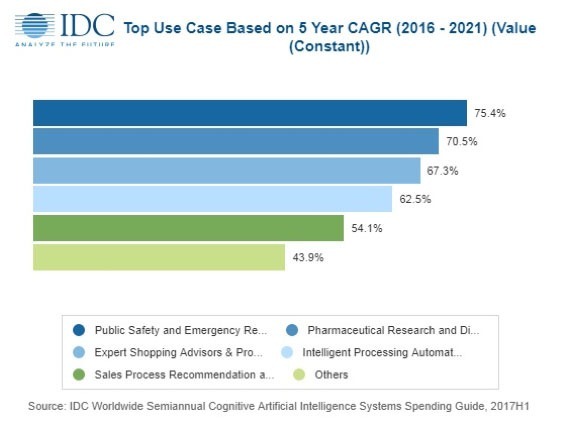

The cognitive/AI use cases that will see the largest spending totals in 2018 are: automated customer service agents ($2.4 billion) with significant investments from the retail and telecommunications industries; automated threat intelligence and prevention systems ($1.5 billion) with the banking, utilities, and telecommunications industries as the leading industries; and sales process recommendation and automation ($1.45 billion) spending led by the retail and media industries. Three other use cases will be close behind in terms of global spending in 2018: automated preventive maintenance; diagnosis and treatment systems; and fraud analysis and investigation. The use cases that will see the fastest spending growth over the 2016-2021 forecast period are: public safety and emergency response (75.4% CAGR), pharmaceutical research and discovery (70.5% CAGR), and expert shopping advisors and product recommendations (67.3% CAGR).

A little more than half of all cognitive/AI spending throughout the forecast will go toward cognitive software. The largest software category is cognitive applications, which includes cognitively enabled process and industry applications that automatically learn, discover, and make recommendations or predictions. The other software category is cognitive platforms, which facilitate the development of intelligent, advisory, and cognitively enabled applications. Industries will also invest in IT services to help with the development and implementation of their cognitive/AI systems and business services such as consulting and horizontal business process outsourcing related to these systems. The smallest category of technology spending will be the hardware (servers and storage) needed to support the systems.

On a geographic basis, the United States will deliver more than three quarters of all spending on cognitive/AI systems in 2018, led by the retail and banking industries. Western Europe will be the second largest region in 2018, led by retail, discrete manufacturing and banking. The strongest spending growth over the five-year forecast will be in Japan (73.5% CAGR) and Asia/Pacific (excluding Japan and China) (72.9% CAGR). China will also experience strong spending growth throughout the forecast (68.2% CAGR).

"The latest iteration of the Cognitive/AI Spending Guide is a roadmap for the journey of organizational digital transformation through the use of AI, deep learning, and machine learning," added Schubmehl. "Organizations should be evaluating and starting to use AI throughout their systems and the Cognitive/AI Spending Guide is an indispensable resource in that effort."

Forty-eight percent of organizations that are implementing the Internet of Things (IoT) said they are already using, or plan to use, digital twins in 2018, according to a recent IoT implementation survey by Gartner, Inc. In addition, the number of participating organizations (202 respondents across China, U.S., Germany and Japan) using digital twins will triple by 2022.

Gartner defines a digital twin as a virtual counterpart of a real object, where that object can be a product, structure, facility or system. Gartner predicts that, by 2020, at least 50 percent of manufacturers with annual revenues in excess of $5 billion will have at least one digital twin initiative launched for either products or assets.

"There is an increasing interest and investment in digital twins and their promise is certainly compelling, but creating and maintaining digital twins is not for the faint hearted," said Alexander Hoeppe, research director at Gartner. "However, by structuring and executing digital twin initiatives appropriately, CIOs can address the key challenges they pose."

Gartner has identified four best practices to tackle some of the top challenges posed by digital twins:

1- Involve the entire product value chain

Digital twins can help alleviate some key supply chain challenges. Digital twin investments should be driven by the value chain, enabling product and asset stakeholders to govern and manage products, or assets such as industrial machinery and facilities, across their supply chain in a much more structured and holistic way. Challenges that supply chain officers face in improving their performance include, for example, a lack of cross-functional collaboration or a lack of visibility across the supply chain.

The value of digital twins can be an extensible product or asset structure that enables addition and modification of multiple models that can be connected for cross-functional collaboration. It can also be a common reference with comprehensive content for all stakeholders to access and understand the current status of the physical counterpart. When engaging the supply chain in digital twin initiatives, CIOs should incorporate access control based on the sensitivity of the content and the role of the supplier.

2- Establish well documented practices for constructing and modifying the models

Best-in-class modeling practices increase transparency on often complex digital twin designs and make it easier for multiple digital twin users to collaboratively construct and modify digital twins. They attempt to minimize the amount of effort to enable changes within the digital twin or between the digital twin and external, contextually important content. When modeling practices are standardized, one user is more likely to understand how another user created a digital twin. This enables the downstream user to modify the digital twin in less time and with less need to destroy and recreate portions of the digital twin.

3- Include data from multiple sources

It is difficult to anticipate the nature of the simulation models, data types and sensor data analysis that might be necessary to support the design, introduction and service life of the digital twins' physical counterparts. While 3D geometry is sufficient to communicate the digital twin visually and to show how parts fit together, the geometric model may not be able to simulate the behavior of the physical counterpart in use or operation. Nor can the geometric model analyze data unless it is enriched with additional information. CIOs can expand the utility of digital twins by recommending that IT architects and digital twin owners define an architecture that allows access to, and use of, data from many different sources.

4- Ensure long access life cycles

Digital twins with long life cycles include buildings, aircraft, ships, factories, trucks and industrial machinery. The life cycles of these digital twins extend well beyond the life spans of the proprietary design software formats most likely used to create them, and of the means used to store their data.

"This means that digital twins created in proprietary design software formats have a high risk of being unreadable throughout their service life," said Mr. Hoeppe.

Additionally, the digital twin evolves and accumulates growing historical data, such as geometric models, simulation data and IoT data. As a result, the digital twin owner risks becoming increasingly locked into the vendor with the authoring tools. "CIOs can guard against this if they increase the viable life of digital twins by setting a goal for IT architects and digital twin owners to plan for the long-term evolution of data formats and data storage," Mr. Hoeppe added.

Study shows firms must first master interconnected disciplines of customer experience, operational excellence and business innovation to achieve stronger digital transformation maturity.

Virtusa has published the findings of The Digital Transformation Race Has Begun, a September 2017 study commissioned by Virtusa and conducted by Forrester Consulting, which reflects the digital maturity of firms worldwide. The study evaluates the state of digital transformation across six key industries: retail, banking, healthcare, insurance, telco, and media.

Internet of Things (IoT)-based attacks are already a reality. A recent CEB, now Gartner, survey found that nearly 20 percent of organizations observed at least one IoT-based attack in the past three years. Gartner, Inc. forecasts that worldwide spending on IoT security to protect against those threats will reach $1.5 billion in 2018, a 28 percent increase from 2017 spending of $1.2 billion.

"In IoT initiatives, organizations often don't have control over the source and nature of the software and hardware being utilized by smart connected devices," said Ruggero Contu, research director at Gartner. "We expect to see demand for tools and services aimed at improving discovery and asset management, software and hardware security assessment, and penetration testing. In addition, organizations will look to increase their understanding of the implications of externalizing network connectivity. These factors will be the main drivers of spending growth for the forecast period with spending on IoT security expected to reach $3.1 billion in 2021 (see Table 1)."

Table 1

Worldwide IoT Security Spending Forecast (Millions of Dollars)

| Segment | 2016 | 2017 | 2018 | 2019 | 2020 | 2021 |
|---|---|---|---|---|---|---|
| Endpoint Security | 240 | 302 | 373 | 459 | 541 | 631 |
| Gateway Security | 102 | 138 | 186 | 251 | 327 | 415 |
| Professional Services | 570 | 734 | 946 | 1,221 | 1,589 | 2,071 |
| Total | 912 | 1,174 | 1,506 | 1,931 | 2,457 | 3,118 |

Source: Gartner (March 2018)
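The figures in Table 1 can be checked with a few lines of Python. This sketch re-adds the three segments for each year, recomputes the 28 percent 2017-2018 growth quoted in the text, and derives a 2016-2021 CAGR; that CAGR is our own calculation from the table, not a figure Gartner states.

```python
# Sanity checks on Table 1 (Gartner, March 2018); values in $ millions.
YEARS = [2016, 2017, 2018, 2019, 2020, 2021]
SEGMENTS = {
    "Endpoint Security":     [240, 302, 373, 459, 541, 631],
    "Gateway Security":      [102, 138, 186, 251, 327, 415],
    "Professional Services": [570, 734, 946, 1221, 1589, 2071],
}
REPORTED_TOTAL = [912, 1174, 1506, 1931, 2457, 3118]

# Sum the segments year by year. Each published cell is rounded, so the
# computed totals can differ from the reported Total row by about a million.
computed_total = [sum(col) for col in zip(*SEGMENTS.values())]
for year, calc, rep in zip(YEARS, computed_total, REPORTED_TOTAL):
    print(f"{year}: segments sum to {calc}, table reports {rep} (diff {rep - calc:+d})")

# Year-over-year growth 2017 -> 2018 (the text quotes 28 percent).
yoy_2018 = REPORTED_TOTAL[2] / REPORTED_TOTAL[1] - 1
# Compound annual growth rate across the five yearly steps from 2016 to 2021.
cagr = (REPORTED_TOTAL[-1] / REPORTED_TOTAL[0]) ** (1 / 5) - 1
print(f"2017->2018 growth: {yoy_2018:.1%}; 2016-2021 CAGR: {cagr:.1%}")
```

The 2018 and 2021 totals come out one million dollars above the segment sums, which is consistent with each cell having been rounded independently.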

Despite the steady year-over-year growth in worldwide spending, Gartner predicts that through 2020, the biggest inhibitor to growth for IoT security will come from a lack of prioritization and implementation of security best practices and tools in IoT initiative planning. This will hamper the potential spend on IoT security by 80 percent.

"Although IoT security is consistently referred to as a primary concern, most IoT security implementations have been planned, deployed and operated at the business-unit level, in cooperation with some IT departments to ensure the IT portions affected by the devices are sufficiently addressed," explained Mr. Contu. "However, coordination via common architecture or a consistent security strategy is all but absent, and vendor product and service selection remains largely ad hoc, based upon the device provider's alliances with partners or the core system that the devices are enhancing or replacing."

While basic security patterns have been revealed in many vertical projects, they have not yet been codified into policy or design templates to allow for consistent reuse. As a result, technical standards for specific IoT security components are only now starting to be addressed across established IT security standards bodies, consortium organizations and vendor alliances.

The absence of "security by design" comes from a lack of specific and stringent regulations. Going forward, Gartner expects this trend to change, especially in heavily regulated industries such as healthcare and automotive.

By 2021, Gartner predicts that regulatory compliance will become the prime influencer for IoT security uptake. Industries having to comply with regulations and guidelines aimed at improving critical infrastructure protection (CIP) are being compelled to increase their focus on security as a result of IoT permeating the industrial world.

"Interest is growing in improving automation in operational processes through the deployment of intelligent connected devices, such as sensors, robots and remote connectivity, often through cloud-based services," said Mr. Contu. "This innovation, often described as Industrial Internet of Things (IIoT) or Industry 4.0, is already impacting security in industry sectors deploying operational technology (OT), such as energy, oil and gas, transportation, and manufacturing."

Worldwide shipments for augmented reality (AR) and virtual reality (VR) headsets will grow to 68.9 million units in 2022 with a five-year compound annual growth rate (CAGR) of 52.5%, according to the latest forecast from the International Data Corporation (IDC) Worldwide Quarterly Augmented and Virtual Reality Headset Tracker. Despite the weakness the market experienced in 2017, IDC anticipates a return to growth in 2018 with total combined AR/VR volumes reaching 12.4 million units, marking a year-over-year increase of 48.5% as new vendors, new use cases, and new business models emerge.
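The two growth figures in this paragraph can be cross-checked against each other. The sketch below works back to 2017 shipments in two independent ways. It assumes, since the text does not say, that the five-year CAGR window runs from 2017 to 2022.

```python
# Consistency check on the IDC AR/VR headset forecast quoted above (millions of units).
units_2018 = 12.4   # forecast 2018 shipments
yoy_2018 = 0.485    # 48.5% increase over 2017
units_2022 = 68.9   # forecast 2022 shipments
cagr_5yr = 0.525    # five-year CAGR; ASSUMED here to cover 2017 -> 2022

# Route 1: one year back from the 2018 figure.
base_from_2018 = units_2018 / (1 + yoy_2018)
# Route 2: five years back from the 2022 figure at the stated CAGR.
base_from_2022 = units_2022 / (1 + cagr_5yr) ** 5

print(f"2017 base implied by the 2018 figure: {base_from_2018:.2f}M")
print(f"2017 base implied by the 2022 figure: {base_from_2022:.2f}M")
```

Both routes land at roughly 8.35 million units for 2017, so under that assumption the 48.5% and 52.5% figures are mutually consistent.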

The worldwide AR/VR headset market retreated in 2017 primarily due to a decline in shipments of screenless VR viewers. Previous champions of this form factor stopped bundling these headsets with smartphones and consumers have shown little interest in purchasing such headsets separately. While the screenless VR category is waning, Lenovo's successful fourth quarter launch of the Star Wars: Jedi Challenges (Lenovo Mirage AR headset)—a screenless viewer for AR—showed the form factor may still have legs if paired with the right content. Other new product launches during the quarter included the first Windows Mixed Reality VR tethered headsets with entries from Acer, ASUS, Dell, Fujitsu, HP, Lenovo, and Samsung.

"There has been a maturation of content and delivery as top-tier content providers enter the AR and VR space," said Jitesh Ubrani, senior research analyst for IDC Mobile Device Trackers. "Meanwhile, on the hardware side, numerous vendors are experimenting with new financing options and different revenue models to make the headsets, along with the accompanying hardware and software, more accessible to consumers and enterprises alike."

Looking ahead, IDC also expects the VR headset market to rebound in 2018 as new devices such as Facebook's Oculus Go, HTC's Vive Pro, and Lenovo's Mirage Solo with Daydream ship into the market with new capabilities and new price points. Meanwhile, with the exception of screenless viewers, AR headsets are likely to remain largely commercially focused until later in the forecast due to the technology's high cost and complexity.

"While there's no doubt that VR suffered some setbacks in 2017, companies such as Google and Facebook continue to push hard toward making the technology more consumer friendly," said Tom Mainelli, program vice president, Devices & AR/VR research. "Meanwhile, Lenovo's success with its first consumer-focused AR product shows that consumers are beginning to understand what augmented reality is and the experiences it can provide. This bodes well for the category long term."

Category Highlights

Augmented Reality head-mounted displays will see market-beating growth over the next five years as standalone and tethered devices grow to account for more than 97% of the market by 2022. IDC expects AR screenless viewers, the overall market leader in 2017, to peak in 2019 as standalone and tethered products become more widely available at lower price points. The rise of screenless viewers geared toward consumers tilted shipment volumes away from commercial viewers in 2017 and that's likely to continue in 2018; by 2019 the segment will shift back toward more commercial shipments.

Virtual Reality head-mounted displays will see a shift in product mix. Screenless viewers, once the overall leader, will see share erode quickly over time. Meanwhile, standalone and tethered devices – in the minority in 2017 – will comprise 85.7% of total shipments by 2022. Consumers will account for a majority of headset shipments throughout the forecast, but commercial users will slowly occupy a larger share, growing to nearly equal status in 2022.

AR/VR Headset Market Share by Form Factor, 2018 and 2022

| Technology | Form Factor | 2018* | 2022* |
|---|---|---|---|
| Augmented Reality | Screenless Viewer | 6.7% | 1.1% |
| | Standalone HMD | 2.4% | 19.1% |
| | Tethered HMD | 1.0% | 17.9% |
| Virtual Reality | Screenless Viewer | 34.9% | 8.8% |
| | Standalone HMD | 11.7% | 29.8% |
| | Tethered HMD | 43.3% | 23.3% |
| Total | | 100.0% | 100.0% |

Source: IDC Worldwide Quarterly AR and VR Headset Tracker, March 19, 2018

* Note: Forecast values.
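The form-factor table can be tied back to the category highlights with a little arithmetic. This sketch confirms that each year's column sums to 100% and computes standalone plus tethered devices as a share of each technology's 2022 shipments; the helper function is our own illustration, not an IDC calculation.

```python
# Checks on the IDC form-factor share table (percent of total AR/VR shipments).
SHARES = {
    # (technology, form factor): (2018 share, 2022 share)
    ("AR", "Screenless Viewer"): (6.7, 1.1),
    ("AR", "Standalone HMD"):    (2.4, 19.1),
    ("AR", "Tethered HMD"):      (1.0, 17.9),
    ("VR", "Screenless Viewer"): (34.9, 8.8),
    ("VR", "Standalone HMD"):    (11.7, 29.8),
    ("VR", "Tethered HMD"):      (43.3, 23.3),
}

def share_of_technology(tech: str, year_idx: int, exclude: str = "Screenless Viewer") -> float:
    """Fraction of one technology's shipments that are NOT the excluded form factor."""
    rows = {ff: v[year_idx] for (t, ff), v in SHARES.items() if t == tech}
    total = sum(rows.values())
    return (total - rows[exclude]) / total

# Each year's column should add up to 100%.
for idx, year in enumerate((2018, 2022)):
    print(year, "column total:", round(sum(v[idx] for v in SHARES.values()), 1))

# Standalone + tethered as a share of each technology's 2022 shipments.
print(f"AR 2022: {share_of_technology('AR', 1):.1%}")
print(f"VR 2022: {share_of_technology('VR', 1):.1%}")
```

The AR result (97.1%) matches "more than 97%" in the highlights. The VR result comes out at 85.8% rather than the quoted 85.7%, a gap most plausibly explained by IDC working from unrounded shipment data.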

Just 5% of board members in non-tech organisations have digital competencies.

Global pressures of digital disruption are failing to impact businesses, with only five per cent of board members in non-tech organisations having digital competencies. What’s more, despite the central role digital transformation plays in building a more competitive and efficient business, non-tech organisations have made little or no effort to improve their digital capabilities in the last two years. This is according to a new landmark study from global executive search firm Amrop.

The report, “Digitisation on Boards: Are Boards Ready for Digital Disruption?”, examines how listed organisations are addressing digitisation. Worryingly, it revealed that the digital picture remains fragmented, and multiple questions still surround its role.

Amrop’s Digitisation report found that, since the previous study in 2015, most non-tech listed organisations have made little or no effort to improve digital competencies on their boards. The five per cent figure remains virtually unchanged since the study’s first edition, despite the widespread view that digital transformation is under way in organisations across the globe.

The study, which examined the profiles of more than 3,000 board members in listed organisations across 14 countries, did find some places where digital representation on boards had improved. These included the UK, where non-tech companies raised their digital competencies from three to eight per cent since 2015. Finland is leading the way and is in growth mode, having tripled its representation between 2015 and 2016. In the Netherlands, however, digital competency has declined, with representation dropping from seven to five per cent in the last two years.

Moreover, the report found that traditional committees still dominate. Just nine of the 300 boards analysed have an official technology committee, meaning only three per cent of organisations have a committee dedicated to technical strategy on their business agenda.

The report analysed the digital competencies on boards for a number of sectors. Unsurprisingly, it found that representation for tech companies far outstrips the non-tech equivalents. Compared to the five per cent representation in non-tech companies, the study found that 43 per cent of board members in technology companies have digital competencies.

What’s more, the gap between tech and non-tech is widening — in 2015 the previous study found board-level digital competencies were seven times higher in the tech industry than in others. In this study, the representation now sits at nine times higher.

One explanation for the sluggish movement is the length of time needed to nominate and integrate board members. The wheels of board governance turn slowly and in some sectors, regulation clogs them even further.

As Mikael Norr, Leader of Amrop’s Global Technology & Media Practice, explains, “the core answer must lie in the difficulty of positioning the right talent in the top layer of organisations: people who can help fellow board members develop a robust strategy based on a strong business case and a clear view of the purpose of the organisation, its culture, its structure, and its markets.

“Once this is done, change must be stimulated in the right place, at the right pace, with strong management of resources and risk. Engaging in education programmes for boards, before setting out, can help prepare the road.”

Whilst examining the profiles of 3,342 board members, the study revealed a significant correlation between boards with tech profiles and a higher degree of female representation. According to the findings, women hold 35% of all digital/technology positions in the boards surveyed.

What’s more, the report found there to be a significant increase in female representation in these roles over the past year. For an industry that is often criticised for its lack of diversity, these findings suggest that digital/technology jobs may in fact now be a catalyst for change.

France and Italy lead the field with around 60% of digital/tech profiles represented by women. The UK however is worryingly at the bottom of the list, alongside the USA, Turkey and Spain, having named no new female digital/tech board members among the 133 new non-exec directors appointed in 2016.

Discussing the importance of diversity in these roles, Amrop Partner Bo Ekelund, who authored the diversity section of the report, comments: “Every day, working with clients worldwide, we see successful enterprises adopting the powerful trends of diversity and gender balance. Yet we also know that only 20% of all the people working in the IT sector are women. Those who are in place are severely under-represented in decision-making positions.

“Diversity drives innovation. To ensure new voices are properly heard, boards may need to ask tough questions about how they interact as a group. A structured and anonymous board assessment and rigorous onboarding for new members is strongly advised”.

The shortlist has been confirmed and online voting for the 2018 DCS Awards has just opened. Make sure you don’t miss out on the opportunity to express your opinion on the companies, products and individuals that you believe deserve recognition as being the best in their field.

Following assessment and validation by the panel at Angel Business Communications, the shortlist for the 21 categories in this year’s DCS Awards has been put forward for online voting by the readership of the Digitalisation World portfolio of titles. The Data Centre Solutions (DCS) Awards reward the products, projects and solutions, as well as honour the companies, teams and individuals, operating in the data centre arena.

The winners of this year’s awards will be announced at a gala ceremony taking place at London’s Grange St Paul’s Hotel on 24 May.

All voting takes place online and voting rules apply. Make sure you place your votes before voting closes on 11 May by visiting: http://www.dcsawards.com/voting.php

The full 2018 shortlist is below:

Data Centre Energy Efficiency Project of the Year

New Design/Build Data Centre Project of the Year

Data Centre Consolidation/Upgrade/Refresh Project of the Year

Data Centre Power Product of the Year

Data Centre PDU Product of the Year

Data Centre Cooling Product of the Year

Data Centre Facilities Automation and Management Product of the Year

Data Centre Safety, Security & Fire Suppression Product of the Year

Data Centre Cabling & Connectivity Product of the Year

Data Centre ICT Storage Product of the Year

Data Centre ICT Security Product of the Year

Data Centre ICT Management Product of the Year

Data Centre Cabinets/Racks Product of the Year

Data Centre ICT Networking Product of the Year

Data Centre Hosting/co-location Supplier of the Year

Data Centre Cloud Vendor of the Year

Data Centre Facilities Vendor of the Year

Excellence in Data Centre Services Award

Data Centre Energy Efficiency Initiative of the Year

Data Centre Innovation of the Year

Data Centre Individual of the Year

Voting closes: 11 May

One of the latest buzzwords taking cloud computing by storm is Functions as a Service (FaaS), or serverless computing.

By Chris Gray, Chief Delivery Officer, Amido.

Serverless is a hot topic in the world of software architecture, and it has been gaining attention outside the developer community since AWS pioneered the serverless space with the release of AWS Lambda back in 2014. As one of the fastest-growing cloud service delivery models, FaaS has fundamentally changed not only how technology is purchased but also how it is delivered and operated.

The significance of FaaS for businesses could be huge. Businesses no longer have to pay for servers sitting idle, but only for the computing power their applications actually consume, metered per millisecond, much like the per-second billing approach that containers are moving towards. Instead of hosting an application on a server, a business can run it directly in the cloud, choosing when to invoke it and paying per task, which makes it event driven. According to Gartner, by 2020, event-sourced, real-time situational awareness will be a required characteristic for 80% of digital business solutions, and 80% of new business ecosystems will require support for event processing.
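To make the billing argument concrete, here is a toy cost model comparing an always-on server with pay-per-use functions. Every price in it is a hypothetical placeholder rather than any provider's rate card, and the `billing_increment_ms` parameter is our own device for comparing the per-millisecond metering described above with coarser billing increments.

```python
# Toy cost model: always-on server vs pay-per-use functions.
# All prices are HYPOTHETICAL placeholders, not any cloud provider's real rates.
import math

SERVER_COST_PER_HOUR = 0.10        # hypothetical always-on VM price ($/hour)
FAAS_COST_PER_GB_SECOND = 0.00002  # hypothetical compute price ($ per GB-second)
FAAS_COST_PER_REQUEST = 0.0000002  # hypothetical per-invocation fee ($)

def server_monthly_cost(hours: float = 730) -> float:
    """An always-on server is billed for every hour, busy or idle."""
    return SERVER_COST_PER_HOUR * hours

def faas_monthly_cost(invocations: int, duration_ms: float, memory_gb: float,
                      billing_increment_ms: int = 1) -> float:
    """Functions are billed only while running, rounded up to the billing increment."""
    billed_ms = math.ceil(duration_ms / billing_increment_ms) * billing_increment_ms
    gb_seconds = invocations * (billed_ms / 1000) * memory_gb
    return gb_seconds * FAAS_COST_PER_GB_SECOND + invocations * FAAS_COST_PER_REQUEST

# A spiky workload: 1 million requests a month, 120 ms each, 0.5 GB of memory.
print(f"Always-on server:     ${server_monthly_cost():.2f}")
print(f"FaaS, 1 ms billing:   ${faas_monthly_cost(1_000_000, 120, 0.5):.2f}")
print(f"FaaS, 100 ms billing: ${faas_monthly_cost(1_000_000, 120, 0.5, 100):.2f}")
```

With these placeholder prices the spiky workload costs a small fraction of the idle-heavy server. Raise `invocations` far enough and the comparison flips, which is why the economics depend on the workload rather than on FaaS alone.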

FaaS is a commoditised function of cloud computing and one that takes away wasted compute associated with idle server storage and infrastructure. “Not every business is going to be right for FaaS or serverless, but there is a real appetite in the industry to reduce the cost of adopting the cloud – so this is a great way to help drive these costs down,” adds Richard Slater, Principal Consultant at Amido. “The thing is, if you’re considering this as an option, you are signing up to the ultimate in vendor lock-in as it’s not easy to move these services from one cloud to another (though there are promising frameworks like Serverless JS which claim to resolve this); each cloud provider approaches FaaS in a different way and at present you can’t take a function and move it between vendors. As the appetite for serverless technologies grows, the nature of DevOps will subsequently change; it will still be relevant, although how we go about doing it will be very different. We could say that we are moving into a world of NoOps where applications run themselves in the cloud with no infrastructure and little human involvement. Indeed, humans will need to be there to help automate those services, but won’t be required to do as much coding or testing as they do now. With the advent of AI, the IoT, and other technologies, business events can be detected more quickly and analysed in greater detail; enterprises should embrace ‘event thinking’ and Lambda Architectures as part of a digital landscape.”
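In practice, ‘event thinking’ often means writing small, stateless functions that each react to a single business event. The sketch below follows the `handler(event, context)` shape AWS Lambda uses for Python functions, but the event fields (`type`, `order_id`, `total`), the priority rule and the return shape are all hypothetical illustrations. Because it is just a function, it can be run locally with no cloud account.

```python
import json

def handler(event, context):
    """React to one business event; the platform decides when and how often this runs.

    The event schema is HYPOTHETICAL: {"type": ..., "order_id": ..., "total": ...}.
    """
    if event.get("type") != "order_placed":
        # Ignore events this function is not responsible for.
        return {"statusCode": 204, "body": ""}
    # Keep the work small and stateless: derive a result from the event and return it.
    priority = "high" if event.get("total", 0) >= 1000 else "normal"
    body = {"order_id": event["order_id"], "priority": priority}
    return {"statusCode": 200, "body": json.dumps(body)}

if __name__ == "__main__":
    # Local invocation: no server and no framework, just a function and an event.
    print(handler({"type": "order_placed", "order_id": "A-1", "total": 1500}, None))
```

The design choice worth noting is that the function holds no state between calls, which is what lets the platform run as many or as few copies as events demand and bill only for the time they run.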

With FaaS and serverless gaining momentum, we are seeing fundamental changes to the traditional way in which decisions around technology are made, with roles like the CIO evolving at enterprise level now that there isn’t the same level of vendor negotiations. “Cloud providers are basically the same price across the board, meaning there is little room for negotiation, other than length of contract. However, signing up to long-term single-cloud contracts introduces the risk of having a spending commitment with a cloud that doesn’t offer the features that you need in the future to deliver business value. In this respect, the CIO is still necessary,” adds Richard Slater.

The current industry climate demands an increase in specialised IT skills that can cater to serverless digital transformation. If business leaders want to deliver, they need to let go of the ‘command and control’ approach and empower teams to be accountable. Creating the environment, and securing the right skillsets, to develop, own and operate applications from within the same team calls for a new breed of IT engineering. Organisations wanting to embrace digital transformation and this new breed of cloud service delivery must start to trust the individuals who are closest to the business and writing code on the ground. “To a certain extent this trust must be earned, but in many of today’s enterprises there is so much governance around technical delivery that it has the effect of slamming the brakes on any transformation,” concludes Richard Slater, Principal Consultant at Amido.

We’ve seen trends come and go over the years, but with global companies like Expedia and Netflix embracing serverless computing, and cloud heavyweights Amazon, Google and Microsoft offering serverless computing models in their respective public cloud environments, FaaS seems here to stay.

With the General Data Protection Regulation (GDPR) on the horizon, businesses that wish to operate in the European Union are having to spend more time than ever thinking about compliance.

By Mark Baker, Field Product Manager, Canonical.

Not only does all personally identifiable customer data need to be accounted for – a task that is easier said than done for many organisations – but internal processes also have to be updated and employees educated to ensure the compliance deadline of 25th May 2018 is met.

Of course, GDPR is just one legislative challenge facing businesses. Financial services firms, for example, have a revamped version of the Markets in Financial Instruments Directive (also known as MiFID II) to respond to, while the UK telco industry is facing the prospect of new legislation being enforced after Brexit.

Falling foul of industry regulations can result in massive financial penalties, as well as reputational damage and a loss of customers, so organisations simply can’t afford to be complacent.

However, fear of the complexity of managing compliance in new infrastructure, as well as the effort already involved in ensuring existing systems are ready, is prompting many businesses to shy away from cloud, despite the many benefits such services offer. These concerns stem primarily from a misconception that cloud platforms, with data held by third parties on shared systems, will be more difficult to manage than traditional in-house systems and potentially less secure, but the truth is very different.

Public cloud services can be extremely secure and often can be a more secure option than in-house systems. So, what exactly is behind this misconception and why should businesses be trusting public cloud services with their compliance needs?

Privacy please

On the face of things, it’s easy to see why many people would assume on-premises infrastructure is more secure and easier to manage. In theory, businesses know exactly where their data is being stored and who has access to it, both of which provide comfort for organisations.

They can also design the architecture to suit their own specific needs and preferences, as well as reducing the risk of data loss if a public cloud provider goes out of business. One could argue that such a setup would be particularly appealing to businesses operating in highly regulated industries, such as healthcare and financial services, which need to have greater visibility and control over how their data is managed.

However, firms would be wise to remember that operating their own private cloud places the responsibility of security and compliance squarely on their shoulders. Businesses are at the mercy of the whims of nature and the resilience of their local power grid, potentially leaving them helpless if something goes wrong.

It also leaves them vulnerable to disgruntled employees and internal data theft. Employees may have easy access to confidential data, sometimes with very little to stop them from stealing corporate information simply by pulling a disk from a server and leaving the building with it. Often employees can also connect USB drives which have been used in home systems and may contain malware or viruses. Huge faith is placed in the firewall as an effective means of keeping intruders out, yet backdoors may well exist in the form of legacy and unsecured modem connections, as well as poor access control processes that leave user credentials in place long after the relevant employee has left the company.

So just because infrastructure is in your data centre doesn’t mean it is inherently more secure, resilient or suitable to meet the needs of regulatory compliance than public cloud.

Going public

While some businesses may feel more comfortable knowing their data is being stored within their own walls, data location is only one small aspect of security and compliance.

Along with the provision of innovative new services to enable business growth, it is the job of public cloud providers to protect their customers’ data. A central component of their value proposition, therefore, is the delivery of systems, tools and continuity plans that make their cloud infrastructure safe and secure.

This applies to both virtual and physical means of protection. Corporate data will be stored in a secure facility with multiple layers of physical security that are often not present if businesses opt to run their cloud infrastructure in-house.

And, with competition in the market continuing to increase at a rapid rate, ensuring compliance is not only a valuable competitive advantage for businesses offering public cloud services, but also essential to gaining customer trust and, in turn, loyalty. In this respect, smart cloud providers such as City Cloud are leading the way with a value proposition focused very much on regulatory compliance.

Public cloud providers are also likely to carry out software patching on a more regular basis, which is essential to managing compliance. Businesses running their own private clouds will generally be slower to patch security gaps, leaving themselves exposed to potential data breaches and compliance holes. The recent Spectre and Meltdown vulnerabilities are a good example of this, with Google, Microsoft and Amazon all patching their systems quickly after the problems became public. Meanwhile, many businesses will still be trying to determine which systems they need to patch and how to go about it.
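
At its simplest, working out which systems still need a patch means comparing an asset inventory with the first fixed version named in a vendor advisory. The sketch below uses a hypothetical inventory format and made-up package names and versions (`example-kernel`, `example-firmware`). It illustrates the check only and is not a patch-management tool.

```python
# Hypothetical "first fixed" versions for illustration only; real values come from vendor advisories.
FIXED_VERSIONS = {"example-kernel": "4.2.7", "example-firmware": "2.0"}

def parse_version(version: str) -> tuple:
    """Turn a dotted version such as '4.2.7' into a tuple of ints (4, 2, 7).

    Simplified: real package versions often carry suffixes that need a proper parser.
    """
    return tuple(int(part) for part in version.split("."))

def is_older(installed: str, fixed: str) -> bool:
    """Compare versions after padding with zeros, so '2' and '2.0' count as equal."""
    a, b = parse_version(installed), parse_version(fixed)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a < b

def hosts_needing_patch(inventory: dict, fixed_versions: dict) -> list:
    """Return hosts whose installed package predates the first fixed version.

    inventory maps host name -> (package name, installed version).
    Packages with no known fix are skipped rather than flagged.
    """
    pending = []
    for host, (package, installed) in inventory.items():
        fixed = fixed_versions.get(package)
        if fixed is not None and is_older(installed, fixed):
            pending.append(host)
    return sorted(pending)

inventory = {
    "web-01": ("example-kernel", "4.2.7"),
    "web-02": ("example-kernel", "4.2.3"),
    "app-01": ("example-kernel", "4.10.0"),
    "fw-01": ("example-firmware", "2"),
}
print(hosts_needing_patch(inventory, FIXED_VERSIONS))
```

Comparing integer tuples instead of raw strings matters here. As strings, "4.10.0" would sort before "4.2.7".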

Furthermore, public cloud providers tend to have highly skilled and experienced IT teams, which isn’t something that can be said for all businesses. The skills gap issue is an extremely prevalent one in the cloud world and businesses are finding it harder than ever to attract talented developers. This is causing problems when it comes to addressing the more technical compliance challenges, which could be solved using third-party infrastructure.

Add in the fact that businesses will not be alone when defending against attacks and the skills argument provides compelling support for the merits of using third-party providers to ensure legislative compliance.

The combination of these factors means that in many cases public cloud can actually be a better option than a private cloud for systems with high security and compliance requirements. It can certainly be a less complicated option for businesses and help to give them peace of mind amidst shifting regulatory landscapes.

As end users become far more sensitive about the security of their personal data and initiatives like Open Banking come into effect, the challenges are only going to grow. That’s why organisations today, rather than shying away from public infrastructure, should be embracing it as part of a hybrid cloud offering on their journey to compliance.

However, the importance of investing varies when viewed from the perspective of senior level executives compared with those on the frontline of an organisation.

This digital transformation has resulted in businesses becoming far more reliant on technology to manage day-to-day operations and for efficient decision making. To be successful in this environment, organisations must evolve to be smarter, stronger and faster than they are today, enabled by changes across three dimensions: organisation and talent; business and customer; and process and technology.

Those leading the change will have a distinct advantage over their competitors — a performance gap that will continue to grow as emerging technologies and digital channels offer further insight using data from both within and outside the enterprise.

However, only 55 per cent of those at entry level agree, so as the evidence shows, the investment strategy is not filtering through from the top to the frontline. If a business invests well even in basic technology, it can instantly boost staff motivation and productivity as well as appeal to prospective customers.

Employees expect an easy, quick and efficient experience whenever they use a device, whether onsite or remotely. For most businesses, the workforce is their largest investment. From motivating and developing talent to creating an engaged workforce, the effectiveness of employee management has a direct impact on business results and competitiveness.

The role of IT has evolved from its historical position as a provider of technology to challenging processes and leading digital change across organisations on a global scale. Reflecting this, 69 per cent of employees believe their company is investing in mobile devices to differentiate itself from the competition.

The market has shifted, and businesses are now embracing mobility as a game-changer, lowering the overall cost of IT investment by reducing device costs, making business operations more efficient and making employees more productive - key elements in growing revenue and increasing profitability.

Leveraging a mobile strategy has many distinct advantages including a higher level of employee responsiveness and engagement.

Today, digital transformation is infiltrating every aspect of an organisation and as a result, technology demands across departments have skyrocketed. It is now critical for senior executives to effectively manage those out in the field.

Moscow IT Department has completed the implementation of a unified IoT platform monitoring and controlling over 22,000 communal vehicles.

The Department of Information Technologies of Moscow (DIT) has completed the implementation of a unified IoT platform for monitoring and controlling over 22,000 communal vehicles: street sweepers, snowplow and waste trucks, water carts, etc. With the launch of the project, millions of Moscow citizens now have a chance to experience the benefits of living in an IoT-dominated landscape.

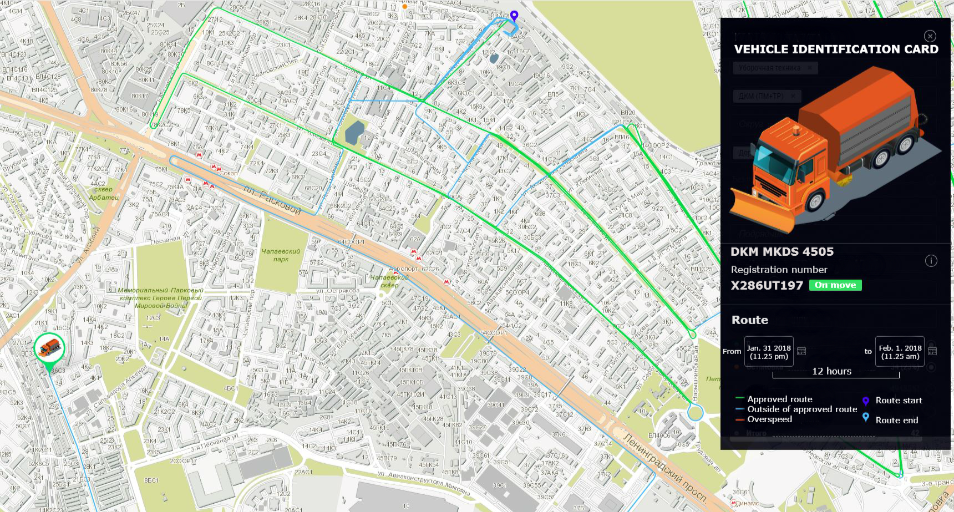

All communal vehicles have been integrated into a unified network and are equipped with special sensors that allow the city authorities to track routes as well as control speed, fuel consumption and operating mode. AI generates a daily scope of work for each vehicle based on the weather forecast. The system automatically sets the working period and calculates the optimal route, selected from a database of route patterns. The GLONASS satellite navigation system makes it possible to identify the precise location of each vehicle and thus plan its route as efficiently as possible, while special fuel sensors help to reduce fuel consumption.
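
The article does not describe the algorithm Moscow uses, so the following is only a hypothetical sketch of the idea. From a stored set of route patterns, it picks the shortest one that still covers the roads a vehicle has been assigned. It then scales the fuel estimate by a weather-dependent workload. The `RoutePattern` fields, the one-pass-per-10-cm rule and the fuel rate are all assumptions.

```python
import math
from dataclasses import dataclass

@dataclass
class RoutePattern:
    name: str
    length_km: float    # distance driven when following this pattern
    coverage_km: float  # road length the pattern actually clears

def passes_needed(forecast_snow_cm: float) -> int:
    # Hypothetical rule: one pass per started 10 cm of forecast snow, at least one pass.
    return max(1, math.ceil(forecast_snow_cm / 10))

def pick_route(patterns, required_coverage_km, fuel_l_per_km, forecast_snow_cm):
    """Return (pattern, estimated litres) for the shortest stored pattern
    that covers the assigned road length."""
    candidates = [p for p in patterns if p.coverage_km >= required_coverage_km]
    if not candidates:
        raise ValueError("no stored route pattern covers the assigned roads")
    best = min(candidates, key=lambda p: p.length_km)
    litres = best.length_km * fuel_l_per_km * passes_needed(forecast_snow_cm)
    return best, litres

# Usage with made-up patterns: B is the shortest pattern that covers 25 km.
patterns = [RoutePattern("A", 40, 30), RoutePattern("B", 35, 30), RoutePattern("C", 20, 15)]
best, litres = pick_route(patterns, required_coverage_km=25, fuel_l_per_km=0.5, forecast_snow_cm=25)
print(best.name, litres)  # B 52.5
```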

AI calculates the daily scope of work and the working period for each vehicle, and plans the most efficient route.

The IoT platform helps to optimize the routes of municipal vehicles and thus avoid excess fuel consumption. The savings amount to 9.1 million rubles (162,000 USD) per month, equivalent to the cost of two brand-new snowplow trucks.
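
Dividing the quoted figures gives the implied exchange rate and the implied price of one snowplow truck. Both are derived from the numbers above and were not separately reported:

$$
\frac{9{,}100{,}000\ \text{RUB}}{162{,}000\ \text{USD}} \approx 56.2\ \text{RUB/USD},
\qquad
\frac{9{,}100{,}000\ \text{RUB}}{2\ \text{trucks}} = 4{,}550{,}000\ \text{RUB} \approx 81{,}000\ \text{USD per truck}.
$$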

Special sensors installed on vehicles transmit data on speed and fuel consumption.

Moscow has recently received a record month’s worth of precipitation: 47 centimetres (about 18.5 inches) of snow covered the capital over the past weekend and the start of the week. This extreme weather became a real stress test for the newly implemented system, and the system passed it. More than 15,500 units of equipment controlled by the IoT platform were deployed to remove an unprecedented 1.2 million cubic meters of snow. Despite the extreme weather conditions, no public transport breakdowns were recorded.

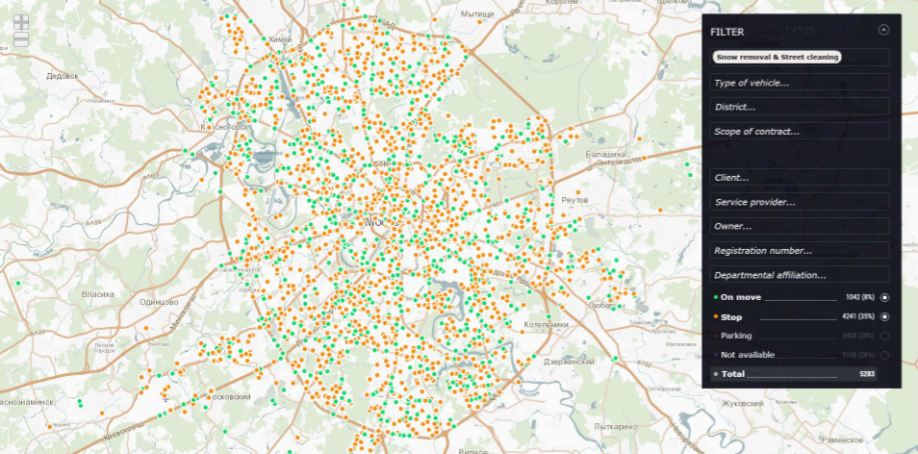

“Navigation and telemetric data accumulated via the unified IoT platform allows the relevant departments of the Moscow Government to monitor and control the activities of communal vehicles operating in Moscow. Thus we have full online access to, and 24/7 real-time control of, each of the 22,000 vehicles,” commented Artem Ermolaev, CIO of Moscow. “The first days of February brought record-breaking snowfalls to Russia’s capital; however, thanks to the coordinated work of the city authorities and the unified IoT platform, we were able to react quickly and resolve the crisis,” said Mr Ermolaev.

Telemetric and navigation data from the vehicles’ data transmission systems is sent to the dispatch center of the Department of Housing and Communal Services and Improvement of Moscow. All the data is transmitted over secure encrypted channels. The center collects, processes, transfers and stores the data, which makes it possible to manage and control communal vehicles in real time.

The IoT platform provides access to the data according to the position and level of the requesting official. For instance, the Mayor of Moscow and the executives of the Department of Housing and Communal Services and Improvement of Moscow have full access to the database, while the access of municipal authorities and service providers is limited: they receive only the data on the districts that fall under their responsibility.
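
The access rule described above can be sketched as a simple filter: a few senior roles see everything, and everyone else sees only their own districts. The article does not describe the platform’s actual data model, so the role names, district names and record fields below are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical role names that receive unrestricted access.
FULL_ACCESS_ROLES = {"mayor", "housing_department_executive"}

@dataclass
class Official:
    name: str
    role: str
    districts: set = field(default_factory=set)  # districts under this official's responsibility

@dataclass
class VehicleRecord:
    vehicle_id: str
    district: str
    status: str

def visible_records(official, records):
    """Return only the telemetry records this official is allowed to see."""
    if official.role in FULL_ACCESS_ROLES:
        return list(records)
    # Everyone else is limited to the districts they are responsible for.
    return [r for r in records if r.district in official.districts]

records = [
    VehicleRecord("V1", "Tverskoy", "moving"),
    VehicleRecord("V2", "Arbat", "on hold"),
    VehicleRecord("V3", "Tverskoy", "on hold"),
]
local = Official("district official", "municipal_authority", {"Tverskoy"})
print([r.vehicle_id for r in visible_records(local, records)])
```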

The IoT platform identifies the precise location of each of the 22,000 vehicles in real time. Vehicles marked green are on the move; those marked orange are on hold.

Telemetric data allows housing and communal services to provide round-the-clock technical support and maintenance, as well as timely replacement of vehicles. The system monitors engine performance metrics, warning about potential breakdowns and equipment wear. Experts say that the next step could be monitoring drivers’ performance: the installed sensors would be able to detect the first signs of fatigue and recommend that a driver stop for a rest, thus increasing road safety.
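
As a rough sketch of how engine telemetry can be turned into maintenance warnings, the function below checks a single reading against fixed limits. The metric names and thresholds are invented for illustration. A production system would more likely use manufacturer limits and look at trends across many readings.

```python
# Hypothetical limits; real values depend on the engine model and manufacturer guidance.
LIMITS = {
    "coolant_temp_c_max": 105.0,
    "oil_pressure_kpa_min": 150.0,
    "engine_hours_between_services": 500.0,
}

def maintenance_warnings(reading: dict) -> list:
    """Return human-readable warnings for one telemetry reading.

    Missing metrics are treated as healthy rather than raising an error.
    """
    warnings = []
    if reading.get("coolant_temp_c", 0.0) > LIMITS["coolant_temp_c_max"]:
        warnings.append("engine overheating")
    if reading.get("oil_pressure_kpa", float("inf")) < LIMITS["oil_pressure_kpa_min"]:
        warnings.append("low oil pressure")
    if reading.get("engine_hours_since_service", 0.0) >= LIMITS["engine_hours_between_services"]:
        warnings.append("service due")
    return warnings

print(maintenance_warnings({"coolant_temp_c": 110, "oil_pressure_kpa": 300, "engine_hours_since_service": 520}))
```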

IoT is set to become one of the primary drivers of digital transformation in 2018 and beyond. Smart cities worldwide are quickly moving to bring IoT solutions into daily life, and telemetry technology in particular is thriving, with vehicles monitored in real time by thousands of sensors. Telemetry thus forms a solid basis for smart infrastructure, a stepping stone towards the self-driving vehicles that will shape the future of transport.

Large organisations are caught between a rock and a hard place: as agile companies continually disrupt established markets and industries, their only option to avoid failure is to innovate. Just look at how Netflix’s digital transformation rendered Blockbuster redundant. This highlights the very real issue facing large legacy enterprises today.

By Bob Davis, CMO of Plutora.

The new reality is that every company needs to be a digital, software-powered company. IT projects and software functionality have permeated every aspect of every business, from backend processes all the way to customer-facing innovation, and application development is at the heart of many of these processes. Staying competitive requires swift digital transformation capable of keeping pace with the ever-increasing demand for faster app releases and updates. While small businesses and start-ups can thrive on this demand, large enterprises face many obstacles to speeding up software delivery.

There are key drivers behind the need to innovate and release software faster, but the question business leaders are left with this year is, “how do we actually increase speed and be more responsive without losing control?” To answer this question, instead of looking for small changes to project management or operations that could increase productivity, enterprises should look to automation to overhaul their release management processes and provide the means to bridge the gap between engineering speed and project management visibility, specifically:

1. Necessary acceleration

There has been plenty written about the advantages of adopting agile DevOps at the engineering level to help automate and streamline technical processes. However, less is mentioned of the need for project management teams to handle all of the different automation solutions the engineering teams deploy. With manual processes, there’s no way to accelerate support for the engineering side, so embracing automation where possible, and sensible, within release management will enable teams to keep pace with their counterparts in development.

2. Managing risk

Every software release is accompanied by a certain level of risk for the business. Whether it’s a bug in a customer-facing system that leads to revenue loss, or an internal IT system that crashes and diminishes productivity, software release risk must be managed. As delivery demand increases, manual release management also brings with it some additional risk as project management teams work to keep pace with increased release cycles. Automated release management provides additional visibility into the total risk landscape – something manual processes can’t provide.

3. Juggling delivery pipelines

When release schedules were based on a 12-month or bi-annual pace, it was much easier for project managers to manually track delivery pipelines. However, daily or weekly cycles are creating major overlap between delivery pipelines – and the sheer volume of delivery pipelines increases on a near-daily basis. Additionally, one failed process or pipeline conflict can compromise many other applications and projects, causing a ripple effect throughout an organisation. Managing these pipelines with spreadsheets simply won’t work anymore, driving the need for delivery management change, and automation is here to answer the call.
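
One concrete job that outgrows a spreadsheet is spotting releases that book the same shared environment at overlapping times. The sketch below assumes a hypothetical `Release` record with a target environment and a time window. It compares every pair, which is fine for a few hundred releases; a sort-and-sweep approach would scale better.

```python
from dataclasses import dataclass
from datetime import datetime
from itertools import combinations

@dataclass
class Release:
    name: str
    environment: str  # shared target, e.g. a test or production environment
    start: datetime
    end: datetime

def find_conflicts(releases):
    """Return name pairs of releases that book the same environment at overlapping times."""
    conflicts = []
    for a, b in combinations(releases, 2):
        same_env = a.environment == b.environment
        # Half-open windows: one release ending exactly when another starts is not a clash.
        overlap = a.start < b.end and b.start < a.end
        if same_env and overlap:
            conflicts.append((a.name, b.name))
    return conflicts

releases = [
    Release("payments-2.3", "uat", datetime(2018, 3, 1, 9), datetime(2018, 3, 1, 12)),
    Release("mobile-5.1", "uat", datetime(2018, 3, 1, 11), datetime(2018, 3, 1, 14)),
    Release("crm-1.8", "prod", datetime(2018, 3, 1, 10), datetime(2018, 3, 1, 13)),
    Release("search-4.0", "uat", datetime(2018, 3, 1, 14), datetime(2018, 3, 1, 16)),
]
print(find_conflicts(releases))
```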

Uniting your teams

One of the main challenges businesses will continue to face in 2018 when trying to speed up the IT delivery process is the widening gap between the management portions of IT – delivery and operations teams. Project teams are largely focused on the development and delivery functions, and generally accept and facilitate improvements to increase the speed of delivery. With a deep concern for making delivery faster, better, and cheaper, the delivery teams are usually very responsive to the adoption of automated technology and the acceleration necessary for digital transformation.

The operations side, on the other hand, is concerned with securing and protecting the integrity of production environments. While this governance and quality assurance is vital to the delivery process, manual approaches to operations are a hindrance to the modern rate of releases. When engineering teams followed relatively slow and methodical release cycles, the gap between project management and operations was manageable. Business demand for greater agility necessitates a more streamlined and automated approach to quality and risk.

Deeper down the value stream, engineering teams will use numerous tools and platforms to build new software and release it. For example, there could be hundreds of projects going on at once, each one supported by tools such as Puppet, Jenkins, JIRA, Rally, and more. With so many tools, individual engineering teams have plenty of resources to speed up their processes – but only in isolated pockets. To break down the silos, both development and operations need a way to coordinate across multiple tools to gather the necessary information to manage delivery processes holistically.

Automated enterprise release management is the bridge between project delivery and operations. It connects the two management components to the underlying engineering level – no matter the tools they choose to use. Enterprise release management sits between project management and operations, and builds a comprehensive view of all information related to IT delivery throughout the organisation.

Why automated enterprise release management is a bonus

Streamlining the release management, test environment management, and deployment processes enables changes to be made more quickly. By keeping up with release demand, companies can deliver a differentiated customer experience necessary for survival in increasingly competitive digital markets.

Traditional manual spreadsheet strategies often lead to rework for IT delivery teams – especially when demands increase to meet digital transformation needs. Automated enterprise release management eliminates the need to reconcile inconsistent information on a day-to-day basis.

Similarly, unifying release processes throughout the organisation allows management teams to absorb more information about changes while controlling IT service quality even under heavy speed demands.

For most organisations, digital transformation is either up and running or on the horizon. This year more companies will be looking to implement an automated enterprise release management solution that enables them to increase their speed and responsiveness to market changes by addressing the risk management element of IT delivery. When quality management and governance are also built into automated enterprise release management solutions, digital transformation will become a much less risky venture.

"Never waste a good crisis," as the saying often attributed to Churchill goes. And the next big crisis about to hit digital businesses, in many people's eyes, is the General Data Protection Regulation (GDPR). This is a once-in-a-lifetime "crisis" that the best firms will "not waste", using it to overhaul their business processes and their culture of compliance.

By Vivek Dodd, Chief Compliance Officer, Skillcast.

Whilst many will see compliance with GDPR (and other legislation) as a burden on business, it is better to accept it as something that is part and parcel of business life. GDPR and other regulatory measures create a level playing field that benefits the ethical business, restores the trust of consumers, and provides a clearer environment for companies to operate in.

Compliance with regulations applies to all businesses. So the companies that can absorb the regulations and create a culture of compliance will have a competitive advantage and will thrive over time.

Now, with GDPR upon us, it's the perfect opportunity for you to make your employees sit up, take notice and change their behaviour to act with more care, integrity and compliance. The way to do this quickly and inexpensively is with digital training and compliance apps that provide instant access, streamlined logistics and people analytics, so that you can effectively monitor and manage compliance in your organisation.

Of course, in many arenas classroom training is a favourite, but it is increasingly looking ill-equipped and outdated for the demands of the modern world, particularly for the cutting-edge digital market.

Mobile phones are now more powerful than many laptops were just a few years back, and high bandwidth, video and data analysis are all available to engage and train staff in imaginative ways.

Whilst digital learning is a great solution, it is also really worth taking the time with compliance training to carefully look at the detail and work out who really needs to know what. The training has to be relevant, and there is nothing more frustrating than learning new information that will never be applied. We can all recall times when we’ve taken time away from our day job to be on courses where much of the material was a waste of time and not appropriate to the job we do.

So, it’s vital to be precise with the content - after all employees should be engaged and then have the option to choose how and when they will undertake their learning. This allows the workforce to buy in.

The content required for compliance programmes can take many diverse forms, from professionally developed micro-learning videos, interactive scenarios, e-books, podcasts, articles and research reports to the less polished, informal content generated by blogs and vlogs.

Also, it’s worth adding that recent research by Deloitte reveals that companies are investing considerably in programmes that use data for workforce planning, talent management and operational improvement.

Such analysis of data can build knowledge and competency maps that show areas for improvement for an individual and/or even the entire organisation, whilst also providing a measure of success for learning interventions and/or the entire learning programme.

This also paves the way for adaptive learning, whereby the learning platform recommends learning based on an employee's job role, experience and previous knowledge of the subject.
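
Here is a minimal sketch of that recommendation step. It assumes a hypothetical module catalogue in which each module lists the roles it applies to, whether it is introductory, and a mastery score that lets an employee skip it. The five-year experience cut-off and all module names are illustrative assumptions, not features of any particular learning platform.

```python
# Hypothetical catalogue: module -> applicable roles, level, and the prior score that counts as mastery.
CATALOGUE = {
    "GDPR essentials": {"roles": {"all"}, "level": "intro", "mastery": 0.8},
    "Handling subject access requests": {"roles": {"customer_service", "data_protection"}, "level": "core", "mastery": 0.7},
    "Data breach reporting": {"roles": {"it", "data_protection"}, "level": "core", "mastery": 0.75},
}

def recommend(role: str, years_experience: float, prior_scores: dict) -> list:
    """Recommend modules that match the job role, experience and previous knowledge.

    prior_scores maps module name -> best previous assessment score (0 to 1).
    """
    plan = []
    for module, spec in CATALOGUE.items():
        relevant = "all" in spec["roles"] or role in spec["roles"]
        # Hypothetical rule: staff with five or more years' experience skip introductory modules.
        if spec["level"] == "intro" and years_experience >= 5:
            continue
        # Recommend only relevant modules the employee has not yet mastered.
        if relevant and prior_scores.get(module, 0.0) < spec["mastery"]:
            plan.append(module)
    return plan

print(recommend("it", 1, {}))
```

The same scores that drive these recommendations could feed the knowledge and competency maps described above.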

Within digital learning, one trend taking hold is that organisations furthest up the maturity curve are aligning learning much more closely with job performance. It's no longer standard to conduct training in isolation in the hope that some of it will be retained by employees when they go back to their daily roles. Instead, they want training to be integrated with performance support apps and suitable job aids, for instance by integrating RegTech tools with digital learning to reduce operational complexity and improve compliance with laws and regulations.

Also, what cannot be emphasised enough is that compliance training, and indeed all training, has to be enjoyable. Like everything in life, when something is fun it is so much easier to take on board information and retain it.

A good way to do this is by adding elements of gamification, such as interesting storylines, non-linear pathways, timers and elements of risk taking. Another way is to recognise and reward employees for their achievements, both online and offline.

In short, the demands of compliance will not go away, so adopt an across the board, enthusiastic approach, and then go about the task knowing that the days of sitting at a desk and forgetting what you’ve learnt have finally gone.

Again, it is worth remembering that it has always been the case that employees are at their happiest learning when they can exercise control over how they access their training and have a selection of exciting pathways that they can take to complete it.

The digital industry needs no convincing that technology will help it adapt how it trains its staff, and no doubt it will see much higher employee engagement, staff retention and productivity, plus the added bonus that compliance will hopefully no longer be viewed as a burden.

The learning solutions are here. It’s up to you to harness them to create that culture of compliance and get a head start on your competitors.

As we settle into 2018, Europe has a fantastic opportunity to firmly establish itself as a hive for digital innovation. We will see significantly more forging of cross-industry partnerships as organisations strive to deliver on their promise of a fully digital offering. In addition, augmented reality (AR), blockchain and robotics will move out of pilots into mainstream adoption.

By Euan Davis, European Lead for Cognizant’s Center for the Future of Work.

Here are five key trends to look out for in 2018 and recommendations on how businesses can make the most of upcoming opportunities:

1. Brands begin to blend the physical with the virtual - AR edges into the mainstream

Over the course of this year, we will see AR weave its way into more serious B2B and B2C interactions, improving internal processes and business models. Immersive technology will underpin brands of the future thanks to its ability to make stronger connections with people. AR will quickly appear within experiences and transactions in both our personal and business lives, in the form of what we call ‘journeys’ – blending people, places, time, space, things, and events. Organisations will also begin weaving these immersive technologies into customer, employee, supplier and partner physical interactions, increasingly using them in training or as a sales tool, for example.

The most successful businesses will be those using the technology to create captive moments that draw in people’s attention by creating personalised stories for each consumer. This is a trend that will see consumers continue to favour spending money on experiences, such as visiting a pop-up virtual reality (VR) game in their city, over buying ‘things’ such as the latest TV.

2. Europe continues to build-out the economic foundations for a digital future

Traditionally, the USA has been the world leader in software and Asia the leader in hardware. In a global context, while full of innovative digital businesses, Europe still lacks the scale of the existing global tech companies. Yet, the basis of modern day Europe – as an open, collaborative and pluralist continent – will form the foundations for a successful digital economy in 2018. Furthermore, the impact of new technologies applied to all aspects of business and society is so large that there is no way to escape its gravitational pull.

Expect to see regional leadership emerge around the innovation needed to build the industries of the future, such as next-generation education, smart cities, connected healthcare and autonomous vehicles. As Europeans master the digital economy, we shall see a rise in employment, improved productivity and stronger social cohesion. In this vein, the four freedoms that underpinned the single market of the 1990s (goods, services, capital and people) could get a fifth, as Europe builds the foundation for a digital single market.

This year, European economies will continue to make smart bets on their future, and the start-up scene will see new investment funds open up for the likes of big data, artificial intelligence (AI) and VR. Additionally, the region’s leadership on digital regulation will stand it in good stead to establish itself as a long term sustainable leader in industries of the future.

3. Ecosystems accelerate the breakdown of traditional industry boundaries

Cross-industry partnerships are nothing new, but in 2018 these relationships will begin to blur the lines between industries to an even greater degree as sectors begin to “cross-source” functional areas of expertise from one another. As opportunities to integrate additional functions into existing products or services multiply, organisations will realise that collaborating with other industry experts will speed up the process, while at the same time exposing them to a more diverse customer base, across multiple industries.

For instance, last year Ford integrated Amazon Alexa to run its in-car infotainment systems. Whilst Ford is diluting its direct exposure to its captive customer base, the quality of its digital work is greatly improved through its collaboration with Amazon and therefore boosts the overall customer experience. Equally, Amazon gains increased exposure to Ford’s customers. A win-win situation for both.

4. Blockchain begins to truly influence consumers and business

The stratospheric rise of Bitcoin in 2017 revealed a growing public awareness of crypto-currencies and the blockchain technology that underpins them. Blockchain, over the last couple of years, has been largely confined to proof of concept projects, but this year we will begin to see a flood of live blockchain-enabled projects aimed at transforming B2B and B2C transactions.

Following a year of high-profile data breaches in 2017, blockchain technology has arrived at a time when consumer trust is at its lowest ebb. Successful blockchain rollout will begin to positively influence consumer trust in organisations. We will see new business models emerge as companies begin to understand and take advantage of secure data transfer. Elsewhere, cross-industry collaborations will take a leap forward as smart contract technology, based on blockchain, will allow for the secure transfer of consumer data. Regulators across the world will be working with businesses to keep up with the momentum of blockchain adoption.

5. People begin to embrace, rather than fear, the machine

New types of work are now emerging thanks to machines, despite the doom and gloom around automation and AI from some commentators. Machines still need humans and we are beginning to get a realistic vision of how they will work together. From ‘data detective’ to ‘personal memory curator’, new jobs will start to emerge as business leaders evaluate the working relationship between people and machines.

As outlined in a recent Cognizant whitepaper, ‘21 Jobs of the Future’, based on the major macroeconomic, societal, business and technology trends observable today, new types of work are emerging. In the future, we are likely to see more accurate forecasts about the impact of technology on jobs, focusing on how technology will continue to improve the human experience, not rob us of our humanity.

Machine learning may sound expensive and out of reach, but it needn't be. Nearly every major machine learning implementation has been made available as open source, with platforms on offer from Amazon, Google, Microsoft, Baidu and many more.

By Chris Adams, President and COO, Park Place Technologies.

These "kits" substantially reduce the knowledge base required to apply machine learning, to the point where it can be nearly turnkey. In fact, machine learning has become so accessible that even the least technically savvy reporters are trying their own experiments.

Thus the barrier is no longer obtaining the PhD scientists required to build a bespoke machine learning application—although some big, moneyed players like Uber are doing just that—it’s about using the tools on the market in ways that truly drive value for the business.

Here’s what various experts recommend to those embarking on the machine learning mission:

In short, machine learning is more accessible than many believe, requiring less data than many IT pros might expect. And it's not a whole lot different from other technological tools. To work for the business, it needs to be built into the existing processes, culture, and oversight/governance structures within the enterprise.

Need another bit of encouragement? You won’t be far behind most in the field.

We may all be talking about neural networks, but “[t]he state of the practice is less futuristic,” opines TechCrunch. Most applications of machine learning, even among the tech leaders, are using the same algorithms and engineering tools from years ago. Regression analysis, decision trees, and similar methods are driving ad targeting, product recommendations, and search results ranking to a greater degree than sexy “deep learning” advancements.
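To make the point concrete, here is a minimal sketch of the kind of long-established regression technique the paragraph describes: a straight-line fit by ordinary least squares in plain Python. It is an illustration only, not how any particular ad platform works; the function names and the ad-spend/clicks data are hypothetical.

```python
# Ordinary least-squares fit of y = slope * x + intercept.
# Hypothetical example: predicting clicks from ad spend.


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Return (slope, intercept) minimising the squared error over the points."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired observations")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the 1/n factors cancel.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    slope = sxy / sxx
    # The fitted line always passes through the point of means.
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope: float, intercept: float, x: float) -> float:
    """Evaluate the fitted line at x."""
    return slope * x + intercept


if __name__ == "__main__":
    spend = [1.0, 2.0, 3.0, 4.0, 5.0]         # hypothetical ad spend
    clicks = [12.0, 19.0, 29.0, 37.0, 45.0]   # hypothetical clicks
    m, b = fit_line(spend, clicks)
    print(f"slope={m:.2f} intercept={b:.2f} forecast(6)={predict(m, b, 6.0):.1f}")
```

With the sample data the fit comes out at a slope of roughly 8.4 clicks per unit of spend. Nothing here is "deep learning", which is exactly the article's point.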

What’s more, there are infrastructure issues yet to be solved. The majority of time devoted to machine learning is spent preparing and monitoring the learning tools. Building the AI is a relatively small part of the picture.

Unfortunately, preparing data is a hassle, and the “bigger” the data, the worse the problems. Using scripts to consolidate duplicates, normalize metrics, and so on, can involve days of manual labor for a single run.
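The two clean-up steps named above, consolidating duplicates and normalizing metrics, can be sketched in a few lines of plain Python. This is a minimal illustration under assumed conditions: the record layout (an ID plus one numeric metric), the keep-first rule for duplicates and min-max scaling are all hypothetical choices. Real pipelines pick their own matching keys and scaling method, which is where the days of manual labor tend to go.

```python
# Hypothetical record layout: (customer_id, metric_value).


def deduplicate(records: list[tuple[str, float]]) -> list[tuple[str, float]]:
    """Keep the first record seen for each customer_id, preserving order.

    Keep-first is an assumed policy; conflicting duplicates are simply dropped.
    """
    seen: set[str] = set()
    unique = []
    for key, value in records:
        if key not in seen:
            seen.add(key)
            unique.append((key, value))
    return unique


def min_max_normalize(values: list[float]) -> list[float]:
    """Rescale values linearly into [0, 1]; a constant column maps to 0.0."""
    if not values:
        return []
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def prepare(records: list[tuple[str, float]]) -> list[tuple[str, float]]:
    """Deduplicate, then normalize the metric column."""
    clean = deduplicate(records)
    keys = [k for k, _ in clean]
    scaled = min_max_normalize([v for _, v in clean])
    return list(zip(keys, scaled))


if __name__ == "__main__":
    raw = [("a", 10.0), ("b", 30.0), ("a", 10.0), ("c", 20.0)]
    print(prepare(raw))
```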

Big data can also lead to big machine learning errors, so monitoring production models is essential. Again we reach an impasse: When moving into unsupervised machine learning, where the correct output isn’t known in advance, traditional testing and validation tools no longer work. So how is IT to determine if the model is making “good” predictions? Dashboards and program alerts fill the gap at the development level, and more capable and specific tools are finally being developed by a few innovators.
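One common way to build such an alert when there is no ground truth is to compare the distribution of a model's outputs in production against a baseline period. The sketch below applies the population stability index (PSI) to, say, the cluster labels an unsupervised model assigns. PSI is a swapped-in illustrative technique, not one the article names, and the 0.25 alert threshold is a widely used rule of thumb rather than a standard; the function names are hypothetical.

```python
import math
from collections import Counter


def shares(labels: list[str], categories: list[str], eps: float = 1e-6) -> list[float]:
    """Fraction of labels in each category, floored at eps to avoid log(0)."""
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)  # missing categories count as 0
    total = len(labels)
    return [max(counts[c] / total, eps) for c in categories]


def population_stability_index(baseline: list[str], live: list[str]) -> float:
    """PSI = sum over categories of (actual - expected) * ln(actual / expected)."""
    categories = sorted(set(baseline) | set(live))
    expected = shares(baseline, categories)
    actual = shares(live, categories)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


def check_drift(baseline: list[str], live: list[str], threshold: float = 0.25) -> bool:
    """Return True (i.e. raise an alert) when PSI exceeds the threshold."""
    return population_stability_index(baseline, live) > threshold


if __name__ == "__main__":
    baseline = ["A"] * 50 + ["B"] * 50  # cluster assignments at deployment
    live = ["A"] * 90 + ["B"] * 10      # assignments observed this week
    psi = population_stability_index(baseline, live)
    print(f"PSI={psi:.3f} alert={check_drift(baseline, live)}")
```

A high PSI does not prove the model is wrong, only that its inputs or outputs have shifted. That is why such checks typically feed the dashboards and alerts mentioned above rather than replacing human review.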

The point is that machine learning isn’t breaking any molds for rapid adoption. To the contrary, it’s experienced a slow rise. Neural networks joined the scientific literature in the 1930s, the math was completed by the 1990s, and it’s taken the intervening decades for computers to catch up.

The next obstacle will be developing end-to-end solutions, which will accelerate the transition from the rudimentary machine learning dominating business today to the more futuristic possibilities still mostly dormant in neural networking laboratories. How long such a transition will take is still up for debate.

If any more evidence were needed that a fire has been lit under digital transformation, then the prediction that the enterprise mobility market will explode to $500bn by 2020 is it.

By Nick Pike, VP UK and Ireland, OutSystems.

Fuelling this blaze is customer and employee demand for a seamless mobile digital experience in all aspects of their home and working lives. For businesses, it’s a case of adapt or be left in the dust, as they face challenges from disruptive industry entrants and innovators. IDC predicts that, by 2020, half of the Global 2000 enterprises will see the lion’s share of their businesses depending on their ability to create digitally-enhanced products, services and experiences. The deadline is short and the opportunities are huge, so why are some corporate organisations stuck at the starting gate?

Reason 1: The fear factor

Digital transformation means just what it says: fundamentally changing the way enterprises do business from top to bottom. That’s a daunting prospect for many executive teams in large corporations, despite the promised rewards. While workers at the coalface of the business may be crying out for streamlined mobile business processes and apps that will make them more efficient, the drive for large scale strategic change has to come from the top. Human and financial resources need to be allocated and the whole business lined up in support of the process so that digital transformation is viewed as a strategic investment in the future competitiveness of the company, rather than an expense.

Fear can also arise from concerns that already overstretched IT departments will struggle to cope with the new demands of application development and rollout. In fact, Gartner fed this particular fear in 2015, when it predicted that by the end of 2017 the demand for enterprise-grade mobile apps would have grown at least five times faster than the ability of internal IT departments to deliver them. However, in the two years since that prediction was made, the rise of rapid application development platforms has reduced the burden on IT departments and shortened the time to launch. So this particular fear can now be faced with a degree of confidence.

Reason 2: User resistance

Large companies with employees that are used to a slower pace of life can find it hard to adapt to the speed of digital transformation. They can struggle to align vital employee education programmes with the rollout timeframe that can be achieved. It’s no good having a fantastically efficient new system if users are still hankering after the legacy technology – warts and all – and struggling to embrace their new environment. Therefore, user education is a critical part of the transformation process.

OutSystems customer Aravind Kumar encountered this challenge when migrating his consulting company from a collection of 50 IBM Lotus Notes applications to a suite of new applications that were developed using the OutSystems low-code platform. He told us: “Getting people to shift their thinking was one of the greatest hurdles we had to overcome. In fact, even as we were building new applications, people were saying we should try to recreate them just as they were in Lotus Notes!”

Fortunately Aravind was able to bring his users with him on a journey to discover the efficiency and accessibility of the new applications his team had developed. The key point is that, to be successful in digital transformation, businesses need to invest in the human factor as well as in the technological expertise in order to realise the full benefits and mitigate resistance.

Reason 3: Letting the past dictate the future

Every large enterprise has a past. Unlocking information and freeing business processes from legacy IT systems can be one of the biggest stumbling blocks when it comes to digital transformation. However, as I've said before, it is important to recognise that legacy systems exist because they work; they just don't have the agility that the digital world requires. Establishing when legacy systems should be retired and when they should be incorporated into mobile business processes is a key challenge, and evidence suggests that enterprises are doing both. A recent report by VDC Research found that 53% of large organisations (those with more than 1,000 employees) said the most common development projects they worked on involved building net-new applications from the ground up; however, 43% stated that they were modernising legacy applications.

The elegant solution is to find a way to leverage the legacy systems of the past without letting them crush the ambition and potential of the future. An advantage of the OutSystems low-code platform is its ability to integrate with legacy systems, even if they are unique to the customer, meaning that prior investment is not wasted.

As IDC neatly put it, "Digital transformation starts with mobility. Organisations with untethered business processes and ubiquitously accessible IT resources will be better positioned to compete and thrive in the digital economy." This is why organisations need to address the challenges of fear, user resistance and legacy integration to get out of the starting gate and onto the competitive racetrack of digital transformation.

It has been predicted that by 2020 there will be more than 200 billion devices connected by the Internet of Things (IoT). Whilst it has been discussed in technology circles for many years, it is only recently that businesses have started to properly embrace it. The IoT essentially consists of billions of devices connected by an invisible network; combined with faster wireless speeds and smart technology, it is slowly reshaping how entire industries function.

By Syed Ahmed, CEO at SAVORTEX.

One of the biggest challenges businesses face is constant pressure to reduce costs and shrink their carbon footprint. However, these two goals often pull against each other, because cutting CO2 emissions tends to require spending more money. Businesses are therefore increasingly looking for cost-effective solutions that will improve efficiency and help them meet green targets, and many are turning to the IoT for a solution.

In a recent survey conducted by Forbes, two thirds of the companies questioned believed that the IoT is important to their current business, and over 90% stated that it will play a crucial role in the future of their company. This new reliance on the IoT has provided manufacturers and technology companies with an opportunity to innovate and develop products that will help businesses achieve their goals.

For example, we partnered with Intel to create a revolutionary hand dryer that harnesses the IoT and brings substantial savings and benefits to the businesses that install it. The adDryer is an IoT-enabled smart dryer with a digital screen that can deliver tailored, high-definition video messages to users, which can be used for internal marketing or as an additional revenue stream.

The sleek, sustainable design gives the adDryer a depth of only 134mm and the lowest carbon footprint per dry, as it runs on 500W. Drying times start from 11 seconds, 2.7 times faster than other dryers on the market, and its patented energy recovery and curved air delivery technology delivers a 66% energy saving compared with any other dryer on the market and a 97% saving compared with paper towels. This ensures the hand dryer is BREEAM and LEED compliant.
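As a rough check on the per-dry figures, the energy used by one dry is simply power multiplied by time. This worked example reads the power rating as 500 W and assumes the dryer draws that full power for the whole 11-second cycle; both are simplifying assumptions, since real draw varies over a cycle.

```latex
E_{\text{dry}} = P \times t = 500\,\text{W} \times 11\,\text{s} = 5500\,\text{J}
  = \frac{5500}{3600}\,\text{Wh} \approx 1.5\,\text{Wh}
```

Under the same assumptions, a thousand dries would use roughly 1.5 kWh.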

In addition, the adDryer's unique data capture technology lets us estimate the audience value of an estate from the profile of the building's users, for example their gender, age and occupation. The company's media buyers can then use this information to agree a cost-per-view rate that is paid to the building owner each time someone uses an adDryer and sees an advert, thus creating revenue.

Moving forward, manufacturers now have a responsibility to make the IoT more accessible to smaller businesses. In recent years, the IoT has mainly been designed with bigger companies in mind. The fact that the technology is still fairly advanced means there are not many off-the-shelf solutions, and few SMEs have the resources for this sort of complexity. Therefore, simpler and more straightforward products must be developed to enable companies of all sizes to benefit from the savings and efficiencies the IoT can bring.

Whilst the washroom is only one part of the office space, by introducing the IoT, businesses can achieve substantial energy and cost savings by simply updating their hand dryers, and this is a solution that can be adopted by businesses of any size. If the same innovation is applied to all industries, companies will be able to surpass their sustainability targets.

Hyperconverged infrastructure is essential when embracing the demands of the IoT

By Lee Griffiths, Infrastructure Solutions Manager, IT Division, APC by Schneider Electric.

The explosion in digital data requires, among many other things, an expanded vocabulary just to be able to describe it. Many of us familiar with computer systems as far back as the 1980s, or further, remember what a kilobyte is, in much the same way as we remember the fax machine. Millennials, however, may have only a vague recollection that such terms or technology ever existed or were relevant.

Now we must become as familiar with terms like the zettabyte (that's 1 × 10²¹ bytes) as we are with products like smartphones and self-driving cars: marvels that were science fiction not so long ago but which have become, or are rapidly becoming, commercially available realities. Industry analyst IDC predicts that by 2020 there will be 44 zettabytes (ZB) of data created and copied worldwide, based on the assumption that the amount will double every two years.
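The doubling assumption can be written as a simple exponential and run backwards from the 2020 figure. This is only a worked reading of the stated assumption (doubling every two years, anchored at 44 ZB in 2020); the intermediate values are implied by that assumption, not figures IDC is quoted as publishing.

```latex
\begin{aligned}
D(t) &= 44\,\text{ZB} \times 2^{(t - 2020)/2}, \qquad 1\,\text{ZB} = 10^{21}\ \text{bytes} \\
D(2016) &= 11\,\text{ZB}, \quad D(2018) = 22\,\text{ZB}, \quad D(2020) = 44\,\text{ZB} = 4.4 \times 10^{22}\ \text{bytes}
\end{aligned}
```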