‘Should we still care about cables?’ asks Indi Sall, Technical Director of NG Bailey’s IT Services division, in one of the articles in this issue of DCS. I’m particularly pleased that he has written this article, for two reasons.

Firstly, his question is one of many that need to be asked if we are to challenge long-held ideas and traditions in the world of data centres and IT.

Secondly, and selfishly, I’m pleased Indi asks the question because it has been a favourite of mine to put to all manner of IT and data centre professionals over the years, and the universal reply has been: ‘How can we ever think of eliminating cables? The security risks associated with wireless alone mean that hard-wiring will always be required.’

To which, of course, one could politely point out that the security of data travelling along cables doesn’t look too clever right now, and certainly not as safe as data kept under lock and key in a filing cabinet and moved around physically, accompanied by a courier and/or security guard!!

Let’s move on.

So many traditional views about so many aspects of our lives are being challenged on a regular basis that it’s perfectly reasonable to ask whether cables are necessary any more. Each radical new idea stands or falls on some kind of calculation based on the pros, the cons and the cost of the concept, both for the providers and for the customers.

Technology-wise, Ethernet is perhaps the classic example: a technology that is some way from being the best available has been universally adopted because of its cost advantages, both in the cost of buying and implementing it compared with the alternatives and in the fact that Ethernet experts are rather more widely available than, say, Fibre Channel ones.

Back to wired v wireless (at last, I hear you cry!), and one can easily see the argument for wireless replacing cables: configuring networks is so much faster, easier and less expensive. These are major advantages when compared to the costs associated with cables. However, for now at least, it seems that the unreliability of wireless networks when it comes to transmitting and receiving data, alongside the security issue mentioned previously, means that businesses will stick to ‘tried and tested’ cables.

It will be fascinating to see whether this situation does change (there may well come a technology/price tipping point) and what other IT and data centre traditions will fall by the wayside. For example, with more and more focus on data mobility and flexibility, will large, centralised data centres begin to be replaced by more numerous, smaller, more localised facilities? Ten distributed data centres replacing one centralised one might make a difference when it comes to thinking about resilience (you may just have created an N + 9 environment!!).

Change for change’s sake is not an easy concept to sell. Change that makes a huge difference, whether to the quality of customer service, the balance sheet or the ability to bring new ideas to market faster... well, that’s worth thinking about, however unpromising it might sound.

Worldwide spending on public cloud services and infrastructure is forecast to reach $160 billion in 2018, an increase of 23.2% over 2017, according to the latest update to the International Data Corporation (IDC) Worldwide Semiannual Public Cloud Services Spending Guide. Although annual spending growth is expected to slow somewhat over the 2016-2021 forecast period, the market is forecast to achieve a five-year compound annual growth rate (CAGR) of 21.9% with public cloud services spending totaling $277 billion in 2021.

The industries that are forecast to spend the most on public cloud services in 2018 are discrete manufacturing ($19.7 billion), professional services ($18.1 billion), and banking ($16.7 billion). The process manufacturing and retail industries are also expected to spend more than $10 billion each on public cloud services in 2018. These five industries will remain at the top in 2021 due to their continued investment in public cloud solutions. The industries that will see the fastest spending growth over the five-year forecast period are professional services (24.4% CAGR), telecommunications (23.3% CAGR), and banking (23.0% CAGR).

"The industries that are spending the most – discrete manufacturing, professional services, and banking – are the ones that have come to recognize the tremendous benefits that can be gained from public cloud services. Organizations within these industries are leveraging public cloud services to quickly develop and launch 3rd Platform solutions, such as big data and analytics and the Internet of Things (IoT), that will enhance and optimize the customer's journey and lower operational costs," said Eileen Smith, program director, Customer Insights & Analysis.

Software as a Service (SaaS) will be the largest cloud computing category, capturing nearly two thirds of all public cloud spending in 2018. SaaS spending, which comprises applications and system infrastructure software (SIS), will be dominated by applications purchases, which will make up more than half of all public cloud services spending through 2019. Enterprise resource management (ERM) applications and customer relationship management (CRM) applications will see the most spending in 2018, followed by collaborative applications and content applications.

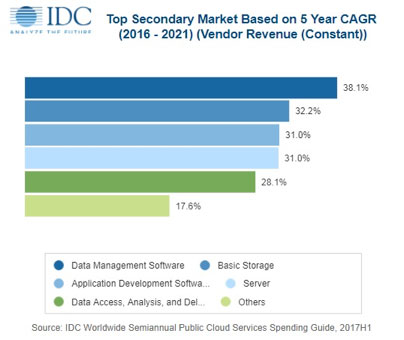

Infrastructure as a Service (IaaS) will be the second largest category of public cloud spending in 2018, followed by Platform as a Service (PaaS). IaaS spending will be fairly balanced throughout the forecast with server spending trending slightly ahead of storage spending. PaaS spending will be led by data management software, which will see the fastest spending growth (38.1% CAGR) over the forecast period. Application platforms, integration and orchestration middleware, and data access, analysis and delivery applications will also see healthy spending levels in 2018 and beyond.

The United States will be the largest country market for public cloud services in 2018 with its $97 billion accounting for more than 60% of worldwide spending. The United Kingdom and Germany will lead public cloud spending in Western Europe at $7.9 billion and $7.4 billion respectively, while Japan and China will round out the top 5 countries in 2018 with spending of $5.8 billion and $5.4 billion, respectively. China will experience the fastest growth in public cloud services spending over the five-year forecast period (43.2% CAGR), enabling it to leap ahead of the UK, Germany, and Japan into the number 2 position in 2021. Argentina (39.4% CAGR), India (38.9% CAGR), and Brazil (37.1% CAGR) will also experience particularly strong spending growth.

The U.S. industries that will spend the most on public cloud services in 2018 are discrete manufacturing, professional services, and banking. Together, these three industries will account for roughly one third of all U.S. public cloud services spending this year. In the UK, the top three industries (banking, retail, and discrete manufacturing) will provide more than 40% of all public cloud spending in 2018, while discrete manufacturing, professional services, and process manufacturing will account for more than 40% of public cloud spending in Germany. In Japan, the professional services, discrete manufacturing, and process manufacturing industries will deliver more than 43% of all public cloud services spending. The professional services, discrete manufacturing, and banking industries will represent more than 40% of China's public cloud services spending in 2018.

"Digital transformation is driving multi-cloud and hybrid environments for enterprises to create a more agile and cost-effective IT environment in Asia/Pacific. Even heavily regulated industries like banking and finance are using SaaS for non-core functionality, platform as a service (PaaS) for app development and testing, and IaaS for workload trial runs and testing for their new service offerings. Drivers of IaaS growth in the region include the increasing demand for more rapid processing infrastructure, as well as better data backup and disaster recovery," said Ashutosh Bisht, research manager, Customer Insights & Analysis.

100% of IT leaders with a high degree of cost transparency sit on the company board, compared with 54% of those in enterprises that are not, or only partially, cost transparent.

A survey of senior IT decision-makers in large enterprises, commissioned by Coeus Consulting, found that IT leaders who can clearly demonstrate the cost and value of IT have greater influence over the strategic direction of the company and are best positioned to deliver business agility for digital transformation. Consequently, cost transparency leaders are twice as likely to be represented at board level and thus are better prepared for external challenges such as changing consumer demand, GDPR and Brexit.

The survey of organisations with revenues of between £200m and £30bn revealed the importance of cost transparency within IT when it comes to forward planning and defining business strategy. Based on the responses of senior decision-makers (more than half of whom are C-level), the report identifies a small group of Cost Transparency Leaders who indicated that their departments: work with the rest of the organisation to provide accurate cost information; ensure that services are fully costed; and manage the cost life cycle.

88% of respondents could not say that they were able to demonstrate cost transparency to the rest of the organisation.

When compared to their counterparts, Cost Transparency Leaders are:

- Twice as likely to be represented at board level (100% v 54%)
- 1.5x more likely to be involved in setting business strategy (85% v 55%)
- Twice as likely to report that the business values IT’s advice (100% v 52%)
- Twice as likely to demonstrate alignment with the business (90% v 50%)
- More than seven times as likely to link IT performance to genuine business outcomes (38% v 5%)

“This survey clearly reveals that cost transparency is a prerequisite for IT leaders with aspirations of being a strategic partner to the business. Those that get it right are better able to transform the perception of IT from ‘cost centre’ to ‘value centre’ and support the constant demand for business agility that is typical of the modern, digital organisation. Only those that have achieved cost transparency in their IT operations will be able to deal effectively with external challenges such as Brexit and GDPR,” said James Cockroft, Director at Coeus Consulting.

Digital transformation trends mean that businesses are focusing more heavily on their customers and are using technology to improve their experience. However, IT departments remain bogged down in day-to-day activities and the need to keep the lights on, which is preventing teams focusing on how they can help drive improvements to the customer experience.

This is according to research commissioned by managed services provider Claranet, with results summarised in its 2018 report, Beyond Digital Transformation: Reality check for European IT and Digital leaders.

In a survey of 750 IT and Digital decision-makers from organisations across Europe, market research company Vanson Bourne found that the overwhelming majority (79 per cent) feel that the IT department could be more focused on the customer experience, but that staff do not have the time to do so. More generally, almost all respondents (98 per cent) recognise that there would be some kind of benefit if they adopted a more customer-centric approach, whether this be developing products more quickly (44 per cent), greater business agility (43 per cent), or being better prepared for change (43 per cent).

Commenting on the findings, Michel Robert, Managing Director at Claranet UK, said: “As technology develops, IT departments are finding themselves with a long and growing list of responsibilities, all of which need to be carried out alongside the omnipresent challenge of keeping the lights on and making sure everything runs smoothly. Despite a tangible desire amongst respondents to adopt a more customer-centric approach, this can be difficult when IT teams have to spend a significant amount of their time on general management and maintenance tasks.”

Improving the customer experience is the second most commonly cited challenge for European IT departments (38 per cent), behind only security. Finding the right balance between digital and human interaction was also a common struggle, with 46 per cent of respondents expressing that sentiment. For Michel, this is where the expertise of managed service providers could help to lessen the load and enable IT staff to work more readily on customer-driven projects. When asked about the main drivers behind outsourcing elements of an IT estate to third parties, 46 per cent cited more time to focus on innovation, and 48 per cent said it frees up resources to focus on company strategy.

Michel continued: “IT and digital staff are struggling for resource in an increasingly competitive business environment, and the evidence from our survey underlines this. By entrusting the responsibility for keeping the lights on to a third party, IT departments can relieve themselves of this time-consuming burden, while maintaining peace of mind in knowing that these tasks are in the hands of a partner with a high level of expertise in this field.”

Michel concluded: “Placing faith in a third-party supplier will allow the IT department – and the wider business in general – to focus on what really matters: an ever-improving, positive customer experience. IT innovation will be free to come to the fore, and it will become easier for the goals of the IT department to be effectively aligned with those of the rest of the organisation.”

Cisco has released the seventh annual Cisco® Global Cloud Index (2016-2021). The updated report focuses on data center virtualization and cloud computing, which have become fundamental elements in transforming how many business and consumer network services are delivered.

According to the study, both consumer and business applications are contributing to the growing dominance of cloud services over the Internet. For consumers, streaming video, social networking, and Internet search are among the most popular cloud applications. For business users, enterprise resource planning (ERP), collaboration, analytics, and other digital enterprise applications represent leading growth areas.

451 Research, a top five global IT analyst firm and sister company to datacenter authority Uptime Institute, has published Multi-tenant Datacenter Market reports on Hong Kong and Singapore, its fifth annual reports covering these key APAC markets.

451 Research predicts that Singapore’s colocation and wholesale datacenter market will see a CAGR of 8% and reach S$1.42bn (US$1bn) in revenue in 2021, up from S$1.06bn (US$739m) in 2017. In comparison, Hong Kong’s market will grow at a CAGR of 4%, with revenue reaching HK$7.01bn (US$900m) in 2021, up from HK$5.8bn (US$744m) in 2017.
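The CAGR figures quoted above follow the standard compound-growth formula, CAGR = (end / start)^(1 / years) - 1. The sketch below is a minimal illustration of that formula applied to the rounded 2017 and 2021 revenue figures from the report; the function name is our own, and because the published figures are rounded (and the analysts' exact base year and method are not stated), the results need not match the quoted percentages exactly.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate as a fraction (0.08 means 8% a year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Rounded revenue figures quoted above, in billions of local currency.
# 2017 -> 2021 is treated as four compounding years (an assumption).
singapore = cagr(1.06, 1.42, 4)   # S$
hong_kong = cagr(5.8, 7.01, 4)    # HK$

print(f"Singapore implied CAGR: {singapore:.1%}")
print(f"Hong Kong implied CAGR: {hong_kong:.1%}")
```

Working in local currency avoids mixing in exchange-rate movements, which would distort a comparison made on the US dollar figures.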

Hong Kong experienced another solid year of growth at nearly 16%, despite the lack of land available for building, the research finds. Several providers still have room for expansion, but other important players are near or at capacity, and only two plots of land are earmarked for datacenter use. Analysts note that the industry will face challenges as it continues to grow, hence the reduced growth rate over the next three years.

“The Hong Kong datacenter market continues to see impressive growth, and in doing so has managed to stay ahead of its closest rival, Singapore, for yet another year,” said Dan Thompson, Senior Analyst at 451 Research and one of the report’s authors. However, with analysts predicting an 8% CAGR for Singapore over the next few years, Singapore’s datacenter revenue is expected to surpass Hong Kong’s by the end of 2019.

451 Research analysts found that, while the number of new builds in Singapore slowed in 2017, the market still saw nearly 12% supply growth overall, compared with 19% the previous year. The report notes that the reduced builds in 2017 follow two years when providers had invested heavily in building new facilities and expanding existing ones.

“Rather than seeing 2017 as a down year for Singapore, we see it as a ‘filling up’ year, where providers worked to maximize their existing datacenter facilities,” said Thompson. “Meanwhile, 2018 is shaping up to be another big year, with providers including DODID, Global Switch and Iron Mountain slated to bring new datacenters online in Singapore.”

Analysts also reveal that demand growth in both Hong Kong and Singapore has shifted from the financial services, securities, and insurance verticals to the large-scale cloud and content providers.

451 Research finds that Singapore’s role as the gateway to Southeast Asia remains the key reason why cloud providers are choosing the area. “Cloud and content providers are choosing to service their regional audiences from Singapore because it is comparatively easy to do business there, in addition to having strong connectivity with countries throughout the region. This all bodes well for the country’s future as the digital hub for this part of APAC,” added Thompson.

451 Research finds that Hong Kong’s position as the gateway into and out of China remains a key reason why cloud providers are choosing the area, as well as the ease of doing business there. This is good news for the city as long as providers find creative solutions to their lack of available land.

451 Research has also compared the roles of the Singapore and Hong Kong datacenter markets in detail. The analysts concluded that multinationals need to deploy datacenters in both Singapore and Hong Kong, since each serves a very specific role in the region: Hong Kong is the digital gateway into and out of China, while Singapore is the digital gateway into and out of the rest of Southeast Asia.

Analysts find that these two markets compete for some deals, but surrounding markets are vying for a position as well. As an example, Singapore sees some competition from Malaysia and Indonesia, while Hong Kong could potentially see more competition from cities in mainland China, such as Guangzhou, Shenzhen and Shanghai. However, the surrounding markets are not without challenges for potential consumers, suggesting that Singapore and Hong Kong will remain the primary destinations for datacenter deployments in the region for the foreseeable future.

Growing adoption of cloud native architecture and multi-cloud services contributes to $2.5 million annual spend per organization on fixing digital performance problems.

Digital performance management company, Dynatrace, has published the findings of an independent global survey of 800 CIOs, which reveals that 76% of organizations think IT complexity could soon make it impossible to manage digital performance efficiently. The study further highlights that IT complexity is growing exponentially; a single web or mobile transaction now crosses an average of 35 different technology systems or components, compared to 22 just five years ago.

This growth has been driven by the rapid adoption of new technologies in recent years. However, the upward trend is set to accelerate, with 53% of CIOs planning to deploy even more technologies in the next 12 months. The research revealed the key technologies that CIOs will have adopted within the next 12 months include multi-cloud (95%), microservices (88%) and containers (86%).

As a result of this mounting complexity, IT teams now spend an average of 29% of their time dealing with digital performance problems, costing their employers $2.5 million a year. As they search for a solution to these challenges, four in five (81%) CIOs said they think Artificial Intelligence (AI) will be critical to IT's ability to master increasing IT complexity, with 83% either already deploying AI or planning to do so in the next 12 months.

“Today’s organizations are under huge pressure to keep up with the always-on, always-connected digital economy and its demand for constant innovation,” said Matthias Scharer, VP of Business Operations, Dynatrace. “As a consequence, IT ecosystems are undergoing constant transformation. The transition to virtualized infrastructure was followed by the migration to the cloud, which has since been supplanted by the trend towards multi-cloud. CIOs have now realized their legacy apps weren’t built for today’s digital ecosystems and are rebuilding them in a cloud-native architecture. These rapid changes have given rise to hyper-scale, hyper-dynamic and hyper-complex IT ecosystems, which make it extremely difficult to monitor performance and find and fix problems fast.”

The research further identified the challenges that organizations find most difficult to overcome as they transition to multi-cloud ecosystems and cloud native architecture. Key findings include:

- 76% of CIOs say multi-cloud makes it especially difficult and time-consuming to monitor and understand the impact that cloud services have on the user experience
- 72% are frustrated that IT has to spend so much time setting up monitoring for different cloud environments when deploying new services
- 72% say monitoring the performance of microservices in real time is almost impossible
- 84% of CIOs say the dynamic nature of containers makes it difficult to understand their impact on application performance
- Maintaining and configuring performance monitoring (56%) and identifying service dependencies and interactions (54%) are the top challenges CIOs identify with managing microservices and containers

“For cloud to deliver on expected benefits, organizations must have end-to-end visibility across every single transaction,” continued Mr. Scharer. “However, this has become very difficult because organizations are building multi-cloud ecosystems on a variety of services from AWS, Azure, Cloud Foundry and SAP amongst others. Added to that, the shift to cloud native architectures fragments the application transaction path even further.

“Today, one environment can have billions of dependencies, so, while modern ecosystems are critical to fast innovation, the legacy approach to monitoring and managing performance falls short. You can’t rely on humans to synthesize and analyze data anymore, nor on a bag of independent tools. You need to be able to auto-detect and instrument these environments in real time and, most importantly, use AI to pinpoint problems with precision and set your environment on a path of auto-remediation to ensure optimal performance and experience from the end user’s perspective.”

Further to the challenges of managing a hyper-complex IT ecosystem, the research also found that IT departments are struggling to keep pace with internal demands from the business. 74% of CIOs said that IT is under too much pressure to keep up with unrealistic demands from the business and end users. 78% also highlighted that it is getting harder to find time and resources to answer the range of questions the business asks and still deliver everything else that is expected of IT. In particular, 80% of CIOs said it is difficult to map the technical metrics of digital performance to the impact they have on the business.

By Steve Hone, CEO, The DCA

I would like to thank all the members who contributed to this month’s journal topic. Next month you will be able to hear from some of our many Strategic Partners on the collaborative work being undertaken and The DCA will be providing a review of the EU Commission funded EURECA project which officially concludes this February. Finally, I look forward to seeing you all in London at DCW18, the DCA are on stand D48.

James Cooper, Product Manager at ebm-papst UK, looks at the impact of inefficient cooling and airflow management in legacy data centres, and at how simple steps, including upgrading the fan technology, can make a system more efficient and give a new lease of life to old equipment.

We are seeing a definite trend towards more investment in data centre efficiencies, by removing legacy components and replacing them with innovative and more efficient systems. Organisations are continuously looking at new ways to optimise their data centre infrastructure and the critical resources they house.

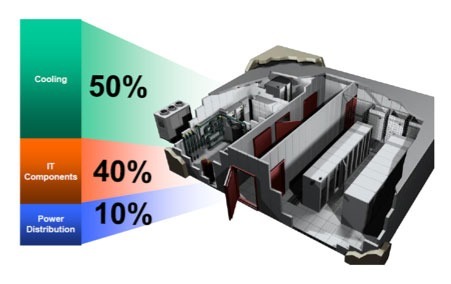

Power and cooling, rather than floor space, are becoming the biggest constraints. On average, around 50% of all the electrical energy consumed in a data centre goes on cooling, so this has to be one of the main focuses when looking for efficiency savings.

Operators are striving to create more efficiency and to better understand how to design their data centres. Effective airflow management is crucial, and upgrading legacy equipment and installing new airflow management systems are key considerations for achieving optimum results.

An average legacy data centre in the UK has a PUE (power usage effectiveness) of 2.5, which means that only 40% of the energy used is available for the IT load.

Another way of expressing this is the DCiE (data centre infrastructure efficiency) metric, which is the reciprocal of PUE expressed as a percentage: a data centre with a PUE of 2.5 is 1/2.5 = 40% efficient.
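Both metrics are simple ratios of metered energy. A minimal sketch, assuming annual energy readings in kWh for the whole facility and for the IT equipment; the meter values below are hypothetical, chosen only to reproduce the PUE of 2.5 quoted above.

```python
def pue(total_facility_kwh: float, it_load_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_load_kwh <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_kwh / it_load_kwh


def dcie(pue_value: float) -> float:
    """Data centre infrastructure efficiency: reciprocal of PUE, as a percentage."""
    return 100.0 / pue_value


# Hypothetical legacy site: 2,500 MWh drawn in total, 1,000 MWh reaching the IT load.
legacy = pue(2_500_000, 1_000_000)
print(legacy, dcie(legacy))  # PUE 2.5 corresponds to a DCiE of 40%

# A modern facility at PUE 1.2 delivers roughly 83% of its energy to IT.
print(round(dcie(1.2), 1))
```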

Unless it is a modern data centre, most of the energy goes on mechanical cooling, which in the eyes of most IT people is a necessary evil. It doesn’t have to be that way: cooling methods and technology have also moved on significantly, and there are many ways to make cooling more efficient. Modern data centres designed to use ambient air, directly or indirectly, are becoming increasingly popular and achieve much better results, with PUEs of 1.2 or better.

There is a range of cooling options in data centres. Traditional CRAC (computer room air conditioner) units, which tend to sit against the walls of the room or in a corridor blowing air under the floor, have more recently been joined by an influx of aisle and rack units that take the cooling closer to the servers using DX (direct expansion) or water, offering higher-density cooling capabilities. Direct and indirect fresh air cooling is also seen as a viable option for the UK, since the outdoor temperature here is below 12°C around 60% of the time. Adiabatic cooling has also seen a revival recently, even though it is most efficient in hotter climates.

The problem is that, certainly in legacy data centres, there are limited options for modifying the structure of the building to take advantage of new ideas. Most data centres run at partial load and never get anywhere near their original design load. Although high-density racks capable of 60kW+ are available, in the past few years the average rack density has barely gone above 4kW per cabinet (less than 2kW/m²).

A good strategy is to start with the low-hanging fruit and be realistic about what your infrastructure can support. This will help narrow the options. The first point to consider is that two critical components within the cooling system should be the focus: the compressors and the fans. If you can improve the efficiency of the cooling circuit so that the compressors run for less time, this will lead to a huge energy saving. If you can use the latest EC fan technology and reduce the airflow when it is not required, this will lead to further energy savings.

Air is lazy! If air can find an easy route, it will. One of the biggest, and most easily fixed, wastes of energy is a lack of air management. If air can escape and bypass a server it will do so, and that wastes energy. Plugging gaps and forcing the air to go only to the front of the racks is an easy first step towards improving efficiency. The Uptime Institute indicated that simple airflow management and best practice could improve PUE from 2.5 to 1.6.
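To put the Uptime Institute figure in context, here is a small sketch assuming the IT load stays constant while PUE falls from 2.5 to 1.6. The annual IT load is a hypothetical value; only the ratios matter. On that assumption, total facility energy falls by about a third and the non-IT overhead (cooling, power distribution and so on) by well over half.

```python
def facility_energy(it_load_kwh: float, pue_value: float) -> float:
    """Total facility energy implied by a given IT load and PUE."""
    return it_load_kwh * pue_value


it_load = 1_000_000  # hypothetical annual IT load in kWh, assumed unchanged
before = facility_energy(it_load, 2.5)
after = facility_energy(it_load, 1.6)

# Share of total facility energy saved.
total_saving = 1 - after / before
# Share of the non-IT overhead saved (total minus IT load, before and after).
overhead_saving = 1 - (after - it_load) / (before - it_load)

print(f"total saving: {total_saving:.0%}, overhead saving: {overhead_saving:.0%}")
```

In practice the IT load rarely stays perfectly constant, so a real before-and-after comparison would use metered figures for both periods.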

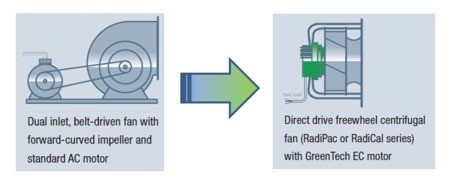

Fans are critical to the movement of air around the data centre. Legacy units may contain old, inefficient AC blowers with belt drives that break regularly and shed belt dust through the room. They are usually patched up and kept going, because changing a complete CRAC unit can be costly and sometimes physically impossible. Typically the fans run at a single speed and, because most data centres only need partial load, airflow is controlled by shutting off air vents or switching units off completely.

Upgrading to EC fans (a plug-and-play solution, without the need to set up and commission separate drives) is one way to achieve an immediate saving. With modern EC fan technology there is no need for belts and pulleys, and motor efficiencies are significantly higher, at over 90%. The main benefit is that EC fans can be speed-controlled easily and cost-effectively, allowing a partial-load data centre to turn the airflow down to only what is needed. How far it can be turned down depends on the capabilities and type of unit, and on whether it is DX or chilled water.

A 50% reduction in airflow can cut the power consumed by an EC fan to 1/8, because fan power rises roughly with the cube of speed, and potentially reduce noise by 15dB(A)! Together with cooler running, maintenance-free operation and longer lifetimes, this offers a simple and cost-effective improvement.
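The 1/8 figure follows from the idealised fan affinity laws, under which airflow is proportional to fan speed and shaft power to the cube of speed. A commonly quoted companion rule of thumb puts the change in sound power level at about 50·log10 of the speed ratio, which gives roughly 15 dB for a halving of speed. The sketch below assumes those idealised laws and a fixed system; real savings from an EC fan will also depend on motor and controller efficiency at part load and on the system's pressure characteristics.

```python
import math


def fan_power_ratio(flow_ratio: float) -> float:
    """Idealised affinity law: power scales with the cube of flow (i.e. of speed)."""
    return flow_ratio ** 3


def fan_noise_change_db(flow_ratio: float) -> float:
    """Rule-of-thumb change in sound power level: 50 * log10(speed ratio)."""
    return 50 * math.log10(flow_ratio)


half = 0.5
print(fan_power_ratio(half))                # 0.125, i.e. 1/8 of full-speed power
print(round(fan_noise_change_db(half), 1))  # -15.1, roughly 15 dB quieter
```

The cube law is why speed control pays off so strongly at partial load: small reductions in airflow give disproportionately large reductions in fan power.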

Improving airflow within the data centre also means that upstream systems, such as chillers and condensers, can relax and be reduced, so even more energy can be saved. As technology advances at an exponential rate, with equipment becoming smaller and faster, the future of cooling is secure. Whatever the choice of medium, there will be a need to keep equipment cool, efficiently.

Innovations and concepts around data centre architecture and design will force the sector to think differently when trying to achieve optimal efficiency and create competitive advantage. Data centres will continue to expand as social and business demand for digital content and services grows. IT loads will become more computationally and energy efficient and, as a result, far more dynamic. Thermal management will improve and PUEs will fall to 1.1-1.2 in Europe. Air will take over from chilled water as the most economic cooling solution, and variable-speed EC fans will become mandatory for either pressure or capacity control in the modern efficient data centre.

Visit www.ebmpapst.co.uk

By John Booth, The DCA Energy Efficiency Steering Group Chair, MD of Carbon3IT Ltd and Technical Director of the National Data Centre Academy

Back in May 2015 I wrote the following article for publication in the DCA Journal. Recently, having been asked to undertake some work for an innovative company in this space, I thought I would dig out the article, see if anything had changed and make some edits.

Sadly, I must report that, whilst some new companies have entered the space, not much has changed. The underlying rationale still has credence and the sentiment is still correct, but there is still not much movement at scale.

The original article is in black, whilst my updated comments are in blue.

The use of liquid cooling technologies in the ICT industry is very small, but is this about to change? Perhaps, but at a slower pace than I expected.

First, we need to understand why liquid cooling is gaining ground in a traditionally air-cooled technology area. Rack power densities are projected to rise: a recent Emerson Network Power survey (Data Centre 2025) indicated that 70% of 800 respondents expected rack power to be at or above 40kW by 2025. High rack power drives increased power distribution losses, increased airflow and power consumption, lower server inlet air temperatures and increased chiller power consumption.

Judging from industry anecdotes, rack densities have remained static in colocation sites, and even new builds have not been fitting out for much more than 10kW. We’re not sure that this remains true for hyperscale operators, but then they are adopting OCP (Open Compute Project) designs, which run on DC. Rest assured, we still have 7 years left before the forecast is completely trashed!

Energy costs will rise globally sooner rather than later, due to scarcity of supply, if fossil fuels continue to be the primary source. But even if other alternative energy solutions become more widely adopted, integrating the new plant will require new infrastructure, all of which requires massive investment globally.

These costs will eventually be passed on to the consumer.

Most of the above still applies. With BREXIT (which was only a twinkle in a frog’s eye in May 2015) and recent news such as the closure of coal-fired power stations in the UK, the dependence on Dutch and French power to supplement our own generation could bring energy shortages to the UK earlier than I expected.

We must also be mindful of a whole raft of regulation and energy taxation (12) that could have a serious impact.

Thermal management options will dictate rack power density and will have an impact on energy efficiency.

Finally, users are having an impact. High performance computing has always been drawn to liquid cooling options, but non-academic uses such as bitcoin mining, pharma, big data, social media, AI and face recognition are all driving a need for energy-efficient compute.

Having visited some bitcoin mines (albeit virtually, via a well-known online video platform), it seems that liquid cooling is not in their thoughts at the present time. However, recent news from Iceland (February 2018) suggested that the bitcoin mining community there would soon be using MORE energy than is required for residential and commercial properties, which could change the dynamic somewhat. The other users don’t seem to have materialised…yet!

The Emerson Network Power survey suggested that 41% of respondents felt that in 2025 air and liquid would be prevalent, 20% ambient air (free cooling), 19% cool air, 11% liquid, and 9% immersive.

I’d stand by these numbers; after all, 2025 is still a way off!

It is the 20% comprising liquid and immersive technologies that are of real interest.

Liquid cooling can be split into four main types. The first is “on chip”: basically, liquid-cooled chipsets that use heat sinks to dissipate the heat generated, with the hot air expelled in a similar fashion to conventional systems.

Second is single-phase immersion, where all thermal interface materials are replaced with oil-compatible versions, fans are removed, optical connections are positioned outside the bath and the servers are immersed in an oil bath.

Third is two-phase immersion, where thermal interface materials are replaced with dielectric-compatible versions, fans are removed, rotating media (storage) are replaced with solid state drives, optical connections are located outside the bath and the servers are immersed in a dielectric fluid.

The final method is dry disconnect, where heat pipes transfer heat to the edge of the server, the server connects to a water-cooled wall via a dry interface and the rack itself is connected to a coolant distribution system.

Nothing to see here: the technology hasn’t changed much in three years, and most of the change has been in heat transfer technology, i.e. better valves, fewer leaks and improved monitoring.

PUEs of 1.1 or lower have been cited for pure liquid-cooled solutions, but these figures have largely come from single-rack installations and may not account for the additional water pumping required when installed at large scale.
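PUE is total facility power divided by IT equipment power, so any overhead that only appears at scale, such as facility water pumping, pushes it up. The sketch below uses entirely hypothetical loads to show how a single rack measured at about 1.08 can drift above 1.1 once an assumed extra pumping load is added across a hall.

```python
def pue(it_kw: float, cooling_kw: float, other_overhead_kw: float = 0.0) -> float:
    """Power Usage Effectiveness: total facility power divided by IT power."""
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    total_kw = it_kw + cooling_kw + other_overhead_kw
    return total_kw / it_kw


if __name__ == "__main__":
    # Hypothetical single liquid-cooled rack: small pump and distribution losses.
    rack = pue(it_kw=40.0, cooling_kw=2.0, other_overhead_kw=1.0)
    # Hypothetical hall of 100 such racks, with an assumed 150 kW of extra
    # facility water pumping on top of the scaled-up cooling load.
    hall = pue(it_kw=4000.0, cooling_kw=200.0 + 150.0, other_overhead_kw=100.0)
    print(f"single rack PUE: {rack:.3f}")
    print(f"hall PUE with extra pumping: {hall:.3f}")
```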

Ah, yes, at scale: that is largely the trouble. No big user has committed to using liquid cooling at scale; there have been plenty of pilots and demonstrations, but none at any kind of scale. My spies tell me that there have been some developments, but none that are ready to be revealed as yet. Perhaps in another three years, when no doubt I’ll dust this report off again and have a little revise!

That said, considerable savings can be made by switching to liquid cooling: a recent Green Grid liquid cooling webinar cited figures of an 88% reduction in blower power and a 97% reduction in chiller power.
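To see what those percentages mean in kilowatts, the sketch below applies the cited 88% and 97% reductions to a hypothetical air-cooled hall with 120 kW of blower load and 300 kW of chiller load. The baseline figures are assumptions, and the sketch ignores the pump power a liquid system adds back.

```python
def reduced_power_kw(baseline_kw: float, reduction: float) -> float:
    """Power remaining after a fractional reduction (0.88 means 88% lower)."""
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be between 0 and 1")
    return baseline_kw * (1.0 - reduction)


# Hypothetical baseline loads for an air-cooled hall (illustrative only).
BASELINE_KW = {"blowers": 120.0, "chillers": 300.0}
# Reductions as cited in the Green Grid webinar.
REDUCTIONS = {"blowers": 0.88, "chillers": 0.97}

after_kw = {name: reduced_power_kw(BASELINE_KW[name], REDUCTIONS[name])
            for name in BASELINE_KW}
saved_kw = sum(BASELINE_KW.values()) - sum(after_kw.values())

if __name__ == "__main__":
    for name, kw in after_kw.items():
        print(f"{name}: {BASELINE_KW[name]:.0f} kW -> {kw:.1f} kW")
    print(f"total saved: {saved_kw:.1f} kW (before any added pump power)")
```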

I still believe that the savings are possible.

Liquid cooling also has another benefit in that inlet temperatures can be higher (up to 30-40°C) and useful heat in liquid form (up to 65°C) is the output.

ASHRAE released the “W” classifications in 2016. These range from W1 to W3, where the operating range is similar to air-cooled equipment and is designed to integrate with conventional air cooling systems; W4, where the input temperature is higher but the upper limit is only 45°C; and finally W5, the hot water cooling range above 45°C.
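The classes can be read as a lookup on facility water supply temperature. The sketch below returns the lowest class whose upper limit covers a given supply temperature. The W1 to W3 limits (17, 27 and 32°C) are the values commonly cited from ASHRAE's liquid-cooling guidance and are an assumption here; check them against the edition you are designing to. Lower limits are ignored for simplicity.

```python
# Upper facility water supply limits (deg C), as commonly cited from ASHRAE's
# liquid-cooling guidance; treat these values as assumptions to verify.
W_CLASS_UPPER_LIMITS_C = [("W1", 17.0), ("W2", 27.0), ("W3", 32.0), ("W4", 45.0)]


def minimum_w_class(supply_temp_c: float) -> str:
    """Lowest W class whose upper supply limit covers supply_temp_c."""
    for name, upper_c in W_CLASS_UPPER_LIMITS_C:
        if supply_temp_c <= upper_c:
            return name
    return "W5"  # hot water cooling above 45 deg C


if __name__ == "__main__":
    for temp in (15.0, 30.0, 45.0, 50.0):
        print(f"{temp:.0f} C supply needs at least {minimum_w_class(temp)}")
```

Because the classes nest, equipment rated for a higher class can also run on cooler water, which is why the function returns the minimum class rather than a single match.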

This means that the data centre can now be connected to CHP systems, or the heat sold for other purposes. The data centre will need to become part of an ecosystem, one where waste water from an industrial process can be used for cooling and where the waste heat from the data centre can be used for another purpose.
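How much heat there is to sell follows from Q = ṁ · c_p · ΔT for the water loop. The sketch below assumes a hypothetical loop of 5 kg/s supplied at 55°C and returned at 65°C (the upper outlet figure quoted above), with the specific heat of water taken as roughly 4186 J/kg·K.

```python
WATER_CP_J_PER_KG_K = 4186.0  # approximate specific heat of water
HOURS_PER_YEAR = 8760


def recoverable_heat_kw(flow_kg_s: float, supply_c: float, return_c: float) -> float:
    """Heat carried away by a water loop: Q = m_dot * cp * (T_return - T_supply)."""
    if return_c <= supply_c:
        raise ValueError("return temperature must exceed supply temperature")
    return flow_kg_s * WATER_CP_J_PER_KG_K * (return_c - supply_c) / 1000.0


if __name__ == "__main__":
    # Hypothetical loop: 5 kg/s of water supplied at 55 C and returned at 65 C.
    heat_kw = recoverable_heat_kw(5.0, supply_c=55.0, return_c=65.0)
    annual_mwh = heat_kw * HOURS_PER_YEAR / 1000.0
    print(f"{heat_kw:.1f} kW continuous, about {annual_mwh:.0f} MWh per year")
```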

Indeed, this presupposes that you have a client who will “buy” your heat locally. In Amsterdam in January 2018, Jaak Vlasveld of Green IT Amsterdam spoke about the concept of “heat brokers”, who would sit between data centre operators and potential heat users to deal with the commercial side of things. Personally, I’d like to see some enhanced thinking on this, perhaps co-locating heat producers such as liquid-cooled data centres (which produce medium/high-grade heat; air doesn’t provide the necessary heat transfer properties and is low grade) with any one of a host of potential heat users: swimming pools, urban farms, textiles, office heating etc.

The outlook for liquid cooling in the data centre arena.

Clearly, adoption is on the up: in the Emerson survey, many respondents expected to see more liquid-cooled solutions in the space come 2025, which, after all, is only ten years away.

But, and it is a big but, a lot of investment has been made globally in the construction of air-cooled data centres, and these are not well suited to the wide-scale adoption of liquid cooling technologies. Add the “ecosystem” element, which will no doubt cause some problems for managers and designers, and you have another set of reasons not to adopt.

It is difficult to see past the clout of the vendors of air-cooled solutions, who ultimately determine what technology is installed, unless they either develop their own tech or buy one of the current players.

Many of the liquid-cooled solutions are the domain of smaller, dare I say niche, players, and rapid adoption of liquid cooling may be hampered by a lack of equipment, skills and infrastructure. This, of course, will be negated if a big giant comes a-calling.

Hmm, I’ll have to polish my crystal ball more often; it’s looking a little dusty. However, I’m pleased with my comments from May 2015: so far, so good.

That said, liquid cooling in my mind is a disruptive technology and we await developments with interest.

Next update in 2021!

By Richard Clifford, Keysource

With heightened competition driving the need for new efficiencies to be found across data centre estates, Richard Clifford, head of innovation at critical environment specialist Keysource, discusses some of the key drivers for change in the data centre market.

With increased competition and tighter margins comes new impetus for operators to identify efficiencies. Implementing more efficient cooling systems and streamlining maintenance procedures are well-explored routes to doing this, but they are the low-hanging fruit in terms of cost savings and are already widely pursued. Competition in the co-location and cloud markets is heating up, and so data centre operators are going to have to be more imaginative if they are to stay ahead of the curve.

Some notable trends are likely to accelerate over the next five years and operators would be wise to consider how they can be incorporated into their estates.

The resurgence of the edge-of-network market is one. This relies on a decentralised model of data centres that employ several smaller facilities, often in remote locations, to provide an ‘edge’ service. This reduces latency by bringing content physically closer to end users.
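The latency benefit of proximity can be estimated from propagation delay alone. Light in optical fibre travels at roughly two-thirds of its vacuum speed, about 200 km per millisecond. The sketch below uses that approximation and two hypothetical distances; real fibre routes are longer than straight-line distance, and switching and queuing add further delay.

```python
# Approximate propagation speed of light in optical fibre.
FIBRE_KM_PER_MS = 200.0


def fibre_rtt_ms(distance_km: float) -> float:
    """Round-trip propagation delay only, ignoring equipment and queuing delay."""
    return 2.0 * distance_km / FIBRE_KM_PER_MS


if __name__ == "__main__":
    # Hypothetical distances from an end user to an edge site and a central site.
    for label, km in [("regional edge site", 50), ("distant central site", 1500)]:
        print(f"{label:>20}: {fibre_rtt_ms(km):.1f} ms round trip")
```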

The concept has been around for decades, but it fell out of favour with businesses with the advent of large, singular builds, which began to offer greater cost-efficiencies. That trend is now starting to reverse, due in part to the rise of the Internet of Things and a greater reliance on data across more aspects of modern life. Growing consumer demand for quicker access to content is likely to lead more operators to choose regional, containerised and micro data centres.

Artificial Intelligence (AI) is also set to have a transformational impact on the industry. As in many sectors, the potential of AI is becoming a ubiquitous part of the conversation in the data centre industry, but there are few real-world applications of it in place. Currently, complex algorithms are used to lighten the burden on management processes; for example, some operators are using these systems to monitor power consumption and temperature and identify patterns that could indicate an error or inefficiency within the facility. Managers can then deploy resources to fix the problem before it becomes a bigger one or risks downtime. Likewise, these systems can be used to identify security risks, for example recording whether the data centre has been accessed remotely or out of hours and reporting any unusual behaviour.
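The article does not say which algorithms operators use. As one plausible illustration, the sketch below flags power readings with a rolling z-score (a reading far from the recent mean, measured in standard deviations) and lists access events outside an assumed staffed window of 08:00 to 18:00 on weekdays. The window size, threshold, hours and sample data are all hypothetical.

```python
from collections import deque
from datetime import datetime
from statistics import mean, stdev


def flag_anomalies(readings, window=12, threshold=3.0):
    """Indices of readings that sit more than `threshold` standard deviations
    from the mean of the preceding `window` readings (a rolling z-score)."""
    history = deque(maxlen=window)
    flagged = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mu, sigma = mean(history), stdev(history)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                flagged.append(i)
        # Flagged values still enter the window; a production system
        # might exclude them so one spike does not mask the next.
        history.append(value)
    return flagged


def out_of_hours(access_times, start_hour=8, end_hour=18):
    """Access events at weekends or outside an assumed staffed window."""
    return [t for t in access_times
            if t.weekday() >= 5 or not start_hour <= t.hour < end_hour]


if __name__ == "__main__":
    # Hypothetical rack power readings in kW, with one spike at index 12.
    power_kw = [10.0, 10.2, 9.9, 10.1] * 3 + [15.0, 10.0, 10.1, 9.9]
    print("anomalous readings at:", flag_anomalies(power_kw))
    logins = [datetime(2018, 3, 5, 3, 15),   # Monday, 03:15
              datetime(2018, 3, 5, 10, 0),   # Monday, 10:00
              datetime(2018, 3, 10, 11, 0)]  # Saturday, 11:00
    print("out-of-hours access:", out_of_hours(logins))
```

In keeping with the point that AI still relies on human intervention, both functions only report candidates; deciding what to do about them is left to a person.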

This is still in the early stages of development, and at the moment AI still relies on human intervention to make considered decisions, rather than automatically deploying solutions. But as the industry learns to embrace this tool, we’re likely to see its capability expand. Specialist research projects such as IBM Watson and Google DeepMind are already focusing on creating new self-learning AI systems which can be incorporated into a cloud offering and solve problems independently, lessening the management burden even further.

As the implementation of edge networks grows, it is likely that AI will have a greater role in managing facilities remotely. To work successfully, edge data centres must be adaptable, modular and remotely manageable as ‘Lights Out’ facilities, serviced by an equally flexible workforce and thorough management procedures – a perfect example of where AI can pick up the burden. Likewise, storing information in remote units brings increased security risks and businesses will need to consider a vigilant approach to data protection to meet legal obligations and identify threats before they cause damage. Introducing AI algorithms that can remotely monitor security and day-to-day maintenance will go some way to reassuring clients that these risks can be mitigated through innovation.

Innovation must be a carefully considered decision for data centre operators. Implementing an innovative system represents a significant capital investment and it can be difficult to quantify a return. New processes need to be adopted early enough to give a competitive advantage, while caution needs to be exercised to avoid being the first to invest in brand new technology only for it to become obsolete a year later. Striking a balance between these two considerations will be key for data centre operators looking to grow their market share in such a competitive sector - despite the risk, when innovation works successfully the payoff can be huge.

For information http://www.keysource.co.uk/

By Tim Mitchell, Klima-Therm

One of the key areas of innovation in the data centre industry over the past ten years has been improvement in energy efficiency. It is central to both improving profitability through reducing running costs, and enhancing Power Usage Effectiveness (PUE) and other energy-related metrics to meet the sustainability requirements of corporate clients.

Despite the emergence of more temperature tolerant chips, one of the biggest components of data centre power usage remains cooling. There have been attempts to manage this with fresh-air-only ventilation systems, but issues with latent system requirements, space constraints and concerns around reliability and resilience mean that mechanical cooling – of one sort or another – remains the default choice.

Mechanical cooling relies on refrigerant compressors, an area of technology that had remained more or less static for decades until the emergence of compact centrifugal compressors ten years ago. Their appearance marked the start of a new era for air conditioning efficiency. In those early days, few recognised the impact this rather esoteric new technology would have on the market and the wider industry.

As one of the world’s first adopters of this new approach, I will admit we were slightly mesmerised by the idea of harnessing magnetic levitation bearings in a compact centrifugal design. It was a compelling proposition, as it overcame the need for oil in the compressor, thereby avoiding at a stroke all the problems that accompany conventional compressor lubrication, spanning operation (especially low-load operation as data halls are populated) and the requirements of ongoing service and maintenance.

That advance alone would have been highly significant, and a major advantage for both data centre operators and service companies. However, when you add the exceptional efficiency gains (generally 50 per cent better than traditional systems), smaller chiller footprint, low start-up current, low noise operation, long-term reliability and overall low maintenance requirements, it is not hard to see why compact centrifugal technology has become such a game-changer.

In a nut-shell, it enabled more cooling from less energy in a more compact space, and required less power to start and fewer service visits to maintain. The fact that it is compatible with efficient and stable low Global Warming Potential (GWP) HFO refrigerants is a further major plus for data centre operators looking to future-proof themselves against legislative changes.

It is a classic example of a disruptive technology rewriting the rules. The early sceptics have been proved decisively wrong. With the technology proven, multi-million pound investments are now being made in expanding production of Turbocor compressors and further refining the technology.

With take-up growing across the world, manufacturers of traditional cooling compressors are now looking to develop their own magnetic levitation-based compact centrifugal systems. However, as often with disruptive technology, it is a steep research and development curve and requires very substantial investment.

Early adopters are already working on the next generation of systems, which take the gains delivered by Turbocor-based systems to the next level. A stand-alone compact centrifugal compressor is already highly efficient, and the opportunities for wringing further efficiency gains are inherently limited. However, there are significant opportunities for improving efficiency in terms of the overall chiller design and the performance of other key system components.

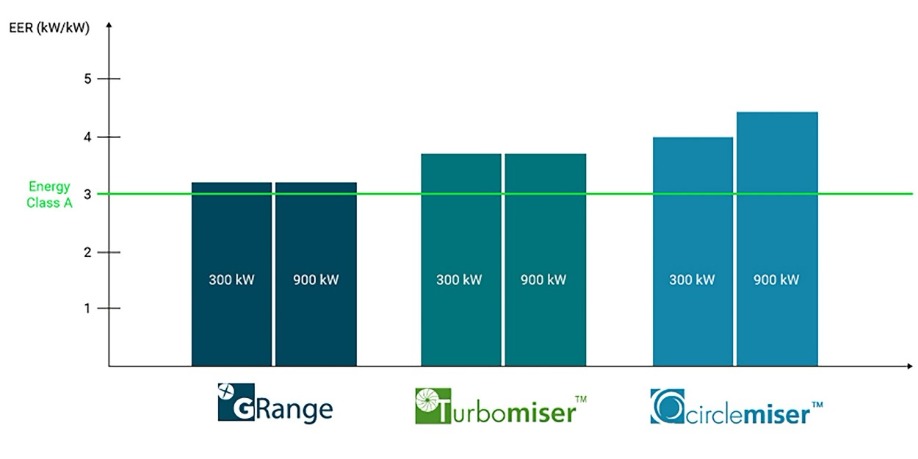

Heat exchangers are a key area of interest. For example, the recently introduced Circlemiser chiller from our supply partner Geoclima, based on Turbocor compressors, uses a new design of condenser heat exchangers. This delivers improvements of up to 15 per cent on the already outstanding EER* rating of the Turbomiser. It is believed to be the most efficient dry air-cooled chiller in the world.

This has been made possible by replacing the traditional flat coils with cylindrical condensers, and by using flooded evaporators in a cascade system. By packing more active heat exchange surface into a given space, the heat exchange capacity of Circlemiser’s cylindrical microchannel condensers is increased by 45 per cent compared with traditional condensers.

Cylindrical heat exchangers increase heat exchanger capacity in both the rejection and delivery sides by reducing condensing temperature as well as evaporator approach temperature. Importantly, this improvement in performance is achieved without increasing the chiller footprint, enabling more cooling capacity in a given space, while significantly reducing energy consumption.

With rooftop and plant room space often at a premium in new and refurbishment projects, this offers a major advantage over both conventional chillers and standard Turbomiser machines.

The use of a cascade system with flooded evaporators helps reduce the ΔT between evaporation temperature and outlet temperature of the chilled water. This increase in evaporation temperature further reduces energy consumption.

Comparing the like-for-like performance of Circlemiser with standard air-cooled Turbomisers (at AHRI/EUROVENT conditions, with the same number and model of compressors), Circlemiser shows an increase in EER of up to +9.5 per cent with one compressor, and up to +15 per cent with multiple compressors. The highest EER value achieved is 4.35, but the most staggering comparison comes at part load, or at full load below peak ambient temperature, where gains of +25 per cent are typical compared to even the most efficient existing Turbocor-based machines.
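The percentages above are ratios of EERs, where EER is cooling output divided by electrical input. The sketch below compares two hypothetical chillers delivering the same 1,000 kW of cooling; the input powers are assumptions chosen so that one machine reaches the 4.35 EER quoted above.

```python
def eer(cooling_kw: float, electrical_input_kw: float) -> float:
    """Energy efficiency ratio: cooling output divided by electrical input (kW/kW)."""
    return cooling_kw / electrical_input_kw


def percent_gain(new: float, baseline: float) -> float:
    """Relative improvement of `new` over `baseline`, in per cent."""
    return (new / baseline - 1.0) * 100.0


if __name__ == "__main__":
    # Hypothetical chillers delivering the same 1,000 kW of cooling.
    baseline_eer = eer(1000.0, 263.0)   # about 3.80
    improved_eer = eer(1000.0, 230.0)   # about 4.35
    print(f"baseline {baseline_eer:.2f}, improved {improved_eer:.2f}, "
          f"gain {percent_gain(improved_eer, baseline_eer):.1f}%")
```

Because input power is cooling divided by EER, a roughly 14% higher EER here corresponds to about 12.5% less electrical input for the same cooling, so EER gains slightly overstate the percentage cut in the electricity bill.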

This gives Circlemiser equivalent efficiency to Turbomiser chillers equipped with adiabatic evaporative systems (at 50 per cent Relative Humidity), but without the additional cost and complication of installation and maintenance associated with adiabatic systems.

It lends itself to use in refurbishment projects, where data centre cooling loads may have increased over time and the existing chiller is now under-sized, but where plant space is restricted and unable to accommodate a larger replacement. This situation arises often, particularly in city centres.

While manufacturers of conventional compressor technology seek to catch up with the compact centrifugal revolution, those who pioneered the technology are already several laps ahead – on both the refinement of the base technology itself and its application in ever-more efficient systems. The early adopters bore the risks, but are now harvesting the fruits of embracing this game-changing new approach.

For data centre operators wrestling with the often conflicting demands of rising cooling loads, limited power head-room and site space restrictions, these latest developments in heat exchange and compressor technology offer potential solutions not hitherto possible with conventional mechanical cooling.

For more details, contact Tim Mitchell 07967 030737; tim.mitchell@klima-therm.co.uk

*EER = COP (coefficient of performance) for cooling, in accordance with ANSI/AHRI Standard 551/591 (SI).

By Graham Hutchins, Marketing Manager, Simms - Memory and Storage Specialists

Data centres are facing a tough challenge – do more with less. For them transformation is not a choice any longer, it’s do or die, innovate or stagnate.

Most of us have by now heard Google’s Eric Schmidt’s ‘5 exabytes’ quote: that is the amount of data produced from the dawn of civilisation to 2003, and we now create that much every two days. It’s safe to say that data and IT are no longer business enablers; they are the business. The use of data has to impact positively on any company’s P&L.

Focusing on the hardware element of this, positive impact is ultimately achieved when a datacentre or hosting company identifies ways in which it can reduce infrastructure and operational costs, whilst enhancing (best case), not impacting (worst case), customer experience.

This is best achieved when the data use case(s) drive technology selection. This new approach is already being heavily adopted in North America. Tech-savvy system integrators (Google, Amazon, Facebook etc.), with complex and large data requirements, have established that relying exclusively on out-of-the-box configurations from top-branded server manufacturers is no longer offering them the best value. There are options at all levels.

So what changes are we seeing from a memory and storage perspective for the transforming data centre? Memory and storage for the enterprise environment is evolving rapidly in a quest to enhance QoS, lower latency, perform faster, increase capacity, reduce power, improve reliability and productivity, reduce failure rates, eliminate downtime and give a better return on investment. We also need to understand what’s happening in the market.

With 3D NAND flash production continuing apace (by the end of 2018, 3D NAND is expected to account for 74% of all NAND flash), we are seeing some major developments and shifts in data centre memory and storage. 3D NAND is quickly making its way into the next generation of SSD enterprise storage, with Samsung, Intel, Toshiba and others already utilising this new technology. 3D NAND opens the door to all kinds of possibilities: 128-layer flash is widely touted to be available shortly, with some laboratories also showcasing 200-layer flash chips.

In addition to this manufacturers are looking for new ways in which to innovate in a bid to stay ahead of the competition and deliver real value. For example, Intel is breaking ground with its pioneering Optane memory – the first all-new class of memory in 25 years - creating a bridge between DRAM and storage to deliver intelligent, highly-responsive computing for the HPC market.

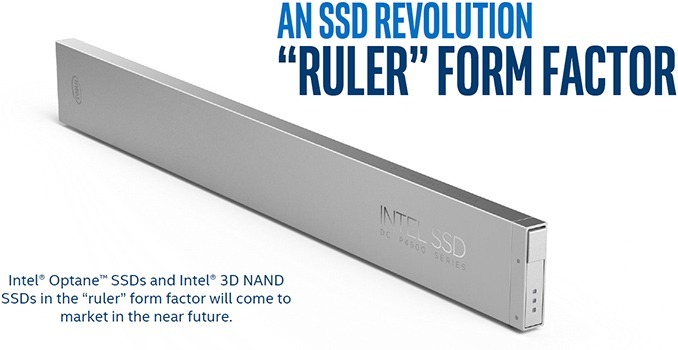

Intel has also announced plans to release a new form factor for server class SSDs called the ‘Ruler’. The design is based on the in-development Enterprise & Datacentre Storage Form Factor (EDSFF) and is intended to enable server makers to install up to 1 PB of storage into 1U of rack space while supporting all enterprise-grade features. Watch this space!

But what does all this mean for the data centre? Despite being historically cost-prohibitive, SSD technology, with its unrivalled high capacities compared to HDDs, is becoming the mainstay of data centre storage. The game is changing and savvy datacentre managers are looking at new SSD tech to increase performance and reduce cost.

The UK data centre market is fiercely competitive, so how can one differentiate? Aside from the obvious security, compliance, disaster recovery, and SLA considerations (all pretty standard stuff), it really comes down to the performance of kit, which is where SSDs outshine their HDD cousins.

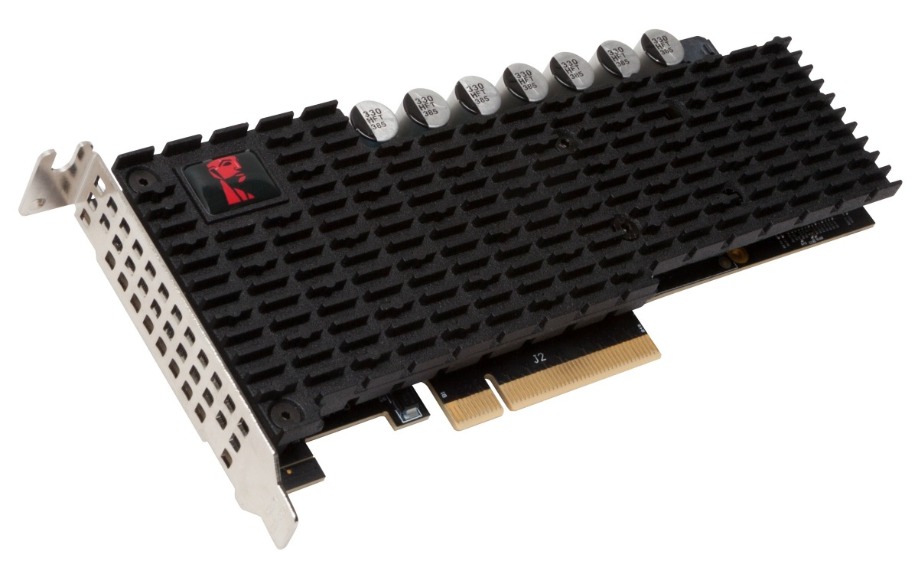

SAS and SATA interfaces have been around for a number of years and offer good transfer speeds but, as with all tech, limitations will depend on the use case. SSD manufacturers are looking ahead to the next generation of interfaces, with manufacturers such as Intel and Kingston really starting to push PCIe NVMe storage as the next big thing. This technology is fast becoming a firm favourite with data centres due, in part, to its blistering speeds.
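The speed gap can be quantified from published link rates. SATA III signals at 6 Gb/s and SAS-3 at 12 Gb/s, both with 8b/10b encoding; PCIe 3.0 runs at 8 GT/s per lane with 128b/130b encoding, and NVMe SSDs commonly use four lanes (the x4 width is an assumption here). The sketch ignores protocol and controller overheads, so real drives deliver somewhat less.

```python
def usable_gb_s(line_rate_gbit: float, lanes: int,
                payload_bits: int, coded_bits: int) -> float:
    """Approximate usable bandwidth in GB/s after line-encoding overhead only."""
    return line_rate_gbit * lanes * payload_bits / coded_bits / 8.0


INTERFACES = {
    "SATA III (6 Gb/s, 8b/10b)": usable_gb_s(6.0, 1, 8, 10),
    "SAS-3 (12 Gb/s, 8b/10b)": usable_gb_s(12.0, 1, 8, 10),
    "PCIe 3.0 x4 NVMe (8 GT/s, 128b/130b)": usable_gb_s(8.0, 4, 128, 130),
}

if __name__ == "__main__":
    for name, bandwidth in INTERFACES.items():
        print(f"{name}: ~{bandwidth:.2f} GB/s")
```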

That said, you wouldn’t choose a blisteringly quick convertible sports car for lengthy business mileage on a rainy day if you had access to something more economical and comfortable. The same applies to SSD selection: the trick is to understand what benefits each offers your environment and make an educated decision. Reach out to the technology specialists and manufacturers for help, as assumptions can prove costly.

As specialist distributors, we witness first-hand what is happening in the memory and storage market, and trends indicate that PCIe NVMe is moving, and moving fast. 2.5-inch, HHHL (half-height, half-length) AIC and M.2 form factors give data centres the flexibility of choosing the right solution for different storage servers. Coupled with 3D NAND flash, the performance really is mind-blowing, especially for the next-generation data centre. We have seen a substantial upsurge in enterprise storage sales of PCIe SSDs since Q3 2017. The key thing to note here is that SSD technology is now being developed specifically for the data centre environment, whereas previous generations were designed for entirely different applications.

These SSDs are having a big impact in how system architects build systems and how developers create applications. The big boost in IOPS they provide helps to keep today’s fast CPUs continually fed with data. But selecting the right SSD is not as simple as it may appear. Major server builders will of course push their approved storage choice and scaremonger data centres about invalidating warranties if non-approved storage is used. However, manufacturers such as Intel and Kingston can offer data centres a choice, backed up with extensive warranties and a robust suite of services that ensure comprehensive protection whilst using their technology.

Power-loss protection, faster and more consistent read/write speeds and encryption are all key benefits of SSDs over HDDs. SSDs also consume less power. Given that over 50% of a data centre’s cost is energy-related, and the importance of a good PUE (Power Usage Effectiveness) rating as a key indicator of efficiency, the debate gets very interesting. Throw in health-monitoring systems for SSDs, with which IT managers and CIOs can pre-empt possible failures, and the argument is all but won. You simply cannot do that with HDDs.
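The PUE link works because every watt saved at the server also avoids its share of cooling and distribution overhead, so facility-level savings are roughly the IT saving multiplied by PUE. The sketch below assumes, as a simplification, that overheads scale in proportion to IT load; the drive count, per-drive saving and PUE are hypothetical.

```python
def facility_kwh_saved(devices: int, watts_saved_per_device: float,
                       pue: float, hours: float = 8760.0) -> float:
    """Annual facility energy saved when IT load drops, scaling the IT
    saving by PUE (assumes overheads scale proportionally with IT load)."""
    it_kw_saved = devices * watts_saved_per_device / 1000.0
    return it_kw_saved * pue * hours


if __name__ == "__main__":
    # Hypothetical: 1,000 drives each drawing 5 W less, in a facility at PUE 1.6.
    kwh = facility_kwh_saved(devices=1000, watts_saved_per_device=5.0, pue=1.6)
    print(f"about {kwh:,.0f} kWh saved per year at the meter")
```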

In our experience, read/write IOPS, write bandwidth and endurance are arguably the key performance attributes that data centres are looking for. Using a flash-based PCIe NVMe storage device can help a specialised application, software defined storage or database achieve the performance its users ultimately demand.
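IOPS and bandwidth are linked through block size, which is why a drive's IOPS figure alone does not tell the whole story. The sketch below converts an IOPS figure into throughput for a given block size; the IOPS numbers and block sizes are hypothetical examples, not vendor specifications.

```python
def throughput_mb_s(iops: float, block_size_kib: float) -> float:
    """Throughput implied by an IOPS figure at a given block size,
    in MB/s (10^6 bytes per second)."""
    return iops * block_size_kib * 1024 / 1e6


if __name__ == "__main__":
    # Hypothetical workloads: small random I/O versus large sequential I/O.
    print(f"100,000 IOPS at 4 KiB:  {throughput_mb_s(100_000, 4):.1f} MB/s")
    print(f"10,000 IOPS at 128 KiB: {throughput_mb_s(10_000, 128):.1f} MB/s")
```

The second, lower-IOPS workload moves more data per second, so the attribute that matters depends on whether the application issues many small requests or fewer large ones.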

With data centres taking on more workload, there is extra pressure on memory to work harder, but is upgrading memory getting easier or more difficult? It really depends on perspective. OEMs will of course push their own or approved third-party memory partners for upgrades. However, the cost to upgrade multiple servers can be prohibitive using OEM memory, so why not shop around? Memory manufacturers have come a long way, with DDR3/4 very much a mainstay and DDR5 expected in 2018. DDR5 is touted to double the bandwidth and capacity of DDR4 while further reducing power consumption (but until it is fully available we can only speculate). Add to that considerable potential cost savings and it has to be seriously considered.

Optimal memory choice ultimately makes a significant difference to performance. Exploring all the alternatives and selecting memory that is designed and manufactured to your system requirements is vital.

marketing@simms.co.uk

The European Managed Services & Hosting Summit 2018 is a management-level event designed to help channel organisations identify opportunities arising from the increasing demand for managed and hosted services and to develop and strengthen partnerships.

Previous articles here have reflected on the changes that the managed services model brings customers: their ability to change their buying model to revenue-based, often to do more with fewer resources, and then adopt new working tools such as analytics, which just weren’t available at the right price before. The impact on the IT industry supplying those customers has been profound as well, requiring a real re-think of sales processes, built around a continuous relationship with the customer, not just a “sell-and-forget” on big-ticket items.

Obviously, the IT channel is attracted by the prospect of more sales by working in managed services, with the world market predicted to grow at a compound annual growth rate of 12.5% to 2019. But it is such a fundamental change in their structures that some think it a step too far, even under pressure from customers seeking the benefits that managed services can bring them. Those partners may find themselves rapidly left behind as the new model becomes the standard in most industries.
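A compound annual growth rate multiplies each year's value by (1 + rate), so 12.5% a year adds up faster than simple arithmetic suggests. The sketch below indexes the market to 100 in a hypothetical base year of 2015 (the source does not give the base year or market size) and compounds it to 2019.

```python
def project(start_value: float, cagr: float, years: int) -> float:
    """Value after compounding at a constant annual growth rate."""
    return start_value * (1.0 + cagr) ** years


if __name__ == "__main__":
    # Market indexed to 100 in a hypothetical base year of 2015.
    for offset in range(5):
        print(2015 + offset, f"{project(100.0, 0.125, offset):.1f}")
```

Over four years the index grows by about 60%, rather than the 50% that four simple 12.5% steps would give.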

This, coupled with the ease of entry into the market for cloud-based solutions suppliers, means that the IT channel is having to face a whole new competitive threat. A business “born in the cloud” has an obvious advantage when trying to sell cloud services to a customer, compared with a traditional reseller – the cloud-based channel “eats its own dog-food”, to adopt a rather unwholesome phrase imported across the Atlantic.

So, in establishing the agenda for the European Managed Services and Hosting Summit in Amsterdam in May this year, the organisers are thinking beyond the obvious GDPR issues which will inevitably be in the headlines as its deadline comes round, and even the ever-popular M&A discussions of company value, to bring out a flavour of the sales-engagement process in managed services. We are asking our leading speakers to examine the business processes of the best managed services companies, to try to identify what makes them tick - and tick ever faster and with wider portfolios.

How is the sales process managed? How are the salespeople rewarded in the revenue model? How do they maintain that ongoing relationship with the customer in a cost-effective way? How do they ensure that the salesforce is motivated and retained in the longer term, while keeping them up-to-date with the latest information on the market, the technologies, and customers’ issues?

None of this is easy, and many managed service providers, integrators, traditional resellers and even those new and fast-growing “born-in-the-cloud” supplier companies still have many questions to put to the experts. MSHS Europe is the perfect event at which to do this, with many leading suppliers on hand as well as industry experts.

The MSHS event offers multiple ways to get those answers: from plenary-style presentations from experts in the field to demonstrations; from more detailed technical pitches to wide-ranging round-table discussions with questions from the floor. There is no excuse not to come away from this with questions answered, or at least a more refined view on which questions actually matter.

One of the most valuable parts of the day, previous attendees have said, is the ability to discuss issues with others in similar situations, and we are all hoping to learn from direct experience, especially in the complex world of sales and sales management.

In summary, the European Managed Services & Hosting Summit 2018 is a management-level event designed to help channel organisations identify opportunities arising from the increasing demand for managed and hosted services and to develop and strengthen partnerships. More details:

Angel Business Communications have announced the categories and entry criteria for the 2018 Datacentre Solutions Awards (DCS Awards).

The DCS Awards are designed to reward the product designers, manufacturers, suppliers and providers operating in the data centre arena, and are updated each year to reflect this fast-moving industry. The Awards recognise the achievements of the vendors and their business partners alike, and this year encompass a wider range of project, facilities and information technology award categories as well as Individual and Innovation categories, designed to address all the main areas of the datacentre market in Europe.

The DCS Awards categories provide a comprehensive range of options for organisations involved in the IT industry to participate, so you are encouraged to get your nominations made as soon as possible for the categories where you think you have achieved something outstanding or where you have a product that stands out from the rest, to be in with a chance to win one of the coveted crystal trophies.

This year’s DCS Awards continue to focus on the technologies that are the foundation of a traditional data centre, but we’ve also added a new section which focuses on Innovation with particular reference to some of the new and emerging trends and technologies that are changing the face of the data centre industry – automation, open source, the hybrid world and digitalisation. We hope that at least one of these new categories will be relevant to all companies operating in the data centre space.

The editorial staff at Angel Business Communications will validate entries and announce the final short list to be forwarded for voting by the readership of the Digitalisation World stable of publications during April and May. The winners will be announced at a gala evening on 24th May at London’s Grange St Paul’s Hotel.

The 2018 DCS Awards feature 26 categories across five groups. The Project and Product categories are open to end-use implementations, services, products and solutions that were available (i.e. shipping) in Europe before 31st December 2017. Company nominees must have been present in the EMEA market before 1st June 2017. Individuals must have been employed in the EMEA region before 31st December 2017, and Innovation Award nominees must have been introduced between 1st January and 31st December 2017.

Nomination is free of charge, and each entry can include up to two supporting documents to enhance the submission. The deadline for entries is 9th March 2018.

Please visit www.dcsawards.com for the rules and entry criteria for each of the following categories:

DCS Project Awards

Data Centre Energy Efficiency Project of the Year

New Design/Build Data Centre Project of the Year

Data Centre Automation and/or Management Project of the Year

Data Centre Consolidation/Upgrade/Refresh Project of the Year

Data Centre Hybrid Infrastructure Project of the Year

DCS Product Awards

Data Centre Power Product of the Year

Data Centre PDU Product of the Year

Data Centre Cooling Product of the Year

Data Centre Facilities Automation and Management Product of the Year

Data Centre Safety, Security & Fire Suppression Product of the Year

Data Centre Physical Connectivity Product of the Year

Data Centre ICT Storage Product of the Year

Data Centre ICT Security Product of the Year

Data Centre ICT Management Product of the Year

Data Centre ICT Networking Product of the Year

DCS Company Awards

Data Centre Hosting/co-location Supplier of the Year

Data Centre Cloud Vendor of the Year

Data Centre Facilities Vendor of the Year

Data Centre ICT Systems Vendor of the Year

Excellence in Data Centre Services Award

DCS Innovation Awards

Data Centre Automation Innovation of the Year

Data Centre IT Digitalisation Innovation of the Year

Hybrid Data Centre Innovation of the Year

Open Source Innovation of the Year

DCS Individual Awards

Data Centre Manager of the Year

Data Centre Engineer of the Year

The next Data Centre Transformation events, organised by Angel Business Communications in association with DataCentre Solutions, the Data Centre Alliance, The University of Leeds and RISE SICS North, take place on 3 July 2018 at the University of Manchester and 5 July 2018 at the University of Surrey.

For the 2018 events, we’re taking our title literally, so the focus is on each of the three strands of our title: DATA, CENTRE and TRANSFORMATION.

The DATA strand will feature two Workshops on Digital Business and Digital Skills together with a Keynote on Security. Digital transformation is the driving force in the business world right now, and its impact on the IT function and, crucially, on organisations’ data centre infrastructure is, perhaps, not yet fully understood. No doubt this is partly due to the current shortage of digital skills in the workplace, a problem which, unless addressed urgently, will only continue to grow. As for security, hardly a day goes by without news headlines focusing on the latest high-profile data breach at some public or private organisation. Digital business offers many benefits, but it also introduces further potential security issues. The Digital Business, Digital Skills and Security sessions at DTC will discuss these issues and, hopefully, come up with some helpful solutions.

The CENTRE strand features two Workshops on Energy and Hybrid DC with a Keynote on Connectivity. Energy supply and cost remain a major part of the data centre management piece, and this strand will look at the technology innovations that are affecting the supply and use of energy within the data centre. Fewer and fewer organisations have a purely in-house data centre estate; most now make use of some kind of colo and/or managed services offering. Further, the idea of one or a handful of centralised data centres is now being challenged by the emergence of edge computing. So in-house and third-party data centre facilities, combined with a mixture of centralised, regional and very local sites, make for a very new and challenging data centre landscape. As for connectivity, feeds and speeds remain critical for many business applications, and it’s good to know what’s around the corner in this fast-moving world of networks, telecoms and the like.

The TRANSFORMATION strand features Workshops on Automation and The Connected World together with a Keynote on Automation (AI/IoT). IoT, AI, ML, RPA: automation in all its various guises is becoming an increasingly important part of the digital business world. In terms of the data centre, the challenges are twofold. How can these automation technologies best be used to improve the design, day-to-day running, overall management and maintenance of data centre facilities? And how will data centres need to evolve to cope with the increasingly large volumes of applications, data and new-style IT equipment that provide the foundations for this real-time, automated world? Flexibility, agility, security, reliability, resilience, speeds and feeds: they’ve never been so important!

Delegates select two 70-minute workshops to attend, each an interactive discussion led by an Industry Chair and featuring panellists (specialists and protagonists in the subject). The workshops will ensure that delegates not only earn valuable CPD accreditation points but also have an open forum to speak with their peers, academics and leading vendors and suppliers.

There is also a Technical track where our Sponsors will present 15-minute technical sessions on a range of subjects. Keynote presentations in each of the themes, together with plenty of networking time to catch up with old friends and make new contacts, make this a must-do day in the DC event calendar. Visit the website for more information on this dynamic academic and industry collaborative information exchange.

This expanded and innovative conference programme recognises that data centres do not exist in splendid isolation, but are the foundation of today’s dynamic, digital world. Agility, mobility, scalability, reliability and accessibility are the key drivers for the enterprise as it seeks to deliver the ultimate customer experience. Data centres have a vital role to play in ensuring that organisations’ applications and support services connect seamlessly to their customers, wherever and whenever they are accessed. That’s why our 2018 Data Centre Transformation events in Manchester and Surrey will focus on the constantly changing demands being made on the data centre in this new digital age, concentrating on how the data centre is evolving to meet these challenges.

Is One Better Than the Other, or Do You Need Both?

By Erik Rudin, VP of Business Development & Alliances at ScienceLogic.

For quite some time in the IT Service Management industry, agent versus agentless monitoring has been a fiercely disputed topic. Which is superior? Depending on who you asked, you were likely to get very different answers. With the rise of hybrid and multi-cloud infrastructures, we propose that a combination of both is the best way to keep on top of changing IT environments.

Need more convincing? We break down more of this debate below.

Tradition Versus Easy and Fast: The Pros and Cons

The agent approach is the more traditional procedure for data gathering and involves the installation of software (agents) on all computers from which data is required.

Pros:

Cons:

The agentless approach is likely to be easier and faster to deploy as it leverages what’s already available on the server without installing additional software.

Pros:

Cons:

So, Which Is Better?

Agents are fantastic for engaging in concentrated, deep, high-fidelity monitoring. They’re also highly applicable to log management, which is an increasingly important facet of modern applications.

Agentless monitoring provides that big picture view of what’s going on in your IT world.

But there is one factor in this debate we cannot overlook. As if finding the right approach for your environment were not hard enough, you must also consider the rise of multi-cloud.

Hybrid Cloud Infrastructures: The Complicating Factor

Today, the ingredients of a modern IT infrastructure have changed. According to ScienceLogic research, one third of enterprises have more than 25 percent of their IT in the cloud and 81 percent already combine on-premises, private, and public cloud environments. When hybrid cloud environments and configuration management databases (CMDBs) are thrown into the mix, our agent versus agentless conversation becomes much more complicated.

Manual processes have become unmanageable in a hybrid world where cloud instances are spun up and down at will; after all, there are no people in the cloud. Hybrid cloud has also disrupted CMDBs, which have not kept up with multi-cloud environments. We see more and more failed and out-of-date CMDBs in organizations every day.

Jonathan Arnold, Managing Director at Volta Datacentres, shares his predictions for the IT and datacentre industry in 2018.

Stakeholders in the IT industry (vendors, Channel, consultants, analysts and end users) must look at the political world with envy when it comes to the certainties to be encountered at Westminster and beyond! Brexit is an almost guaranteed disaster. For the Brexiteers, we’ll end up being too generous when it comes to the much talked-about ‘divorce bill’, and for the Bremoaners, any deal short of the existing one we have as part of the EU will be a disaster. Add in the certainty of financial and personal-life scandals, stir with the ongoing will-she-or-won’t-she remain as Prime Minister, sprinkle in a pinch of economic meltdown as the lessons of 2008 seem well and truly forgotten (apparently there’s more personal debt now than there was before the last crash; oh dear!), and that’s the year 2018 in politics.

Turning our attention to the world of IT and data centres, nothing is quite so clear. Blink and you’ll miss the next technology development or business trend. Don’t believe me? Then think back over the past 10 years or so. Plenty has changed and, most notably, the pace of change has picked up dramatically, which makes planning for the future a particularly difficult task. That said, while there may be no ‘death and taxes’ certainties, there are some obvious trends that will continue, alongside the emergence of at least one new technology.

The IT and data centre industry has performed the in/out dance since its birth. One minute everything needs to be centralised. The next minute everything needs to be distributed. That model is now being replaced by what I call hybrid infrastructure – a balanced mix of centralised and distributed IT resource, housed in a mixture of large, consolidated data centre facilities and, increasingly, local, edge, micro data centres. Clearly, one size doesn’t fit all.

Similarly, there finally seems to be a recognition that any organisation needs a mixture of in-house and external IT and data centre resources, including colo, Cloud and Managed Services. Requirements for optimum flexibility, efficiency and cost dictate such a policy. So we have the emergence of a hybrid, hybrid world: IT and data centre infrastructure owned and operated both in-house and externally, housed in a mixture of consolidated, centralised facilities and distributed, localised ones.

To reflect this change, it seems reasonable to expect an organisation’s workforce expertise to be transformed, especially when it comes to the IT department. There may no longer be a need for dedicated, single-discipline IT experts, as many of the required skills are being sourced externally. The data centre is finally being recognised as a business’s data/information resource, and this requires the data centre professionals and the facilities folks to bury the hatchet and work closely together. It’s been talked about for years, but it does need to happen. Add in the fact that completely non-technical staff should be enabled (within boundaries) to fire up IT resources without hitting a bottleneck in the data centre or IT infrastructure, and we see the emergence of… a hybrid, almost virtualised, pool of human resource when it comes to specifying and implementing the infrastructure required to support any new application. That’s to say, personnel from all company departments need to be involved in the IT and data centre process.

Automation and orchestration are crucial to achieving all of the above, so expect big things from this management area over the next 12 months. Running a hybrid, agile and optimised data centre and IT infrastructure is no longer possible without high levels of automated management. It’s just surprising that there aren’t more comprehensive solutions out there. We seem to be stuck in the multi-console age, with far too many software programs each running small chunks of the business. That needs to change.

Technology-wise, things move fast in the IT space. Not so much in the data centre industry – where some of the established technologies are those discovered by the ancient Greeks! It may take a while for the pace of change to increase, but I’m reliably informed by several sources that foam batteries will have a major impact on the data centre space in the near future. The promise is of better safety, much higher densities, and lighter weight batteries. Foam batteries seem to be under the radar as far as Wikipedia is concerned, but there’s plenty of vendor information out there for those interested.

As the world goes wireless, Indi Sall, Technical Director, NG Bailey’s IT Services division asks if the days of the wired network are numbered.

The future is wireless: Should we still care about cables?

Compared to a few years ago, plugging things in has started to feel like a major hassle.

In the home environment, the wireless smart hub has become the centre of the digital home, connecting smart TVs, wireless speakers, wireless printers, lighting, power and heating controls, and providing a platform for voice assistants such as Siri and Alexa. This wireless environment is also becoming increasingly visible in the corporate world, with networks now supporting all of the above in addition to applications such as unified communications and wireless conferencing solutions.