Alexa, please will you run my data centre as efficiently as possible, bearing in mind the constantly fluctuating workload, the regulatory environment, the current energy price, the outside temperature, the new range of Managed Services being launched today, not to mention the cost of property rental, the geographical location of all the company’s employees who will need to be accessing various applications and datasets…?

As I’ve written before, persons under a certain age might find the above, somewhat exaggerated Digital Age scenario exciting and/or believable. The older generation might quake at the mere suggestion that robots can take over the workplace to the extent that the human workers, if there are any left, will be doing the menial stuff.

Long term, it seems that the only decisions to be made are just how much automation is possible and, just as importantly, how much is acceptable. The finance sector is already well on the way towards digitalisation, and one can foresee a future where we all do our own banking, and there’s only virtual money, and the banks employ robots and computers only – except, maybe, for the board of directors.

And how many other industries will, ultimately, lend themselves to such a business model? Put another way, how many industries, or specific jobs, cannot be completely automated? Given the appropriate level of artificial intelligence, it’s more than possible that a robot judge will preside over robot prosecutors and defence lawyers, who have the whole history of legal precedent stored in their memories upon which to call, as they seek to make their case for their human and, no doubt, robot clients.

In terms of transport, the automated future has all but arrived – it just needs a bit of tweaking before it goes mainstream. Shopping is halfway to being a totally virtual experience – and given that the online experience, making full use of virtual reality, will eventually replace the need to touch, pick up, smell etc. anything that we might want to purchase, one can see a time when the high streets will be empty.

Eventually, we’ll all be living in a virtual world, where a ‘real life’ experience is the exception, not the norm.

For now, here’s wishing you a happy festive season, and enjoy some very real food, drink and relaxation before 2018 looms large!


Continued strong demand driving investor appetite in Europe.

The data centre sector is seeing record investment levels as investors seek exposure to the record market growth in Europe. This investment is driven by take-up of colocation power hitting a Q3 YTD record of 86MW across the four major markets of London, Frankfurt, Amsterdam and Paris, according to global real estate advisor, CBRE.

There is also a substantial amount of new supply across the major markets as developers look to capture demand in a sector where speed-to-market is still key. The four markets are on course to see 20% of all market supply brought online in a single year. This 20% new supply, projected at 195MW for the full-year, equates to a capital spend of over £1.2 billion.

London has been centre-stage for European activity in 2017. Its 41MW of take-up in the Q1-Q3 period represents 48% of the European total and has dampened any concerns over the short-term impact of Brexit on the market. London was also home to two key investment transactions in Q3, as Singapore’s ST Telemedia acquired full control of VIRTUS and Iron Mountain acquired two data centres from Credit Suisse, one of which is in London.

CBRE projects that the heightened market activity seen so far in 2017 will continue into Q4 in three forms:

- A continuation of strong demand, including significant moves into the market from the Chinese cloud and telecoms companies.
- Further new supply; CBRE is projecting that 80MW new supply will come online in Q4, including several brand-new companies such as datacenter.com, KAO and maincubes.
- Ongoing investment activity, with at least one major European investment closed out by the year-end.

Mitul Patel, Head of EMEA Data Centre Research at CBRE, commented: “2017 has been a remarkable year for colocation in Europe and, with 2018 set to follow suit, any thoughts that 2016 might have been a one-off have been allayed. We have entered a ‘new norm’ for the key hubs in Europe, where market activity is double what we have been accustomed to in the pre-2016 years.

“Given this ongoing market activity, it is no surprise to see so many investors wanting a piece of the action in Europe. As demand for data centre capacity continues to entice investors, the pool of available companies and assets diminishes. Consequently, those looking to deploy capital in Europe will need to act decisively, leading to more M&A investment in the coming year, beginning in Q4.

“Furthermore, the low cost of capital available to large data centre developers, and a shift from private equity to longer-term institutional and infrastructure investors, will mean that both investment volumes and prices paid will remain at historically high levels.”

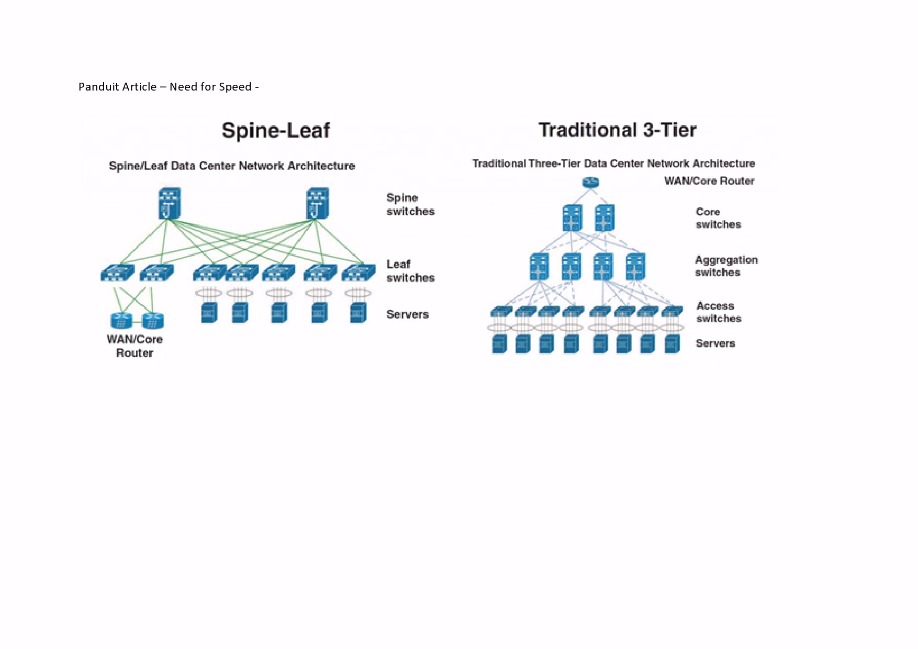

The need for high-speed data transmission and increased data traffic in cloud computing have enabled the convergence of complementary metal-oxide-semiconductor (CMOS) technology, three-dimensional (3D) integration technology, and fibre-optic communication technology to create photonic integrated circuits.

In the near future, by leveraging CMOS technology, the silicon medium has the potential to be fabricated and manufactured on a much larger scale. Some of the most disruptive innovations in silicon photonics are high-speed Ethernet switches, interconnects, photo detectors and transceivers, which enable high-bandwidth communications at a lower cost through low form factor, low power generation and increased performance integration into a single device.

Frost & Sullivan’s new analysis of “Innovations in Silicon Photonics” finds that the North American region has seen significant growth in silicon photonic research and development (R&D) due to the location of hyper-scale data centre facilities, while Asia-Pacific has witnessed investments to improve methods for large-scale manufacturing of silicon photonic components and circuits. The study analyses the current status of the silicon photonics industry, including factors that influence development and adoption. Innovation hotspots, key developers, growth opportunities, patents, funding trends, and applications enabled by silicon photonics are also discussed.

“Currently, innovations in silicon photonics are driven by the convergence of optical and electronic capabilities on a single chip. The innovations are highly application-specific, focusing on high-speed optical communications,” said Frost & Sullivan TechVision Research Analyst Naveen Kannan. “Further research and investments are looking towards developing next-generation, high-speed quantum computing. Researchers have transformed high-speed computing by achieving quantum entanglement using two quantum bits in a silicon chip. This will enable high-speed database search, molecular simulation, and drug designing.”

Wide-scale adoption is expected in various industries, such as data centres, cloud computing, biomedical and automotive. Building low-power interconnects that use light to transfer data rapidly is the main application area within data centres. In the biomedical industry, silicon photonics will enable the creation of highly sensitive biosensors for diagnostic applications.

“Photonic integrated circuits require the designing of photonic components simultaneously with electrical and electronic components. This can be challenging,” noted Naveen Kannan. “Players can overcome this challenge by offering services in terms of developing innovative photonic integrated circuit design, product prototyping, and testing methodology as per customer requirements.”

There is no better way to understand trends in any industry than by going directly to those leading the way in that space. With this in mind, Schneider Electric commissioned a new 451 Research study designed to shed light on hybrid IT environments at large enterprises from across the globe. This research was conducted through intensive, in-depth interviews with C-suite, data centre and IT executives and offers peer lessons and perspective into innovative deployment of technologies others in the industry can use to evaluate and manage their own hybrid landscapes.

Through these interviews a few key points came to light:

As cloud services are deployed, there are ripple effects across the organization. There is a significant shift in business models, while greater demand is placed on connectivity and workload management. To realize the full value of a hybrid approach, the management of a combination of data centre environments has become one of the most complex issues for modern enterprise leaders, forcing them to re-consider strategy and common practice.

The study also reveals that while the experiences, strategies and innovative technologies used varied greatly, there were clear common themes:

In one use case, a U.K.-based retailer found that when determining the best venue, security, performance and latency requirements needed to also be factored into the total cost analysis, along with data transit and application license costs. These vectors combined helped to determine the right mix of colocation and public cloud computing infrastructure to support connectivity needs while also controlling costs.

As one study participant noted, “If I had my dream, we would have the visibility to predict failures before they occur.”

“The biggest challenge of all is cost control, and management and financial applicability of capitalization,” noted one U.S. retailer.

North Denmark Region data center has been Tier IV certified for its constructed facility by Uptime Institute, the globally recognized data center authority. This Certification is testament to the focus by the organization on building a highly resilient data center that ensures availability of critical systems that benefit patients in the form of supporting stable hospital operation in a secure environment.

It is the first hospital data center in the world to receive this certification of the highest standard, Tier IV, for both design and construction. It joins a group of 99 data centers in the world with a Tier IV design certification, and is one of only 42 subsequently awarded the Tier Certified Constructed Facility certification. As the global data center authority, Uptime Institute has certified over 1,200 data centers across 85 countries.

“IT is a vital part of the operation of a modern health system, so that patients can be treated in a timely and proper manner and have their health data safely stored. We are therefore immensely proud that we have become part of the exclusive club of only 42 companies in the world that achieves this certification,” said Klaus Larsen, CIO, Regional Nordjylland.

North Denmark Region data center houses more than 450 systems including 911 ambulance services, patient healthcare records, decision support systems and other critical systems supporting over 15,000 employees. With a regional population of 600,000 depending on it, any downtime in the data center can have major consequences for both hospital operation and the other healthcare operations in the region. Therefore, availability and stability of the data center is of the highest priority.

“North Denmark Region is a forward-looking organization, focused on protecting the public by improving the provision of healthcare in the region, using technology which is underpinned by their investment in a highly resilient and robust data center,” said Phil Collerton, Managing Director, EMEA, Uptime Institute. “Regional Nordjylland has shown foresight by designing and building the first Uptime Institute Certified Tier IV data center in the region. Their focus on achieving the Tier IV Certification for both the design and constructed facility means that the data center is ready and able to support the health service for future generations.”

Unique solutions delivered by in-house employees

The planning, design and development of the data center was completed in partnership between the employees of the North Denmark Region IT department and the technical department at Aalborg University Hospital. This means that the data center was fully designed by North Denmark Region’s own employees. This is testament to the fact that the employees have excellent knowledge and competencies that are among the best in Denmark.

The partnership has resulted in innovative solutions that ensure data center resilience and application availability. For instance, the North Denmark Region data center is now the only Tier IV data center in the world that uses mechanical systems and variables such as weight, temperature and pressure to automate outage responses when issues are detected. In addition, North Denmark Region is one of a select few data centers in the world that have been certified while operational.

“We are part of an elite group in the world that has become Uptime Institute Tier IV certified, while the systems are in operation, and while building and testing”, says Michael Lundsgaard Sørensen, head of IT management, operations and support.

Eric Maddison, Senior Consultant at Uptime Institute, has participated in over 30 Tier Certifications of Constructed Facilities (TCCF), and regards North Denmark Region as one of the best-prepared organizations he has worked with.

“It is a great achievement to upgrade an existing data center to the demanding standards that an Uptime Institute Tier IV certified data center requires,” said Eric Maddison, Senior Consultant at Uptime Institute. “It is the fastest execution of a Tier IV certification I have personally witnessed. It includes an interesting use of industrial-inspired control systems as opposed to a Building Management System (BMS) and Programmable Logic Controllers (PLC).”

New facility supports teaching and research activities and meets strict sustainability criteria.

Italtel – a leading telecommunications company in IT system integration, managed services, Network Functions Virtualization (NFV) and all-IP solutions – has designed and deployed a new green data center at the University of Pisa, one of Italy’s oldest universities.

Working within a Raggruppamento Temporaneo di Imprese (RTI, a temporary group of companies) with West Systems and Webkorner, Italtel built the data center within the Parco Regionale Migliarino San Rossore Massaciuccoli. The data center is linked to the university by a fiber-optic ring, guaranteeing a high level of connectivity and reliability.

The data center has been built with best-of-breed technologies, including racks and air conditioning technologies with a guaranteed level of quality and efficiency, bringing a Power Usage Effectiveness (PUE) value of 1.17. This is even lower than the requirement of 1.3 needed to certify a data center as environmentally friendly and brings additional benefits such as reduced running costs.
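To put the 1.17 figure in context, PUE is the ratio of the total energy a facility draws to the energy that reaches its IT equipment. The short Python sketch below illustrates that arithmetic only. The meter readings and the function name are hypothetical, chosen so that the ratio works out to 1.17, and are not measurements from the Pisa facility.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


# Hypothetical readings for one period (assumed values, not real data).
it_kwh = 1000.0      # energy delivered to servers, storage and network gear
total_kwh = 1170.0   # IT energy plus cooling, power distribution losses, lighting

value = pue(total_kwh, it_kwh)
overhead_kwh = total_kwh - it_kwh  # energy spent on everything except IT

print(f"PUE = {value:.2f}")
print(f"Overhead per 1,000 kWh of IT load: {overhead_kwh:.0f} kWh")
print(f"Below the 1.3 threshold cited above: {value < 1.3}")
```

Read this way, a PUE of 1.17 means that for every 1,000 kWh consumed by IT equipment, about 170 kWh goes to cooling and other overheads; at a PUE of 1.3 the overhead would be about 300 kWh.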

“Because of their requirements for continuous power and air conditioning, data centers are traditionally associated with very high levels of energy consumption,” said Fiorenzo Piergiacomi, Head of Public Sector Account Unit at Italtel. “By choosing to create a green data center, the University of Pisa has reduced its environmental impact while also optimizing the available space and cutting ongoing maintenance costs.”

One of the key criteria for the University of Pisa when selecting its new technology infrastructure was that it meets its strict sustainability goals. Data centers are huge consumers of energy and having one that meets PUE targets while still reducing costs provided an ideal solution for the University.

The project was implemented by Italtel in just eight months, despite the infrastructure challenges posed by converting part of an existing building into a reliable and technologically-advanced data hub.

With AWS adding 53,000 SKUs in the last two weeks, analysts predict the rise of cloud dealers with simple, fixed-price offerings aimed at untangling this complexity.

At AWS re:Invent, 451 Research is revealing how quickly enterprises are moving to hybrid and multi-cloud environments; the growth of the cloud market to $53.3 billion in 2021 from $28.1 billion this year; and the impact of cloud service providers’ ever-expanding portfolio of offerings.

451 Research’s most recent Voice of the Enterprise: Cloud Transformation survey finds that cloud is now mainstream with 90% of organizations surveyed using some type of cloud service. Moreover, analysts expect 60% of workloads to be running in some form of hosted cloud service by 2019, up from 45% today. This represents a pivot from DIY owned and operated to cloud or hosted third-party IT services.

451 Research finds that the future of IT is multi-cloud and hybrid with 69% of respondents planning to have some type of multi-cloud environment by 2019.

The growth in multi- and hybrid cloud will make optimizing and analyzing cloud expenditure increasingly difficult. 451 Research’s Digital Economics Unit has analyzed the scope of AWS offerings and reveals that there are already over 320,000 SKUs in the cloud provider’s portfolio. This complexity is likely to increase over time – in the first two weeks of November 2017, for example, AWS added more than 53,000 new SKUs.

“Cloud buyers have access to more capabilities than ever before, but the result is greater complexity. It is a nightmare for enterprises to calculate the cost of computing using a single cloud provider, let alone comparing providers or planning a multi-cloud strategy,” said Dr. Owen Rogers, Research Director at 451 Research. “The cloud was supposed to be a simple utility like electricity, but new innovations and new pricing models, such as AWS Reserved Instances, mean the IT landscape is more complex than ever.”

Flexibility has become the new pricing battleground over the past three months, with Google, Microsoft and Oracle all announcing new pricing models targeted at AWS. Analysts believe there will be a market opportunity for cloud dealers that can resolve this complexity, giving users simple and low-cost prices – similar to how consumer energy suppliers abstract away the complexity of global energy markets.

451 Research’s quarterly Cloud Price Index continues to track the global cost of public and private clouds from over 50 cloud providers.

Cloud market growth

The latest data from 451 Research’s Market Monitor finds that the cloud computing as a service market is expected to grow 27% to $28.1 billion in 2017 compared to 2016. With a five-year CAGR of 19%, cloud computing as a service will reach $53.3 billion in 2021.
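The growth figures above can be cross-checked with a few lines of arithmetic. The Python sketch below assumes the five-year CAGR runs from 2016 to 2021, and it derives the 2016 base from the stated 27% growth; that base figure is an inference, not a number quoted by 451 Research.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


revenue_2017 = 28.1   # $ billion, from the text
growth_2017 = 0.27    # 27% growth over 2016, from the text
revenue_2021 = 53.3   # $ billion, from the text

# Assumption: back out the 2016 base from the 2017 figure and growth rate.
revenue_2016 = revenue_2017 / (1 + growth_2017)

rate = cagr(revenue_2016, revenue_2021, years=5)

print(f"Implied 2016 revenue: ${revenue_2016:.1f} billion")
print(f"Implied 2016-2021 CAGR: {rate:.1%}")
```

Under that assumption the implied rate comes out at roughly 19%, which is consistent with the figure quoted in the report.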

The report examines revenue generated by 451 global cloud service providers across infrastructure as a service (IaaS) and platform as a service (PaaS), as well as infrastructure software as a service (ISaaS), which includes IT management as a service and SaaS storage (online backup/recovery and cloud archiving).

The report predicts that IaaS will account for 57% of cloud computing as a service revenue in 2017.

451 Research analysts forecast that ISaaS will see the fastest growth through 2021 with a 21% CAGR, while Integration PaaS will be the fastest growth sector within the PaaS marketplace with a five-year CAGR of 27%.

70% of organizations surveyed have a Digital Transformation strategy, but only 10% have a full deployment plan.

HCL Technologies has released the findings of an independent research study of senior business and technology decision-makers regarding digital transformation at large global enterprises. The global CXO survey highlights a wide gap between strategy and execution in organizations’ digital transformation initiatives. These findings come at a time when digital transformation has emerged as a defining strategy for modern global enterprises.

Will it be possible, at some time in the not-too-distant future, for enterprises, colocation companies and cloud service providers to dispense with all the heavy infrastructural gear of the mission-critical datacentre and operate with lightweight distributed IT? How feasible is it to rely on emerging technologies that dynamically replicate or shift workloads and traffic whenever a failure looms?

By Andy Lawrence, Executive Director at Uptime Institute Research.

This prospect has been tantalising many in IT for a decade or more, and for a few, it's a reality – of sorts. Big cloud service providers, in particular, have long boasted they can rapidly switch traffic between sites when problems arise, and so they build datacentres that are optimised for cost, not availability. In their environments, they say, developers needn't care about failures. That is all taken care of.

Meanwhile, some operators and enterprises have replaced their expensive and mostly dormant DR sites with subscriptions to cloud services; others have replicated their loads across a distributed fabric of sites that are always active, and which can fail without great consequence.

All of this points to a potentially big and disruptive change in the areas of physical infrastructure, datacentres, business continuity and risk management. But as ever, the hype can screen the reality: research conducted by 451 Research and Uptime Institute suggests that although distributed resiliency is likely to be an increasingly used, and even dominant, architecture in the years ahead, it is also proving to be complex and demanding.

The engineering diligence that is so key at the mission-critical infrastructure layer doesn't map easily onto the web of services that are the foundation of modern architectures.

Our research identified four levels of resiliency. These are

These are not necessarily alternatives – prudent CIOs will likely find themselves using some or all of these approaches. Our study found that each of these approaches introduces new layers of complexity, as well as the promise of higher availability and greater efficiency.

Critically, the report suggests that for new cloud-native applications, it is trivial to take full advantage of distributed resiliency capabilities – not because resiliency is trivial, but because the cloud provider has made the investment in redundancy, replication, load management and distributed data management.

But for most existing applications, including many that are cloud-optimised rather than cloud-native, it is much more complicated. Some applications need rewriting, some will never transfer across, and several factors such as cost, compliance, transparency, skills, latency and interdependencies add complexity to the decision. For these reasons, complicated hybrid architectures will prevail for many years.

There are more than 20 technologies that may be involved in building truly or partially distributed resiliency architectures.

Among our key findings are:

The report concludes that the use of distributed resiliency and a complex, hybrid web of datacentres, distributed applications, and outsourcing services and partners will be problematic for executives seeking good visibility and governance of risk. Outsourcing can mean that cloud providers have power without responsibility, while CIOs have responsibility without power. This is creating a need for better governance, transparency, auditing and accountability findings.

How to protect and secure your business data while successfully employing business collaboration in The Cloud.

By Jessica Woods, Product Manager at Timico, a Managed Cloud Service Provider.

Collaboration is the buzzword in business today and high on the topical agenda. The market for better business communications has shifted dramatically in recent years, thanks to advancements in technology. Collaboration tools and platforms have greatly improved, allowing us to be more productive and enjoy a more flexible way of working.

Research and advisory company Gartner predicts: “The changing nature of work and people’s digitally intermediated perceptions of connected spaces mean IT leaders must reconceptualise the 2025 workplace as a smart, adaptable environment that conforms to workers’ contexts and evolved job requirements.”

The benefits of heightened collaboration within a company are endless, especially when it comes to enhancing the customer experience. Collaborative features such as instant messaging, video conferencing, screen sharing, Voice over IP, and shared cloud document management allow a business to communicate more effectively between locations, departments (virtual or otherwise), and people.

But effective collaboration can also flag major security concerns, especially as many data breaches within organisations are caused internally. And with the General Data Protection Regulation (GDPR) set to come into effect in May 2018, it’s imperative for businesses to ensure that their collaborative systems are robust. The more we collaborate, the more we share and so data security is a business-critical priority.

As employees and organisations are seeking ways to collaborate more and more, how can you fully harness the power of collaboration in the cloud while being fully protected?

1. Invest in the right software

When implementing new technology, security remains a key priority. New collaborative tools feature secure and safe document handling with security layers to keep your business safe, and the ability to manage access permissions for safe sharing and handling of documents from remote or out-of-office locations. This allows an organisation to take advantage of new collaborative technology without the risk of sensitive data or important information being wrongly shared.

The laptop your best sales exec misplaced on the tube after a busy day of client meetings? A remote wipe and user access reset will avoid you worrying about who has access to that data now. It won’t be cheap but it will be worth it. Select collaboration tools carefully, ones that promise optimal security and that are user friendly.

2. Protect your information

To protect company information, data can be encrypted so that only authorised parties can access it. In addition to this, data can be assigned usage rights, and be given embedded classifications that follow the data no matter where it goes. This ensures that shared corporate information never reaches the wrong hands and is constantly protected. Data security starts with 256-bit file encryption. File encryption is required for all files that are stored on the vendor's servers and in transit, and acts as a protective shell around a file so that a hacker cannot view the contents if they intercept it at any point.

3. Define access

In order to give a business complete control, a company can define specific access controls using an access management system ranging from the main datacentre to the cloud. Through this, access can be given at different levels of the company, or certain permissions can be given for important documents. For example, some team members may be able to edit, but not share documents, or some may be able to do both. This feature is great for complete control over what happens to important data.
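As a minimal sketch of the edit-but-not-share idea, the Python snippet below models permissions as combinable flags attached to roles. The role names, the permission set and the `is_allowed` helper are all hypothetical and purely illustrative; a real access management product will expose its own model and API.

```python
from enum import Flag, auto


class Permission(Flag):
    """Illustrative document permissions (assumed set, not a vendor's model)."""
    VIEW = auto()
    EDIT = auto()
    SHARE = auto()


# Hypothetical roles: an editor can change a document but not share it.
ROLES = {
    "viewer": Permission.VIEW,
    "editor": Permission.VIEW | Permission.EDIT,
    "owner": Permission.VIEW | Permission.EDIT | Permission.SHARE,
}


def is_allowed(role: str, action: Permission) -> bool:
    """Return True only if the role has been granted every flag in `action`."""
    granted = ROLES.get(role, Permission(0))  # unknown roles get no access
    return (granted & action) == action


for role in ("viewer", "editor", "owner"):
    print(role,
          "edit:", is_allowed(role, Permission.EDIT),
          "share:", is_allowed(role, Permission.SHARE))
```

Denying by default, so that an unknown role receives no permissions at all, is one reasonable design choice here; it means a misconfigured account fails closed rather than open.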

4. Direct management, on the fly

A business can secure its applications and data in one simple click. With collaborative security controls, security features can be added to documents no matter where they are, and they take effect instantly. This means if something needs to be changed quickly, a business is fully capable of doing so.

5. Gain visibility

A business can use data protection to track shared data, and see who accesses important information, and where it is sent to. This means that access can be given or taken away easily, with complete visibility for the business.

6. Understand the benefits of using an MCSP

Working with the right MCSP (Managed Cloud Service Provider) will help optimise use of collaborative communications and increase business efficiency, allowing you to focus on your customers. The right MCSP will provide dedicated servers in a highly secure data centre, ensure that you stay on top of legislation with fully protected and robust technologies and help manage client and team activity in a secure, shared and collaborative space.

7. Educate your team

Understanding data security and ensuring software maintains the highest level of data protection is imperative. Therefore, it is essential to ensure that all active users in the organisation are regularly trained on how to keep data safe while enjoying the full benefits of collaboration, and that they understand that safe and secure practices must be adhered to, such as password changes and social media processes.

Through continual learning, development and education, organisations can find the right balance between meaningful collaboration in the workforce through the use of technologies and the necessary business requirements of maintaining IT security, data, privacy, and business sensitive information.

By Ian Bitterlin, Consulting Engineer & Visiting Professor, Leeds University

The hype-cycle is a curve that looks like the outline of a dromedary camel’s back and neck, with time as the horizontal axis and hype as the vertical axis. A new product idea starts just behind the base of the hump and the hype surrounding it quickly rises to the peak of the hump. At that stage reality starts to impact on the hype – maybe the claims for greatness are seen through as just a fad or the cost of adoption looks too high – and the hype starts to reduce, quickly sliding down the hump to reach a trough at the nape of the camel’s neck.

At this stage in a product’s life only two things can happen: it either fails to regain the hype, stops being talked about and disappears forever (sometimes to return when enough of the potential market has forgotten about its first incarnation), or the product hype recovers and the product succeeds – this time in a steady adoption stage following the hype-cycle curve ever upward.

I think that in 2017 we have seen two products in the data centre arena that are at two different positions on the hype-cycle and deserve comment.

First, we have Data Centre Infrastructure Management, DCIM. Touted to be a ‘single pane of glass into the data centre environment’ it can be little more than the combination of a BMS, EMS and an asset manager. A single screen with pretty pictures, dials and whistles capable of storing and processing vast quantities of data, eventually issuing charts and information that enable the user to make decisions. In terms of the hype-cycle it looks like DCIM is well over the initial hump and is rapidly reaching the critical trough – if not already there.

Promising everything at first, including the time saved by not having to manage assets on spreadsheets and by operating central alarm and event monitoring, DCIM reached the peak of the hype quickly, pushed along by many vendors who had invested great big chunks of cash in software development. We ended up with two distinct DNA sources. One was those OEMs that came from BMS and added an IT hardware asset manager; the other was the inverse, the IT asset manager that had BMS functionality added to it. The vendors paid the market reporters to review their products and we had a whole string of ‘Best DCIM in the World’ gongs issued. But very few customers stepped up in relation to the investment made by the OEMs – so the hype machine was fed more hype.

When reality stepped in, the product hype took a sharp downturn. That reality included realization of the high implementation cost, intangible benefits (DCIM doesn’t ‘manage’, it only reports), incalculable Return-on-Investment and a growing realization that in general you still needed to install a functional BMS with a degree of system control. DCIM didn’t appear to replace anything and quickly became seen as a ‘very-nice-to-have’ but a real luxury.

It also (still) has one commercial problem which doesn’t aid its adoption – no one has worked out who to sell it to and when. In the project timeline of a data centre all of the things that a BMS controls (power & cooling etc.) are procured up-front via main and specialist contractors, who don’t want to talk about DCIM because it is seen as part of the ICT system and infrastructure. By the time the data centre is finished, the IT system integrators don’t want to talk about DCIM as it isn’t in the budget and is seen as part of the M&E infrastructure. The target market and the ideal time to hit it have been new territory for newly converted BMS vendors to get their heads and sales budgets around.

So, will DCIM pull out of the hype-trough and climb the general adoption path? Possibly, but it is not likely unless the costs are substantially lower than today, OR a return-on-investment is proven in terms of cash, not reports. The cost may have to be reduced by more than 50%, probably more like 60%, which will lead to standardized products and plenty of vendor/market consolidation. A meaningful RoI will be even tougher to create than the market price reduction will be for the vendors to swallow.

There is one chance that might save DCIM or reincarnate it as IDCIM (Intelligent DCI Manager), where the data centre is actually controlled based on the ICT load that is flowing in and out, but that will take another chunk of investors’ cash.

The second product that is on the hype-cycle is Lithium Ion battery cells. It hasn’t quite reached the top of the hump, but the hype is everywhere. So, what will happen at the peak? Well, so far, the hype is based on product attributes that are either not useful, are distorted versions of the reality or even hidden from view by a smoke screen:

So, will Lithium-ion replace VRLA? I doubt it very much. The price difference warrants neither the risk of explosion nor the lack of proven reliability. Yes, they will find huge applicability in cars and consumer goods and probably small UPS <10kVA but, I think, they will slide down the large UPS hype-cycle and not recover.

Steve Hone CEO Data Centre Alliance and Guy Willner, CEO of IXcellerate talk Personal Data Protection, Information Laws and GDPR

Steve Hone: 1. It is believed that data privacy is one of the hottest topics in all industries across the globe. Referring to international news, we can see that the US and Europe have different ideas about data and its privacy. Why do we need it and how can it be realised and localised?

Guy Willner: The answer is simple: each country, each government is responsible to its citizens. For the personal information of its citizens, it must put some regulation or control in place so that that data doesn’t go to the wrong people. If that information is sent out of the country, then the government doesn’t have control over what is happening, so there is an element of obligation from each jurisdiction to take responsibility for the security of its citizens and information.

2. How does data privacy and sovereignty influence international business across the world?

Until recently we thought that globalisation of the Internet meant providing a global service from one particular location. What we see now with Russia’s information law, GDPR in Europe or the Australian and German regulations, is that all information providers have to adopt and establish platforms within each country for the two hundred or so countries around the world. There are already up to 25 countries that have particular local data regulations and that will continue to increase. It's something that the larger global information providers and anyone starting a business on a global level now need to include in their technical and legal compliance strategy.

3. The media is talking about General Data Protection Regulation (GDPR), which is coming into force in May 2018. This is expected to create a new set of regulations, which are aimed to protect EU citizens from privacy and data breaches. What do you think about it?

The European Union with its 27 countries is beginning to flex its muscles and to have some influence over what happens to this information, so this is a good example. It seems that non-compliance with GDPR is many times more expensive than the equivalent in Russia. In the European Union, I believe it can cost you 4% of the global turnover of the company or 20 million euros. So it’s a large stake that the European Union is putting in place and no doubt it will be fantastic for the IT industry as it works out solutions to implement this.
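To put the figures in that answer into numbers: under Article 83(5) of the GDPR, the ceiling for the most serious infringements is the higher of 20 million euros or 4% of total worldwide annual turnover. The short Python sketch below computes only that ceiling. The function name and the example turnover figures are hypothetical, and real fines are set case by case by the regulators.

```python
def gdpr_max_fine_eur(global_turnover_eur: float) -> float:
    """Ceiling of a top-tier GDPR fine: the higher of EUR 20m or 4% of turnover."""
    flat_cap_eur = 20_000_000
    turnover_cap_eur = 0.04 * global_turnover_eur
    return max(flat_cap_eur, turnover_cap_eur)


# Hypothetical companies: one with EUR 100m turnover, one with EUR 2bn.
for turnover in (100_000_000, 2_000_000_000):
    print(f"Turnover EUR {turnover:,}: ceiling EUR {gdpr_max_fine_eur(turnover):,.0f}")
```

For the smaller company the flat 20 million euro cap applies; for the larger one the 4% rule takes over.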

4. Having experience with IXcellerate and Equinix, what role do Data Centres play in the world currently?

Regarding the information law or general data sovereignty, the data centre is the foundation on which that is built, because the data is going to be stored physically somewhere. When you are looking at the optimised strategy, you are not going to put that information under the desk in the office - you need to put it in a highly secured, well-managed facility. Hence, the data centre is a natural home for this type of information, and if we look at the Russian market, there are about five or six credible data centre companies, they are all accepting many new customers who are coming in to localise their data in Russia. It is a big part of the data centre industry now.

5. How do you (IXcellerate) guarantee security?

At IXcellerate we are looking after our customers’ racks, we do not provide the service, hardware, switching, etc., we are looking after our clients’ service, hardware, and switching. As a result, the most important aspect for us is physical security, which means we have five levels of protection[1] before you get real access to the server rack. We also have a lot of preventive-defensive measures regarding the monitoring of movements on or near the sites, the identity checks, etc.

With five levels of physical security, we can offer assurance to larger customers.

We have systems like PCI DSS, a unique credit card security standard against which we are assessed and checked each year by an international certifying body. The second thing is that security is not just about preventing people getting to your information; it is ensuring a continuous flow of information within the data centre. IXcellerate has multiple networks, so if one system fails we can always move to the next. We have 37 carriers connected to our Moscow data centre, meaning a maximum level of connectivity regarding network and information flow.

6. Why should International Companies choose IXcellerate in Moscow?

IXcellerate is a continuation of data centre experience that started in 1998. There is a lot of experience and expertise that has been built up over the years, culminating in the creation of IXcellerate. The team is experienced and has been dealing with banks, stock exchanges, global Internet players, global media companies and global retail companies for many years. We understand how to treat these companies and what their priorities are, how we can help and assist them in their growth. We are the “GO TO” data centre company in Russia for international companies. With a multilingual team, we communicate with our international customers in a variety of languages including Japanese, Chinese, German and French, as well as Russian and English.

7. How do you ensure that businesses feel comfortable, being your customers? What’s your approach to customer satisfaction?

Annually we survey our entire customer base, to ensure we understand their views. This was completed again in 2017, and we were pleased to have a very high satisfaction level (more than 90%).

We were also named finalists in the DCS Excellence in Service Award in 2017.

Our customers claim that each IXcellerate employee provides excellent assistance and the duty shift response time is within ten minutes, which is several times quicker than the market average. As I’ve said, we’ve been dealing with very sophisticated customers for many years, we have a lot of respect for our clients, and we like to continuously keep in touch with them, so we are aware of any issues they might have.

Within the Moscow One campus, we have specific areas devoted to customer experience onsite. Customers are able to listen to music, drink coffee and relax in the open air. We maintain a high level of customer satisfaction by listening and responding to customer needs.

8. Can you please let the readers know your top three to-do list items for International Business?

Regarding the data sovereignty, the top three items will be:

1. Evaluate where you are currently today.

2. Evaluate what new regulations are coming into those specific countries.

3. Formulate a specific plan to work towards satisfying those requirements because the requirements are not going to disappear.

IXcellerate operates the leading carrier-neutral Data Centres in Moscow (Russia). IXcellerate offers pure-play co-location designed to meet the standards of financial institutions, multinational corporations, international carriers and major content providers. The business aim is to deliver top “European” service level to all customers in Russia. The Moscow One Data Centre, designed and operated at Tier 3 standards, sits at a prime location in Moscow, where power is available in abundance both now and in future. The facility offers unique hosting solutions and tailor-made colocation with respective security and low latency links provided to and from other global markets.

[1] The data centre features a multi-level fire safety system:

• Level 1: Keeping the data centre tidy.

• Level 2: Regular inspection of the data centre by maintenance staff (4 times a day per specially assigned roadmaps)

• Level 3: VESDA LaserPLUS, a smoke detection system monitoring the air in the server rooms and providing early warning. It detects fire hazard very early, before the emergence of smoke or fire.

• Level 4: An automatic fire notification system and fire alarm system.

• Level 5: In case of fire, response by data centre staff using basic tools (handheld fire extinguishers) and HI-FOG fire hoses.

• Level 6: Automatic fire suppression with HI-FOG.

Steve Hone CEO, The DCA

As 2017 ends there is a great deal to reflect on and look forward to in the year ahead.

Brexit has dominated the news in 2017 and the uncertainty surrounding this has continued to cause both business and investment challenges. Having said that, overall the data centre sector continues to grow and mature as it attempts to keep pace with the insatiable demand for digital services.

This fast pace of growth is not without its challenges and growing pains, which was self-evident following several rather high-profile DC related outages which, when combined, grounded more than 1200 flights and stranded over 100,000 passengers. Although we all hope that valuable lessons were learnt to prevent a repeat performance, it has equally served to remind us all just what a mission critical role data centres play in supporting the digital services that we would all struggle to live without.

On that note I wanted to take the opportunity to congratulate Simon Allen on a fantastic job launching The Data Centre Incident Reporting Network. DCIRN is designed to help prevent what I would term “Groundhog Day”. We seem to be guilty of making the same mistakes time and time again rather than learning from each other; after all, prevention is always better than cure. This is a great initiative which has the full support of the DCA Trade Association and we look forward to working more closely with DCIRN in the year ahead.

2017 also saw the data centre trade association support a record number of events. In addition to being global event partners for Data Centre World the DCA has helped to organise, promote and support 23 conferences and workshops right across Europe and the Far East. I am equally pleased to confirm that 2017 saw the DCA form a Strategic Partnership with Westminster Forum Projects providing members with direct access to senior level select conferences designed to inform and guide policy both within the UK Government and EU on key topics which have an impact on our sector.

To be outstanding you first need to stand out from the crowd and I am pleased to report that thanks to collaborative support of all its Media Partners the DCA continues to provide a platform where trusted knowledge and insight can be both shared and gained. It is important to note however that none of this would be possible without the continued support of all the members, so thank you to each member who has submitted thought leadership content over the past year and to our very own Amanda McFarlane (DCA Marketing Manager) who personally reviews all your submissions before release.

Research and development continued to be a strong focus for the DCA throughout 2017 with representation offered on all relevant International Standards development groups, The EU Code of Conduct, EN50600 and EMAS. As an EU endorsed Horizon 2020 partner The DCA Trade Association continued to support the EURECA project which has been extended by the EU Commission until 2018 and the H2020-MSCA-RISE project which is a collaboration between the EU and China which runs for another two years.

Although I will leave it up to my esteemed colleagues to offer their predictions for what they think 2018 has in store for us all, I wanted to share some of the plans we have for next year; hopefully you will have a new members portal to play with in Q1, new member support services, more regional workshops and new tailored executive briefings designed to provide both insight and influence when it comes to key issues of focus and importance.

Depending on when you read this issue I hope you manage(d) to all have a well-earned rest over the festive season and I look forward to working with you all in the year ahead.

To find out more about The DCA, please contact us or visit our website.

W: www.datacentrealliance.org

T - +44(0)845 873 4587

E - info@datacentrealliance.org

To submit an article please contact Amanda McFarlane

T - +44(0)845 873 4587

John Booth is the Managing Director of Carbon3IT Ltd and chairs DCA Energy Efficiency Steering Committee

Data Centre energy efficiency seems to be back on many operators’ agendas; not that it really went away, but it is good to see it back.

We’ve always known that there are energy efficiency savings to be made in this space, but this time we’re going to look at it from a UK PLC perspective.

I’ve been pondering on the size of the opportunity and what steps operators can take to realise energy efficiency savings over the past few weeks and I was suddenly struck that no one had any real data on how many data centres there actually are in the UK and what their corresponding energy consumption is, so I’ve been conducting some research in order to establish a baseline.

Calculating the actual energy consumption used by data centres in the UK is a very difficult task, and there are a host of reasons for this; however, some information has fallen into my hands that may prove useful. I can’t divulge the source at the present time and it could be a wild over- or under-estimate, but it is being used as source material for an EU project and thus the results and final report will probably be used for official purposes.

The report states that in the UK there are probably around 11,500 “enterprise” data centres, 450 colocation and 25 Managed Service Provider (MSP) data centres.

The last two numbers in my opinion are probably wrong, but this isn’t a gut feel, this is based upon information published by techUK in their “Climate Change Agreement (CCA) for Data Centres, Target Period 2: Report on findings” released in September 2017. That document covers 129 facilities from 57 target units, representing 42 companies. However, we must assume that there are some colocation datacentres that are not in the CCA, perhaps because they only have one facility or that the administration requirements outweigh any financial benefits they may receive.

The total energy used by the organisations within the CCA for the 2nd target period was 2.573 TWh per year, averaged over the two-year target period. There has been an increase of 0.4 TWh over the previous target period, which reflects the growth of the number of participants in the scheme and their activities.

Let’s look at CCA PUE, the PUE in the base year was 1.95 and the PUE in the target period was 1.80, an improvement but STILL far above where I would expect it to be.

The first big problem is that we have no real clue as to how many data centres there are in the UK. By the term “data centres” I use the EUCOC definition: “the term ‘data centres’ includes all buildings, facilities and rooms which contain enterprise servers, server communication equipment, cooling equipment and power equipment, and provide some form of data service (e.g. large scale mission critical facilities all the way down to small server rooms located in office buildings).” There is no national licensing requirement or regulatory regime associated with data centres and reviews of government websites don’t give us much to work on, so I’m afraid that it’s estimates; good estimates, but estimates nevertheless. We could use the 10,500 enterprise data centre figure mentioned in the report above, but as this was conducted by a data centre research company, we have to assume under-reporting. Why? Because the questions asked were probably targeted on the words “data centres” and many users do not consider their server, machine or IT rooms to be data centres and thus they go unreported.

My approach is to analyse businesses, since in the digital world almost every business has a digital footprint.

In 2016, there were 5.5 million businesses in the UK, but it’s safe to assume that not all of them have their own server room: 5.3 million are microbusinesses employing fewer than 10 people, and some of these will be using cloud or are so small that the business operates with one computer.

There are 33,000 businesses employing 50-249 employees and 7,000 that employ over 250 employees, thus 40,000 businesses should have some sort of IT estate, and thus would require some sort of central computer room, server room or data centre. The configurations of these facilities will depend on the growth patterns, financing options and level of understanding within the business as to the criticality of the data contained within them.

For the purposes of this article, I’m going to leave it there. Yes, I considered the possibility that some businesses may have branch networks, but in my experience these are likely to be a mini hub, possibly 1-2 servers and a small network switch located in the manager’s office, so not worthy of further analysis and in any case they do not meet our EUCOC criteria.

So, approximately 40,000 small computer rooms, server rooms or data centres may fall into our definition. Let’s add another 40,000 for non-business IT, including the Government (all 26 central government departments and their local offices), local authorities, the blue lights (NHS, Police, Ambulance and Fire services) and finally universities (147), schools (24,372) and other educational establishments. So, 80,000 in all (approximately).

We still have no idea how big or small these facilities are, so I’m going to make an assumption that most of these 80,000 are 2-5 cabinets or less and have 50 items of servers/storage/network and transmission systems each. The average server will draw between 500 and 1,000 watts, according to Ehow.com. If the average draw is 850 watts, then over 24 hours that equals 20,400 watt-hours, or 20.4 kilowatt-hours (kWh), a day. Multiply that by 365 days a year for 7,446 kWh per year.

Networking and storage average out at about 250 W per item; let’s assume that half the IT is servers, and half networking/storage.

We have 50 items, so that is 25 servers at 7,446 kWh each plus 25 networking/storage items at 2,190 kWh each, and the total IT energy consumption is going to be 240,900 kWh per year.

We have to assume that these facilities are cooled….badly, so let us revert to the old rule that 1 W of IT needs 1 W of cooling and we get 240,900 kWh of cooling energy consumption, giving us a grand total of 481,800 kWh for one, yes, just one site. Commercial electricity rates are anywhere between 8p and 15p per kWh, so…taking an average electricity cost of 12p per kWh:

| Energy Cost per kWh | Consumption | Total |
| --- | --- | --- |
| 12p | 481,800 kWh | £57,816.00 |
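The per-site arithmetic above can be sketched in a few lines of Python. This is only an illustration of the article's own assumptions (25 servers at 850 W, 25 networking/storage items at 250 W, 1 W of cooling per 1 W of IT, and 12p per kWh). The function and parameter names are made up for the example.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def site_annual_kwh(servers=25, server_watts=850,
                    other_items=25, other_watts=250,
                    cooling_watts_per_it_watt=1.0):
    """Return (IT kWh, total kWh) per year for one small server room."""
    it_watts = servers * server_watts + other_items * other_watts
    it_kwh = it_watts * HOURS_PER_YEAR / 1000  # watt-hours -> kWh
    total_kwh = it_kwh * (1 + cooling_watts_per_it_watt)
    return it_kwh, total_kwh


def annual_cost_gbp(kwh, pence_per_kwh=12):
    """Annual electricity cost in pounds at a flat tariff in pence per kWh."""
    return kwh * pence_per_kwh / 100


it_kwh, total_kwh = site_annual_kwh()
print(f"IT load:   {it_kwh:,.0f} kWh/year")
print(f"IT + cool: {total_kwh:,.0f} kWh/year")
print(f"Cost:      £{annual_cost_gbp(total_kwh):,.2f} at 12p/kWh")
```

Changing the tariff argument to 8 or 15 shows how sensitive the bill is to the rate actually paid.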

And then if we multiply by our 80,000 locations we get to…..

| Energy Cost per kWh | Total Number of Data Centres | Average Energy Cost per annum | Total Cost | Average Energy Consumption for 1 data centre | Total UK PLC Data Centre Energy Consumption |
| --- | --- | --- | --- | --- | --- |
| 12p | 80,000 | £57,816.00 | £4,625,280,000.00 | 481,800 kWh | 38.54 TWh |

Yes, you are reading those numbers right: running the 80,000 server rooms is costing the UK anywhere between roughly £3 billion and £6 billion per annum (depending on actual tariff), or about £4.6 billion at 12p per kWh. And they are using 38.54 TWh, which is 11.37% of the electricity generated in the UK in 2016.

If we add the CCA calculation for colocation sites, then we have a total of 41.11 TWh, representing 12.13% of electricity generated in the UK.
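As a rough check on the national totals, the sketch below scales the per-site figure of 481,800 kWh to 80,000 sites, adds the 2.573 TWh reported for the CCA, and prices the server-room energy at the 8p, 12p and 15p tariffs mentioned earlier. All inputs are the article's estimates; the constant and function names are illustrative only.

```python
SITES = 80_000
KWH_PER_SITE = 481_800   # IT plus cooling, per site per year
CCA_TWH = 2.573          # colocation energy reported under the CCA
KWH_PER_TWH = 1_000_000_000


def national_twh(sites=SITES, kwh_per_site=KWH_PER_SITE):
    """Total annual consumption of all sites, in TWh."""
    return sites * kwh_per_site / KWH_PER_TWH


def national_cost_gbp(pence_per_kwh, sites=SITES, kwh_per_site=KWH_PER_SITE):
    """Total annual electricity bill of all sites, in pounds."""
    return sites * kwh_per_site * pence_per_kwh / 100


server_room_twh = national_twh()
print(f"Server rooms: {server_room_twh:.2f} TWh")
print(f"With CCA:     {server_room_twh + CCA_TWH:.2f} TWh")
for tariff in (8, 12, 15):
    print(f"At {tariff}p/kWh: £{national_cost_gbp(tariff) / 1e9:.2f} billion")
```

On these inputs the bill at the two ends of the tariff range comes out at roughly £3.1 billion and £5.8 billion.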

That is a lot of juice!

So, I’ll go through how we can grab this pot of energy efficiency gold!

Firstly, a little more clarification. I attended the DCD Zettastructure in early November and spoke to a lot of people about the figures above. Most of them understood my criteria and the rationale; some said that there was NO way that there were 80,000 small server rooms in the UK and that, as electricity use had been declining, my figures didn’t stack up. Wow, cue more research.

So, we know from the techUK report that there are 129 facilities reporting for the CCA. I cross-referenced those with other data and found that there are 256 sites listed; many of these will be duplicated with the techUK report but there is still a significant variance.

I’m going to stand by the overall figure of 80,000 server rooms and the amount of energy consumed, as I believe that this number is probably also under-reported by approximately 10,000-20,000 sites. These sites are not conventional “server rooms” and include rooms like rail signal controls, telco Points of Presence (PoPs) and mobile phone base stations (approximately 23,000).

How much energy can be saved?

From studies undertaken over the last 5 years or so, the minimum you can expect will be somewhere in the region of 15-25%; this would be by the application of some of the basic best practices (more later). Some organisations may be able to achieve up to 70%, but this would be a very radical approach and they would need to be willing to move to a full cultural and strategic change to the entire organisation. From our figures above, you could reduce your server room energy bills by around £10,000-£25,000. This may not sound very much, but multiply that by 80,000 and you get a UK PLC saving of somewhere between £800 million and £2 billion a year. If consumption were to reduce by this amount then it may also mean that one or two fewer power stations need to be built. Seeing that we (UK taxpayers) are underwriting the construction, this could reduce energy bills even more!

Firstly, the best option is to adopt the EU Code of Conduct for Data Centres (Energy Efficiency), whose definition reads: “the term ‘data centres’ includes all buildings, facilities and rooms which contain enterprise servers, server communication equipment, cooling equipment and power equipment, and provide some form of data service (e.g. large scale mission critical facilities all the way down to small server rooms located in office buildings).”
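To see what those percentages mean in pounds, here is a minimal sketch that applies 15%, 25% and 70% reductions to the per-site bill of £57,816 estimated earlier and scales the result to 80,000 sites. The scenario labels and function name are invented for the example, and it assumes, simplistically, that every site achieves the same saving.

```python
SITE_BILL_GBP = 57_816   # estimated annual bill for one site at 12p/kWh
SITES = 80_000


def saving_gbp(annual_bill_gbp, saving_percent):
    """Annual saving in pounds for a given percentage reduction."""
    return annual_bill_gbp * saving_percent / 100


scenarios = (("basic practices, low", 15),
             ("basic practices, high", 25),
             ("radical change", 70))

for label, percent in scenarios:
    per_site = saving_gbp(SITE_BILL_GBP, percent)
    national = per_site * SITES
    print(f"{label:>22}: £{per_site:,.0f} per site, "
          f"£{national / 1e6:,.0f} million nationally")
```

The rounder per-site range quoted in the text sits between the basic and the radical scenarios.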

The EUCOC has 153 best practices that cover Management, IT equipment, Cooling, Power Systems, Design, and finally Monitoring and Measurement. The best practice guide is updated annually and is free to download from https://ec.europa.eu/jrc/en/energy-efficiency/code-conduct/datacentres.

It really is all you need to know about how to optimise your facility.

Secondly, using the EUCOC Section 9 “Monitoring and measurement”, get some basic data, how much energy does your IT use, how much energy is used by providing cooling and UPS etc. I almost guarantee that most small server rooms will not have even the most basic measuring equipment, in that case buy some clamp meters and take readings over a month. There is loads of information online on what and where to measure.

We’re looking for 2 pieces of data, first the actual amount of energy and thus the cost being consumed by the IT estate, for too long organisations have had zero visibility of this figure, some basic measurement will provide this actual cost, and then we can start to consider our energy saving options.

The second figure is the PUE, Power Usage Effectiveness. This is the total amount of energy consumed by the entire facility (that’s everything used for the room: cooling, UPS, lighting, security/fire and a portion of the room used by the IT maintenance personnel, their IT kit etc.) divided by the IT load.

PUE is an “improvement” metric, you calculate your baseline PUE then re-calculate once you have taken some improvement actions, it should be lower with the result of lower energy bills. Once the initial PUE is calculated, and it’s likely to be somewhere in the region of 2-3 you can begin to think about how to reduce energy.
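The PUE calculation itself is a single division. The sketch below uses the per-site estimates from earlier in this article (240,900 kWh of IT load and 481,800 kWh in total) as the baseline, and then shows what the same IT load would consume at a PUE of 1.80, the CCA target-period figure quoted above. Function names are illustrative, and a real baseline should come from metered readings rather than these estimates.

```python
def pue(total_facility_kwh, it_kwh):
    """Power Usage Effectiveness: total facility energy divided by IT energy."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return total_facility_kwh / it_kwh


def facility_kwh_at(pue_value, it_kwh):
    """Total facility energy implied by a given PUE and IT load."""
    return pue_value * it_kwh


IT_KWH = 240_900        # estimated IT load for one site, per year
BASELINE_KWH = 481_800  # IT plus cooling under the 1 W per 1 W assumption

baseline_pue = pue(BASELINE_KWH, IT_KWH)
improved_kwh = facility_kwh_at(1.80, IT_KWH)
saved_kwh = BASELINE_KWH - improved_kwh

print(f"Baseline PUE: {baseline_pue:.2f}")
print(f"Energy at PUE 1.80: {improved_kwh:,.0f} kWh/year")
print(f"Saving: {saved_kwh:,.0f} kWh/year")
```

Re-running the same calculation after each improvement action gives the before-and-after comparison that the metric is meant for.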

The EUCOC has some information on the intention of the best practice but how to implement this may be a problem for some managers, so continue if you have some basic knowledge, but failing that, undertake some training, all of the major global data centre training providers have at least one training course on energy efficiency, and you can definitely apply the knowledge for any facility.

The EURECA project (for public sector only) will be running the last face to face training course in the New Year, please visit the www.dceureca.eu website for the event details and some online training courses.

If you’re still not sure or are private sector, then you could contact your organisation’s IT provider; if they don’t know, then contact me directly for some guidance.

For the last 9 years, everything we know about data centre server energy consumption has been estimated. Indeed my figures above are estimates, though in this case probably nearer the actual figures than most, but I am prepared to assist and organise a full UK PLC survey of all data centres/server rooms/machine rooms/PoPs/mobile phone towers, transportation signalling systems etc. if someone is prepared to pay for it. This will be a massive task and should not be underestimated: we will need to create a survey, contact 80,000-plus organisations, collate the results and analyse the data. However, the information that can be garnered will be worth its weight in energy efficiency gold.

Author: Dr. Terri Simpkin - Higher and Further Education Principal, CNet Training

Barely a week goes by without publications, industry events and think tanks declaring that skills gaps, capability shortages or talent wars are ravaging the Science, Technology, Engineering and Mathematics industries (STEM).

Indeed, the rhetoric surrounding difficulties in finding skilled labour across a range of sectors has been decades in the making[1]. It seems that while the issue is well known, resolutions are thin on the ground and what initiatives are in place are failing to keep pace with the rampant demand for people and their skills.

Indeed, attend any industry trade show or conference and any number of good, often repeated, ideas are put forward with well-meaning enthusiasm or disheartened frustration. More often than not ideas from the floor suggest educating more people, better targeted university/vocational curriculum and ‘getting into schools’. Sadly, the issue of skills and labour shortages in STEM is much more complex than these suggestions can cope with individually. If it weren’t we’d have had the situation in hand by now.

While there is any number of published reports on skills shortages across industries, the general consensus is that industry should stop suggesting that the skills gap is a future matter. It’s here now, and has been for at least a decade. A 2016 Manpower report[2] suggests that globally 40% of all employers report experiencing skills shortages, the same as in 2006.

So, what is going on and why is managing our industries out of the skills crisis so difficult? Well, let’s look at the Data Centre sector as a case study for why simple resolutions won’t cut it.

It’s not just about skills.

If it were just a matter of educating more people we’d have the situation sorted within a few years. Sadly, structural factors such as an aging workforce, a global market that makes it relatively easy for people to move across borders and traditional workplace structures are hampering efforts to get people into the data centre sector.

Retirement rates, a lack of succession planning and labour turnover contribute to a challenging landscape where the sector is unable to educate people to fill existing vacancies as well as vacancies generated by growth, innovation and market complexities. The long 'speed to competence' of traditional learning, such as undergraduate university degrees, means that capability is often obsolete before a student has even crossed the graduation stage to accept their certificate.

So too, gaps in expectations of hiring managers and recruitment staff may well be turning away people who could do the job, but are falling foul of out-dated competency frameworks, recruitment wish lists (i.e. skills demands that are unrealistic or unwarranted) and slow recruitment and selection cycles.

‘Why wouldn’t people want to work in one of the world’s most dynamic and growing sectors?’

When they don’t know it exists.

The data centre sector has an image problem insofar as it has a blurry, if perceptible, image among job seekers. It’s generally accepted that most people in the sector, particularly those who have been working in the sector for some time, have fallen into it by some mysterious stroke of good fortune.

Given that many of the industries that the data centre sector has traditionally drawn from (for example, IT, engineering, facilities management, communications) have been lamenting difficulty in finding skilled people for decades, it’s little wonder that the problem has spilled over. However, the sector is still competing for people who have a clearer picture of prospects and expectations in say, traditional mechanical engineering in industries such as manufacturing, than in the data centre sector.

Not only are we competing for skills, we’re competing with a poorly articulated employer brand (reputation) for talented people who already have a good understanding of where other jobs exist. Waiting for people to fall into a data centre by accident is not a good recruitment strategy and it demands a sector wide, globally driven branding and awareness raising campaign in a race to secure talent where our competitors are already two decades in front.

‘We just need to get into schools’

Most sectors have a schools strategy. As with the employer brand problem, the data centre sector is miles behind the curve on this one. In a crowded landscape of well developed, cohesive and supported schools strategies including manufacturing, retail, health and the emergency services, the data centre sector is barely scratching the surface.

So too, there are some more practised organisations working with a well-constructed schools agenda who do it very well; the STEM Ambassador program, for example. Sending well-intentioned data centre employees, managers or leaders into the local school to influence pupils while they are still young is a great start.

STEM Ambassadors[3] are volunteers who are supported by STEM.org.uk[4], the UK’s largest provider of education and careers support in STEM. They are charged with working with schools and colleges with the aim of achieving greater visibility of the sector.

CNet Training, for example, has a number of staff signed up as STEM Ambassadors who are given paid time off during the year to volunteer their time to promote careers in the data centre sector. Managing Director Andrew Stevens suggests it’s time the sector took a collective stance on an awareness raising campaign and invested time and energy with those who already have capability to generate interest in the sector. Working together with a consistent message will present a far stronger campaign than going it alone.

A well-articulated sector strategy that covers all levels of school engagement is required. Dr Terri Simpkin, Higher and Further Education Principal at CNet Training suggests that widening the interest in the sector has to start broad and early. “Waiting until secondary school is far too late” she says. “Building an identifiable and attractive brand starts with getting all children interested in STEM early and then tailoring a data centre specific message later in their school career. Children from non-traditional STEM backgrounds, such as those from lower socio-economic backgrounds and girls, must be engaged early as children start divesting career options as young as 6 and 7 years of age. If we lose them then, it’s unlikely we’ll see them by the time they’re making choices at 14. Addressing a STEM skills shortage is a long-term activity.”

It’s a multi-faceted, wicked problem

It’s been 20 years since Steven Hankin of McKinsey and Co[5] first coined the term ‘the war for talent’. Since then, demographic, organisational and workforce-related changes have combined to deliver a set of challenges that requires a smart, quick and consolidated response. It’s time the sector took a strategic and multifaceted approach to the problems associated with filling the vacancies that are critical to meeting growth forecasts and the organisational challenges expected in the foreseeable future.

Author: Giordano Albertazzi, president for Vertiv in Europe, Middle East & Africa

As with much of the technology sector, the data centre industry has undergone significant change in 2017. Rising data volumes, fuelled by connected devices and the Internet of Things (IoT), have continued to push infrastructures to their limit, and in many cases, to the brink of collapse.

The past 12 months will likely have been an eye opener for businesses into their readiness for the years ahead. With data expected to continue to grow at a phenomenal rate, companies should be evaluating, planning and investing in updated infrastructures to keep up with the growing demand from consumers, but bearing in mind one priority: consumers will expect a consistent, immediate and uninterrupted service throughout this change.

Looking into 2018, we at Vertiv have identified five predictions to help put businesses on the front foot and start working in the right direction to adapt to these changes:

1. Emergence of the Gen 4 Data Centre: Data centres of all shapes and sizes are increasingly relying on the edge. The once promised Gen 4 data centre – a modular, prefabricated and containerised design – will emerge in 2018 as a seamless and holistic approach to integrating edge and core. The Gen 4 data centre will elevate these new architectures beyond simple distributed networks.

This is happening with innovative architectures delivering near real-time capacity in scalable, economical modules that use optimised thermal solutions, high-density power supplies, lithium-ion batteries, and advanced power distribution units. The combined use of integrated monitoring systems will then allow hundreds or even thousands of distributed IT nodes to operate in concert, ultimately allowing organisations to add network-connected IT capacity when and where they need it.

2. Cloud Providers Go Colo: Cloud adoption is happening so fast that in many cases cloud providers can’t keep up with capacity demands. In reality, some would rather not try. They would prefer to focus on service delivery and other priorities over new data centre builds, and will turn to colocation providers to meet their capacity demands.

With their focus on efficiency and scalability, colos can meet demand quickly while driving costs downward. The proliferation of colocation facilities also allows cloud providers to choose colo partners in locations that match end-user demand, where they can operate as edge facilities. Colos are responding by provisioning portions of their data centres for cloud services or providing entire build-to-suit facilities.

3. Reconfiguring the Data Centre’s Middle Class: It’s no secret that the greatest areas of growth in the data centre market are in hyperscale facilities – typically cloud or colocation providers – and at the edge of the network. With the growth in colo and cloud resources, traditional data centre operators now have the opportunity to reimagine and reconfigure their facilities and resources that remain critical to local operations.

Organisations with multiple data centres will continue to consolidate their internal IT resources, likely transitioning what they can to the cloud or colos and downsizing to smaller facilities that leverage rapid deployment configurations and can scale quickly. These new facilities will be smaller, but also more efficient and secure, with high availability, consistent with the mission-critical nature of the data these organisations seek to protect.

In parts of the world where cloud and colo adoption is slower, hybrid cloud architectures are the expected next step, marrying more secure owned IT resources with a private or public cloud in the interest of lowering costs and managing risk.

4. High-Density (Finally) Arrives: The data centre community has been predicting a spike in rack power densities for a decade, but those increases have been incremental at best. We are seeing this change in 2018. While densities under 10 kW per rack remain the norm, mainstream deployments at 15 kW are no longer uncommon, with some facilities inching toward 25 kW.

Why now? The introduction and widespread adoption of hyper-converged computing systems is the chief driver. Colos, of course, put a premium on space in their facilities, and high rack densities can mean higher revenues. And the energy-saving advances in server and chip technologies can only delay the inevitability of high density for so long. There are reasons to believe, however, that a mainstream move toward higher densities may look more like a slow march than a sprint. Significantly higher densities can fundamentally change a data centre’s form factor – from the power infrastructure to the way organisations cool higher density environments. High-density is coming, so prepare for later in 2018 and beyond.

5. The World Reacts to the Edge: As more and more businesses shift computing to the edge of their networks, critical evaluation of the facilities housing these edge resources and the security and ownership of the data contained there is needed. This includes the physical and mechanical design, construction and security of edge facilities as well as complicated questions related to data ownership. Governments and regulatory bodies around the world increasingly will be challenged to consider and act on these issues.

Moving data around the world to the cloud or a core facility and back for analysis is too slow and cumbersome, so more and more data clusters and analytical capabilities sit on the edge – an edge that resides in different cities, states or countries than the home business. Who owns that data, and what are they allowed to do with it? Debate is ongoing, but 2018 will see those discussions advance toward action and answers.

Director Cloud & Software, UK&I at Ingram Micro, Apay Obang-Oyway, discusses the many appealing facets of cloud infrastructure.

Gartner’s report of an $11 billion increase in spending on cloud system infrastructure in the past year alone suggests the growth in cloud technology shows no sign of dwindling. This progression is often attributed to the alluring possibility of outsourcing the responsibility for infrastructure to a third party. However, cloud’s meteoric growth owes itself to a range of additional attributes which, when combined, make cloud a powerful enabler for greater business innovation and agility.

According to 2017 research from Vanson Bourne, commissioned by Ingram Micro Cloud, which surveyed 250 UK-based cloud end users from a variety of organisations across a range of key sectors, over two-thirds (68 per cent) of cloud resellers feel that it is necessary for their organisation to offer 24/7 continuous customer support on top of the cloud platform, with 60 per cent seeing support during the implementation process as crucial. The desire for competitive advantage, together with the ubiquity of information on the value of advanced technologies, is creating a need for business expertise from third parties, which end users increasingly request.

Economics will always play a key role in influencing a business when making decisions, regardless of the department within the company. Therefore, businesses will be searching for ways to save money, without compromising on quality. By optimising infrastructure and IT management responsibilities within internal teams, businesses can significantly reduce their capital expenditure, which is frequently found to place pressure on an organisation’s bottom line. Consumption-based pricing models further reward companies financially by enabling them to strip out unnecessary spending, freeing up valuable funds to be invested elsewhere within the company.

While the financial benefit is obviously a core driver for a business, cloud is also an opportunity for positive business transformation. The introduction of cloud drives business innovation across the organisation, creating greater value and an enhanced customer experience. Upkeep of IT infrastructure is a notorious drain on time and resources. If infrastructure is moved to the cloud, IT staff will find themselves with more time to innovate and to allocate their resources towards activities that create business value. The time saved gives IT departments the room they need to concentrate on engaging the business on its strategic needs and deploying the solutions that enable its systems and processes to leapfrog those of competitors. This move will shift the perception of the IT department from a cost centre whose role is to keep everything running to an innovative hub which provides real value to the business.

Vanson Bourne’s research also states that scalability is a strong factor for end user organisations, with 68 per cent of respondents stating it was something they looked for in a cloud solution. This figure provides a clear indication that those using cloud want a solution that provides the ability to innovate.

Regarding innovation, cloud experts will be looking to the future, to observe how cloud will assist the adoption and realisation of value from new technologies. Artificial intelligence (AI) is the new technology that seems to be on everyone’s mind today; despite being in its early stages, AI has already begun to show huge promise. The value of all these technologies lies in augmenting existing industries and processes with them, as an enabler for delivering greater value in less time and at less cost.

Cloud has the ability to unite the organisation to enable faster and stronger collaboration across all departments. The exciting opportunity arising from augmenting with newer technologies, such as AI, IoT and other cognitive services, can deliver fantastic business outcomes from improved processes, greater employee engagement and an enhanced customer experience. Cloud-based estates help reduce the management burden of stretching legacy systems.

DSW has found a way to differentiate itself from the competition, solving a consumer’s pain of needing a specific pair of shoes for an event. Volvo’s “Care By Volvo” plan offers drivers, many of whom are already bucking car-ownership trends of the past, trouble-free access to a car.

These are good examples of the radical new types of revenue models that solve pain in the customer experience, enabled by innovative use of technology and the Internet of Things. Sounds great, right? They are brilliant ideas, it’s true. But new business models such as these create numerous issues.

Whether it is rental shoes or cars-by-subscription, logistics take on a whole new flow. It used to be fairly easy, with the product moving in a single direction: from supplier to warehouse to store. Omni-channel made this much more complex due to different fulfilment points, but shoe rental or car subscription make it more complex still, with products moving all over the place.

Technology requirements become massively important. All of a sudden you have to be able to track a given item through the supply chain, not only along a specific route that will be very different every time, but through the reverse logistics element too.

New-style business models like these mean that business processes become so much more complex than before, and this puts pressure on the efficiency of the new, and perhaps existing, operations. To meet these needs, you will need to consider using IoT to track the product through the supply chain until it gets to the customer. You may need a customer-facing app where the customer can browse the rental product catalogue to make a request, but also to increase the length of rental or change the return location if required. And all this needs to plug into the finance, operational and logistical systems that already exist.
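To make those requirements a little more concrete, here is a minimal sketch in Python of what tracking a single rental item might look like. It is purely illustrative: the `RentalItem` class, its field names and the sample locations are hypothetical, and a real system would take its scan events from IoT tags or readers and pass changes on to the existing finance and logistics systems rather than hold them in memory.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class RentalItem:
    """One physical product (e.g. a pair of shoes), tracked individually.

    All names here are hypothetical and chosen for illustration only.
    """
    item_id: str
    due_back: date
    return_location: str
    # Ordered (location, status) events, as an IoT tag or reader might report them.
    scans: list = field(default_factory=list)

    def record_scan(self, location: str, status: str) -> None:
        """Store a tracking event; outbound and return journeys use the same log."""
        self.scans.append((location, status))

    def current_location(self):
        """Return the most recently scanned location, or None if never scanned."""
        return self.scans[-1][0] if self.scans else None

    def extend_rental(self, extra_days: int) -> None:
        """Push the due date back, as a customer-facing app might request."""
        if extra_days <= 0:
            raise ValueError("extra_days must be positive")
        self.due_back += timedelta(days=extra_days)

    def change_return_location(self, new_location: str) -> None:
        """Let the customer return the item somewhere other than planned."""
        self.return_location = new_location


# Example journey: supplier -> warehouse -> customer, then the customer
# extends the rental and chooses a different return point.
shoes = RentalItem("SHOE-001", date(2018, 3, 1), "London store")
shoes.record_scan("Supplier", "dispatched")
shoes.record_scan("Warehouse", "received")
shoes.record_scan("Customer", "delivered")
shoes.extend_rental(2)
shoes.change_return_location("Manchester store")
```

Even in this toy form, the point stands: every item carries its own history and its own return plan, which is precisely what a single-direction supply chain never had to record.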

This kind of approach is an example of a digital business – one that uses technology to enable a new business model focused on the customer and customer experience. Innovation of this kind is rarely supported by commercial off-the-shelf application software.

To borrow the words of Massimo Pezzini of Gartner: “To make customer experience, IoT, ecosystems, intelligence and IT systems work together, a digital business technology platform must effectively interconnect all these sub-platforms at scale.”

A Digital Business Platform will enable you to rent those shoes in time for the ball. And will help your favourite car company to let you use their car on subscription.