A well-known multinational pharmaceutical corporation is undergoing a modernisation programme. The company wants to move large amounts of data, about drug trials among other things, around the world in the pursuit of a cure for cancer. This data includes images from research labs across the globe.

Saïd Business School now enjoys an ongoing close relationship with Centrality’s executive team and its round-the-clock support service. The school has stated that it now has total confidence in providing an up-to-date, supported, maintained, resilient and highly available ‘world class IT service’ that its students, staff and faculty members reasonably expect and fully appreciate.

JBi regularly need flexible, high-performance hosting solutions as the foundation for their digital campaigns and services. Their clients require a combination of competitive pricing, performance, availability and security. To fill this need, JBi wanted a strategic hosting partner with whom they could build an effective, long-term partnership. This was vital for the development, implementation and live stages of client campaigns, as was the need to ensure support and service would be delivered to the highest possible standards.

Fruition Partners and ServiceNow have created an enterprise service management solution for the Travis Perkins Group, now supporting EaaS implementation for IT, HR and logistics and providing a self-service portal which offers 30,000 staff a wide range of products and support, across 20 businesses and 2,000 sites.

The above paragraphs are taken from four of the articles in this issue of DCSUK. I’m not going to tell you which ones – you’ll have to read the magazine to find them! However, I do think it’s important to remember that, for all the great content to be found in our digital magazine, much of it is often about the theory of data centres and the wider IT world. Nothing wrong with that. How else would everyone learn about new ideas and technologies if there weren’t such articles?!

However, it is often just as valuable to read about how your peers are actually implementing new solutions. You might be sceptical about the theory, but when you read about it in practice, it suddenly makes sense.

So, I’m delighted that we have some really strong case studies in DCSUK, alongside the usual high quality technology and ideas content.

Data has become one of the most valuable assets for 21st century businesses. Organizations are under constant pressure to manage the massive amounts of data in their care. As a result, managing the health of data centers is paramount to ensuring the flexibility, safety and efficiency of a data-driven organization.

A continually developing and changing entity, today's complex data center requires regular health checks that enable data center managers to keep a finger on the pulse of their facilities and maintain business continuity. A preventative rather than reactive approach is paramount to avoiding outages and minimizing downtime. Data center managers can maintain the health of data center hardware by using automated tools that perform ongoing monitoring, analytics, diagnostics and remediation. With the average data center outage costing even the most sophisticated organizations upwards of three-quarters of a million dollars, implementing a data center health management strategy is mission-critical in today's dynamic business environment.

A recent study carried out by Morar Consulting on behalf of Intel and Siemens, amongst 200 data center managers in the UK and US, reveals that nearly 1 in 10 businesses do not have a data center health management system in place, leaving them potentially exposed to outages that can cost thousands of dollars per minute of downtime.

This report summarizes the findings from a study carried out in Spring 2017, highlighting today's approaches and attitudes towards the implementation of a data center health management strategy.

Other key findings:

For businesses that do have health management systems in place, a third implemented them only once their backs were against the wall: after suffering an outage, witnessing an outage elsewhere or being pressured to do so by the C-suite.

In an era of automation, 1 in 5 data center managers are still relying on manual processes to perform jobs that could be minimized or automated through innovative software solutions.

Specialist data centre healthcare business, ABM Critical Solutions, has completed an emergency clean of a South London college’s facility within a matter of hours of being called, thus preventing any damaging outages or potential loss of data.

The team was called after a gas suppression system was activated in the server room, discharging gas and debris onto the IT equipment; a portable fire extinguisher was also accidentally set off, adding further contaminants to the live equipment.

After receiving the initial emergency call at midday, a senior operations manager was onsite within two hours. The manager completed a detailed survey of the room and equipment and put together a full emergency delivery plan with the client. A team of highly trained specialist technicians was deployed and onsite within five hours of the original call to begin the clean-up.

IT racks were micro-vacuumed and anti-statically wiped internally and externally; internals of the IT equipment were meticulously micro-vacuumed and cleaned; the room interior was cleaned to ISO 14644-1 Class 8 standards; and an indoor air quality test was completed.

Technicians worked throughout the night to restore the room to its original state as the college couldn’t afford any additional downtime with staff and students dependent on the systems to complete work the following day.

Mike Meyer, Sales Director at ABM Critical Solutions, says that thanks to his team’s quick response, the mean time to recovery (MTTR) was very low: “None of the IT equipment experienced any long-term damage thanks to our quick response and thorough clean to remove all the abrasive fire suppression materials,” he explains.

“This situation underlines the critical importance of understanding the proper fire safety equipment that should be specified within any data facility,” he adds. “Should an emergency situation such as this occur, calling in an expert like ABM as quickly as possible can prevent an accident becoming a much more serious incident.”

VIRTUS Data Centres continues its rapid expansion, announcing plans for two new adjacent facilities on a single campus near Stockley Park, West London. The new site will be amongst the most advanced in the UK and create London’s largest data centre campus.

Establishing this new mega campus further strengthens VIRTUS’ position as the largest hybrid colocation provider in the London metro area.

The two buildings on the secure eight-acre campus total 34,475m². Known as VIRTUS LONDON5 and LONDON6, they are designed to deliver 40MW of IT load and have secured power capacity to increase to 110MVA of incoming power from diverse grid connection points, future-proofing expansion for customers.

The campus is ideally situated 16 miles from central London and 7 miles from Slough, on the main fibre routes between London and Slough, providing unrivalled, hyper-efficient, flexible and massively scalable data centre space with extensive metro fibre connectivity, within the M25.

Work has started to fit out space in LONDON5 for customers who have already committed and general availability is expected in early 2018. These two new data centres will provide an additional 17,000NTM (net technical metres) of IT space and will increase VIRTUS’ portfolio in London to approximately 100MW across their six facilities in Slough, Hayes and Enfield, with the power to expand to circa 150MW on the various campuses.

Together with the recently announced LONDON3 in Slough, the new facilities keep VIRTUS at the forefront of next generation data centres. Their size and expandability will significantly increase the capacity of highly reliable, efficient, secure, scalable and interconnected data centre space available to VIRTUS customers in London.

Neil Cresswell, CEO of VIRTUS Data Centres, said, “With the hunger for connectivity and data growing exponentially, our data centres continue to play a vital role in enabling the UK and Europe’s digital economy. We work with clients across all industries, all with unique audiences and IT landscapes, but with the common need to deliver the highest levels of availability, performance and security of digital experiences. As we move with our customers into an increasingly digital future, we help them deliver high performing applications and content. We provide fast, seamless connectivity to networks and public clouds, along with the capacity for vast data storage and compute processing power, all at lower cost. This investment in LONDON5 and LONDON6 means we can grow with our customers and help them achieve their ambitions.”

Data centre and cloud services specialist Proact is set to future-proof the IT environment at the University of Gloucestershire by supporting a multi-stage infrastructure project to overhaul the entire infrastructure estate, while also providing round-the-clock Service Management to relieve the University’s IT resource of maintenance tasks. Specifically, Proact will assist with IT consolidation and transformation, and will provide an improved DR and backup approach in addition to streamlining operations.

The University was facing a number of challenges due to its ageing infrastructure and the need to refocus the team to add more business value. In particular, the University required assistance to overcome the issues caused by an antiquated backup solution, an out-of-date disaster recovery environment and a need to focus scarce resources on delivering student-facing services. In order to tackle these problems, the University of Gloucestershire called in Proact who will deploy a brand new, leading technology solution, complemented by a full Service Management wrap, to transform the organisation’s IT infrastructure.

Proact was able to demonstrate its expertise early in the ITT process before being selected as the best-suited partner to work with the University’s IT team due to the firm’s advanced data centre and cloud capabilities. Proact will act as an extension of the University’s IT department and will not only design and implement infrastructure solutions as part of this phased project, but will play a key advisory role as the organisation looks towards a cloud transformation in the future.

The chosen solution will enable the University of Gloucestershire to become more effective in working towards its key goal, which is to integrate support, learning and teaching by transforming IT operations. In particular, Proact’s Service Management means that the University can take advantage of fast and effective IT monitoring, support and incident resolution, provided by Proact’s experts. This will enable the existing IT team at the University to focus on delivering business value to their internal customers without having to concern themselves with the day-to-day operations of their estate.

“It is great to have been selected to work with the University of Gloucestershire, using our advanced skills and experience to completely rejuvenate the University’s IT set-up. With our bespoke infrastructure solutions in place in addition to our 24x7 monitoring service, we look forward to driving innovation at this dynamic organisation,” says Jason Clark, Managing Director at Proact UK and CEO of Proact IT Group.

Doctor Nick Moore, Director of the Library, Technology and Information Service at the University of Gloucestershire, says: “This was a significant decision for the University, not just in terms of making sure we worked through the technical solution, but that we chose a partner organisation that we could trust to deliver the service we needed.

“The transition to their solution went incredibly smoothly and our expectations of the managed service support from Proact have been significantly exceeded. We have found the service desk members we speak to incredibly keen to support us. It feels like a weight has been lifted from my technical team who have been unanimous in their positive comments of the relationship and support from Proact.”

City of Wolverhampton Council now boasts one of the public sector’s most advanced cloud based IT systems, after appointing automation specialist PowerON to maximise its use of Microsoft System Center and launch an innovative Microsoft Azure Hybrid Cloud model.

The council was keen to automate more IT tools, enable the pro-active monitoring of hardware, and streamline software distribution. With a Microsoft-centric system already in place, the council invested in Microsoft System Center, but required help to get the most out of the suite of systems management products, so enlisted the help of PowerON.

The company specialises in providing powerful, high quality IT management and cloud automation solutions to organisations of all sizes, and immediately decided to create a bespoke Cloud Management Appliance (CMA) with extensive management capabilities for the council. Following its success, PowerON is now replicating the solution blueprint at a number of other local authorities in areas such as Suffolk, Hounslow, Barnsley and Wirral.

Steve Beaumont, Product Development Director at PowerON and a Microsoft Most Valuable Professional, explains: “We have a solid reputation for the quality and simplicity of our service, as well as offering an assured outcome to our customers, which really resonated with City of Wolverhampton Council. Like many public sector organisations, the council is under pressure to make its budgets go as far as possible and was facing challenges with legacy systems.

“Using Microsoft Hyper-V provided flexibility between on-premises and cloud computing resources and when this was combined with the reliability and security of Azure, as well as our unique CMA, it created an unrivalled solution. This is complemented by a hybrid cloud system with the flexing of infrastructure services across on-premises and Azure for key scenarios. We then also incorporated Operations Management Suite for cloud backup, disaster recovery and log analytics, as well as Enterprise Mobility Suite for hybrid management of mobile devices.

“The council had to quickly ‘stand up’ servers and we delivered a fully functioning version of System Center, via our CMA, in just over two weeks which was much less than the 90 days the council had initially estimated it would take.

“We were then able to roll out Windows 10 faster than any other desktop refresh in the council’s history. The whole system, which runs on Windows Server 2012 R2, is now completely scalable through Azure and can support up to 20,000 managed devices per appliance. Our success at City of Wolverhampton Council is now a blueprint for local authorities and we’re already implementing similar systems at other councils throughout the UK.”

Paul Dunlavey, Enterprise Manager at City of Wolverhampton Council, says: “PowerON thrives on being agile and flexible which made them the ideal partner for this large-scale project. They made the adoption of the cloud an easy process and the speed it took to build the infrastructure, which had been a barrier to adopting these technologies in the past, resulted in major cost savings against our initial forecast of how long it would take to do in-house. Ultimately it has transformed the way the whole council works.

“Another benefit is the size reduction of both our primary and secondary datacentres which has generated both office space and further savings. Importantly, it has also paved the way for the council to migrate larger systems to the cloud and within two years the aim is to have all primary business applications running from the cloud.”

Following the completion of the project, City of Wolverhampton Council was recently named Local Authority of the Year at the prestigious Municipal Journal (MJ) Awards 2017, which described the organisation as an "outstanding example of modern local government where the resident is at the heart of sound commercial decision-making."

PowerON has also recently completed major projects for a wide range of high-profile organisations including Sandwell and West Birmingham Hospital NHS Trust, Stena Group, Drax Group, Tesco and food giant Princes, as well as winning contracts with British Land, GHD and Clifford Chance.

The Bolton Mountain Rescue Team (Bolton MRT), a voluntary rescue service, has chosen Navisite and SRD Technology UK to help manage, migrate and transform its IT infrastructure to the cloud. Migrating to Navisite’s cloud environment will enable better communication between Bolton MRT and emergency services from remote locations, helping to improve the management and response times for rescues.

Bolton MRT also envisages this connected solution will be a direct aid in helping to locate missing people and casualties. The new Navisite cloud-based system will allow multiple emergency response agencies to work together and share information in real-time, in a single environment. More importantly, it lets Bolton MRT instantly share location information with emergency services so they are able to respond with the most appropriate resources, reducing rescue response time and potentially saving lives.

“Often in rescues, the biggest challenge is precisely locating the lost or injured person,” said Martin Banks, Operational Member and Treasurer at Bolton Mountain Rescue. “We’re now able to start the process of identifying the person’s location using an application which can be run on our system from any location.”

The police can often triangulate a person’s mobile phone location, but this data can be inexact. The SARLOC positioning service, developed specifically for mountain rescue teams and now used across the country, is more accurate. The Bolton Mountain Rescue Team uses Navisite’s cloud to access SARLOC data from any location. The positioning service requires the consent of the lost person, and a smartphone with GPS and a mobile data service.

Sumeet Sabharwal, General Manager, Navisite said: “We’re proud to be collaborating with SRD Technology UK to support such an important, life-saving organisation as Bolton MRT and to be a part of its cloud transformation. When a cloud service like Navisite’s Desktop as a Service (DaaS) is being used in such a critical and time sensitive context, it’s vital that the service has a reliable and robust infrastructure with availability from any location. We’re pleased to have our technology and managed services used in such a beneficial manner.”

The new system works by sending a text message with a link to the mobile phone of the missing person. By clicking the link, any lost or injured person will be able to open a website that will calculate their location and notify Bolton MRT wherever they are through the mapping application running on Navisite’s DaaS. As a result, teams are able to locate lost or injured people much faster and with greater accuracy, no matter where the rescue team is located.

“Bolton MRT was working off IT equipment that had started to tire and limited their ability to carry out important work from the remote locations in which search and rescue incidents often take place,” said Simon Darch, Technical Director, SRD Technology UK. “With a dedicated focus on supporting cloud migrations for charities and not-for-profit organisations across the UK, the collaboration was critical for us to be able to provide not only the technology, but the managed services around it. Navisite’s Desktop-as-a-Service and Managed Office 365 solution enables Bolton MRT to work remotely, with full access to an integrated and standardised suite of applications to help their rescue operations.”

Bolton MRT is a registered search and rescue charity, with trained volunteers covering an area of over 800 square kilometres. The team functions as a vital resource to local emergency services, locating and rescuing missing or injured hillwalkers, responding to climbing and biking accidents and attending any other incidents involving people in wild and remote places.

The volunteer organisation previously ran its operations from shared desktops located at the organisation's base and had no back-up procedure in place. This system restricted the ability of the team to access important data and systems remotely, as well as limiting information sharing with other emergency agencies. SRD Technology UK implemented Navisite’s DaaS and Managed Microsoft Office 365 solution which allows standardised remote working, making it easy to deploy and manage desktop environments and gives the team access to a full suite of applications.

SRD Technology UK and Navisite were selected ahead of other managed service providers for the flexibility and reliability of their solutions, as well as their experience and skills in managing these cloud applications and environments.

Cloud specialists sign up online booking giant for five-year deal.

Leading British hosting firm UKFast today announces a five-year partnership with LateRooms.com to support the development of the popular online booking site’s technology infrastructure.

The deal sees the online accommodation site move its web hosting away from a colocation solution with ATOS in favour of a bespoke, cloud-based solution developed in partnership with UKFast.

The recently launched cloud solution now regularly processes 6.4 million events an hour. LateRooms.com currently serves 100 million page requests a month.

Head of IO at LateRooms.com, Stuart Taylor, said: “The new platform gives us improved stability, higher availability and the ability to scale up while remaining cost effective. In short, we can do a lot more with less.

“Throughout the migration process there have been learnings on both sides. UKFast has been thoughtful and responsive to the challenges we faced during a migration that was constrained within tight timescales. Together we have created an amazing platform that is a true business enabler.

“UKFast demonstrated a drive and commitment that is familiar to us in our own internal culture. That coupled with a passion for technology and a solid technical solution gave us the confidence that UKFast would become a partner and not just a supplier.”

A four-month migration process saw project teams from both sides working to move LateRooms.com across to its new solution with zero downtime.

UKFast CEO Lawrence Jones said: “LateRooms.com is a brilliant online brand that we're incredibly proud to host. We won the deal up against some very good rivals but they chose us for a number of reasons.

“Growing a business is about the challenges you can overcome and the kind of partnerships you build along the way. They trust our brand and they know we care 100% about every element of the service and the experience we give them.

“They could have used a public cloud provider to deliver their whole solution, but recognised it would be harder to keep control of costs as they scaled up, and they wouldn’t receive the same level of support.”

“They are particularly happy with our approach to collaborative working. For example, we offered our developers to help solve a few issues they were experiencing. It was clearly a breath of fresh air for them and it built trust immediately.”

The new cloud infrastructure includes an overhaul of LateRooms.com’s dev environment, with custom-built APIs and the ability to spin up new VMs at the click of a button. The solution delivers an extremely flexible and easy-to-use system, responding to the booking site’s need for continuous development and ongoing deployment and integration of new features.

PagerDuty has released the findings of its State of Digital Operations Report: United Kingdom, which revealed the need for a shift in workforce expectations and the way teams across an organisation collaborate to resolve consumer-facing incidents.

While the majority of IT practitioners believe their organisation is equipped to support digital services, over half of them also say they face consumer-impacting incidents at least once a week, sometimes costing their organisations millions in lost revenue for every hour that an application is down. The report also highlighted that an organisation's failure to deliver on consumer expectations for a seamless digital experience can greatly affect a company's brand reputation and bottom line.

The report findings are based on a two-part survey of over 300 IT practitioners and over 300 UK consumers on the impact of digital services. The survey specifically examined what UK consumers expect from digital experiences, how organisations are investing in supporting digital services and what tools IT teams are using to keep these services up and running.

The State of Digital Operations report found that nearly all (90.6 percent) of UK consumers surveyed use a digital application or service at least once a week to complete tasks such as banking, making dinner reservations, finding transportation, grocery shopping and booking airline tickets. This finding is indicative of the larger UK digital services landscape: IDC predicts that by 2020, half of the Global 2000 enterprises will see the majority of their business depend on their ability to create and maintain digital services, products and applications. In addition, IDC says 89 percent of European organisations view digital transformation as central to their corporate strategy.

"With the rise in digital services in the UK, European businesses need to be ready to accelerate their digital transformation journey and adapt to consumer demands," said Jennifer Tejada, CEO at PagerDuty. "Disrupting brand and engagement experience means lost revenue and organisations need to be proactive versus reactive -- a reactive or automated approach to resolving consumer-facing incidents is not table stakes. Organisations can arm their IT teams by taking a holistic approach to incident response. Solutions that embrace capabilities such as machine learning and advanced response automation can help organisations easily deploy an expedited response to consumer-facing incidents."

The State of Digital Operations report revealed that along with the heavy reliance on digital services, UK consumers expect a seamless user experience, and IT organisations are struggling to meet these expectations.

When a digital app or service is unresponsive or slow, many consumers indicated that they are quick to stop using it.

IT teams are now front and centre in providing customers with a satisfying user experience. The State of Digital Operations report revealed that organisations are making significant upfront investments in tools and technologies that support the delivery of digital services in order to avoid costly performance issues later on. Nearly half of respondents (49.9 percent) reported that their organisations budget £500,000 or more for DevOps and ITOps tools and services to support and manage digital service offerings, a critical investment given that downtime or service degradation can significantly affect an organisation's financial success.

Majority of IT leaders are in the dark about cloud services and spending.

An overwhelming majority of UK CIOs (76%) don’t know how much their organisation is spending on cloud services, according to a new research report released today by Trustmarque, part of Capita plc. This is due to the rise in employee-driven ‘cloud sprawl’ and ‘Shadow IT’, which are posing a significant challenge to businesses’ cloud adoption and overall data security.

54% of IT leaders admitted they don’t know how many cloud-based services their organisation has, blaming the ease with which employees can sign up to these services, which makes it difficult to know exactly how many subscriptions and services the company ‘owns’.

58% went on to say they were worried that costs could spiral out of control as a result of cloud sprawl. 86% said cloud sprawl and Shadow IT make the ongoing management of cloud services a challenge, while almost half of CIOs (45%) argued that providers could do more to warn users about costs they’re incurring when using cloud services.

While 91% of IT decision makers are looking to migrate on-premise apps to the cloud in the next three to five years, 59% fear these cloud adoption ambitions will be slowed by a lack of control over how cloud services are deployed and managed.

This lack of control also means UK companies are exposing themselves to possible data breaches and to non-compliance with legal, regulatory and contractual obligations. With the impending EU General Data Protection Regulation (GDPR), this could have a significant financial impact, with failure to comply carrying penalties of up to €20m or 4% of global annual turnover, whichever is higher.
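The penalty ceiling quoted above is a simple "greater of" rule. Under Article 83(5) of the GDPR, the maximum fine for an undertaking is €20m or 4% of its total worldwide annual turnover for the preceding financial year, whichever is higher. The minimal Python sketch below (the function name `gdpr_max_fine_eur` is hypothetical) computes only that ceiling; it does not model how a regulator sets an actual fine, which depends on the circumstances of each case.

```python
def gdpr_max_fine_eur(global_annual_turnover_eur: float) -> float:
    """Return the ceiling on a top-tier GDPR fine (Article 83(5)).

    The cap is EUR 20 million or 4% of total worldwide annual turnover,
    whichever is higher. This is only an upper bound; it does not model
    how an actual fine is decided.
    """
    if global_annual_turnover_eur < 0:
        raise ValueError("turnover must be non-negative")
    fixed_cap_eur = 20_000_000
    turnover_cap_eur = global_annual_turnover_eur * 4 / 100  # 4% of turnover
    # The applicable ceiling is whichever of the two limits is larger.
    return max(fixed_cap_eur, turnover_cap_eur)


if __name__ == "__main__":
    for turnover in (100_000_000, 500_000_000, 1_000_000_000):
        cap = gdpr_max_fine_eur(turnover)
        print(f"turnover EUR {turnover:,}: ceiling EUR {cap:,.0f}")
```

For a company with €100m turnover, 4% would be only €4m, so the €20m figure applies; at €1bn turnover, the 4% limit gives a ceiling of €40m. The two limits meet at a turnover of €500m.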

James Butler, CTO at Trustmarque, said: “Cloud adoption is an unstoppable force, but as this research demonstrates there are still a number of challenges facing organisations. Forward planning is everything in IT and without suitable clarity into who is using what in the organisation, there could be a nasty surprise for IT bosses down the line. That’s not to mention the high potential costs associated with any data breach resulting from such unsanctioned use, as well as the impact of extra network congestion, and even excess mobile data charges.

“The self-service, user-friendly nature of the cloud has made it easy for employees to open cloud services and this is happening on a large scale. The first step towards best practice security is knowing where your data is at all times, and how it is being used. If it is residing in cloud repositories you don’t know about, this may be breaking internal policies and could land you in regulatory hot water – especially if it’s customer data.”

Phil McCoubrey, Head of Security Architecture at Capita, said: “These findings underline the extent to which British organisations must quickly appreciate the magnitude of the potential impact of GDPR. While the regulation clearly sets out that the personal responsibility and therefore accountability lies with managing data control, which is often a job of IT leaders, there is a worrying lack of action being taken by CIOs and GDPR may be difficult for companies to achieve if IT leaders don’t exactly know where employees are storing and sending business data. GDPR is an opportunity to strengthen data security processes and improve resilience, when it is needed more than ever but for those who haven’t adopted basic principles of the Data Protection Act, there is a lot of work to do.”

The next Data Centre Transformation event, organised by Angel Business Communications in association with DataCentre Solutions, the Datacentre Alliance, The University of Leeds and RISE SICS North takes place on 3rd July, 2018 at the University of Manchester. For the 2018 event, we’re taking our title literally, so the focus is on DATA, CENTRE and TRANSFORMATION.

There are plenty of opportunities to be involved in DCT 2018. Right now, we’re looking for people who would like to help shape the event by offering to chair one of the workshop sessions. So, if you are passionate about data centres, recognise and understand the massive changes taking place in the industry at the present time and want to help data centre owners, operators and users understand what these changes mean for the future, please get in contact with us indicating which area you are most interested in.

DATA: Digital Business, Digital Skills, Security

CENTRE: Energy, Connectivity, Hybrid DC

TRANSFORMATION: Open Compute, Automation, Cloud and the Connected World

This expanded and innovative conference programme recognises that data centres do not exist in splendid isolation, but are the foundation of today’s dynamic, digital world. Agility, mobility, scalability, reliability and accessibility are the key drivers for the enterprise as it seeks to ensure the ultimate customer experience. Data centres have a vital role to play in ensuring that the applications and support organisations can connect to their customers seamlessly – wherever and whenever they are being accessed.

And that’s why our 2018 DCT Conference will focus on the constantly changing demands being made on the data centre in this new, digital age, concentrating on how the data centre is evolving to meet these challenges.

Please email Debbie Higham: debbie.higham@angelbc.com to express your interest and tell us about yourself and which subject you would like to chair.

At opposite ends of the healthcare ecosystem, data is being harnessed to drive a revolution.

By Junaid Hussain, Product Marketing, UKCloud.

1) Data standards and interoperability are enabling the patient to become the customer

There are currently a number of technological imbalances in the sector that are being corrected as the industry is turned on its head:

Fundamental to all of this are data standards and interoperability, enabling a host of new devices and apps to work together to generate a wealth of new and enriched data. This rich data then enables and inspires a further wave of specialist solutions that can deliver new insights, reduce costs and improve outcomes.

2) Powerful secure platforms for pooling valuable datasets are providing clinicians, researchers and specialist solution providers with unprecedented capabilities

At the same time, the limiting factors that might once have restricted what was possible with data in healthcare are being overcome:

While there are a host of technologies in play here, cloud is the central enabler for them all. The IoT (Internet of Things) devices that are gathering data like never before are feeding it all into the cloud. It is in the cloud that the data is then securely stored and processed in order to mine it for insights and turn it into intelligence. It is in the cloud that collaboration between a vibrant ecosystem of specialist solution providers can then amplify and enrich this intelligence. And it is from the cloud that this intelligence is then accessed remotely by mobile devices, empowering clinicians, researchers and patients.

So, what does all of this mean for the datacentre industry?

It is evident that few NHS organisations, if any, will be building new datacentres of their own. Indeed, many will be closing such facilities as they look to move to the cloud. This provides an opportunity for colocation providers to host legacy workloads that cannot be moved to the cloud and for cloud service providers not only to host workloads for these organisations, but also for the ecosystem of health tech firms that will be providing IoT and cloud-based services to them.

For organisations across health and social care, research and life sciences, and pharmaceuticals, one concern is ensuring that patient-identifiable data is secure and, if possible, never leaves the UK. Cloud service providers that can guarantee that customer data will remain in a UK-sovereign data centre will have an advantage here. Such a guarantee, coupled with secure connectivity to HSCN, will give their customers secure access to their data, safe in the knowledge that it will always remain in the UK.

UKCloud provides a secure, UK-sovereign cloud platform that is connected to all the public sector networks (from PSN to HSCN) and works closely with an ecosystem of partners in health and public sector technology in the UK. If you want to become part of this ecosystem, get in touch.

Research reveals the reality of hybrid computing.

By Tony Lock, Director of Engagement and Distinguished Analyst, Freeform Dynamics Ltd.

At the beginning of the ‘Cloud’ movement, vendors, evangelists, visionaries and forecasters were often heard proclaiming that eventually all IT services would end up running in the public cloud, not in the data centres owned and operated by enterprises themselves. Our research at the time, along with that of several others, showed that the reality was somewhat different: the majority of organisations said they expected to continue operating IT services from their own data centres and from those of dedicated partners and hosters, even as they put certain workloads into the public cloud.

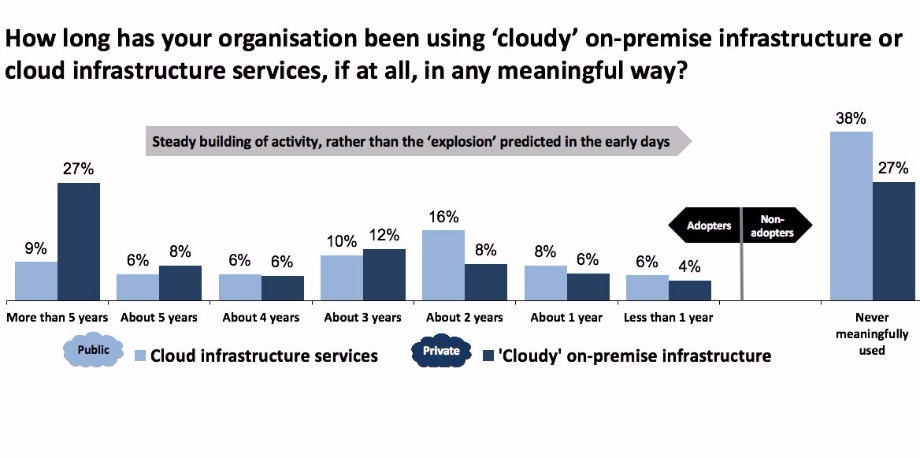

More recent research by Freeform Dynamics (http://www.freeformdynamics.com/fullarticle.asp?aid=1964) illustrates that this expectation (running IT services both from in-house operated data centres and from public cloud sites) is now very much an accepted mode of operation. Indeed, it is what we conveniently term “hybrid cloud” (Figure 1).

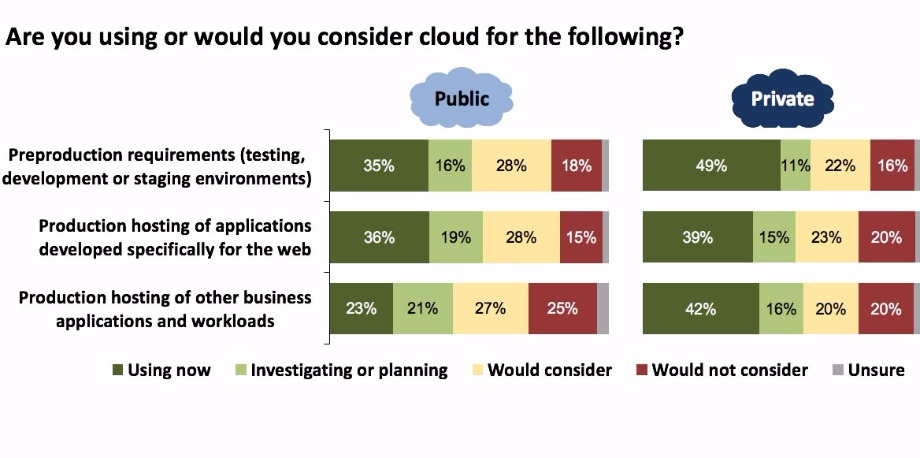

The chart illustrates very clearly that over the course of the last five years almost three-quarters of organisations have already deployed, at least to some degree, internal systems that operate with characteristics similar to those found in public cloud services, i.e. they have deployed private clouds. Over the same period, just under two-thirds of those taking part in the survey stated that they already use public cloud systems. It is interesting to note that both private and public cloud usage have grown steadily rather than explosively, but this is not surprising given the pressures under which IT works and the fact that the adoption of any “new” offering takes time, especially if the systems will be expected to support business applications rather than workloads with lower quality or resilience requirements (Figure 2).

The second chart shows that for a majority of organisations, private cloud is already in use or will be supporting production business workloads in the near future. The adoption of public cloud to run such workloads clearly lags behind, but its eventual usage is only out of the question for around a quarter of respondents. When combined with the results for test/dev and the production hosting of applications and services developed specifically for the web, the picture of a hybrid cloud future for IT is unmistakable.

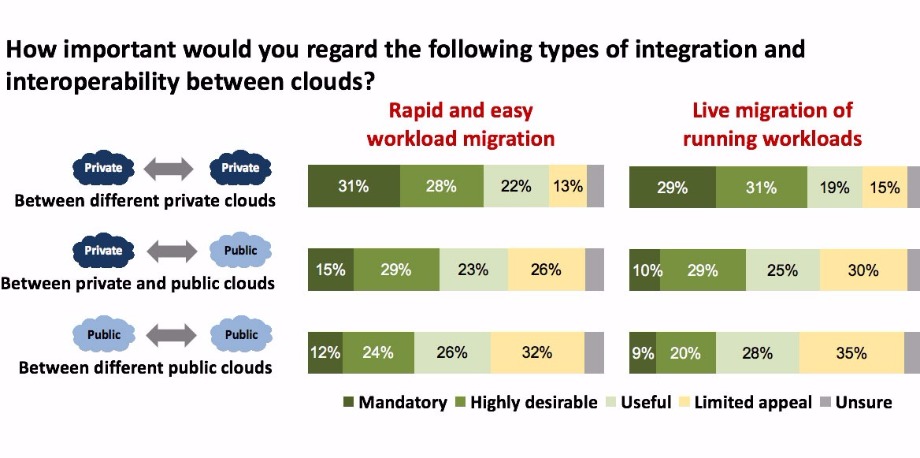

But if ‘hybrid IT’ is to become more than just a case of independently operating some services on internally owned and operated data centre equipment and others on public cloud infrastructure, the survey highlights some key characteristics that must form part of the management picture (Figure 3).

The results in this figure highlight several key requirements that must be met around the movement of workloads if ‘hybrid cloud’ is to become more than a marketing buzzword. Given that private clouds are today used more extensively to support business applications than public clouds, there should be little surprise that smoothing the movement of workloads between different private clouds is ranked as important, or at least useful, by around four out of five respondents.

But the chart also indicates a recognition of the need to move workloads smoothly between private clouds running in the organisation’s own data centres and those of public cloud providers. And almost as many answered similarly about the need to be able to migrate workloads between different public clouds. The importance of these integration and interoperation capabilities is easy to understand: they are essential if we want to achieve the promise of cloud, in particular the ability to rapidly and easily provision and deprovision services, and the ability to dynamically support changing workloads coupled with hyper scalability to ease peak resource challenges and enhance service quality.

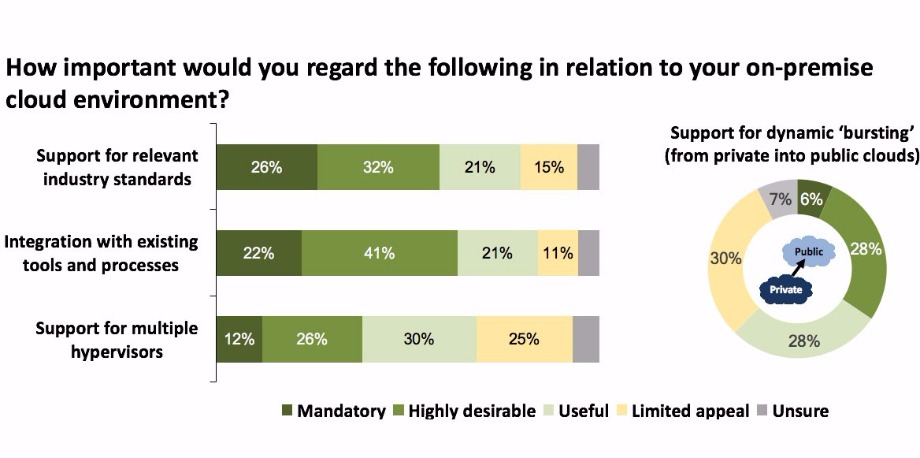

How quickly such capabilities can be delivered depends on a number of factors (Figure 4).

The need for the industry to adopt common standards is clear and, to its credit, things are beginning to move in this direction although there is still much work to be done. The same can be said for integrating cloud services with the existing management tools with which organisations keep things running, although, once again, things do need to improve especially in terms of visibility and monitoring.

The days of vendors building gated citadels to keep out the competition and keep hold of customers should be coming to an end, as many – though alas not all – are under pressure to supply better interoperability. In truth, while interoperability does make it easier for organisations to move away, such capabilities are also attractive and can act as an incentive to use a service.

After all, no one likes the idea of vendor lock-in, and anything that removes or at least minimises such fear can help smooth the entire sales cycle. In addition, if a supplier makes interoperability simple via adopting standards, being open and making workload migration straightforward, they then have an excellent incentive to keep service quality up and prices competitive.

The SVC Awards celebrate achievements in Storage, Cloud and Digitalisation, rewarding the products, projects and services as well as honouring companies and teams. The SVC Awards recognise the achievements of end-users, channel partners and vendors alike and in the case of the end-user category there will also be an award made to the supplier who nominated the winning organisation.

Voting is free of charge and must be made online at www.svcawards.com

Voting remains open until 3 November, so there is still just time to cast your vote and express your opinion on the companies that you believe deserve recognition in the SVC arena.

The winners will be announced at a gala ceremony on 23 November at the Hilton London Paddington Hotel. Contact the team and join the Storage, Cloud and Digitalisation community as it celebrates the best in the business.

All voting takes place online and voting rules apply. Make sure you place your votes by 3 November, when voting closes. Visit: www.svcawards.com

Below is the full shortlist for 2017 SVC Awards:

Storage Project of the Year

Cohesity supporting Colliers International

DataCore Software supporting Grundon Waste Management

Mavin Global supporting The Weetabix Food Company

Cloud / Infrastructure Project of the Year

Axess Systems supporting Nottingham Community Housing Association

Correlata Solutions supporting insurance company client

Navisite supporting Safeline

Hyper-convergence Project of the Year

HyperGrid supporting Tearfund

Pivot3 supporting Bone Consult

UK Managed Services Provider of the Year

EACS

EBC Group

Mirus IT Solutions

netConsult

Six Degrees Group

Storm Internet

Vendor Channel Program of the Year

NetApp

Pivot3

Veeam Software

International Managed Services Provider of the Year

Alert Logic

Claranet

Datapipe

Backup and Recovery / Archive Product of the Year

Acronis – Backup 12.5

Altaro Software – VM Backup

Arcserve - UDP

Databarracks – DraaS, BaaS, BCaaS solutions

Drobo – 5N2

NetApp – BaaS solution

Quest – Rapid Recovery

StorageCraft – Disaster Recovery Solution

Tarmin – GridBank

Cloud-specific Backup and Recovery / Archive Product of the Year

Acronis – Backup 12.5

CloudRanger – SaaS platform

Datto – Total Data Protection platform

StorageCraft – Cloud Services

Veeam Software - Backup & Replication v9.5

Storage Management Product of the Year

Open-E – JovianDSS

SUSE – Enterprise Storage 4

Tarmin – GridBank Data Management platform

Virtual Instruments – VirtualWisdom

Software Defined / Object Storage Product of the Year

Cloudian – HyperStore

DDN Storage – Web Object Scaler (WOS)

SUSE – Enterprise Storage 4

Software Defined Infrastructure Product of the Year

Anuta Networks – NCX 6.0

Cohesive Networks – VNS3

Runecast Solutions – Analyzer

Silver Peak – Unity EdgeConnect

SUSE – OpenStack Cloud 7

Hyper-convergence Solution of the Year

Pivot3 - Acuity Hyperconverged Software Platform

Scale Computing - HC3

Syneto - HYPERSeries 3000

Hyper-converged Backup and Recovery Product of the Year

Cohesity – DataProtect

ExaGrid - HCSS for Backup

Syneto - HYPERSeries 3000

PaaS Solution of the Year

CAST Highlight - CloudReady Index

Navicat – Premium

SnapLogic - Enterprise Integration Cloud

SaaS Solution of the Year

Adaptive Insights – Adaptive Suite

Impartner – PRM

IPC Systems - Unigy 360

Ixia - CloudLens Public

SaltDNA - Secure Enterprise Communications

x.news information technology gmbh – x.news

IT Security as a Service Solution of the Year

Alert Logic – Cloud Defender

Barracuda Networks - Essentials for Office 365

SaltDNA - Secure Enterprise Communications

Votiro - Content Disarm and Reconstruction technology

Cloud Management Product of the Year

CenturyLink - Cloud Application Manager

Geminare - Resiliency Management Platform

Highlight - See Clearly - Business Performance Acceleration

HyperGrid – HyperCloud

Rubrik – CDM platform

SUSE - OpenStack Cloud 7

Zerto - Virtual Replication

Storage Company of the Year

Acronis

Altaro Software

DDN Storage

NetApp

Virtual Instruments

Cloud Company of the Year

Databarracks

Navisite

Six Degrees Group

Storm Internet

Hyper-convergence Company of the Year

Cohesity

Pivot3

Syneto

Storage Innovation of the Year

Acronis - Backup 12.5

Altaro Software - VM Backup for MSPs

DDN Storage - Infinite Memory Engine

Excelero – NVMesh

Nexsan – Unity

Cloud Innovation of the Year

CloudRanger – Server Management platform

IPC Systems - Unigy 360

SaltDNA - Secure Enterprise Communications

StaffConnect - Mobile App Platform

Zerto - ZVR 5.5

Hyper-convergence Innovation of the Year

Pivot3 - Acuity HCI Platform

Schneider Electric - Micro Data Centre Solutions

Syneto - HYPERSeries 3000

Digitalisation Innovation of the Year

Asperitas – Immersed Computing

IGEL - UD Pocket

Loom Systems - AI-powered log analysis platform

MapR – XD

For more information and to vote visit: www.svcawards.com

How well does your company communicate internally? Specifically, how well do your IT departments communicate with each other? Enterprises typically contain four or more IT sub-departments (Security, Network Operations, Virtual DC, Capacity Planning, Service Desk, Compliance, etc.) and it’s quite common for them to be at odds with each other, even in good times. For instance, there’s often contention over capital budgets, shared resources and headcount.

By Keith Bromley, Senior Solutions Marketing Manager, Ixia.

But let’s be generous. Let’s say that in normal operations things are usually good between departments. What happens if there’s a breach, though, even a minor one? Then things can change quickly. Finger-pointing can follow, especially if there are problems acquiring accurate monitoring data for security and troubleshooting.

So, what can you do? The answer is to create complete network visibility (at a moment’s notice) for network security and network monitoring/troubleshooting activities. Here are three common sources of issues for most IT organizations:

Add taps to replace SPAN ports. Taps are set-and-forget technology, which means that you only need to get Change Board approval once to insert the tap, and you are done.

Add a network packet broker (NPB) to eliminate most of the other Change Board approvals and eliminate crash carts. The NPB is situated after the tap, so you can perform data filtering and distribution whenever you want. By implementing a tap and NPB approach, you may be able to reduce your MTTR by up to 80 percent.

Add an NPB to perform data filtering. The NPB filters traffic to send the right data to the right tool whenever you need it. This improves data integrity to the tools and shortens the time to data acquisition.

Add an NPB to create role-based access to filters. This eliminates the “who changed my settings” issue and allows multiple departments to share the same NPB.

Add virtual taps to get access to the often hidden East-West data in a virtual data center or cloud network.
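The tap-and-NPB filtering and the role-based access to filters described above can be illustrated with a toy model. This is a minimal sketch, not Ixia's product or any vendor's configuration interface. The `Packet`, `FilterRule` and `PacketBroker` classes, the department names and the tool names are all hypothetical, and matching is reduced to protocol and destination port for brevity.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Packet:
    """Hypothetical stand-in for a packet copied from a tap."""
    src_ip: str
    dst_port: int
    protocol: str  # e.g. "tcp" or "udp"


@dataclass(frozen=True)
class FilterRule:
    """A filter owned by one department that forwards matching packets to its tools."""
    name: str
    owner: str                 # only this department may create or change the rule
    protocol: Optional[str]    # None matches any protocol
    dst_ports: frozenset[int]  # an empty set matches any destination port
    tools: tuple[str, ...]     # monitoring or security tools that receive copies

    def matches(self, pkt: Packet) -> bool:
        if self.protocol is not None and pkt.protocol != self.protocol:
            return False
        return not self.dst_ports or pkt.dst_port in self.dst_ports


class PacketBroker:
    """Toy NPB: sits after the tap, filters traffic and distributes it to tools."""

    def __init__(self) -> None:
        self._rules: dict[str, FilterRule] = {}

    def set_rule(self, department: str, rule: FilterRule) -> None:
        # Role-based access: a department may only create or edit rules it owns.
        if rule.owner != department:
            raise PermissionError(f"{department} cannot create a rule owned by {rule.owner}")
        existing = self._rules.get(rule.name)
        if existing is not None and existing.owner != department:
            raise PermissionError(
                f"{department} cannot modify rule '{rule.name}' owned by {existing.owner}"
            )
        self._rules[rule.name] = rule

    def forward(self, pkt: Packet) -> set[str]:
        # Every matching rule contributes its tools, so one packet can feed several tools.
        destinations: set[str] = set()
        for rule in self._rules.values():
            if rule.matches(pkt):
                destinations.update(rule.tools)
        return destinations
```

Consider an example. Security registers a rule named `web` that forwards TCP ports 80 and 443 to a hypothetical `ids` tool. A packet to port 443 is then copied to that tool. If NetOps later tries to overwrite `web`, the broker raises `PermissionError` instead of silently changing Security's settings.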

No one wins the blame game. Even if one department appears to win, the whole group typically loses. One of the best things an IT department can do is increase network visibility, because it gets at the core of the issue instead of treating symptoms. This is what will help reduce incidents, reduce long-term costs, reduce troubleshooting times and increase staff happiness.

By Steve Hone, CEO, The DCA

On a regular basis we invite DCA members to submit case studies for the DCA Journal. These articles vary in subject matter, and many highlight the regular challenges that data centre operators face. We have found that the articles often provide details of solutions and raise awareness of them, which helps the sector move forward and overcome similar challenges.

In this month’s DCA Journal we have a case study from Schneider Electric, detailing an upgrade project at Sheffield Hallam University. What’s interesting is the approach taken by those involved. The parties working on this project viewed it as a partnership and wanted to ensure that, going forward, they could all be available and flexible in their response for the life of the facility.

Also included is a feature from Blygold, which applies a high-performance coating to external condensers. The case study relates to a project undertaken for Carillion Energy that dramatically increased the life and efficiency of the entire system, delivering an ROI inside six months: a remarkable success story for a simple solution that really does do exactly what it says on the tin!

We read with great interest an article from ebm-papst. This case study focuses on the energy and cost savings achieved by introducing EC fans at UBS. Three DCA members, ebm-papst, Vertiv and CBRE, collaborated on this successful project. This is something we are thrilled about, and we look to promote more collaboration between members going forward.

The DCA exists to help its members and those with an interest in the sector. Case studies allow readers to gain trusted insight into data centre projects, how they are implemented and the experience gained. Submitting case studies is not limited to this month’s DCA Journal theme. We are always interested in receiving case studies from our members for circulation, and these are added to the central library for continual reference.

The DCA are working on plans for next year and have been asked to support and endorse an even larger number of Data Centre events in the year ahead. Our own event, Data Centre Transformation 2018 is now scheduled for Tuesday 3rd July 2018 at the University of Manchester.

The structure will be three tracks focusing on:

Data - Digital Business, Digital Skills and Security

Centre - Energy, Connectivity, Hybrid DC

Transformation - Open Compute, Automation, Cloud and the Connected World

So, hold the date in your diary and plan to come along to take advantage of a wide range of educational workshops and network with other DCA members and end users.

The remainder of the 2017 conference season is yet again jam-packed; events include:

We are coming to the end of another busy year, and the DCA Journal for 2017 will finish with a ‘Review of 2017’. This will be published in the Winter edition of DCS Magazine, which covers December and January.

If you would like to submit an article, please contact Amanda McFarlane: amandam@datacentrealliance.org

The first port of call was to carry out a sample survey of the data centres in the Carillion estate to establish whether a deep clean and Blygold coating could improve the performance of the external air source condensers. In most cases the units were found to be regularly maintained, with the coils being washed down twice a year by outside contractors.

Despite this structured maintenance approach, the coils were still being compromised by a steady build-up of dirt and calcium deposits, resulting in restricted air throughput.

It was estimated that following a Blygold treatment the life of the plant would be significantly increased with energy saving in the region of 10% and with an ROI of less than 6 months.
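The payback arithmetic behind an estimate like this can be sketched as follows. The sketch assumes simple, undiscounted payback based on energy savings alone. The article does not give the treatment cost or the energy spend, so the inputs in the example are hypothetical. The real ROI estimate may also have counted maintenance savings and longer coil life, which this function ignores.

```python
def simple_payback_months(treatment_cost: float,
                          monthly_energy_cost: float,
                          saving_fraction: float) -> float:
    """Months until energy savings alone repay a one-off treatment cost.

    Simple (undiscounted) payback: ignores maintenance savings, longer
    coil life and the time value of money.
    """
    if treatment_cost < 0 or monthly_energy_cost <= 0:
        raise ValueError("costs must be positive")
    if not 0 < saving_fraction < 1:
        raise ValueError("saving_fraction must be between 0 and 1")
    monthly_saving = monthly_energy_cost * saving_fraction
    return treatment_cost / monthly_saving


# Hypothetical inputs (not figures from the Carillion project), in any single
# currency: a 3,000 treatment on plant costing 6,000 a month to run, saving 10%.
print(simple_payback_months(3_000, 6_000, 0.10))  # 5.0
```

On this simple model, a payback of under six months requires the treatment to cost less than six months' worth of the energy saved.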

Based on these initial findings, the client was keen to progress to a trial, and a site was selected that was felt to be representative of the plant within the estate. On this occasion, a York chiller at the Hayes data centre facility was selected.

A week prior to the Blygold treatment, the chiller had been deep cleaned and had undergone an oil/refrigerant change, and a Climacheck Data Logger was used to monitor performance both before and after treatment.

After Treatment

The initial results following Blygold treatment looked very promising, and the system continued to be remotely monitored, as the full results would only be seen over time under full operating conditions. It did not take long!

Just two days later we received a call from the Engineering Department, who were concerned that we had broken two of their condensers because they were no longer working.

After investigation, it was found that they were still in full operating condition, just no longer needed. Prior to treatment there were three big Denco condensers working 24x7x365 to maintain cooling. After the Blygold treatment, however, two of the three condensers had reverted to ‘standby mode’ because only one was now needed.

Talking with the client it soon became clear that the Engineering Department had never seen the units in standby mode before and that this had led to the understandable confusion.

‘As a result of the increased efficiency, the engineers also had to visit each server room to INCREASE the temperatures by 5°, as the server rooms were now becoming too cold!’ Bob Molinew, VM

The net effect of the Blygold Coating on the York YCAJ76 Chiller was compiled in a report by an independent consultant – Dave Wright, MacWhirter Ltd, which highlighted the following key points:

‘The units now run considerably better having had the Blygold treatment. I am just surprised that the units are not Blygold treated from new!’ Greg Markham, Carillion

Based on these positive results, the client contracted with Blygold to carry out the same process on all of its other nineteen client sites. As a result, the client has tripled the lifetime of the coils, reduced its energy bills by 15% and reduced wear and tear on the rest of the system, resulting in lower maintenance costs, increased uptime and fewer call-outs.

About Blygold UK Ltd

Blygold UK Ltd applies anti-corrosion coatings to air source heat exchangers such as chillers, AHUs and air-conditioning units. Blygold coatings can more than triple the life of the coil blocks and reduce energy consumption by as much as 25%, particularly on units in corrosive environments such as airports, ports, industrial areas, coastal areas and city centres. The coatings can be applied to new units prior to installation or to existing units already installed on site.

The simplest way to reduce the energy consumption in buildings is to ensure that all Heating, Ventilation & Air Conditioning (HVAC) equipment is fitted with the highest efficiency EC fans. Those involved in the data centre industry are quickly realising the energy reduction potential in their buildings through upgrading HVAC equipment to innovative Electronically Commutated (EC) Fans. The motor and control technology in GreenTech EC fans from ebm-papst has enabled UBS to benefit from proven efficient upgrades to their data centre cooling systems.

ebm-papst undertook an initial site survey to review the types of units being used and the potential solutions needed, along with an estimate of the payback period for any new kit. The units in place before the project were chilled-water units with an optional switch to lower performance, and they used AC fan technology. To improve efficiency, ebm-papst recommended upgrading the equipment with EC fan technology. Based on the survey results, a trial was then agreed on a single 10UC and a single 14UC CRAC unit to establish actual performance and energy savings. Data was logged before the upgrade and again once the trial units had been converted from AC to EC.

Post-upgrade trial data revealed that less power was absorbed by ebm-papst’s EC fan motors than by their AC predecessors. Based on this information, UBS decided to proceed with the conversion of all units, installing 191 fans within 76 CRAC units. Three different unit models were upgraded: 39 × 14UC units, 21 × 10UC units and 16 × CCD900CW units.

Vertiv™ then worked with CBRE, which project-managed the upgrade, to complete the work to UBS’s satisfaction and without disrupting the live data environment. The main element of the upgrade project was the replacement of all fans with ebm-papst’s EC direct-drive centrifugal fans, including the installation of EC fans within a floor void that required modification.

Since completion of the EC Technology upgrade project, the following savings have been made:

On average, UBS has seen a 48% energy saving across all units and a payback period of under two years. Other project benefits include a CO₂ reduction of 5,229 tonnes. In addition, new control strategy software was put in place, which controls the EC fans on supply air temperature. This delivered a further 14% reduction in energy usage. UBS’s data centres are now also benefitting from reduced noise levels, increased cooling capacity and extended fan and unit life.
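The article does not say how the 48% fan saving and the further 14% from the control strategy combine. One plausible reading is that the 14% applies to the energy still being used after the fan upgrade. In that case the two savings compound rather than add. This assumption is ours, and the function name below is illustrative. A minimal sketch:

```python
def combined_saving(first: float, second: float) -> float:
    """Overall fractional saving when `second` applies to what remains after `first`.

    Assumes the second saving is measured against the reduced consumption,
    not against the original baseline.
    """
    for s in (first, second):
        if not 0 <= s < 1:
            raise ValueError("savings must be fractions in [0, 1)")
    return 1 - (1 - first) * (1 - second)


fan_upgrade = 0.48     # 48% average saving reported for the EC fan upgrade
control_change = 0.14  # further 14% reported for supply-air-temperature control
print(f"{combined_saving(fan_upgrade, control_change):.1%}")  # 55.3%
```

Under this assumption the overall saving is about 55% of the original consumption. Adding the two percentages to get 62% would only be correct if the 14% were measured against the original baseline.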

Project Challenges

UBS operates a 130,000 sq ft data centre in west London, which is fundamental to the operation of the firm’s global banking systems. Within this site, a number of Down Flow Units (DFUs) operated around the clock and were crucial to sustaining the required operating conditions for the computer equipment in the data centre. The challenge was to improve the energy efficiency of the data centre, freeing up additional electrical capacity for IT resources. In addition, the task was to improve the airflow and the controllability of the cooling units in the data hall.

Project restrictions were extensive given the live data environment: the upgrade teams were only allowed access to three halls, with only one unit switched off at any one time. However, the upgrade was delivered on time and to budget, without disruption. Because work took place while the data centres were live, the project managers had to factor in working space and access constraints imposed by existing equipment and infrastructure.

ebm-papst replaced the fans in the existing DFUs, the Computer Room Air Conditioning (CRAC) units, with high-efficiency direct-drive EC fans. UBS’s objective for the project was to reduce the drawn-down power by up to 30%, freeing a 180 kW power load to be allocated to IT equipment.

The solution delivered a load reduction of 250 kW, an annual power saving of 48%. This allowed UBS to increase IT power consumption while also reducing CO₂ emissions and energy costs. Nearly five years after the project took place, UBS has seen the following key metrics:

The energy savings from the EC fan replacement project were exactly as predicted, and no additional analysis was needed because the monthly energy reports were dramatically lower. The EC fans have continued to deliver energy savings and, through increased reliability, a reduced maintenance burden for CBRE and UBS.

Heating, Ventilation and Air Conditioning (HVAC) systems can be responsible for over half the energy consumed by data centres. In cases where energy is limited, improving the energy efficiency of HVAC equipment will result in an improved allocation of energy resource to IT equipment. Whilst many new data centre facilities built in the UK already incorporate EC fans in their HVAC systems, most older buildings continue to use inefficient equipment. Rather than spending capital on buying brand new equipment, often the more cost-effective option is to upgrade the fans in existing equipment to new, high efficiency EC fans.

With ebm-papst GreenTech EC fans, the impeller, motor and electronics form a compact unit that is far superior to conventional AC solutions. The UBS project is an excellent example of how upgrading from AC to EC technology can deliver energy savings and reduce carbon (CO₂) emissions.

Working with Advanced Power Technology (APT), an Elite Partner to Schneider Electric and specialist in data centre design, build and maintenance, Sheffield Hallam University has undertaken work to deploy a state-of-the-art, highly virtualised data centre as part of a £30m building development at Charles Street in central Sheffield.

APT’s installation is based on Schneider Electric InfraStruxure integrated data centre physical infrastructure solution for power, cooling and racking. The new facility is managed using StruxureWare for Data Centers™ DCIM (Data Centre Infrastructure Management) software to maximise the efficiency of data centre operations.

With a pedigree dating back to the early 19th century, Sheffield Hallam is now the sixth-largest university in the United Kingdom, with more than 31,000 students, around 20% of whom are postgraduates, and over 4,500 staff. One of the UK's largest providers of tuition for health and social care career paths and for teacher training, it offers around 700 courses across a wide range of disciplines including business and administrative studies, biological sciences, and engineering and technology.

The university has a range of research centres and institutes as well as specialised research groups. Research grants and contracts provide an important source of income to support work at Sheffield Hallam; in May 2013 the university was awarded £6.9m from the HEFCE Catalyst Fund to create the National Centre of Excellence for Food Engineering, to be fully operational by 2017.

Sheffield Hallam University is situated on two campuses comprising 12 major buildings in the centre of the city of Sheffield. Its IT department operates two data centres, running as an active-active pair in which each location provides primary IT services as well as offering failover support to the other.

“Services provided by the IT department are typical of those required by any university,” says Robin Jeeps, Project Manager for Sheffield Hallam. “We host the website, the intranets and common applications such as Exchange, Outlook and Office, in addition to the student management systems, virtual learning environments, library systems and CRM (customer relationship management) systems.”

In terms of hardware, the university has adopted a virtualisation policy, running between 800 and 900 virtual machines on about 70 blade servers distributed across both data centres. It also has a small high-performance Beowulf compute cluster to support research projects, but for the most part the main concerns for the IT department are high availability, reliability and cost.

As one of the existing data centres was located in a building whose lease was due to expire, the IT department took the opportunity to move the IT facility into the Charles Street development and upgrade its capabilities to improve efficiency and availability.

Following a contract tender, APT was selected to provide and install the cooling and power infrastructure equipment and the DCIM software necessary to manage it efficiently. Thanks to virtualisation, the number of physical servers the university needed to maintain services had dropped from 60 devices in the older data centre to 15 in the new Charles Street facility.
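The consolidation figures quoted here and in the hardware description above reduce to two simple ratios. The sketch below uses the article's approximate numbers: 60 servers down to 15, and 800 to 900 VMs on about 70 blades. The helper names are illustrative. The averages do not show how load is actually spread across individual hosts.

```python
def consolidation_ratio(servers_before: int, servers_after: int) -> float:
    """Average number of old physical servers replaced by each new one."""
    if servers_after <= 0:
        raise ValueError("servers_after must be positive")
    return servers_before / servers_after


def vms_per_host(vms: int, hosts: int) -> float:
    """Average virtual machine density per physical host."""
    if hosts <= 0:
        raise ValueError("hosts must be positive")
    return vms / hosts


print(consolidation_ratio(60, 15))  # 4.0
# 800 to 900 VMs on about 70 blades (approximate figures from the article)
print(round(vms_per_host(800, 70), 1), round(vms_per_host(900, 70), 1))  # 11.4 12.9
```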

“We can now run on one chassis what we would have run in three racks before,” says Robin Jeeps. “That makes a big difference.”

Located at the new Charles Street data centre, the IT equipment racks are installed within two APC by Schneider Electric InfraStruxure with Hot Aisle Containment Systems (HACS) to ensure an efficient and effective cooling supply. Two 300kW free-cooling units supply chilled water to the HACS and within the equipment racks, APC InRow cooling units maintain optimum operating temperatures.

The HACS separates the cool supply air from the hot exhaust air, preventing the two streams from mixing and allowing cooling to be matched more precisely to the requirements of the IT load. Locating the InRow cooling units next to the servers and storage equipment also reduces the cooling energy requirement, because large volumes of air no longer have to be moved through a suspended floor space.

Crucial to maintaining efficient operation is the adoption of Schneider Electric’s StruxureWare software. This marks the first time that Sheffield Hallam has had an integrated management system for monitoring all aspects of its data centres’ infrastructure, according to Robin Jeeps.

“We had a variety of software packages in place before,” he says. “But StruxureWare for Data Centers provides us with a much more integrated solution. As long as something has an IP address, we can see it in StruxureWare and monitor how it is working. Previously we had to go through physical switches and hard-wired cables to monitor a particular piece of equipment.”

Jeeps says that the homogeneous, integrated management environment proposed by APT was crucial to its winning the contract to supply the data centre infrastructure. “We kept the IT side of the contract separate from the overall development of the building,” he says. “When we studied APT’s tender we liked the clear design they presented and the consistent management of our infrastructure that it made possible.”

The new management capabilities offered by StruxureWare will give Sheffield Hallam the flexibility to monitor its infrastructure for maximum efficiency and to manage how it makes its services available to students and researchers. Jeeps says that this will allow the university to tender for research contracts that it has previously been unable to bid for.

“We don’t currently provide cost charging or resource charging of IT services to our departments and I doubt that we ever will,” he says. “I don’t think that’s the best way for a university to operate. But if, for example, we were undertaking a research project on fixed funding and had to itemise how much the computing support would cost, we now have the tools to do that. We never had anything like that before.”

Another potential benefit offered by StruxureWare is benchmarking overall system efficiency, in particular measuring how much of the data centre’s overall power budget the cooling infrastructure consumes. PUE (Power Usage Effectiveness) ratings are increasingly being used to compare one data centre’s efficiency with that of its peers.
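PUE is defined as total facility power divided by the power delivered to IT equipment, so a value of 1.0 would mean no overhead at all, and everything above that goes on cooling, power conversion losses, lighting and so on. The short Python sketch below shows that arithmetic and the related "cooling as a share of the power budget" figure. The `PowerReading` class and the kW values are hypothetical, not Sheffield Hallam's figures or StruxureWare's calculation. Strictly, PUE is normally reported from energy measured over a period rather than from a single power snapshot.

```python
from dataclasses import dataclass


@dataclass
class PowerReading:
    """One snapshot of facility power draw in kW (hypothetical meter readings)."""
    it_kw: float       # servers, storage and network equipment
    cooling_kw: float  # free-cooling units, chillers, InRow coolers
    other_kw: float    # UPS losses, lighting and other overheads

    @property
    def total_kw(self) -> float:
        # Total facility power is the IT load plus every overhead
        return self.it_kw + self.cooling_kw + self.other_kw

    def pue(self) -> float:
        # PUE = total facility power / IT equipment power; 1.0 means zero overhead
        if self.it_kw <= 0:
            raise ValueError("IT load must be positive")
        return self.total_kw / self.it_kw

    def cooling_share(self) -> float:
        # Cooling expressed as a fraction of the overall power budget
        return self.cooling_kw / self.total_kw


reading = PowerReading(it_kw=200.0, cooling_kw=40.0, other_kw=10.0)
print(f"PUE: {reading.pue():.2f}")                       # PUE: 1.25
print(f"Cooling share: {reading.cooling_share():.0%}")   # Cooling share: 16%
```

Splitting cooling out from other overheads mirrors the point made above: PUE alone says how much overhead there is, while the cooling share says where it goes.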

“It’s a bit of a ‘chicken and egg’ situation,” says Jeeps. “Until we saw the capabilities of the software, we didn’t know how much monitoring, reporting and capacity planning was now possible. Previously, we could only have done some rough calculations using a tool like Excel, but the capabilities we have now will spur us on to think about all sorts of other things we can do.”

“Working with Advanced Power Technology and Schneider Electric has been an efficient and productive partnership from start to finish,” says Robin Jeeps. “They have been professional, thorough and at times very patient in solving some of the challenges we faced during the deployment stages. At all times they remained focused on delivering an intricate solution that would meet our expectations and our point of view as a customer.”

John Thompson from APT explains: “When we build a data centre for one of our clients, we look on the relationship as a partnership. It is very important for us to understand the long-term requirements so that we can design for future possibilities and remain available and flexible in our response throughout the life of the facility. This is one of the reasons we chose to deploy a complete Schneider Electric ‘engineered as a system’ data centre solution for the Charles Street room. To begin with, we built a virtual data centre within the StruxureWare for Data Centers™ software suite so that the stakeholders could take a ‘3D walk round’ and give feedback on the solution they were getting before delivery. Whilst this resulted in quite a few design revisions, it helped to ensure that APT delivered exactly what was expected.”

What do you know about where your telecommunications and mobile provider stores, manages and secures your personal data? asks Alastair Hartrup, Global CEO of Network Critical.

Tech City UK’s Tech Nation 2017 Report revealed that the UK generated £6.8 billion worth of investment in digital tech last year, 50 per cent more than any other European country, and highlighted the crucial role the sector plays in economic and business growth.

By Leo Craig, general manager, Riello UPS.

But with this growth come added risks. The increased pressure on the UK’s power supply could lead to a number of issues, including power fluctuations and disturbances, blackouts and voltage spikes, all of which can have a major impact on business productivity.

To minimise these risks, data centres require reliable and stable power that is protected by an uninterruptible power supply (UPS). The UPS acts as the first line of defence in this environment, but as with any electronic device, it’s likely to need repair at some point during its product lifecycle. It’s vital, therefore, that businesses have a robust maintenance regime in place to prevent downtime and ensure efficiency remains intact.

This article explains the most common errors that can be overcome with maintenance checks and gives some top tips for putting a plan in place to keep your data centre running seamlessly.

Bespoke data centre protection

Regardless of the sector in which they operate, data centres should be resilient by nature. This is fundamental, not only to minimise risk but also to ensure that operations remain fail-safe and efficient. As UPS systems are the backbone of this risk reduction, providing a lifeline when the input or mains power supply fails, it’s crucial to keep up a regular and robust maintenance regime.

It’s all well and good to know that you should be carrying out regular maintenance, but what’s important is to put specific checks and balances in place to suit your way of working.

Human error

Since the summer of 2017, British Airways has become an example of how human error can bring catastrophic cost to a company, not to mention tarnish its reputation with customers and partners for years to come.

Whether it be engineers throwing the wrong switch or carrying out a procedure in the wrong order, human error is the main cause of problems that occur during UPS maintenance. In such cases it may seem easy to blame the engineer alone, when in fact errors of this kind are often the result of poor operational procedures, poor labelling or poor training. By tackling these issues from the outset and throughout the UPS installation, these risks can be avoided.

For example, if the system being installed is a critical system comprising large UPSs in parallel and a complex switchgear panel, Castell interlocks should be incorporated into the design. Castell interlocks force the user to switch in a controlled and safe sequence, but are often left out of the design to save costs at the start of the project.

Simple things can make a difference. Keeping basic labelling and switching schematics up to date can avert disaster, and clearly documented switching procedures should be readily available. If the site is extremely critical, a ‘pilot/co-pilot’ procedure, in which two engineers both check each step before it is carried out, will prevent most human errors.

Use the latest technology

UPS maintenance is intrusive by nature, so anything that reduces downtime is welcome. Common problems, such as failing electrical components, are preceded by an increase in heat. If a connection point isn’t tightened properly, for example, it will start to heat up and eventually fail.

It’s rarely, if ever, possible to check every connection manually, which is where thermal imaging technology can come in handy. It can identify potential issues that conventional methods might miss, without the need for physical intervention.

Select the right provider

When it comes to selecting a supplier, it must be one you feel comfortable with. Do your research and find a supplier that offers a bespoke solution for your requirements with a robust provision for spares and guaranteed response time.

24-hour equipment monitoring significantly strengthens protection against power failure and should therefore be part of any data centre’s maintenance package. What’s more, rigorous training is key to ensuring that field service engineers are able to carry out their work in a timely and efficient fashion. You should also be clear on exactly what a ‘response’ involves: will it just be a phone call or will someone come to site, and, if so, will that someone be a competent engineer?

Unlike other manufacturers, Riello UPS stocks all spare parts and components in strategically placed warehouses across the UK, combined with a multimillion-pound stock holding at its Wrexham headquarters, where UPSs rated up to 500kVA are ready for immediate dispatch.

Finally, you should never be afraid to ask questions of your maintenance provider. Remember, it’s your responsibility to request proof of competency levels, both for the company itself and for the engineers it uses.

Reviewing your current UPS maintenance procedure will help to identify and reduce risks to critical operations that you may not previously have anticipated. By applying an extra level of due diligence today, you can help to avert disaster tomorrow.

Riello’s Multi Power Combo was recently awarded Data Centre Power Product of the Year for its outstanding capabilities combining high power in a compact space. Multi Power Combo is part of the modular range developed by Riello UPS and features both UPS modules and battery units in one.

With the cost of downtime rising, not only in terms of revenue but also in terms of company reputation, businesses need to be more aware of the importance of power protection and the benefits of a reliable, well-maintained UPS.

Complex industrial environments, such as data centres, will always require exceptional levels of resilience and reliability under all operating conditions. Having the right UPS and support in place will give peace of mind that even when the worst happens, the impact on the business can be managed.

Fruition Partners and ServiceNow have created an enterprise service management solution for the Travis Perkins Group, now supporting EaaS implementation for IT, HR and logistics and providing a self-service portal which offers 30,000 staff a wide range of products and support, across 20 businesses and 2000 sites.

Travis Perkins plc is one of the UK's leading distributors of materials to the building and construction and home improvement markets.

The Group operates more than 20 businesses from approximately 2,000 sites and employs over 30,000 people across the UK. With a proud heritage that can be traced back over 200 years, the Group’s employees are continuing that tradition by working with their customers to build better together.

A FTSE 100 company with revenue of £5 billion, it has helped deliver major building and infrastructure projects including Heathrow Terminal 5 and the Shard. In addition to Travis Perkins itself, its 20+ industry-leading brands include Wickes, Tool Station and Tile Giant, making it the largest supplier of building products to consumers and the trade in the UK.

The company is part-way through the delivery of an ambitious five-year growth strategy. This includes investing in IT to create greater integration and efficiency across the business through innovation, multi-channel transactional support, plus re-engineering and upgrading legacy systems to provide enhanced infrastructure, and a wider and deeper capability based on open source and cloud architecture. Improved service and support, based on the ServiceNow platform, plays a key part in that.

In March 2013 the company put together a five-year roadmap for IT development, including investments that would more than double the IT budget. Technology is seen as a key enabler of strategic change at Travis Perkins rather than a support function. CIO Neil Pearce was recently quoted as saying that by making these investments: “We’ll have a much more efficient business – one that’s able to attract and retain people because we’ve got a better set of systems and processes. We’ll be at a point where we’re making use of our digital capabilities to create much better services for our customers.”

At an early stage of the five-year plan, Tech Director Matt Greaves established the Service Development & Change team alongside the Service Delivery team to deliver changes without dropping the ball. With multi-channel sales platforms, a commitment to cloud-based computing and support for trends such as BYOD (bring your own device), IT service management (ITSM) would need to play a significantly greater role in future.

The Group’s legacy systems were unsuited to the task, based as they were on a ‘break/fix’ approach, and offered no proactive review of trends and issues from a top-down perspective, which meant the team was constantly ‘fire-fighting’.