44% of organisations currently have a Digital Transformation strategy and a further 32% plan to implement one.

The latest research from the Cloud Industry Forum (CIF) has revealed that Digital Transformation is climbing up UK businesses’ agendas as fears of digital disruption mount. However, the industry body has warned that organisations will need to focus on upskilling the workforce if their Digital Transformation efforts are to be a success.

Conducted in February 2017, the research polled 250 IT and business decision-makers in large enterprises, small to medium-sized businesses (SMBs) and public sector organisations, and found that a significant proportion of UK organisations are keenly aware of the threat of disruption. Two in five respondents (40%) expect their organisation’s sector to be significantly or moderately disrupted within the next two years, and a similar proportion (37%) expect the same for their organisation’s business model.

Against this backdrop, it should come as little surprise that many organisations have transformation in their sights. The results revealed that 44% of organisations have already implemented, or are in the process of implementing, a Digital Transformation strategy, and a further 32% expect to have done so within the next two years.

However, 94% reported facing barriers to their organisation’s Digital Transformation. Over half (55%) stated that their organisation did not have the skills needed to adapt to Digital Transformation, 48% cited privacy and security concerns, while 47% were worried about legacy IT systems. Worryingly, just 17% were completely confident that their senior leadership team would be able to deliver Digital Transformation.

Commenting on the findings, Alex Hilton, CEO of CIF, said: “It is clear that UK-based organisations can see some big changes on the horizon and Digital Transformation strategies are gaining traction as a result. This is certainly encouraging, but the results from our research indicate that many organisations lack the strategic thinking, direction, and support needed to make a success of Digital Transformation. UK business and technology leaders need to consider the digital imperatives and look at how they support their businesses with technology to meet them. Moreover, they need to invest in skills development and training schemes for staff to help drive digital initiatives further.

“Digital Transformation is about more than just turning legacy processes into digital ones; it looks at how an organisation interacts and engages with its employees, partners and customers. Having the right skills in the broader workforce to deliver Digital Transformation is critical and the research revealed that just 45% of respondents believe that their organisation has the skills required to adapt to Digital Transformation. Looking to the future, UK organisations need to focus on plugging the digital skills gap if they are to enjoy the full benefits of Digital Transformation. To this end, the Cloud Industry Forum has built and launched our Professional Membership and eLearning scheme, which provides the means to aid the development of key digital and cloud computing skills,” concludes Alex.

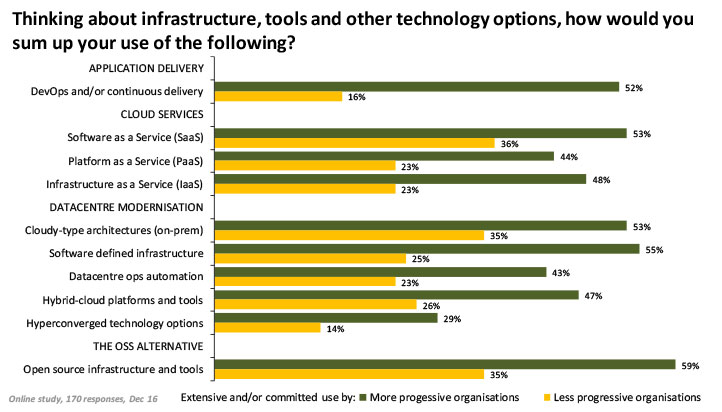

Enterprise server rooms will be unable to meet the compute power and IT energy efficiencies required to meet the demands of fluctuating technology trends, pushing a higher uptake in hyperscale cloud and colocation facilities.

Citing the latest IDC research, which predicts a growing fall in the number of server rooms globally, Roel Castelein, Customer Services Director at The Green Grid, argues that legacy server rooms are failing to keep pace with new workload types and are causing organisations to seek alternative solutions.

“It wasn’t too long ago that the main data exchanges going through a server room were email and file storage processes, where 2-5kW racks were often sufficient. But as technology has grown, so have the pressures and demands placed on the data centre. Now, we’re seeing data centres equipped with 10-12kW racks to better cater for modern-day requirements, with legacy data centres falling further behind.

“IoT, social media, and the number of personal devices now accessing data are just a handful of the factors pushing up demand for compute power and energy, which is placing further pressure on legacy server rooms within the enterprise. As a result, more organisations are now shifting to cloud-based services, dominated by the likes of Google and Microsoft, and to colo facilities. This trend is not only reducing carbon footprints but also ensuring that the environments organisations are buying into are both energy efficient and equipped for higher server processing demands.”

IDC’s latest report, ‘Worldwide Datacenter Census and Construction 2014-2018 Forecast: Aging Enterprise Datacenters and the Accelerating Service Provider Buildout’, claims that while the industry is at a record high of 8.6 million data centre facilities, there will be a significant reduction in server rooms after this year. This is due to the growth and popularity of public cloud-based services, run by large hyperscalers including AWS, Azure and Google, whose estate is expected to grow to 400 hyperscale data centres globally by the end of 2018. Roel continued:

“While server rooms are declining, this won’t affect the data centre industry as a whole. The research identified that data centre floor space is expected to grow to 1.94bn square feet, up from 1.58bn in 2013. And with hyperscale and colo facilities offering new services in the form of high-performance compute (HPC) and Open Compute Project (OCP), more organisations will see the benefits in having more powerful, yet energy efficient IT solutions that meet modern technology requirements.”

Migration to Windows 10 is expected to be faster than previous operating system (OS) adoption, according to a survey by Gartner, Inc. The survey showed that 85 percent of enterprises will have started Windows 10 deployments by the end of 2017.

Between September and December of 2016, Gartner conducted a survey in six countries (the U.S., the U.K., France, China, India and Brazil) of 1,014 respondents who were involved in decisions for Windows 10 migration.

"Organizations recognize the need to move to Windows 10, and the total time to both evaluate and deploy Windows 10 has shortened from 23 months to 21 months between surveys that Gartner did during 2015 and 2016," said Ranjit Atwal, research director at Gartner. "Large businesses are either already engaged in Windows 10 upgrades or have delayed upgrading until 2018. This likely reflects the transition of legacy applications to Windows 10 or replacing those legacy applications before Windows 10 migration takes place."

When asked what reasons are driving their migration to Windows 10, 49 percent of respondents said that security improvements were the main reason for the migration. The second most-often-named reason for Windows 10 deployment was cloud integration capabilities (38 percent). However, budgetary approval is not straightforward.

"Windows 10 is not perceived as an immediate business-critical project; it is not surprising that one in four respondents expect issues with budgeting," said Mr. Atwal.

"Respondents' device buying intentions have significantly increased as organizations saw third- and fourth-generation products optimized for Windows 10 with longer battery life, touchscreens and other Windows 10 features. The intention to purchase convertible notebooks increased as organizations shifted from the testing and pilot phases into the buying and deployment phases," said Meike Escherich, principal research analyst at Gartner.

Top-performing organizations in the private and public sectors, on average, spend a greater proportion of their IT budgets on digital initiatives (33 percent) than government organizations (21 percent), according to a global survey of CIOs by Gartner, Inc. Looking forward to 2018, top-performing organizations anticipate spending 43 percent of their IT budgets on digitalization, compared with 28 percent for government CIOs.

Gartner's 2017 CIO Agenda survey includes the views of 2,598 CIOs from 93 countries, representing US$9.4 trillion in revenue or public sector budgets and $292 billion in IT spending, including 377 government CIOs in 38 countries. Government respondents are segmented into national or federal, state or province (regional) and local jurisdictions, to identify trends specific to each tier. For the purposes of the survey, respondents were also categorized as top, typical and trailing performers in digitalization.

Rick Howard, research vice president at Gartner, said that 2016 proved to be a watershed year in which frustration with the status quo of government was widely expressed by citizens at the voting booth and in the streets, accompanied by low levels of confidence and trust about the performance of public institutions.

"This has to be addressed head on," said Mr. Howard. "Government CIOs in 2017 have an urgent obligation to look beyond their own organizations and benchmark themselves against top-performing peers within the public sector and from other service industries. They must commit to pursuing actions that result in immediate and measurable improvements that citizens recognize and appreciate."

Government CIOs as a group anticipate a 1.4 percent average increase in their IT budgets, compared with an average 2.2 percent increase across all industries. Local government CIOs fare better, averaging 3.5 percent growth, which is still more than 1 percentage point below the average IT budget growth among top-performing organizations overall (4.6 percent).

The data is directionally consistent with Gartner's benchmark analytics, which indicate that average IT spending for state and local governments in 2016 represented 4 percent of operating expenses, up from 3.6 percent in 2015. For national and international government organizations, average IT spending as a percentage of operating expenses in 2016 was 9.4 percent, up from 8.6 percent in 2015.

"Whatever the financial outlook may be, government CIOs who aspire to join the group of top performers must justify growth in the IT budget by clearly connecting all investments to lowering the business costs of government and improving the performance of government programs," Mr. Howard said.

Looking beyond 2017, Gartner asked respondents to identify technologies with the most potential to change their organizations over the next five years.

Advanced analytics takes the top spot across all levels of government (79 percent). Digital security remains a critical investment for all levels of government (57 percent), particularly in defense and intelligence (74 percent).

The Internet of Things will clearly drive transformative change for local governments (68 percent), whereas interest in business algorithms is highest among national governments (41 percent). All levels of government presently see less opportunity in machine learning or blockchain than top performers do. Local governments are slightly more bullish than the rest of government and top performers when it comes to autonomous vehicles (9 percent) and smart robots (6 percent).

The top three barriers that government CIOs report they must overcome to achieve their objectives are skills or resources (26 percent), funding or budgets (19 percent), and culture or structure of the organization (12 percent).

Drilling down into the areas in which workforce skills are lacking, the government sector is vulnerable in the domain of data analytics (30 percent), which includes information, analytics, data science and business intelligence. Security and risk is ranked second for government overall (23 percent).

"Bridge the skills gap by extending your networks of experts outside the agency," Mr. Howard said. "Compared with CIOs in other industries, government CIOs tend not to partner with startups and midsize companies, missing out on new ideas, skills and technologies."

The concept of a digital ecosystem is not new to government CIOs. Government organizations participate in digital ecosystems at rates higher than other industries, but they do so as a matter of necessity and without planned design, according to Gartner. Overall, 58 percent of government CIOs report that they participate in digital ecosystems, compared with 49 percent across all industries.

As digitalization gains momentum across all industries, the need for government to join digital ecosystems — interdependent, scalable networks of enterprises, people and things — also increases. "The digital ecosystem becomes the means by which government can truly become more effective and efficient in the delivery of public services," Mr. Howard said.

A look at recent developments in the delivery of IT Services

Tony Lock, Director of Engagement and Distinguished Analyst, Freeform Dynamics Ltd, April 2017

For much of the past decade, many vendors and researchers suggested that cloud would become the only sensible model for delivering most IT services. Indeed, in the early days of public cloud marketing they evangelised that it would be the dominant model. Today things have settled down, and the majority now accept that most organisations will consume IT services via a range of models, combining systems running inside their data centres as well as others taken from external clouds.

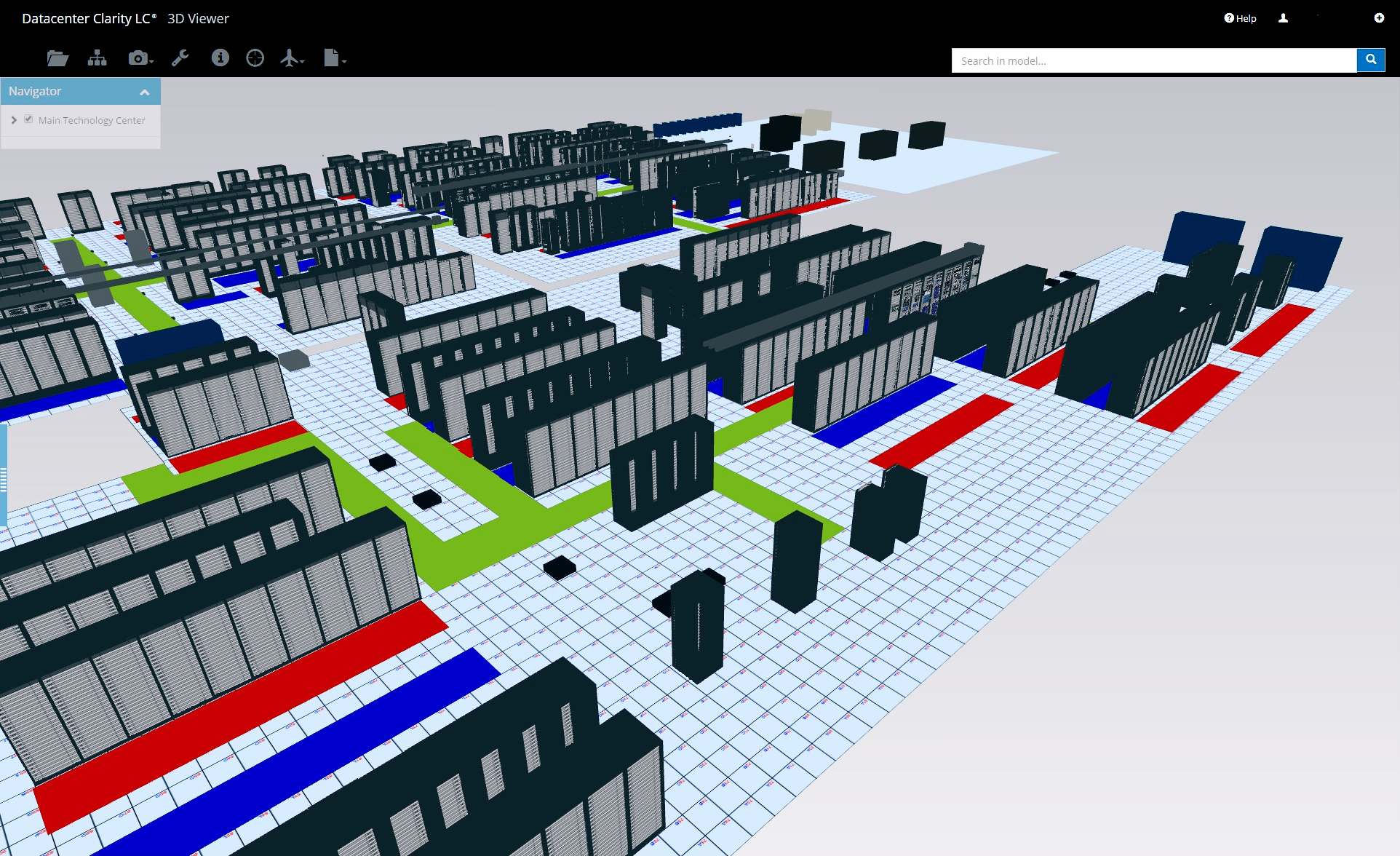

But how are enterprises transforming the way they deliver IT to their users, and what factors are driving that evolution? Recent research carried out by Freeform Dynamics (see http://www.freeformdynamics.com/fullarticle.asp?aid=1923 and http://www.freeformdynamics.com/fullarticle.asp?aid=1922) provides a good indication (Figure 1).

It is interesting to note that while much is said about the need for IT to become more responsive to rapidly shifting business requirements, a point few would dispute, a few other factors jump out. It is especially worth considering the “business alignment” results, as these point to some facets with the potential to dramatically improve IT’s responsiveness to business change, or even get ahead of it.

Adopting more collaborative relationships with business stakeholders is essential if IT, and hence the data centre, is to deliver new services rapidly, but it is subtly different from simply reacting to change requests quickly. And if it is combined with IT becoming more proactive, i.e. actually suggesting how IT can help the business move forwards, the potential impacts could be valuable.

One other point from the results also has the potential to modify how many projects will be financed and undertaken going forwards. Over half of the respondents taking part in the survey already see or foresee a shift away from big IT projects towards more continuous improvement models. For many, the days of massive projects taking years to show results are coming to an end. The way forward is to do little and often, always keeping the big picture in mind. This approach is helped by recent advances to make the core IT infrastructure inherently more flexible and rapidly reconfigurable, as ‘software defined’ IT becomes a reality.

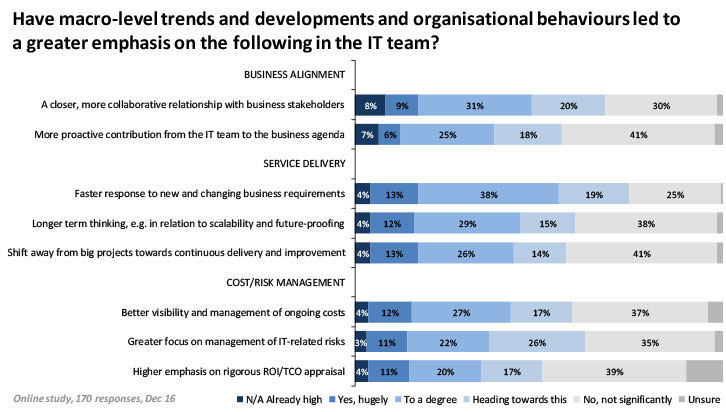

While there is plenty of talk about IT and the business working together more effectively, what IT projects are taking place? The answer, perhaps unsurprisingly, is that there are developments across both data centres and public cloud (Figure 2).

The results show a wide range of infrastructure project activity, despite the steady increase in expected life of individual items of hardware in data centres. Indeed, the ever-increasing dependence of nearly all business operations on IT requires more IT services to be available without interruption than in the past. This in turn makes itself felt in projects to modernise core data centre facilities such as power systems, power management and cooling.

It is worth noting the slow, but steady, implementation of virtual desktop solutions. In terms of user awareness, desktops, whether physical or virtual, are a focal point for users, who will notice should anything happen to impact service quality or availability. This is therefore yet another critical service that data centre managers must keep an eye on.

Overall, these research results reaffirm a key finding from many other studies executed by Freeform Dynamics in recent years, which is that while public cloud services are growing, the data centre is not heading for extinction. As ever in IT, pragmatism rules. Consequently, the public cloud is simply another delivery option to be considered in the light of all the relevant business, legal and IT requirements, and of how well it fits in with the corporate mind-set (Figure 3).

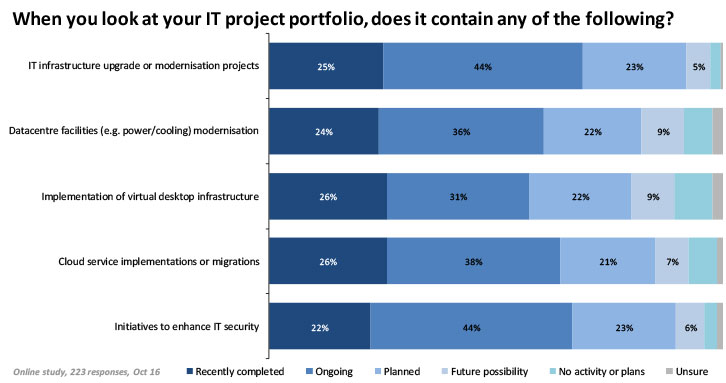

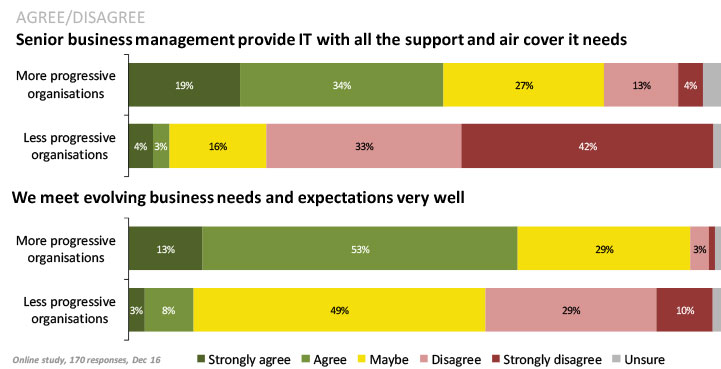

This pragmatism is well reflected by deeper analysis of the survey results, where an index of the progressiveness of organisations was built based upon the answers respondents provided to questions around their organisation’s personality/culture (including their attitude to investment, leadership style, and response to external events and developments).

The results revealed some interesting differences, with the more progressive organisations (those with an above average personality/culture score) being more committed in their use of various cloud and data centre solutions compared to the less progressive group. What is an eye-opener is the difference in scale of usage and commitment of more progressive organisations to almost every data centre and cloud solution listed.

Interestingly, the analysis also revealed the importance of executive support in the more progressive organisations in driving the fast and broad adoption of new IT technologies and, ultimately, overall performance in meeting evolving business needs and expectations (Figure 4).

The Bottom Line

The shape of IT infrastructure is changing faster than at any time in history. Data centres are being forced to evolve rapidly to keep up with increasing demands to house expanding numbers of systems, and to do so with an expectation that the systems will run 24x7, without fail. Getting the technology right is only part of building IT solutions that can drive the enterprise forwards. Communicating clearly and effectively with senior business management about why IT investments are valuable to them is just as important as offering proactive IT solution advice to business stakeholders.

By Steve Hone, CEO, DCA Data Centre Trade Association

Making predictions about the future is never easy, especially when it comes to technological advances or the impact these might have on the data centre sector as it attempts to keep up with demand and change.

We live in a fast-moving world whose insatiable appetite for digital services is growing and evolving at an alarming rate, leaving Moore’s Law in its wake. In this month’s edition of the DCA Journal, Dr Jon Summers’ article titled “standing on the shoulders of giants” touches on this very point. Contributions from Ian Bitterlin, David Hogg from ABM (formerly 8 Solutions) and Laurens Van Reijen from LCL all provide additional insight into what might lie ahead and the impact, both positive and negative, this could have on the data centre sector.

You would be wise not to ignore the past when peering into our crystal ball to predict what’s likely to be round the next corner. It’s also healthy to review some previous forecasts and predictions to see whether they proved correct or were wildly over- or underestimated; as the American author Robert Kiyosaki said, “If you want to predict the future, study the past.”

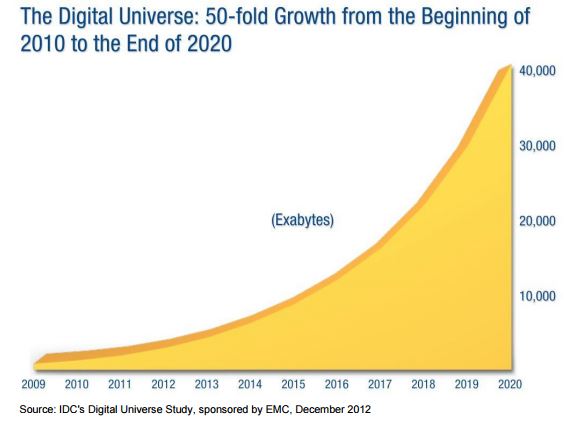

There is no denying that we are using far more digital services than we ever predicted. To put this into perspective, in 2012 an IDC Digital Universe Study*1, sponsored by EMC, calculated from historical data collected since 2010 that the world’s data usage would rise from a modest 10,000 exabytes to 40,000 exabytes by 2020. Well, a Cisco white paper*2 confirmed that we had reached and exceeded that forecast by January 1st 2016 in mobile data alone, so it’s anyone’s guess where we go from here! Up, and on a very steep curve, would be a very safe prediction! Remember, the concepts of ‘Smart Cities’ and the ‘Internet of Everything’ are only just warming up.

Now, I’m not suggesting for one minute that this explosion in demand for digital services is something to be frowned upon, or that we should try in some way to slow it down; quite frankly, any attempt to do so would be utterly futile. Now that the genie is out of the bottle, it’s simply unstoppable.

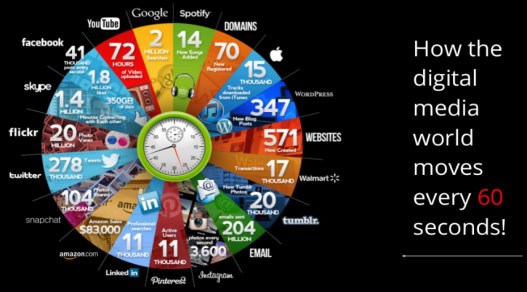

I was still in a highchair throwing food at my parents in 1969 when an American flag was first planted on the moon. That was nearly 50 years ago, and today it is reported that the same amount of computing power it took to put man on the moon can be found in my son’s Xbox 360! If you need even more statistics, take a look at the infographic below, produced in 2015: 2M Google searches and 204M emails sent every 60 seconds, together with over 4,300 hours of YouTube videos uploaded every 60 minutes. These figures are staggering, and remember that these numbers are now two years old and were compiled before the likes of On Demand TV, Netflix and Now TV streaming services kicked off.

It is only when you take these sorts of statistics into consideration that you realise how far we have travelled in such a short time, and why the future is proving so hard to predict! As uncomfortable as it may be, the undeniable fact is that we are now completely reliant on (and, if you take my kids as a good or bad example, utterly dependent on) the IT-based technology and online digital services we use every day without thinking twice about them.

We have also become completely intolerant when it fails us; you would think the world was about to end if you couldn’t get 3G or Wi-Fi. We expect access to these services 24x7xforever, and to make that happen an unbelievable amount of work goes on behind the scenes at an infrastructure level to ensure you are not let down.

The Data Centre Industry represents the beating heart of any digital infrastructure and is arguably now just as important to the health of our nation as water, gas and electricity, the supply of which is, ironically, controlled by servers located in data centres.

If the revised statistics coming out are to be believed, the demands on data centre operators, and the important role they play in supporting our digital world, are probably going to increase five times quicker than originally predicted. Like the Enterprise in Star Trek, life is now moving at warp speed and we need to find the right solutions to keep up with this voyage into the unknown.

The DCA plays a vital role as the Trade Association for the Data Centre Sector in ensuring the industry remains on the ball and fit for purpose. It was created with the express purpose of both supporting existing business leaders as they address the many challenges faced today and collaborating with suppliers on R&D, training and skills development programmes to ensure we meet future demand. This is a team effort and we are here to help.

Next month’s DCA Journal theme is Energy Efficiency, with a copy deadline of 16th May; this is followed by Education, Skill and Training, with a copy deadline of 13th June. If you would like to submit articles for either of these editions, please contact info@datacentrealliance.org; full details are on the DCA website http://data-central.site-ym.com/page/DCAjournal.

By David Hogg, Managing Director, ABM Critical Solutions

With the UK’s departure from the European Union approaching, much has been made of the ‘uncertainty’ that threatens to dog future trading relations.

But of all the challenges the data centre industry faces over the next few years, Brexit is, perhaps surprisingly, the least of our concerns. British firms and the UK Government are hopefully leaning towards pragmatism and avoiding unnecessary complexity. It is highly likely, therefore, that the UK will adopt the same data control laws as exist in the EU currently, meaning that there will be no difference for the major US tech players in where their data is stored.

It is true to say, of course, that restrictions on the free movement of labour may drive up the cost (and reduce the availability) of labour, but this tends to impact the lower skilled ‘commodity’ roles (e.g. within the hospitality sector) and will therefore have little or no impact on data centres.

Indeed, most of our predictions for the future are likely to have a positive impact on the data centre industry of tomorrow. Consolidation of data centres, for example, continues apace, and this will include more pan-European deals as the market matures and clients expand. This in turn will sharpen the focus on achieving best practice.

Whilst there is yet to be a ‘one-size-fits-all’ set of standards, best practice levels have risen markedly across the UK in the past few years. Bodies such as the Data Centre Alliance have been key to promoting the need for best practice, complemented by the commercial imperative for the co-location segment to find a differentiator in order to attract new clients. The updated European standard for data centres (EN50600 – Information Technology – data centre facilities and infrastructures) will also drive an increase in best practice adoption rates.

Following on from this, the increasing density of IT equipment is similarly prompting closer attention to performance against best practice. As a case in point, ABM Critical Solutions recently upgraded one of its clients’ data centres (the customer is a major high street retailer) following the client’s investment in new IT. The retailer is now able to generate the same IT computing power in only 25% of the space previously occupied by its ageing IT infrastructure.

Best practice is similarly bringing insurance into focus and driving down the cost of premiums. There is already evidence that insurance companies recognise that data centres adopting best practice inherently carry less risk, and adjust premiums accordingly. Allianz, for example, appears to be taking the lead in this area, and we predict that others are likely to follow once the idea fully takes hold.

From a technology perspective, ‘Edge’ data centres will become increasingly important as high-speed networks such as 5G are rolled out (5G is currently expected in 2020). We predict a real growth opportunity in this type of facility, especially where the big data centre players don’t have a presence, or there is demand from a specific market niche. The Internet of Things (IoT), Virtual Reality (VR) and 5G will lead to a massive growth in the world’s data centre volume, and a key opportunity for data centre providers and service companies alike.

We also predict a period of evolution in the way services to data centres will be delivered. There is already an increased demand for companies to provide a full complement of services based around a core expertise or skilled workforce. This is being driven, in part, by a desire by data centre operators to manage a smaller number of external suppliers, wherever possible, to reduce costs.

By way of example, ABM Critical Solutions is now using the same teams that complete its technical cleans to also undertake simple, additional tasks such as cleaning CRAC units and changing filters. AC engineers are expensive, and this allows their time on site to be more productively utilised in areas where their skills can be better deployed. In this way, service providers will be able to deliver greater value, while supporting their clients’ need for greater operational efficiency.

By Ian Bitterlin, Consulting Engineer & Visiting Professor, Leeds University

Most of us like a bit of speculation and making lists, so this month, when asked for ‘predictions’, I have decided to make a list of my top three.

The first in my list, ‘in no particular order’, concerns ASHRAE and their Thermal Guidelines for microprocessor-based hardware. We, the data centre industry in Europe, are very lucky to have ASHRAE. OK, they are a purely North American trade association that serves its members, but they are the sole global source for the limits of temperature, humidity and air quality for ICT devices, and have proven themselves to be far more progressive for the environmental good than anyone could have expected. If you follow their guidelines from the first to the latest, you could be saving more than 50% of your data centre power consumption, since the members of TC9.9, who include all the ICT hardware OEMs, have consistently and regularly updated their Thermal Guidelines, widening the temperature/humidity window to enable drastic improvements in cooling energy. So, what is my prediction? Well, it certainly is not that they will make the same improvements in the future that they have made in the past, since the latest iteration already allows server inlet temperatures warmer than most ambient climates where people want to build data centres, and requires almost zero humidity control. My prediction is, in fact, that the conservative nature of our data centre users will keep the constant lag in ASHRAE adoption at a lackadaisical and slightly unhealthy five years. What I mean by that is simple: the 2011 ‘Recommended’ Guidelines are, in 2017, just about accepted by mainstream users as ‘risk free’, whilst many users still regard the 2011 ‘Allowable’ limits as avant-garde. So, I predict that ‘no humidity control’ and inlet temperatures of 28-30°C will be mainstream by 2022…
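To make the gap between ‘Recommended’ and ‘Allowable’ concrete, here is a minimal sketch that classifies a server inlet temperature against two dry-bulb envelopes. The figures (18-27°C recommended, 15-32°C allowable for class A1) are our reading of the 2011 ASHRAE Thermal Guidelines and should be checked against the current edition; humidity limits are ignored, and the function name `classify_inlet` is purely illustrative.

```python
# Dry-bulb server inlet envelopes (°C), as we understand the 2011 ASHRAE
# Thermal Guidelines. Assumption: verify against the edition you rely on.
RECOMMENDED = (18.0, 27.0)
ALLOWABLE_A1 = (15.0, 32.0)


def classify_inlet(temp_c: float) -> str:
    """Return which envelope an inlet temperature falls within.

    Hypothetical helper: checks temperature only, not humidity or dew point.
    """
    lo, hi = RECOMMENDED
    if lo <= temp_c <= hi:
        return "recommended"
    lo, hi = ALLOWABLE_A1
    if lo <= temp_c <= hi:
        return "allowable (A1)"
    return "outside A1"


for t in (22.0, 27.0, 28.0, 30.0, 35.0):
    print(t, classify_inlet(t))
```

Under these assumed limits, the 28-30°C inlet temperatures predicted to become mainstream by 2022 sit in the ‘Allowable’ band rather than the ‘Recommended’ one, which is exactly the territory the column describes as still regarded as avant-garde in 2017.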

The second prediction in my trio concerns the long-forecast, but now clearly closer, demise of Moore’s Law. When Gordon Moore, chemical engineer and co-founder of Intel, wrote his Law, it was clear to him that the photo-etching of transistors and circuitry into silicon wafer strata doubled in density every two years. That was soon revised by his own company to a doubling of capacity every 18 months to account for increasing clock speed and, more recently, by Raymond Kurzweil (sometimes nominated as the successor to Edison) to 15 months when considering software improvements. It lost its simple ‘transistor count per square mm’ basis long ago, but Koomey’s Law took up the baton and converted the 18 months’ capacity doubling to computations per Watt. Effectively, that explains why it is so beneficial to refresh your ICT hardware every 30 months (or less) and more than halve your power consumption for the same ICT load. For a little visualisation experiment in ‘halving’, take a piece of paper of any size and fold it in half, and again, and again... You will not get to seven folds, since you will have reached the physical limit.
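The refresh argument is simple compounding. The sketch below assumes, as the paragraph does, a fixed 18-month doubling period for computations per Watt, and works out what fraction of the original power a 30-month-old estate would need after a like-for-like refresh. The function names are illustrative, not from any library.

```python
def efficiency_gain(months: float, doubling_months: float = 18.0) -> float:
    """Factor by which computations-per-Watt grows over `months`,
    assuming a constant doubling period (a Koomey-style trend)."""
    return 2.0 ** (months / doubling_months)


def power_fraction_after_refresh(months: float, doubling_months: float = 18.0) -> float:
    """Fraction of the original power needed for the same ICT load
    after replacing hardware that is `months` old."""
    return 1.0 / efficiency_gain(months, doubling_months)


frac = power_fraction_after_refresh(30)
print(f"30-month refresh: {frac:.1%} of original power")  # 31.5%

# The paper-folding analogy: each fold doubles the number of layers.
for folds in range(1, 8):
    print(folds, 2 ** folds)
```

A 30-month refresh at an 18-month doubling period gives roughly a 3.2x efficiency gain, so the same load needs about a third of the power, comfortably "more than halving" it. The folding loop shows the same doubling from the other side: seven folds would mean 128 layers.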

So why have data centres been growing if Moore’s Law and its derivatives have been providing a >40% capacity compound annual growth rate (CAGR)? The explanation is simple: our insatiable hunger for IT data services (notably including social networking, search, gaming, gambling, dating and any entertainment based on HD video, such as YouTube and Netflix et al) has been growing at 4% per month compounded (close to 60% per year) for the past 15 years. The delta between Moore’s Law’s 40-45% and the data traffic rise of 60% gives us the 15-20% growth rate in data centre power. The problem comes when Moore’s Law runs out, which it surely will with silicon as the base material, as then we will have to manage 60% traffic growth per year without any assistance from the technology curve. Moore’s Law probably has 5 years left without a paradigm shift away from silicon (to something like graphene), but that is unlikely to happen ‘in bulk’ within the 5-year time frame. Looking at one of the major internet exchanges in Europe shows that peak traffic is running at 5.5Tb/s with reported capacity at 12Tb/s, but if we consider even a slight slowing of the annual growth rate to 50%, then it will be less than 2 years before peak traffic is pushing the present capacity limits. I predict a couple of years of problems during the dual event of a paradigm shift away from silicon and a sea change in network photonics capacity.
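The three pieces of arithmetic in this paragraph can be checked with a short sketch. It compounds the 4% monthly traffic growth into an annual rate, estimates power growth from traffic growth and efficiency growth, and computes how long peak traffic takes to reach capacity. One assumption worth flagging: the column reaches 15-20% by subtracting the rates, whereas this sketch divides the growth factors (compounding), which gives a somewhat lower 10-14%. Either way data centre power keeps rising. Peak and capacity are entered as plain numbers in the same units; all function names are illustrative.

```python
import math


def annual_from_monthly(monthly_rate: float) -> float:
    """Compound a monthly growth rate into an annual growth rate."""
    return (1.0 + monthly_rate) ** 12 - 1.0


def power_growth(traffic_rate: float, efficiency_rate: float) -> float:
    """Annual growth in power if traffic grows at `traffic_rate` while
    work-per-Watt improves at `efficiency_rate` (ratio of growth factors)."""
    return (1.0 + traffic_rate) / (1.0 + efficiency_rate) - 1.0


def years_to_capacity(peak: float, capacity: float, annual_rate: float) -> float:
    """Years until peak traffic, growing at `annual_rate`, reaches capacity."""
    return math.log(capacity / peak) / math.log(1.0 + annual_rate)


print(f"4%/month -> {annual_from_monthly(0.04):.0%} per year")
for eff in (0.40, 0.45):
    print(f"efficiency +{eff:.0%}/yr: power grows {power_growth(0.60, eff):.1%}/yr")
print(f"peak 5.5 vs capacity 12 at 50%/yr: {years_to_capacity(5.5, 12.0, 0.50):.2f} years")
```

The first line confirms that 4% a month compounds to about 60% a year, and the last gives roughly 1.9 years at 50% annual growth, consistent with the "less than 2 years" estimate.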

The last of my trio of predictions concerns the reuse of waste heat from data centres and is simply stated as: By 2027 waste heat will not be ‘wasted’ from a huge array of ‘edge’ facilities and they will become close to net-zero energy consumers. From my perspective, there is a gathering ‘perfect storm’ of drivers that will converge to drive infrastructure designers to liquid-based cooling:

The solution is simple and within our grasp today – liquid cooling of the heat-generating components, particularly the microprocessors. With liquid-immersed or encapsulated hardware and heat exchangers delivering water at 75-80°C into a local hot-water circuit with 94% efficiency, the data centre will have a net power draw of just 6%. Just five cabinets (a micro-data centre by today’s definition), equivalent to 80x today’s ICT capacity, will be able to offer the building 100 kW of continuous hot water. Consider embedded 100 kW micro-facilities in offices, hotels, sports centres, hospitals and apartment buildings. Indeed, could this be the ‘major’ future? Could giant, remote, air-cooled facilities become obsolete? Probably not for twenty years, but then…
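The energy balance behind the 6% figure can be sketched as follows, assuming (as the text does) that 94% of the electrical input is recovered as useful hot water. The even per-cabinet split is an illustrative assumption.

```python
def electrical_input_kw(heat_output_kw: float, recovery_efficiency: float) -> float:
    """Electrical power needed to deliver `heat_output_kw` of usable hot water."""
    return heat_output_kw / recovery_efficiency


def net_draw_kw(electrical_kw: float, recovery_efficiency: float) -> float:
    """Electrical power not recovered as useful heat (the 'net' draw)."""
    return electrical_kw * (1.0 - recovery_efficiency)


if __name__ == "__main__":
    efficiency = 0.94  # heat-recovery efficiency quoted in the text
    heat_kw = 100.0    # continuous hot water offered to the building
    cabinets = 5       # assumes load is spread evenly across cabinets
    p_in = electrical_input_kw(heat_kw, efficiency)
    print(f"Electrical input: {p_in:.1f} kW ({p_in / cabinets:.1f} kW per cabinet)")
    print(f"Net draw: {net_draw_kw(p_in, efficiency):.1f} kW ({1 - efficiency:.0%} of input)")
```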

By Dr Jon Summers, University of Leeds

How much do you trust the weather predictions for tomorrow? If you are observant, you may have noticed that such predictions have improved over time. This is in fact a direct consequence of Moore’s law, which you have no doubt heard much about; suffice it to say that it has been a self-fulfilling prophecy for the successful growth of the ICT industry for nearly 50 years. Weather predictions become more accurate with faster supercomputers, which can then complete their predictions in time for the broadcast weather forecast.

Talking about Moore’s law and making predictions and forecasts, it is interesting to ask whether there are physical limits that prevent Moore’s law from continuing as it has done for many decades. Recently, the academic and technical literature has abounded with indications that manufacturing transistors with gate lengths of only a couple of atoms is limited by two main factors: fabrication cost and quantum effects. The former is likely to be the main limitation, as indicated in the 2016 Nature News article “The chips are down for Moore’s law”, which included the quote “I bet we run out of money before we run out of physics”.

In 1961, IBM employee Rolf Landauer published a paper that highlighted a relationship between energy and information that, amongst other things, reinforced the point that digital information is not ethereal. The paper implied that there is an ultimate minimum amount of energy dissipated (as heat) in a transistor (switch) at room temperature, about 3 zeptojoules (3 × 10⁻²¹ joules), which is due to the erasure of information as part of the logical steps in digital processes; this led to the notion of the “physics of forgetting”. This minimum energy became known as the Landauer limit, but if information is never erased it would theoretically be possible to build switches that are not bound by this limit. This was discussed in 1973 by a colleague of Landauer, Charles Bennett, who suggested that if computer logic were made reversible, so that information could flow both ways without digital erasure, it would be possible to compute with far less energy. This was recently achieved in the laboratory using what is called an adiabatically clocked microprocessor.
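The 3 zJ figure follows from the expression k_B T ln 2 (Boltzmann's constant times absolute temperature times the natural logarithm of 2), the minimum energy per bit erased. A quick check, assuming room temperature is about 300 K; the erasure rate in the second line is purely hypothetical.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # exact value in the 2019 SI definition


def landauer_limit_joules(temperature_k: float) -> float:
    """Minimum energy dissipated when one bit of information is erased: k_B * T * ln 2."""
    return BOLTZMANN_J_PER_K * temperature_k * math.log(2)


if __name__ == "__main__":
    e_bit = landauer_limit_joules(300.0)  # roughly room temperature
    print(f"Landauer limit at 300 K: {e_bit:.2e} J ({e_bit / 1e-21:.2f} zJ)")
    # Hypothetical workload: minimum power to erase 1e18 bits per second at this limit
    print(f"Erasing 1e18 bits/s costs at least {e_bit * 1e18 * 1e3:.2f} mW")
```

Even at a very high erasure rate the theoretical floor is milliwatts, which shows how far today's switches operate above the limit.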

The question you may be asking yourself now is how the demise of Moore’s law will affect data centres. The answer is probably not much, since heat removal and power requirements will continue to be an issue for facility management, but it is worth trying to understand how ICT may change as we march into the next decade. The literature describes a number of interesting developments in building a replacement for the Field Effect Transistor (FET), the switch that creates the logic necessary for processing digital information. The immediate idea with today’s technology is to keep heat dissipation down while continuing to increase transistor count by lowering voltages, introducing three-dimensional features, switching at lower speeds and making use of new materials. There is also a range of approaches being pursued in the laboratory, namely computers that use reversible logic, superconducting switches, quantum processes, approximation and neuromorphic processes, but these are not ready for mainstream data centres. However, the issue of dark silicon, i.e. the part of a “silicon chip” that cannot be powered at the same time as other parts, is likely to grow. In effect this already happened when multicore microprocessors were introduced in 2005, but rather than adding more “general purpose” processing cores, future chips will include cores specific to certain functions, such as encoding, encryption and compression, a development that is already occurring in the smartphone. ICT hardware is likely to become heterogeneous, and application software development will then become the main focus for energy efficiency.
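Why dark silicon grows can be illustrated with a deliberately simplified model (an assumption for illustration, not a result from the literature cited above): the chip power budget is fixed, transistor count doubles each generation, but power per transistor falls by only about 1/1.4 per generation once supply voltage stops scaling. The parameter values are hypothetical defaults.

```python
def active_fraction(generations: int, transistor_growth: float = 2.0,
                    power_per_transistor_scale: float = 1 / 1.4) -> float:
    """Fraction of transistors that can switch at once under a fixed chip power budget.

    Assumes the chip is fully usable at generation 0, transistor count grows by
    `transistor_growth` per generation and per-transistor power shrinks by
    `power_per_transistor_scale` per generation (a simplified post-Dennard model).
    """
    power_if_all_on = (transistor_growth * power_per_transistor_scale) ** generations
    return min(1.0, 1.0 / power_if_all_on)


if __name__ == "__main__":
    for gen in range(5):
        frac = active_fraction(gen)
        print(f"Generation {gen}: {frac:.0%} active, {1 - frac:.0%} dark")
```

Setting `power_per_transistor_scale=0.5` (classic Dennard scaling, where power density stays constant) keeps the whole chip usable, which is why dark silicon only became a concern once voltage scaling stalled.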

Our ability to make predictions based on scientific theory has only been made possible by the development of calculating machines, but predicting how ICT will develop in the future may be a question that we really need to ask the machines themselves.

“If I have seen further, it is by standing on the shoulders of giants” was the phrase that Isaac Newton used in a letter to his rival, Robert Hooke, in 1675.

LCL Survey of Belgian Companies

By Laurens van Reijen, Managing Director of LCL Belgium

Belgium's listed companies have a false sense of security when it comes to data storage. 97% do not test power back-up systems and 50% plan to outsource activities. CIOs and IT managers of listed companies incorrectly assume that their corporate data is stored safely and securely. According to a survey of Belgian listed companies carried out by LCL Data Centers, they underestimate risks such as power cuts and fire, they fail to test their protective systems and they do not invest sufficiently in redundancy.

The survey of Belgian quoted companies commissioned by LCL shows that data security is not seen as essential within IT governance, not even at quoted companies. For instance, with only one data center you risk losing absolutely all your data in the event of a disaster. When your power shuts down, your company does too. If you really want to be safe, the two data centers should be at least 30 km apart. Moreover, best practice dictates separating the development environment from the production systems.

The CIOs and IT managers of 168 Belgian quoted companies took part in the survey. Of these companies, 87.5% felt they were protected from disasters such as fire or lengthy power cuts. Surprisingly, these respondents said that this was ‘because power cuts rarely happen’. Having a disaster recovery service also added to their sense of security. Just 5% of respondents indicated that their organization was ‘reasonably protected’, while 7.5% said that their organization had inadequate protection. This final group stated that in the event of a disaster it would not be possible to guarantee the continuity of the organization.

However, when asked whether their systems are also tested by switching off the electricity supply, only 3% of respondents answered yes. This means that a full 97% of respondents will effectively ‘test’ their backup systems for the first time when a disaster occurs.

“Our conclusion is that Belgian listed companies have a false sense of security,” Laurens van Reijen, LCL's Managing Director, said. “Many of the smaller listed companies, and some of the larger organizations, are not adequately equipped to deal with power cuts or other risks. They don't even know how well-protected their systems are, as they don't test their power backup systems. All organizations, and quoted companies in particular (in the context of corporate governance), should have all the protective systems they need to guarantee that the servers are dependable 24 hours a day, 7 days a week and they should actually test these systems on a regular basis.”

More than half of the listed companies store their data internally at the head office. One tenth of them rely on their own server room or a data center at another location owned by the company. A total of 44% of the respondents have a server room that is less than 5 m² in area. In this kind of set-up it is clearly impossible to include appropriate protective measures or specialized staff.

Most of the respondents (53%) do not have a second data center, which means they have no backup in case of fire or theft of the servers. At the same time, half of the listed companies included in the survey have plans to outsource activities. At one third of the quoted companies that do have a second data center, it is located less than 25 km from the first. A major power cut is therefore likely to affect both data centers, which means the back-up plan will not be very effective.
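A quick way to check two sites against the 30 km rule of thumb is the great-circle (haversine) distance between their coordinates. This is a minimal sketch; the city-centre coordinates are approximate and purely illustrative, and straight-line distance says nothing about shared power grids, flood plains or fibre routes.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def distance_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points given in decimal degrees (haversine)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def far_enough(site_a: tuple, site_b: tuple, min_km: float = 30.0) -> bool:
    """True if two (lat, lon) sites are at least `min_km` apart in a straight line."""
    return distance_km(*site_a, *site_b) >= min_km


if __name__ == "__main__":
    brussels = (50.85, 4.35)  # approximate city-centre coordinates
    antwerp = (51.22, 4.40)
    d = distance_km(*brussels, *antwerp)
    print(f"Brussels-Antwerp: {d:.0f} km, meets 30 km rule: {far_enough(brussels, antwerp)}")
```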

“And yet business continuity is a must for virtually every business today,” Laurens van Reijen added. “The rise of digital technology has led to more and more business processes being digitized. Digital technology is being adopted in new, disruptive business models more than ever before, and these business models are thus dependent on the availability of the IT infrastructure. Shutting down servers in order to carry out maintenance work is no longer an option, as customers also need to be able to visit the website at night to submit orders. And as we have seen recently in Belgium at Delta Airlines, Belgocontrol and the National Register, a server breakdown can cause serious problems.”

“What are the odds that the current mentality – we all trust that all will go well – will change in the short term? Only a minority of the companies interviewed said they were planning to set up a second data center. If we really want change, it will have to be directed by the Belgian stock exchange control body: FSMA. So in the best interest of our Belgian quoted companies, for the sake of their business continuity and employment – not to mention the shareholders who want a return on their investment, since data loss will almost certainly cause share devaluation – we call upon FSMA to issue a new guideline for quoted companies: a guideline pushing quoted companies to have a second data center, and either to thoroughly test all back-up systems, including power backup, or to entrust this to a party that does just that for them. It’s a pain in the lower back part, but people will not move unless they have to,” Laurens van Reijen concluded.

LCL has many years' experience and know-how in data centers and colocation. The company has three independent data centers: in Brussels East, Brussels West and Antwerp. The Belgian IDC-G member is ideally located in the center of Europe. At 4 milliseconds from Amsterdam and 5 milliseconds from London and Paris in terms of round-trip latency, LCL is a vital link in IDC-G’s international data center network.
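Round-trip latency puts a hard upper bound on how far apart two sites can be by fibre. A minimal sketch, assuming light travels roughly 200 km per millisecond in glass and ignoring switching, serialisation and queuing delays (so real fibre paths are shorter than the bound):

```python
FIBRE_KM_PER_MS = 200.0  # light in glass covers roughly 200 km per millisecond


def max_fibre_km(round_trip_ms: float) -> float:
    """Upper bound on one-way fibre path length implied by a round-trip time.

    Ignores switching, serialisation and queuing delays, so real paths are shorter.
    """
    return (round_trip_ms / 2.0) * FIBRE_KM_PER_MS


if __name__ == "__main__":
    for city, rtt in (("Amsterdam", 4.0), ("London", 5.0), ("Paris", 5.0)):
        print(f"{city}: {rtt} ms RTT -> at most {max_fibre_km(rtt):.0f} km of fibre")
```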

LCL has clients in a wide range of sectors. Multinationals and small and medium-sized enterprises, government bodies, internet companies and telecom operators all call upon the services of LCL. The company is ISO 27001 and Tier 3 certified. LCL is also firmly committed to sustainability and is ISO 14001 certified.

Laurens van Reijen, LCL’s CEO, is a seasoned data center professional. He was a founder and Operations Director at Eurofiber before founding data center company LCL in 2002.

For more information:

http://www.lcl.be

How the Cloud changes storage

By John Kim, Chair, SNIA Ethernet Storage Forum.

Everyone knows cloud is growing. According to analysts, cloud and service providers consumed between 35% and 40% of servers in 2016, while enterprise data centers consumed 60-65%. By 2018, cloud providers will deploy more servers each year than enterprises.

This trend has challenged traditional storage vendors because more storage has also moved to the cloud each year, following the servers and applications. But it is also challenging storage customers (the IT departments who buy and manage storage), because they are expected to offer the same benefits as cloud storage at the same price.

The appeal of cloud storage is four-fold:

1) Price: Cloud storage might be cheaper than on-premises storage, as public cloud providers leverage economies of scale and frequently lower prices.

2) Rapid deployment: Application users can rent cloud storage capacity in a few hours, using a credit card, whereas traditional enterprise storage often requires weeks to acquire, provision and deploy.

3) Flexibility and automation: Cloud allows rapid increases or decreases in the amount and performance of storage, with no concerns about hardware management or refreshes, while changes and monitoring can be automated with scripts or management tools.

4) Cost structure: Cloud storage is billed as a monthly operating expense (OpEx) instead of an upfront capital expense (CapEx) that turns into a depreciating asset. You only pay for what you use and it’s typically easy to charge storage costs to the application or department using it.
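The OpEx versus CapEx trade-off can be made concrete with a simple break-even calculation. This is a sketch with entirely hypothetical prices; it ignores depreciation, hardware refreshes, cloud price cuts and the fact that pay-as-you-go capacity can shrink when it is not needed.

```python
from typing import Optional


def cumulative_cloud_cost(months: int, monthly_fee: float) -> float:
    """Total pay-as-you-go spend after `months`."""
    return months * monthly_fee


def cumulative_onprem_cost(months: int, upfront: float, monthly_running: float) -> float:
    """Upfront CapEx plus ongoing running costs (power, support, admin) after `months`."""
    return upfront + months * monthly_running


def break_even_month(upfront: float, monthly_running: float, monthly_fee: float,
                     horizon: int = 120) -> Optional[int]:
    """First month in which on-premises is cheaper in total, or None within `horizon`."""
    for m in range(1, horizon + 1):
        if cumulative_onprem_cost(m, upfront, monthly_running) < cumulative_cloud_cost(m, monthly_fee):
            return m
    return None


if __name__ == "__main__":
    # Hypothetical figures, for illustration only
    month = break_even_month(upfront=60_000, monthly_running=800, monthly_fee=2_500)
    print(f"On-premises becomes cheaper after month {month}")
```

If the hardware would be refreshed before the break-even month, the cloud option wins on these assumptions.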

Despite this appeal, many enterprise users are reluctant to move all their storage to the public cloud, for various reasons. Security: they might not trust that their data will be sufficiently private or secure in the cloud. Regulations: government regulations might prevent them from using shared cloud infrastructure. Performance: they might have locally run applications that cannot get sufficient performance from remote cloud storage. (This can be resolved by moving applications to run in the same cloud as the storage.)

Other times, hardware is already purchased and the IT team strives to prove they can deliver on-premises storage solutions at a lower price than the public cloud. Either way, in the face of public cloud storage that is easy to consume and always falling in price, enterprise IT departments need to make storage cheaper and more flexible, either with a private cloud deployment or more efficient enterprise storage.

One way to “cloudify” the enterprise is software-defined storage (SDS). This separates the storage hardware from the software, and in some cases separates the storage control plane from the data plane. The immediate benefit is the ability to use commodity servers and drives to reduce storage hardware costs by 50%. Other benefits include increased agility and more deployment flexibility. You can choose different types and amounts of CPU, RAM, drives (spinning and/or solid-state) and networking for different projects, and refresh or upgrade the hardware on your own schedule instead of the storage vendor’s. If you buy some of the fastest servers and SSDs, they can be your fast block/database storage today with one SDS solution, then be converted to archive/object storage three years from now using a different SDS solution.

Some SDS solutions let you choose between scale-up, scale-out and even hyper-converged deployments, and you can deploy different SDS products for different workloads. For example, it’s easy to deploy one SDS product for fast block storage, a second for cheap object storage, and a third for hyper-converged infrastructure. Compared to traditional arrays, SDS products are more likely to be scale-out and based on Ethernet (rather than on Fibre Channel or InfiniBand), but there are SDS products that support nearly every kind of storage architecture, access protocol, and connectivity option.

Other SDS vendors include more automation, orchestration, monitoring and charge-back/show-back (granular billing) features. These make on-premises storage seem more like public cloud storage, though it’s important to note that many enterprise storage arrays have also been adding these types of management features to make their products more cloud-like.

The benefits of SDS are appealing but not “free”, because SDS requires integration and testing work. Achieving the five or six nines (99.999% or 99.9999% availability) desired for enterprise storage typically requires careful qualification and testing of many aspects, including server BIOS, drive firmware, RAID controllers, network cards and, of course, the storage software. Enterprise storage vendors do all this in advance with rigorous qualification cycles and develop detailed plans for each model that cover support, upgrades, parts replacement, etc.
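To put "five or six nines" in perspective, each availability level translates into a maximum downtime per year. A minimal sketch, assuming an average year of 365.25 days:

```python
MINUTES_PER_YEAR = 365.25 * 24 * 60  # average year, including leap years


def downtime_minutes_per_year(nines: int) -> float:
    """Maximum yearly downtime allowed by an availability of `nines` nines (5 -> 99.999%)."""
    unavailability = 10.0 ** (-nines)
    return MINUTES_PER_YEAR * unavailability


if __name__ == "__main__":
    for n in (3, 4, 5, 6):
        availability = 100.0 * (1 - 10.0 ** (-n))
        minutes = downtime_minutes_per_year(n)
        print(f"{availability:.4f}% -> {minutes:8.2f} min/year ({minutes * 60:7.1f} s)")
```

Five nines allows only about five minutes of downtime a year and six nines about half a minute, which is why every firmware and driver combination has to be qualified.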

This integration work makes the storage more reliable and easier to support and service, but it takes significant effort for an enterprise to do it all. It could easily require a few months of testing for the first rollout, followed by more months of testing every time the server model, software, drive model, or network speed changes. Cloud providers and very large enterprises can easily invest in hardware and software integration work, then amortize the cost across their thousands of servers and customers. The larger ones customize the hardware and software, while the huge Hyperscalers typically design their own hardware, software, and management tools from scratch. Enterprises need to determine whether the savings of SDS are worth the cost of integrating it themselves.

Customers who want the cost savings and flexibility of SDS without the testing and integration requirements often turn to SDS appliances or bundles created by server vendors and system integrators who do all the testing and certification work. These appliances may cost more to buy and be less open to hardware choices than a “raw” SDS solution that is 100% integrated by the end user, but they still cost less and offer more frequent hardware refreshes than a traditional enterprise storage array. For these reasons, SDS appliances offer a good solution to customers who want the benefits of SDS but don’t want to do their own testing and integration work.

In the end choosing between SDS and traditional enterprise arrays usually comes down to a tradeoff between time and money. SDS lets you save money on hardware by investing a lot of time up-front for qualification and testing, while traditional arrays cost more to buy but don’t require the upfront time investment. Generally speaking, larger customers find SDS more appealing than smaller customers, but choosing a pre-integrated SDS appliance—which can include hyper-converged or hypervisor-based solutions—can make SDS accessible and affordable to customers of any size.

For more perspective on how the cloud changes storage, see the following SNIA resources on Hyperscaler Storage at www.snia.org/hyperscaler

At times we focus so much on one specific topic and its nitty-gritty details that we miss the big picture: this is what people mean when they say someone can't see the wood for the trees. Today the IT world is impacted by the ongoing debate around cloud. People tend to visualise the cloud as a location on some sort of geographical map. The cloud can be within your datacentre or outside it, sometimes in an unspecified location. In reality the cloud is not a place, it’s a paradigm: it is a consumption model and an expectation of instant delivery. It opened everyone’s eyes to the possibilities for greater agility, automation, efficiency and simplicity. And those attributes can be found in all flavours of cloud.

By Fausto Vaninetti, SNIA Europe.

So should enterprises embrace private, public or hybrid cloud? The question is so strongly connected to datacentre technologies that one aspect is often forgotten: how will users reach their cloud services? The answer is easy: through the wide area network (WAN). This is why the debate around the alternative cloud deployment models should start from the wide area network options. It is also the reason the IT crew should be talking to the network team as a first step when approaching the cloud.

Nobody would even have considered building skyscrapers if Otis had not invented the safety elevator in the 1850s. That was a key enabling technology and also a differentiator among builders. When you look at cloud IT in a broader sense, it is apparent that users can access the required services in the cloud only by connecting via the WAN. You can have a fantastic application running in whatever form of cloud, but it will look pretty nasty if you have poor connectivity. Bandwidth and latency are clearly very important, but packet drops and security will also play a key role in keeping users happy.

In the past the most widely adopted solution for enterprise connectivity was to deploy a virtual private network on top of MPLS technology from a service provider. More recently, with the increasing adoption of the Internet as a viable enterprise WAN transport and the move of applications to either the public cloud (typically for SaaS) or hybrid cloud (typically for PaaS and IaaS), customers find themselves at the cusp of a WAN evolution. A top priority now becomes uncompromised application performance and availability regardless of the application type and how it is consumed. Customers expect the evolving landscape of WAN solutions to incorporate more and more of the WAN optimisation capabilities available on the market, ideally ones designed specifically for application and cloud-access optimisation. Bandwidth reduction, handling of packet drops and high jitter, high throughput even on long-distance links, encryption and application-specific quality of service are just a few features that come to mind. The focus should be on user experience, application performance, visibility, control and security. The success of a cloud service will strongly depend on how well and easily users are able to connect to it through the WAN.
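Why packet drops and long distances matter so much can be seen from the well-known Mathis approximation for the steady-state throughput ceiling of a single TCP flow: throughput ≈ (MSS / RTT) × (C / √p), with C ≈ √(3/2). This is a sketch; the model describes Reno-style congestion control, and modern stacks (and WAN optimisers) can do better, but the trend it shows is what those optimisations are fighting.

```python
import math

MATHIS_C = math.sqrt(3.0 / 2.0)  # constant for the simple periodic-loss model


def tcp_throughput_bps(mss_bytes: int, rtt_s: float, loss_rate: float) -> float:
    """Approximate throughput ceiling of one Reno-style TCP flow (Mathis model)."""
    return (mss_bytes * 8 / rtt_s) * (MATHIS_C / math.sqrt(loss_rate))


if __name__ == "__main__":
    mss = 1460  # typical Ethernet maximum segment size in bytes
    for rtt_ms in (10, 100):
        for loss in (0.0001, 0.01):
            mbps = tcp_throughput_bps(mss, rtt_ms / 1000, loss) / 1e6
            print(f"RTT {rtt_ms:3d} ms, loss {loss:.2%}: ~{mbps:6.1f} Mbit/s per flow")
```

A tenfold increase in round-trip time, or a hundredfold increase in loss, cuts the per-flow ceiling by a factor of ten, regardless of how much raw bandwidth the link has.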

Software-defined WAN solutions are becoming a hot topic these days, and they owe much of this momentum to the wide adoption of IT services from the cloud. Moreover, SD-WAN connectivity can be controlled with software living in the cloud, making the marriage even better. SD-WAN is a flavour of software-defined networking applied to long-distance connections outside the datacentre. Using SD-WAN technology, enterprises connect their branch offices to their datacentre networks and to the cloud across geographical distances.

Branch routers are incorporating WAN optimisation and SD-WAN capabilities, reducing the need for dedicated hardware appliances. Products in the Network Function Virtualisation Infrastructure category are also emerging as an appealing new approach for enterprises to combine multiple services (like security, load-balancing, WAN optimisation) on a single x86 server engine. WAN optimisation is also being included within data replication software tools, facilitating backup to a cloud environment.

Hybrid cloud is definitely in the spotlight as the ideal candidate for affordable, secure and flexible delivery of IT services. When combined with the ongoing trends of virtualisation, mobility, desktop virtualisation and analytics, this leads to an increase in bandwidth and management complexity. SD-WAN solutions seem to be the answer, driving a swift shift in enterprise WAN architectures where there is a need to unify management of WAN application performance across Internet, MPLS and cellular links. Integrated platform offerings are top of mind within large enterprises with complex networks and requirements, while virtualised solutions, as part of the broader NFVI approach, are considered best for simpler branch and datacentre environments.
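Unifying application performance across Internet, MPLS and cellular links usually comes down to a per-application path-selection policy. The sketch below is hypothetical and not any vendor's algorithm: it picks the cheapest link that meets an application's latency, loss and jitter targets, and falls back to the lowest-latency link when none qualifies. All class names, fields and measurements are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class LinkStats:
    name: str           # e.g. "internet", "mpls", "cellular"
    latency_ms: float
    loss_pct: float
    jitter_ms: float
    cost_per_gb: float  # relative transport cost


@dataclass
class AppPolicy:
    max_latency_ms: float
    max_loss_pct: float
    max_jitter_ms: float


def choose_link(links: Sequence[LinkStats], policy: AppPolicy) -> LinkStats:
    """Pick the cheapest link meeting the application's targets; else the lowest-latency link."""
    compliant = [
        link for link in links
        if link.latency_ms <= policy.max_latency_ms
        and link.loss_pct <= policy.max_loss_pct
        and link.jitter_ms <= policy.max_jitter_ms
    ]
    if compliant:
        return min(compliant, key=lambda link: link.cost_per_gb)
    return min(links, key=lambda link: link.latency_ms)


if __name__ == "__main__":
    # Hypothetical measurements for one branch office
    links = [
        LinkStats("mpls", latency_ms=30, loss_pct=0.0, jitter_ms=2, cost_per_gb=10.0),
        LinkStats("internet", latency_ms=45, loss_pct=0.5, jitter_ms=8, cost_per_gb=1.0),
        LinkStats("cellular", latency_ms=70, loss_pct=1.0, jitter_ms=15, cost_per_gb=5.0),
    ]
    voice = AppPolicy(max_latency_ms=50, max_loss_pct=0.2, max_jitter_ms=5)
    backup = AppPolicy(max_latency_ms=200, max_loss_pct=2.0, max_jitter_ms=50)
    print("voice ->", choose_link(links, voice).name)
    print("backup ->", choose_link(links, backup).name)
```

With these numbers, latency-sensitive voice stays on MPLS while bulk backup is steered to the cheaper Internet link, which is the kind of trade-off SD-WAN controllers automate.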

For organisations that are considering adopting some form of private, public or hybrid cloud, it would be a savvy move to start from the WAN. Just as elevators enabled skyscrapers, SD-WAN technology enables cloud IT. A famous song in the 70s suggested a lady was buying a “stairway to heaven”. Now may be the time for IT managers to buy an “elevator to cloud IT”.

A key revelation to some at the first European Managed Services and Hosting Summit in Amsterdam on 25th April was that, outside of the managed services industry, no-one is calling it that. With a strong focus on customers and how they engage with managed services, the event discussed how the model had become mainstream in the last year, and was now the assumed way of working for many industries.

Over 150 attendees from nineteen different European countries met to review the state of the market and the ways to take the industry forward. Bianca Granetto, Research Director at Gartner, set the scene with a keynote on how Digital Business redefines the Buyer-Seller relationship. In this she showed how customers are using more and more diverse IT suppliers, while still looking for a trust relationship with those suppliers, and that this process will continue in coming years. “The future managed services company will look very different from today’s,” she concluded.

This was reinforced by TOPdesk’s CEO Wolter Smit who, in a discussion on the new services model, said that MSPs were actually in the driving seat as the larger IT companies could not reach their level of specialisation. Dave Sobel, SolarWinds MSP’s partner community director, also pointed out that many of the existing IT services companies were decades old and, with management due for replacement, new thinking among the providers was inevitable.

The top trends affecting the market were outlined by several speakers, with IoT, user experience and smart machines within the list – and IoT will be profitable for suppliers, according to Dave Sobel, with the MSPs top of the list as beneficiaries.

IT Europa’s editor John Garratt highlighted the differences between the US and European managed services markets, with the US more focused on financial returns. Price was apparently less important to European customers, who were more focused on gaining control of their IT resources. Autotask’s Matthe Smit said that price indeed mattered less than a good supportive relationship. But, he said, fewer than half of providers actually measured customer satisfaction, and this would have to change.

If anyone was in any doubt of the impact of the new model, Robinder Koura, RingCentral’s European channel head, showed how cloud-based communications had pushed Avaya into bankruptcy, and the new force was cloud-based and more flexible.

Security was never going to be far from the discussions, and Datto’s Business Development Director Chris Tate shook up the meeting with some of the latest statistics on ransomware. MSPs are in the firing line in the event of an attack like this, and he gave some sound advice on responses and precautionary measures. Local MSP Xcellent Automatisering’s MD Mark Schoonderbeek also revealed how he launched new services using a four-layered security offering: “First we'll search for vendors through our existing partnerships. When we find a good product - we'll R&D it from a technical standpoint. If the product meets our quality standards we will roll out within our own production environment. Then we'll go to one of our best customers in a very early stage, we tell them it's a test-phase and we'll implement the service for free, but in return we want the customers feedback (what went well, what went not so well and what is the perceived value of the service that is offered). Then we'll make a cost calculation and ask the customer what the service is worth. We'll put a price on the product and deliver it fixed price. Next step is to sell the product to all existing customers.”

The impact of the new EU General Data Protection Regulation (GDPR) was starting, but there were many unknowns, not least how various regulators across Europe would react to the provisions, warned legal expert and partner at Fieldfisher, Renzo Marchini, while the opportunities and general strong confidence in the European IT market were illustrated by Peter van den Berg, European General Manager for the Global Technology Distribution Council (GTDC).

Finally, a well-received analysis of what was going on in the tech M&A sector showed attendees where to make their fortunes and how to do so quickly. Perhaps unsurprisingly the key to creating value within a company turns out to be generating highly repeatable revenues – which is what managed services is all about.

For further information on the European Managed Services and Hosting Summit visit www.mshsummit.com/amsterdam.

Many of the issues debated during the European Managed Services event will be further discussed at the UK Managed Services and Hosting Summit, which will be staged in London in September – www.mshsummit.com

The IoT is changing the workplace in many ways, altering working patterns, driving new cultural trends and even creating new job roles. Whether it’s influencing business decisions, enhancing organisational efficiency, or creating more informed employees, the technology is undoubtedly a disruptive force. However, to achieve its full potential, we must get the security dimension right. The expansion in data unfortunately presents more opportunities for cyber-attackers.

By Manfred Kube, Head of M2M Segment Marketing, Gemalto.

While there are understandable concerns over the impact of technology on jobs, we think the future is positive. The IoT is going to create more intelligent employees, allowing them to access huge volumes of data to make more informed decisions. They will need help in this task from advanced machine learning and Artificial Intelligence techniques, which will help make sense of the data and discover patterns. Furthermore, the IoT is going to make work more flexible, rendering the traditional office space increasingly obsolete.

The rise in IoT-enabled devices, such as smart glasses, wristbands and powerful tablets, will equip workers with the tools to perform better. Just imagine how much more successful an aircraft engineer would be wearing connected smart glasses, enabling information on the plane to be fed into their field of vision while they fix a problem. We are also seeing products such as industrial smart gloves emerge, which can speed up assembly line processes by enabling hands-free scanning and documentation of goods. The same principles apply to corporate leaders; think about how much more productive a boardroom meeting might be if directors had a constant stream of real-time data flowing to them from around the business which can be used to the company’s advantage.

The expansion in data can bring benefits for organizations, allowing them to better understand customers and make more informed decisions. Take banks as an example. The proliferation of connected devices means that financial institutions have more information at their disposal, allowing them to conduct more rigorous market analyses.

With IoT and M2M technology, banks can access data from across customers’ value chains. M2M sensors are set to enhance underwriting processes, since banks can better track the physical performance of individuals, the shipping of goods and manufacturing quality control. Better informed lending decisions are also possible, since powerful IoT sensors can monitor the condition of retail, agricultural and manufacturing businesses.

While we’re optimistic about the future of work, there is a major obstacle – and that’s getting the cyber security right. The IoT is going to lead to an expansion in data, while the rise of mobile working is going to place more pressure on company networks to deliver cloud-based systems. Vulnerabilities could allow hackers to cripple organizations, potentially seizing control of organizational AI systems and wreaking havoc. We’ve covered real life examples of this on our blog before.

With cybercrime projected to cause losses of $2 trillion by 2019, companies need to develop strong identity management systems, as well as deploying tools like encryption and tokenization to combat cybercriminals. In addition, businesses running IoT projects will have to do more to ensure the identity of their connected machines and the sensors they are attached to, and the integrity of the data they are producing. After all, if a business will be using this data to make informed business decisions, they had better be sure the data is correct.
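One common way to protect the integrity of sensor data is for each device to attach a message authentication code to every reading. The minimal sketch below uses Python's standard `hmac` module and assumes each device shares a secret key with the back end; secure key provisioning (often handled by a secure element) is out of scope, and the key and field names are hypothetical.

```python
import hashlib
import hmac
import json


def _canonical(reading: dict) -> bytes:
    """Serialise a reading deterministically so signer and verifier hash the same bytes."""
    return json.dumps(reading, sort_keys=True, separators=(",", ":")).encode()


def sign_reading(key: bytes, reading: dict) -> dict:
    """Attach an HMAC-SHA256 tag to a sensor reading."""
    tag = hmac.new(key, _canonical(reading), hashlib.sha256).hexdigest()
    return {"reading": reading, "hmac": tag}


def verify_reading(key: bytes, message: dict) -> bool:
    """Recompute the tag and compare in constant time; False means tampered or wrong key."""
    expected = hmac.new(key, _canonical(message["reading"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["hmac"])


if __name__ == "__main__":
    device_key = b"hypothetical-per-device-secret"  # in practice, provisioned securely
    msg = sign_reading(device_key, {"sensor_id": "temp-042", "celsius": 21.5, "ts": 1700000000})
    print("valid:", verify_reading(device_key, msg))
    msg["reading"]["celsius"] = 35.0  # simulate tampering in transit
    print("valid after tampering:", verify_reading(device_key, msg))
```

An HMAC shows that a reading came from a holder of the key and was not altered; including a timestamp, as here, also gives the back end a way to reject replayed readings.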

Clearly, the IoT is set to radically change the way we work, encouraging employees to make better use of data and pushing cyber security to the top of the agenda in the boardroom.

Hundreds of millions of cyber threats travel the internet every day, and businesses of all sizes are at serious risk. For example, did you know that between 2010 and 2014, successful cyber-attacks on businesses of all sizes increased by 144%? On top of this, the National Cyber Security Alliance reports that approximately 60% of all businesses that experience a loss due to a security breach go out of business within six months.

By Atif Ahmed, Vice President EMEA Sales, Cyren.

This is quite staggering, and clearly cyber-crime is big business: we’re talking about large-scale organised crime and billions of dollars to be made from corporate and personal data. And the Web is the primary highway for these attacks. Sophisticated phishing emails, malware and even spam target more than just servers and desktops; laptops, smartphones and tablets can also be the focus of a cyber-attack, from any location around the globe.

We recently conducted research to explore how smaller businesses are coping with escalating cyber threats: is it only the larger organisations that are being attacked, and how are small and medium-sized businesses bearing up? The research, conducted by Osterman Research and sponsored by Cyren in February 2017, surveyed IT and security managers at 102 UK companies with 100 to 5,000 employees. It found that security problems in small and medium-sized businesses are rampant: 75% of organisations surveyed reported a security breach or infection in the last 12 months, rising to 85% for businesses with 1,000 or fewer employees.

In terms of frequency, the average number of known breaches reported was 2.1. The threats rated of greatest concern were data breaches, ransomware, targeted attacks and zero-day exploits. Interestingly, ransomware infections were reported at twice the rate among organisations with fewer than 1,000 employees as among those with 2,500 to 5,000 employees (6% versus 3%).

The greatest security gaps, where IT managers’ level of concern most outstrips their evaluation of their own security capabilities, are in dealing with targeted and zero-day attacks. Data breaches, botnet activity and malicious insider activity were also cited. Only 19% of respondents said their web security inspects SSL traffic for threats.

The research also showed that IT managers are far more concerned about the cost of infection than the cost of protection. The initial cost of web or email security solutions, and their total lifecycle cost, ranked much lower as decision criteria than features such as ease of administration, visibility and advanced security protection (the top three criteria). The results also suggest that IT managers are far more concerned with stopping malware than with controlling employees’ web behaviour, the one exception being preventing access to pornography from business networks.

“Shadow IT” is a moderate concern for larger companies but a low priority for those with 1,000 employees or fewer, with only 9% of these considering it a concern. The largest organisations surveyed, with 2,500 to 5,000 employees, currently rate application control as the most important capability when evaluating new solutions, with 73% rating it extremely important. This compares with just 43% and 41% of organisations in the two smaller size categories.

Data Loss Prevention is widely used in the UK, ranking as the second-most-deployed capability among those evaluated for both web security (64%) and email security (62%). Fewer than 25% of organisations say they protect company-owned or BYOD mobile devices, and fewer than 30% of remote offices and guest Wi-Fi networks have gateway security. The vast majority of organisations rely on endpoint protection for travelling employees’ laptops and to protect web use at remote offices.

2017 has started with some major developments in cybersecurity. The UK’s National Cyber Security Centre opened its doors, and efforts to make UK businesses and citizens more aware of cybersecurity intensified. This is hardly surprising, as the UK and the rest of Europe are just 12 months away from tougher new cybersecurity regulations coming into force.

By Aaron Miller, Systems Engineering Manager, Palo Alto Networks.

So there have been plenty of opportunities to hear about cybersecurity in the media, and even in Parliament. But what’s the lie of the land as the half-year mark gets closer? Having attended major public and private security events, my prognosis is that the industry is becoming more mature and less manic, but some challenges still need to be addressed.

Cybersecurity vendor collaboration is becoming a real benefit for customers. The Cyber Threat Alliance (CTA), of which my company is a founding member, has brought more vendors into the fold to share vital threat intelligence and apply it to tackle cyber threats much more effectively. As a result, every major security vendor is now a member of the alliance, working together to help our joint customers with the challenges they face.

However, what is really groundbreaking about the alliance’s growth is that the CTA has committed itself to the ongoing development of a new, automated threat intelligence sharing platform. This could transform how threat intelligence sharing delivers a real, rather than theoretical, blow to threat actors and their exploits.

This new platform automates information sharing in near real time to solve the problems of isolated and manual approaches to threat intelligence. It organises threat information into “adversary playbooks” focused on specific attacks, increasing the value and usability of the threat intelligence collected. This approach turns abstract threat intelligence into real-world action and lets users speed up analysis and deploy the intelligence in their respective products. This kind of collaboration strengthens the industry and makes cyberattackers’ jobs more difficult.
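The platform’s actual data model is not described here, so the following is only a hypothetical sketch of the “adversary playbook” idea: indicators shared by different members are grouped by the attack they belong to, and duplicate reports of the same indicator collapse into a single entry. The `Indicator` fields, campaign labels, vendor names and values are all invented for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Indicator:
    """One shared piece of threat intelligence (hypothetical schema)."""
    campaign: str  # the specific attack or adversary the indicator is tied to
    kind: str      # e.g. "domain", "file_hash", "ip"
    value: str
    source: str    # the member organisation that shared it


def build_playbooks(indicators):
    """Group indicators from many sources into one playbook per campaign,
    de-duplicating values that several members reported."""
    grouped = defaultdict(lambda: {"indicators": defaultdict(set),
                                   "contributors": set()})
    for ind in indicators:
        entry = grouped[ind.campaign]
        entry["indicators"][ind.kind].add(ind.value)
        entry["contributors"].add(ind.source)
    # Convert sets to sorted lists so the output is stable and easy to compare.
    return {
        campaign: {
            "indicators": {k: sorted(v) for k, v in entry["indicators"].items()},
            "contributors": sorted(entry["contributors"]),
        }
        for campaign, entry in grouped.items()
    }


shared = [
    Indicator("campaign-a", "domain", "bad.example", "vendor-1"),
    Indicator("campaign-a", "domain", "bad.example", "vendor-2"),  # duplicate report
    Indicator("campaign-a", "file_hash", "abc123", "vendor-2"),
    Indicator("campaign-b", "ip", "192.0.2.10", "vendor-1"),
]
playbooks = build_playbooks(shared)
print(playbooks["campaign-a"]["indicators"]["domain"])  # ['bad.example']
```

Grouping by attack rather than by individual indicator is what lets a member act on a whole campaign at once, which is the usability gain the paragraph describes.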

Awareness that legacy antivirus approaches do not work has arrived, and more organisations are actively seeking alternatives. This is hardly surprising, given that endpoint security was such a talking point at events earlier this year, with lots of approaches on show. The most intriguing alternative to me is one that not only ticks the compliance boxes for antivirus replacement but is also natively integrated with the rest of the network security stack. As 2017 rolls on and organisations realise the magnitude of responding to cyber threats and complying with the tougher data protection requirements set out by GDPR and NIS, there is going to be a trend towards solutions that can natively integrate newly discovered threat intelligence into the platform with a minimum of human intervention. This is the only way to deal with both the flood of threat alerts most organisations receive and the growing number of endpoints connecting to networks.

There is a varied ecosystem of security products targeting new threat vectors and techniques. That is no surprise, and new thinking and innovation are vital, but an ad hoc approach to building a cybersecurity infrastructure doesn’t give organisations the complete visibility into their risk posture that they need to prevent attacks. The feedback I get from CISOs and others is that point solutions have some value, but they don’t interact with one another.

Orchestration is a term that’s going to be heard more frequently in 2017, so expect more vendors to claim they have found ‘THE’ solution for managing a mixed-vendor cybersecurity environment. Whatever each company claims about supporting heterogeneous security, as an industry we must do better at delivering natively engineered security platforms in which many of the capabilities delivered by point products have been integrated into the greater whole. If done well, this can be a much more beneficial solution.

As threats become more common and damaging, and the legal requirements on organisations to protect their users from cybercrime become more exacting, a shortage of ready-to-go cybersecurity talent at all levels is being exposed.

If you boil down much of the current debate about cybersecurity, finding ways to identify, hire and budget for more staff is the number one concern for government and business. This nut has to be cracked, and a twin-track approach is needed to do it.

On one hand, we must encourage more cybersecurity learning within the education system. People are interested in these kinds of jobs: almost 1,250 people applied for the UK government’s 23 cybersecurity apprentice positions. We therefore need to fund more of these initiatives, whether within universities or as more practical training in the workplace. The proposed new T-levels could also be a vehicle for getting more cybersecurity into the school curriculum and the technical education system.

On the other hand, although training the next generation of cybersecurity experts is vital, we need more cybersecurity capability today to enable the preventative strategies that are best able to protect organisations from cyberattacks. So expect more organisations to evaluate how machine learning and artificial intelligence can be used alongside greater automation of cybersecurity processes to drive effective prevention strategies.

Over the last ten or more years, we have seen tremendous changes as our societies and economies have become more digitised, and threats to these new ways of working and living have not been unusual. Any one of those past years might have felt significant but, three months in, 2017 has a strong claim to be a transformative year for my industry. Or at least until 2018 begins.

DCSUK talks to Leo Craig, Managing Director of Riello UPS about recent developments at the company and some of the industry issues that are already having an impact on the way power plant is being designed and used across the data centre industry.

1. Recently, Riello introduced the Netman 204 firmware rev 2.03 – what’s new?

The upgraded firmware in the Netman 204 communications card now gives more interaction between the Riello UPS product and the user. We have redesigned the web interface, and the same network card can now be used on our new Multi Power modular UPS as well as all ranges within the Riello UPS family. There is also an improved setup wizard for adding extra environmental sensors and contacts, which can then be monitored alongside the UPS.

2. And can you tell us a little bit about Riello’s new Riello UPS Hub?

The Riello Hub is a new platform we have developed to offer customers, resellers, consultants and Riello certified engineers a one-stop shop for all information about products and services. This includes content tailored to specific customer needs, such as data sheets, drawings, stock availability, order progress, firmware updates, training videos, price lists and much more. In essence, it’s about making life easier for all our clients so that they can find all relevant information in one place.

3. And the company recently opened a new subsidiary. Where was that?

Riello UPS, part of the Riello Elettronica group, is proud to announce the opening of Riello UPS America. Riello UPS America will take care of marketing, engineering, and pre- and post-sales of Riello UPS products, particularly those with UL certification, for field applications such as Data Centers, Automation and Medical/Hospitals, and for all applications where continuous and reliable power quality is essential.

4. Looking ahead, what can we expect from Riello’s Sentinel product line over the next 12 to 18 months?

At Riello, we continuously look to improve our range, and we listen to our clients and what they ask for, so in the coming months we will be upgrading some of the range to introduce unity power factor and a paralleling capability, so that multiple units can be paralleled together to increase capacity or add redundancy.
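Paralleling lets the same set of units be used either for extra capacity or for redundancy, and the trade-off is simple arithmetic. Below is a minimal sketch, assuming identical units that share the load equally; the 10 kVA rating and unit counts are illustrative, not Riello specifications. Real power (kW) equals apparent power (kVA) multiplied by the power factor, so at unity power factor a unit’s kW and kVA ratings match, which is why `power_factor` defaults to 1.0.

```python
def usable_capacity_kw(unit_kva: float, units: int,
                       redundant_units: int = 0,
                       power_factor: float = 1.0) -> float:
    """Real power (kW) a parallel group can supply while still tolerating
    `redundant_units` unit failures (N+x redundancy).

    Assumes identical units that share the load equally; the figures used
    below are illustrative, not product specifications.
    """
    if units < 1:
        raise ValueError("need at least one unit")
    if not 0 <= redundant_units < units:
        raise ValueError("redundant_units must be between 0 and units - 1")
    # kW = kVA x power factor; at unity power factor the two ratings match.
    return unit_kva * power_factor * (units - redundant_units)


# Three hypothetical 10 kVA units at unity power factor:
print(usable_capacity_kw(10, 3))                     # 30.0, all units used for capacity
print(usable_capacity_kw(10, 3, redundant_units=1))  # 20.0, N+1 with one unit in reserve
```

The same three units either carry 30 kW with no spare, or 20 kW while surviving the loss of any one unit; which configuration makes sense depends on how critical the load is.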

5. And what about the Multi product line – any developments here?

We have recently extended the Multi Power modular range with the Multi Power Combo, which offers 126 kVA of redundant power and batteries in a single rack. Soon there will be another addition to the range, but I am keeping that under wraps at the moment. As they say, watch this space over the coming weeks!

6. And then there’s the Master product line?

On our large UPS ranges we will have some very exciting new products on the horizon, which we hope to launch in the Autumn, but again I really cannot say much yet.

7. And your Solutions offering?

Our solutions offering is as strong as ever, encompassing best practice in Resilience, Efficiency and Total Cost of Ownership (TCO), which is no mean feat. The combination of highly efficient modular products and being the only major UPS manufacturer to use an open protocol means we really can achieve the best power solutions for our clients.

8. And, finally, what might we see in the software/connectivity space?

We have just launched our Riello Connect cloud service, which enables our service team and clients to monitor UPS performance in the cloud via PC, laptop or smartphone. This replaces our old TeleNetGuard service, and existing clients are being migrated to the new service throughout 2017.

9. And how is the service side of the business developing?