Nothing spectacular this month (is there ever?!) – just the suggestion that visiting Infosecurity Europe in early June could be time well spent away from your office/desk/data centre. No need to bore you all with statistics on how many data breaches occur every minute of every day, the increase in ransomware and various other malicious attacks, or the impending ‘doom’ of the GDPR. I’m sure you are all fully aware of the chaos that security is becoming, seemingly in direct contrast to the importance it is now assuming.

A few hours spent at the show should clarify, rather than confuse, and you can return to your place of work armed with the knowledge, and knowing where to obtain the tools, needed to ensure that your company’s IT security policy is either in tip-top shape or soon will be.

I guess the only potential banana skin is that finding helpful, objective, vendor-neutral advice might be a tad tricky. No doubt there’ll be the Brexit scaremongers sitting alongside the GDPR ‘end of the world is nigh’ doom-mongers, all trying to convince you that you must spend a large sum of money right now. But there should also be plenty of folks who are happy to give you helpful advice (yes, they’ll want to sell as well) and, at the very least, you’ll be able to listen to your peers talk through how they’ve addressed various security issues, and visit the various trade associations that can point you in the right direction(s).

Security has never been more important than right now, so do try to take the time to visit the event. In the meantime, enjoy reading DW and, in particular, the security articles included in this issue.

Angel Business Communications Ltd, 6 Bow Court, Fletchworth Gate, Burnsall Rd, Coventry CV5 6SP. T: +44(0)2476 718970. All information herein is believed to be correct at time of going to press. The publisher does not accept responsibility for any errors and omissions. The views expressed in Digitalisation World are not necessarily those of the publisher. Every effort has been made to obtain copyright permission for the material contained in this publication. Angel Business Communications Ltd will be happy to acknowledge any copyright oversights in a subsequent issue of the publication. Angel Business Communications Ltd © Copyright 2017. All rights reserved. Contents may not be reproduced in whole or part without the written consent of the publishers. ISSN 2396-9016

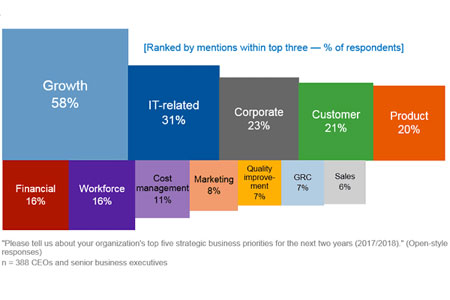

An unsettled global political environment has not shifted CEOs' focus away from profits and growth in 2017. Growth is the No. 1 business priority for 58 percent of CEOs, according to a recent survey of 388 CEOs by Gartner, Inc. This is up from 42 percent in 2016.

Product improvement and technology are the biggest-rising priorities for CEOs in 2017 (see Figure 1). "IT-related priorities, cited by 31 percent of CEOs, have never been this high in the history of the CEO survey," said Mark Raskino, vice president and Gartner Fellow. "Almost twice as many CEOs are intent on building up in-house technology and digital capabilities as those who plan on outsourcing it (57 percent and 29 percent, respectively). We refer to this trend as the reinternalization of IT: bringing information technology capability back toward the core of the enterprise because of its renewed importance to competitive advantage. This is the building up of new-era technology skills and capabilities."

While the idea of shifting toward digital business was speculative for most CEOs a few years ago, it has become a reality for many in 2017.

Forty-seven percent of CEOs are being challenged by the board of directors to make progress in digital business, and 56 percent said that their digital improvements have already improved profits. "CEO understanding of the benefits of a digital business strategy is improving," said Mr. Raskino. "They are able to describe it more specifically. Although a significant number of CEOs still mention e-commerce or digital marketing, more of them align it to advanced business ideas, such as digital product and service innovation, the Internet of Things, or digital platforms and ecosystems."

CEOs have also progressed in their digital business endeavors. Twenty percent of CEOs are now taking a "digital-first" approach to business change. "This might mean, for example, creating the first version of a new business process in the form of a mobile app," said Mr. Raskino. "Twenty-two percent are taking digital to the core of their enterprise models. That's where the product, service and business model are being changed and the new digital capabilities that support those are becoming core competencies."

Although more CEOs have digital ambitions, the survey revealed that nearly half of CEOs have no digital transformation success metric. "For those who are quantifying progress, revenue is a top metric: Thirty-three percent of CEOs define and measure their digital revenue," said Mr. Raskino.

Deeper transformation can only be achieved at scale if it is systematically driven. "CIOs should help CEOs set the success criteria for digital business," added Mr. Raskino. "It starts by remembering that you cannot scale what you do not quantify, and you cannot quantify what you do not define. You should also ask yourself: What is 'digital' for us? What kind of growth do we seek? What's the No. 1 metric and which KPIs must change?"

Many CEOs have recognized that being open-minded, entrepreneurial, adaptable and collaborative are the most-needed digital leadership mindsets. "It is time for CEOs to scale up their digital business ambition and let CIOs help them set and track incisive success metrics and KPIs, to better direct business transformation. CIOs should also help them toward more-abstract thinking about the nature of digital business change and how to lead it," concluded Mr. Raskino. "The disruption it brings often cannot be dealt with wholly within existing frames of reference."

A new update to the Worldwide Semiannual Security Spending Guide from International Data Corporation (IDC) forecasts worldwide revenues for security-related hardware, software, and services will reach $81.7 billion in 2017, an increase of 8.2% over 2016. Global spending on security solutions is expected to accelerate slightly over the next several years, achieving a compound annual growth rate (CAGR) of 8.7% through 2020 when revenues will be nearly $105 billion.
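The forecast follows standard compound-growth arithmetic: a value growing at a CAGR r for n years ends at value × (1 + r)^n. As a rough consistency check, here is a minimal Python sketch that projects IDC's 2017 figure forward, assuming (our assumption, not IDC's stated method) that the 8.7% rate applies in each of the three years from 2017 to 2020. The function name `project` is purely illustrative.

```python
def project(value: float, cagr: float, years: int) -> float:
    """Compound a starting value at a constant annual growth rate."""
    return value * (1 + cagr) ** years


# IDC's 2017 estimate (US$ billions) and forecast CAGR, as quoted above.
# Assumption: the 8.7% rate applies to each of the three years 2017 -> 2020.
security_2017 = 81.7
security_2020 = project(security_2017, 0.087, 2020 - 2017)

print(f"Projected 2020 security spending: ${security_2020:.1f} billion")
```

This prints about $104.9 billion, which squares with IDC's "nearly $105 billion". The same one-line calculation can be applied to the Big Data and AI forecasts later in this section.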

"The rapid growth of digital transformation is putting pressures on companies across all industries to proactively invest in security to protect themselves against known and unknown threats," said Eileen Smith, program director, Customer Insights and Analysis. "On a global basis, the banking, discrete manufacturing, and federal/central government industries will spend the most on security hardware, software, and services throughout the 2015-2020 forecast. Combined, these three industries will deliver more than 30% of the worldwide total in 2017."

In addition to the banking, discrete manufacturing, and federal/central government industries, three other industries (process manufacturing, professional services, and telecommunications) will each spend more than $5 billion on security products this year. These will remain the six largest industries for security-related spending throughout the forecast period, while a robust CAGR of 11.2% will enable telecommunications to move into the number 5 position in 2018. Following telecommunications, the industries with the next fastest five-year CAGRs are state/local government (10.2%), healthcare (9.8%), utilities (9.7%), and banking (9.5%).

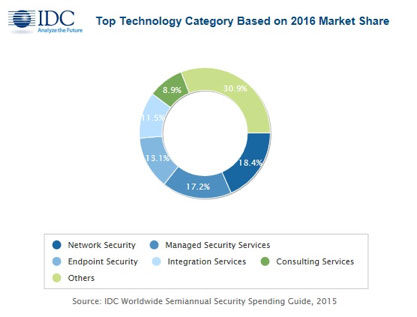

Services will be the largest area of security-related spending throughout the forecast, led by three of the five largest technology categories: managed security services, integration services, and consulting services. Together, companies will spend nearly $31.2 billion, more than 38% of the worldwide total, on these three categories in 2017. Network security (hardware and software combined) will be the largest category of security-related spending in 2017 at $15.2 billion, while endpoint security software will be the third largest category at $10.2 billion. The technology categories that will see the fastest spending growth over the 2015-2020 forecast period are device vulnerability assessment software (16.0% CAGR), software vulnerability assessment (14.5% CAGR), managed security services (12.2% CAGR), user behavioral analytics (12.2% CAGR), and UTM hardware (11.9% CAGR).

From a geographic perspective, the United States will be the largest market for security products throughout the forecast. In 2017, the U.S. is forecast to see $36.9 billion in security-related investments. Western Europe will be the second largest market with spending of nearly $19.2 billion this year, followed by the Asia/Pacific (excluding Japan) region. Asia/Pacific (excluding Japan) will be the fastest growing region with a CAGR of 18.5% over the 2015-2020 forecast period, followed by the Middle East & Africa (MEA, 9.2% CAGR) and Western Europe (8.0% CAGR).

"European organizations show a strong focus on security matters with data, cloud, and mobile security being the top three security concerns. In this context, GDPR will drive up compliance-related projects significantly in 2017 and 2018, until organizations have found a cost-efficient and scalable way of dealing with data," said Angela Vacca, senior research manager, Customer Insights and Analysis. "In particular, Western European utilities, professional services, and healthcare institutions will increase their security spending the most while the banking industry remains the largest market."

From a company size perspective, large and very large businesses (those with more than 500 employees) will be responsible for roughly two-thirds of all security-related spending throughout the forecast. IDC also expects very large businesses (more than 1,000 employees) to pass the $50 billion spending level in 2019. Small and medium businesses (SMBs) will also be a significant contributor to security-related spending, with the remaining one-third of worldwide revenues coming from companies with fewer than 500 employees.

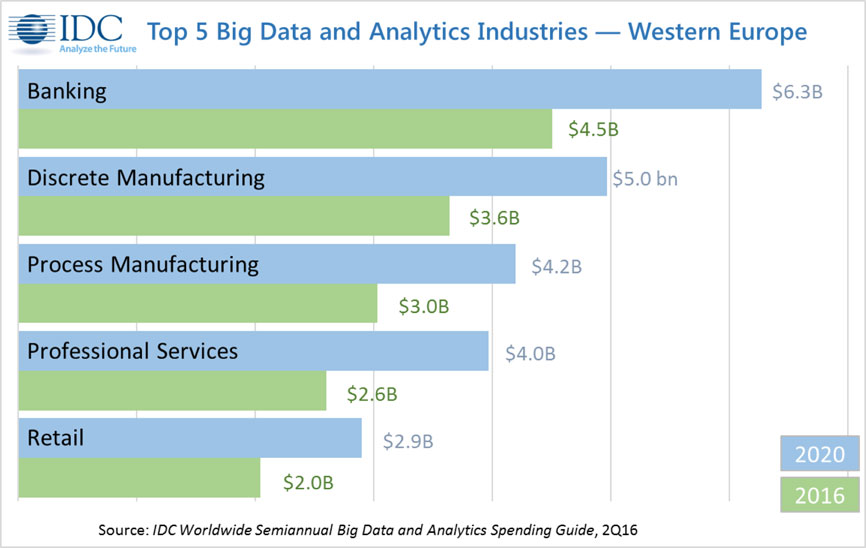

A new update to the Worldwide Semiannual Big Data and Analytics Spending Guide from International Data Corporation (IDC) forecasts that Western European revenues for Big Data and business analytics (BDA) will reach $34.1 billion in 2017, an increase of 10.4% over 2016. Commercial purchases of BDA-related hardware, software, and services are expected to maintain a compound annual growth rate (CAGR) of 9.2% through 2020 when revenues will be more than $43 billion.

"Digital disruption is forcing many organizations to reevaluate their information needs, as the ability to react with greater speed and efficiency becomes critical for competitive businesses," said Helena Schwenk, research manager, Big Data and Analytics, IDC. "European organizations currently active in Big Data programs are now focusing on scaling up these efforts and propagating use as they seek to learn and internalize best practices. The shift toward cloud deployments, greater levels of automation, and lower-cost storage and data processing platforms are helping to reduce the barriers to driving value and impact from Big Data at scale."

Banking, discrete manufacturing, and process manufacturing are the three largest industries to invest in Big Data and analytics solutions over the forecast period, and by 2020 will account for more than a third of total IT spending on BDA solutions. Overall, the financial sector and manufacturing vie with each other for the largest share of spending, with finance just edging out manufacturing, accounting for 21.5% of spending on BDA solutions compared with manufacturing's 21.2%. However, the industries that will show the highest growth over the forecast period are professional services, telecommunications, utilities, and retail.

Western Europe lags the worldwide market in overall growth, with a CAGR of 9.2% for the region, while worldwide spending will grow at a CAGR of 11.9%. The highest growth is in Latin America, while the largest regional market is the U.S. with more than half of the world's IT investment in Big Data and analytics solutions.

"The investments in the finance sector — banking, insurance, and securities and investment services — apply across a wide range of use cases within the industry," said Mike Glennon, associate vice president, Customer Insights and Analysis, IDC. "Examples include optimizing and enhancing the customer journey for these institutions, together with fraud detection and risk management, and these use cases drive investment in the industry. However, the strong manufacturing base in Western Europe will also invest in Big Data and analytics solutions for more effective logistics management and enhanced analysis of operations-related data, both of which contribute significantly to improved cost management, and hence profitability."

He added that adoption of Big Data solutions lags that of other 3rd Platform technologies such as social media, public cloud, and mobility, so the opportunity for accelerated investment is great across all industries.

BDA technology investments will be led by IT and business services, which together will account for half of all Big Data and business analytics revenue in 2017 and throughout the forecast. Software investments will grow to more than $17 billion in 2020, led by purchases of end-user query, reporting, and analysis tools and data warehouse management tools.

Cognitive software platforms and non-relational analytic data stores will experience strong growth (CAGRs of 39.8% and 38.6% respectively) as companies expand their Big Data and analytic activities. BDA-related purchases of servers and storage will grow at a CAGR of 12.4%, reaching $4.4 billion in 2020.

Very large businesses (those with more than 1,000 employees) will be responsible for more than 60% of all BDA spending throughout the forecast and IDC expects this group of companies to pass the $25 billion level by 2020. IT spending on Big Data and analytics solutions by businesses with fewer than 10 employees is expected to be below 1% of the total, even though these businesses account for over 90% of all businesses in Western Europe. These businesses need expertise and time to evaluate and adopt Big Data solutions and will rely heavily on solution providers to guide them through implementation of this technology.

According to the International Data Corporation (IDC) Worldwide Quarterly Cloud IT Infrastructure Tracker, vendor revenue from sales of infrastructure products (server, storage, and Ethernet switch) for cloud IT, including public and private cloud, grew by 9.2% year over year to $32.6 billion in 2016, with vendor revenue for the fourth quarter (4Q16) growing at 7.3% to $9.2 billion.

Cloud IT infrastructure sales as a share of overall worldwide IT infrastructure spending climbed to 37.2% in 4Q16, up from 33.4% a year ago. Revenue from infrastructure sales to private cloud grew by 10.2% to $3.8 billion, and to public cloud by 5.3% to $5.4 billion. In comparison, revenue in the traditional (non-cloud) IT infrastructure segment decreased 9.0% year over year in the fourth quarter. Private cloud infrastructure growth was led by Ethernet switch at 52.7% year-over-year growth, followed by server at 9.3%, and storage at 3.6%. Public cloud growth was also led by Ethernet switch at 30.0% year-over-year growth, followed by server at 2.4% and a 2.1% decline in storage. In traditional IT deployments, storage declined the most (10.8% year over year), with Ethernet switch and server declining 3.4% and 9.0%, respectively.

"Growth slowed to single digits in 2016 in the cloud IT infrastructure market as hyperscale cloud datacenter growth continued its pause," said Kuba Stolarski, research director for Computing Platforms at IDC. "Network upgrades continue to be the focus of public cloud deployments, as network bandwidth has become by far the largest bottleneck in cloud datacenters. After some delays for a few hyperscalers, datacenter buildouts and refresh are expected to accelerate throughout 2017, built on newer generation hardware, primarily using Intel's Skylake architecture."

From a regional perspective, vendor revenue from cloud IT infrastructure sales grew fastest in Japan at 42.3% year over year in 4Q16, followed by Middle East & Africa at 33.6%, Canada at 16.6%, Western Europe at 15.6%, Asia/Pacific (excluding Japan) at 14.5%, Central and Eastern Europe at 11.6%, Latin America at 9.9%, and the United States at 0.1%.

Top 5 Vendor Groups, Worldwide Cloud IT Infrastructure Vendor Revenue, Q4 2016

| Vendor Group | 4Q16 Revenue (US$M) | 4Q16 Market Share | 4Q15 Revenue (US$M) | 4Q15 Market Share | 4Q16/4Q15 Revenue Growth |
|---|---|---|---|---|---|
| 1. Dell Technologies | $1,587 | 17.3% | $1,645 | 19.2% | -3.5% |
| 2. HPE/New H3C Group** | $1,340 | 14.6% | $1,381 | 16.2% | -3.0% |
| 3. Cisco | $1,032 | 11.3% | $838 | 9.8% | 23.1% |
| 4. Huawei | $416 | 4.5% | $258 | 3.0% | 61.4% |
| 5. IBM* | $346 | 3.8% | $357 | 4.2% | -3.0% |
| 5. Lenovo* | $295 | 3.2% | $275 | 3.2% | 7.3% |
| 5. NetApp* | $267 | 2.9% | $257 | 3.0% | 3.9% |
| ODM Direct | $1,877 | 20.5% | $1,926 | 22.5% | -2.6% |
| Others | $2,014 | 22.0% | $1,613 | 18.9% | 24.9% |
| Total | $9,173 | 100% | $8,549 | 100% | 7.3% |

Source: IDC's Worldwide Quarterly Cloud IT Infrastructure Tracker, April 2017

Notes:

* IDC declares a statistical tie in the worldwide cloud IT infrastructure market when there is a difference of one percent or less in the vendor revenue shares among two or more vendors.

** Due to the existing joint venture between HPE and the New H3C Group, IDC will be reporting external market share on a global level for HPE as "HPE/New H3C Group" starting from Q2 2016 and going forward.
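Both market-share columns are derived figures: a vendor's share is its revenue divided by the quarter's total, and growth compares the two quarters' revenue. The short Python sketch below recomputes them from the 4Q16 table's revenue columns, which is a quick way to catch transcription slips in tables like this. The dictionary and variable names are our own, purely for illustration.

```python
# Vendor revenue in US$ millions, copied from the 4Q16 table above.
revenue_4q16 = {
    "Dell Technologies": 1587, "HPE/New H3C Group": 1340, "Cisco": 1032,
    "Huawei": 416, "IBM": 346, "Lenovo": 295, "NetApp": 267,
    "ODM Direct": 1877, "Others": 2014,
}
revenue_4q15 = {
    "Dell Technologies": 1645, "HPE/New H3C Group": 1381, "Cisco": 838,
    "Huawei": 258, "IBM": 357, "Lenovo": 275, "NetApp": 257,
    "ODM Direct": 1926, "Others": 1613,
}
# Reported totals; the rows add up to one more in each quarter because of rounding.
TOTAL_4Q16, TOTAL_4Q15 = 9173, 8549

# Share = vendor revenue / quarter total; growth = 4Q16 revenue / 4Q15 revenue - 1.
share_4q16 = {v: 100 * r / TOTAL_4Q16 for v, r in revenue_4q16.items()}
share_4q15 = {v: 100 * r / TOTAL_4Q15 for v, r in revenue_4q15.items()}
growth = {v: 100 * (revenue_4q16[v] / revenue_4q15[v] - 1) for v in revenue_4q16}

for vendor in revenue_4q16:
    print(f"{vendor:<18} {share_4q16[vendor]:5.1f}% {share_4q15[vendor]:5.1f}% "
          f"{growth[vendor]:6.1f}%")
```

The recomputed shares match the published figures for every named vendor, and for Others they come out at about 22.0% (4Q16) and 18.9% (4Q15), at which point each share column sums to roughly 100%. Growth rates agree to within a few tenths of a point, presumably because IDC works from unrounded revenue.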

Top 5 Vendor Groups, Worldwide Cloud IT Infrastructure Vendor Revenue, 2016

| Vendor Group | 2016 Revenue (US$M) | 2016 Market Share | 2015 Revenue (US$M) | 2015 Market Share | 2016/2015 Revenue Growth |
|---|---|---|---|---|---|
| 1. Dell Technologies | $5,747 | 17.6% | $5,391 | 18.1% | 6.6% |
| 2. HPE/New H3C Group** | $5,311 | 16.3% | $4,768 | 16.0% | 11.4% |
| 3. Cisco | $3,783 | 11.6% | $2,906 | 9.7% | 30.2% |
| 4. Huawei* | $1,205 | 3.7% | $743 | 2.5% | 62.3% |
| 4. Lenovo* | $1,097 | 3.4% | $990 | 3.3% | 10.8% |
| 4. IBM* | $1,017 | 3.1% | $1,257 | 4.2% | -19.1% |
| 4. NetApp* | $1,007 | 3.1% | $1,036 | 3.5% | -2.8% |
| ODM Direct | $6,733 | 20.7% | $7,427 | 24.9% | -9.3% |
| Others | $6,694 | 20.5% | $5,327 | 17.8% | 25.7% |
| Total | $32,594 | 100% | $29,845 | 100% | 9.2% |

Source: IDC's Worldwide Quarterly Cloud IT Infrastructure Tracker, April 2017

Notes:

* IDC declares a statistical tie in the worldwide cloud IT infrastructure market when there is a difference of one percent or less in the vendor revenue shares among two or more vendors.

** Due to the existing joint venture between HPE and the New H3C Group, IDC will be reporting external market share on a global level for HPE as "HPE/New H3C Group" starting from Q2 2016 and going forward.

An update to the Worldwide Semiannual Cognitive/Artificial Intelligence Systems Spending Guide from International Data Corporation (IDC) forecasts Western European revenues for cognitive and artificial intelligence (AI) systems will reach $1.5 billion in 2017, an increase of 40.0% over 2016. Western European spending on cognitive and AI solutions will continue to see significant corporate investment over the next several years, achieving a compound annual growth rate (CAGR) of 42.5% through 2020, when revenues will be more than $4.3 billion.

"IDC is seeing huge interest in cognitive applications and AI across Europe right now, from different industry sectors, healthcare, and government," said Philip Carnelley, research director for Enterprise Software at IDC Europe and leader of IDC's European AI Practice. "Although only a minority of European organizations have deployed AI solutions today, a large majority are either planning to deploy or evaluating its potential. They are looking at use cases with clear ROI, such as predictive maintenance, fraud prevention, customer service, and sales recommendation."

The three largest Western European industries to invest in cognitive and artificial intelligence systems are banking, retail, and discrete manufacturing, although cross-industry applications have the largest share across all industries. By 2020 these industries – including cross-industry applications – will account for almost half of all IT spending on cognitive and artificial intelligence systems. The total finance sector (banking, insurance, and securities) will account for 22% of cognitive spending in 2020. However, the fastest growing sectors to 2020 are distribution and services (professional services, retail, transportation) with a CAGR of 60.8% to 2020, the public sector (education, government, healthcare provider) with a CAGR of 60.8%, and infrastructure (telecommunications, utilities) with a CAGR of 60.1%.

Western Europe represents 12.1% of spending on cognitive and artificial intelligence systems in 2017, but its spending growth (a CAGR of 42.5%) lags the worldwide market, which shows a CAGR of 54.4% from 2015 to 2020. Western Europe's share of spending will fall to 9.5% of the world total by 2020, and the region will lose its second place to the Asia/Pacific region (including Japan).
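The fall from 12.1% to 9.5% follows directly from the gap between the two growth rates: a region growing more slowly than the whole loses share by the ratio of the growth factors, compounded once per year. A minimal Python sketch, assuming (our simplification) that both CAGRs hold in each year from 2017 to 2020, even though IDC quotes the worldwide rate for 2015-2020:

```python
# Figures quoted above: Western Europe's 2017 share (%) and the two CAGRs.
# Assumption: both rates hold in each year from 2017 to 2020, so this is
# only an approximation of IDC's own model.
share_2017 = 12.1
cagr_we, cagr_world = 0.425, 0.544

# Share scales by (1 + regional growth) / (1 + world growth) each year.
share_2020 = share_2017 * ((1 + cagr_we) / (1 + cagr_world)) ** 3
print(f"Implied 2020 share for Western Europe: {share_2020:.1f}%")
```

The sketch lands on about 9.5%, in line with IDC's figure, which suggests the quoted share and growth numbers are internally consistent.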

From a technology perspective, the largest area of spending in Western Europe in 2017 will be cognitive applications ($516 million), which includes cognitively enabled process and industry applications that automatically learn, discover, and make recommendations or predictions. Cognitive/AI software platforms, which provide the tools and technologies to analyze, organize, access, and provide advisory services based on a range of structured and unstructured information, will see investments reach $350 million this year, as will IT services associated with cognitive and artificial intelligence systems; but cognitive/AI software platforms show much higher growth, and will nudge past $1.0 billion by 2020. Dedicated server and storage purchases will total just under $250 million in 2017, but will reach nearly $850 million by 2020.

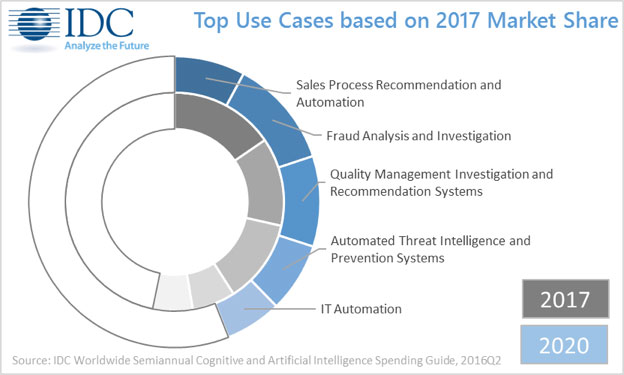

The cognitive/AI use cases that will see the greatest levels of investment in Western Europe this year are: sales process recommendation and automation systems, fraud analysis and investigation systems, quality management investigation and recommendation systems, automated threat intelligence and prevention systems, and IT automation systems. Combined, these five use cases will consume over half of all cognitive/AI systems spending in 2017. However, by the end of the forecast, slower spending on sales process recommendation and automation systems will drop it to the number 4 position, with fraud analysis and investigation systems taking over the lead position among use cases. Those use cases that will experience the fastest spending growth over the 2015-2020 forecast period are smart networking (137% CAGR) and diagnosis and treatment systems (87.4% CAGR), although smart networking will still represent less than 1% of spending by 2020.

"Cognitive computing is coming, and we expect it to embed itself across all industries. However, early adopters are those tightly regulated industries that need robust decision support: finance, specifically banking and securities investment services, is one of these early adopters," said Mike Glennon, associate vice president, Customer Insights and Analysis.

"However, the cost savings to be found in automating decision support in a structured environment, together with the enhanced ability to identify previously hidden aspects of behavior, ensure the distribution and services and public sectors embrace cognitive computing and artificial intelligence systems – where it can offer the dual benefits of lowering cost, and growing new business. We also expect strong growth in adoption in manufacturing in Western Europe, at the core of industry across the region."

The growth of cloud and industrialized services and the decline of traditional data center outsourcing (DCO) indicate a massive shift toward hybrid infrastructure services, according to Gartner, Inc.

In a report containing a series of predictions about IT infrastructure services, Gartner analysts said that by 2020, cloud, hosting and traditional infrastructure services will come in more or less at par in terms of spending.

"As the demand for agility and flexibility grows, organizations will shift toward more industrialized, less-tailored options," said DD Mishra, research director at Gartner. "Organizations that adopt hybrid infrastructure will optimize costs and increase efficiency. However, it increases the complexity of selecting the right toolset to deliver end-to-end services in a multisourced environment."

Gartner predicts that by 2020, 90 percent of organizations will adopt hybrid infrastructure management capabilities.

The traditional DCO market is shrinking, according to Gartner's forecast data. Worldwide traditional DCO spending is expected to decline from $55.1 billion in 2016 to $45.2 billion in 2020. Cloud compute services, on the other hand, are expected to grow from $23.3 billion in 2016 to reach $68.4 billion in 2020. Spending on colocation and hosting is also expected to increase, from $53.9 billion in 2016 to $74.5 billion in 2020. In addition, infrastructure utility services (IUS) will grow from $21.3 billion in 2016 to $37 billion in 2020 and storage as a service will increase from $1.7 billion in 2016 to $2.7 billion in 2020.


In 2016, traditional worldwide DCO and IUS together represented 49 percent of the $154 billion total data center services market worldwide, consisting of DCO/IUS, hosting and cloud infrastructure as a service (IaaS). This is expected to tilt further toward cloud IaaS and hosting, and by 2020, DCO/IUS will be approximately 35 percent of the expected $228 billion worldwide data center services market.
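Gartner's start and end figures imply an annual growth rate for each segment, and the DCO and IUS numbers can be checked against the market-share claims. A minimal Python sketch, assuming four annual steps from 2016 to 2020 and treating "DCO/IUS" as the simple sum of the traditional DCO and IUS figures (both are our readings, not Gartner's stated method); `implied_cagr` is a hypothetical helper name:

```python
def implied_cagr(start: float, end: float, years: int) -> float:
    """Constant annual growth rate that turns `start` into `end`."""
    return (end / start) ** (1 / years) - 1


# Gartner forecast figures quoted above, US$ billions: (2016, 2020).
segments = {
    "Traditional DCO": (55.1, 45.2),
    "Cloud compute services": (23.3, 68.4),
    "Colocation and hosting": (53.9, 74.5),
    "Infrastructure utility services": (21.3, 37.0),
    "Storage as a service": (1.7, 2.7),
}
for name, (v2016, v2020) in segments.items():
    print(f"{name:<32} {100 * implied_cagr(v2016, v2020, 4):6.1f}% a year")

# DCO plus IUS as a share of the total data center services market.
dco_ius_share_2016 = (55.1 + 21.3) / 154
dco_ius_share_2020 = (45.2 + 37.0) / 228
print(f"DCO/IUS share: {dco_ius_share_2016:.1%} (2016), {dco_ius_share_2020:.1%} (2020)")
```

Cloud compute works out at roughly 31 percent a year against a decline of almost 5 percent a year for traditional DCO. The DCO/IUS shares come out at about 49.6 and 36.1 percent, close to the 49 percent and "approximately 35 percent" quoted, with the small gaps presumably down to rounding or definitional differences.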

"This means that by 2020 traditional services will coexist with a minority share alongside the industrialized and digitalized services," said Mr. Mishra.

A 2016 Gartner survey of 303 DCO reference customers worldwide found that 20 percent use hybrid infrastructure services and a further 20 percent intend to adopt them in the next 12 months.

Gartner also predicts that through 2020, data center and relevant "as a service" (aaS) pricing will continue to decline by at least 10 percent per year.

From 2008 through 2016, Gartner pricing analysis of data center service offerings shows prices have dropped yearly by 5 percent to 7 percent for large deals and by 9 percent to 12 percent for smaller deals.
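Annual price cuts compound rather than add, so a steady percentage decline shrinks prices less than a naive multiplication suggests. A minimal Python sketch, assuming (our reading) that "from 2008 through 2016" means eight year-on-year cuts and that "through 2020" means four further cuts from a 2016 base; `price_index` is a hypothetical helper name:

```python
def price_index(annual_decline: float, years: int) -> float:
    """Price relative to the starting year after a constant yearly percentage cut."""
    return (1 - annual_decline) ** years


# Assumption: eight annual cuts over 2008-2016, four more through 2020.
for label, low, high in [("Large deals", 0.05, 0.07), ("Smaller deals", 0.09, 0.12)]:
    print(f"{label}: prices fell to {price_index(high, 8):.0%}-{price_index(low, 8):.0%} "
          f"of their 2008 level")
print(f"10% a year for four years leaves prices at {price_index(0.10, 4):.1%} of the 2016 level")
```

On these assumptions, large-deal prices ended 2016 at roughly 56-66 percent of their 2008 level, and Gartner's "at least 10 percent per year" compounds to about a one-third reduction over four years rather than 40 percent.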

More recently, from 2012 to the present, prices for the new aaS offerings, including IaaS and storage as a service, have dropped by similar or larger amounts.

Some traditional DCO vendors will exit the DCO market due to price pressure, while others will develop solution capabilities and continue to compete. Buyers will be able to choose between many more vendors, choose traditional or new solutions, and achieve price reductions year over year through 2020.

Gartner also predicts that by 2019, 90 percent of native cloud IaaS providers will be forced out of this market by the Amazon Web Services (AWS)-Microsoft duopoly.

Over the last four years, the public cloud IaaS market has begun to develop two dominant leaders — AWS and Microsoft Azure — that are beginning to corner the market. In 2016, they both grew their cloud service businesses significantly while other players are sliding backward in comparison. Between them, they not only have many times the compute power of all other players, but they are also investing in innovative service and pricing offerings that others cannot match.

According to Gartner, it is only in new markets that the dominance of AWS and Microsoft will be challenged by businesses such as Aliyun, the cloud service arm of Alibaba, the top player in China.

"The competition between AWS and Azure in the IaaS market will benefit sourcing executives in the short to medium term but may be of concern in the longer term," said David Groombridge, research director at Gartner. "Lack of substantial competition for two key providers could lead to an uncompetitive market. This could see organizations locked into one platform by dependence on proprietary capabilities and potentially exposed to substantial price increases."

Worldwide IT spending is projected to total $3.5 trillion in 2017, a 1.4 percent increase from 2016, according to Gartner, Inc. This growth rate is down from the previous quarter's forecast of 2.7 percent, due in part to the rising U.S. dollar (see Table 1).

"The strong U.S. dollar has cut $67 billion out of our 2017 IT spending forecast," said John-David Lovelock, research vice president at Gartner. "We expect these currency headwinds to be a drag on earnings of U.S.-based multinational IT vendors through 2017."

The Gartner Worldwide IT Spending Forecast is the leading indicator of major technology trends across the hardware, software, IT services and telecom markets. For more than a decade, global IT and business executives have been using these highly anticipated quarterly reports to recognize market opportunities and challenges, and base their critical business decisions on proven methodologies rather than guesswork.

The data center system segment is expected to grow 0.3 percent in 2017. While this is up from negative growth in 2016, the segment is experiencing a slowdown in the server market. "We are seeing a shift in who is buying servers and who they are buying them from," said Mr. Lovelock. "Enterprises are moving away from buying servers from the traditional vendors and instead renting server power in the cloud from companies such as Amazon, Google and Microsoft. This has created a reduction in spending on servers which is impacting the overall data center system segment."

Table 1. Worldwide IT Spending Forecast (Billions of U.S. Dollars)

| Segment | 2016 Spending | 2016 Growth (%) | 2017 Spending | 2017 Growth (%) | 2018 Spending | 2018 Growth (%) |
|---|---|---|---|---|---|---|
| Data Center Systems | 171 | -0.1 | 171 | 0.3 | 173 | 1.2 |
| Enterprise Software | 332 | 5.9 | 351 | 5.5 | 376 | 7.1 |
| Devices | 634 | -2.6 | 645 | 1.7 | 656 | 1.7 |
| IT Services | 897 | 3.6 | 917 | 2.3 | 961 | 4.7 |
| Communications Services | 1,380 | -1.4 | 1,376 | -0.3 | 1,394 | 1.3 |
| Overall IT | 3,414 | 0.4 | 3,460 | 1.4 | 3,559 | 2.9 |

Source: Gartner (April 2017)
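The Overall IT row in Table 1 is the sum of the five segments, and each growth figure is the year-on-year change in that total. A short Python sketch that re-derives both from the segment rows (the variable names are our own):

```python
# Table 1 figures, US$ billions: (2016, 2017, 2018).
spending = {
    "Data Center Systems": (171, 171, 173),
    "Enterprise Software": (332, 351, 376),
    "Devices": (634, 645, 656),
    "IT Services": (897, 917, 961),
    "Communications Services": (1380, 1376, 1394),
}

# Segment totals per year; the table's "Overall IT" row should match these.
totals = [sum(row[i] for row in spending.values()) for i in range(3)]
growth_2017 = 100 * (totals[1] / totals[0] - 1)
growth_2018 = 100 * (totals[2] / totals[1] - 1)

print("Totals:", totals)
print(f"Growth: {growth_2017:.2f}% (2017), {growth_2018:.2f}% (2018)")
```

The segments add up to $3,414, $3,460 and $3,560 billion, within $1 billion of the Overall IT row, and the recomputed growth rates (about 1.35 and 2.89 percent) sit close to the table's 1.4 and 2.9 percent; small differences are expected because the published billions are themselves rounded.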


Driven by strength in mobile phone sales and smaller improvements in sales of printers, PCs and tablets, worldwide spending on devices (PCs, tablets, ultramobiles and mobile phones) is projected to grow 1.7 percent in 2017, to reach $645 billion. This is up from a 2.6 percent decline in 2016. Mobile phone growth in 2017 will be driven by increased average selling prices (ASPs) for phones in emerging Asia/Pacific and China, together with iPhone replacements and the 10th anniversary of the iPhone. The tablet market continues to decline significantly, as replacement cycles remain extended and both sales and ownership of desktop PCs and laptops are negative throughout the forecast. Through 2017, business Windows 10 upgrades should provide underlying growth, although rising component costs will push PC prices up.

The worldwide IT services market is forecast to grow 2.3 percent in 2017, down from 3.6 percent growth in 2016. The modest changes to the IT services forecast this quarter can be characterized as adjustments to particular geographies, reflecting anticipated changes of direction in U.S. policy, both foreign and domestic. The business-friendly policies of the new U.S. administration are expected to have a slightly positive impact on the U.S. implementation service market as the U.S. government is expected to significantly increase its infrastructure spending during the next few years.

Despite continuing price cuts, analyst firm finds margins still healthy for cloud providers.

In its latest analysis of cloud pricing, 451 Research reveals that the cloud price battlefield has shifted from virtual machines (VMs) to object storage. The analyst firm predicts that other services, particularly databases, will undergo the same pricing pressures over the next 18 months.

Until recently, the prices of services beyond compute held steady in the face of intense competition, according to 451 Research’s Cloud Price Index. Virtual machines have been the traditional battleground for price cuts as providers have sought to gain attention and differentiation. The tide has now turned, with object storage pricing declining in every region, including a drop of 14% over the past 12 months. For comparison, the cloud mainstay of VMs has dropped a relatively small 5% over the same period.

Analysts believe market maturity is leading to price cuts moving beyond compute. Other factors include increasing cloud-native development and faith in the cloud model, as well as a competitive scrum to capture data migrating out of on-premises infrastructure.

While some in the industry have speculated that cloud providers have been using cheap VMs as 'loss leaders' in their cloud portfolios, 451 Research finds that, even in the worst case, margins for VMs are at least 30%. There is little data suggesting cloud is anywhere near a commodity yet. Analysts believe the cloud market is not highly price-sensitive at this time, although naturally, end users want to make sure they are paying a reasonable price.

“The big cloud providers appear to be playing an aggressive game of tit for tat, cutting object storage prices to avoid standing out as expensive,” said Jean Atelsek, Analyst, Digital Economics Unit at 451 Research. “This is the first time there has been a big price war outside compute, and it reflects object storage’s move into the mainstream. While price cuts are good news for cloud buyers, they are now faced with a new level of complexity when comparing providers.”

The cloud storage battle started in Q3 2016 when 451 Research’s Digital Economics Unit identified a reduction in IBM SoftLayer’s object storage prices. Google, AWS and then Microsoft followed suit. The 451 Research Digital Economics Unit predicts that prices for virtual machines and object storage will continue to come down, with relational databases likely to be the next competitive front.

Digital solutions offer many benefits, but also present new risks as organizations become more open and agile. Tom Scholtz, vice president and Gartner Fellow, and conference chair for Gartner Security & Risk Management Summit 2017, discusses how security and risk management leaders are developing security programs for digital business and the challenges they should be prepared to meet.

Q: How has security and risk management evolved to support digital business?

A: Digital business is about access and collaboration — organizations have to let external partners and customers in to participate. The end user has typically been deemed the weakest link in the security chain. In a digital world, end users are part of the security function and a people-centric solution. Therefore, security and risk management leaders are developing programs based on trust. Instead of a default-deny approach to security, we are now seeing a default-allow approach. This is a fundamental change in how security programs are developed.

Q: Which technologies should be on security and risk management leaders’ radars?

A: User and entity behavior analytics (UEBA) are important, as is understanding and institutionalizing adaptive security architecture. Artificial intelligence (AI) can deliver context-based situational intelligence to improve security decision making. Blockchain is transforming digital commerce and has potential value for security as a means of supporting more distributed trust.

New technologies create new risks. AI generates intellectual property that must be protected, like algorithms and institutionalized knowledge that defines what is normal for an organization’s systems. The right hack could have catastrophic effects on an organization’s production system. AI opens the door to more subtle forms of disruption, too. For instance, a hacker may just make tweaks that do not bring an entire system down so that small failures go unnoticed.
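To make the idea of "what is normal" concrete, here is a minimal sketch of the baseline-and-deviation principle behind user and entity behavior analytics. It assumes a single numeric behaviour (say, daily logins per user) and flags values more than three standard deviations from that user's history. The function names, the threshold and the sample data are all hypothetical; real UEBA tools are considerably more sophisticated, and this is only the simplest form of the idea.

```python
from statistics import mean, stdev


def build_baseline(history: list) -> tuple:
    """Learn what 'normal' looks like for one user or device from past observations."""
    if len(history) < 2:
        raise ValueError("need at least two observations to estimate spread")
    return mean(history), stdev(history)


def is_anomalous(value: float, baseline: tuple, threshold: float = 3.0) -> bool:
    """Flag an observation more than `threshold` standard deviations from the baseline mean."""
    mu, sigma = baseline
    if sigma == 0:
        # No variation seen so far: anything different from the usual value stands out.
        return value != mu
    return abs(value - mu) / sigma > threshold


if __name__ == "__main__":
    # Hypothetical daily login counts for one user over a week.
    logins = [20, 22, 19, 21, 23, 20, 22]
    baseline = build_baseline(logins)
    print(baseline)                    # mean 21, sample standard deviation about 1.41
    print(is_anomalous(25, baseline))  # False: about 2.8 standard deviations out
    print(is_anomalous(60, baseline))  # True: far outside the learned baseline
```

The same sketch also hints at the risk described above: an attacker who nudges behaviour only slightly, staying under the threshold, would not be flagged.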

Q: What are the biggest challenges security and risk management leaders face today?

A: Chief information security officers (CISOs) are tasked with strategic planning in a digital business environment where agile and bimodal are critical to success. They also need to acquire talent to manage the IoT and integration of operational technology.

Security teams have to stay current and proactive. They need to be aware of new technologies and the vendor landscape to determine what to adopt into their security programs. They also need to understand the latest security threats because the threat landscape is evolving rapidly and becoming more complex.

The General Data Protection Regulation (GDPR), the new EU privacy and personal data protection law going into effect in 2018, presents a challenge to risk and compliance leaders, who need to make sure their organizations are compliant. Risk and compliance leaders also need to evolve, shifting their focus from compliance to managing risk effectively to protect the organization. They must make sure their organizations understand the risks and accountability associated with new technologies as they invest in digital business initiatives.

Business continuity management (BCM) leaders must continue building IT and business operations while facing more serious threats and frequent disruptions. They have to protect against disruptions, but also plan for how their organizations will overcome them and minimize their impact. Resilience, not just recovery, has to be engineered into digital business systems. Critical infrastructure must be resilient enough to withstand a cyberattack and recover from a major disaster, ideally without interruption.

This article first appeared on Smarter with Gartner.

A key revelation to some at the first European Managed Services and Hosting Summit in Amsterdam on 25th April was that, outside of the managed services industry, no-one is calling it that. With a strong focus on customers and how they engage with managed services, the event discussed how the model had become mainstream in the last year, and was now the assumed way of working for many industries.

Over 150 attendees from nineteen different European countries met to review the state of the market and the ways to take the industry forward. Bianca Granetto, Research Director at Gartner, set the scene with a keynote on how Digital Business redefines the Buyer-Seller relationship. In this she showed how customers are using more and more diverse IT suppliers, while still looking for a trust relationship with those suppliers, and that this process will continue in coming years. “The future managed services company will look very different from today’s,” she concluded.

This was reinforced by TOPdesk’s CEO Wolter Smit who, in a discussion on the new services model, said that MSPs were actually in the driving seat as the larger IT companies could not reach their level of specialisation. Dave Sobel, SolarWinds MSP’s partner community director, also pointed out that many of the existing IT services companies were decades old and, with their management due for replacement, new thinking among the providers was inevitable.

The top trends affecting the market were outlined by several speakers, with IoT, user experience and smart machines among them. IoT will be profitable for suppliers, according to Dave Sobel, with MSPs top of the list of beneficiaries.

IT Europa’s editor John Garratt highlighted the differences between the US and European managed services markets, with the US more focused on financial returns. Price was apparently less important to European customers, who were more focused on gaining control of their IT resources. Autotask’s Matthe Smit said that price indeed mattered less than a good supportive relationship. But, he said, less than half of providers actually measured customer satisfaction, and this would have to change.

If anyone was in any doubt about the impact of the new model, Robinder Koura, RingCentral’s European channel head, showed how cloud-based communications had pushed Avaya into bankruptcy, and how the new force in the market was cloud-based and more flexible.

Security was never going to be far from the discussions, and Datto’s Business Development Director Chris Tate shook up the meeting with some of the latest statistics on ransomware. MSPs are in the firing line in the event of an attack like this, and he gave some sound advice on responses and precautionary measures. Local MSP Xcellent Automatisering’s MD Mark Schoonderbeek also revealed how he launched new services using a four-layered security offering: “First we'll search for vendors through our existing partnerships. When we find a good product, we'll R&D it from a technical standpoint. If the product meets our quality standards we will roll it out within our own production environment. Then we'll go to one of our best customers in a very early stage, we tell them it's a test-phase and we'll implement the service for free, but in return we want the customer's feedback (what went well, what went not so well and what is the perceived value of the service that is offered). Then we'll make a cost calculation and ask the customer what the service is worth. We'll put a price on the product and deliver it fixed price. Next step is to sell the product to all existing customers.”

The impact of the new EU General Data Protection Regulation (GDPR) was already being felt, but there were many unknowns, not least how the various regulators across Europe would react to its provisions, warned Renzo Marchini, legal expert and partner at Fieldfisher. Meanwhile, the opportunities and the generally strong confidence in the European IT market were illustrated by Peter van den Berg, European General Manager for the Global Technology Distribution Council (GTDC).

Finally, a well-received analysis of what was going on in the tech M&A sector showed attendees where to make their fortunes and how to do so quickly. Perhaps unsurprisingly the key to creating value within a company turns out to be generating highly repeatable revenues – which is what managed services is all about.

For further information on the European Managed Services and Hosting Summit visit www.mshsummit.com/amsterdam.

Many of the issues debated during the European Managed Services event will be further discussed at the UK Managed Services and Hosting Summit, which will be staged in London in September: www.mshsummit.com.

The Internet of Things (IoT) is disrupting life as we know it, from our homes and offices, to connected cities and beyond. As IoT streamlines business processes, it enhances our ability to connect with the people, systems and environments that shape our daily lives, and can contribute greatly to improving efficiency within our organisations.

By Nick Bailey, Head of Practice, Connected World, Networkers.

In particular, IoT can help the healthcare sector to advance innovative projects within the NHS. These include the ‘Diabetes Digital Coach’, which provides remote monitoring technology to support effective self-management of diabetes, and ‘Technology Integrated Health Management (TIHM)’, which creates wearables to help patients with dementia remain in their homes for longer.

The significance of IoT for the business world was highlighted in Networkers’ recent Technology: Voice of the Workforce research. In our survey of over 1,600 technology professionals, we found that 40% believe IoT will be the biggest future challenge for the tech industry. Crucially, 64% don’t believe their company embraces technology which will allow them to adapt for the future.

So, how can businesses ensure they are equipped to handle IoT?

First, they need to ensure that senior decision makers are prioritising IoT, and developing employee roles within the workforce. At a high level, a Chief Internet of Things Officer (CIoTO) will keep the importance of IoT at the forefront of executives’ minds. In wider teams, there is a need for full stack developers, whose knowledge extends beyond basic software and who possess the ability to incorporate hardware programming into their skillsets.

The challenge companies will face when building this workforce is finding the right skills. Our research found that 57% of tech professionals believe there is a skills shortage in the technology sector. As demand is outstripping supply for IT professionals who are well-equipped to deal with IoT, it will be crucial for companies to build and retain core IT skill sets.

With the number of connected things set to exceed 20 billion by 2020 (Gartner)[1], the pressure is on for companies to invest in IoT systems that are secure. IoT, often dubbed the ‘Internet of Hackable Things’, is vulnerable because so many devices hold confidential data that is at risk of attack from cyber criminals. Consequently, the big IoT players are forming partnerships to deliver IoT ecosystems that offer a single authentication across multiple devices, improving security and accelerating processes.

Ultimately, to embrace IoT, public and private organisations need to build prepared workforces and develop systems that are secure. The interconnectedness of our society will benefit as a result.

[1] Source: Gartner (November 2015), http://www.gartner.com/newsroom/id/3165317

The emergence of “big software” – a complex assembly of many software components sourced from different vendors, running on multiple distributed machines, that gives its user the impression of a single system – has raised the importance of operations in the software world, and in a hybrid cloud world in particular.

By Stefan Johansson, Global Software Alliances Director, Canonical.

Integration and operations now consume a significant share of IT organizations’ technology budgets, and there is no sign of this slowing down. To help alleviate the burden of integrating software components, many IT organizations are adopting open source software and model driven operations to reduce complexity and integration costs while improving time to market. The cloud and microservices (independent services that communicate over a network) are making software implementation more challenging and more distributed. The costs and intricacies of Big Software are keeping many CIOs and DevOps leaders awake at night. Where do these technology leaders turn for answers? In-house solutions? Probably not: they are expensive to deploy and integrate. Costly systems integrators that focus on solving only one technology challenge and have a long learning curve? Not optimal. Or organizations can continue to invest in siloed solutions that achieve only incremental gains, which is too expensive and time consuming. Forward-thinking IT and DevOps executives are adopting the maxim:

“Buy what you can, build what you have to, and integrate for competitive advantage.”

These hurdles are why model driven operations are changing how software is deployed and operated today.

For CIOs and DevOps, model driven operations improves how software is not just deployed, but scaled across the enterprise or among various cloud services, providers, or bare-metal servers. One of the main values of model driven operations is the ability to share and reuse open source code that has common components and functionality, so development organizations can spend their time and resources deploying solutions unique to their business. This allows internal teams and systems integrators to leverage model driven operations to focus on what they do best while delivering business value, improving lead times and becoming more efficient.

For example, when a new server needs to be deployed, modelling can automate most, if not all, of the provisioning process. Automation makes deploying solutions much quicker and more efficient because it allows tedious tasks to be performed faster and more accurately without human intervention. Even with proper and thorough documentation, manually deploying a web server or a Hadoop cluster, for example, could take hours compared to a few minutes with service modelling. This is why CIOs and DevOps chiefs are adopting service modelling as a way to make the most effective use of their team’s precious resources and time.
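The core idea of service modelling can be sketched in a few lines: you declare which services you want, how many units of each, and how they relate, and a planner works out the actions needed to get from what is running to what is declared. The Python below is a hypothetical illustration of that declarative pattern only. The `Model`, `Service` and `plan` names are invented for this sketch; they are not Juju's API or any other product's.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Service:
    """Desired state for one service in a hypothetical model."""
    name: str
    units: int  # number of instances that should be running


@dataclass
class Model:
    """A hypothetical declarative model: services plus the relations between them."""
    services: dict = field(default_factory=dict)
    relations: set = field(default_factory=set)

    def add(self, name: str, units: int = 1) -> "Model":
        self.services[name] = Service(name, units)
        return self

    def relate(self, a: str, b: str) -> "Model":
        if a == b:
            raise ValueError("a service cannot be related to itself")
        if a not in self.services or b not in self.services:
            raise KeyError(f"both services must be in the model first: {a}, {b}")
        self.relations.add(frozenset((a, b)))
        return self


def plan(desired: Model, running: dict, related: set) -> list:
    """List the actions needed to move the running state to the desired model."""
    actions = []
    for name, svc in sorted(desired.services.items()):
        have = running.get(name, 0)
        if svc.units > have:
            actions.append(f"add {svc.units - have} unit(s) of {name}")
        elif svc.units < have:
            actions.append(f"remove {have - svc.units} unit(s) of {name}")
    for name in sorted(set(running) - set(desired.services)):
        actions.append(f"remove service {name}")
    for a, b in sorted(sorted(rel) for rel in desired.relations - related):
        actions.append(f"relate {a} <-> {b}")
    for a, b in sorted(sorted(rel) for rel in related - desired.relations):
        actions.append(f"unrelate {a} <-> {b}")
    return actions


if __name__ == "__main__":
    model = Model().add("web", units=3).add("db").relate("web", "db")
    print(plan(model, running={"web": 1}, related=set()))
    # ['add 1 unit(s) of db', 'add 2 unit(s) of web', 'relate db <-> web']
    print(plan(model, running={"web": 3, "db": 1}, related={frozenset(("db", "web"))}))
    # []
```

Running the planner a second time against a system that already matches the model produces no actions. This idempotence is what lets the same model be replayed safely across environments.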

Further, model-driven operations gives development organizations more choice in how services are consumed (public, private, or hybrid cloud) and options that make it easier to replicate environments with the same software and configurations. As these systems evolve, organizations can deploy pre-configured services, private infrastructure solutions including OpenStack, and even the organization’s own code to any public or private cloud. This allows enterprises to deploy solutions that are consistent, integrated, and relevant to their business needs.

Companies like Google, Amazon, AT&T, and many others have all moved to model-driven operations to provision and deploy software and cloud services across multiple domains and environments faster and more efficiently.

Companies are integrating the tools and technologies that will help drive business outcomes faster, more reliably, and more efficiently. Software modelling solutions like Canonical’s Juju help customers to build and deploy proofs of concept faster and integrate solutions more seamlessly, while expanding their organization’s capabilities more broadly. Juju is a universal modelling solution that speaks to executives, developers, and operations. Imagine using a solution that enables the deployment of revenue-generating cloud services with just a few drag-and-drop actions or commands.

Juju Charms, which are sets of scripts for deploying and managing services within Juju, allow organizations to connect, integrate, and deploy new services automatically without the need for consultants, integrators, or additional costs or resources. Companies can choose from hundreds of microservices covering cloud communications via WebRTC, IoT enablement, big data, web services, mobile applications, security, and data management tools. Further, with the rise of open source, enterprises and programmers can leverage the power of a vast library and a community of developers to design, develop, and deploy their solutions much faster. Additionally, network administrators and developers can free up their time to focus on bringing to market revenue-generating solutions and services, rather than architecting complicated network stacks and deploying additional resources. What matters to the developer is what services are involved, not the details of how many machines they need, which cloud they are on, whether they are big machines or small machines, or whether all the services installed are on the same machine. There has been a shift from software and infrastructure orchestration to model driven operations that makes the task of deploying distributed systems, or Big Software, more efficient and faster. It’s about choice and options.

The world of software deployment is becoming more and more complex. Software deployments were once simple and spread across a few machines; now they have become distributed across many machines, operating systems, regions, and environments. This shift has created both a competitive threat and, simultaneously, a massive opportunity. Many CIOs and DevOps executives are exploiting these opportunities, while others risk being relegated to the dustbin of oblivion. Model driven operations helps organizations to reduce complexity, improve efficiency, and deploy revenue-generating services and solutions faster.

Throughout history, the balance of power has shifted back and forth between attackers and defenders, with technology playing a significant role in the fluctuation.

By Shehzad Merchant, Chief Technology Officer, Gigamon.

At one time, a well-defended and provisioned castle was practically impregnable (defender advantage) until gunpowder and cannons came along and shifted the balance (attacker advantage). Later, barbed wire and machine guns made it almost impossible for World War I foot soldiers to break through the trenches (defender advantage). But just 20 years on, the internal combustion engine made those trenches totally obsolete and, once again, swung the advantage back (attacker advantage).

Today, in cyberwarfare, the balance of power favours the attacker. There are several reasons for this. Firstly, the democratization of malware is bringing powerful instruments of cyber-attack to the masses, while sophisticated tools for compromising the human element via phishing campaigns are now widely available. Secondly, zero-day vulnerabilities are readily available for purchase at the right price, and command and control infrastructures are available for rent. Finally, large-scale botnets leveraging IoT devices are being advertised freely as weapons of unparalleled scale for DDoS attacks.

And while this democratization of malware is creating an unprecedented surge in the number of cyber-attacks, another factor making it harder for defenders to keep attacks at bay is the speed at which data is moving. Today, on 100 Gbps networks, the time from the start of one packet on the wire to the start of the next can be as little as 6.7 ns, or 6.7 billionths of a second. This makes it very difficult to perform any intelligent or meaningful analysis of data flying by in real time. Consequently, it is becoming increasingly difficult to prevent malware from breaking through.
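The 6.7 ns figure can be reproduced with simple arithmetic, on the assumption (ours, not stated in the text) that it refers to back-to-back minimum-size Ethernet frames. Each such frame occupies 64 bytes plus an 8-byte preamble and start-of-frame delimiter, followed by a 12-byte inter-frame gap, for 84 bytes (672 bits) on the wire. The sketch below does the calculation for a few link speeds; the function names are illustrative.

```python
# Per-frame sizes in bytes for standard Ethernet (assumed to be what "packet" means here).
MIN_FRAME = 64        # smallest legal Ethernet frame, including the frame check sequence
PREAMBLE_SFD = 8      # 7-byte preamble plus 1-byte start-of-frame delimiter
INTERFRAME_GAP = 12   # minimum idle gap between frames, expressed in byte times


def min_frame_spacing_ns(link_bps: float) -> float:
    """Start-to-start time between back-to-back minimum-size frames, in nanoseconds."""
    bits_per_slot = (MIN_FRAME + PREAMBLE_SFD + INTERFRAME_GAP) * 8  # 84 bytes = 672 bits
    return bits_per_slot / link_bps * 1e9


def max_frames_per_second(link_bps: float) -> float:
    """Worst-case frame rate that an inline analysis tool would have to keep up with."""
    return 1e9 / min_frame_spacing_ns(link_bps)


if __name__ == "__main__":
    for gbps in (10, 40, 100):
        bps = gbps * 1e9
        print(f"{gbps:>3} Gb/s: {min_frame_spacing_ns(bps):6.2f} ns between frames, "
              f"{max_frames_per_second(bps) / 1e6:6.1f} million frames/s")
```

At 100 Gb/s this gives 6.72 ns between frames, matching the figure above. That is roughly 148.8 million frames every second, each of which would need to be examined within that window.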

An opportunity—perhaps an historic one—does, however, exist to reverse the current cyber attacker advantage. But it will require a fundamental rethinking of our security frameworks and models. Our traditional security models have focused on keeping threats out. It’s an approach akin to putting Band-Aids on parts of the skin. Not only does this leave large parts of the body exposed, but it doesn’t help to defend against airborne, water-borne, or other communicable diseases.

By contrast, if we were to flip that model and focus on the inside, we could reverse the advantage. This new approach would see cybersecurity acting more like the human immune system does: from the inside, and providing full coverage of the body. The human immune system can learn about, remember, and adapt to threats; it can respond rapidly to combat the threats from within; and it can provide comprehensive and complete coverage against massive numbers of diseases and strains of bacteria and viruses.

Cybersecurity needs to evolve and adapt to a similar model. It should provide complete coverage from within the organization to detect malware and other threats. It should learn and adapt to polymorphic variants of threats. And it should be able to act quickly.

To build such a security immune system, organizations should consider four central pillars—good hygiene, detection, prediction and action—that are all underpinned by pervasive visibility as a critical foundational layer.

The four pillars should always be viewed, however, as part of a continuous lifecycle of detection, prediction and action.

To force a shift in the balance of power between the cyber attacker and defender, a fundamental discontinuity needs to come about. Changing our mindsets in terms of how we think about cybersecurity—and learning from the human immune system—provides an opportunity to bring about that discontinuity and leverage technology to shift the advantage back to the defender.

Big data has been around for far longer than it has been a trending topic, but businesses are only really beginning to realise its potential to transform internal processes and impact customer experience.

By Paul Cant, Vice President EMEA, BMC Software.

According to a recent report from Accenture, “Big Success From Big Data,” researchers found that 89 percent of respondents who have implemented at least one big data project see it as a way to revolutionise business operations. Furthermore, 85 percent believe big data will dramatically change the way business is done. Yet there are still operational challenges preventing businesses from fully embracing this new way to trade.

In a world where everything is connected, IT pros are overwhelmed, searching through floods of data for meaningful insights that can give the business an advantage over competitors and protect its infrastructure, data, and employees. It is often an arduous, time-consuming, manual process that frequently results in analysing old, and therefore irrelevant, data.

A better approach to scrutinising and exploring terabytes of data isn’t just desirable; it is imperative in the 4th Industrial Revolution.

Volumes are exploding, objects are getting bigger, and even planned downtime is unacceptable and costly if you can’t meet business demands for availability and performance. Eventually every organisation will want to benefit as the lines of business push for better access to big data. But how can businesses formulate new methods of collection, storage and analysis to extract the most value from their data?

The challenge businesses face is that the greater the volume, velocity, and variety of data introduced into the organisation, the greater the need for a sophisticated and scalable approach to managing the big data environment. Mastering this data is fundamental to every organisation's successful digital transformation, and failure to leverage the data and analytics will cripple an organisation's ability to meet customer expectations and competitive pressures.

When data is siloed, such as residing in self-contained departmental databases, you’re going to get lackluster insights. It is important to take a holistic approach to a big data strategy. The big data technology ecosystem must interface with enterprise applications and data sources, such as ERP solutions and connected devices, to integrate data in one central location.

Right from the pilot phase of a big data initiative, automated processes must be put in place to ensure that data from multiple sources can be seamlessly ingested, processed and made available to the business for on-demand analytics.
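A minimal sketch of that kind of automated ingestion follows. Each source gets a small parser that maps its native format onto one common record shape, and a single `ingest` step lands everything in one store. The two feeds (an ERP CSV export and a line-delimited JSON device feed), their field names and the record schema are all hypothetical. A production pipeline would add scheduling, validation and a real datastore.

```python
import csv
import io
import json
from typing import Callable, Dict, Iterable, Iterator, List, Tuple

# One normalised record in the central store (field names are illustrative).
Record = Dict[str, object]
Parser = Callable[[str], Iterator[Record]]


def from_erp_csv(text: str) -> Iterator[Record]:
    """Hypothetical ERP export: CSV with order_id and amount columns."""
    for row in csv.DictReader(io.StringIO(text)):
        yield {"source": "erp", "id": row["order_id"], "value": float(row["amount"])}


def from_device_json(text: str) -> Iterator[Record]:
    """Hypothetical connected-device feed: one JSON object per line."""
    for line in text.splitlines():
        if line.strip():
            msg = json.loads(line)
            yield {"source": "device", "id": msg["device"], "value": float(msg["reading"])}


def ingest(feeds: Iterable[Tuple[Parser, str]]) -> List[Record]:
    """Run each parser over its raw payload and land every record in one store."""
    store: List[Record] = []
    for parse, payload in feeds:
        store.extend(parse(payload))
    return store


if __name__ == "__main__":
    erp = "order_id,amount\nA1,120.50\nA2,80\n"
    devices = '{"device": "d-7", "reading": 21.5}\n{"device": "d-9", "reading": 19}\n'
    for record in ingest([(from_erp_csv, erp), (from_device_json, devices)]):
        print(record)
```

Adding a new source then means writing one more parser, rather than building another departmental silo.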

In the modern digital business revolution, technology is integrated into every step of an organisation’s value chain. This unprecedented use of technology creates a complex challenge for IT Infrastructure and Operations organisations, as it becomes difficult to collect and organise the sheer volume of data generated by new digital business systems and sources.

With technology that enables the collection, storage, and analysis of this data, business demand for big data deployments has moved from experimentation to production. At the same time, IT needs to keep integration with existing business systems in mind. For instance, structured data remains the main focus, so IT needs to keep traditional storage and analytics solutions in place while exploring big data.

To leverage the benefits of the data explosion, organisations need to prepare their IT teams properly. An IT operations management tool with enterprise-grade capacity optimisation and visualisation can help IT plan and right-size the data ecosystems, including compute, storage, and network resources, ensuring control over infrastructure costs. Not to mention, having the right actionable data helps you to be proactive when you’re troubleshooting any IT operations issue.

But the most valuable use of big data analytics is not reporting what’s already happened; it is accurately predicting future outcomes and behaviour affecting the important areas of the business. Through predictive big data analytics, organisations can change operations in real time and build strategic, forward-looking plans to drive faster business outcomes, which give companies a competitive edge. One of the most powerful sources of actionable insight is the ability to see how measurements change over time: intelligence indicating upward or downward trends enables action before a condition impacts end users.
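As a simple illustration of acting on a trend before it reaches users, the sketch below fits a straight line to a metric sampled at regular intervals and estimates how many intervals remain before it crosses a threshold. The metric, its values and the 90% limit are hypothetical. A linear least-squares fit is the simplest possible assumption; real capacity tools typically model seasonality and uncertainty as well.

```python
from typing import List, Optional, Tuple


def linear_trend(values: List[float]) -> Tuple[float, float]:
    """Least-squares fit of value = intercept + slope * t for t = 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two points to fit a trend")
    t_mean = (n - 1) / 2
    v_mean = sum(values) / n
    cov = sum((t - t_mean) * (v - v_mean) for t, v in enumerate(values))
    var = sum((t - t_mean) ** 2 for t in range(n))
    slope = cov / var
    return slope, v_mean - slope * t_mean


def periods_until(values: List[float], threshold: float) -> Optional[float]:
    """Periods after the latest sample until a rising trend reaches `threshold`.

    Returns None when the trend is flat or falling, and 0.0 if the fitted line
    is already at or above the threshold.
    """
    slope, intercept = linear_trend(values)
    if slope <= 0:
        return None
    crossing = (threshold - intercept) / slope
    return max(0.0, crossing - (len(values) - 1))


if __name__ == "__main__":
    # Hypothetical weekly disk utilisation (%) for one storage pool.
    usage = [50, 52, 54, 56, 58]
    print(linear_trend(usage))       # (2.0, 50.0): up two points per week
    print(periods_until(usage, 90))  # 16.0: about 16 weeks before hitting 90%
```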

IDC predicts that the digital universe will double every two years, and that by 2020 about 1.7 MB of new information will be created every second for every human being. Clearly, data in the modern age is the currency that guides all decisions and actions. Complete and accurate analysis will empower IT operations to make fast, data-driven decisions that support continuous digital service improvement and innovation.
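To put the IDC figures in perspective, the arithmetic below converts them into more familiar terms. Doubling every two years is equivalent to compound growth of about 41% a year, or a 32-fold increase over a decade. At 1.7 MB per second, each person would generate roughly 147 GB a day. The sketch assumes decimal units (1 GB = 1,000 MB), and the function names are illustrative.

```python
def annual_growth_from_doubling(doubling_years: float) -> float:
    """Compound annual growth rate implied by a constant doubling time."""
    return 2 ** (1 / doubling_years) - 1


def growth_multiple(years: float, doubling_years: float = 2.0) -> float:
    """How many times larger a quantity becomes after `years` of steady doubling."""
    return 2 ** (years / doubling_years)


def daily_gb_per_person(mb_per_second: float) -> float:
    """Convert a per-second rate in MB to GB per day (decimal units: 1 GB = 1,000 MB)."""
    return mb_per_second * 86_400 / 1_000


if __name__ == "__main__":
    print(f"{annual_growth_from_doubling(2):.1%} growth per year")
    print(f"{growth_multiple(10):.0f}x larger after a decade")
    print(f"{daily_gb_per_person(1.7):.1f} GB per person per day")
```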

Everyone remembers the social media boom of the mid-2000s. While social networks such as MySpace and Friendster already existed and had fledgling ad revenue models, it wasn’t until the emergence of Twitter, Facebook’s acquisition of FriendFeed, and the development of tools such as HubSpot and HootSuite that businesses began to take social media seriously as a digital channel.

By Josh Lefkowitz, CEO, Flashpoint.

Then, as is the case with all emerging technologies, market confusion began. Is social media really important in business? Is it digital marketing? Is it social media for business? Is it social marketing? Does it fit in lead generation or communications?

In the end it was rightly determined that social media is merely a tactical approach that is part of a bigger marketing and business strategy and wouldn’t be as valuable if that strategy were not developed first. And, as with most strategic development, sometimes research and more advanced tools are required to glean the information to put the right tactics in motion.

Fast forward to the mid-2010s and we’re in a similar dilemma with the crowded cyber threat intelligence (CTI) market, especially in the discussion around digital risk monitoring. According to Forrester, digital risk encompasses the cyber risk, brand risk, and physical risk emanating from open web properties, social networks, and some computer and mobile applications. Much like tactical social media tools, digital risk monitoring is most effective when a good, intelligence-rich strategy has been developed before it is implemented.

Business Risk Intelligence (BRI), on the other hand, provides strategic intelligence gleaned from the Deep & Dark Web that informs organisations of the actual threats that are critical to their business. While many organisations do have digital risk monitoring in addition to BRI, many end up adding BRI later on to close the intelligence gap that digital risk monitoring leaves open. These concerns often stem from missed information around insider threats, fraud, anti-money laundering, geopolitical intelligence and the supply chain, or from a need for more sophisticated threat actor profiling or directed actor engagement.

First, putting the tactical before the strategic is going to leave most organisations in a corner where they are missing business-critical information. Second, digital risk monitoring solutions, even if they offer data from the Deep & Dark Web, often lack expertise beyond purely automated approaches to gathering information, which can never be rich enough to be considered intelligence.

Just as strategy needs to come before tactics, BRI must come before digital risk monitoring. Digital risk solutions are good for monitoring information that is already known or, as I’ve said before, for “answering the questions companies already know to ask.” BRI, by contrast, is what helps determine what needs to change in operations, policies, and protections across an organisation.

Here’s an example based on the insider threat use case. In one incident, intelligence from an underground forum revealed that a rogue employee of a multinational technology company was preparing to profit from stolen source code for unreleased, enterprise-level software. Thanks to this intelligence, the company was alerted and then supported as it completed an internal investigation, worked with law enforcement to support the employee’s arrest, prevented the illicit sale, and preserved its intellectual property.

Digital risk monitoring could not have been used to detect or mitigate this insider threat. BRI, on the other hand, found the threat in its relevant context, enabling the company to take the appropriate steps to minimise its risk.

According to The Forrester Wave: Digital Risk Monitoring, Q3 2016: “Generic online or social media monitoring provides a false sense of security. Many [security and risk] and marketing pros remain naïve about serious risks in their organisation’s digital presence, because they believe their existing social media monitoring or cyber threat intelligence (CTI) tools will detect them. That notion, however, is increasingly misguided.”

It’s misguided, of course, because these basic tools are tactical and cannot, on their own, provide the intelligence that is needed. The challenge of digital risk is that it sits somewhere between basic social media and brand monitoring, sprinkled with traditional cyber threat intelligence. Digital risk monitoring lacks the scalable technology and manpower needed to produce BRI that helps every department in an organisation determine the best strategies for protecting its digital, human, and physical assets.

Digital risk monitoring is a helpful tool for organisations that already have rich intelligence, not just data, and failing to distinguish between the two can be problematic. It is nearly impossible to form relevant context without first considering how the data relates to the organisation’s entire risk profile, rather than treating it as just a tactical report. Observing digital risk through the open web is not enough to develop the necessary context, so it cannot enable organisations to apply and operationalise the data to address their challenges effectively. BRI must come first.

The rise of the digital workforce has proved a double-edged sword: enhanced flexibility and productivity, offset by the increased complexity of staying connected and collaborating efficiently. Yes, today’s workers have an unprecedented range of tools and applications that increase the places, platforms and processes by which they can do their jobs. At the same time, a dispersed workforce introduces new communications and collaboration challenges, not to mention technical complexity and security issues.

To that end, Andy Nolan, VP for UK, Ireland and Northern Europe at Lifesize, offers these five steps to help ‘mind the gap’ of a dispersed workforce.

The pace of communication and connectivity technology evolution and adoption has resulted in a whole new set of expectations – from customers, partners, employers and employees. We need to respond faster, be able to connect to colleagues easily, and generally adapt to an ‘always on’ world.

The challenges of staying connected are both compounded and addressed by the mobile workforce. Being able to work from anywhere sounds nice, but it also raises new questions about how all that interconnectivity and communication should be managed.

Also, surveys consistently indicate that in a typical enterprise, managers spend up to 35 per cent of their time in meetings, and executives up to 50 per cent. Being able to stay connected and collaborate with colleagues, staff, partners and customers is therefore critical, and technology must evolve with changing work scenarios.

Communication technology today is driven by consumer apps such as FaceTime, Snapchat and Skype. Employees want that type of functionality in their workplace, especially if their workplace is the home or is based around their ubiquitous mobile device. If you can FaceTime your grandmother with one click, why do you need a long dial string to connect to your boardroom?

A large fraction of lost productivity is the result of technical complications: setting up, managing, administering and securing communications and collaboration processes. Now more than ever, platforms and tools must be as painless and friction-free as possible if employees are to embrace them, if the productivity benefit is to be fully realised, and if management is to see maximum ROI.

So, while the consumerisation of communication technology gives us a lot of options and has certainly established a model for simplicity and ease of use, it has also resulted in a crowded and complicated range of tools that IT management has to deal with. Studies have shown that the average employee uses 16 apps at work, each of which represents security, quality, integration and management issues for IT. Another study, by Frost & Sullivan, revealed that 80 per cent of employees use non-approved tools and apps at work. The result is a lack of company-wide collaboration, redundant functionality, administrative confusion and security risks; in short, a major IT headache.

Adding more tools in an ad hoc way leads to user frustration, siloed work environments and a significant increase in administration costs. Sure, it’s tempting for a worker to use, at work, the newest IM app his teenage daughter is raving about, but each new addition adds a new level of complexity and potential risk that must be controlled.

If there is one reason to manage the number and types of collaboration tools in use at a company, it’s security.

Security needs to be strategically planned and baked in from the start. A standardised set of IT applications lets you take back control of the software in use, enhancing security and streamlining communication within your organisation. But this must be done without being overly restrictive or disruptive to the end user.

When it comes to efficient, productivity-oriented communication and collaboration, there is no question that video conferencing is an outstanding option, if not the best (in-person interaction aside, of course). Long perceived as a bastion of the corporate boardroom, it has now evolved into an accessible tool, easily used by anyone in the workforce, regardless of location, platform or job function. Thanks to cloud-based solutions, video conferencing is now a very straightforward proposition from a deployment, management and use standpoint. Simple one-click offerings provide the ease of use of consumer apps with the performance, features and security of enterprise solutions.

For dispersed workers and groups, the face-to-face interaction enabled by cloud-based video conferencing is unmatched by any other communication and collaboration tool, be it email, voice, web-based conferencing or IM apps. It results in greater productivity, shorter meetings, better and clearer communication and, ultimately, the ability to build better relationships across distances.

We know it’s difficult to keep up with the pace of technology and the impact it has, both positive and negative, on keeping an operation running smoothly. Factor in a dispersed workforce using any number of devices, platforms, apps and tools, and the task of keeping everyone in sync becomes even more daunting. Cloud-based video conferencing can be a critical tool in addressing the most demanding communications and collaboration requirements of the modern workforce.

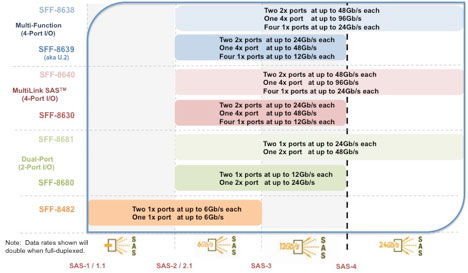

For developers and implementers of data storage solutions, it’s useful to understand the Serial Attached SCSI (SAS) connectivity documentation developed by various organizations within the SAS community and how they fit and work together.

By Jay Neer, board member, SCSI Trade Association, Molex.

The International Committee for Information Technology Standards (INCITS) is the central U.S. forum dedicated to creating technology standards for the next generation of innovation. INCITS members combine their expertise to create the building blocks for globally transformative technologies. The INCITS Technical Committee T10 creates the SCSI standards, which include the SAS standards for today’s most popular enterprise-level storage interface.

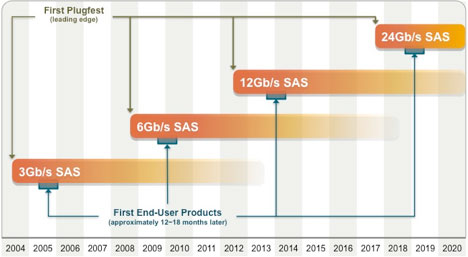

The SCSI Trade Association (STA) was formed to provide marketing support for the SAS standard and community. The roadmaps published by the STA show the progression of and the projections for SAS data rates as well as for the external I/O, internal I/O, and mid-plane connector interfaces that accompany each SAS revision. The latest revisions of the four components of the SAS Connectivity Roadmap follow and are available at https://ta.snia.org.

Figure 1 – SAS Advanced Connectivity Technology Roadmap

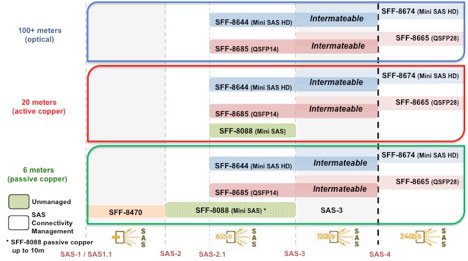

Figure 2 - SAS Advanced Connectivity External I/O Roadmap

SAS-3 interconnects have been woven into the SAS Advanced Connectivity Roadmap, which therefore highlights updates and shows when new physical connector interfaces have been incorporated into the SAS-3 standard.

- SFF-8644 (mechanical) plus SAS-3 (electrical performance)

The external I/O is provided in 4x granularity. As shown in Figure 3, 1x1, 1x2 and 1x4 fixed/host board shielded receptacles are specified.

Figure 3

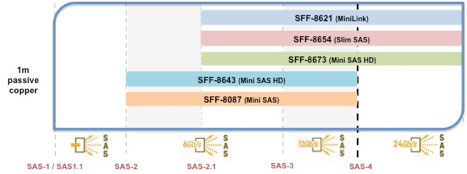

Figure 4 - SAS Advanced Connectivity Internal I/O Roadmap

Figure 5

Mating/free cables are 4x (1x1) and 8x (1x2) with the 8x capable of plugging into any two adjacent 4x ganged host board receptacles. 4x and 8x passive copper, 4x active copper and 4x active optical cables are defined in SAS-3. Four 4x ports fit within the I/O connector space defined for the low-profile PCIe add-in cards.
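As a rough illustration of the port arithmetic described above, the following Python sketch counts the lanes exposed by 1x1, 1x2 and 1x4 ganged receptacles and the number of positions where an 8x (1x2) cable could mate across two adjacent 4x ports. The function names are hypothetical, and the sketch models only port and lane counting, not any electrical or mechanical requirement of SFF-8644 or SAS-3.

```python
# Illustrative lane arithmetic for ganged 4x receptacles (hypothetical helpers,
# not part of any SFF or INCITS specification).
LANES_PER_PORT = 4  # external I/O is provided in 4x granularity


def total_lanes(ports: int) -> int:
    """Lanes exposed by a 1xN ganged receptacle made of N 4x ports."""
    return ports * LANES_PER_PORT


def adjacent_pairs(ports: int) -> int:
    """Positions where an 8x (1x2) cable can mate: any two adjacent 4x ports."""
    return max(ports - 1, 0)


if __name__ == "__main__":
    for n in (1, 2, 4):
        print(f"1x{n}: {total_lanes(n)} lanes, "
              f"{adjacent_pairs(n)} possible 8x cable positions")
```

Under this simple model, a 1x4 receptacle, matching the four 4x ports that fit a low-profile PCIe add-in card, exposes 16 lanes and offers three positions for an 8x cable.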

- SFF-8643 (mechanical) plus SAS-3 (electrical performance)

- SFF-9402 supersedes SFF-9401 and provides recommended pinouts

Any single-port, dual-port or quad-port SAS-3 storage device can physically plug into any of these three storage device receptacles. The number of active ports depends on which of the three receptacles (dual, quad or multi-protocol) has been designed onto the mid-plane, as well as on how many ports are active on the storage device itself.

Figure 6 - SAS Advanced Connectivity Mid-plane I/O Roadmap

Figure 7

The SAS standard includes a comprehensive table listing all of the approved interconnects for each revision level of the standard. The specification numbers change according to the performance requirements of each succeeding revision level of the SAS standard.

The Small Form Factor (SFF) Committee, an ad hoc industry association, develops the mechanical and auxiliary specifications for the connectors and cables that are implemented by the T10 committee and listed within the SAS standards. These specifications are also integrated into the STA roadmaps as shown above. The latest revisions of these specifications are available at https://www.snia.org/sff.

The electrical performance of all SAS interconnects and links is defined within the INCITS SAS-3 standard itself, as are the pin 1 and complete pin-out specifications. It is important to keep these kinds of interface specifications within the primary source document, the SAS-3 standard. Copies of the SAS-3 standard can be ordered from the INCITS website.

Humans are not perfect, and most people accept that. However, when running a business there is little room for human error, as even the slightest mistake can have significant consequences. Near-perfection can be achieved if businesses are prepared to invest the time needed to closely manage all their various processes and operations, but in this increasingly competitive market, where time is money, more and more firms are opting for a more ‘intelligent’ approach.

By Mani Vembu, chief operations officer at Zoho.

Imagine a business where reams of data are automatically deciphered, where every need is anticipated even before the company knows what it is, and where solutions are already in place to ensure operations always run smoothly. Under these circumstances, businesses can divert their focus towards what matters most: running their companies.

The key to finding this modern-day business nirvana is artificial intelligence (AI). With AI, tasks that may take employees hours or even days to complete can be undertaken in a fraction of the time, significantly streamlining business processes. Not only does AI speed up operations, it also eradicates the errors that are bound to creep into business operations when humans are involved.

AI also takes the complex and makes it straightforward. It’s hard enough managing the dozens of processes that are required to keep a business functioning; it’s harder still to figure out which, if any, of these processes are working optimally and how they are interconnected. Even for something as simple as contacting customers, an AI tool can figure out the best time to do so. Countless business problems, such as unhappy customers, budget overspends and high employee turnover, can be caused by inefficient processes, and these problems can be avoided.

AI solutions can be programmed to make business growth the top priority. They work because their interpretations are unbiased rather than based on hunches. For example, if three people are tasked with finding a target audience for a new software solution by looking at the same set of data, one might argue for targeting the biggest demographic, while another might advise concentrating on the second-largest demographic because it comprises more influential decision makers. The third person might conclude that the data is inadequate.

There are always multiple ways to analyse a single data set, so it is not always easy to work out which approach will be most beneficial to a business. Although reports and analytics can help, they still require deciphering. AI’s advantage is that it can summarise the data and provide intelligence-led advice on the best course of action.