This month, readers will be delighted to know that I’ve parked my own opinions and ideas, as the Green Grid news below reached me just as we were going to press. It could hardly summarise the present situation more elegantly or succinctly: plenty of organisations need to understand that they can no longer rely on doing what they’ve always done when it comes to sourcing and running their IT infrastructure, while many others are discovering data centre solutions that address the growing trends of high density, energy efficiency, high-performance compute, networking and storage, and the increasing interest in open technologies.

Enterprise server rooms will be unable to deliver the compute power and energy efficiency needed to keep up with fluctuating technology trends, driving greater uptake of hyperscale cloud and colocation facilities. Citing the latest IDC research, which predicts a continuing decline in the number of server rooms globally, Roel Castelein, Customer Services Director at The Green Grid, argues that legacy server rooms are failing to keep pace with new workload types and are causing organisations to seek alternative solutions.

“It wasn’t too long ago that the main data exchanges going through a server room were email and file storage processes, where 2-5kW racks were often sufficient. But as technology has grown, so have the pressures and demands placed on the data centre. Now, we’re seeing data centres equipped with 10-12kW racks to better cater for modern-day requirements, with legacy data centres falling further behind.

“IoT, social media, and the number of personal devices now accessing data are just a handful of the factors driving up demand for compute power and energy, which is placing further pressure on the legacy server rooms used within the enterprise. As a result, more organisations are now shifting to cloud-based services, dominated by the likes of Google and Microsoft, and to colocation facilities. This trend is not only reducing carbon footprints but also ensuring that the environments organisations are buying into are both energy efficient and equipped for higher server processing.”

IDC’s latest report, ‘Worldwide Datacenter Census and Construction 2014-2018 Forecast: Aging Enterprise Datacenters and the Accelerating Service Provider Buildout’, claims that while the industry is at a record high of 8.6 million data centre facilities, there will be a significant reduction in server rooms after this year. This is due to the growth and popularity of public cloud services, dominated by large hyperscalers including AWS, Azure and Google, whose footprint is expected to grow to 400 hyperscale data centres globally by the end of 2018.

Roel continues: “While server rooms are declining, this won’t affect the data centre industry as a whole. The research identified that total data centre floor space is expected to grow to 1.94bn square feet, up from 1.58bn square feet in 2013. And with hyperscale and colocation facilities offering new services in the form of high-performance compute (HPC) and Open Compute Project (OCP) infrastructure, more organisations will see the benefits of having more powerful, yet energy efficient, IT solutions that meet modern technology requirements.”

Greater transparency in sustainable energy practices among data centre industry players will help improve collaboration in tackling the industry’s rising carbon emissions. In Greenpeace’s 2017 green IT report, ‘Clicking Clean: Who is winning the race to build a green internet?’, many hyperscalers scored highly for their adoption of, and initiatives on, renewable energy, but other players in the industry were urged to improve advocacy and transparency, and to work more collaboratively.

Roel Castelein, Customer Services Director, The Green Grid, said: “The Greenpeace Report is a good indicator that while there are definite movements towards a more sustainable data centre industry, many organisations have pursued individual goals, rather than working together to share best practice and find the best ways to a sustainable future. Google, Facebook and Apple are constantly pushing the boundaries of green innovation, while also working closely with energy suppliers to help achieve sustainable company targets. Their ability to advocate such measures is beginning to influence the rest of the sector, yet more must be done.

“Netflix is one such hyperscaler: although it has one of the largest data footprints of all the companies profiled, it has been urged to increase its adoption of renewable energy and to advocate for greater use of renewables across the data centre industry. As the video streaming market continues to grow and produce unprecedented amounts of data, Netflix or an equally large provider setting the standard and advocating green policies could set a precedent for others to follow.”

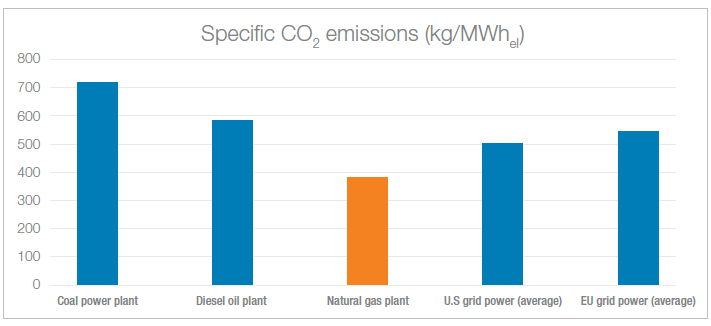

Over the five years since 2012, the amount of electricity consumed by the IT sector has increased by six per cent (to a total of 21%), making the need for a green data centre industry stronger than ever. With global internet traffic anticipated to triple by 2020, the advocacy of renewable energy for data centres will be important in sustaining the industry’s growth.

“The growth in the amount of data demands that all data centre providers come together, rather than working in silos, and be clear in their use of renewable energy in creating a more sustainable industry. Whether it’s meeting government sustainability objectives, using renewable energy as secondary sources, or pushing for stronger connections with energy suppliers, it can all contribute to enhanced efforts in tackling carbon emissions.”

Roel continued: “The need for data centre providers and end users to collaborate to ensure our use of data is sustainable has never been greater. Organisations like The Green Grid are providing the space for this to happen and are developing a range of tools to make sure that our growing dependency on technology is sustainable.”

Hybrid IT intensifies demand for comprehensive managed, consulting and professional services, finds Frost & Sullivan’s Digital Transformation team.

The increasing maturity and customer awareness of cloud services in Europe is impelling a phased migration from premise-based data centres to a cloud environment. With this shift, public cloud providers such as AWS and Google have identified a large market for Infrastructure-as-a-Service (IaaS) solutions. The convergence of cloud services with emerging applications like Big Data and Internet of Things (IoT) creates more opportunities for growth, encouraging innovations in infrastructure and platforms.

‘European Infrastructure-as-a-Service Market, Forecast to 2021’, the new analysis from Frost & Sullivan’s IT Services & Applications Growth Partnership Service program, analyses the emerging trends, competitive factors and provider strategies in the European IaaS market. Western Europe, specifically Germany, the UK and Benelux, leads the market; in due course, Eastern Europe will also emerge as an influential region.

As the pace of migration for each enterprise depends on its size, type and regional presence, IaaS providers are recognising that a one-size-fits-all solution is not ideal. They are, therefore, building variations into their portfolios to meet each customer's unique requirements.

“The varying cloud-readiness of enterprises has fostered a market for hybrid IT, and service providers are tailoring their portfolios to meet this enterprise requirement,” said Digital Transformation Research Analyst Shuba Ramkumar. “Astutely, they are seeking to cement long-term customer relations in this emerging market by offering managed, consulting and professional services to guide customers through the transition period.”

The biggest challenge for IaaS providers in Europe is the regional nature of the market, which compels them to adopt indirect channel strategies to expand their presence across European countries. Partnerships with local providers will give them a stronger foothold in countries with strict regulations requiring data to be housed within their borders.

“Meanwhile, enterprises’ increased familiarity with cloud services and recognition of their benefits will reduce the impact of adoption deterrents such as security,” noted Ramkumar. “The ability of IaaS to improve performance, data migration and management, as well as enhance business agility, will eventually attract investments from data centres of all sizes, across Europe.”

By 2018, half of enterprise architecture (EA) business architecture initiatives will focus on defining and enabling digital business platform strategies, according to Gartner, Inc.

"We've always said that business architecture is a required and integral part of EA efforts," said Betsy Burton, vice president and distinguished analyst at Gartner. "The increasing focus of EA practitioners and CIOs on their business ecosystems will drive organizations further toward supporting and integrating business architecture. This is to ensure that investments support a business ecosystem strategy that involves customers, partners, organizations and technology."

The results of Gartner's annual global CIO survey support this development. Among CIOs in organizations participating in a digital ecosystem (n = 841), the average number of ecosystem partners was 22 two years ago. Today it is 42, and Gartner estimates that two years from now it will have risen to 86. In other words, these CIOs are seeing, and expecting to see, their number of digital ecosystem partners increase by approximately 100 percent every two years.
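The "approximately 100 percent" figure can be checked directly from the three averages quoted above. This short sketch (the variable and function names are illustrative only, not taken from the Gartner survey) computes the percentage increase between each pair of consecutive readings:

```python
# Average ecosystem partners per CIO, as quoted from the Gartner survey above.
periods = ["two years ago", "today", "in two years (Gartner estimate)"]
partners = [22, 42, 86]


def growth_pct(old: float, new: float) -> float:
    """Percentage increase from old to new."""
    return (new - old) / old * 100


# Compare each period with the next one.
for i in range(len(partners) - 1):
    pct = growth_pct(partners[i], partners[i + 1])
    print(f"{periods[i]} -> {periods[i + 1]}: "
          f"{partners[i]} -> {partners[i + 1]} (+{pct:.0f}%)")
```

It reports an increase of about 91% for the first two-year period and about 105% for the second, which is the roughly twofold growth Gartner describes.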


"EA practitioners must focus their business architecture efforts on defining their business strategy, which includes outlining their digital business platform's strategy, particularly relative to a platform business model," added Ms. Burton. In addition, EA practitioners will increasingly focus on the business and technology opportunities and challenges of integrating with other organizations' digital platforms and/or defining their own innovative digital platforms.

Digital innovation continues to transform itself, and EA needs to continuously evolve to keep pace with digital. Building on the base of business-outcome-driven EA, which emphasizes the business and the execution of the business, enterprise architects are increasingly focusing on the design side of architecture — which is at the forefront of digital innovation.

Gartner predicts that by 2018, 40 percent of enterprise architects will focus on design-driven architecture. "It allows organizations to understand the ecosystem and its actors, gaining insight into them and their behavior and developing and evolving the services they need," said Marcus Blosch, research vice president at Gartner. "Many leading platform companies, such as Airbnb and Dropbox, use design-driven approaches such as 'design thinking' to build and evolve their platforms. Going forward, design-driven and business-outcome-driven approaches are set to define leading EA practice."

However, the move to design-driven architecture has implications for people, tools and services. "We recommend that enterprise architects develop the design knowledge, skills and competencies of the EA team," concluded Mr. Blosch. "They also need to educate the business on design-driven architecture and identify an area where they can start with a design-driven architecture, to not only develop innovation but also to learn, as an organization, how to do design."

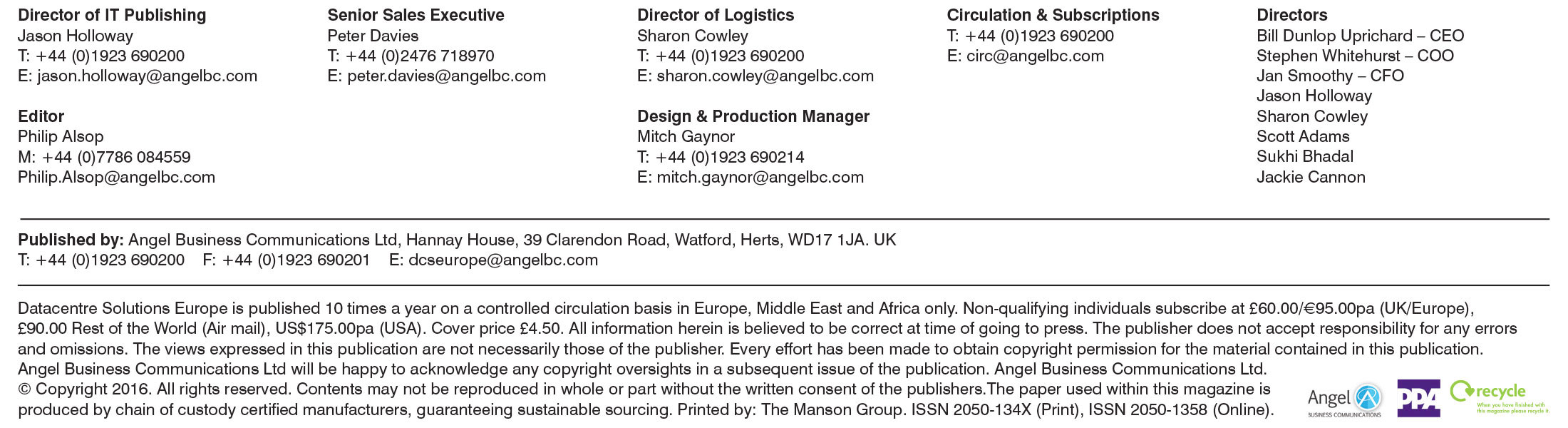

A new update to the Worldwide Semiannual Security Spending Guide from International Data Corporation (IDC) forecasts worldwide revenues for security-related hardware, software, and services will reach $81.7 billion in 2017, an increase of 8.2% over 2016. Global spending on security solutions is expected to accelerate slightly over the next several years, achieving a compound annual growth rate (CAGR) of 8.7% through 2020 when revenues will be nearly $105 billion.
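IDC's headline numbers can be sanity-checked with the standard compound-growth formula, value × (1 + CAGR)^years. The sketch below assumes, for illustration, that the 8.7% rate applies year on year from the 2017 figure; IDC's own CAGR may be measured from a different base year, so the result is only approximate. The function name `project` is hypothetical.

```python
def project(value: float, cagr: float, years: int) -> float:
    """Compound a starting value forward at a constant annual growth rate."""
    return value * (1 + cagr) ** years


# Figures quoted above: $81.7bn in 2017 and an 8.7% CAGR through 2020.
# Assumption: the rate is applied from 2017, i.e. over three years.
spend_2020 = project(81.7, 0.087, 3)
print(f"Projected 2020 spending: ${spend_2020:.1f}bn")
```

The result, roughly $104.9 billion, is consistent with the "nearly $105 billion" that IDC quotes for 2020.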

"The rapid growth of digital transformation is putting pressures on companies across all industries to proactively invest in security to protect themselves against known and unknown threats," said Eileen Smith, program director, Customer Insights and Analysis. "On a global basis, the banking, discrete manufacturing, and federal/central government industries will spend the most on security hardware, software, and services throughout the 2015-2020 forecast. Combined, these three industries will deliver more than 30% of the worldwide total in 2017."

In addition to the banking, discrete manufacturing, and federal/central government industries, three other industries (process manufacturing, professional services, and telecommunications) will each spend more than $5 billion on security products this year. These will remain the six largest industries for security-related spending throughout the forecast period, while a robust CAGR of 11.2% will enable telecommunications to move into the number 5 position in 2018. Following telecommunications, the industries with the next fastest five-year CAGRs are state/local government (10.2%), healthcare (9.8%), utilities (9.7%), and banking (9.5%).

Services will be the largest area of security-related spending throughout the forecast, led by three of the five largest technology categories: managed security services, integration services, and consulting services. Together, companies will spend nearly $31.2 billion, more than 38% of the worldwide total, on these three categories in 2017. Network security (hardware and software combined) will be the largest category of security-related spending in 2017 at $15.2 billion, while endpoint security software will be the third largest category at $10.2 billion. The technology categories that will see the fastest spending growth over the 2015-2020 forecast period are device vulnerability assessment software (16.0% CAGR), software vulnerability assessment (14.5% CAGR), managed security services (12.2% CAGR), user behavioral analytics (12.2% CAGR), and UTM hardware (11.9% CAGR).


From a geographic perspective, the United States will be the largest market for security products throughout the forecast. In 2017, the U.S. is forecast to see $36.9 billion in security-related investments. Western Europe will be the second largest market with spending of nearly $19.2 billion this year, followed by the Asia/Pacific (excluding Japan) region. Asia/Pacific (excluding Japan) will be the fastest growing region with a CAGR of 18.5% over the 2015-2020 forecast period, followed by the Middle East & Africa (MEA, 9.2% CAGR) and Western Europe (8.0% CAGR).

"European organizations show a strong focus on security matters with data, cloud, and mobile security being the top three security concerns. In this context, GDPR will drive up compliance-related projects significantly in 2017 and 2018, until organizations have found a cost-efficient and scalable way of dealing with data," said Angela Vacca, senior research manager, Customer Insights and Analysis. "In particular, Western European utilities, professional services, and healthcare institutions will increase their security spending the most while the banking industry remains the largest market."

From a company size perspective, large and very large businesses (those with more than 500 employees) will be responsible for roughly two-thirds of all security-related spending throughout the forecast. IDC also expects very large businesses (more than 1,000 employees) to pass the $50 billion spending level in 2019. Small and medium businesses (SMBs) will also be a significant contributor to security-related spending, with the remaining one-third of worldwide revenues coming from companies with fewer than 500 employees.

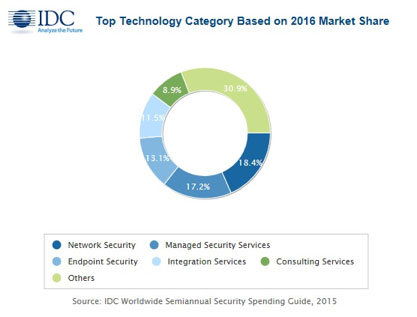

A new update to the Worldwide Semiannual Big Data and Analytics Spending Guide from International Data Corporation (IDC) forecasts that Western European revenues for Big Data and business analytics (BDA) will reach $34.1 billion in 2017, an increase of 10.4% over 2016. Commercial purchases of BDA-related hardware, software, and services are expected to maintain a compound annual growth rate (CAGR) of 9.2% through 2020 when revenues will be more than $43 billion.

"Digital disruption is forcing many organizations to reevaluate their information needs, as the ability to react with greater speed and efficiency becomes critical for competitive businesses," said Helena Schwenk, research manager, Big Data and Analytics, IDC. "European organizations currently active in Big Data programs are now focusing on scaling up these efforts and propagating use as they seek to learn and internalize best practices. The shift toward cloud deployments, greater levels of automation, and lower-cost storage and data processing platforms are helping to reduce the barriers to driving value and impact from Big Data at scale."

Banking, discrete manufacturing, and process manufacturing are the three largest industries to invest in Big Data and analytics solutions over the forecast period, and by 2020 will account for more than a third of total IT spending on BDA solutions. Overall, the financial sector and manufacturing vie with each other for the largest share of spending, with finance just edging out manufacturing, accounting for 21.5% of spending on BDA solutions compared with manufacturing's 21.2%. However, the industries that will show the highest growth over the forecast period are professional services, telecommunications, utilities, and retail.

Western Europe lags the worldwide market in overall growth, with a CAGR of 9.2% for the region, while worldwide spending will grow at a CAGR of 11.9%. The highest growth is in Latin America, while the largest regional market is the U.S. with more than half of the world's IT investment in Big Data and analytics solutions.

"The investments in the finance sector — banking, insurance, and securities and investment services — apply across a wide range of use cases within the industry," said Mike Glennon, associate vice president, Customer Insights and Analysis, IDC. "Examples include optimizing and enhancing the customer journey for these institutions, together with fraud detection and risk management, and these use cases drive investment in the industry. However, the strong manufacturing base in Western Europe will also invest in Big Data and analytics solutions for more effective logistics management and enhanced analysis of operations-related data, both of which contribute significantly to improved cost management, and hence profitability."

He added that adoption of Big Data solutions lags that of other 3rd Platform technologies such as social media, public cloud, and mobility, so the opportunity for accelerated investment is great across all industries.

BDA technology investments will be led by IT and business services, which together will account for half of all Big Data and business analytics revenue in 2017 and throughout the forecast. Software investments will grow to more than $17 billion in 2020, led by purchases of end-user query, reporting, and analysis tools and data warehouse management tools.

Cognitive software platforms and non-relational analytic data stores will experience strong growth (CAGRs of 39.8% and 38.6% respectively) as companies expand their Big Data and analytic activities. BDA-related purchases of servers and storage will grow at a CAGR of 12.4%, reaching $4.4 billion in 2020.


Very large businesses (those with more than 1,000 employees) will be responsible for more than 60% of all BDA spending throughout the forecast and IDC expects this group of companies to pass the $25 billion level by 2020. IT spending on Big Data and analytics solutions by businesses with fewer than 10 employees is expected to be below 1% of the total, even though these businesses account for over 90% of all businesses in Western Europe. These businesses need expertise and time to evaluate and adopt Big Data solutions and will rely heavily on solution providers to guide them through implementation of this technology.

From watching movies to reading books, it’s impossible to think of an aspect of our lives that has not been affected by technology. In business, even the most established sectors are adapting.

By Keith Tilley, Executive Vice President & Vice-Chair, Sungard Availability Services.

The banking sector, for example, is undergoing severe disruption due to the rise of plucky fintech start-ups. The UK has recently been hailed as number one in the world for supporting innovation in this area – but while this is a positive accolade, one can’t help but think of the pressure it is placing upon the IT department to keep up with this pace of digital transformation.

For the business, IT holds immeasurable power: offering a competitive advantage, enabling growth and playing a vital role in the market strategy. However, with so much to do, IT is becoming something of a complex beast.

Digital tools are vital for business growth today, from attracting the brightest talent[1] through to entering new markets[2]. This power has not gone unnoticed: in research recently undertaken by Sungard Availability Services, 79% of IT decision makers (ITDMs) stated that digital transformation is vital to remaining competitive.

In tandem, employees are placing growing importance on the power of digital technology, believing it will improve productivity, allow them to develop new skills, and make their jobs easier. With UK businesses facing ongoing market uncertainty following the EU referendum and subsequent vote for Brexit, could this digital revolution be the key to futureproofing organisations during these turbulent times?


The pressure is certainly on for the IT department: 50% of IT decision makers fear that they cannot drive digital transformation forward at the speed their management team expects. Combine this with the fact that 32% of employees believe their employers are not driving digital transformation as fast as competitors are, and you have the ingredients for a disaster – commercially speaking.

When too much pressure is heaped on IT, the department struggles to deliver the best quality service to end users – impeding businesses from innovating to remain competitive in their fields.

Unsurprisingly, this affects more than just the IT department and is having a knock-on effect upon the whole organisation. Nearly a third of employees (30%) confessed that new digital tools are making their jobs more difficult, while 31% said they made their roles more stressful.

As the demand for digital continues, reining in disruptive IT has never been more critical – and those who fail to do so will risk everything from staff retention[3] and customer satisfaction, through to their very survival.

This pace of change will not abate. With this in mind, here are some top tips for a more manageable IT estate:

Seek out the right skills: Bringing in the right talent – inside and outside of the IT department – who can help drive your digital culture forward will be vital. Remember that soft skills are just as important as technical ability.

Communicate clearly: Keeping a clear and open dialogue with the wider business will not only help the IT department understand exactly where business priorities lie, but will help prevent employee and management expectations from getting out of hand.

Secure adequate resources: Changing a company’s culture understandably requires investment. Communicating the benefits associated with digital transformation – and investing in training for those who need it – is crucial to ensure that the wider business both pays its fair share of the associated costs, and receives due positive outcomes too.

Don’t go it alone: Turning to colleagues and tech champions outside the IT department can help drive change. Additionally, partnering with an appropriately experienced managed services provider can allow the IT department to focus on delivering new, innovative services, rather than getting waylaid by small, fiddly system maintenance tasks, or overwhelmed by the changes undertaken.

Perhaps one of the biggest mistakes lies in the connotations associated with the term ‘digital transformation’. Many have mistakenly assumed it should be an all-encompassing overhaul; but a revolution doesn’t come out of nowhere, and it can be built one step at a time. By aligning IT to business outcomes, you can then use it to create new opportunities, build better working practices, and ultimately improve the competitive strength of your business.

[1] 35% of UK businesses consider digital success vital to attracting graduate talent

[2] 59% of UK IT decision makers believe digital success results in increased business agility, with 43% stating that revenue growth will result

[3] 34% of UK employee respondents said they would leave their current organisation if they were offered a role at a more digitally progressive company

Luckily, things have moved on around Big Data in the last 10 years, but there’s still an awful lot of confusion and frustration when it comes to analytics. By Bob Plumridge, Director and Treasurer, SNIA Europe.

Confusion of this kind is not unique. Companies struggle to exploit Big Data, partly because they don’t know how to overcome the technical challenges, and partly because they do not know how to approach Big Data analytics. The most common problem is data complexity. Often, this is self-inflicted, as companies starting out with Big Data analytics try to “boil the ocean.” As a result, IT teams become overwhelmed and the task turns out to be impossible to solve. It is true that data analytics can deliver important business insights, but it’s not a solution for every corporate problem or opportunity.

Complexity can also be a symptom of another problem, with some companies struggling to extract data from a hotchpotch of legacy technologies. The reality is that many companies will be tied to legacy technologies for years to come, and they need to find a way to work within this context rather than trying to escape it, an attempt that will most likely fail.

Another source of trouble is setting wrong or poorly planned business objectives. This can result in people asking the wrong questions and interrogating non-traditional data sets through traditional means. Take Google Flu Trends, an initiative launched by Google to predict flu epidemics. It made the mistake of asking: “When will the next flu epidemic hit North America?” When the data was analysed, it emerged that Google Flu Trends had missed the 2009 US epidemic and consistently over-predicted flu trends, and Google eventually stopped publishing its estimates in 2015. An academic later speculated that if the researchers had asked “what do the frequency and number of Google search terms tell us?” the project might have proved more successful.


The renowned American poet, Henry Wadsworth Longfellow, once wrote: “In character, in manner, in style, in all things, the supreme excellence is simplicity”. Too often, people associate simplicity with a lack of ambition and accomplishment. In fact, it’s the key to unlocking a great deal of power in business. Steve Jobs once said you can move mountains with ‘simple’.

Over the years, technology has progressed by getting simpler rather than more complex. However, this doesn’t mean the back end isn’t complicated. Rather, a huge amount of work goes into creating an intuitive user experience. Consider Microsoft Word: every time you type, transistors switch on or off and voltage changes take place all over the computer and its storage media. You only see the document, but a lot of technical wizardry is happening in the background.

Extracting meaningful value from data depends on three disciplines: data engineering, business knowledge and data visualisation. To achieve all three, you need a team of super humans who can code in their sleep, have a nose for business and an expansive knowledge of their industry and adjacent industries, are supreme mathematical geniuses, and have excellent management and communication skills. Or you need technology that can abstract away all these challenges and create a platform layer which does most of the computation in the background.

However, there is a caveat. Even if you eschew complexity and embrace a simplified data platform, you still need data savvy people. These data scientists won’t have to train for three years to memorise the finer points of Hadoop, but they will need to understand Big Data challenges.

There are companies that provide the methods and point businesses in the right direction, but businesses still need to uncover what questions to ask, and what kind of answers to expect. How businesses can equip themselves with the right skills for the job is an extremely important issue to consider.

While Big Data projects may stall or fail for any of the above reasons, we are starting to see more businesses “succeed and transform”, mainly thanks to the huge strides made in stripping complexity out of the front end through platform-layer technology.

Take the Financial Industry Regulatory Authority, Inc. (FINRA), a private self-regulatory organisation (SRO) and the largest independent regulator for all US-based securities firms. Thanks to the approaches described above, the financial watchdog has been able to find the right ‘needles’ in its growing data ‘haystack’. Analysts can now access any data in FINRA’s multi-petabyte data lake to identify trading violations automatically, making the process 10 to 100 times faster: a difference of seconds versus hours.

As a result, FINRA achieved simplicity and greater control of its data. It ordered brokerages to return an estimated €90 million in funds obtained through misconduct during 2015, nearly three times the 2014 total.

Big Data projects don’t have to confound and confuse. They can bring breakthrough lightbulb moments, provided they’re grounded in simplicity. Let the technology do the difficult stuff – in all else, keep it simple.

One of the most important events in the datacenter industry calendar, the Open Compute Project (OCP) Summit took place in Santa Clara in March 2017. Jeffrey Fidacaro of 451 Research was there and gives us his take on OCP adoption, as well as the hot topics and news at the summit.

The value proposition of OCP-based designs – lower cost, highly efficient, interoperable, scalable – appears to be well understood by the industry even with some healthy scepticism around the magnitude of savings announced by some hyperscalers.

However, despite the maturing hardware ecosystem and the known benefits of open compute, many vendors are still waiting for signs of an anticipated wave of non-hyperscale adoption. We believe this is primarily because of a lack of maturity around the procurement, testing and certification, and support functions that firms are accustomed to with traditional (non-OCP) hardware.

There are some systems integrators and others stepping up to fulfil these functions and to help with integration, but there is more work ahead before enterprises and other non-hyperscale buyers can confidently overcome their caution. There is clearly a high level of enterprise interest in OCP, as well as momentum among suppliers and service providers. We believe broader adoption is a question of when – not if.

The OCP community is working hard on many levels to address these challenges. Most visibly, it has launched an online OCP Marketplace, where users can search for OCP hardware and find where to order it.

Major hyperscale announcements at the OCP event included Microsoft's support of ARM server processors for Azure cloud OCP servers, and Facebook's OCP server portfolio refresh that includes a new server type. Intel announced a collaboration with small software supplier Virtual Power Systems to contribute software-defined power technology to the community. Equinix, the largest colocation provider by revenue, announced broad OCP support, while some of the leading OCP hardware makers discussed their plans to drive greater OCP adoption. This year's event also focused on the open software stack including open network switch software and new software-defined storage announcements from NetApp and IBM.


Since its inception in 2011, the OCP ecosystem has grown to 198 official members. OCP hardware deployments, however, have been primarily in hyperscale environments – Facebook, Microsoft and Google – and a handful of large financial institutions. The maturity of OCP hardware and vendor support was evident at the event, with a vendor show floor that was easily twice the size of last year's.

At this year's Summit, Facebook and Microsoft made significant announcements, while Google was notably quiet (and absent from the keynote roster). Google joined the OCP a year ago, and has since shared its 48V rack designs with the community – a higher voltage than the 'traditional' 12V OCP designs.
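Why move from 12V to 48V? For a given rack load, current scales inversely with voltage (P = V × I), and resistive loss in the distribution path scales with the square of the current (I²R). The sketch below uses a hypothetical 12 kW rack and a hypothetical 1 milliohm busbar path; these figures are illustrative assumptions, not values from Google's or OCP's designs.

```python
# Idealised comparison of DC rack distribution at 12 V versus 48 V.
# Assumptions (illustrative only): same rack load and same path resistance at both voltages.

def distribution(power_w: float, voltage_v: float, resistance_ohm: float) -> tuple[float, float]:
    """Return (current in A, resistive I^2*R loss in W) for a DC feed."""
    current = power_w / voltage_v          # P = V * I
    loss = current ** 2 * resistance_ohm   # conduction loss in the busbar/cabling
    return current, loss

RACK_LOAD_W = 12_000      # hypothetical 12 kW rack
PATH_RESISTANCE = 0.001   # hypothetical 1 milliohm distribution path

for volts in (12, 48):
    amps, loss = distribution(RACK_LOAD_W, volts, PATH_RESISTANCE)
    print(f"{volts} V: {amps:.0f} A, {loss:.1f} W lost in distribution")
```

Quadrupling the voltage cuts the current to a quarter and the conduction loss to a sixteenth, which is the basic physics behind the interest in 48V racks; real savings also depend on conversion stages that this sketch ignores.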

A focus of the 2017 Summit was on networking components, as well as the software stacks running OCP gear. A panel discussion highlighted the need for greater interoperability between, for example, network operating systems, software-defined storage, OpenStack and Linux.

One of the major announcements at the Summit was Microsoft's support of ARM-based servers integrating chips from Cavium and Qualcomm (and others) into its OCP-designed servers. While ARM-based servers have been around for some time (with little traction), support by Microsoft presents a significant threat to Intel's monopoly in the world of server processors. Microsoft also announced it will integrate AMD's new x86 server chip, Naples, which is shipping in 2Q 2017. (Look for a separate 451 Research report that delves deeper into the ARM and AMD, versus Intel, server chip battle.)

Microsoft ported its Windows Server operating system to run on 64-bit ARM processors, and is strategically testing the ARM servers for specific (not all) workloads – primarily for cloud services. This includes its Bing search engine, big-data analytics, storage and machine learning. This is significant, because Microsoft claims that these workloads make up nearly half of its cloud datacenter capacity. Microsoft noted that the ARM-based version of Windows Server will not be available externally.

The ARM-based servers are part of Microsoft's Project Olympus platform (first announced in November 2016), which includes OCP designs for a universal motherboard, a universal rack power distribution unit, power supply and batteries, and rack management card. Project Olympus is a new development model whereby designs are intentionally shared with the OCP community when they are 50% complete, to encourage collaboration and speed innovation. Intel and AMD are also working with Microsoft to have their newest processors (respectively, Skylake and Naples) included as part of the Project Olympus specifications.

At the Summit, Facebook introduced a full refresh of its OCP portfolio, including a seventh OCP server type, and updated its software stack. The new Type VIII server combines two systems – the Tioga Pass dual-socket server and the Lightning storage (JBOF) – to maximize shared flash storage across servers. Its next-generation storage platform, Bryce Canyon, is designed for handling high-density storage (photos and videos) and can support up to 72 hard disk drives in a 4-OpenU chassis.

Facebook also updated its Big Sur GPU server to Big Basin using the latest generation GPU processors, and increased its memory from 12GB to 16GB, allowing it to train machine-learning models that are 30% larger compared to its predecessor. Other refreshes included the Yosemite v2 server (four single-socket compute nodes) and Wedge 100S top-of-rack network switch.

In the storage space, NetApp announced it is offering a software-only version of its ONTAP operating system, ONTAP Select, for use with OCP storage hardware in a private cloud. IBM also released its Spectrum Scale storage software for OCP. These enterprise storage operating systems are now unbundled, and bolster the software-defined storage stack that had been missing in OCP.

A new online OCP Marketplace was launched where products can be reviewed and sourced by the community. Last year, an incubation committee was established that developed two designations: OCP Accepted (full hardware design specifications that are contributed to the OCP community) and OCP Inspired (designs that hold true to an existing OCP specification).

The marketplace currently lists 70 OCP products that are ready to purchase. We believe this is a positive initial step in aggregating available OCP-based hardware in one location, but more curating and certification may be needed to resolve some of the enterprise challenges in OCP hardware procurement.

Schneider Electric, one of the leading datacenter technologies suppliers, and Microsoft announced their co-engineering of a universal rack power distribution unit (UPDU), a unique component of Project Olympus. The UPDU is based on a single PDU reference design along with multiple adapter options to accommodate varying alternating current standards and input power ratings (amps, phases and voltages) across different geographies, as well as different rack densities. The goal is to simplify global procurement, inventory management and deployment.
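The UPDU's adapters exist because the same rack can be fed very differently from region to region. As a rough illustration, the sketch below computes the power available from a few common feed types using P = V × I for single-phase and P = √3 × V(line-to-line) × I for three-phase supplies. The function name, the example feeds and the unity-power-factor, no-derating assumptions are ours for illustration; they are not taken from the Schneider Electric or Microsoft design, and real installations apply local derating rules.

```python
import math

def feed_capacity_kw(voltage_v: float, current_a: float, phases: int) -> float:
    """Approximate power available from an AC feed, in kW.

    Assumes unity power factor (kVA treated as kW) and no derating.
    voltage_v is phase-to-neutral for single-phase, line-to-line for three-phase.
    """
    if phases == 1:
        volt_amps = voltage_v * current_a
    elif phases == 3:
        volt_amps = math.sqrt(3) * voltage_v * current_a
    else:
        raise ValueError("only single-phase and three-phase feeds are modelled")
    return volt_amps / 1000

# Hypothetical regional feeds that a single PDU design might accept via adapters.
EXAMPLE_FEEDS = {
    "Europe, 230 V single-phase, 32 A": (230, 32, 1),
    "Europe, 400 V three-phase, 32 A": (400, 32, 3),
    "North America, 208 V three-phase, 30 A": (208, 30, 3),
}

for name, (volts, amps, phases) in EXAMPLE_FEEDS.items():
    print(f"{name}: {feed_capacity_kw(volts, amps, phases):.1f} kW")
```

The spread between feeds is large (a 32 A three-phase European feed carries roughly three times the power of a 32 A single-phase one), which is why a single reference design with swappable input adapters can simplify procurement and inventory.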

Also at the datacenter facilities level, Intel announced that it is working with VPS to develop software-defined power monitoring and management. We believe the broader datacenter industry will increasingly move toward the use of software-driven power management, enabling greater efficiencies and utilization.

This is not a new approach, but one that has been slow to take off – Intel's support may help to change that. Intel is integrating VPS's software with its Rack Scale Design open APIs to enable power availability where needed on-demand, as well as peak shaving among other functions. Intel and VPS have committed to contributing the specification to OCP.
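Peak shaving, one of the functions mentioned, means drawing on stored energy (for example, batteries) when demand exceeds a chosen limit, so that the upstream supply never sees the full peak. The sketch below is a deliberately simple greedy policy with hypothetical names and figures; it is not the VPS software or Intel's Rack Scale Design API, neither of which is described at this level in the announcement.

```python
def peak_shave(load_kw, cap_kw, battery_kwh, step_h=1.0, max_rate_kw=float("inf")):
    """Greedy peak shaving (hypothetical policy): return supply draw in kW per interval.

    Above cap_kw the battery covers the excess, limited by its rate and remaining charge;
    below cap_kw, spare headroom recharges it. The battery starts fully charged.
    """
    stored_kwh = battery_kwh
    supply_kw = []
    for load in load_kw:
        if load > cap_kw:
            # Discharge for the excess, but never more than the battery holds.
            discharge = min(load - cap_kw, max_rate_kw, stored_kwh / step_h)
            stored_kwh -= discharge * step_h
            supply_kw.append(load - discharge)
        else:
            # Recharge using headroom under the cap, up to full capacity.
            charge = min(cap_kw - load, max_rate_kw, (battery_kwh - stored_kwh) / step_h)
            stored_kwh += charge * step_h
            supply_kw.append(load + charge)
    return supply_kw

hourly_load = [80, 120, 130, 90]   # hypothetical hourly load, kW
print(peak_shave(hourly_load, cap_kw=100, battery_kwh=40))
```

Here the first peak is held at the 100 kW cap, but the second exceeds what the 40 kWh battery has left, so the supply briefly sees 110 kW. A production controller would forecast load and schedule storage rather than react greedily.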

The leading OCP server manufacturers (ODMs), Quanta Cloud Technology and Wiwynn, are assessing more integrated OCP rack offerings, in a bid to simplify the procurement process. But in our view, they still need to develop stronger channel and distributor relationships to get closer to resembling the traditional supply chain.

A number of systems integrators at the event shared a common strategic mandate to fulfill the intermediary role between enterprise and non-hyperscale buyers and the OCP supply chain. The availability of OCP-compliant space at colocation providers is likely to also be an enabler. Equinix, the largest colocation supplier by revenue, joined the OCP in January, including the OCP Telco Project that launched a year ago (and has since grown from 20 to over 100 participants).

At the Summit, Equinix announced that it would adopt OCP hardware at its International Business Exchange datacenters to support certain infrastructure services. Equinix discussed with 451 Research its broader intent to support – and in some cases, help facilitate via interoperability testing – OCP adoption among its customers and partners. This could include the top 10 cloud providers, plus hundreds of smaller cloud customers. Equinix's broader OCP strategy will be discussed in greater detail in a forthcoming report.

Several other colocation providers are also paving the way for OCP inside their facilities. Aegis Data made OCP-compliant space available in its HPC-designed datacenter outside of London and, along with Hyperscale IT (a hardware reseller and integrator) and DCPro Development (datacenter training), stood up OCP hardware and held an awareness course in February.

CS Squared, a datacenter consultant, opened an OCP lab in a Volta colo datacenter in London (mirroring Facebook's Disaggregated Lab in California). More recently, the Dutch colo Switch Datacenters announced a data hall in one of its Amsterdam facilities that is suitable for Open Rack systems.

In reality, the 'end to end' service offerings available today for the non-hyperscale buyers of OCP hardware, from procurement to maintenance and support, are still a work in progress. It is moving forward and evolving but, in our opinion, is still too onerous or complicated for most.

Further investment and a concerted effort from the ODMs, integrators, colos and others in the supply chain will be required. But once the issues around procurement, testing and support are fully resolved, it could be a tipping point for broader non-hyperscale OCP adoption.

Jeffrey Fidacaro is a Senior Analyst in the Datacenter Technologies and Eco-Efficient IT practices at 451 Research

By Steve Hone CEO

The DCA, Trade Association for the data centre sector

Throughout the year we invite DCA members to submit articles on a variety of subject matters related to the data centre sector. Many highlight common challenges which operators of data centres face on a day-to-day basis. Often these thought leadership articles provide details and awareness of possible solutions, which helps the data centre sector move forward and overcome similar challenges.

In this month’s DCA journal we thought we would provide members with the opportunity to submit some detailed customer/client case studies providing examples of just how innovative solutions have been applied and implemented.

Independent research has shown that the majority of us are fairly risk averse, do not like change and are reluctant to be the first to introduce something new which may backfire or fail to deliver.

Now I’m not saying that change is easy or without risk, far from it - nothing worth doing came easy after all! However, the change can be made easier and the risks dramatically reduced if you can be reassured that you are not the first to face a particular challenge. Things improve if you are facing a challenge that has already been successfully solved by someone else who was in exactly the same position as you. That’s where ‘real life’ case studies come into their own and are “worth their weight in gold”.

Reading about other businesses that have already implemented what you are considering can be a real confidence boost. Far from imploding, these innovative businesses have come out the other side stronger and better prepared than ever for what lies ahead.

Last month I spent two days at DCW 2017 at ExCeL in London. While there, I took the opportunity to visit various DCA members, many of whom were also exhibiting. During our conversations I raised the subject of case studies and found that many members had case studies which they felt would be of value, but they also confessed that these were not always easy to find on their own websites.

Over the coming months, with the membership’s support, we intend to gather as many member case studies as possible to build a document library within the new DCA website (due out in the summer). The intention is to make these invaluable case studies provided by DCA members easier to find and refer to.

As always, a big thank you for all the contributions submitted this month. If you would like to participate in this case study initiative please contact the DCA.

Next month the theme will be Predictions and Forecasts (deadline for copy is 11th April). This is a broad title and a great opportunity for members to share their thoughts on what they feel lies ahead, the challenges we might need to overcome or the innovations we can look forward to.

If you would like to submit an article please contact Amanda McFarlane. Amandam@datacentrealliance.org

Chatsworth Products – Customer Case Study

Basefarm, a leading, global IT hosting and colocation services provider, securely hosts more than 35,000 services and reaches over 40 million end users worldwide in industries ranging from finance and government, to media and travel. Headquartered in Oslo, Norway, Basefarm offers its customers advanced technology solutions, high-end cloud services, application management and colocation from its six data centres located throughout Europe.

In response to growing demand for its colocation services, Basefarm set out in March 2015 to design and build a green, state-of-the-art data centre—Basefarm Oslo 5.

“The brief for the new site was to create the most energy-efficient data centre in Oslo,” said Ketil Hjort Elgethun, Senior System Consultant, Basefarm. “Cooling was a key factor in the design, so we set out to find an airflow containment and cabinet package that could maximise the return on our cooling equipment, and provide a thermal solution for all the racks and cabinets within, whilst being flexible and easy to use.”

What Basefarm sought was a customised cabinet and containment solution, one that would allow it to rapidly respond to the future deployment of integrated cabinets, as well as accommodate a variety of cabinet sizes. With Chatsworth Products’ (CPI) Build To Spec (BTS) Kit Hot Aisle Containment (HAC) Solution and GF-Series GlobalFrame® Gen 2 Cabinet Systems, Basefarm found the optimal solution.

“The cabinet platform on which your enterprise is built is just as critical as the equipment it stores. Using a properly configured cabinet that is designed to fit your equipment and work with your data centre’s cooling system is crucial,” commented Magnus Lundberg, Regional Sales Manager, CPI.

Integral to the design of Basefarm Oslo 5 was a state-of-the-art, ‘Air-to-Air’ cooling system, designed to deliver high levels of cooling with exceptionally low power consumption.

This innovative cooling technology demanded a containment strategy that could effectively manage the separation of hot and cold airflow. The solution needed to be of the highest quality, easy to work with and flexible enough to accommodate a mix of cabinets and equipment from multiple suppliers.

HAC solutions isolate hot exhaust air from IT equipment and direct it back to the CRAC/CRAH units through a vertical exhaust duct, which guides the hot exhaust air away from the cabinet to support a closed return application, making the cooling units more efficient. This ability to isolate, redirect and recycle hot exhaust air was exactly what Basefarm was looking for in its new super-efficient data centre design.

Basefarm worked closely with CPI engineers to create a layout that included the BTS Kit, allowing the flexibility to field-fabricate ducts over the contained aisle. This was key to the ongoing needs of Basefarm Oslo 5 because the BTS Kit can be used over a mix of cabinets of different heights, widths and depths in the same row and can be ceiling- or cabinet-supported. BTS Kit also features an elevated, single-piece duct, allowing cabinets to be removed, omitted or replaced as required. With a high-quality Glacier White finish that reflects more light, a durable construction and a maintenance-free design, the BTS Kit has given Basefarm the security of enduring performance in building its new mission critical data centre.

Along with the BTS Kit, CPI supplied Basefarm with a range of GF-Series GlobalFrame Cabinets with Finger Cable Managers installed. The GF-Series GlobalFrame Cabinet System is an industry-standard server and network equipment storage solution that provides smarter airflow management. Also in a Glacier White finish, GlobalFrame Cabinets feature perforated areas on the doors that are 78 percent open to maximise airflow.

“During the design phase, we considered many different options for cabinet and containment solutions. CPI’s GlobalFrame Cabinets and the BTS Kit stood out as the best-in-class to meet our needs in building a super-efficient data centre,” added Elgethun.

With CPI’s cabinets and aisle containment, Basefarm had deployed a solution that allowed them to:

The dream of building Oslo’s most reliable and sustainable data centre became a reality, just one year after the design process began, when phase 1 of Basefarm Oslo 5 was completed. In Spring 2016, the new data centre went live, offering Basefarm customers access to more than 10 megawatts of critical capacity and up to 6000 square metres of white space. Located only five kilometres away from Basefarm’s existing data centre, Basefarm Oslo 5 will be used as part of a dual site solution for customers requiring high levels of redundancy.

Finding an innovative and low-cost cooling solution was a top-level priority for the Basefarm design team from the start. In a facility designed to grow over the next five years, this was a chance not only to meet the desired Power Usage Effectiveness (PUE) goal of 1.1, but also to break new ground and become a model for other green data centres to follow.
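PUE is the ratio of total facility power to the power delivered to IT equipment, so a PUE of 1.1 means roughly 0.1 W of overhead (cooling, power distribution losses, lighting) for every watt of IT load. The sketch below makes that arithmetic concrete. It treats the full 10 MW of critical capacity as running IT load and compares the 1.1 target with a hypothetical PUE of 1.5 chosen for contrast; both are simplifying assumptions, and PUE is properly measured from energy over time rather than from a single power snapshot.

```python
def pue(total_facility_kw: float, it_kw: float) -> float:
    """Power Usage Effectiveness = total facility power / IT equipment power."""
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_kw / it_kw

def overhead_kw(it_kw: float, target_pue: float) -> float:
    """Non-IT power implied by a PUE: total - IT = IT * (PUE - 1)."""
    return it_kw * (target_pue - 1.0)

IT_LOAD_KW = 10_000  # assumption: the full 10 MW of critical capacity in use
for target in (1.1, 1.5):
    extra = overhead_kw(IT_LOAD_KW, target)
    print(f"PUE {target}: {extra:,.0f} kW overhead, "
          f"{(IT_LOAD_KW + extra) / 1000:.1f} MW total facility load")
```

Under these assumptions the 1.1 target implies about 1 MW of non-IT load, against about 5 MW at a PUE of 1.5.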

With the flexibility CPI’s BTS Kit and GlobalFrame Cabinet Systems provided, Basefarm can now quickly scale the new data centre to accommodate its clients’ future colocation needs.

“The combination of the quality and design of CPI’s products and their responsiveness during deployment, meant it was a win-win situation for Basefarm. The cost of improving our PUE numbers by 10 percent is a small cost compared to being able to use the space 80 percent more effectively. And it looks great!” Elgethun stated.

Taking a team approach was the key to building the trust needed for Basefarm and CPI to work together to successfully design and build Oslo’s biggest and greenest data centre yet–Basefarm Oslo 5.


At Chatsworth Products (CPI), it is our mission to address today’s critical IT infrastructure needs with products and services that protect your ever-growing investment in information and communication technology. We act as your business partner and are uniquely prepared to respond to your specific requirements with global availability and rapid product customisation that will give you a competitive advantage. At CPI, our passion works for you. With over two decades of engineering innovative IT physical layer solutions for the Fortune 500 and multinational corporations, CPI can respond to your business requirements with unequalled application expertise, customer service and technical support, as well as a global network of industry-leading distributors. Headquartered in the United States, CPI operates from multiple sites worldwide, including offices in Mexico, Canada, China, the United Arab Emirates and the United Kingdom. CPI’s manufacturing facilities are located in the United States, Asia and Europe. For more information, please visit www.chatsworth.com

Brendan O’Reilly, Sales Director – Blygold UK Ltd

For several years there has been increasing interest in the energy consumption of data centres. Increasingly power-hungry servers produce more heat, and more powerful and advanced cooling technology is required to remove that heat and allow the servers to operate at optimal conditions.

Alongside the direct power consumption of the servers, the indirect power consumption of the cooling installation plays a key role in energy-efficient data centres. This has prompted research and the market introduction of new technologies. Some of these developments focus on the process inside the data centre from which the heat must be removed; others focus on the equipment outside the data centre that is essential for releasing the heat to the environment. Together these developments should result in a well-designed, easy-to-maintain cooling system that constitutes only a small part of the total data centre's energy consumption.

It is clear that, in the long term, operational costs will far exceed the initial investment. Yet a large part of the operational costs is fixed by choices made during the initial investment process. The choices made in the design phase of data centre cooling equipment will affect not only the initial efficiency and power consumption but may also have a major impact on these parameters in the future.

A good example of this is the choice of the type and material of the heat exchangers that are used in most installations. In the process industry or power plants, heat exchangers are considered key elements for optimal process efficiency. Loss of heat transfer in these elements affects the efficiency of the whole installation and is therefore carefully monitored and corrected if necessary. In data centre design and operation, however, these heat exchangers seem to receive less attention than in other industries.

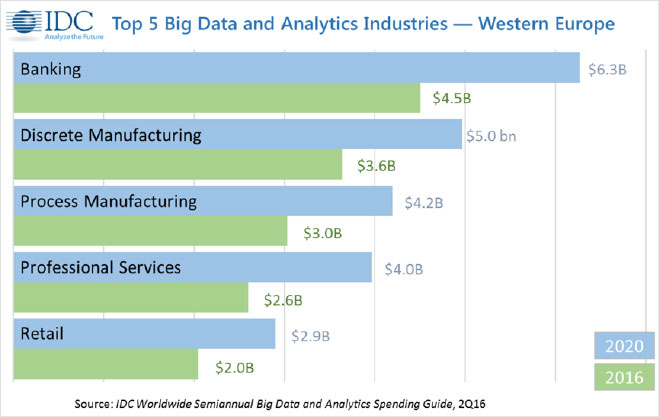

To understand how corrosion and pollution in heat exchangers (HX) can have such an impact on the efficiency of cooling installations, we can look at the basics of the cooling process. In all cooling installations the refrigeration cycle uses the evaporation of a liquid to absorb heat. The absorbed heat is then released to the environment at a higher pressure and temperature. The cycle of evaporation, compression, condensing and expansion is shown simply in figure 1.

The white lines show the normal cycle where the system works in optimal condition. The red lines show the cycle when the condensing temperature rises. This can be due to higher outside temperatures or to a less efficient heat exchanger. The higher condensing temperature requires extra power input while the effective capacity is reduced. Because an increased condensing temperature has this double effect, the efficiency of the cooling equipment is reduced significantly.
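A simple way to see why every degree of condensing temperature matters is the ideal (Carnot) coefficient of performance for cooling, COP = T_evap / (T_cond - T_evap), with temperatures in kelvin. Real equipment achieves well below this bound, and the sketch captures only the extra compressor work per unit of heat removed, not the loss of capacity also described above. The 10 °C evaporating temperature and the condensing temperatures are hypothetical values chosen for illustration.

```python
def carnot_cop(evap_c: float, cond_c: float) -> float:
    """Ideal (Carnot) cooling COP between evaporating and condensing temperatures in deg C."""
    t_evap = evap_c + 273.15   # convert to kelvin
    t_cond = cond_c + 273.15
    if t_cond <= t_evap:
        raise ValueError("condensing temperature must exceed evaporating temperature")
    return t_evap / (t_cond - t_evap)

EVAP_C = 10.0  # hypothetical evaporating temperature
baseline = carnot_cop(EVAP_C, 40.0)
for cond in (40.0, 41.0, 45.0, 50.0):
    cop = carnot_cop(EVAP_C, cond)
    # Work per kW of cooling is 1/COP; compare it with the 40 C baseline.
    print(f"condensing at {cond:.0f} C: ideal COP {cop:.2f}, "
          f"work per kW cooling {1 / cop:.3f} kW ({(baseline / cop - 1) * 100:+.1f}%)")
```

In this idealised range each extra degree of condensing temperature adds roughly 3% to the compressor work per kW of cooling, which is the arithmetic behind "every degree counts".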

For an acceptable energy consumption of the cooling installation it is essential to keep the condensing temperature as low as possible. Every degree counts! This is the point where a close look at the heat exchangers in the system becomes vital. These heat exchangers must be kept clean and free of corrosion. The right choices in the design phase determine the performance in the long term.

Heat exchangers are designed to exchange heat between media without direct contact between those media. Aluminium and copper are good materials for this purpose as they have high heat conductivity. Standard liquid‐to‐air heat exchangers are made with copper tubes and aluminium fins.

A weakness in this design is the joint between the copper and aluminium. As long as the fins are tightly joined to the copper tube, without gaps or interference of organic layers or corrosive products, the heat transfer will be optimal. Pollution on the fin surface will also influence the heat transfer of a heat exchanger and the airflow through it.

The joint between the copper tubes and aluminium fins is one of the more corrosion-sensitive parts of an air‐conditioning unit. As aluminium is less noble than copper, it will be sacrificed in the presence of electrically conducting fluids. These fluids will always be present due to pollution and moisture from the environment.

The accelerated corrosion due to the presence of different metals is called galvanic corrosion and is one of the main problems in copper‐aluminium heat exchangers. An example of this galvanic corrosion is given in figure 2. The joint that existed between copper and aluminium is now replaced by a copper aluminium oxide joint. The heat conductivity of aluminium oxide is much lower than that of aluminium. Therefore, the heat transfer between copper tubes and aluminium fins is significantly decreased.

If pollution on the fins limits the airflow through the heat exchanger, the temperature of the air that is passing over the aluminium fins will increase (the same kW in less kg of air). This will cause the temperature difference between the liquid/gas in the copper tube and the air passing over the fins to decrease. A smaller temperature difference will result in reduced heat transfer. The only way the system can cope with this loss of heat transfer is the undesirable increase of the condensing pressure and temperature.
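This mechanism, together with the reduced conductance of a corroded fin-to-tube joint, can be sketched with the standard effectiveness-NTU method. For a condensing refrigerant at a roughly constant temperature, effectiveness is ε = 1 - exp(-NTU), with NTU = UA / (m_dot × c_p), and the heat rejected is Q = ε × m_dot × c_p × (T_cond - T_air,in). Rearranged, this gives the condensing temperature the system must reach to reject a fixed load. The duty, airflow and UA values below are hypothetical, and the model ignores desuperheating and subcooling.

```python
import math

CP_AIR = 1.005  # kJ/(kg*K), specific heat of dry air near room temperature

def condensing_temp_c(heat_kw: float, air_in_c: float,
                      air_flow_kg_s: float, ua_kw_per_k: float) -> float:
    """Condensing temperature (deg C) needed to reject heat_kw to the air stream.

    Effectiveness-NTU for a constant-temperature (condensing) side:
    eps = 1 - exp(-NTU), NTU = UA / C_air, Q = eps * C_air * (T_cond - T_in).
    """
    c_air = air_flow_kg_s * CP_AIR           # air heat-capacity rate, kW/K
    ntu = ua_kw_per_k / c_air
    effectiveness = 1.0 - math.exp(-ntu)
    return air_in_c + heat_kw / (effectiveness * c_air)

DUTY_KW, AIR_IN_C = 100.0, 30.0  # hypothetical condenser load and ambient air
cases = {
    "clean coil": (10.0, 15.0),                       # (airflow kg/s, UA kW/K)
    "fouled fins, 20% less airflow": (8.0, 15.0),
    "plus corroded joints, 20% lower UA": (8.0, 12.0),
}
for label, (flow, ua) in cases.items():
    t_cond = condensing_temp_c(DUTY_KW, AIR_IN_C, flow, ua)
    air_out = AIR_IN_C + DUTY_KW / (flow * CP_AIR)   # same kW in less kg of air
    print(f"{label}: air leaves at {air_out:.1f} C, condensing at {t_cond:.1f} C")
```

Each form of degradation pushes the condensing temperature up by a few degrees for the same heat load, which is exactly the undesirable response the paragraph above describes.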

In the design phase, engineers must take into account the effect that corrosion and pollution will have during the lifetime of a cooling installation. Corrosion can of course be controlled by selecting the right materials, but the type of heat exchanger is also important. The use of indirect adiabatic cooling on heat exchangers will create massive galvanic corrosion due to the amount of moisture brought into the heat exchanger.

Protecting heat exchangers from corrosion and accumulating pollution is essential for the capacity and energy consumption of a cooling installation. The options available on the market to achieve this can be divided into three categories: metal optimization, pre‐coated metals and post‐coated metals.

Metal optimization consists of looking at different metals or alloys to reduce the risk of corrosion. Using copper fins instead of aluminium is a good example. The resulting copper tube–copper fin heat exchangers will no longer suffer from extreme galvanic corrosion. Apart from the price and the weight, the disadvantage is that in industrial environments, sulphurous and nitrogen contamination will cause high levels of metal loss. Heat transfer will not be directly affected, but the lifetime of the heat exchangers will decrease significantly.

Pre-coated aluminium is often offered as a “better than nothing” solution against corrosion in heat exchangers. Its ease of application makes it a cheap and tempting solution. In this case the aluminium fin material receives a thin coating layer before being cut and stamped to shape. As a result, the protective layer is damaged during cutting and stamping, leaving hundreds or thousands of metres of unprotected cut edges in every single coil. In addition, the protective layer sits between the copper tube and the aluminium fin, which reduces heat transfer even before any corrosion is present.

Post-coating is a technique in which corrosion-protective coatings are applied to heat exchangers after full assembly. If the right coating and the right procedures are applied, the metals will be sealed off from the environment without reducing heat transfer. The disadvantage is that it requires special coatings, and application can only be done by specialized companies.

Applying these special heat-conducting coatings to the complete heat-exchanging surface of a coil is difficult and time-consuming. This creates extra challenges with respect to pricing and delivery times compared with other solutions that can often be produced or supplied by heat exchanger manufacturers themselves. Although post-coatings are preferably applied as a preventive measure before installation, they can also be used as a corrective measure if choices made in the design phase turn out to be insufficient.

Selecting the right solution for heat exchanger design and corrosion prevention will affect the costs of maintenance, replacement and energy consumption. Investments made in the design phase will significantly reduce operational costs in the long term. Data centre engineering has a strong focus on electronic equipment that can handle higher temperatures, because increasing the operating temperature can significantly reduce operational costs: every degree counts! With this awareness, it is only a small step to look at the heat exchangers of cooling equipment in the same way.

Every degree counts: protect, monitor and maintain these key elements of the cooling process!

How choices in the design phase affect long-term performance: an example from the field

Aluminium–copper air‐cooled heat exchangers with an indirect adiabatic cooling system might seem a cost-effective way to lower the initial investment, but they will significantly increase corrosion problems. Within 3‐4 years the heat exchanger efficiency is significantly reduced, as the hidden corrosion process is accelerated by water atomization during hot days (design days). With a less efficient heat exchanger, the installation efficiency is negatively affected all year long. Once efficiency drops below a critical point, early replacement of the HX is inevitable, even though the front of the coil might look to be in good condition.


As a retailer committed to “exceeding customer needs”, Asda wanted to go beyond offering affordable goods to their customers and provide a new service that would bring convenience to their daily lives.

The answer was toyou, an innovative end-to-end parcel solution. The service takes advantage of Asda’s extensive logistics and retail network, enabling consumers to return or collect purchases from third-party online retailers across its stores, petrol forecourts and Click & Collect points. This means that a consumer who buys a non-grocery product from another retailer can pick it up or return it at an Asda store rather than wait for home or office delivery.

In order to implement the service, Asda required a far more agile warehouse and supply chain management system. This new system needed to be hosted off-site so that there was no reliance on a single store or team.

“We were launching a new service, in a new field, on a scale we had never undertaken before. We needed a pedigree IT solutions provider that could support the full scale of our full end-to-end implementation, so CenturyLink stood out to us,” explains Paul Anastasiou, Senior Director toyou in Asda.

Despite the scale of the project, Asda could not risk putting extra pressure on their existing legacy IT system – a seamless and secure transition was required. In addition to this, as a brand dedicated to making goods and services more affordable to customers, keeping costs low as the business model expanded was crucial. As such, Asda chose to outsource the administration of the operating system and application licenses to manage costs.


Asda chose a warehouse management software platform from CenturyLink's partner Manhattan Associates. The multi-faceted solution was deployed as a hosted managed solution across two data centres. CenturyLink Managed Hosting administered Asda's operating systems, and Oracle and SQL databases on a full life cycle basis as part of the solution. Asda created its complete development, test certification and production environments for the Manhattan Associates platform on that dedicated infrastructure.

Asda used CenturyLink Dedicated Cloud Compute, which provided Compute and Managed Storage with further capacity to house the data flowing through Asda's business on-demand. The security requirement was accomplished with a dedicated cloud firewall protecting the entire solution.

CenturyLink instituted Disaster Recovery services between the two data centres at the application and database level, as well as managed firewalls to secure the data. CenturyLink also implemented Managed Load Balancing to manage the entire virtual environment and interface to all the linked warehouses.

18 months from concept to implementation

Approximately 3 - 4 months of testing

Asda’s launch of toyou with the support of CenturyLink has greatly boosted the retailer’s customer relationships. By moving most of the retailer’s operations to CenturyLink, Asda has effectively launched a large-scale new venture while maintaining the same level of service and value to customers.

Asda and CenturyLink are continuing to develop the working relationship and discuss what opportunities could be available as toyou grows and develops.

About CenturyLink

CenturyLink is a global communications, hosting, cloud and IT services company enabling millions of customers to transform their businesses and their lives through innovative technology solutions. CenturyLink offers network and data systems management, Big Data analytics and IT consulting.

Founded in 1945, KoçSistem is a member of the Koç Group and a leading, well-established, information technology company in Turkey. The company’s history spans seven decades, during which time KoçSistem has introduced leading edge technologies to the market in order to enhance the competitive edge and productivity of enterprises.

KoçSistem plays a major role in the digital transformation of enterprises, with a portfolio of smart IT solutions spanning major industry trends including the Internet of Things, Big Data, Cloud Computing, Corporate Mobility and Smart Solutions. KoçSistem provides data centre services for some of the biggest enterprises in both the public and private sectors in Turkey, and it is also focused on delivering reliable co-location services.

KoçSistem originally supported its data centre business from two data centres: one in Istanbul and one in Ankara, which served as a disaster recovery site. However, as demand grew, it recognised that it needed to invest in another data centre facility in Istanbul to prepare for, and manage, expansion.

KoçSistem carefully weighed building its own facility against selecting a data centre operator that would provide the bespoke infrastructure it required. Ultimately, it decided to outsource its new data centre requirements in order to maximise service quality. This approach would also let the company focus on the strategic aspects of its business, rather than diverting attention and resources to building and managing the supporting infrastructure.

The operator's ability to provide maximum uptime and resilience for KoçSistem's growing managed services business was key to the selection of a new data centre partner. Location, connectivity, capacity and the technical specification of the new facility were also important.

An initial review of the market revealed that, although approximately 60% of Turkey's data centre operators are located in Istanbul, their size, their capacity for future growth and their adherence to the international standards required for Tier III+ resilience were extremely limited.

Few of the existing data centres had been built to withstand serious seismic activity. This was despite the region being a geographical ‘at risk’ area, and despite the regulatory standards that exist for the construction of critical buildings such as hospitals, government offices, power generation and distribution facilities, and telecommunications centres.

Zenium’s decision to enter the Turkish data centre market in 2014 with the development of a state-of-the-art data centre campus in Istanbul coincided with KoçSistem’s search for a partner.

Can Barış Öztok, Assistant General Manager, Sales & Marketing at KoçSistem, explained: “KoçSistem manages technology demands for clients across all sectors, from financial to retail, so it’s critical to us that all business partners offer exceptional levels of service to the highest standard. We have found without doubt that Zenium meets those expectations.”

“Zenium demonstrated its ability to meet all of our key criteria and must-have features and capabilities,” added Öztok. “The company also shared our belief in the future of the Turkish IT market and supported our main focus: delivering enterprise cloud solutions crucial for digital transformation.”

Solution

KoçSistem was able to provide its customers with IT and cloud services from Istanbul One from the day the new facility launched in September 2015. Its initial requirement was for 500 sq m of data centre space, customised to meet KoçSistem’s day-one power and cooling profile. The space was supported by dedicated mechanical and electrical plant that could be scaled up as KoçSistem's requirements, for example for power density, increased.

“Zenium’s decision to design and construct Istanbul One to earthquake code TEC 2007 and the highest importance factor of 1.5 for ‘buildings to be utilised after an earthquake’ provided us with the peace of mind that the facility would provide the business-critical security and resilience that we required,” continued Öztok.

“Its proactive approach to tackling the potential power outages that can occur in the region, by investing in multiple fuel oil storage tanks as an emergency power supply to the generators and stringent SLA contracts with fuel suppliers, also provided the reassurance that maximum uptime will be achieved regardless of the external issues relating to power supply,” said Öztok.

The combination of Zenium’s experience in data centre design, build and management and KoçSistem’s expertise in co-location and managed services has already paid dividends.

Increasing demand for quality data centre space and high-level data management services meant that KoçSistem filled its initial 500 sq m data hall within 12 months. It decided to extend its agreement with Zenium and take on a second data hall, doubling its capacity to 1,000 sq m in early 2017.

Istanbul One is the first data centre to provide wholesale data centre solutions in Turkey, which is an important consideration for KoçSistem going forward.

“We provide our customers with a complete solution covering all layers of the cloud, from co-location and cloud infrastructure to applications,” Öztok explained. “Our enterprise customers have large co-location requirements, so the potential to increase capacity with Zenium offered us a future-proofed solution. It is scalable, energy efficient and economical from the outset. A win-win for us all.”

Istanbul has long been the link between East and West. Today, consumer demand for mobile and internet services and the need for business-class IT infrastructure, coupled with Istanbul’s status as a regional banking hub, are fuelling demand for high-speed, low-latency connectivity between Turkish and European financial and business centres. Istanbul is now uniquely positioned to provide a digital bridge that will support communications and growth between neighbouring geographies, and it is poised to become the natural regional centre for Internet connectivity.

Located in a well-established Organized Industrial Zone (OIZ), close to the new International Financial Center (IFC) and within easy reach of Istanbul's historic business district, the Zenium campus comprises three self-contained, purpose-designed buildings that deliver 22 MW of IT load to 12,000 sq m of high-specification technical space.

Constructed from a concrete frame with proprietary insulated steel cladding, the buildings are designed to meet the highest level of the earthquake code, allowing immediate use and continued operation following a seismic event. Mains power is supplied via dual diverse HV feeds providing 30 MVA, backed up by seismically rated emergency generators.

As the only carrier-neutral facility in the region, Istanbul One offers tenants diverse connectivity via multiple fibre providers, which enables them to choose the telecommunications operator/ISP that best meets their needs.

As the first global-grade data centre in Turkey with peering capabilities, Istanbul One also supports the enhanced connectivity increasingly required by international organisations.

Shortlist confirmed.

Online voting for the DCS Awards opened recently and votes are coming in thick and fast for this year’s shortlist. Make sure you don’t miss out on the opportunity to express your opinion on the companies that you believe deserve recognition as being the best in their field.

Following assessment and validation by the panel at Angel Business Communications, the shortlist for the 24 categories in this year’s DCS Awards has been put forward for online voting by our readership. The Data Centre Solutions (DCS) Awards reward products, projects and solutions, and honour companies, teams and individuals operating in the data centre arena.

The DCS Awards are delighted to be joined by MPL Technology Group as our Headline Sponsor, together with our other sponsors Eltek, Vertiv, Comtec, Riello UPS, Starline Track Busway and Volta Data Centres, and our event partners Data Centre Alliance and Datacentre.ME.

Phil Maidment, Co-Founder and Owner of MPL Technology Group said: "We are very excited to be Headline Sponsor of the DCS Awards 2017, and have lots of good things planned for this year. We are looking forward to working with such a prestigious media company, and to getting to know some more great people in the industry."

The winners will be announced at a gala ceremony taking place at London’s Grange St Paul’s Hotel on 18 May.

All voting takes place online and voting rules apply. Make sure you place your votes before voting closes on 28 April by visiting: http://www.dcsawards.com/voting.php

The full 2017 shortlist is below:

Data Centre Energy Efficiency Project of the Year

New Design/Build Data Centre Project of the Year

Data Centre Management Project of the Year

Data Centre Consolidation/Upgrade/Refresh Project of the Year

Data Centre Cloud Project of the Year

Data Centre Power Product of the Year

Data Centre PDU Product of the Year

Data Centre Cooling Product of the Year

Data Centre Facilities Management Product of the Year

Data Centre Physical Security & Fire Suppression Product of the Year

Data Centre Cabling Product of the Year

Data Centre Cabinets/Racks Product of the Year

Data Centre ICT Storage Hardware Product of the Year

Data Centre ICT Software Defined Storage Product of the Year

Data Centre ICT Cloud Storage Product of the Year

Data Centre ICT Security Product of the Year

Data Centre ICT Management Product of the Year

Data Centre ICT Networking Product of the Year

Excellence in Service Award

Data Centre Hosting/co-location Supplier of the Year

Data Centre Cloud Vendor of the Year

Data Centre Facilities Innovation of the Year

Data Centre ICT Innovation of the Year

Data Centre Individual of the Year

Voting closes: 28 April

www.dcsawards.com

The successful Managed Services & Hosting Summit series of events is expanding to Amsterdam in April to assess the impact of market trends and compliance on the MSP sector in Europe. Expert speakers from Gartner and from a leading law firm involved in assessing the impact of the EU General Data Protection Regulation (GDPR) will deliver keynote presentations, at a time when evidence is emerging that many MSPs need to “up their game”, particularly in sales and customer retention.

With customers demanding more, Bianca Granetto, Research Director at Gartner, will examine new research into the market dynamics of digital business and digital transformation, and into what customers are really asking for.

Another keynote speaker, Renzo Marchini, author of Cloud Computing: A Practical Introduction to the Legal Issues, is a partner in law firm Fieldfisher's privacy and information law group, which has over 20 years' experience advising clients across a range of sectors, from start-ups to multinationals. He has a particular focus on cloud computing, the “Internet of Things” and big data.

Finally, for those seeking guidance on the high level of merger and acquisition activity in the sector, David Reimanschneider of M&A experts Hampleton will look at where the smart money is going in the MSP business, what the real measures of value and timing are, and when to sell.

The European Managed Services & Hosting Summit 2017, which will be staged in Amsterdam on 25th April 2017, will build on the success of the UK Managed Services & Hosting Summit, now in its seventh year. It will bring together leading hardware and software vendors, hosting providers, telcos, mobile operators and web services providers involved in managed services and hosting with channels including Managed Service Providers (MSPs), resellers, integrators and service providers seeking to develop their managed services portfolios and sales of hosted solutions.

The European Managed Services & Hosting Summit 2017 is a management-level event designed to help channel organisations identify opportunities arising from the increasing demand for managed and hosted services, and to develop and strengthen partnerships aimed at supporting sales. The event has attracted a strong line-up including many of Europe’s leading suppliers to the MSP sector, such as Datto, SolarWinds MSP, Autotask, Kingston Technology, RingCentral, TOPdesk, ASG, Cato Networks and Kaseya.

For further information or to register please visit: www.mshsummit.com/amsterdam

FLASH FORWARD: a one-day end-user conference on flash and SSD storage technologies and their benefits for IT infrastructure design and application performance.

1st June 2017 – Hotel Sofitel Munich Bayerpost

Since the very early days of flash storage, the industry has gathered pace at an increasingly rapid rate, with over 1,000 product introductions, and today one SSD is sold for every three HDD equivalents. According to Trendfocus, over 60 million flash drives shipped in the first half of 2016 alone, compared with just over 100 million in the whole of 2015.

FLASH FORWARD brings together leading independent commentators, experienced end-users and key vendors to examine the current technologies, their uses and, most importantly, their impact on application time-to-market and business competitiveness.

Divided into four areas of focus, the conference will review the technologies and the applications to which they are bringing new life, and will examine who is deploying flash and where the current sweet spots are in your data centre architecture.

The conference will also examine best practices that users can share to gain the most advantage and avoid the pitfalls some may have experienced, and will finally discuss future directions for these storage technologies.

The confirmed keynote speakers and moderators delivering the main conference content are respected analyst Dr. Carlo Velten of Crisp Research AG; Jens Leischner, founder of the end-user organisation sanboard, The Storage Networking User Group; Bertie Hoermannsdorfer of speicherguide.de; and André M. Braun, representing SNIA Europe.

Sponsors include Dell/EMC, Fujitsu, IBM, Pure Systems, Seagate, Tintri, Toshiba and Virtual Instruments, and the event is endorsed by SNIA Europe.