No need to tell everyone that the Internet of Things, artificial intelligence and virtual reality are going to be playing an increasing part in all our lives, whether we like it or not, over the coming years. Less certain is how much of what goes on in the consumer world will infiltrate the business environment. Imagine data centres run by robots (and no jokes that some of them are already!), employees connected to the workplace with VR technology implanted in their brains, and every single movement by humans and machines measured, monitored and analysed to ensure that there’s no wastage, whatever the task.

No one seems to read books any more, but those of you who do, and who might just have read Orwell and Huxley, may well find that what’s going on is disconcertingly close to the story lines of such classics as ‘1984’ and ‘Brave New World’. Neither book ends happily.

However, smart technology is here to stay, like it or not, and there are some amazing benefits to be had. And this issue of DCSUK includes several articles that demonstrate what is now possible. ‘Four smart ways to use your UPS’, ‘Multi-Cloud collaboration’, and ‘Sausage making and digital remote monitoring platforms’ - the titles alone give you a good indication of the way things are going in the data centre. And there’s every reason to celebrate these developments – there seem to be few, if any, down sides, and plenty of potential benefits.

The choice for those folks who own and operate data centres is a relatively simple one: keep things as they are, and have always been, keep IT and facilities apart, and watch your data centre(s) become spectacularly inefficient and expensive to run; or, embrace the technology that’s out there, get IT and facilities folks to work together, and rest easy that optimisation and efficiency are the hallmarks of your data centre(s).

According to a new forecast from the International Data Corporation (IDC) Worldwide Quarterly Cloud IT Infrastructure Tracker, total spending on IT infrastructure products (server, enterprise storage, and Ethernet switches) for deployment in cloud environments will increase by 18.2% in 2017 to reach $44.2 billion. Of this amount, the majority (61.2%) will come from public cloud datacenters, while off-premises private cloud environments will contribute 14.6% of spending. With increasing adoption of private and hybrid cloud strategies within corporate datacenters, spending on IT infrastructure for on-premises private cloud deployments will grow at 16.6%. In comparison, spending on traditional, non-cloud, IT infrastructure will decline by 3.3% in 2017 but will still account for the largest share (57.1%) of end user spending. (Note: All figures above exclude double counting between server and storage.)
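To make these proportions concrete, the short Python sketch below works through the arithmetic implied by the figures quoted above. It is illustrative only: the variable names are ours, and treating the remaining 24.2% of cloud spending as on-premises private cloud is an inference from the two shares quoted, not a figure published by IDC.

```python
# Illustrative arithmetic on the IDC figures quoted above (USD billions).
CLOUD_SPEND_2017 = 44.2   # forecast cloud IT infrastructure spend for 2017
GROWTH_2017 = 0.182       # forecast year-on-year growth

# Shares of cloud spending quoted in the text.
shares = {
    "public cloud": 0.612,
    "off-premises private cloud": 0.146,
}
# Assumption: whatever is left over is on-premises private cloud.
shares["on-premises private cloud (inferred)"] = 1.0 - sum(shares.values())

# Working backwards from 2017 gives the implied 2016 spend.
implied_2016 = CLOUD_SPEND_2017 / (1.0 + GROWTH_2017)

# Dollar value of each segment in 2017.
breakdown = {segment: CLOUD_SPEND_2017 * share for segment, share in shares.items()}

print(f"Implied 2016 cloud spend: ${implied_2016:.1f}bn")
for segment, value in breakdown.items():
    print(f"{segment}: ${value:.1f}bn")
```

On these assumptions, public cloud accounts for roughly $27 billion of the 2017 total.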

In 2017, spending on IT infrastructure for off-premises cloud deployments will experience double-digit growth across all regions in a continued strong movement toward utilization of off-premises IT resources around the world. However, the majority of 2017 end user spending (57.9%) will still be done on on-premises IT infrastructure which combines on-premises private cloud and on-premises traditional IT. In on-premises settings, all regions expect to see sustained movement toward private cloud deployments with the share of traditional, non-cloud, IT shrinking across all regions.

Ethernet switches will be the fastest-growing segment of cloud IT infrastructure spending, increasing 23.9% in 2017, while spending on servers and enterprise storage will grow 13.6% and 23.7%, respectively. In all three technology segments, spending on private cloud deployments will grow faster than public cloud, while investment in non-cloud infrastructure will decline.

Long term, IDC expects that spending on off-premises cloud IT infrastructure will experience a five-year compound annual growth rate (CAGR) of 14.2%, reaching $48.1 billion in 2020. Public cloud datacenters will account for 80.8% of this amount. Combined with on-premises private cloud, overall spending on cloud IT infrastructure will grow at a 13.9% CAGR and will surpass spending on non-cloud IT infrastructure by 2020. Spending on on-premises private cloud IT infrastructure will grow at a 12.9% CAGR, while spending on non-cloud IT (on-premises and off-premises combined) will decline at a CAGR of 1.9% during the same period.
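For readers who want to check how a compound annual growth rate relates to the start and end figures, here is a minimal Python sketch. The function names are ours, and we assume the five-year period runs from 2015 to 2020; IDC does not publish the base-year figure in the text above, so the starting value shown is only what the quoted numbers imply.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def implied_start(end_value: float, rate: float, years: int) -> float:
    """Starting value implied by an end value and a CAGR."""
    return end_value / (1.0 + rate) ** years


OFF_PREM_2020 = 48.1      # USD billions, quoted above
OFF_PREM_CAGR = 0.142     # five-year CAGR, quoted above
YEARS = 5                 # assumption: the period is 2015 to 2020
PUBLIC_SHARE_2020 = 0.808 # public cloud share of the 2020 figure

base = implied_start(OFF_PREM_2020, OFF_PREM_CAGR, YEARS)
public_cloud_2020 = OFF_PREM_2020 * PUBLIC_SHARE_2020

print(f"Implied base-year spend: ${base:.1f}bn")
print(f"Round-trip CAGR: {cagr(base, OFF_PREM_2020, YEARS):.1%}")
print(f"Public cloud in 2020: ${public_cloud_2020:.1f}bn")
```

The same two functions can be applied to the other growth rates quoted, provided a start or end value is known.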

"In the coming quarters, growth in spending on cloud IT infrastructure will be driven by investments done by new hyperscale datacenters opening across the globe and increasing activity of tier-two and regional service providers," said Natalya Yezhkova, research director, Storage. "Another significant boost to overall spending on cloud IT infrastructure will be coming from on-premises private cloud deployments as end users continue gaining knowledge and experience in setting up and managing cloud IT within their own datacenters."

More than 40 percent of data science tasks will be automated by 2020, resulting in increased productivity and broader usage of data and analytics by citizen data scientists, according to Gartner, Inc.

Gartner defines a citizen data scientist as a person who creates or generates models that use advanced diagnostic analytics or predictive and prescriptive capabilities, but whose primary job function is outside the field of statistics and analytics.

According to Gartner, citizen data scientists can bridge the gap between mainstream self-service analytics by business users and the advanced analytics techniques of data scientists. They are now able to perform sophisticated analysis that would previously have required more expertise, enabling them to deliver advanced analytics without having the skills that characterize data scientists.

With data science continuing to emerge as a powerful differentiator across industries, almost every data and analytics software platform vendor is now focused on making simplification a top goal through the automation of various tasks, such as data integration and model building.

"Making data science products easier for citizen data scientists to use will increase vendors' reach across the enterprise as well as help overcome the skills gap," said Alexander Linden, research vice president at Gartner. "The key to simplicity is the automation of tasks that are repetitive, manual intensive and don't require deep data science expertise."

Mr. Linden said the increase in automation will also lead to significant productivity improvements for data scientists. Fewer data scientists will be needed to do the same amount of work, but every advanced data science project will still require at least one or two data scientists.

Gartner also predicts that citizen data scientists will surpass data scientists in the amount of advanced analysis produced by 2019. A vast amount of analysis produced by citizen data scientists will feed and impact the business, creating a more pervasive analytics-driven environment, while at the same time supporting the data scientists who can shift their focus onto more complex analysis.

"Most organizations don't have enough data scientists consistently available throughout the business, but they do have plenty of skilled information analysts that could become citizen data scientists," said Joao Tapadinhas, research director at Gartner. "Equipped with the proper tools, they can perform intricate diagnostic analysis and create models that leverage predictive or prescriptive analytics. This enables them to go beyond the analytics reach of regular business users into analytics processes with greater depth and breadth."

According to Gartner, the result will be access to more data sources, including more complex data types; a broader and more sophisticated range of analytics capabilities; and the empowering of a large audience of analysts throughout the organization, with a simplified form of data science.

"Access to data science is currently uneven, due to lack of resources and complexity; not all organizations will be able to leverage it," said Mr. Tapadinhas. "For some organizations, citizen data science will therefore be a simpler and quicker solution: their best path to advanced analytics."

Smaller data centre providers should follow in the footsteps of the large hyperscalers in adopting renewable energy sources to power the data centre rather than relying solely on unsustainable fossil fuel energies.

Roel Castelein, Customer Services Director of The Green Grid, argues that more innovative approaches to renewable energy will be useful towards reducing carbon emissions across the data centre industry. Roel said: “While fossil fuels have traditionally been an effective resource in powering the data centre, it is essentially a finite resource, while also being a significant contributor towards rising carbon emissions experienced in the industry. According to a white paper from Digital Realty, by 2020, data centres are projected to consume electricity equivalent to the output from 50 large coal-fired power plants - and that’s just in the US alone.

“This goes to show the amount of power required to support data centres, but with limited supplies, alternative power through renewable energy will clearly be the most sustainable solution. Data centre operators have already placed large emphasis on using natural resources to cool IT infrastructure such as free air cooling, and this same mindset should exist when considering how the data centre should be powered.”

“The government could additionally serve as a support arm for facilitating the adoption of renewable energy by bringing together energy suppliers with data centre operators. Combining this with fiscal incentives can help guarantee that organisations incorporate some form of renewable energy and in the process push for long-term contracts with energy suppliers. This will help create a portfolio of partners and help lead to more progressive goals towards a sustainable and green data centre industry”, Roel concluded.

What does it take to get investment in data centre infrastructure?

Asks Tony Lock, Director of Engagement and Distinguished Analyst, Freeform Dynamics Ltd, February.

Every few years, core IT infrastructure issues surge into visibility on the corporate radar. Often, they are then energetically discussed for a while, before drifting away into the background as more immediate challenges grab the organisation’s attention. The green data centre and power consumption is a good example of this. It gathers attention, gets talked about in the press, and then fades into the background as it is too easily taken for granted.

But a recent survey by Freeform Dynamics highlights that while the green data centre isn’t a revolution gathering steam, power efficiency from a purely economic point of view is gathering pace – and environmental concerns are becoming a very visible challenge. Perhaps for the first time, green IT is not just riding on the coat-tails of economic savings.

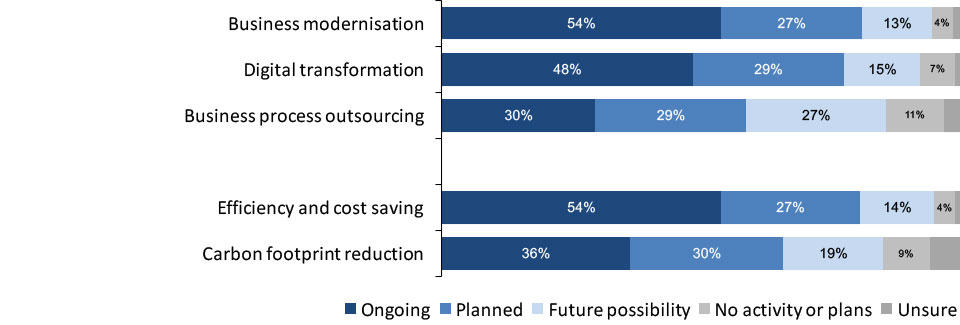

The survey asked what initiatives were actively driving change in mid-size to large UK-based organisations. The current fashion favourites, business modernisation and digital transformation, are clearly either active or planned in around three out of four organisations. Business process outsourcing is also still attracting attention (Figure 1).

Such responses are hardly unexpected, and the same could be said for the very large numbers who report keen interest in ‘efficiency and cost savings’, those perennial favourites of executive boards ever since businesses started being audited and subject to investor pressures. What is surprising is the fact that carbon footprint reduction initiatives are underway in over a third of organisations, with nearly as many more planning something. Fewer than one in ten stated they have no carbon-reduction activities or plans, a much smaller number than in the recent past.

Unlike in previous years, I suspect that most of these positive reports are not just politically correct answers being given to toe the corporate line. Indeed, it is clear there is a linkage between cost saving, energy efficiency and carbon footprint reduction: together, these are key pressures forcing data centre change. Data centres have taken significant steps in recent years to limit the power they consume. X86 server virtualisation allowed IT services to be delivered using fewer kilowatts, and now storage systems are undergoing similar consolidations, initially to optimise resource usage but with an impact on electricity consumption too.

Some organisations have also looked beyond basic server and storage systems into how the data centre itself is architected and operated. Popular techniques include free air cooling, hot and cold aisles, and running systems at higher operating temperatures. Such projects can require significant capital investment and considerable time spans to show returns, but they are happening.

And these optimisations are needed, not just to ensure that operational running costs are optimised, but because most data centres are now routinely challenged to run more and more workloads. IT is doing more for the same power bill. Not only is that good for the IT and business budget, it can be especially important where power supplies may be limited, a matter of no small concern in many locations.

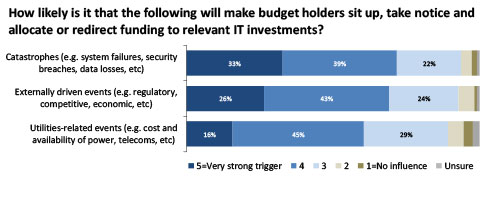

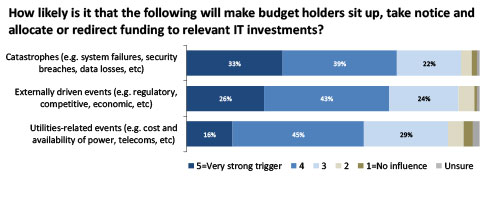

Alas, while these pressures are obvious and the benefits tangible, it can still take something going badly wrong to turn initiatives and ideas into budget funding (Figure 2).

The fact that severe service interruption is still such a strong trigger for change is worrying, as the survey also shows a clear correlation between organisations that are high achievers, and data centre improvement initiatives around carbon footprint and power consumption.

The survey asked respondents how well they were delivering against key indicators on an IT performance scorecard. This identified a group of high achievers the survey report labelled ‘Top IT Performers’, and such organisations are far more likely to have programmes in place to improve energy efficiency and reduce their carbon footprints (Figure 3).

While the report does not claim a causal relationship between good IT performance and that ‘green initiatives’ basket of better energy efficiency, carbon footprint and other environmental considerations, there is an obvious correlation between them. More importantly still, Freeform Dynamics has an abundance of other research that shows similar onward correlations between good IT performance and business success.

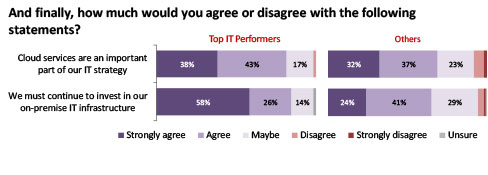

Some evangelists claim ‘Cloud’ to be the solution to all IT challenges, including power consumption. But it should be recognised that while cloud solutions are good options for some workloads, they are not the best for everything. Considerable research by Freeform Dynamics and many other organisations, as well as experience of the real world, shows that the majority of organisations plan to combine IT running in their data centres with solutions provided from external cloud suppliers (Figure 4).

A decade ago there were evangelical cries that data centres must become more green, but economics put a brake on many idealistic schemes to improve data centres’ carbon footprints. However, today that same economics, combined with advances in technology, more sophisticated energy management systems and new ways of designing and operating data centres, makes greener IT almost an inevitability, especially as most data centres are not going to be replaced by the cloud anytime soon.

But data centres are becoming greener, almost of their own accord. As one data centre infrastructure manager told me: “Most of the pressure for energy efficiency and transparency comes from investors rather than regulators. It’s all about being able to show your social responsibility credentials.” Perhaps for the first time, the need to demonstrate social responsibility is adding thrust to the drive to make data centres more power-efficient and to reduce carbon footprints.

As a company that’s made a number of acquisitions itself, Alternative is well experienced in how to amalgamate IT functions post acquisition. After all, we’ve acquired six IT and telco businesses in the past 10 years, so we know the complexity involved in the unification of existing structures at many different levels.

By Marion Stewart, Director of Operations, Alternative Networks.

And if you have a core proposition that positions your own IT operations function as “an extension of your customers’ IT team”, as Alternative’s does, then managing such amalgamations can add further layers of complication. Even if you don’t position your IT as an adjunct of the clients’, getting your tech in order pre-merger is still something you need to do well; otherwise you run the risk of disrupting, and possibly damaging, the benefits of the acquisition in the first place.

There are a number of facets to integration that clearly need discussing, but for the purposes of this blog I think we should focus on the area that consumes the most time and resources in M&A: tooling.

Clearly change is a significant factor of any M&A, but we have determined that we could reduce the impact of change on organisational teams by having the right technology already in place to collaborate effectively when we either made acquisitions, or indeed became an acquisition target ourselves. We now dine out on the story of how we have “industrialised our operations” and how we are able to help our customers do the same.

Following any merger, there clearly are lots of unknowns. People and skills are more often than not stuck in silos and, quite surprisingly yet commonly, do not overlap. Communication methods are complex, usually with multiple and disparate domains, email addresses, file stores, etc., whilst processes are disjointed and unconnected, so much so that finding harmony, efficiencies and service improvements adds to overall complexity.

Our recommendation and approach to resolving this, based on our own experience, is to have a clear and uncompromising view of the end game, in terms of service architecture, technology and tooling. This end game needs to be mandatory, simple and everyone needs to be bought in.

Over the past year, we have focussed our efforts on rationalising operational tooling into a single ServiceNow instance and a single Salesforce instance, with some more specialist tools and bespoke IP around the outside. It was important though that the overall tooling and service architecture could always be drawn on one side of A4; and equally important that everyone could draw it. Internally we called it the “Magic Doughnut”.

Armed with the doughnut, we set about going from eight different ERP platforms, four CRM, numerous spreadsheets and fag packets, to a clear consolidated approach. Satisfyingly, because we’d clearly set out the end game, we found that all stakeholders proactively considered the possible ramifications of the new tooling and process on their own teams, rather than expecting someone to have done it for them.

Generally speaking, the integrations went smoothly because we did not waver from this end game vision. Of course people had to compromise in places, but provided a compromise did not adversely affect our services to our clients, we were happy to make it. The end result is that we have a simple, structured and universally understood operational structure, which runs from a centralised and universally visible tool. We have layered our ITIL processes over this ServiceNow instance, integrated ServiceNow with other systems to provide end-to-end workflow and bespoked some interfaces to maintain our services to our users.

We termed this as “industrialising” our operations, because we felt the legacy platforms could have been better, but by moving to a single industrial strength structure, we could now drive improved customer services and efficiency through consistent nomenclature and clarity of understanding. We now run tickets according to best practice, manage configurations according to best practice, provision new services according to best practice, and integrate our cloud platforms according to best practice.

And now that this is in place, future acquisitions or mergers, or future product innovations, can be delivered from the same service architecture with minimum rework. Critically though, a smoothly integrated, uncomplicated, consolidated approach not only enhances the ease and efficiency of service and boosts customer satisfaction, but also resonates positively with your workforce. Make no mistake: during any merger or acquisition, the workforce is the most valuable asset, and also the most sensitive.

The Cloud Industry Forum (CIF) is a not-for-profit company limited by guarantee, and an industry body that champions and advocates the adoption and use of Cloud-based services by businesses and individuals.

CIF aims to educate, inform and represent Cloud Service Users, Cloud Service Providers, Cloud Infrastructure Providers, Cloud Resellers, Cloud System Integrators and international Cloud Standards organisations.

Each issue of DCS Europe includes an article written by a CIF member company.

By Steve Hone CEO and Cofounder, DCA Trade Association

It’s all change at the DCA for the start of 2017. There are now two new staff at the DCA to help and support members. Kieran Howse joined the team at the start of the New Year as Member Engagement Executive replacing Kelly Edmond; he is already up to speed and here to support you moving forward.

I am also pleased to announce that Amanda McFarlane has joined the team as Marketing Executive. Amanda has extensive marketing experience in the IT sector. This new role has been created to ensure information continues to be effectively disseminated to our members’ target audiences, be that government, end users, the general public or the supply chain. Amanda has already taken over responsibility for the delivery of the new DCA website and members’ portal, which is due to come on stream very soon.

Many of you will have had the pleasure of both working and spending time with Kelly Edmond over the past three years and I know you will join me in wishing her all the best for the future and extending a warm welcome to our new staff.

This month’s theme is ‘Industry Trends and Innovations’. I would like to thank all those who have taken time to contribute to this month’s edition allowing us to start the year with a good selection of articles.

During a recent visit to Amsterdam to attend the EU Annual COC meeting and the 5th EU Commission Funded EURECA event, I had the pleasure of getting a lift in the new Tesla X. Not only does it run on pure battery power and do 0-60 in 2.6 seconds (quicker than a Ferrari), it literally drove itself. When you think about all the innovative technology that must go into this car, and the computer power needed to ensure it remains both reliable and safe, it’s quite mind-blowing!

If this is a barometer of the sort of trends and innovations we can expect to see within the data centre sector, then we certainly have exciting times ahead. The DCA plans to dedicate a section of the new website to showcasing our members’ ground-breaking ideas as they emerge. Please contact Kieran or me if you wish to spread the word about something new; we’d be very keen to hear your news. The theme for the March edition is ‘Service Availability and Resilience’, and the deadline for copy is the 23rd February. This will be closely followed by the April edition, which this year offers members the opportunity to submit customer case studies; the copy deadline is the 21st March.

Looking forward to a productive year, thank you again for all your article contributions and support.

T: +44(0)845 873 4587

E: info@datacentrealliance.org

Amanda McFarlane

Marketing Executive

Kieran Howse

Member Engagement Executive

By Franek Sodzawiczny, CEO & Founder, Zenium

The benefit of hindsight is that it provides clarity and perspective. It is now clear that, mergers, acquisitions and ground-breaking technologies aside, there was an obvious shift in thinking last year towards the data centre being regarded as a utility. Going forward, that most likely means there is going to be a much greater emphasis than ever before on service excellence in the data centre in 2017.

The key driver behind this is that data centres are becoming increasingly core to business operation and success, and are no longer considered to be ‘simply running behind the scenes’, ensuring backup and storage is managed effectively. As information continues to be the lifeblood of global businesses, the mission-critical data centre is now deemed to be an asset that needs to be protected at all times.

With data centres playing an increasingly important role in providing the essential infrastructure required to support ‘always-on’, content-rich, connectivity-hungry digital international businesses, we must perhaps now expect that the way in which they are procured will also change.

What is shifting first and foremost is that the major purchasers of data centre space are now more likely to be hyper-scale companies such as Amazon, Google and Alibaba, than large corporates. The move to the Infrastructure-as-a-Service (IaaS) and Software-as-a-Service (SaaS) model, or any other such cloud services for that matter, will only continue to grow. The hyper-scale businesses know what they want and exactly when they need it. Their expertise in providing a hosted service to their customers means that they are 100 percent focused on efficiency, pricing, flexibility and reliability of service from the data centre operators that they ultimately select as business partners. They set the business agenda and the data operator is expected to meet it.

As a result, the requirement for consultancy and ‘old fashioned outsourcing’ from data centre operators is being replaced by a smart new approach, driven by an overall preference for finding a different way of working that focuses on taking a far more collaborative approach.

Data centre operators will without doubt continue to be selected based on their ability to build a facility that is sophisticated, but added to future demands and expectations will be the underlying ability to form a trustworthy partnership with their clients. The focus will be on showing a detailed understanding of the specifications provided by the client’s highly knowledgeable and experienced engineering and IT team, whilst delivering an agile yet cost-effective implementation and maintenance model in the longer term. As a service industry, data centre operators will therefore need to expand their levels of knowledge to identify further efficiencies and continued cost savings. It will also require a responsive ‘can do’ attitude, complemented by advanced problem-solving skills and a heightened awareness of what guaranteeing a 24x7 operation actually means.

The impact of globalisation also suggests that data centre operators should be prepared to be asked to support client expansion into new territories, practically on demand, by harnessing their own experience of what it takes to enter new markets successfully, and then build quickly.

Extensive advanced planning, combined with the ability to deliver the same infrastructure in more than one country - against increasingly short deadlines - in order to support ambitious go to market strategies will become critical requirements in the RFP.

Looking ahead, we fully expect data centre operators to have to work much harder to meet the needs of fewer but larger customers and our flexible business model means we are ready. For those intending to deliver a new kind of data centre service in 2017, agility and adaptability will be what keeps you ahead of the game. Don’t under-estimate the importance of this.

Why innovation should not just be about the ‘tech’

By Dr Theresa Simpkin, Head of Department, Leadership and Management, Course Leader Masters in Data Centre Leadership and Management, Anglia Ruskin University

When Time magazine named the personal computer the “Machine of the Year” in 1982, few would have accurately predicted the rampant technological advances that now stitch together the fabric of our economies and underpin our personal interactions with the world.

The introduction of the internet and all that has come with it, the explosion of big data, and innovations in artificial intelligence and machine learning have brought with them profound change in an extraordinarily brief snapshot of time.

The tools available to us to be better at what we do (be it related to an IoT connected golf club that improves one’s swing or making better medical diagnoses based on advice from AI applications) have evolved exponentially. However, the way in which we work is still largely based on post war habit and leadership capabilities that have changed little in the last half century.

It is clear that despite sensational advances in technology and the applications to which they have been put, we are yet to apply similar attitudes to technical innovation and change to the human and organisational structures that form the spines of our businesses. It is ironic that the Data Centre sector is as much a victim of outmoded business thinking as many other traditional sectors.

There is a raft of evidence to suggest that the sector is facing some big ticket issues; ones so large that individually they put business objectives at risk but together they form a perfect storm that dampens growth and may limit carriage of strategic objectives such as profitability and growth in market share.

One of the most pressing is the discussion regarding the capabilities inherent in the human aspects of this ‘second machine age’; the rampant advancement of technology bringing with it seismic shifts in the way we work, the skills we develop and the capabilities we need to take organisations and economies into a space where there is optimal capacity for efficiency, profitability and public good. For example, there is a raft of research from industry associations, vendors and business analysts that identifies skills shortages as a pressing and immediate risk to business and the sector as a whole.

Shortages in occupations such as engineering, IT and facilities management are leading to a rise in salaries as the ‘unicorns’ (those with a valuable suite of capabilities) are poached from one organisation to another, driving up wages and generating a domino effect of constant recruitment and selection activity. So too, the upsurge of contingent labour (contractors and short-term hires) to back-fill vacancies gives rise to an enlarged risk profile for the DC organisation. The lack of a pipeline of diverse entrants to the sector diminishes the capacity for a varied landscape of thought and for the critical analysis that leads to innovation and creative application of technical and knowledge assets. Couple this with a generally ageing workforce and the picture for organisations that are chronically ‘unready’ is grim.

These few examples of ‘big ticket’ and often intractable problems exist in a business environment of shifting business models, a sector that is swiftly consolidating and that is enhancing operations in emerging markets. Place all this against a backdrop of more savvy but possibly shrinking operational budgets and a highly competitive landscape it becomes obvious that the business responses of yesterday are little match for the sectoral challenges of today, let alone tomorrow.

When we think of research and development, innovation and advancement we tend to think about the kit; the fancy new widget that will help us make better decisions, build better tools or store and utilise data more securely and efficiently. We rarely think of the innovations in the way we lead, manage, educate and engage our people within the organisations charged with building the next big thing.

Research currently being undertaken by Anglia Ruskin University suggests that, like many other sectors, the DC sector is hampered by traditional business thinking. Inflexible organisational silos are obstructing innovation in the ways companies are organised and traditional ‘back end’ structures provide incompatible support to a ‘front end’ that is dynamic and geared for change and challenge.

For example, the ways in which people are recruited, trained, retained and managed are still largely influenced by, and designed around, the business practices of the middle of the last century. The attitudes and practices of recruitment and selection, for example, are still largely predicated on the workforce structures of that time too; but that time has passed and the workforce itself is remarkably different in structure, behaviour and expectation.

The Data Centre sector, of course, lies at the confluence of the ongoing digital revolution. The ‘second machine age’ demands a new managerial paradigm.

The intersection of rapid and discontinuous technological advances and latent demand for new business approaches is quite clearly the space where the Data Centre sector resides.

It is self-evident then that innovation should not just be thought of in terms of the tech or the gadgets. As the digital revolution marches on unconstrained, so should we see an organisational revolution of leadership capability, managerial expertise and business structure if the ‘perfect storm’ of sectoral challenges is to be diminished and overcome.

By Prof Ian F Bitterlin, CEng FIET, Consulting Engineer & Visiting Professor, University of Leeds

Whilst the principles of thermal management in the data centre (cold-aisle/hot-aisle layout, blanking plates, hole stopping and ‘tight’ aisle containment) are not exactly ‘new’, it is still surprising to see how many facilities don’t go the whole way and apply those principles. Many of those that do seem to be running into problems when they try to adopt a suitable control system for the supply of cooling air.

The principles are simple; if you separate the cold air supply from the hot air exhaust you can maximise the return air temperature and increase the capacity and the efficiency of the cooling plant. This is especially effective if you are located where the external air temperature is cool for much of the year and you can take advantage of that by installing free-cooling coils that cool the return air without a mechanical refrigeration cycle. In this context, the term ‘free-cooling’ simply translates into ‘compressor free’ – you still must move air (or water) by fans (or pumps) so it is certainly not ‘free’ but it does reduce the cooling energy. The hotter you make your cold-aisle and the colder it is outside (or you use adiabatic or evaporative technology to take advantage of the wet-bulb, rather than dry-bulb) the more free-cooling hours you will achieve per year.
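The link between cold-aisle temperature and free-cooling hours can be illustrated with a minimal sketch. The hourly temperatures, the 5 °C approach between outdoor air and supply air, and the function name are all illustrative assumptions, not figures from a real site or cooling plant.

```python
from typing import Iterable


def free_cooling_hours(outdoor_temps_c: Iterable[float],
                       cold_aisle_setpoint_c: float,
                       approach_c: float = 5.0) -> int:
    """Count the hours in which outdoor air is cool enough to run compressor-free.

    Assumption: the plant can hold the cold-aisle setpoint whenever the outdoor
    temperature is at least `approach_c` below it. Real plant behaviour depends
    on coil design, humidity and whether adiabatic assistance is fitted.
    """
    threshold_c = cold_aisle_setpoint_c - approach_c
    return sum(1 for temp in outdoor_temps_c if temp <= threshold_c)


# Hypothetical hourly outdoor temperatures for a single day (not measured data).
sample_day_c = [8, 7, 7, 6, 6, 7, 9, 12, 15, 18, 20, 22,
                24, 25, 25, 24, 22, 20, 17, 15, 13, 11, 10, 9]

hours_at_22 = free_cooling_hours(sample_day_c, cold_aisle_setpoint_c=22.0)
hours_at_27 = free_cooling_hours(sample_day_c, cold_aisle_setpoint_c=27.0)
print(hours_at_22, hours_at_27)
```

With this made-up day, raising the cold-aisle setpoint from 22 °C to 27 °C increases the count of compressor-free hours, which is the effect described above.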

Clearly this doesn’t work very effectively when the outside ambient is hot and humid, but for most of Europe from mid-France northwards 100% compressor-free operation is perfectly possible if the cold-aisle is taken up into the ASHRAE ‘Allowable’ region for temperature. Of course, some users/operators don’t want to take such risks (real or perceived) with their ICT hardware and so limit the energy saving opportunities, and some will still fit compressor-based cooling to be in ‘standby’.

For some users, the consumption of water in data centre cooling systems, for adiabatic sprays or evaporative pads, is an issue, but it is simple to show that as water consumption increases your energy bill goes down and the cost of water (in most locations in northern Europe) does not materially eat into those energy savings. In fact, if your utility is based on a thermal generation cycle (such as coal, gas, municipal waste or nuclear) then the overall water consumption (utility plus data centre) goes down, as thermal power stations use more water than an adiabatic system per kWh of ICT load. As a rule of thumb, you can calculate a full adiabatic system in the UK climate as consuming 1,000 m³ per MW of cooling load per year, equivalent to about 10 average UK homes.

So, what’s the problem?

To see what the problem with tight air management (say <5% leakage between cold and hot aisle, instead of 50%) is, we should look at the load that needs cooling, e.g. a server. The mechanical designer positions the cooling fans and all the heat-generating components.

The space is small and confined so the model can be highly accurate using CFD as a design tool. He places the 20-30 temperature sensors that are installed in the average server at the key points and someone writes an algorithm that takes all the data and calculates the fan speed to keep the hottest component below its operational limit.
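A minimal sketch of the kind of fan-speed algorithm described above, assuming a simple proportional ramp driven by the component with the least thermal headroom. Real server firmware is vendor-specific and considerably more elaborate; the limits, ramp band and speed range used here are hypothetical.

```python
def fan_speed_percent(sensor_temps_c, limits_c,
                      min_speed=20.0, max_speed=100.0, ramp_band_c=20.0):
    """Return a fan speed (%) that protects the hottest component.

    sensor_temps_c: current reading of each sensor, in deg C
    limits_c:       operational limit of the component at each sensor, in deg C
    ramp_band_c:    headroom (deg C) over which the fan ramps from min to max
                    (an assumed tuning value, not a published figure)
    """
    if len(sensor_temps_c) != len(limits_c) or not sensor_temps_c:
        raise ValueError("need one limit per sensor and at least one sensor")

    # The component closest to its limit dictates the fan speed.
    headroom_c = min(limit - temp for temp, limit in zip(sensor_temps_c, limits_c))

    if headroom_c >= ramp_band_c:
        return min_speed          # plenty of margin: idle speed
    if headroom_c <= 0:
        return max_speed          # at or over the limit: full speed
    fraction = 1.0 - headroom_c / ramp_band_c
    return min_speed + fraction * (max_speed - min_speed)


# Hypothetical readings from two sensors with an 85 deg C limit each.
idle_speed = fan_speed_percent([40.0, 50.0], [85.0, 85.0])
busy_speed = fan_speed_percent([75.0, 50.0], [85.0, 85.0])
print(idle_speed, busy_speed)
```

The point of the sketch is that only the hottest component matters: one sensor near its limit ramps the fan regardless of how cool the others are.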

However, we should note the simple model environment – one source of input air at zero pressure differential to the exhaust air and one small physical space with a route for the cooling air. So, the fan speed varies with IT load as it causes the components to heat up. Then we can look at the cabinet level:

And what to load each server with? Any model is now incapable of representing reality: if the user enables the thermal management firmware on their hardware (admittedly not as common as it should be), each load’s fan speed is controlled by the IT load (or, more specifically, by the hottest component), so each server will be ramping its fans up and down on a continuous basis as the work flows in and out of the facility.

In a large enterprise facility, the variations of air-flow are totally random. The fan speed variation with ICT load is unique to each server make/model, with idle power draw ranging from (as good as) 23% of full load to (as bad as) nearly 80%.

This leads us to consider ‘how?’ to control the air delivery: Often cooling systems are designed to the set-point feed temperature or exhaust temperature or to operate with a slight pressure differential between cold and hot side – although this is most undesirable as the ICT hardware dictates the air-flow demand and its on-board fans are rated for zero pressure differential between inlet and exhaust. That results in air being ‘pushed’ through the hardware as the server fans slow down. Don’t forget that the cooling air will find the shortest path, not the most effective, and the clear majority of excess bypass air is through the load.

However, for air-cooled servers, the whole concept of a small set of very large supply fans (operating together) feeding a very large set of very small fans (servers all operating independently) with no control between them is a mechanical engineering problem. If we add to that a set of very large fans acting to remove/scavenge the exhaust air we have three fans in series and, without a degree of bypass-air, it becomes a mechanical engineering nightmare.

Then we might as well take the opportunity to look at the generally held view that server fans ramp up at 27°C and any temperature higher than that only serves to cancel out any energy saving in the cooling system. The principle is true but the knee-point is nearer 32°C. The main reason for the ‘Recommended’ higher limit of 27°C in the ASHRAE Thermal Guidelines is to limit the fan noise from multiple deployments to avoid H&S issues needing the facility to be classified as a noise hazard area.

We can now see that, somehow, the cooling system must:

Whatever you do, don’t pressurise the cold-aisle, as there will be risks of internal hot and cold spots inside your ICT hardware with unknown consequences.

By Garry Connolly, Founder and President, Host in Ireland

The European data hosting industry is on an upswing as the region continues to attract impressive infrastructure investments from a number of content and technology behemoths, including Google, Apple, Microsoft and Facebook. This rapid growth is not only felt at the top, however, as a host of small and medium-sized enterprises (SMEs) are also recognising and capitalising on the growth of colocation opportunities and increased availability of transatlantic bandwidth capacity due to additional subsea cable deployments.

Against this backdrop, a major sea change looms over the EU data hosting community that will fundamentally impact all industry stakeholders, including service providers and data centres, as well as hyperscales and SMEs alike. Following four long years of debate and preparation, the EU General Data Protection Regulation (GDPR) was approved by the EU Parliament last spring and will go into effect on May 25, 2018.

The GDPR is making waves throughout the European data industry, as it is set to replace the Data Protection Directive 95/46/EC, a regulation adopted in 1995. Since that year, there hasn’t been any major change to the regulatory environment surrounding data storage and consumption, making this the most significant event in data privacy regulation in more than two decades. Unfortunately, this major shift leaves many IT professionals and organisational heads in a state of confusion as they attempt to understand and comply with coming policies.

According to a report by Netskope, out of 500 businesses surveyed, only one in five IT professionals in medium and large businesses felt that they would comply with upcoming regulations, including the GDPR. In fact, 21 percent of respondents mistakenly assumed their cloud providers would handle all compliance obligations on their behalf.

It’s clear that there are many questions surrounding the coming changes to the regulatory landscape, among them what the impact of Brexit will be and how this new regulation will affect UK-based organisations.

Following the Brexit decision, the UK government stated its intention to give notice to leave the EU by the end of March 2017. Under the Treaty on European Union, after giving notice there will be an intense period of preparation and negotiation between the UK and EU with respect to the terms of withdrawal. Once these terms are agreed upon or two years have passed since the original notice, the UK will be officially separated from the European Union. That means that there is a possibility that the UK will remain a part of the EU until March 2019 – roughly a full year after the GDPR takes effect.

This leaves much confusion for British organisations wishing to remain compliant with their government’s data privacy regulations. Given the fact that the UK’s membership in the EU is likely to linger into 2019, UK-based organisations must take appropriate measures to comply with the GDPR between May 2018 and the official departure date from the EU, at which point they are to conform to their country’s individual legislation which may or may not differ.

Some of the more formidable obligations of the GDPR include increased territorial scope, stricter rules for data processing consent, and heavy penalties for non-compliance. The GDPR also puts major emphasis on the privacy and protective rights of data subjects, whether individuals or corporate entities. These new rules will not only apply to organisations residing in the EU, but also to organisations responsible for the data processing of any EU resident regardless of the company’s physical location. Fines for non-compliance are severe, as any data controller in breach of new GDPR policies will receive fines of up to four percent of total annual global turnover or €20 million, whichever is deemed larger.
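The fine ceiling described above is a simple "greater of two amounts" rule, sketched below. The function name and the example turnover figures are hypothetical, and the sketch covers only the headline maximum quoted in this article; it is not legal advice.

```python
FIXED_CAP_EUR = 20_000_000      # the 20 million euro figure quoted above
TURNOVER_SHARE_PERCENT = 4      # the four percent figure quoted above


def max_gdpr_fine_eur(annual_global_turnover_eur: float) -> float:
    """Upper bound of the fine: the greater of 4% of turnover or 20 million euros."""
    if annual_global_turnover_eur < 0:
        raise ValueError("turnover cannot be negative")
    turnover_based = annual_global_turnover_eur * TURNOVER_SHARE_PERCENT / 100
    return max(turnover_based, FIXED_CAP_EUR)


# Hypothetical companies: one with 1 billion euros turnover, one with 100 million.
large_company_cap = max_gdpr_fine_eur(1_000_000_000)
small_company_cap = max_gdpr_fine_eur(100_000_000)
print(large_company_cap, small_company_cap)
```

For the smaller hypothetical company, 4% of turnover is only €4 million, so the €20 million figure is the one that applies as the ceiling.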

May 2018 may seem a long way off; however, it’s important to remain current and educated on the GDPR to ensure your company is prepared for what’s ahead. For data centres, arguably the community that will be most significantly affected by this law, the Data Centre Alliance will keep readers abreast of developments regarding this critical issue in accordance with its mission to promote awareness of industry best practices.

By John Taylor, Vigilent Managing Director, EMEA

In a perfect world, data centres operate exactly as designed. Cold air produced by cooling systems will be delivered directly to the air inlets of IT Load with the correct volume and temperature to meet the SLA.

In reality, neither of these scenarios occurs. The disconnect is airflow: invisible, dynamic and often counterintuitive, it is highly susceptible to even minor changes in facility infrastructure.

Properly managed, correct airflow can deliver significant energy reductions and a hotspot-free facility. Add dynamic control, and you can ensure that the optimum amount of cooling is delivered precisely where it’s needed, even as the facility evolves over time.

Why does airflow go awry, even within meticulously maintained facilities? The biggest factor is that you can’t see what is happening. Hotspots are notoriously difficult to diagnose, as their root cause is rarely obvious. Fixing one area can cause temperature problems to pop up in another. When a hot spot is identified, a common first instinct is to bring more air to the location, usually by adding or opening a perforated floor tile.

Temperatures may actually fall at that location. But what happens in other locations? As floor tiles proliferate, a greater volume of air, and so more fan energy, is required to meet SLA commitments at the inlets of all equipment.

Consider that fans don’t cool equipment. Fans distribute cold air, while adding the heat of their motors to the total heat load in the room. As fan speed increases, power consumption grows with the cube of the rotational speed. Opening holes in the floor to address hot-spots is ultimately self-limiting.
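The cube relationship between fan speed and power can be shown with a short sketch. It assumes the idealised fan affinity law and ignores motor and drive losses, so real fans will deviate somewhat; the function name is illustrative.

```python
def relative_fan_power(speed_ratio: float) -> float:
    """Idealised fan affinity law: power scales with the cube of speed.

    speed_ratio is new speed divided by reference speed (1.0 = unchanged).
    Returns power as a fraction of the power at the reference speed.
    """
    if speed_ratio < 0:
        raise ValueError("speed ratio must be non-negative")
    return speed_ratio ** 3


half_speed_power = relative_fan_power(0.5)   # fan slowed to 50% speed
faster_power = relative_fan_power(1.2)       # fan sped up by 20%
print(half_speed_power, round(faster_power, 3))
```

Under this idealised law, a fan at half speed draws about an eighth of the power, while a 20% increase in speed costs roughly 73% more power. That is why meeting demand by pushing more air becomes expensive quickly.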

Increased fan speed also increases air mixing, disrupting the return of hot air back to cooling equipment and compromising efficiency. We often see examples of the Venturi effect, where conditioned air blows past the inlets of IT equipment, leaving IT equipment starving for cooling.

Ideally, conditioned air should move through IT equipment to remove heat before returning directly to the air conditioning unit. If air is going elsewhere, or never flows through IT equipment, efficiency is compromised.

Poorly functioning or improperly configured cooling equipment can also affect airflow. Even equipment that has been regularly maintained, and appears on inspection to be working, may in reality be performing so poorly that it actually produces heat. And facility managers don’t realize when this occurs. The data is typically not available.

Containment is often deployed to gain efficiency by separating hot and cold air. Unfortunately, most containment isn’t properly configured and can work against this objective. Even small openings in containment or non-uniformly distributed load can lead to hot spots. Where pressure control is used, small gaps lead to higher fan speeds in order to properly condition the contained space.

So how can airflow be better managed? First you need data. And not just a temperature sensor on the wall, or return air temperature sensors in your cooling equipment. Airflow is best managed at the point of delivery – where the conditioned air enters the IT equipment.

Since airflow distribution is uneven, sensing and presenting the temperatures at many locations within each technical room will provide the best visibility into airflow. And instrumentation of cooling equipment can reveal which units are working properly and which machines may require maintenance.

Next, you need intelligent software that can measure how the output of each individual cooling unit influences temperatures at air inlets across the entire room. When a cooling unit turns on or off, temperatures change throughout the room. It’s possible to track and correlate changes in rack inlet temperatures with individual cooling units to create a real-time empirical model of how air moves through a particular facility at any moment.
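The idea of correlating inlet temperature changes with individual cooling units can be sketched very simply. This is a toy illustration under stated assumptions, not the method used by any particular product: it averages the observed temperature change at each rack whenever a given unit is switched on. The unit and rack names, the readings and the function name are all hypothetical.

```python
from collections import defaultdict


def estimate_influence(events):
    """Average inlet temperature change per rack for each cooling unit.

    events: iterable of (unit_id, temps_before, temps_after), where the two
    temperature mappings go from rack name to inlet temperature in deg C,
    sampled just before and some time after the unit was switched on.
    Returns {unit_id: {rack: mean change in deg C}}; negative means cooling.
    """
    sums = defaultdict(lambda: defaultdict(float))
    counts = defaultdict(lambda: defaultdict(int))
    for unit_id, before, after in events:
        for rack, temp_before in before.items():
            if rack in after:  # skip racks with a missing reading
                sums[unit_id][rack] += after[rack] - temp_before
                counts[unit_id][rack] += 1
    return {
        unit: {rack: sums[unit][rack] / counts[unit][rack] for rack in sums[unit]}
        for unit in sums
    }


# Two hypothetical switch-on events for one cooling unit.
sample_events = [
    ("CRAC-1", {"A1": 26.0, "B1": 24.0}, {"A1": 23.0, "B1": 24.0}),
    ("CRAC-1", {"A1": 25.0, "B1": 24.0}, {"A1": 24.0, "B1": 23.0}),
]
influence = estimate_influence(sample_events)
print(influence)
```

In this made-up data the unit has a much stronger effect on rack A1 than on B1. A production system would need far more observations, and would have to separate the effect of the cooling unit from simultaneous changes in IT load.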

And finally, you need automatic control. Cooling equipment and fan speeds that are adjusted dynamically and in real-time will deliver the right amount of conditioned air to each location. Machine learning techniques ensure that cooling unit influences are kept up to date over time.

What better way to celebrate the end of the 2017 sporting summer than by joining up with the 4th annual Data Centre Golf Tournament?

Co-organised by the Data Centre Alliance and Data Centre Solutions, the event brings together data centre owners, operators and equipment and services vendors for a great day out at Heythrop Park in Oxfordshire. This year’s venue promises to build on our three previous successful events, raising money for the CLIC Sargent cancer charity, and providing an enjoyable and friendly atmosphere to mix with your industry colleagues while trying to get that little white ball into that very small white hole.

There might not be any green jackets or huge prize funds to tempt you, but if the following description of our 2017 venue doesn’t have you reaching for the application form right away, either you don’t have a pulse, or you really, really don’t like golf (or being pampered):

“Open 365 days of the year the Bainbridge Championship Course at Heythrop Park was redesigned in 2009 by Tom MacKenzie the golf course architect responsible for many Open Championship venues, including Turnberry, Royal St. Georges and Royal Portrush. The 7088 yard par 72 course weaves throughout the 440 acres of rambling estate and provides the perfect challenge for all golfers.

The 18 hole course meanders over ridges and through valleys that are studded with ancient woodland, lakes and streams. The course is quintessentially English and has several signature holes notably the 6th hole where the green nestles besides a fishing lake, the 14th which sweeps leftwards around an ancient woodland and the closing hole which is straight as a die and has the impressive mansion house as its backdrop.

Located 12 miles north of Oxford just outside Chipping Norton in the Cotswolds, Heythrop Park is within 90 minutes drive time of London and the Midlands.”

With the DCS and DCA teams already signed up, there’ll be plenty of meandering over ridges and through valleys, with plenty of diversions to inspect the woodlands, lakes and streams at close quarters. The fishing lake sounds particularly promising, if coming a little early in the round, while the 14th offers the opportunity to ignore the sweep left round the ancient woodland, and to head straight into the trees, where cries as ancient as civilisation itself will be heard. Needless to say designing a hole to be as ‘straight as a die’ will be wasted on most of us, and it is to be hoped that the mansion house is set sufficiently far back from the 18th green to avoid the potential vandalism of thinned approach shots.

Full details of the Data Centre Tournament, co-organised by the DCA and DCS, can be found here:

https://digitalisationworld.com/golf/

And if any of your colleagues think that a day on the golf course has nothing to do with data centres, tell them that it involves all of the following:

See you at Heythrop Park in September.

This year is already seeing a continuation of the process of change which rattled many people in previous years. The IT industry is in the middle of one of the biggest upheavals it has ever faced. All parties agree that the change is here to stay; indicators are pointing to the continued rapid growth of managed services, with the world market predicted to grow at a compound annual growth rate of 12.5% for at least the next two years.
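To put the compound growth figure in context, the arithmetic can be sketched as follows. The starting index of 100 is an arbitrary assumption for illustration; the article does not give a market size.

```python
def compound_growth(start_value: float, annual_rate: float, years: int) -> float:
    """Value after `years` of growth at a constant compound annual rate."""
    return start_value * (1 + annual_rate) ** years


# Market indexed to 100 today (arbitrary baseline), growing at 12.5% a year.
index_after_two_years = compound_growth(100.0, 0.125, 2)
print(index_after_two_years)
```

Two years at 12.5% compound to a little over a quarter more than the starting level, rather than exactly 25%, because the second year's growth applies to the already enlarged first-year figure.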

Inside this new model, there are other indicators. Not least of all the considerations, and a factor which is prominent in most discussions with customers, is security. Security as a service is a part of the picture, but needs to be better understood, particularly when the rising need to secure devices through the Internet of Things (IoT) is examined. Industrial applications are set to be the core focus for IoT Managed Security Service Providers (MSSPs) with ABI Research forecasting overall market revenues to increase fivefold and top $11bn in 2021. Though OEM and aftermarket telematics, fleet management, and video surveillance use cases primarily drive IoT MSSP service revenues in 2017, continued innovation in industrial applications that include the connected car, smart cities, and utilities will be the future forces that IoT MSSPs need to target and understand in all their implications.

ABI Research stresses that there will not be one sole technology that addresses all IoT security challenges; in actuality, the fact that true end-to-end IoT security is near impossible for a single vendor to achieve is a primary reason for the rise in vendors offering managed security services to plug security gaps. But it will be something that customers are aware of, and where they will need answers. Security will, in the future, form part of the purchase choice, directly driven by improved security education and a broader demand to protect digital assets in the same sense as users protect their physical assets today, says the researcher. For this reason, security service providers, although largely invisible today, may become the household names of tomorrow as IoT security moves from a requirement to a product differentiator.

Security is not an afterthought in most environments, however, and the way it relates to an industry or enterprise is a critical aspect of the architecture and systems design. This plays to the strengths of the expert service provider, who understands both the solutions and the risk profile of the customer. Just as ABI has argued that the security provider brand will start to play more of a role, so the channels with more of the answers will reap more of the rewards as customers look for help in challenging times. Security, like hosting and recovery, network resilience and scaling, will become a part of an overall solution which offers the lower costs of repeatability as well as enhancements as a part of the package which the customer will look to build on for the future.

How to find the answers to customers’ questions and engage in a more holistic discussion on security will be a concern for many. The Managed Services and Hosting Summit, London, 20 September, is the perfect event at which to do this, with over twenty leading suppliers on hand as well as industry experts.

And now in its seventh year, the MSHS event offers multiple ways to get those answers: from plenary-style presentations from experts in the field to demonstrations; from more detailed technical pitches to wide-ranging round-table discussions with questions from the floor, there is a wealth of information on offer.

A key part of the event will look at how, having gained the knowledge needed to offer such managed services, complete with security implications understood, the messages can then be conveyed to the customer in a meaningful way. Sales is no longer just a matter of faster technologies and brands; it is about being able to convey the whole picture of changed ways of working as the race for productivity, competitive advantage and cost control drives customers to look for new and different answers. Get your questions answered, see what others are doing, and learn just what other resources are available to support your business at the key managed services event of 2017. New for 2017 is the Managed Services and Hosting Summit Europe, taking place at the Hilton Hotel, Apollolaan, Amsterdam.

For more details on both events visit:

London: http://www.mshsummit.com

Amsterdam: http://mshsummit.com/amsterdam

Angel Business Communications has announced the categories and entry criteria for the 2017 Datacentre Solutions Awards (DCS Awards).

The DCS Awards are designed to reward the product designers, manufacturers, suppliers and providers operating in the data centre arena and are updated each year to reflect this fast-moving industry. The Awards recognise the achievements of the vendors and their business partners alike and this year encompass a wider range of project, facilities and information technology award categories designed to address all of the main areas of the datacentre market in Europe.

DCS are pleased to announce the Headline Sponsor for the 2017 event. MPL Technology Group is a Global leader in the development, production and integration of advanced Software and Hardware solutions to support the availability, agility and efficient operations of IT infrastructures and mission-critical environments worldwide. N-Gen by MPL provides a detailed yet intuitive interface, rich visualisations and easy access to data in real-time.

Phil Maidment, Co-Founder and Owner of MPL Technology Group said: "We are very excited to be Headline Sponsor of the DCS Awards 2017, and have lots of good things planned for this year. We are looking forward to working with such a prestigious media company, and to getting to know some more great people in the industry."

The DCS Awards categories provide a comprehensive range of options for organisations involved in the IT industry to participate, so you are encouraged to get your nominations made as soon as possible for the categories where you think you have achieved something outstanding or where you have a product that stands out from the rest, to be in with a chance to win one of the coveted crystal trophies.

The editorial staff at Angel Business Communications will validate entries and announce the final short list to be forwarded for voting by the readership of the Digitalisation World stable of publications during March and April. The winners will be announced at a gala evening on 11 May at London’s Grange St Paul’s Hotel.

The 2017 DCS Awards feature 26 categories across four groups. The Project and Product categories are open to end-use implementations and services, and to products and solutions, that have been available (i.e. shipping in Europe) before 31st December 2016, while the Company and Special awards nominees must have been present in the EMEA market prior to 1st June 2016. Nomination is free of charge and all entries must feature a comprehensive set of supporting material in order to be considered for the voting short-list. The deadline for entries is 1st March 2017.

Please visit www.dcsawards.com for rules and entry criteria for each of the following categories:

During the UK referendum campaign, the Leave camp spoke ardently about the importance of protecting our sovereignty and making our own laws. Now we’re coming out of the EU and the single market, sovereignty is top of the agenda again, for the opposite reason. Rather than solving our right to sovereignty, Brexit threatens to destabilise it.

By Jack Bedell-Pearce, Managing Director, 4D.

Right now, data holders are worried about the sovereignty of their information and the onus of complying with international laws that are not our own. For instance, company directors are wondering what their obligations will be if their organisation’s data is stored abroad and subject to the laws of the country in which the data resides. How do they comply with the country’s privacy regulations and keep foreign countries from being able to subpoena their data?

In the autumn of 2016, 4D surveyed 200 UK decision-makers in small-to-medium sized businesses. We discovered that 72% of the respondents are under pressure to demonstrate data protection compliance for customer data and 63% say Brexit has intensified their concerns surrounding data location and sovereignty even further – suggesting matters of sovereignty may not have been the best reason for exiting the EU.

Brexit’s impact on the General Data Protection Regulation (GDPR) is a case in point. The UK authorities played a significant role in developing and refining the new EU framework that comes into force on 25th May 2018.

Far from wanting to shake off the European-enforced legislation, 69% of businesses want to keep GDPR. Nearly half (46%) of these businesses are fully prepared to absorb additional costs incurred through direct marketing – which the Information Commissioner’s Office (ICO) estimates will come to an additional £76,000 a year. Just 23% would like to scrap GDPR to avoid extra operating costs, while the majority (59%) think GDPR should be compulsory for all large businesses.

This doesn’t necessarily mean that businesses are happy to embrace all European legislation. Data protection is a minefield and proper governance is desperately needed. For many, protecting one’s data is a major factor in a company’s decision-making. One in two businesses in the UK decide where data is stored based on matters of data security alone.

However, on the flip side, this means the other half aren’t thinking about data residency issues. We also know that only 28% think about data sovereignty in terms of how local laws will impact the way they store their data and 87% of IT decision-makers confess to not looking at data location and sovereignty issues post-referendum.

This lack of consideration could be a ticking time-bomb. If the UK’s data flows become pawns in a messy divorce, with Theresa May reiterating recently that the government is pushing a hard Brexit as opposed to soft, businesses will need to get to grips with where their data lies, what laws their data is subject to and who owns the data centres in which their data resides. As amorphous as cloud computing sounds, company data hosted in the cloud is not an ethereal mass of zeros and ones. It has a home and this home may become a bone of contention.

In years gone by, a European data centre could have served UK and European customers. In just over two years’ time, companies may need a European data centre for European customers and a UK data centre for UK customers. If this comes to fruition, expect multinational companies serving a European population to move the bulk of their servers from London to a European data centre (e.g. in Dublin, Paris or Frankfurt). This would represent a mass exodus of investment.

However, it also stands to reason that SMEs in the UK that don’t intend to trade with the EU, would gain far more certainty and simplicity by placing their physical servers in a UK owned and located data centre, on a co-location basis. This is reflected in the 64% of respondents who believe that in the current climate, the assurance of colocation and flexibility of cloud infrastructure strikes a good balance.

We also have to consider the recently published (10th January) European Commission’s Free Flow of Data Initiative (FFDI) Communications proposal. Up until then, the position of the European Commission was that member states (with the exception of certain specific classes of data) need not require data to be located within nation state boundaries – by law. Companies would have the right to choose where to locate their data within the EU. To add to the confusion, they are also proposing to introduce new legal concepts and policy measures targeted at business to business transactions.

The only silver lining to this is that these proposals are still at the consultation phase and there may be opportunities for trade associations such as TechUK to push for reform.

So where does this leave software, cloud and hosting companies that want to enter the UK market over the next couple of years? Until very recently, data sovereignty has been a bit of a misnomer in the US and Europe as we’ve all become used to storing and transferring private citizen data across borders without much fuss. The only certainty emerging from all this uncertainty is that if you are looking to expand into the UK market, the safest long-term bet is to put your servers and data into British-based data centres. By doing so, you will automatically be aligning the data security needs of your British clients with current and future UK data protection legislation – whatever that may be. Britain is also likely to adhere to the very strict data privacy rules it (ironically) helped craft in the upcoming General Data Protection Regulation (GDPR) in 2018. If the data centre or hosting provider happens to be British owned, even better, as it won’t be subject to outside meddling from US agencies, as Microsoft has found out with some of its Irish-based data centres.

Taking a home-grown approach would certainly insulate SMEs from the negotiations’ changing winds. This awareness is starting to dawn. Almost one third of companies using an international public cloud for company data intend to stop doing so in two years’ time, following Brexit, while the proportion of companies using a UK public cloud for company data is expected to increase by almost a third in two years’ time, in the wake of the UK’s exit from the European Union.

While the wholesale movement of company data would be premature at this stage, the thinking certainly needs to be done over the next 12 months, in terms of the connotations of a business’s current cloud mix and the ins and outs of transitioning to a UK-based data centre.

The sovereignty of their data will only be one small piece of the jigsaw but it’s an important one. In the digital era, data is a company’s crown jewels and the way businesses treat and protect their data will govern their reputations.

However, there is something of a mystique surrounding these different data center components, as many people don’t realize just how they’re used and why. In this pod of the “Too Proud To Ask” series, we’re going to be demystifying this very important aspect of data center storage. You’ll learn:

•What are buffers, caches, and queues, and why you should care about the differences?

•What’s the difference between a read cache and a write cache?

•What does “queue depth” mean?

•What’s a buffer, a ring buffer, and host memory buffer, and why does it matter?

•What happens when things go wrong?

These are just some of the topics we’ll be covering, and while it won’t be an exhaustive look at buffers, caches and queues, you can be sure that you’ll get insight into this very important, and yet often overlooked, part of storage design.

Recorded Feb 14 2017 64 mins

Presented by: John Kim & Rob Davis, Mellanox, Mark Rogov, Dell EMC, Dave Minturn, Intel, Alex McDonald, NetApp

Converged Infrastructure (CI), Hyperconverged Infrastructure (HCI) along with Cluster or Cloud In Box (CIB) are popular trend topics that have gained both industry and customer adoption. As part of data infrastructures, CI, CIB and HCI enable simplified deployment of resources (servers, storage, I/O networking, hardware, software) across different environments.

However, what do these approaches mean for a hyperconverged storage environment? What are the key concerns and considerations related specifically to storage? Most importantly, how do you know that you’re asking the right questions in order to get to the right answers?

Find out in this live SNIA-ESF webcast where expert Greg Schulz, founder and analyst of Server StorageIO, will move beyond the hype to discuss:

· What are the storage considerations for CI, CIB and HCI

· Fast applications and fast servers need fast server storage I/O

· Networking and server storage I/O considerations

· How to avoid aggravation-causing aggregation (bottlenecks)

· Aggregated vs. disaggregated vs. hybrid converged

· Planning, comparing, benchmarking and decision-making

· Data protection, management and east-west I/O traffic

· Application and server I/O north-south traffic

Live online Mar 15 10:00 am United States - Los Angeles or after on demand 75 mins

Presented by: Greg Schulz, founder and analyst of Server StorageIO, John Kim, SNIA-ESF Chair, Mellanox

The demand for digital data preservation has increased drastically in recent years. Maintaining a large amount of data for long periods of time (months, years, decades, or even forever) becomes even more important given government regulations such as HIPAA, Sarbanes-Oxley, OSHA, and many others that define specific preservation periods for critical records.

While the move from paper to digital information over the past decades has greatly improved information access, it complicates information preservation. This is due to many factors including digital format changes, media obsolescence, media failure, and loss of contextual metadata. The Self-contained Information Retention Format (SIRF) was created by SNIA to facilitate long-term data storage and preservation. SIRF can be used with disk, tape, and cloud based storage containers, and is extensible to any new storage technologies.

It provides an effective and efficient way to preserve and secure digital information for many decades, even with the ever-changing technology landscape.

Join this webcast to learn:

•Key challenges of long-term data retention

•How the SIRF format works and its key elements

•How SIRF supports different storage containers - disks, tapes, CDMI and the cloud

•Availability of Open SIRF

SNIA experts that developed the SIRF standard will be on hand to answer your questions.

Live online Feb 16 10:00 am United States - Los Angeles or after on demand 75 mins

Simona Rabinovici-Cohen, IBM, Phillip Viana, IBM, Sam Fineberg

SMB Direct makes use of RDMA networking, creates a block transport system and provides reliable transport to zettabytes of unstructured data, worldwide. SMB3 forms the basis of hyper-converged and scale-out systems for virtualization and SQL Server. It is available for a variety of hardware devices, from printers and network-attached storage appliances to Storage Area Networks (SANs). It is often the most prevalent protocol on a network, with high-performance data transfers as well as efficient end-user access over wide-area connections.

In this SNIA-ESF Webcast, Microsoft’s Ned Pyle, program manager of the SMB protocol, will discuss the current state of SMB, including:

•Brief background on SMB

•An overview of the SMB 3.x family, first released with Windows 8, Windows Server 2012, MacOS 10.10, Samba 4.1, and Linux CIFS 3.12

•What changed in SMB 3.1.1

•Understanding SMB security, scenarios, and workloads

•The deprecation and removal of the legacy SMB1 protocol

•How SMB3 supports hyperconverged and scale-out storage

Live online Apr 5 10:00 am United States - Los Angeles or after on demand 75 mins

Ned Pyle, SMB Program Manager, Microsoft, John Kim, SNIA-ESF Chair, Mellanox, Alex McDonald, SNIA-ESF Vice Chair, NetApp

•Why latency is important in accessing solid state storage

•How to determine the appropriate use of networking in the context of a latency budget

•Do’s and don’ts for Load/Store access

Live online Apr 19 10:00 am United States - Los Angeles or after on demand 75 mins

Doug Voigt, Chair SNIA NVM Programming Model, HPE, J Metz, SNIA Board of Directors, Cisco

UsingEnglish.com explains the “watching sausage being made” idiom in this way: “If something is like watching sausages getting made, unpleasant truths about it emerge that make it much less appealing. The idea is that if people watched sausages getting made, they would probably be less fond of them.”[1]

By Patrick Donovan, Sr. Research Analyst, Data Center Science Center, IT Division, Schneider Electric.

LESS fond?! There’s a Discovery Channel “How It’s Made” episode on hot dogs. Normally this TV show tends to be soothing…mesmerizing…oddly satisfying as you watch simple raw materials rhythmically turn into beautiful finished goods like a well-orchestrated ballet. But this hot dog episode is horrifying. It will make the most ardent carnivores among us queasy. Some things are definitely better off unseen.

With 10 plus years of product development experience, I know that “sausage making” can be an apt analogy for what often happens when a product goes from concept to “store shelves”. It can be messy, wasteful, unduly iterative, and ugly. But, in the end, that can still be OK. For most products, regardless of how haphazard or inefficient the development process is, the end product can still end up being good enough for market success. For data center remote monitoring platforms, however, this is unacceptable. HOW it gets developed…how disciplined, controlled, and thorough the process is…is critically important to how safe the platform is from a cyber security perspective. And so data center owners who are considering using a third party to help manage and monitor their facility infrastructure systems remotely, should find out how these vendors develop their offers and manage their offer creation processes.

Digital remote monitoring platforms work by having connected data center infrastructure systems send a continuous stream of data about themselves to a gateway that forwards it outside the network or to the cloud. This data is then monitored and analyzed by people and data analytic engines. Finally, there is a feedback loop from the monitoring team and systems to the data center operators. The data center operators have access to monitoring dashboards from inside the network via the gateway or to the platform’s cloud when outside the network via a mobile app or computer in a remote NOC.
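The data flow described above can be sketched in a few lines of Python. This is only an illustrative sketch, not how any vendor's platform is built: the class names (`Reading`, `Gateway`, `CloudPlatform`), the `battery_temp_c` metric and the 40.0 limit are all hypothetical, and the "analytics" is reduced to a simple threshold check standing in for the people and analytic engines mentioned in the text.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Reading:
    device_id: str  # hypothetical identifier for a UPS, cooling unit, etc.
    metric: str     # hypothetical metric name, e.g. "battery_temp_c"
    value: float


@dataclass
class CloudPlatform:
    # Hypothetical alarm limits per metric; stands in for real analytics.
    limits: Dict[str, float]
    received: List[Reading] = field(default_factory=list)

    def ingest(self, batch: List[Reading]) -> List[str]:
        """Store a batch and return alerts for readings above their limit."""
        self.received.extend(batch)
        return [
            f"{r.device_id}: {r.metric}={r.value} exceeds {self.limits[r.metric]}"
            for r in batch
            if r.metric in self.limits and r.value > self.limits[r.metric]
        ]


@dataclass
class Gateway:
    cloud: CloudPlatform
    buffer: List[Reading] = field(default_factory=list)

    def collect(self, reading: Reading) -> None:
        """Accept a reading from a connected infrastructure system."""
        self.buffer.append(reading)

    def forward(self) -> List[str]:
        """Send buffered readings to the cloud; return alerts as the feedback loop."""
        batch, self.buffer = self.buffer, []
        return self.cloud.ingest(batch)


cloud = CloudPlatform(limits={"battery_temp_c": 40.0})
gateway = Gateway(cloud=cloud)
gateway.collect(Reading("ups-01", "battery_temp_c", 27.5))
gateway.collect(Reading("ups-02", "battery_temp_c", 43.0))
alerts = gateway.forward()
for alert in alerts:
    print(alert)
```

Even in this toy version, the gateway is the single point where data leaves the network, which is why the security of that path matters so much in the discussion that follows.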

There is understandably a concern these externally connected monitoring platforms could be a successful avenue of attack for cyber criminals. Today, cyber security threats are always present and the nature of cybercriminal attacks is constantly evolving. Preventing these attacks from causing theft, loss of data, and system downtime requires a secure monitoring platform and constant vigilance by a dedicated DevOps team. Before selecting and implementing a digital monitoring platform, vendor solutions should be evaluated not just on the basis of features and functions, but also on their ability to protect data and the system from cyber-attacks. Knowing how secure a platform is requires an understanding of how it is developed, deployed, and operated.

Secure Development Lifecycle (SDL) is a process by which security is considered and evaluated throughout the development lifecycle of products and solutions. It was originally developed and proposed by Microsoft. The use of an SDL process to govern development, deployment, and operation of a monitoring platform is good evidence that the vendor is taking the appropriate measures to ensure security and regulatory compliance. The vendor should be using a process that is consistent with ISO 27034. There are 8 key practices to look for in an SDL process. They are described briefly here…

There should be a continuous training program for employees to design, develop, test, and deploy solutions that are more secure.

The cyber security features and customer security requirements to be included in product development should be enumerated clearly and in detail.

Security architecture documents are produced that follow industry accepted design practices to develop the security features required by the customer. These documents are reviewed and threat models are created to identify, quantify, and address the potential security risks.

Implementation of the security architecture design into the product follows the detailed design phase and is guided by documentation for best practices and coding standards. A variety of security tools, including static, binary, and dynamic analysis of the code, should be used as part of the development process.

Security testing on the product implementation is performed from the perspective of the threat model, with the aim of ensuring robustness. Regulatory requirements as well as the deployment strategy are included as part of the testing.

Security documentation that defines how to more securely install, commission, maintain, manage, and decommission the product or solutions should be developed. Security artifacts are reviewed against the original requirements and to the security level that was targeted or specified.

The project development team or its deployment leader should be available to help train and advise service technicians on how best to install and optimize security features. Service teams should be able to provide help for customers to install, manage, and upgrade products and solutions throughout the life cycle.

There should be a product “Cyber Emergency Response Team” that manages vulnerabilities and supports customers in the event of a cyber incident. Ideally, this team should be the same group of people that has developed the application. This means that everyone involved knows the product in detail.

White Paper 239, “Addressing Cyber Security Concerns of Data Center Remote Monitoring Platforms”, gets into more details including covering key elements related to developer training and management, as well as describing recommended design attributes to look for in the monitoring platform itself. Being aware of how remote monitoring platforms are developed, deployed, and maintained can help you make a better decision as to which one to implement. If you start thinking about how sausage is made as you interview a vendor, take that as a clear signal to go elsewhere!

[1] https://www.usingenglish.com/reference/idioms/like+watching+sausage+getting+made.html

In a political world gone mad, mounting questions are being asked over some of the outlandish decisions being made by our government, in particular for government-backed incentive schemes. A prime example of this can be seen in the latest raft of changes to the ETPL (Energy Technology Product List), part of the ECA (Enhanced Capital Allowance) scheme, which were released in October.

By Mark Trolley, Managing Director, Power Control Ltd.