Many years ago, Volkswagen advertised a new vehicle which, if memory serves, could do 800 miles on one tankful of fuel. Now, even though closer inspection of this claim revealed the ‘laboratory-like’ conditions under which this amazing feat had been achieved, all these years later, I can still remember the claim, and it still impresses me.

At a time when gaslighting, at least in the world of politics, appears to be the new normal, I sincerely hope that the business world does not follow suit. The reason I bring this subject up? Well, much of the news I receive is in the form of survey-based research. And the headline findings of many such news releases are arresting, to put it mildly. And many of them contradict one another. So, one report will ‘reveal’ that, say, 90 percent of all businesses are already well on their way to digital transformation. Another might suggest that digital transformation projects are absent from a similar number of organisations. And then there will be the reports that suggest digital transformation projects either are, or are not, meeting expectations.

Look closely, and there’s often a correlation between the research findings and the company which has carried out and published them. If your business is persuading everyone that the flexible, hybrid working model is the future, you won’t be publishing survey results that suggest everyone wants to go back to the office. Similarly, if your technology solution requires everyone to be in the office, you’ll make sure to publish statistics that downplay the working from home trend.

All of which leads me to conclude that research surveys are far from worthless – indeed, many do uncover some fascinating trends and provide much food for thought; but they do come with a slight warning: be aware of where they come from. Just as the daily newspapers push their own right/left (not much interest in the middle ground these days!) political views with carefully crafted articles, so vendors are not immune from lobbying potential customers with carefully selected, perhaps incomplete, data sets. So, read as many surveys as you can, and you should end up with a balanced view!

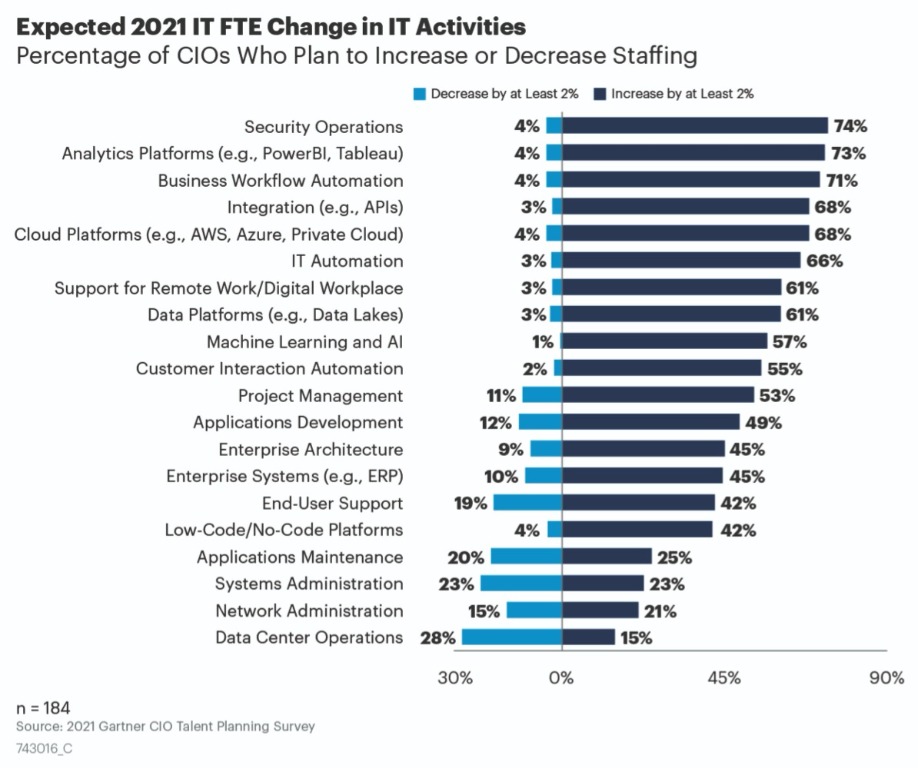

Fifty-five percent of CIOs plan to increase their total number of full-time employees (FTEs) in IT over the course of 2021, according to a recent survey from Gartner, Inc. They will focus staffing growth predominantly on automation, cloud and analytics platforms, and support for remote work.

“The critical role IT played across most firms’ response to the pandemic appears to have had a positive impact on IT staffing plans,” said Matthew Charlet, research vice president at Gartner. “The initial pessimism around the 2021 talent situation that many CIOs expressed mid-2020 has since dwindled.”

Among the CIOs surveyed, the need to accelerate digital initiatives is, by a large margin, the primary factor driving IT talent strategies in 2021. This is followed by the automation of business operations and the increase in cloud adoption.

Overall, CIOs are much more likely to expand FTEs in newer, emerging technology domains. Growth in security personnel is needed to reduce the risks arising from significant investments in remote work, analytics and cloud platforms. Data center, network, systems administration and applications maintenance are the areas most likely to see staffing decreases as a result of the shift towards cloud services (see Figure 1).

Figure 1. Expected 2021 IT FTE Change in IT Activities

Source: Gartner (March 2021)

“While CIOs plan to hire more staff in several areas critical to meeting changed consumer and employee expectations, most will not be able to meet their planned talent strategy goals without also upskilling or refocusing their existing teams,” said Mr. Charlet.

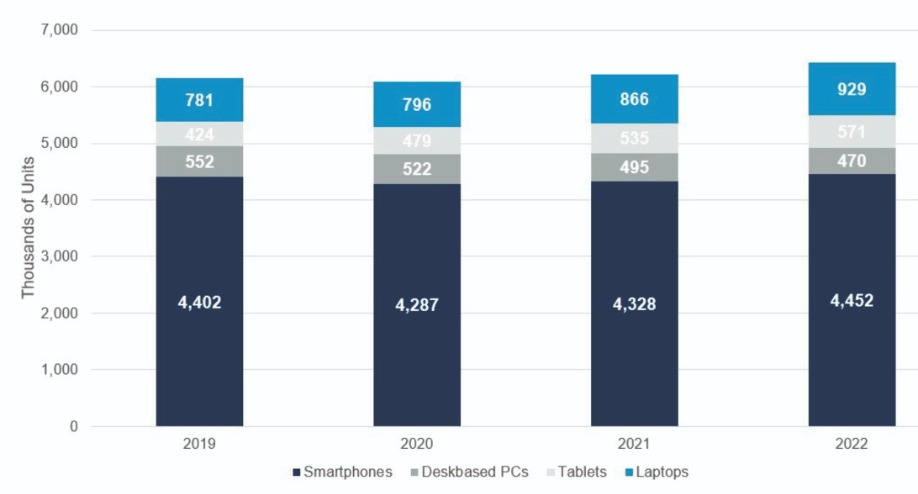

Global devices installed base to reach 6.2 billion units

The number of devices (PCs, both laptops and deskbased, plus tablets and mobile phones) in use globally will total 6.2 billion units in 2021, according to Gartner, Inc. Gartner expects 125 million more laptops and tablets to be in use in 2021 than in 2020.

“The COVID-19 pandemic has permanently changed device usage patterns of employees and consumers,” said Ranjit Atwal, senior research director at Gartner. “With remote work turning into hybrid work, home education changing into digital education and interactive gaming moving to the cloud, both the types and number of devices people need, have and use will continue to rise.”

In 2022, the global devices installed base is on pace to reach 6.4 billion units, up 3.2% from 2021 (see Figure 2). While the shift to remote work exacerbated the decline of desktop PCs, it boosted the use of tablets and laptops. In 2021, the number of laptops and tablets in use will increase 8.8% and 11.7%, respectively, while the number of deskbased PCs in use is expected to decline from 522 million in 2020 to a forecasted 470 million in 2022.

Figure 2. Installed Base of Devices, Worldwide, 2019-2022 (Thousands of Units)

Source: Gartner (April 2021)
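As a quick sanity check on the growth figures quoted above, the percentage changes can be recomputed from the rounded totals. This is a minimal sketch; because the 6.2 and 6.4 billion figures are themselves rounded, it can only approximately reproduce Gartner's 3.2%.

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


# Installed-base figures quoted above, in millions of units (rounded in the source).
total_2021, total_2022 = 6_200, 6_400
deskbased_2020, deskbased_2022 = 522, 470

print(f"All devices, 2021 to 2022: {pct_change(total_2021, total_2022):+.1f}%")
print(f"Deskbased PCs, 2020 to 2022: {pct_change(deskbased_2020, deskbased_2022):+.1f}%")
```

The first line prints +3.2%, in line with Gartner's figure; the second prints -10.0%, i.e. the deskbased PCs in use shrink by roughly a tenth over two years.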

Smartphone Installed Base Set for Upturn in 2021

User confidence is returning to the smartphone market. Although the number of smartphones in use declined 2.6% in 2020, the smartphone installed base is on pace to return to growth, with a 1% increase in 2021. “With more variety and choice, and lower-priced 5G smartphones to choose from, consumers have begun to either upgrade their smartphones or upgrade from feature phones,” said Mr. Atwal. “The smartphone is also a key tool that people use to communicate and share moments during social distancing and social isolation.”

The integration of personal and business lives, together with a much more dispersed workforce, requires flexibility of device choice. Workers are increasingly using a mix of company-owned devices and their own personal devices running on Chrome, iOS and Android, which is increasing the complexity of IT service and support.

“Connectivity is already a pain-point for many users who are working remotely. But as mobility returns to the workforce, the need to equip employees able to work anywhere with the right tools will be crucial,” said Mr. Atwal. “Demand for connected 4G/5G laptops and other devices will rise as business justification increases.”

Security and risk management leaders must address eight top trends to enable rapid reinvention in their organization, as COVID-19 accelerates digital business transformation and challenges traditional cybersecurity practices, according to Gartner, Inc.

In the opening keynote at the recent Gartner Security & Risk Management Summit, Peter Firstbrook, research vice president at Gartner, said these trends are a response to persistent global challenges that all organizations are experiencing.

“The first challenge is a skills gap. 80% of organizations tell us they have a hard time finding and hiring security professionals and 71% say it’s impacting their ability to deliver security projects within their organizations,” said Mr. Firstbrook.

Other key challenges facing security and risk leaders in 2021 include the complex geopolitical situation and increasing global regulations; the migration of workspaces and workloads off traditional networks; an explosion in endpoint diversity and locations; and a shifting attack environment, in particular the challenges of ransomware and business email compromise.

The following top trends represent business, market and technology dynamics that are expected to have broad industry impact and significant potential for disruption.

Gartner Top Security and Risk Management Trends, 2021

Source: Gartner, March 2021

Trend 1: Cybersecurity Mesh

Cybersecurity mesh is a modern security approach that consists of deploying controls where they are most needed. Rather than every security tool running in a silo, a cybersecurity mesh enables tools to interoperate by providing foundational security services and centralized policy management and orchestration. With many IT assets now outside traditional enterprise perimeters, a cybersecurity mesh architecture allows organizations to extend security controls to distributed assets.
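To make the "central policy, distributed enforcement" idea concrete, here is a minimal sketch. The class names (`CentralPolicy`, `EnforcementPoint`) and the trusted-device rule are hypothetical illustrations, not Gartner's architecture or any product's API; the point is only that a policy defined once is applied by controls sitting next to assets in different places, so a single change takes effect everywhere.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AccessRequest:
    user: str
    resource: str
    device_trusted: bool


@dataclass
class CentralPolicy:
    """Hypothetical shared policy service: one place to define who may reach what."""
    grants: dict[str, set[str]] = field(default_factory=dict)  # resource -> allowed users
    require_trusted_device: bool = True

    def decide(self, request: AccessRequest) -> bool:
        if self.require_trusted_device and not request.device_trusted:
            return False
        return request.user in self.grants.get(request.resource, set())


@dataclass
class EnforcementPoint:
    """Hypothetical control deployed close to a distributed asset (cloud app, branch, endpoint)."""
    location: str
    policy: CentralPolicy
    audit_log: list[str] = field(default_factory=list)

    def handle(self, request: AccessRequest) -> bool:
        allowed = self.policy.decide(request)
        verdict = "allow" if allowed else "deny"
        self.audit_log.append(f"{self.location}: {request.user} -> {request.resource}: {verdict}")
        return allowed


# One policy, enforced in two places.
policy = CentralPolicy(grants={"crm": {"alice"}})
cloud_gateway = EnforcementPoint("cloud", policy)
branch_firewall = EnforcementPoint("branch", policy)

cloud_gateway.handle(AccessRequest("alice", "crm", device_trusted=True))     # allowed
branch_firewall.handle(AccessRequest("alice", "crm", device_trusted=False))  # denied: untrusted device
policy.grants["crm"].discard("alice")  # revoke once, centrally...
cloud_gateway.handle(AccessRequest("alice", "crm", device_trusted=True))     # ...and it is denied everywhere
```

A real mesh would add identity, posture and analytics services behind the policy layer; the sketch keeps only the separation between where policy is decided and where it is enforced.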

Trend 2: Identity-First Security

For many years, the vision of access for any user, anytime, and from anywhere (often referred to as “identity as the new security perimeter”) was an ideal. It has now become a reality due to technical and cultural shifts, coupled with a workforce that became majority-remote during COVID-19. Identity-first security puts identity at the center of security design and demands a major shift from traditional LAN edge design thinking.

“The SolarWinds attack demonstrated that we’re not doing a great job of managing and monitoring identities. While a lot of money and time has been spent on multifactor authentication, single sign-on and biometric authentication, very little has been spent on effective monitoring of authentication to spot attacks against this infrastructure,” said Mr. Firstbrook.
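Monitoring authentication, as opposed to only strengthening it, can start with something as simple as watching for bursts of failures. The sketch below flags a source address that fails to authenticate too often within a sliding time window, a pattern typical of password spraying. It is a generic illustration under stated assumptions (events arrive in time order; the thresholds are made-up values), not the detection approach Mr. Firstbrook describes and not how the SolarWinds intrusion was found.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class AuthEvent:
    timestamp: float  # seconds; events are assumed to arrive in time order
    account: str
    source_ip: str
    success: bool


class FailedAuthMonitor:
    """Flag a source that fails authentication too often within a sliding time window.

    max_failures and window_seconds are illustrative values, not recommendations.
    """

    def __init__(self, max_failures: int = 5, window_seconds: float = 60.0) -> None:
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self._failures = defaultdict(deque)  # source_ip -> timestamps of recent failures

    def observe(self, event: AuthEvent) -> bool:
        """Record one event; return True if its source should be flagged for review."""
        if event.success:
            return False
        recent = self._failures[event.source_ip]
        recent.append(event.timestamp)
        # Forget failures that have fallen out of the window.
        while event.timestamp - recent[0] > self.window_seconds:
            recent.popleft()
        return len(recent) >= self.max_failures


monitor = FailedAuthMonitor(max_failures=3, window_seconds=10)
# Three different accounts probed from one address within seconds (documentation IP range).
for t, account in enumerate(["ana", "ben", "cho"]):
    flagged = monitor.observe(AuthEvent(float(t), account, "203.0.113.7", success=False))
print(flagged)  # True on the third failure
```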

Trend 3: Security Support for Remote Work Is Here to Stay

According to the 2021 Gartner CIO Agenda Survey, 64% of employees are now able to work from home. Gartner surveys indicate that at least 30-40% will continue to work from home after the pandemic. For many organizations, this shift requires a total reboot of policies and security tools to suit the modern remote workspace. For example, endpoint protection services will need to move to cloud-delivered services. Security leaders also need to revisit policies for data protection, disaster recovery and backup to make sure they still work in a remote environment.

Trend 4: Cyber-Savvy Board of Directors

In the Gartner 2021 Board of Directors Survey, directors rated cybersecurity the second-highest source of risk for the enterprise after regulatory compliance. Large enterprises are now beginning to create a dedicated cybersecurity committee at the board level, led by a board member with security expertise or a third-party consultant.

Gartner predicts that by 2025, 40% of boards of directors will have a dedicated cybersecurity committee overseen by a qualified board member, up from less than 10% today.

Trend 5: Security Vendor Consolidation

Gartner’s 2020 CISO Effectiveness Survey found that 78% of CISOs have 16 or more tools in their cybersecurity vendor portfolio; 12% have 46 or more. The large number of security products in organizations increases complexity, integration costs and staffing requirements. In a recent Gartner survey, 80% of IT organizations said they plan to consolidate vendors over the next three years.

“CISOs are keen to consolidate the number of security products and vendors they must deal with,” said Mr. Firstbrook. “Having fewer security solutions can make it easier to properly configure them and respond to alerts, improving your security risk posture. However, buying a broader platform can have downsides in terms of cost and the time it takes to implement. We recommend focusing on TCO over time as a measure of success.”
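Measuring TCO "over time" mostly means comparing cumulative costs year by year, so that a platform's higher one-off migration cost is weighed against lower running costs. The sketch below does exactly that and reports the first year in which consolidation pays for itself. All figures are hypothetical and stand in for licence, integration and staffing costs.

```python
from itertools import accumulate
from typing import Optional


def cumulative_tco(upfront: float, annual: list[float]) -> list[float]:
    """Cumulative cost at the end of each year: one-off cost plus running costs so far."""
    return [upfront + spent for spent in accumulate(annual)]


def breakeven_year(current: list[float], candidate: list[float]) -> Optional[int]:
    """First year (1-based) in which the candidate's cumulative cost is no higher than the current one's."""
    for year, (cost_now, cost_new) in enumerate(zip(current, candidate), start=1):
        if cost_new <= cost_now:
            return year
    return None


# Hypothetical figures in thousands: keep many point products, or migrate to one platform.
point_products = cumulative_tco(upfront=0, annual=[900] * 5)
platform = cumulative_tco(upfront=1_200, annual=[600] * 5)
print(point_products)  # [900, 1800, 2700, 3600, 4500]
print(platform)        # [1800, 2400, 3000, 3600, 4200]
print(breakeven_year(point_products, platform))  # 4
```

With these made-up numbers the platform only catches up in year four, which illustrates the quote's caveat about implementation cost and time.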

Trend 6: Privacy-Enhancing Computation

Privacy-enhancing computation techniques are emerging that protect data while it’s being used — as opposed to while it’s at rest or in motion — to enable secure data processing, sharing, cross-border transfers and analytics, even in untrusted environments. Implementations are on the rise in fraud analysis, intelligence, data sharing, financial services (e.g. anti-money laundering), pharmaceuticals and healthcare.

Gartner predicts that by 2025, 50% of large organizations will adopt privacy-enhancing computation for processing data in untrusted environments or multiparty data analytics use cases.
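Secure multiparty computation is one family of privacy-enhancing techniques. A toy version using additive secret sharing shows the idea behind multiparty analytics. Each organisation splits its private number into random-looking shares, each party adds up only the shares it receives, and combining the partial sums reveals the joint total but no individual input. This assumes honest-but-curious parties and simulates the message exchange in one process; production systems use hardened protocols and libraries.

```python
import secrets

# Arithmetic is done modulo a large prime (2**61 - 1, a Mersenne prime).
MODULUS = 2**61 - 1


def make_shares(value: int, parties: int) -> list[int]:
    """Split a non-negative value (< MODULUS) into additive shares.

    Any set of fewer than all the shares is uniformly random, so it reveals nothing about value.
    """
    shares = [secrets.randbelow(MODULUS) for _ in range(parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def joint_total(private_values: list[int]) -> int:
    """Sum every party's private value without any party revealing its own."""
    n = len(private_values)
    # Party j splits its value and (conceptually) sends share i to party i.
    dealt = [make_shares(value, n) for value in private_values]
    # Party i adds up the shares it received; this partial sum is all it publishes.
    partial_sums = [sum(shares[i] for shares in dealt) % MODULUS for i in range(n)]
    return sum(partial_sums) % MODULUS


# Three hypothetical banks pool flagged-transaction counts for an anti-money-laundering statistic.
print(joint_total([120, 45, 300]))  # 465
```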

Trend 7: Breach and Attack Simulation

Breach and attack simulation (BAS) tools are emerging to provide continuous defensive posture assessments, challenging the limited visibility provided by annual point assessments like penetration testing. When CISOs include BAS as a part of their regular security assessments, they can help their teams identify gaps in their security posture more effectively and prioritize security initiatives more efficiently.

Trend 8: Managing Machine Identities

Machine identity management aims to establish and manage trust in the identity of a machine interacting with other entities, such as devices, applications, cloud services or gateways. Increased numbers of nonhuman entities are now present in organizations, which means managing machine identities has become a vital part of the security strategy.
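A common first step in managing machine identities is simply knowing which credentials exist and when they expire. This is a minimal sketch of such an inventory check. The `MachineIdentity` record, the 30-day horizon and the sample entities are hypothetical; real tooling would discover certificates and keys automatically and trigger renewal.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class MachineIdentity:
    entity: str          # device, application, cloud service or gateway
    credential: str      # e.g. "TLS certificate", "SSH key"
    expires_at: datetime


def due_for_renewal(inventory: list[MachineIdentity], now: datetime,
                    horizon: timedelta = timedelta(days=30)) -> list[MachineIdentity]:
    """Identities already expired or expiring within the horizon, soonest first."""
    due = [m for m in inventory if m.expires_at - now <= horizon]
    return sorted(due, key=lambda m: m.expires_at)


now = datetime(2021, 6, 1, tzinfo=timezone.utc)
inventory = [
    MachineIdentity("payments-api", "TLS certificate", now + timedelta(days=90)),
    MachineIdentity("iot-gateway-17", "TLS certificate", now + timedelta(days=12)),
    MachineIdentity("build-runner", "SSH key", now - timedelta(days=3)),
]
for identity in due_for_renewal(inventory, now):
    print(identity.entity, identity.credential, identity.expires_at.date())
```

This prints the already-expired `build-runner` key first, then the gateway certificate due on 2021-06-13.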

Gartner, Inc. has identified the top 10 data and analytics (D&A) technology trends for 2021 that can help organizations respond to change, uncertainty and the opportunities they bring in the next year.

“The speed at which the COVID-19 pandemic disrupted organizations has forced D&A leaders to have tools and processes in place to identify key technology trends and prioritize those with the biggest potential impact on their competitive advantage,” said Rita Sallam, distinguished research vice president at Gartner.

D&A leaders should treat the following 10 trends as mission-critical investments that accelerate their capabilities to anticipate, shift and respond.

Trend 1: Smarter, Responsible, Scalable AI

The greater impact of artificial intelligence (AI) and machine learning (ML) requires businesses to apply new techniques for smarter, less data-hungry, ethically responsible and more resilient AI solutions. By deploying smarter, more responsible, scalable AI, organizations will be able to use learning algorithms and interpretable systems to achieve shorter time to value and higher business impact.

Trend 2: Composable Data and Analytics

Open, containerized analytics architectures make analytics capabilities more composable. Composable data and analytics leverages components from multiple data, analytics and AI solutions to rapidly build flexible and user-friendly intelligent applications that help D&A leaders connect insights to actions.

With the center of data gravity moving to the cloud, composable data and analytics will become a more agile way to build analytics applications enabled by cloud marketplaces and low-code and no-code solutions.

Trend 3: Data Fabric Is the Foundation

With increased digitization and more emancipated consumers, D&A leaders are increasingly using data fabric to help address higher levels of diversity, distribution, scale and complexity in their organizations’ data assets.

A data fabric uses continuous analytics over existing data assets to monitor data pipelines and to support the design, deployment and utilization of diverse data. According to Gartner, this can reduce time for integration by 30%, deployment by 30% and maintenance by 70%.

Trend 4: From Big to Small and Wide Data

The extreme business changes from the COVID-19 pandemic caused ML and AI models based on large amounts of historical data to become less relevant. At the same time, decision making by humans and AI is more complex and demanding, requiring D&A leaders to have a greater variety of data for better situational awareness.

As a result, D&A leaders should choose analytical techniques that can use available data more effectively. They rely on wide data, which enables the analysis and synergy of a variety of small and large, unstructured and structured data sources, and on small data, which applies analytical techniques that require less data but still offer useful insights.

“Small and wide data approaches provide robust analytics and AI, while reducing organizations’ large data set dependency,” said Ms. Sallam. “Using wide data, organizations attain a richer, more complete situational awareness or 360-degree view, enabling them to apply analytics for better decision making.”

Trend 5: XOps

The goal of XOps, including DataOps, MLOps, ModelOps, and PlatformOps, is to achieve efficiencies and economies of scale using DevOps best practices, and to ensure reliability, reusability and repeatability. At the same time, it reduces duplication of technology and processes and enables automation.

Most analytics and AI projects fail because operationalization is only addressed as an afterthought. If D&A leaders operationalize at scale using XOps, they will enable the reproducibility, traceability, integrity and integrability of analytics and AI assets.

Trend 6: Engineering Decision Intelligence

Engineering decision intelligence applies not just to individual decisions but to sequences of decisions, grouping them into business processes and even networks of emergent decisions and consequences. As decisions become increasingly automated and augmented, engineering decisions gives D&A leaders the opportunity to make them more accurate, repeatable, transparent and traceable.

Trend 7: Data and Analytics as a Core Business Function

Instead of being a secondary activity, D&A is shifting to a core business function. In this situation, D&A becomes a shared business asset aligned to business results, and D&A silos break down because of better collaboration between central and federated D&A teams.

Trend 8: Graph Relates Everything

Graphs form the foundation of many modern data and analytics capabilities to find relationships between people, places, things, events and locations across diverse data assets. D&A leaders rely on graphs to quickly answer complex business questions that require contextual awareness and an understanding of the nature and strength of connections across multiple entities.

Gartner predicts that by 2025, graph technologies will be used in 80% of data and analytics innovations, up from 10% in 2021, facilitating rapid decision making across the organization.
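A small example of the kind of question graphs answer well is "how is this customer connected to that one?" The sketch below links hypothetical entities that share a device or an address (a typical fraud-ring pattern) and uses breadth-first search to find the shortest chain of relationships between two of them. It is plain Python for illustration; graph databases and analytics engines provide this as a built-in query.

```python
from collections import defaultdict, deque
from typing import Optional


def build_graph(edges: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Undirected adjacency sets from (entity, entity) pairs."""
    graph = defaultdict(set)
    for a, b in edges:
        graph[a].add(b)
        graph[b].add(a)
    return graph


def connection_path(graph: dict[str, set[str]], start: str, goal: str) -> Optional[list[str]]:
    """Shortest chain of relationships linking start to goal (breadth-first search)."""
    previous = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbour in sorted(graph.get(node, ())):
            if neighbour not in previous:
                previous[neighbour] = node
                queue.append(neighbour)
    return None  # no connection


graph = build_graph([
    ("customer:alice", "device:laptop-1"),
    ("device:laptop-1", "customer:bob"),
    ("customer:bob", "address:12-high-st"),
    ("address:12-high-st", "customer:carol"),
])
print(connection_path(graph, "customer:alice", "customer:carol"))
```

The result traces Alice to Carol through a shared laptop and a shared address, context that would be hard to see in separate tables.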

Trend 9: The Rise of the Augmented Consumer

Today, most business users rely on predefined dashboards and manual data exploration, which can lead to incorrect conclusions and flawed decisions and actions. Time spent in predefined dashboards will progressively be replaced with automated, conversational, mobile, and dynamically generated insights customized to a user’s needs and delivered to their point of consumption.

“This will shift the analytical power to the information consumer — the augmented consumer — giving them capabilities previously only available to analysts and citizen data scientists,” said Ms. Sallam.

Trend 10: Data and Analytics at the Edge

Data, analytics and other technologies supporting them increasingly reside in edge computing environments, closer to assets in the physical world and outside IT’s purview. Gartner predicts that by 2023, over 50% of the primary responsibility of data and analytics leaders will comprise data created, managed, and analyzed in edge environments.

D&A leaders can use this trend to enable greater data management flexibility, speed, governance, and resilience. A diversity of use cases is driving the interest in edge capabilities for D&A, ranging from supporting real-time event analytics to enabling autonomous behavior of “things”.

A new forecast from International Data Corporation (IDC) shows that the continued adoption of cloud computing could prevent the emission of more than 1 billion metric tons of carbon dioxide (CO2) from 2021 through 2024.

The forecast uses IDC data on server distribution and cloud and on-premises software use, along with third-party information on datacenter power usage, CO2 emissions per kilowatt-hour, and emission comparisons of cloud and non-cloud datacenters.

A key factor in reducing the CO2 emissions associated with cloud computing comes from the greater efficiency of aggregated compute resources. The emissions reductions are driven by the aggregation of computation from discrete enterprise datacenters to larger-scale centers that can more efficiently manage power capacity, optimize cooling, leverage the most power-efficient servers, and increase server utilization rates.

At the same time, the magnitude of savings depends on how much CO2 is generated per kilowatt-hour of power, and this varies widely from region to region and country to country. Given this, it is not surprising that the greatest opportunity to eliminate CO2 by migrating to cloud datacenters comes in the regions with higher values of CO2 emitted per kilowatt-hour. The Asia/Pacific region, which uses coal for much of its power generation, is expected to account for more than half the CO2 emissions savings over the next four years. Meanwhile, EMEA will deliver about 10% of the savings, largely due to its use of power sources with lower CO2 emissions per kilowatt-hour.

While shifting to cleaner sources of energy is very important to lowering emissions, reducing wasted energy use will also play a critical role. Cloud datacenters are doing this through optimizing the physical environment and reducing the amount of energy spent to cool the datacenter environment. The goal of an efficient datacenter is to have more energy spent on running the IT equipment than cooling the environment where the equipment resides.
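The usual industry yardstick for this goal is power usage effectiveness (PUE): total facility energy divided by the energy delivered to IT equipment. IDC's text does not name PUE; it is brought in here as the standard way to express the idea. A PUE of 1.0 would mean every unit of energy runs IT equipment, while cooling and other overheads push it higher. The figures below are hypothetical.

```python
def pue(it_energy: float, cooling_energy: float, other_overhead: float = 0.0) -> float:
    """Power usage effectiveness: total facility energy divided by IT equipment energy.

    All arguments must use the same unit (e.g. MWh per year).
    """
    return (it_energy + cooling_energy + other_overhead) / it_energy


def it_share(pue_value: float) -> float:
    """Fraction of facility energy that actually reaches the IT equipment."""
    return 1 / pue_value


# Hypothetical annual figures (MWh) for two facilities running the same IT load.
legacy = pue(it_energy=1_000, cooling_energy=800, other_overhead=200)
efficient = pue(it_energy=1_000, cooling_energy=100, other_overhead=50)
print(f"legacy PUE {legacy:.2f}, IT share {it_share(legacy):.0%}")
print(f"efficient PUE {efficient:.2f}, IT share {it_share(efficient):.0%}")
```

In this example the legacy site spends as much energy on overheads as on computing (PUE 2.00, 50% IT share), while the efficient one sends about 87% of its energy to the IT equipment.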

Another capability of cloud computing that can be used to lower CO2 emissions is the ability to shift workloads to any location around the globe. Developed to deliver IT service wherever it is needed, this capability also enables workloads to be shifted to enable greater use of renewable resources, such as wind and solar power.
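Emissions from a workload are roughly its energy use multiplied by the carbon intensity of the grid supplying it. That is why the savings vary so much by region, and why moving work in space or time can help. The sketch picks the region-and-hour slot with the lowest forecast intensity for a flexible job. Region names and intensity values are hypothetical; real schedulers would use live grid data and respect latency, data-residency and capacity constraints.

```python
def emissions_kg(energy_kwh: float, intensity_kg_per_kwh: float) -> float:
    """CO2 emitted when energy_kwh is drawn from a grid with the given carbon intensity."""
    return energy_kwh * intensity_kg_per_kwh


def greenest_slot(energy_kwh: float, forecast: dict[tuple[str, int], float]):
    """Pick the (region, hour) slot with the lowest forecast carbon intensity.

    forecast maps (region, hour_of_day) -> kg CO2 per kWh.
    """
    slot = min(forecast, key=forecast.get)
    return slot, emissions_kg(energy_kwh, forecast[slot])


# Hypothetical intensity forecasts for a deferrable 1,000 kWh batch job.
forecast = {
    ("region-coal-heavy", 2): 0.80,
    ("region-coal-heavy", 14): 0.75,
    ("region-mixed", 2): 0.40,
    ("region-mixed", 14): 0.25,  # midday solar output lowers intensity
}
slot, kg = greenest_slot(energy_kwh=1_000, forecast=forecast)
print(slot, kg)
```

Here the job lands in the mixed-generation region at midday and emits an estimated 250 kg of CO2, against roughly 800 kg in the worst slot.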

IDC's forecast includes upper and lower bounds for the estimated reduction in emissions. If the percentage of green cloud datacenters today stays where it is, just the migration to cloud itself could save 629 million metric tons over the four-year time period. If all datacenters in use in 2024 were designed for sustainability, then 1.6 billion metric tons could be saved. IDC's projection of more than 1 billion metric tons is based on the assumption that 60% of datacenters will adopt the technology and processes underlying more sustainable "smarter" datacenters by 2024.

"The idea of 'green IT' has been around now for years, but the direct impact hyperscale computing can have on CO2 emissions is getting increased notice from customers, regulators, and investors, and it's starting to factor into buying decisions," said Cushing Anderson, program vice president at IDC. "For some, going 'carbon neutral' will be achieved using carbon offsets, but designing datacenters from the ground up to be carbon neutral will be the real measure of contribution. And for advanced cloud providers, matching workloads with renewable energy availability will further accelerate their sustainability goals."

The International Data Corporation (IDC) Worldwide Artificial Intelligence Spending Guide estimates that spending on artificial intelligence (AI) in Europe will reach $12 billion in 2021 and will continue to experience solid double-digit growth through 2024. Automation needs, digital transformation, and customer experience continue to support spending on AI, even at a time when COVID-19 has had a negative impact on many companies' revenues.

"COVID-19 was a trigger for AI investments for some verticals, such as healthcare. Hospitals across Europe have deployed AI for a variety of use cases, from AI-based software tools for automated diagnosis of COVID-19 to machine learning-based hospital capacity planning systems," said Andrea Minonne, senior research analyst at IDC Customer Insights & Analysis. "On the other hand, other verticals such as retail, transport, and personal and consumer services had to contain their AI investments, especially when AI was used to package personalized customer experiences to be delivered in-store."

The COVID-19 pandemic did not end in 2020 and will have effects throughout 2021 and the years to come. COVID-19 has revolutionized the way many industries operate, changing not only their business processes but also the products, services, and experiences they deliver. Many non-essential retailers are still closed due to strict lockdowns, which has forced retailers to shift their focus from in-store AI toward AI-driven online experiences and services. Customers also had to adapt to a new reality, and their behavior changed as a result. Shopping online is the new normal. For that reason, retailers are looking closely at use cases such as chatbots, pricing optimization, and digital product recommendations to sustain customer engagement and secure revenues from digital channels.

The same is the case for transportation, an industry that has been heavily affected by COVID-19. With travel restricted to essential reasons only and quarantine measures widely in place, many travelers have postponed or cancelled their plans, which has had a strong impact on transportation companies' revenues. In 2020, transportation's focus shifted from AI-driven innovation to cost containment, at least until the industry recovers. For that reason, AI investments across transportation companies will grow below average this year.

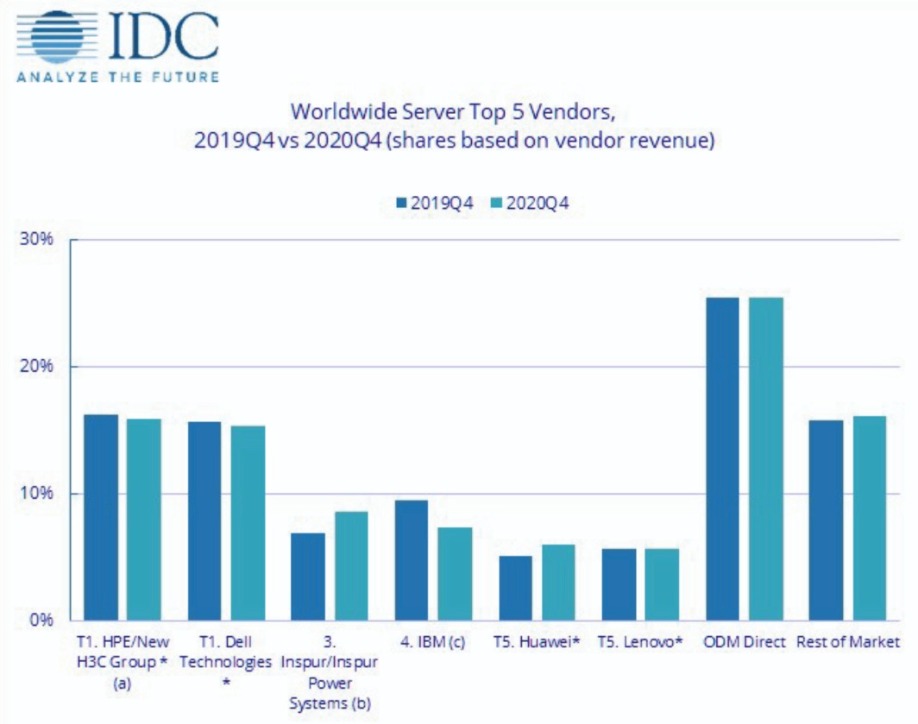

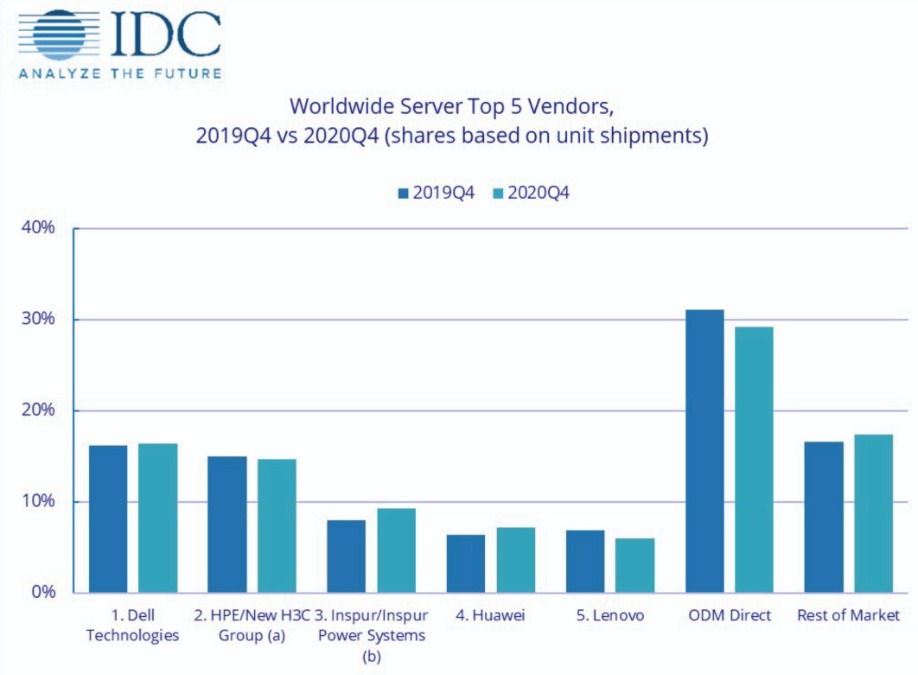

According to the International Data Corporation (IDC) Worldwide Quarterly Server Tracker, vendor revenue in the worldwide server market grew 1.5% year over year to $25.8 billion during the fourth quarter of 2020 (4Q20). Worldwide server shipments declined 3.0% year over year to nearly 3.3 million units in 4Q20.

Volume server revenue was up 3.7% to $20.4 billion, midrange server revenue also increased 8.4% to $3.3 billion, while high-end servers declined by 21.8% to $2.1 billion.
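The segment figures can be cross-checked against the headline. The three classes should add up to the $25.8 billion total, and backing out each class's year-earlier revenue from its growth rate should roughly reproduce the 1.5% overall growth. This sketch uses only the numbers quoted above. Because those are rounded, the implied growth comes out at about 1.6% rather than exactly 1.5%.

```python
# 4Q20 server revenue by class, in $ billions, with year-over-year growth in percent.
segments = {
    "volume": (20.4, 3.7),
    "midrange": (3.3, 8.4),
    "high-end": (2.1, -21.8),
}

total_4q20 = sum(revenue for revenue, _ in segments.values())
# Back out each class's 4Q19 revenue from its growth rate, then recombine.
total_4q19 = sum(revenue / (1 + growth / 100) for revenue, growth in segments.values())
implied_growth = (total_4q20 / total_4q19 - 1) * 100

print(f"4Q20 total: ${total_4q20:.1f}B")  # matches the $25.8 billion headline
print(f"Implied 4Q19 total: ${total_4q19:.1f}B")
print(f"Implied overall growth: {implied_growth:.1f}%")
```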

"Global demand for enterprise servers was relatively flat during the fourth quarter of 2020 with the strongest increase to demand coming from China (PRC)," said Paul Maguranis, senior research analyst, Infrastructure Platforms and Technologies at IDC. "From a regional perspective, server revenue within PRC grew 22.7% year over year while the rest of the world declined 4.2%. Blade systems continued to decline, down 18.1% while rack optimized servers grew 10.3% year over year. Similar to the previous quarter, servers running AMD CPUs as well as ARM-based servers continued to grow revenue, increasing 100.9% and 345.0% year over year respectively, albeit on a small but growing base."

Overall Server Market Standings, by Company

HPE/New H3C Group (a) and Dell Technologies were tied* for the top position in the worldwide server market based on 4Q20 revenues. Inspur/Inspur Power Systems (b) finished third, while IBM (c) held the fourth position. Huawei and Lenovo tied* for the fifth position in the market.

Notes:

* IDC declares a statistical tie in the worldwide server market when there is a difference of one percent or less in the share of revenues or shipments among two or more vendors.

a Due to the existing joint venture between HPE and the New H3C Group, IDC is reporting external market share on a global level for HPE and New H3C Group as "HPE/New H3C Group" starting from 2Q 2016. Per the JV agreement, Tsinghua Holdings subsidiary, Unisplendour Corporation, through a wholly-owned affiliate, purchased a 51% stake in New H3C and HPE has a 49% ownership stake in the new company.

b Due to the existing joint venture between IBM and Inspur, IDC is reporting external market share on a global level for Inspur and Inspur Power Systems as "Inspur/Inspur Power Systems" starting from 3Q 2018. The JV, Inspur Power Commercial System Co., Ltd., has total registered capital of RMB 1 billion, with Inspur investing RMB 510 million for a 51% equity stake, and IBM investing RMB 490 million for the remaining 49% equity stake.

c IBM server revenue excludes sales of Power Systems generated through Inspur Power Systems in China, starting from 3Q 2018.
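The statistical-tie rule in the first note can be expressed as a ranking function. This sketch makes two assumptions that IDC's note does not spell out: "one percent" is read as one percentage point of market share, and each vendor is compared with the vendor ranked directly above it. The vendor names and shares are hypothetical.

```python
def rank_with_ties(shares: dict[str, float], threshold: float = 1.0) -> list[tuple[int, str, float]]:
    """Rank vendors by share, giving the same position to vendors in a statistical tie.

    Assumption: a vendor ties with the vendor directly above it when their shares
    differ by no more than threshold percentage points.
    """
    ordered = sorted(shares.items(), key=lambda item: item[1], reverse=True)
    rows: list[tuple[int, str, float]] = []
    for index, (vendor, share) in enumerate(ordered):
        if rows and rows[-1][2] - share <= threshold:
            position = rows[-1][0]  # tied: reuse the position above
        else:
            position = index + 1    # otherwise skip past any tied group
        rows.append((position, vendor, share))
    return rows


hypothetical_shares = {"Vendor A": 15.2, "Vendor B": 14.6, "Vendor C": 9.0, "Vendor D": 6.1, "Vendor E": 5.8}
for position, vendor, share in rank_with_ties(hypothetical_shares):
    print(position, vendor, f"{share:.1f}%")
```

With these inputs, A and B share first place, C is third, and D and E tie for fourth, the same pattern of shared positions as in the standings above.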

In terms of server units shipped, Dell Technologies held the top position in the market, followed closely by HPE/New H3C Group (a) in the second position. Inspur/Inspur Power Systems (b), Huawei, and Lenovo finished the quarter in third, fourth, and fifth place, respectively.


Top Server Market Findings

On a geographic basis, China (PRC) was the fastest growing region, with 22.7% year-over-year revenue growth. Latin America was the only other region with revenue growth in 4Q20, up 1.5% in the quarter. Asia/Pacific (excluding Japan and China) decreased 0.3% in 4Q20, while North America declined 6.2% year over year (Canada down 23.7% and the United States down 5.5%). EMEA and Japan also declined during the quarter, by 1.1% and 6.3%, respectively.

Revenue generated from x86 servers increased 2.9% in 4Q20 to around $23.1 billion. Non-x86 server revenue declined 9.0% year over year to around $2.8 billion.

The worldwide Unified Communications & Collaboration (UC&C) market grew 29.2% year over year and 7.1% quarter over quarter to $13.1 billion in the fourth quarter of 2020 (4Q20), according to the International Data Corporation (IDC) Worldwide Quarterly Unified Communications & Collaboration QView. Revenue was also up an impressive 24.9% for the full year 2020, to $47.2 billion.

How business was conducted changed dramatically in 2020 due to COVID-19, driving companies of all sizes to consider and adopt scalable, flexible, cloud-based digital technology solutions (e.g., Unified Communications as a Service or UCaaS) as part of their overall integrated UC&C solution. Vendors and service providers also saw exponential growth in the number of video and collaboration end users in 2020. In 2021 and beyond, IDC expects worldwide UC&C growth will be driven by customers across all business size segments (small, midsize, and large) with interest especially in video, collaboration, UCaaS, mobile applications, and digital transformation (DX) projects.

Some UC&C market specifics include the following:

"In 2020, COVID-19 caused many businesses and organizations to re-think their plans for leveraging digital technologies and accelerated interest in and adoption of solutions such as team collaboration, team messaging, videoconferencing, and UCaaS, among other technologies," said Rich Costello, senior research analyst, Unified Communications and Collaboration at IDC. "In 2021, IDC expects positive growth numbers across these key UC&C segments to continue, albeit at slightly more modest rates."

From a regional perspective, the UC&C market saw positive numbers across the board in 4Q20 and for the full year 2020.

UC&C Company Highlights

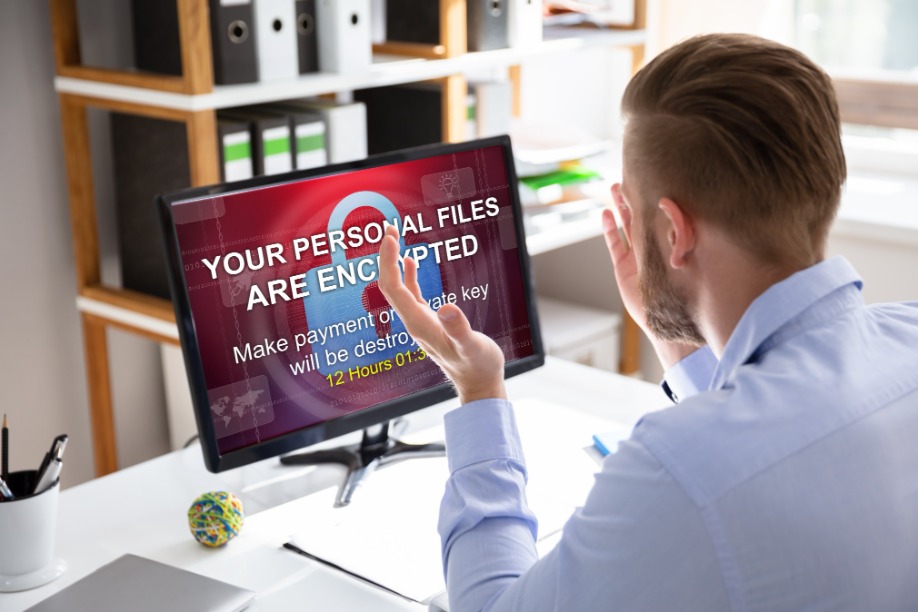

Everyone is petrified of ransomware attacks right now, and with good reason. The attacks have penetrated every sector, from academia and local government to manufacturing, healthcare and high tech.

By Bill Andrews, President and CEO of ExaGrid

The ransoms that hackers demand have increased drastically in recent years, with the most audacious at over $12 million (10 million euros). Ransomware attacks are constant: studies estimate that one is carried out every 14 seconds.

Ransomware disrupts the functionality of an organization by restricting access to data through encrypting the primary storage and then deleting the backup storage. Ransomware attacks are on the rise, becoming disruptive and potentially very costly to businesses. No matter how meticulously an organization follows best practices to protect valuable data, the attackers seem to stay one step ahead. They maliciously encrypt primary data, take control of the backup application and delete the backup data.

The challenge is how to protect the backup data from being deleted while still allowing retention points to be purged when they expire. If you retention-lock all of the data, you cannot delete expired retention points, and storage costs become untenable. If you allow retention points to be deleted to save storage, you leave the system open to hackers deleting all of the data.

How Do Hackers Get Control of Backed Up Data?

Often, hackers are able to gain control of a server on a network and then work their way into critical systems, such as primary storage, and then into the backup application and backup storage. Sometimes hackers even manage to access the backup storage through the backup application. The hackers encrypt the data in the primary storage and issue delete commands to the backup storage, so that there is no backup or retention to recover from. Once the backup storage is deleted, organizations are forced to pay the ransom, as their users cannot work.

How Can Organizations Recover from a Ransomware Attack?

One of the best practices for data protection is to implement a strong backup solution, so that an organization can recover data whenever it is deleted, overwritten, corrupted or encrypted.

However, even standard backup approaches, such as backing up data to low-cost primary storage or to deduplication appliances, are vulnerable to ransomware attacks. To eliminate this vulnerability, a backup solution needs a second, non-network-facing storage tier, so that even if hackers delete the backup, they cannot reach the long-term retention data.

If an organization is hit with a ransomware attack but their backup data remains intact, then the organization can recover the data without paying a ransom.

ExaGrid’s Unique Feature: Retention Time-Lock for Ransomware Recovery

ExaGrid has always used a two-tiered approach to its backup storage, called Tiered Backup Storage, which provides an extra layer of protection for its customers. Its appliances have a network-facing disk-cache Landing Zone Tier, where the most recent backups are stored in an undeduplicated format for fast backup and restore performance. Data is then deduplicated into a non-network-facing tier, called the repository, where it is stored for longer-term retention. The combination of a non-network-facing tier (a virtual air gap) plus delayed deletes and immutable data objects guards against the backup data being deleted or encrypted.
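The following Python sketch shows the two-tier idea in its simplest form. It has a landing zone that keeps recent backups whole and a repository that stores each unique chunk of data only once.

It is built on several assumptions made purely for illustration:

- Data is split into fixed-size chunks, and each chunk is fingerprinted with SHA-256.
- The class and method names are hypothetical.
- It does not reproduce ExaGrid's actual deduplication method.
- It does not model networking. Keeping the repository "non-network-facing" is a deployment property that lives outside the code.

```python
import hashlib


class TieredBackupStore:
    """Toy two-tier backup store (hypothetical names, illustration only).

    landing_zone: recent backups kept whole, for fast backup and restore.
    repository:   deduplicated chunks keyed by SHA-256 fingerprint.
    """

    def __init__(self, chunk_size: int = 4096):
        self.chunk_size = chunk_size
        self.landing_zone: dict[str, bytes] = {}   # backup name -> raw bytes
        self.repository: dict[str, bytes] = {}     # chunk fingerprint -> chunk bytes
        self.recipes: dict[str, list[str]] = {}    # backup name -> ordered fingerprints

    def ingest(self, name: str, data: bytes) -> None:
        # New backups land undeduplicated, so the most recent copy restores quickly.
        self.landing_zone[name] = data

    def tier_down(self, name: str) -> None:
        # Move a backup out of the landing zone into the deduplicated repository.
        data = self.landing_zone.pop(name)
        recipe = []
        for i in range(0, len(data), self.chunk_size):
            chunk = data[i:i + self.chunk_size]
            fingerprint = hashlib.sha256(chunk).hexdigest()
            self.repository.setdefault(fingerprint, chunk)  # each unique chunk is stored once
            recipe.append(fingerprint)
        self.recipes[name] = recipe

    def restore(self, name: str) -> bytes:
        # Serve from the landing zone if possible; otherwise rebuild from chunks.
        if name in self.landing_zone:
            return self.landing_zone[name]
        return b"".join(self.repository[f] for f in self.recipes[name])


store = TieredBackupStore(chunk_size=4)
store.ingest("mon", b"AAAABBBBCCCC")
store.tier_down("mon")
store.ingest("tue", b"AAAABBBBDDDD")
store.tier_down("tue")
print(len(store.repository))  # 4 unique chunks for 6 chunks of backup data
```

Because the two backups share most of their content, the second one adds only a single new chunk to the repository.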

As ExaGrid monitored the growing trend of ransomware attacks, the backup storage company worked on a new feature to further safeguard its repository tier: Retention Time-Lock for Ransomware Recovery. This feature allows for “delayed deletes”, so that any delete commands issued by a ransomware attack are not processed for a period of time determined by the ExaGrid customer, with a default of 10 days that can be extended by policy. ExaGrid released this feature in 2020, and many of its customers have already successfully recovered from ransomware attacks.
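Delayed deletes resolve the retention dilemma described above: expired retention points can still be purged, just not immediately. The Python sketch below assumes the following semantics:

- A delete request is queued rather than executed.
- It runs only once a hold period has elapsed.
- During an incident, all queued deletes can be cancelled.

The class and method names are hypothetical and are not ExaGrid's interface.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class DelayedDeleteRepository:
    """Hypothetical repository in which deletes only take effect after a hold period."""
    hold: timedelta = timedelta(days=10)            # mirrors the 10-day default described above
    objects: dict = field(default_factory=dict)     # name -> data
    pending: dict = field(default_factory=dict)     # name -> time the delete was requested

    def put(self, name, data):
        self.objects[name] = data

    def request_delete(self, name, now: datetime):
        # Record the delete but do NOT execute it; the data stays recoverable.
        if name in self.objects:
            self.pending.setdefault(name, now)

    def cancel_all_pending(self):
        # Recovery step once an attack is detected: discard every queued delete.
        self.pending.clear()

    def purge_expired(self, now: datetime):
        # Routine retention housekeeping: only deletes older than the hold period run.
        expired = [n for n, t in self.pending.items() if now - t >= self.hold]
        for n in expired:
            del self.objects[n]
            del self.pending[n]
        return expired
```

Even if attackers issue delete commands for every backup, nothing is actually purged inside the hold window. That leaves the customer time to notice the attack and cancel the queued deletes.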

Don’t pay the ransom! Implement a solution that is designed to help your organization recover.

Now more than ever, digital transformation (DX) has become a strategic priority for every organisation.

By Ash Finnegan, digital transformation officer, Conga

Navigating what is currently the most complex business landscape to date, leaders have had to rapidly transform their operations in order to deliver their services remotely. In their panic, business leaders have invested heavily in the latest technological solutions to keep their organisations running, from artificial intelligence (AI) to wider automation, such as robotic process automation (RPA), natural language processing (NLP) and machine learning (ML). Chaos has been the driver of change.

And over the past few months, whole departments have undergone the most intense and complicated transformation programmes their senior leadership teams have ever delivered, but that does not necessarily mean they have been well executed.

How to approach automation – it is a process, not a race

Even before the pandemic, most companies approached digital transformation projects all wrong. Many companies aspire to be disrupters, picking a technology and implementing it at speed. They want to adopt the latest AI programme and automate their business as fast as possible, with no real idea of how this will improve their services. These projects rarely succeed. In fact, according to Conga research, only half of all digital transformation initiatives of this kind are considered somewhat successful. In Europe the success rate is lower still: only 43 percent of these programmes succeed.

The issue lies with how businesses approach automation in the first place. Many strategies are driven by the desire to use and incorporate the latest technology as opposed to identifying clear business goals or reconsidering their current operational model and where AI would be best suited. Covid-19 has only accelerated this issue. Whilst AI and automation offer many competitive advantages, that does not necessarily mean they are easy to implement or deliver as part of a wider digital transformation programme. Too many business leaders are prioritising technology over strategy and simply do not understand what AI, or digital transformation for that matter, really is, can achieve and should drive.

Without stepping back and reviewing their current operational model, what works and what needs improving, how can leaders really understand whether AI is best suited to automate their business? Companies have essentially adopted ‘transformational’ technology without any clear business objectives in mind and without considering where this technology might be better placed to improve overall operability. If bad processes are in place that fail to deliver real business objectives or commercial outcomes, automation will only amplify the problem.

In reality, businesses need to establish clear commercial objectives, before adopting any disruptive or automation technology. It is crucial that companies first establish where they currently stand in their own digital transformation journey, by considering their own digital maturity.

The digital maturity model – how to adopt AI

‘Digital maturity’ refers to where a business currently stands in its digital transformation journey. Before adopting any new technology or starting any transformation programme, and most importantly, automating areas of the business, companies need to take a step back and reconsider their current operational model. Given the speed at which businesses transformed last year, teams may have stumbled across a number of roadblocks and bottlenecks; the ‘older’ operational model likely did not translate well in the switch to remote working. Complicated or unnecessary processes have more than likely limited the business’ performance and stunted any commercial growth.

By taking a step back and reviewing their business, leaders will have a clear picture of the current state and what the next stage of their company’s digital transformation journey should be, as opposed to simply guessing or learning through trial and error. Only by identifying areas where there are operational issues or room for improvement can businesses establish clear objectives and a strategy; then leaders can incorporate new technology such as AI and automation to streamline their services and help them achieve these goals.

Once they have assessed their current maturity, leaders can accelerate the processes that work well and can add value to their business, instead of speeding up flawed processes or legacy systems. Automating a bad process doesn’t stop it from being a bad process and, by this same logic, AI isn’t a silver bullet or a ‘quick fix’ – rushing a transformation programme won’t bolster company growth. By assessing their digital maturity and approaching automation in this methodical way, companies can improve their overall operability and streamline the processes that matter – that is, improving the customer experience, generating revenue, and managing key commercial relationships.

As companies progress along their digital transformation journeys, they will streamline processes, break down silos and enable cross-team working across departmental boundaries. The maturity model framework does not prescribe a linear change programme. It is vital that every stage, from establishing the foundation and core business logic to re-evaluating current systems, fine-tunes basic workflows so that inefficiencies are removed from the overall business process. At the next stage, leaders can consider further integration between systems, such as customer lifecycle management (CLM) or enterprise resource planning (ERP), to deliver more multi-channel management.

Only then can organisations enter the next stage of transformation. As processes are streamlined, cross-team collaboration increases and leaders will begin to break down departmental silos, establishing true data intelligence. Their operations will be seamless, with end-to-end processes that inform decision-making. Following this, leaders can consider integrating their systems further and exploring where automation and AI could be applied in other areas of the business, because those opportunities are now clear to them.

AI is only as good as the data provided

Businesses will proceed through these stages of digital maturity at different rates depending on the complexity of their structures, and how many roadblocks they encounter across the business cycle. No doubt some will have to go back several stages to tackle any issues regarding the business operability or efficiency. But by no means can leaders prioritise technology over strategy. If organisations think technology has all the answers and AI will solve all their problems, they are approaching transformation all wrong. Organisations need to optimise the business process; it needs to be frictionless from end to end before they consider adopting AI or any form of automation technology.

It is vital that businesses understand their digital maturity – where they are and where they need to get to – to create a transformation programme that actually aligns teams and departments. It’s important to ensure that systems, teams and processes are working together smoothly. After all, it is about establishing cross-functional collaboration, not fine-tuning a process for a particular department – whether sales, legal or finance – but improving the overall business process. By reviewing every stage of the maturity model for their organisation, from foundation (data transparency and business logic) to full system integration, leaders can take their business to a truly intelligent state, where they are actually using data to make decisions to allow for further business growth. Companies can create a seamless enterprise and a fully connected customer and employee experience, which automation can then accelerate even further. From here, AI can actually add real value.

Invention is the creation of technology; innovation is how you use that invention to extract value.

By Dr. Colin Parris, Senior Vice President and Chief Technical Officer, GE Digital

As industrial companies move forward with Digital Transformation, the first question they need to ask is, “How do I build and implement solutions that provide the best and most lasting value?”

Wherever you are on your path to digital transformation, the goal is the same: make your business smarter, leaner, and more profitable. We can create new technologies; we can build powerful solutions and scale them like never before. However, if there is no clear path to value, no clear return on investment, all we are building is barriers. This is especially true in the world of industrial IoT, where innovation is often seen more as evolution than revolution. The risks are tremendously high.

One of the technologies that can make a difference for businesses in this transformation journey is the Digital Twin. A Digital Twin is a software representation of a physical asset, system, or process that is designed to detect, prevent, predict, and optimize through real-time analytics. The industrial assets these twins represent cost millions to build and to fix, and if they stop working, they can cost millions in unplanned downtime. You don’t get to fail fast.

Digital Twins are used to give us early warnings of equipment failures so that companies can act early to maintain availability targets. These Twins provide continuous predictions, giving us estimates of the ongoing damage to a part, so that replacement parts are ready when we carry out maintenance. This is crucial in the industrial world, as the lead time for some of the more critical parts is six to 18 months. Imagine the business impact if you did not have that critical part when you needed it. This is why many industrial companies stockpile these expensive parts and therefore carry high inventory costs.
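The damage-estimation idea can be made concrete with the Palmgren-Miner linear damage rule, a textbook fatigue model. The rule accumulates damage as the sum of n_i / N_i over load levels i, and predicts failure when the sum reaches 1. Here n_i is the number of cycles experienced at load level i, and N_i is the number of cycles to failure at that level.

This rule is my choice for illustration; the article does not say which damage models GE's twins use. The ordering rule, the function names and the 30-day safety margin are also assumptions.

```python
def miner_damage(cycles_by_load: dict[str, int],
                 cycles_to_failure: dict[str, int]) -> float:
    """Palmgren-Miner damage sum D = sum(n_i / N_i); D >= 1 predicts failure."""
    return sum(n / cycles_to_failure[load] for load, n in cycles_by_load.items())


def should_order_part(damage: float, damage_per_day: float,
                      lead_time_days: int, margin_days: int = 30) -> bool:
    """Order the replacement once projected remaining life no longer covers
    the supplier lead time plus a safety margin (margin is an assumed policy)."""
    if damage_per_day <= 0:
        return False                       # no accumulating damage, nothing to order
    remaining_days = (1.0 - damage) / damage_per_day
    return remaining_days <= lead_time_days + margin_days


d = miner_damage({"high": 100, "low": 1000}, {"high": 1000, "low": 10000})
print(d)                                                        # 0.2
print(should_order_part(d, damage_per_day=0.002, lead_time_days=180))  # False: ~400 days left
```

With a six-month lead time, the same part would trigger an order once its accumulated damage reaches roughly 0.58 at this wear rate. This is how a continuous damage estimate can replace stockpiling.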

But what if these critical pieces of your business could help you even more? What if they could help you in a way that aided business adoption and reduced your business risk? What if they could protect themselves from outside threats? What if they could talk to each other? This is where Digital Twin technology is headed and what makes software mission critical today and tomorrow.

As we look to the future, one of the software technologies we will look to is called Humble AI. Humble AI is a Digital Twin that optimizes industrial assets under a known set of operating conditions but can relinquish control to a human engineer or safe default mode on its own when encountering unfamiliar scenarios to ensure safe, reliable operations. Humble AI affords a zone of data competency, which pinpoints where the digital twin model is most accurate and in which it is comfortable making normal operational decisions. If a situation is outside of the zone of competency, the Humble AI algorithm recognizes this and redirects the situation to a human operator or reverts to its traditional algorithm. Just as a trainee engineer might call their supervisor when faced with a new challenge, Humble AI escalates anything outside of its comfort zone.
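The Python sketch below captures the escalation pattern behind Humble AI. It approximates the "zone of competency" as the per-feature range covered by the training data, and hands control to a safe default whenever an input falls outside that range.

This bounding-box test is a deliberately crude stand-in, and it is my assumption: GE has not described its competency measure here. The class name and parameters are also hypothetical.

```python
from typing import Callable, Sequence


class HumbleController:
    """Hypothetical wrapper: use the model inside its zone of competency,
    otherwise escalate to a safe default (or, in practice, a human operator)."""

    def __init__(self, model: Callable[[Sequence[float]], float],
                 training_inputs: Sequence[Sequence[float]],
                 safe_default: float, tolerance: float = 0.0):
        self.model = model
        self.safe_default = safe_default
        # Zone of competency approximated as the per-feature min/max of the training data.
        columns = list(zip(*training_inputs))
        self.lo = [min(c) - tolerance for c in columns]
        self.hi = [max(c) + tolerance for c in columns]

    def in_zone(self, x: Sequence[float]) -> bool:
        return all(lo <= v <= hi for v, lo, hi in zip(x, self.lo, self.hi))

    def act(self, x: Sequence[float]):
        if self.in_zone(x):
            return self.model(x), "model"
        # Unfamiliar operating point: relinquish control and flag the escalation.
        return self.safe_default, "escalated"


ctrl = HumbleController(sum, [[0.0, 10.0], [1.0, 20.0]], safe_default=0.0)
print(ctrl.act([0.5, 15.0]))   # (15.5, 'model')
print(ctrl.act([2.0, 15.0]))   # (0.0, 'escalated')
```

A production system would more likely use a density or uncertainty estimate than a bounding box, but the control flow (check competency first, then act or escalate) stays the same.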

A second technology is something we call Digital Ghost, a new paradigm for securing industrial assets and critical infrastructure from both malicious cyber-attacks and naturally occurring faults in sensor equipment. Much attention is given to a company’s external firewalls, but some viruses can be accidentally spread by doing something as innocuous as using a compromised USB drive inside the network. A Digital Ghost uses a Digital Twin that understands both the physics of the asset and its operational states, given the data from its operators and environment, and can use this to detect when its sensor readings appear to be malicious. In this way it can provide early detection of security issues as they occur, as well as a path to neutralizing the fault.
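The core check behind this kind of physics-aware detection can be sketched as a residual test. The twin predicts what a sensor should read from the other measurements, and a sensor is flagged when its reading disagrees by more than a few standard deviations of normal measurement noise.

The shaft-power relation used here is ordinary physics: power (W) equals torque (N·m) times angular speed (rad/s). Everything else is an assumption for illustration, including the function name, the noise figures and the 4-sigma threshold. Digital Ghost itself is considerably more sophisticated than this.

```python
from typing import Callable, Dict, List


def flag_suspect_sensors(readings: Dict[str, float],
                         physics_model: Dict[str, Callable[[Dict[str, float]], float]],
                         noise_std: Dict[str, float],
                         k: float = 4.0) -> List[str]:
    """Return sensors whose reading departs from the physics-based prediction
    by more than k standard deviations of normal measurement noise."""
    suspects = []
    for sensor, predict in physics_model.items():
        residual = readings[sensor] - predict(readings)
        if abs(residual) > k * noise_std[sensor]:
            suspects.append(sensor)
    return suspects


# Hypothetical twin: shaft power should equal torque x angular speed.
model = {"power": lambda r: r["torque"] * r["speed"]}
noise = {"power": 500.0}

normal = {"torque": 1000.0, "speed": 100.0, "power": 100200.0}
spoofed = {"torque": 1000.0, "speed": 100.0, "power": 150000.0}
print(flag_suspect_sensors(normal, model, noise))    # []
print(flag_suspect_sensors(spoofed, model, noise))   # ['power']
```

On its own, a single residual cannot tell which of several inconsistent sensors is lying, or whether the cause is an attack or an ordinary fault. Systems like the one described combine many such physical relationships to localize the problem.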

A third interesting and forward-thinking technology is called “Twins that Talk.” This is an emerging, and exciting, technology that gives machines the ability to mimic human intuition and react to evolving situations.

We’re at a place where we’ve generated enough data and harnessed artificial intelligence applications to the point that Digital Twins can now “talk” to each other, learn from each other, and “educate” other assets. Using neural network technologies (sponsored by DARPA), we have pilots that allow machines to generate their own language for communications. A turbine or an engine can “inform” other similar machines by communicating the problems it has experienced and the symptoms associated with them. This information points field engineers to potential root causes even before the problem has been diagnosed.

Here’s an example of machines learning and communicating in the field: Imagine a number of wind turbines in the same geographical area being able to detect icing on their blades and understanding that this reduces the amount of electricity they can create. Not only would they be able to flag potential disruption, they’d be able to predict icing in the future by amalgamating contextual cues, weather patterns, and prior experience.
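GE's pilots use neural networks that let machines generate their own language, and nothing below attempts that. The Python sketch is a much simpler stand-in for the icing example.

Nearby turbines post structured observations to a shared channel. A turbine then estimates its own icing risk from the fraction of its neighbours' reports, made under similar temperature and humidity, that recorded icing.

All of the following are hypothetical choices for illustration:

- the class names;
- the two weather features;
- the similarity windows.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Observation:
    turbine_id: str
    temperature_c: float
    humidity_pct: float
    iced: bool


class FleetChannel:
    """Hypothetical shared channel through which nearby turbines pool experience."""

    def __init__(self) -> None:
        self.log: List[Observation] = []

    def report(self, obs: Observation) -> None:
        self.log.append(obs)

    def icing_risk(self, temperature_c: float, humidity_pct: float,
                   temp_window: float = 2.0, hum_window: float = 10.0) -> Optional[float]:
        # Pool every peer observation made under similar weather conditions.
        similar = [o for o in self.log
                   if abs(o.temperature_c - temperature_c) <= temp_window
                   and abs(o.humidity_pct - humidity_pct) <= hum_window]
        if not similar:
            return None              # the fleet has no shared experience of these conditions
        return sum(o.iced for o in similar) / len(similar)


channel = FleetChannel()
channel.report(Observation("T1", -2.0, 90.0, True))
channel.report(Observation("T2", -1.0, 85.0, True))
channel.report(Observation("T3", -1.5, 88.0, False))
channel.report(Observation("T4", 10.0, 50.0, False))
print(channel.icing_risk(-1.0, 88.0))   # 2 of 3 similar reports saw icing
```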

These new and innovative Digital Twin inventions help us build our capacity for business transformation. Humble AI helps create value by leveraging artificial intelligence technology. Digital Ghosts and Twins that Talk help accelerate value. All of these technologies provide insight that compels businesses to take the actions that deliver the most value.

Gurpreet Purewal, Associate Vice President, Business Development, iResearch Services, explores how organisations can overcome the challenges presented by AI in 2021.

2020 has been a year of tumultuous change, and 2021 isn’t set to slow down. Technology has been the saving grace amid this year’s waves of turbulence, and next year, as the use of technology continues to boom, we will see new systems and processes emerge and others join forces to make a bigger impact. From assistive technology to biometrics, ‘agritech’ and the rise of self-driving vehicles, tech acceleration is here to stay, with COVID-19 seemingly just the catalyst for what’s to come. Of course, the increased use of technology will also bring its challenges, from cybersecurity and white-collar crime to the need to instill trust not just in those investing in the technology but in those using it, and artificial intelligence (AI) will be at the heart of this.

1. Instilling a longer-term vision

New AI and automation innovations have brought additional challenges, such as the large volumes of data these technologies need before their value can be effectively demonstrated. For future technology to learn from the challenges already faced, a comprehensive technology backbone needs to be built, and businesses need to take stock and begin rolling out priority technologies that can be continuously deployed and developed.

Furthermore, organisations must have a longer-term vision of implementation rather than chasing immediacy and short-term gains. Ultimately, these technologies aim to create more intelligence in the business to better serve its customers. As a result, new groups of business stakeholders will be created to implement change, including technologists, business strategists, product specialists and others, to work cohesively through these challenges, but these groups will need to be carefully managed to ensure that a consistent, coherent approach and a long-term vision are achieved.

2. Overcoming the data challenge

AI and automation continue to be at the forefront of business strategy. The biggest challenge, however, is that automation is still in its infancy, in the form of bots, which have limited capabilities unless they are layered with AI and machine learning. For these to work cohesively, businesses need huge pools of data. AI can only begin to understand trends and nuances if it has this data to begin with, which is a real challenge. Only some of the largest organisations with huge data sets have been able to reap the rewards, so smaller businesses will need to watch closely and learn from the bigger players in order to overcome the data challenge.

3. Controlling compliance and governance

One of the critical challenges of increased AI adoption is technology governance. Businesses are acutely aware that these issues must be addressed but orchestrating such change can lead to huge costs, which can spiral out of control. For example, cloud governance should be high on the agenda; the cloud offers new architecture and platforms for business agility and innovation, but who has ownership once cloud infrastructures are implemented? What is added and what isn’t?

AI and automation can make a huge difference to compliance, data quality and security. The rules of the compliance game are always changing, and technology should enable companies not just to comply with ever-evolving regulatory requirements, but to leverage their data and analytics across the business to show breadth and depth of insight and knowledge of the workings of their business, inside and out.

In the past, companies struggled to get access to, and oversight of, the right data across their business to produce the vast quantities of management information (MI) needed for regulatory reporting. Now they are expected not only to collate the correct data but to analyse it efficiently and effectively for regulatory reporting and strategic business planning. The time-honoured excuses of not having enough information, or of data gaps caused by reliance on third parties, no longer apply, so organisations need to ensure they are adhering to regulatory requirements in 2021.

4. Eliminating bias

AI governance is business-critical, not just for regulatory compliance and cybersecurity, but also for diversity and equity. There are fears that AI programming will lead to natural bias based on the type of programmer and the datasets currently available and used. For example, computer scientists are predominantly male and Caucasian, which can lead to conscious or unconscious bias, and datasets can be unrepresentative, leading to discriminatory feedback loops.

Gender bias in AI programming has been a hot topic for some years and came to the fore again in 2020 within wider conversations on diversity. If only a narrow section of society is represented among AI programmers, their own biases will be programmed into systems, with huge implications for how AI interprets data, not just now but far into the future. As a result, new roles will emerge to try to prevent these biases and build a more equitable future, alongside new regulations driven by companies and specialist technology firms.
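One simple check that people in such roles often start with is to compare a model's approval rates across groups. The sketch below computes per-group selection rates and the ratio of the lowest rate to the highest.

Ratios well below 1 signal that outcomes differ by group and deserve investigation. A commonly cited rule of thumb from US employment guidance treats ratios below 0.8 (the "four-fifths rule") as a warning sign.

This is only one of many fairness metrics, and it is my illustration rather than a method the article prescribes. The function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Hashable, Iterable, Tuple


def selection_rates(decisions: Iterable[Tuple[Hashable, bool]]) -> Dict[Hashable, float]:
    """decisions: (group, approved) pairs. Returns the approval rate for each group."""
    totals: Dict[Hashable, int] = defaultdict(int)
    approved: Dict[Hashable, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions: Iterable[Tuple[Hashable, bool]]) -> float:
    """Lowest group approval rate divided by the highest; 1.0 means equal rates."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0          # nobody approved in any group: no disparity to measure
    return min(rates.values()) / highest


# Hypothetical loan decisions: group A approved 8/10, group B approved 5/10.
decisions = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
print(disparate_impact_ratio(decisions))   # 0.625, below the 0.8 rule of thumb
```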

5. Balancing humans with AI

As AI and automation come into play, workforces fear employee levels will diminish, as roles become redundant. There is also inherent suspicion of AI among consumers and certain business sectors. But this fear is over-estimated, and, according to leading academics and business leaders, unfounded. While technology can take away specific jobs, it also creates them. In responding to change and uncertainty, technology can be a force for good and source of considerable opportunity, leading to, in the longer-term, more jobs for humans with specialist skillsets.

Automation is an example of helping people to do their jobs better, speeding up business processes and taking care of the time-intensive, repetitive tasks that could be completed far quicker by using technology.

There remain just as many tasks within the workforce and the wider economy that cannot be automated, where a human being is required.

Businesses need to review and put initiatives in place to upskill and augment workforces. Reflecting this, a survey on the future of work found that 67% of businesses plan to invest in robotic process automation, 68% in machine learning and 80% in perhaps more mainstream business process management software. There is clearly an appetite to invest strongly in this technology, so organisations must work hard to achieve harmony between humans and technology to make the investment successful.

6. Putting customers first

There is growing recognition of the difference AI can make in providing better service and creating more meaningful interactions with customers. Another recent report examining empathy in AI saw 68% of survey respondents declare they trust a human more than AI to approve bank loans. Furthermore, 69% felt they were more likely to tell the truth to a human than AI, yet 48% of those surveyed see the potential for improved customer service and interactions with the use of AI technologies.

2020 has taught us about uncertainty and risk as a catalyst for digital disruption, technological innovation and more human interactions with colleagues and clients, despite face-to-face interaction no longer being an option. 2021 will see continued development across businesses to address the changing world of work and the evolving needs of customers and stakeholders in fast-moving, transitional markets. The firms that look forward, think fast and embrace agility of both technology and strategy, anticipating further challenges and opportunities through better take-up of technology, will reap the benefits.

As the fallout from the COVID-19 pandemic continues to disrupt the majority of industries, its impact on supply chains has been nothing short of seismic. As teams continue to face increasing pressure to make the right decisions at the right time - squeezing every last drop of insight and information out of vast lakes of data is now more important than ever.

By Will Dutton, Director of Manufacturing, Peak

The phrase ‘data is the new oil’ has framed a large amount of discourse in the twenty-first century. The statement, although contestable, does beg the question that should always follow: exactly what data are we talking about? These tricky times call for a new approach to data-driven decision making. There’s now a real need for supply chains to focus on the greater data ecosystem, accessing wider sources of data and utilising it to its fullest capacity. While making effective decisions based on data from current systems, or by joining up a few previously-siloed sources across the organisation is becoming easier than ever – there’s potential to go even further than this. The more data there is to play with, the more informed supply chain decisions will be. Here are four data sources that can help accelerate smart supply chain decisions:

1. Linking the supply chain with customer systems

The more systems that talk to each other, the better. Linking data from supply chain systems with customer systems, including behavioural data points, can help the business understand the pain points that arise. For instance, this could be the customer’s ERP system or even the logistics systems between the business and the customer. Taking consumer-packaged goods businesses and manufacturers as an example, with a better handle on Electronic Point of Sale (EPOS) and any other sell-out data from customers’ systems, the business can better predict what demand is going to be like and better understand customers’ stock levels in order to anticipate its own. Factoring in data such as receipts, which show which products shoppers generally buy together in the same basket, can help the business better anticipate the groups of products that are going to sell together. This closer relationship with customers’ systems allows the business to serve them better, increasing efficiency and anticipating demand fluctuations. Ultimately, it’s all about creating more competitive supply chains that are more cost-effective, with better service levels and a more accurate view of demand.
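The basket analysis mentioned above can start as simply as counting how often pairs of products appear on the same receipt. The sketch below does that and reports each pair's support, meaning the share of baskets that contain the pair.

It is a basic building block of market-basket analysis, not Peak's method. The function names and product data are illustrative.

```python
from collections import Counter
from itertools import combinations
from typing import Iterable, List, Tuple


def co_purchase_counts(baskets: Iterable[Iterable[str]]) -> Counter:
    """Count how often each unordered pair of products shares a basket."""
    pairs: Counter = Counter()
    for basket in baskets:
        # set() ignores repeated items; sorted() gives each pair one canonical order.
        for a, b in combinations(sorted(set(basket)), 2):
            pairs[(a, b)] += 1
    return pairs


def top_pairs(baskets: List[List[str]], k: int = 3) -> List[Tuple[Tuple[str, str], float]]:
    """Return the k most frequent pairs with their support (share of all baskets)."""
    counts = co_purchase_counts(baskets)
    return [(pair, n / len(baskets)) for pair, n in counts.most_common(k)]


receipts = [["bread", "butter", "jam"], ["bread", "butter"], ["milk", "bread"]]
print(top_pairs(receipts, k=1))   # [(('bread', 'butter'), 0.666...)]
```

Pairs with high support are candidates for joint demand forecasting, so a promotion on one item can be planned together with stock of the other.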

2. Supplier data for efficiency

By leveraging data points from suppliers’ systems, businesses can plan ahead in the most efficient way and execute an effective just-in-time (JIT) inventory management strategy, holding minimal assets to save cash and space whilst still fulfilling customer demand. By employing this methodology, businesses are able to understand when a supplier is going to deliver, to what location, and anticipate the arrival of goods and raw materials whilst also better understanding the working capital implications.
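To see how confirmed supplier delivery dates feed a just-in-time plan, consider a day-by-day stock projection. The sketch finds the first day on which demand can no longer be met.

It rests on these assumptions:

- Deliveries arrive at the start of the day.
- Demand per day is already forecast.
- Quantities are in whole units.

The function name is hypothetical.

```python
from typing import Dict, List, Optional


def first_stockout_day(on_hand: int, daily_demand: List[int],
                       deliveries: Dict[int, int], horizon_days: int) -> Optional[int]:
    """Project inventory forward one day at a time.

    deliveries maps a day index to the quantity a supplier has confirmed for that day.
    Returns the first day on which stock goes negative, or None if the horizon is covered.
    """
    stock = on_hand
    for day in range(horizon_days):
        stock += deliveries.get(day, 0)   # goods arrive at the start of the day
        stock -= daily_demand[day]        # then the day's demand is fulfilled
        if stock < 0:
            return day
    return None


demand = [20] * 10
print(first_stockout_day(50, demand, {3: 40}, 10))   # 2: the delivery arrives a day too late
print(first_stockout_day(50, demand, {2: 40}, 10))   # 4: on time, but the next order is due soon
```

Comparing the two runs shows why knowing exactly when a supplier will deliver matters. A one-day shift in the confirmed delivery date moves the stockout by two days, which is the margin a lean JIT strategy depends on.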

3. Using environmental data

Don’t underestimate the power hidden away in external, non-industry data sources and the impact it can have on supply chain decision making. Think about the ways a business can use, let’s say, macroeconomic data to understand what could be driving issues connected to supply and demand. Yes, we immediately think of things like GDP, or maybe even exchange rates, but there is now a plethora of data out there, some of it more industry- and company-specific, that helps predict demand or the implications for business performance. In recent months, a relevant data feed for the supply chain could be an increase in Covid-19 cases near a supplier, hampering its ability to operate as normal. Connecting these data points to technology such as Artificial Intelligence (AI) could help the business understand the impact of these incidents on supply performance and create accurate forecasts of the trends.
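The simplest version of linking an external signal to supply performance is a linear fit. One example is regressing a supplier's delivery delay on the local case count near its site.

The ordinary least squares sketch below stands in for the richer AI models the article has in mind. The variables and numbers are hypothetical. Python 3.10 and later also provide `statistics.linear_regression` for the same fit.

```python
from typing import Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares fit of y = a + b * x. Returns (intercept a, slope b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Hypothetical history: weekly local cases near a supplier vs delivery delay in days.
cases = [0, 100, 200, 300]
delay_days = [1.0, 2.0, 3.0, 4.0]
a, b = fit_line(cases, delay_days)
print(a + b * 250)   # forecast delay if cases reach 250: about 3.5 days
```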

4. Sharing data across the network with co-opetition

For many, the rule of thumb is not to give the game away to competitors, so this may seem a little pie-in-the-sky for many businesses at first. However, the benefits of sharing data with the industry and accessing competitors’ data sources can be enormous. On its own, data from companies providing similar products appears harmless, but using it in the right way to make intelligent decisions gives the business a unique view of what is happening across the rest of the market. This ultimately leads to a better understanding of wider trends and the ability to make smarter decisions. With a mutually beneficial relationship with the wider network, a business can understand supply issues and work with competitors or neutral parties to deliver better products and services to customers, creating a form of ‘co-opetition.’

Accessing the ecosystem requires digital transformation

Tapping into the greater data ecosystem and utilising it in decision making will be essential for supply chain teams to run smooth operations in disruptive climates. However, to truly unlock the potential this offers, a central AI system is needed.

In the same way that business functions have their own systems of record, the ability to power decision making with a wide range of data sources hinges on the introduction of a new, centralised enterprise AI system. AI gives teams the ability to leverage unlimited data points at scale and speed, making decisions that are both smarter and faster and supercharging those teams. At Peak, we call this Decision Intelligence (DI).

Decision Intelligence is the ability to connect the dots between data points with AI, prescribing recommendations and actions so that more informed commercial decisions can be made across the entire supply chain.

By feeding external data from the points above into both demand and supply planning systems, leveraging it with AI, enterprises can optimise that connection between these two core areas. Not only does it allow a better sense of demand with a higher degree of accuracy, but also enables a better understanding of how supplier and operations constraints are affecting supply – automatically making micro-adjustments to optimise the way demand is being fulfilled.

One of the most critical steps in any operational machine learning (ML) pipeline is artificial intelligence (AI) serving, a task usually performed by an AI serving engine.

By Yiftach Schoolman, Redis Labs Co-founder and CTO

AI serving engines evaluate and interpret data in the knowledge base, handle model deployment, and monitor performance. They represent a whole new world in which applications will be able to leverage AI technologies to improve operational efficiencies and solve significant business problems.

AI Serving Engine for Real Time: Best Practices

I have been working with Redis Labs customers to better understand their challenges in taking AI to production and how they need to architect their AI serving engines. To help, we’ve developed a list of best practices:

Fast end-to-end serving

If you are supporting real-time apps, you should ensure that adding AI functionality in your stack will have little to no effect on application performance.
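One way to verify that AI adds "little to no effect" is to compare tail latency with and without the AI call. Averages hide the slow requests that users notice, so the comparison uses a high percentile.

The sketch times both request paths and reports the difference in their 99th-percentile latency, using nearest-rank percentiles. The function names are hypothetical, and a micro-benchmark like this is only indicative; real measurements belong on production-like traffic.

```python
import math
import time
from typing import Callable, Sequence


def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile for p in (0, 100]."""
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def latency_overhead_ms(baseline: Callable[[], object], with_ai: Callable[[], object],
                        runs: int = 200, p: float = 99) -> float:
    """p-th percentile latency (ms) of the AI-enabled path minus that of the baseline path."""
    def measure(fn: Callable[[], object]) -> float:
        samples = []
        for _ in range(runs):
            start = time.perf_counter()
            fn()
            samples.append((time.perf_counter() - start) * 1000.0)
        return percentile(samples, p)
    return measure(with_ai) - measure(baseline)


# Stand-ins: a no-op request path vs one that "calls the model" (a 2 ms sleep).
print(round(latency_overhead_ms(lambda: None, lambda: time.sleep(0.002), runs=20), 1))
```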

No downtime

As every transaction potentially includes some AI processing, you need to maintain a consistent standard SLA, preferably at least five-nines (99.999%) for mission-critical applications, using proven mechanisms such as replication, data persistence, multi availability zone/rack, Active-Active geo-distribution, periodic backups, and auto-cluster recovery.
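It helps to translate an availability target into a downtime budget. The allowed downtime equals (1 - availability) multiplied by the length of the period.

Over a 365-day year, which is 525,600 minutes, five-nines leaves 0.00001 × 525,600 ≈ 5.26 minutes of downtime. Three-nines (99.9%) allows about 525.6 minutes, or roughly 8.8 hours. The helper below is a small illustrative calculation.

```python
def downtime_budget_minutes(availability: float, period_days: float = 365.0) -> float:
    """Maximum downtime (minutes) permitted by an availability target over a period."""
    return (1.0 - availability) * period_days * 24 * 60


for target in (0.999, 0.9999, 0.99999):
    print(f"{target:.3%}: {downtime_budget_minutes(target):.2f} minutes per year")
```

At five-nines, a single manual failover or a slow model redeployment can consume the whole year's budget. This is why the automated mechanisms listed above matter.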

Scalability

Driven by user behavior, many applications are built to serve peak use cases, from Black Friday to the big game. You need the flexibility to scale the AI serving engine out or in based on your expected and current loads.
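Scaling decisions usually reduce to a capacity calculation. The following is a minimal sketch, assuming each replica of the serving engine can handle a known, measured number of requests per second; the function name, headroom factor and replica limits are hypothetical defaults to tune for your own workload.

```python
import math

def replicas_needed(current_rps, expected_rps, capacity_per_replica,
                    headroom=0.3, min_replicas=2, max_replicas=50):
    """Number of serving replicas for the higher of current and expected load.

    headroom adds spare capacity (0.3 = 30%) so short spikes don't saturate replicas;
    the result is clamped so the engine never scales below min or above max.
    """
    if capacity_per_replica <= 0:
        raise ValueError("capacity_per_replica must be positive")
    load = max(current_rps, expected_rps)  # e.g. plan ahead for Black Friday
    target = math.ceil(load * (1 + headroom) / capacity_per_replica)
    return max(min_replicas, min(max_replicas, target))

print(replicas_needed(current_rps=400, expected_rps=1000, capacity_per_replica=200))  # 7
```

Taking the larger of current and expected load lets the engine scale out before a known peak instead of reacting once it has already arrived.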

Support for multiple platforms

Your AI serving engine should be able to serve deep-learning models trained on state-of-the-art platforms like TensorFlow or PyTorch. In addition, classic machine-learning models such as random forests and linear regression still provide good predictive power for many use cases and should also be supported by your AI serving engine.
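Supporting several platforms is easier when every model sits behind the same interface. This sketch assumes a hypothetical `Model` protocol with a single `predict` method; a linear-regression model is implemented directly, while `CallableModel` stands in for a wrapper around a TensorFlow or PyTorch model, which is not shown. None of these names come from a specific serving product.

```python
from typing import Callable, Dict, Protocol, Sequence

class Model(Protocol):
    def predict(self, features: Sequence[float]) -> float: ...

class LinearRegressionModel:
    """Classic model: prediction = intercept + dot(weights, features)."""

    def __init__(self, weights: Sequence[float], intercept: float = 0.0):
        self.weights = list(weights)
        self.intercept = intercept

    def predict(self, features: Sequence[float]) -> float:
        if len(features) != len(self.weights):
            raise ValueError("feature count does not match the model")
        return self.intercept + sum(w * x for w, x in zip(self.weights, features))

class CallableModel:
    """Adapter for any callable, e.g. a hypothetical wrapper around a deep-learning model."""

    def __init__(self, fn: Callable[[Sequence[float]], float]):
        self.fn = fn

    def predict(self, features: Sequence[float]) -> float:
        return float(self.fn(features))

class ServingEngine:
    """Routes requests to registered models by name, regardless of how they were trained."""

    def __init__(self):
        self._models: Dict[str, Model] = {}

    def register(self, name: str, model: Model) -> None:
        self._models[name] = model

    def predict(self, name: str, features: Sequence[float]) -> float:
        return self._models[name].predict(features)

engine = ServingEngine()
engine.register("churn-lr", LinearRegressionModel([2.0, 3.0], intercept=1.0))
print(engine.predict("churn-lr", [1.0, 1.0]))  # 6.0
```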

Easy to deploy new models

Most companies want the option to frequently update their models according to market trends or to exploit new opportunities. Updating a model should be as transparent as possible and should not affect application performance.
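A common way to keep model updates transparent is to load and test the new version alongside the old one, then switch traffic over in a single step. The `ModelSlot` class below is a hypothetical, single-process illustration of that idea: requests already running finish on the model they started with, and the previous version is kept for rollback. It relies on CPython rebinding an attribute atomically; a distributed engine would need its own coordination.

```python
import threading

class ModelSlot:
    """Holds the live model and swaps in new versions without pausing requests."""

    def __init__(self, model, version):
        self._lock = threading.Lock()  # serialises deploys and rollbacks
        self._current = (model, version)
        self._previous = None

    def predict(self, features):
        # Take one reference to the (model, version) pair; a concurrent deploy
        # rebinds the attribute, so this request finishes on the pair it started with.
        model, version = self._current
        return model(features), version

    def deploy(self, new_model, new_version, smoke_inputs=()):
        # Exercise the new model before it sees live traffic; an exception here
        # aborts the deploy and leaves the current model serving.
        for x in smoke_inputs:
            new_model(x)
        with self._lock:
            self._previous, self._current = self._current, (new_model, new_version)

    def rollback(self):
        with self._lock:
            if self._previous is None:
                raise RuntimeError("no previous version to roll back to")
            self._current, self._previous = self._previous, self._current

slot = ModelSlot(lambda x: x * 2, "v1")
slot.deploy(lambda x: x * 3, "v2", smoke_inputs=[1, 2])
print(slot.predict(3))  # (9, 'v2')
```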

Performance monitoring and retraining

Everyone wants to know how well the model they trained is performing and to be able to tune it according to how it behaves in the real world. Make sure the AI serving engine supports A/B testing so you can compare the model against a default model. The system should also provide tools to rank the AI execution of your applications.
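A/B testing needs two pieces: a stable way to split traffic and a way to compare outcomes. The sketch below assumes users are identified by a string ID and that success is a yes/no signal such as a click; the 10% candidate share and all names are illustrative.

```python
import hashlib
from collections import defaultdict

BUCKETS = 10_000  # resolution of the traffic split (0.01% steps)

def assign_variant(user_id: str, candidate_share: float = 0.1) -> str:
    """Deterministically route a fixed share of users to the candidate model.

    Hashing the user ID means the same user always sees the same variant,
    which keeps the comparison fair across repeated requests.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % BUCKETS
    return "candidate" if bucket < round(candidate_share * BUCKETS) else "default"

class ABTracker:
    """Accumulates a per-variant success metric (e.g. click-through) for comparison."""

    def __init__(self):
        self.trials = defaultdict(int)
        self.successes = defaultdict(int)

    def record(self, variant: str, success: bool) -> None:
        self.trials[variant] += 1
        self.successes[variant] += int(success)

    def rate(self, variant: str) -> float:
        n = self.trials[variant]
        return self.successes[variant] / n if n else 0.0
```

Hashing rather than random sampling keeps each user on one variant. Before promoting a candidate, compare the rates with a proper statistical significance test rather than relying on the raw difference.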

Deploy everywhere

In most cases it’s best to build and train in the cloud and then serve wherever you need to: in a vendor’s cloud, across multiple clouds, on-premises, in hybrid clouds or at the edge. The AI serving engine should be platform-agnostic, based on open source technology, and have a well-known deployment model that can run on CPUs, state-of-the-art GPUs, high- engines, and even Raspberry Pi devices.

In a lot of ways, the economy is like a road; businesses are the drivers and customers are the passengers. And the vehicle propelling those organisations forward? Their IT architecture.

By Nick Ford, Chief Technology Evangelist at Mendix

For most of the journey so far, businesses have been able to take a traditional approach to their vehicle maintenance: they would drive hard for a few years until the parts were well and truly worn down, by which point there were probably a few new upgrades available. Only then would that business pull their architecture in for a massive tune-up.

That’s when the IT department would conduct a complete overhaul, bringing the infrastructure into the present day so it could chug along for another few years. This would take a lot of time and even more money, but since it only needed to happen once or twice a decade, the system worked.

But not anymore. These days, business is high-speed, and new competitors are joining industries at ever more disruptive rates.

It’s time to consider a new, more modifiable motor: the composable enterprise.

Speeding IT up

Any organisation that wants a chance of continuing to navigate the pothole-ridden path of the post-COVID world successfully needs to become incredibly adaptable.

The pandemic may have wreaked havoc on the economy, but it has also accelerated the digitalisation of society even further. According to Mendix research, it has led 54% of businesses to accelerate their digitalisation in a bid to support newly remote workforces and keep up with customers’ rapidly changing needs.

The scope of these digital transformation projects is simply too large and time-sensitive for IT teams to build solutions from scratch as they used to, which is what makes the shift to a composable model so attractive.

If a firm can add new services and features and improve its customer experience when it needs to, one application at a time, by reusing automated, vetted functionality, it can dramatically lower its IT costs while also accelerating its time to value.

This is what it means to become a composable enterprise: having the ability to build solutions from best-of-breed components, assembled from a variety of vendors and all working seamlessly together.

Low code path to high performance

This is where low-code platforms come into the picture.

On a low-code platform, all the functionalities needed to build out a new application can be pulled from an existing library. These components can be dragged and dropped into a visual workflow, meaning app development doesn’t even require coding experience. This reduces the burden placed on IT to be responsible for all digital transformation projects (as we can all probably agree, they have more than enough on their plates).

So, businesses can rapidly assemble new business applications as if they were kitting out a custom car, one accessory at a time, with each accessory a different functionality. And even though IT doesn’t have to be directly involved in this app development by way of assembly, it still has an element of oversight, as every part is made up of tried and tested functionality that it has already approved or built.

But more than that, a low-code platform can be a bridge connecting non-technical staff and IT departments and giving them a common language. So, these teams can move closer than ever before and collaborate in new, more efficient and creative ways.

Once a business is able to inspire collaboration at every level, empowering workers to create new innovations to both make their work lives easier and improve customer experience, it will have a chance of staying on track.

That is because iterative changes lead to incredibly adaptable IT infrastructures, perfectly geared for whatever conditions the world throws at them.

From old banger to hot hatch

The age of the massive overhaul is over. The businesses holding pole position use all the information they can get their hands on to make incremental and iterative changes – without even needing to slow down.

So, if you’re a driver of the old style, struggling to switch from your static IT architecture to a dynamic, composable enterprise, try starting with a shift to low-code development. It may be the boost of nitro your organisation needs.

Here, Matt Parker, CEO of Babble, explains the inherent differences between adaptability and agility and why both are vital.

Believe it or not, we’re no longer tied to our desks, hooked up to our workspace via wires and telephone lines. The world of work is transforming, and smart businesses are making sure they’re ahead of the curve. If business is booming, your staff are working tirelessly and phones are ringing off the hook, you might not notice a problem. But, before you know it, your old-fashioned way of working will be overtaken by swift, forward-thinking competitors, and, if 2020 showed us anything, it’s that an ability to adapt is invaluable. The world around us isn’t static – it’s constantly evolving, and businesses must learn to change with the times.

Agile working connects people to technology that helps to improve effectiveness and productivity. Agility, then, is defined by the way a business proactively evolves in order to thrive. Agility allows a business to realise its full potential by implementing new systems. As technology evolves and the way we work changes, businesses can’t just stand still. Continuing a way of working because “this is how we’ve always done it” will get mediocre results and hinder a business’s progress.

One company that has embraced agile working is Aquavista, a leading leisure and mooring supplier that needed to implement a scalable cloud technology solution to keep pace with its rapid growth. To enable employees to operate efficiently and remotely, Babble deployed a fibre grade connection and a hosted telephony platform. These solutions facilitated agile working, reduced operational downtime, boosted productivity and ultimately enhanced customer experience.

Adaptability, however, is a business’s ability to respond to these changes: how prepared it is to change or evolve its practices to overcome challenges or align with environmental changes. Businesses must recognise the importance of both of these competencies. They should be able to adapt to new changes in a productive, positive way, with company culture emerging unscathed and business processes improved as a result. Maintaining business effectiveness through times of change will ensure a business can thrive, no matter what life throws at it.

Whilst “agile working” in a software development sense isn’t wholly aligned with the term used when discussing business, there are some shared principles and key takeaways. Fundamentally, viewing change positively and approaching new processes with flexibility helps businesses adapt. Improving internal processes benefits the business, its staff and ultimately customers as the service provided is more effective.

Businesses need a unified vision and passionate leaders to ensure agile adoption. Problem solving with a positive, productive mindset will ensure successful agile transformation. It’s all about understanding what currently works but being open to exploring what could work better.

Businesses must be both agile and adaptable in order to weather new storms and safeguard their future. However, achieving agility and adaptability is a fine balancing act. Constantly striving for change without a strategic rationale may cause a business to flounder. You should always aim for the final goal, with a strong end result in mind and a clear idea of how you’re going to get there.

Implementing change within a business can be a challenge. It’s true that leaders can sometimes be too close to their own operational processes to be able to clearly see better ways to work, but partnering with a specialist such as Babble offers a unique advantage.

Flexible, scalable solutions ensure that a business can adapt to new challenges or changes in circumstance. As we’ve seen from the COVID-19 pandemic, there’ll be times when businesses must adapt to new ways of working almost overnight. Think ahead by utilising an optimised network and deploying cloud-based communication tools to allow your business to work in an agile way. This results in maximum efficiency, maximum benefit to your customers and maximum business productivity.

Being agile requires business leaders to identify change and understand how it could impact the business. Seeking new opportunities and deploying the resources needed to secure them futureproofs a business and helps it succeed. It’s worth noting, though, that there’s no set formula for agile working. Businesses can’t follow a ‘how to’ guide for agility. Every business has its own way of working and its own processes, so identifying where change is needed is unique to each one. Ultimately, however, businesses that don’t adopt agile working will stagnate as proactive, forward-thinking competitors take the lead. Don’t just wait for new ways of working to become a necessity; take the initiative now.

Brian Johnson, ABB Data Center Segment Head, explores how digitalisation is shaping the evolution of green data centres, and provides a range of sustainable growth tactics for delivering cost and energy efficiencies.

It is a common misconception that data centers are responsible for consuming vast amounts of the world’s energy reserves. The truth is that data centers are leading the charge to become carbon free and are supporting members of their supply chains in achieving the same.

Even in the face of rapid digital acceleration, the vast proliferation of smart devices and an upward surge in demand for data, the data center sector remains a force for positive change on climate action and is well on course to fulfil its ‘green evolution’ masterplan.

To be more specific, data centers are estimated to consume between one and two percent of the world’s electricity according to the United States Data Center Energy Usage Report (1). A recent study confirmed that, while data centers’ computing output jumped six-fold from 2010 to 2018, their energy consumption rose only six percent (2).
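Taken together, the six-fold and six percent figures imply a much larger drop in energy per unit of computing than either number suggests on its own. The arithmetic below simply divides the two growth multiples; it uses only the figures quoted above, and the function name is illustrative.

```python
def energy_per_unit_compute(compute_multiple, energy_multiple):
    """Energy per unit of computing output, relative to the starting year."""
    return energy_multiple / compute_multiple

# Figures quoted above for 2010 to 2018: output x6, energy +6% (x1.06).
ratio = energy_per_unit_compute(compute_multiple=6.0, energy_multiple=1.06)
print(f"Energy per unit of compute in 2018: {ratio:.1%} of the 2010 level")  # 17.7%
print(f"Equivalent efficiency gain: {1 / ratio:.1f}x")  # 5.7x
```

In other words, each unit of computing output in 2018 needed roughly 18% of the energy it did in 2010, an improvement of more than 80%.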

To better envisage just how energy efficient data processing has become, consider that if the airline industry were able to demonstrate the same level of efficiency, a typical 747 passenger plane would be able to fly from New York to London on just 2.8 liters of fuel in around eight minutes.

How has the data center sector reduced total energy consumption?

Using a range of safe, smart and sustainable solutions, ABB is helping its data center customers reduce CO2 emissions by at least 100 megatons by 2030. That is equivalent to the annual emissions of 30 million combustion engine cars.

Solutions range from more energy-efficient power system innovations to moving entirely to large-scale battery energy storage systems, which ensure reliable grid connectivity during prolonged periods of power loss.

These are obviously big budget changes, with impressive yields, but there is much more that can be done to harness the power of digitalization on a smaller scale.

Making every watt count

The need for additional data from society and industry shows no sign of slowing, and it is the job of the data center to meet this increased demand without consuming significantly more energy. Unlike many industries, which wait for regulation to force change, the desire to offer a more environmentally conscious data center comes from within the industry, with big players and smaller facilities alike taking an “every watt counts” approach to operational efficiency.

By digitalizing data center operations, data center managers can respond to increased demand without incurring significant additional emissions. Running data centers at higher temperatures, switching from dampers to variable frequency drives to control fan loads, taking advantage of the improved efficiency of modern UPS systems and using virtualization to reduce the number of underutilized servers are all strong approaches to improving data center operational efficiency.
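The case for frequency drives rests on the fan affinity laws: for an ideal fan in a fixed duct system, airflow scales with speed and shaft power with the cube of speed. A damper throttles airflow while the motor keeps running at full speed, whereas a variable frequency drive slows the motor itself. The sketch below applies only the idealised cube law; real savings are lower once motor and drive losses are counted.

```python
def fan_power_fraction(speed_fraction):
    """Fan affinity law: shaft power scales with the cube of speed (ideal fan, fixed system)."""
    if not 0 <= speed_fraction <= 1:
        raise ValueError("speed_fraction must be between 0 and 1")
    return speed_fraction ** 3

for speed in (1.0, 0.9, 0.8, 0.7):
    print(f"{speed:.0%} speed -> {fan_power_fraction(speed):.0%} of full-speed power")
```

Because of the cube, even a modest speed reduction pays off: running a fan at 80% speed needs only about 51% of full-speed power under these idealised assumptions.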

To understand this further, let us explore some key sustainable growth tactics for delivering power and cost savings for green data centers:

Digitalization of data centers