Read, watch or listen to the news and, whatever one’s political views, it’s difficult to become anything other than somewhat depressed as to just how bad things seem to be. Yes, government responses to the Covid-19 pandemic have varied across the globe, with varying degrees of success, and many countries are beginning to open up after lockdown, but the coronavirus is finding new victims every day, and most, if not all, economies are going to be on life-support for the foreseeable future.

Add to this the political unrest over the ‘Black Lives Matter’ campaign, and maybe it’s time to take early retirement (if you can afford to!) and head off to a peaceful, remote location, should such a place exist…

But wait a minute, history tells us – when we choose to listen – that times of great turmoil and chaos are the perfect conditions out of which are born great new ideas and inventions. And for those for whom the glass will always be half full, the way in which individuals and businesses have reacted to the harsh realities of lockdown, has demonstrated the ingenuity and resilience of the human mind.

And technology solutions have played a key part in this. In our home lives, we’ve harnessed technology to keep in touch with family and friends, and, along the way, learnt not to take for granted such valuable contact. At work – whether this is in an actual office or from home – Teams and Zoom (other solutions are available) have played a valuable role in keeping the workforce communicating and in focus.

And I’ve received plenty of stories outlining how many other IT solutions have helped all manner of organisations continue to work during lockdown – with managed services and the cloud taking the starring roles.

Furthermore, I’m sure that many IT vendors and suppliers are busy working on more and more new ideas and solutions to help businesses both survive and thrive in the ‘new normal’ over the coming months and years.

Evolution v revolution is one for the philosophers, but I’m fairly sure that revolutions – however and whenever started – have, in the long term, and in the main, been the catalysts for major, positive changes. Right now, we’re on the verge of a major technology revolution. A revolution both in terms of what’s being developed but, equally importantly, how it will be used.

Properly shaped and harnessed, the benefits could be extraordinary for us all.

Veeam 2020 Data Protection Trends Report indicates global businesses are embracing Digital Transformation, but struggle with antiquated solutions to protect and manage their data; data protection must move to a higher state of intelligence to support transformational needs and hybrid/multi cloud adoption.

As organizations look to transform their business operations and revolutionize customer service, Digital Transformation (DX) is at the top of most CXOs’ agendas; in fact, DX spending is expected to approach $7.4 trillion between 2020 and 2023, a CAGR of 17.5%. However, according to the latest industry data released from Veeam® Software, almost half of global organizations are being hindered in their DX journeys due to unreliable, legacy technologies with 44% citing lack of IT skills or expertise as another barrier to success. Moreover, almost every company admitted to experiencing downtime, with 1 out of every 10 servers having unexpected outages each year — problems that last for hours and cost hundreds of thousands of dollars – and this points to an urgent need to modernize data protection and focus on business continuity to enable DX.

The Veeam 2020 Data Protection Trends Report surveyed more than 1,500 global enterprises to understand their approach toward data protection and management today, and how they expect to be prepared for the IT challenges they face, including reacting to demand changes and interruptions in service, as well as more aspirational goals of IT modernization and DX.

“Technology is constantly moving forward, continually changing, and transforming how we do business – especially in these current times as we’re all working in new ways. Due to DX, it’s important to always look at the ever-changing IT landscape to see where businesses stand on their solutions, challenges and goals,” said Danny Allan, CTO and SVP of Product Strategy at Veeam. “It’s great to see the global drive to embrace technology to deliver a richer user experience, however the Achilles Heel still seems to be how to protect and manage data across the hybrid cloud. Data protection must move beyond outdated legacy solutions to a higher state of intelligence and be able to anticipate needs and meet evolving demands. Based on our data, unless business leaders recognize that – and act on it – real transformation just won’t happen.”

The Criticality of Data Protection and Availability

Respondents stated that data delivered through IT has become the heart and soul of most organizations, so it should not be a surprise how important “data protection” has become within IT teams, including not just backing up and restoring data, but also extending business capabilities. However, many organizations (40%) still rely on legacy systems to protect their data without fully appreciating the negative impact this can have on their business. The vast majority (95%) of organizations suffer unexpected outages and on average, an outage lasts 117 minutes (almost two hours).

Putting this into context, organizations consider 51% of their data as ‘High Priority’ versus ‘Normal’. An hour of downtime from a High Priority application is estimated to cost $67,651, while this number is $61,642 for a Normal application. With such a balance between High Priority and Normal in percentages and impact costs, it’s clear that “all data matters” and that downtime is intolerable anywhere within today’s environments.

“Data protection is more important than ever now to help organizations continue to meet their operational IT demands while also aspiring towards DX and IT modernization. Data is now spread across data centers and clouds through file shares, shared storage, and even SaaS-based platforms. Legacy tools designed to back up on-premises file shares and applications cannot succeed in the hybrid/multi-cloud world and are costing companies time and resources while also putting their data at risk,” added Allan.

DX and the Cloud

Enterprises know they must continue to make progress with their IT modernization and DX initiatives in order to meet new industry challenges, and according to this report’s feedback, the most defining aspects of a modern data protection strategy all hinge upon utilization of various cloud-based capabilities: Organizations’ ability to do disaster recovery (DR) via a cloud service (54%), the ability to move workloads from on-premises to cloud follows (50%), and the ability to move workloads from one cloud to another (48%). Half of businesses recognize that cloud has a pivotal part to play in today’s data protection strategy; and it will most likely become even more important in the future. For a truly modernized data protection plan, a company needs a comprehensive solution that supports cloud, virtual and physical data management for any application and any data across any cloud.

Allan concluded: “By already starting to modernize their infrastructures in 2020, organizations expect to continue their DX journey and increase their cloud use. Legacy solutions were intended to protect data in physical datacenters in the past, but they’re so outdated and complex that they cost more money, time, resources and trouble than realized. Modern protection, such as Veeam’s Cloud Data Management solutions, go far beyond backup. Cloud Data Management provides a simple, flexible and reliable solution that saves costs and resources so they can be repurposed for future development. Data protection can no longer be tied to on-premises, physically-dedicated environments and companies must have flexible licensing options to easily move to a hybrid/multi cloud environment.”

Other highlights of the Veeam 2020 Data Protection Trends Report include:

AppDynamics has released a special edition of its global research study, The Agents of Transformation Report with new findings related to the COVID-19 pandemic. The report reveals the pressures technologists are experiencing as they lead their organizations’ responses to the pandemic and how their priorities are changing as the rate of digital transformation accelerates.

The COVID-19 pandemic has required enterprise organizations to shift overnight to an almost completely digital world. Technology departments across the globe are now grappling with surging demand and mounting pressures to accelerate digital transformation strategies. They must deliver high performing digital experiences to customers and all-remote workforces at a time when the survival of the organization is resting on their shoulders.

Technologists are Under Pressure

The latest research from AppDynamics reveals that technologists are experiencing pressure from every angle as they mobilize workforces to operate from home, manage increasing pressure on their networks and applications, and maintain the security of the technology stack, while also taking on new roles and responsibilities.

Of those surveyed, 66 percent of technologists confirm that the pandemic has exposed weaknesses in their digital strategies, creating an urgent need to accelerate initiatives that were once part of multi-year digital transformation programs.

Prioritizing the Customer Experience

The research highlights that the majority of organizations (95 percent) changed their technology priorities during the pandemic, and 88 percent of technologists state that the digital customer experience is now the priority.

However, technologists report not having the resources and support they need to make this priority shift, with 80 percent citing that they feel held back from delivering the optimal customer experience because of a lack of visibility and insight into the performance of their technology stack. Technologists list the following areas as the biggest challenges in delivering seamless customer experiences during the pandemic:

New Resources and Support are Needed to Rebuild

The report highlights that technologists need specific resources and support from their organizations to meet the challenges ahead. 92 percent state that having visibility and insight into the performance of the technology stack and its impact on customers and the business is the most important factor during this period.13 Other key areas technologists note needing support right now include:

Despite the enormous pressure technologists are facing, 87 percent see this period of time as an opportunity for technology professionals to show their value to the business. Already, 80 percent of technologists report that the response of their IT teams to the pandemic has positively changed the perception of IT within their organizations.

Technologists have the opportunity to step up and guide their organizations through the current crisis, but need access to data and insights to make smarter decisions, to be operating within the right internal structures and culture, and with close support from strategic technology partners.

The Urgent Need for Agents of Transformation

In 2018, The Agents of Transformation Report revealed the need for a new breed of technologists, primed to deliver transformation and business impact within their organizations. These elite technologists, known as Agents of Transformation, possess the skills and attributes needed to drive innovation.

At the time, the research concluded that in order for businesses to remain competitive, 45 percent of technologists within an organization needed to be operating as Agents of Transformation within the next ten years. In the midst of the COVID-19 pandemic, technologists report that the target must be reached not within ten years, but now.

With 83 percent of respondents stating that Agents of Transformation are critical in order for businesses to recover quickly from the COVID-19 pandemic, there is an urgent need for technologists to operate at the highest level of their profession.

“Technologists are stepping up in their organizations’ hour of need, and it is now the responsibility of business leaders to do everything possible to provide these women and men with the tools, leadership and support they require to deliver first class digital customer and employee experiences,” said Danny Winokur, general manager, AppDynamics. “It will be the skill, vision and leadership of these Agents of Transformation that will determine how businesses are able to navigate this turbulent period and emerge stronger on the other side.”

75 per cent of executives polled say employees won’t return to the office as they knew it in wake of COVID-19, 72 per cent accelerating digital transformation to accommodate long-term remote work.

The coronavirus has challenged IT organisations around the world in ways unimaginable. But new research conducted by Censuswide on behalf of Citrix Systems, shows they are rising to the occasion, accelerating their digital transformation efforts to accommodate more flexible ways of working they say employees will demand even after the pandemic subsides. Over three-quarters of more than 3,700 IT leaders in seven countries surveyed believe a majority of workers will be reluctant to return to the office as it was. And 62 per cent say they are expediting their move to the cloud as a result.

Testing their mettle

“COVID-19 has put already stressed IT teams to the test as mandates designed to slow the spread of the virus have forced them to deliver digital work environments with unprecedented speed,” said Meerah Rajavel, chief information officer, Citrix. “But as the results of our latest research reveal, they have responded and are stepping up their efforts to accommodate flexible models that will drive work for the foreseeable future.”

Flexing their muscle

Over two-thirds of the IT decision makers polled by Censuswide (69 per cent) agree** that it has been surprisingly easy for the majority of their employees to work from home, and 71 per cent say that the technology they have put in place has enabled them to collaborate just as effectively as they can face-to-face. In light of this, they are revving up their digital engines and implementing solutions to support remote work for the long haul.

A rough road

The road to widespread remote work has not been easy. Almost half (48 per cent) of the IT leaders who participated in the Censuswide survey say their organisations did not have a business continuity plan based on the vast majority of employees working from home, and 61 per cent found it challenging*** to make the switch.

In addition, the fast and widespread adoption of remote work has opened a new set of concerns and challenges with which they must deal:

Taking a toll

All of this has taken a toll on IT teams, with over three-quarters (77 per cent) reporting high stress levels. But there is a silver lining.

“This crisis has thrust IT teams – often the ‘unsung heroes’ of a business – into the limelight like never before,” Rajavel said. “They have worked to deliver secure, reliable work environments that are keeping employees engaged and productive and business moving in extremely challenging times. And in doing so, they will emerge from the crisis more strategic and valued by their organisations than they were going in.”

More than three-quarters of the IT leaders polled (77 per cent) share this sentiment and say that IT is currently seen as “business critical to their organisation,” while 55 per cent believe that their new job title should be “working from home warrior” or “corporate saviour.”

IT teams are wasting more than a quarter of their time just laying the groundwork for projects, reveals research from NTT Ltd.

Global Data Centers, a division of NTT Ltd., has released a report revealing that digital transformation projects are stalling due to a ‘hesitancy gap’, as enterprises struggle to navigate the risks and complexity associated with turning innovation from concept to reality. The report, ‘Mind the Hesitancy Gap: There’s No Time to Waste in High Stakes Digital Transformation’, shows that 26% of IT teams’ time is wasted laying the groundwork for digital transformation projects, costing UK enterprises an average of £ 2.01m per year.

Despite a continued focus on digital transformation – with respondents saying they are investing in artificial intelligence (63%), Internet of Things (58%), and software defined networks (51%) – half of UK enterprises admit their projects are always or regularly delayed, as a result of too many barriers to overcome, or too much existing pressure on IT. At the same time, 65% are heavily reliant on multi-cloud services to underpin their projects, creating added integration challenges. In fact, 35% cite the complexity of connecting the range of cloud services and other technologies together as a major barrier to the progression of their digital transformation projects.

“In a rapidly evolving landscape, enterprises can’t afford to drag their feet on digital transformation, but it’s not surprising that many are feeling hesitant,” explains John Eland, Chief Strategy Officer of Global Data Centers. “The complexity of connecting a mix of cloud services and other technologies together, adds a significant challenge to overcome before transformation projects can turn into a reality. Adding further strain, there’s the risk that even just a Proof of Concept could have a negative impact on live production systems, leading to service failures that result in reputational or revenue damage. This is understandably causing enterprises concern, resulting in many projects falling behind and innovation to stagnate.”

Other key challenges to innovation include:

Addressing these concerns, the survey showed that UK enterprises estimate they could shave an average of nine months off digital transformation projects, if they didn’t have to spend time building a partner ecosystem and cloud infrastructure and implementing connectivity. A further 94% of enterprises say their digital transformation projects could be ‘supercharged’ if they could test new concepts in a full-scale,

production-ready environment, using a multitude of cloud services, partners and connections – without the hassle of pulling everything together by themselves.

“Many of the challenges associated with a project’s viability could be overcome if enterprises could connect with partners and start-ups to test out their concept before taking the plunge,” continuous John Eland. “Having access to a full-scale, production-ready environment where an innovation project can be trailed using, connections, technologies and service providers, would undoubtedly be a significant boon for businesses. By supercharging digital transformation projects and accelerating time to market, enterprises will be well on their way to closing the hesitancy gap.”

The report is based on a survey of 200 IT decision makers in large UK enterprises with over 1,000 people, across multiple industry sectors, conducted by independent research firm Vanson Bourne on behalf of the Global Data Centers division of NTT Ltd. To download the full report, visit:https://datacenter.hello.global.ntt/hesitancy-gap

With its Technology Experience Labs NTT enables enterprises to overcome the hesitancy gap by helping them to develop efficient cloud strategies, optimise their internal IT landscape, and embrace new business models to remain competitive. Enterprises can gain access to a network of service providers, and to a broad range of tools, technologies, cloud services and connectivity. This reduces the initial investment in complex new scenarios, enables IT departments to measure the impact on IT service delivery, and helps accelerate time to market deployment – crucial to the modern ‘agile’ enterprise.

Syniverse has published the findings of research conducted by Omdia into the concerns and opportunities seen by enterprises around internet of things (IoT) implementation. Most companies see strong business drivers to adopt IoT as part of a broader digital transformation process. Improved efficiency and productivity, improved product/service quality, and improved customer retention and experience ranked highest as objectives. Implementation concerns, particularly around security, remain.

The study was conducted across 200 enterprise executives in North America and Europe in several key vertical industries already using or in the process of deploying IoT, including financial services, retail, manufacturing, health care and hospitality.

Security concerns drive IoT deployments toward private networks

With 50% of respondents identifying data, network, and device security as the biggest challenge to IoT adoption, the insights from “Connected Everything: Taking the I Out of IoT,” reinforce the trend of moving away from the public internet, as concerns such as malware and data theft and leakage increase. Indeed, when asked about how IoT security concerns are being tackled, 50% of respondents cited putting IoT devices on their own private networks, and 97% are either considering, or currently using, a private network for their IoT deployments.

Concerningly, however, 50% of enterprises report they do not have dedicated teams, processes or policies for IoT cybersecurity. The survey also revealed that IoT security is creating an adoption lag, with 86% of enterprises reporting that IoT deployments have been delayed or constrained due to security concerns. Budgets for security are significant, with 54% of respondents spending 20% or higher of their IoT budget on security. This indicates significant reliance on IoT providers for both solutions and guidance in this area, with 83% of enterprises stating the ability to provide proven integrated IoT security solutions is essential or very important to them when choosing an IoT supplier.

The research also identified integration as a top issue, following only security concerns as the biggest challenges to IoT adoption in the enterprise:

Since security ranks high on the list of enterprises’ IoT concerns, providing technical solutions, consulting services and transparent approaches to IoT security will be key if enterprises are to more fully embrace IoT.

Priorities vary by industry

Different verticals perceive different challenges with IoT, so providers need to gear their support to these areas in a more customized way.

On being asked which IoT applications are being deployed now, connected security dominates across the board at enterprises with 70% adoption. Other common IoT applications already being deployed are worker and workplace safety applications, and remote payment terminals, depending on the industry.

For example:

Expansion into other uses cases such as asset monitoring, smart building and energy management applications, and predictive maintenance are being widely considered for future deployment.

Research by Harvard Business Review Analytic Services, sponsored by ThoughtSpot, shows only 7% of organisations are fully equipping their teams with the analytic tools and resources needed to drive decision-making and autonomy.

New research from Harvard Business Review Analytic Services sponsored by ThoughtSpot, the leader in search and AI-driven analytics, illustrates a link between empowering frontline employees, like retail merchandisers, doctors, and banking relationship managers, and organisational performance.

The report, “The New Decision Makers: Equipping Frontline Workers For Success,” analyses the sentiments of 464 business executives from 16 industry sectors in North America, Europe and Asia Pacific. The study found organisations will be more successful when frontline workers are empowered to make important decisions in the moment, but the reality is few are equipping their workers with the resources to do so. Only one-fifth of organisations say they currently have a truly empowered and digitally equipped workforce while 86% agree their frontline workers need better technology and more insight to be able to make good decisions in the moment.

Frontline employees stand to benefit the most from resources like communication and collaboration tools and self-service analytics, which top the list of technologies that survey respondents expect knowledge workers to be using over the next two years. Respondents across the board strongly believe that both work quality and productivity will increase as more data-based insights are made available to such workers. Specifically, 92% say the quality of work of frontline employees in their organisation would improve in the long term, and 73% say it would improve in the short term as well.

“Now more than ever we’re seeing a need for organisations to be able to adapt, evolve and pivot at pace in order to meet changing business demands. Frontline staff have become even more critical in enabling businesses to identify efficiencies and opportunities to navigate this new world, and most simply don’t have the tools they need,” said Sudheesh Nair, CEO of ThoughtSpot. What this research shows is what we see with our own customers: those that are empowering and equipping the frontlines are not only delivering better customer experiences, but breaking down the traditional business silos and structures needed for true agility and transformation.”

Technology and telecoms outperform all other industries for frontline empowerment

The study also found huge discrepancies between different industries in terms of their capacity for empowering frontline staff - with technology/telecoms outperforming all other industries.

Based on collected performance data, the report was able to identify certain industries as ‘Leaders’ and ‘Laggards’ when it comes to empowering employees and equipping them with digital tools to make informed business decisions.

After telecoms, ‘Leaders’ were best represented by organisations within the financial services (20%) industries, whereas ‘Laggards’ were represented by organisations in manufacturing (18%), government and education (17%) and healthcare and pharmaceuticals (15%).

‘Leaders’ who are actively empowering frontline staff are already reaping the benefits, with 72 per cent saying productivity has increased at least moderately; 69 per cent saying they’ve increased both customer and employee engagement/satisfaction, and 67 per cent saying they’ve increased the quality of their products and services.

Leaders are also more likely to have seen increased revenue over the past year: 16 per cent have grown more than 30 per cent and another third have grown between 10 and 30 per cent.

The obstacles are both culture and process-led

When looking at the differences between ‘Leaders’ and ‘Laggard’ companies, it becomes clear that part of the empowerment issue lies in part within the culture of these businesses. For example, respondents at ‘Laggard’ companies were 10 times more likely than ‘Leaders’ to say their top management does not want frontline workers making decisions (42% vs. 4%).

Further, organisations are not able to realise the benefits of a fully empowered workforce without overcoming the barriers influencing the decision-making process. Currently, the largest hurdle to frontline worker empowerment is the lack of effective change management and adoption processes (44%).

Additionally, nearly one third (31%) say a lack of skills to make appropriate use of technology-enabled insight is an obstacle. While almost all respondents (91%) say that managers and supervisors play an essential role in empowering frontline workers, over half (51% overall, 66% of ‘Laggards’) say managers and supervisors are not well equipped with the right tools, training, and knowledge to empower frontline employees appropriately.

Creating change comes from the top down

For a fully empowered and productive and frontline, organisations can learn from the ‘Leaders’. ‘Leaders’ specifically report that a shift to a data-driven culture is critical to their corporate strategy (51% compared to 23% of ‘Laggards’). As a result, ‘Leaders’ are investing more heavily than others in digital capabilities that are designed to transform frontline working: They widely expect their organisations to adopt collaboration tools (55%) and self-service analytics (54%) over the next two years.

Industries across can also invest more deeply in training employees. Today, only two-thirds invest in programmes that teach workers how to use new technology tools and only half (46%) are spending on programmes that show workers how to effectively apply the insights these technologies provide.

“Quality frontline decision-making is driving short term gains, but more drastically propelling better business in the long term,” said Alex Clemente, managing director, at Harvard Business Review Analytics Services. “The shift to an empowered workforce is causing organisations to experience significant increases in productivity and customer and employee satisfaction, however, more holistically, these efforts are also generating enhanced innovation, top-line growth, market position and profitability. To enable this growth, we expect top management to first prioritise building a culture and team that supports data-based decision-making.”

Significant opportunities to improve CSR performance, increase profitability and accelerate innovation linked to improving supplier collaboration.

Ivalua has published the findings of a worldwide study of supply chain, procurement, sales and finance business leaders on the current state of collaboration in procurement. The study reveals that a lack of digitisation and a focus on costs is hindering supplier collaboration, with 77% of respondents admitting that cost dominates supplier selection for most sourcing projects, and a further 80% acknowledging they need to accelerate their digitisation of procurement.

The research, conducted by Forrester Consulting and commissioned by Ivalua, found improving collaboration was a priority for 77% of respondents. Respondents identified improving CSR (41%), increasing margins (38%) and accelerating the pace of innovation (36%) as the top opportunities to unlock greater value through supplier collaboration.

The majority of respondents say they have improved collaboration with most strategic suppliers (90%) and their broader supply base (91%) in the last two years. However, there are still a number of areas for improvement. This includes collaboration processes; there was no mechanism in place for many organisations to collaborate effectively with the broader supply base (42%), finance (41%), IT (40%), or their most strategic suppliers (39%).

The research highlighted notable geographic differences:

“Most procurement departments are taking steps to improve supplier collaboration, but supplier selection is still dominated by cost, and businesses are struggling to work strategically with suppliers,” explained Alex Saric, Smart Procurement Expert at Ivalua. “As firms increasingly rely on suppliers to help them keep up with the growing pace of innovation and to adapt during crises such as COVID-19, they need be building stronger relationships. If organisations don’t establish themselves as a customer of choice now, they will be at a significant competitive disadvantage as in-demand suppliers pick and choose who they work with and prioritize.”

According to the study, respondents believe the most effective ways to improve supplier collaboration are setting up performance goals and KPIs that place greater weight on collaboration (76%), and investing in technology that enables better information sharing with suppliers (63%). The study also surveyed suppliers, of which 88% stated that the main factor that is likely to increase their willingness to collaborate and share innovations with a specific customer was visibility into payments and their timeliness. Digitisation is essential to enabling payment efficiency, transparency and control.

“It’s clear that companies need to digitise procurement processes to enable deeper collaboration across more of their supply base. Doing so will enable them to better unlock innovation from suppliers, ensure supply continuity and better address other strategic priorities,” concluded Saric.

The data centre sector is one of the best protected in the current downturn. The fundamentals of being in lockdown at home, for both work and personal life, mean that this digital infrastructure is more crucial than ever.

Mitul Patel, Head of EMEA Data Centre Research at CBRE, comments:

All of the applications and services provided by the cloud computing companies that we are using to function effectively during mandatory lockdown are ultimately powered by data centres, and therefore the role of the data centre today is critical for all aspects of life. Data centres are the reason that we can have conference calls, shop online, stream television, play video games and connect with family and friends.

Q1 2020 was unusually quiet for cloud companies, who are responsible for 80% of take-up across the FLAP markets. While the total take-up for Q1 was therefore relatively modest, at 26MW, there is no doubting the appetite of the cloud service providers for new data centre infrastructure in Europe. The scale of their individual commitments is increasing and this is resulting in a growth in the number of single-tenant facilities. In the most active markets, London and Frankfurt, these companies are increasingly looking to lease whole buildings of over 20MW. As a result, the number of facilities leased entirely by single-tenants in 2020 is expected to reach nine, compared to four in 2019 and one in 2018.

On top of Q1 take-up, CBRE is aware of 138MW of further capacity that has already been committed to or is under option in the FLAP markets. These transactions, plus additional deals which come to market in the next three quarters, make it likely that the FLAP markets will reach 200MW for the second consecutive year.

Possibly one of the most significant challenges in the current environment involves the construction of new facilities and fit-out of data halls for new tenants. Market take-up is dependent of the development of these facilities to house new end-user requirements, so minimizing any delays to construction programmes will be important to overall success.

As things stand, most companies are continuing with construction and plan to continue to do so as long as it is legal, practically possible and safe. Developer-operators are confident that delays will be minimised and that there will therefore not be a significant disruption to existing development pipelines.

The underlying drivers in the European data centre sector are strong and the outlook is promising. Most economic indicators show that data centre asset class is weathering the effects of Covid-19 well and we are confident the sector in Europe will continue to show strong growth in the coming 12-18 months

O’Reilly has released the survey findings of its latest report, “Cloud Adoption in 2020,” that captures the latest trends in cloud, microservices, distributed application development, and other critical infrastructure and operations technologies.

The report found that more than 88% percent of respondents use cloud infrastructure in one form or another, and 45% of organisations expect to move three quarters or more of their applications to the cloud over the next twelve months.

The report surveyed 1,283 software engineers, technical leads, and decision-makers from around the globe. Of note, the report uncovered that 21% of organisations are hosting all applications in a cloud context. The report also found that while 49% of organisations are running applications in traditional, on-premises contexts, 39% use a combination of public and private cloud deployments in a hybrid-cloud alternative, and 54% use multiple cloud services.

Public cloud dominates as the most popular deployment option with a usage share greater than 61%, with AWS (67%), Azure (48%), and Google Cloud Platform (GCP) (32%) as the most used platforms. However, while Azure and GCP customers also report using AWS, the reverse is not necessarily true.

“We see a widespread embrace of cloud infrastructure across the enterprise which suggests that most organisations now equate cloud with “what’s next” for their infrastructure decisions and AWS as the front-runner when it comes to public cloud adoption,” said Mary Treseler, vice president of content strategy at O’Reilly. “For those still on the journey to cloud-based infrastructure migration, ensuring that staff is well-versed in critical skills, such as cloud security and monitoring, will be incredibly important for successful implementations. Enterprises with solid footing have the potential to leverage this infrastructure for better software development and AI-based services, which will put them at an advantage over competitors.”

Other notable findings include:

Cloud computing has surged in recent years, changing the way people communicate, manage data and do business. Billions of private and business users take advantage of the on-demand technology

However, with coronavirus lockdown rules in place and millions of people spending more time indoors and online, the global demand for cloud services has soared over the last few months. This growing need for cloud solutions has led to increased spending on hardware and software components needed to support the computing requirements.

Global cloud IT infrastructure spending is expected to grow 3.6% year-on-year, reaching $69.2bn in 2020, according to data gathered by LearnBonds.

Cloud Infrastructure Spending Has Tripled Since 2013

Today, billions of people use personal cloud storage to manage and store private data. However, its ability to provide access to computing power that would otherwise be extremely expensive has seen cloud computing technology spread widely in the business sector, also.

The examples of cloud computing use can be found practically everywhere, from social networking, messaging apps, and streaming services to business processes, office tools, chatbots, or lending platforms.

In 2013, the global spending on cloud IT infrastructure, including hardware, abstracted resources, storage, and network resources, amounted to $22.3bn, revealed Statista data and the International Data Corporation (IDC) Worldwide Quarterly Cloud IT Infrastructure Tracker. Over the next four years, this amount grew to $47.4bn. The trend has continued to grow strongly so that global cloud IT infrastructure spending has tripled since 2013.

IDC`s report revealed that public cloud infrastructure spending is expected to drive the global market growth this year.

Research director of Infrastructure Systems, Platforms, and Technologies at IDC, Kuba Stolarski said: “As enterprise IT budgets tighten through the year, the public cloud will see an increase in demand for services.

This increase will come in part from the surge of work-from-home employees using online collaboration tools, but also from workload migration to the public cloud as enterprises seek ways to save money for the current year. Once the coast is clear of the coronavirus, we expect some of this new cloud service demand to remain sticky going forward."

IDC's five-year forecast predicts cloud IT infrastructure spending will reach $100.1 bn by 2024, growing by a compound annual rate of growth of 8.4%.

Almost 80% of Businesses to Adopt Cloud Technology in 2020

The growing need for cloud solutions recently has led to a surge in the vendor revenue from cloud IT infrastructure. In 2019, they made a $63.97bn profit from selling these IT products and solutions, 30% more compared to 2017 figures. The 2019 data also showed that ODM Direct held 32% of the market. Dell Technologies and HPE follow with 16% and 11.7% market share, respectively.

According to a global CIO survey, public cloud adoption was the key draw for many companies in 2020, with 79% of polled groups planning to make heavy to moderate adoption of cloud technology. AI/machine learning ranked as the second-most wanted technology, with 72% of firms planning to use it in 2020.

Statistics show that 70% of businesses plan to adopt private cloud solutions this year, followed by 63% of companies who prefer multi-cloud solutions.

Attackers using COVID-19 pandemic to launch attacks on vulnerable organisations.

NTT has launched its 2020 Global Threat Intelligence Report (GTIR), which reveals that despite efforts by organizations to layer up their cyber defences, attackers are continuing to innovate faster than ever before and automate their attacks. Referencing the current COVID-19 pandemic, the report highlights the challenges that businesses face as cyber criminals look to gain from the global crisis and the importance of secure-by-design and cyber-resilience.

The attack data indicates that over half (55%) of all attacks in 2019 were a combination of web-application and application-specific attacks, up from 32% the year before, while 20% of attacks targeted CMS suites and more than 28% targeted technologies that support websites. For organizations that are relying more on their web presence during COVID-19, such as customer portals, retail sites, and supported web applications, they risk exposing themselves through systems and applications that cyber criminals are already targeting heavily.

Matthew Gyde, President and CEO of the Security division, NTT Ltd., says: “The current global crisis has shown us that cyber criminals will always take advantage of any situation and organizations must be ready for anything. We are already seeing an increased number of ransomware attacks on healthcare organizations and we expect this to get worse before it gets better. Now more than ever, it’s critical to pay attention to the security that enables your business; making sure you are cyber-resilient and maximizing the effectiveness of secure-by-design initiatives.”

Industry focus: Technology tops most attacked list

While attack volumes increased across all industries in the past year, the technology and government sectors were the most attacked globally. Technology became the most attacked industry for the first time, accounting for 25% of all attacks (up from 17%). Over half of attacks aimed at this sector were application-specific (31%) and DoS/DDoS (25%) attacks, as well as an increase in weaponization of IoT attacks. Government was in second position, driven largely by geo-political activity accounting for 16% of threat activity, and finance was third with 15% of all activity. Business and professional services (12%) and education (9%) completed the top five.

Mark Thomas who leads NTT Ltd.’s Global Threat intelligence Center, comments: “The technology sector experienced a 70% increase in overall attack volume. Weaponization of IoT attacks also contributed to this rise and, while no single botnet dominated activity, we saw significant volumes of both Mirai and IoTroop activity. Attacks on government organizations nearly doubled, including big jumps in both reconnaissance activity and application-specific attacks, driven by threat actors taking advantage of the increase in online local and regional services delivered to citizens.”

2020 GTIR key highlights:

The 2020 GTIR also calls last year the ‘year of enforcement’ as the number of Governance, Risk and Compliance (GRC) initiatives continues to grow, creating a more challenging global regulatory landscape. Several acts and laws now influence how organizations handle data and privacy, including the General Data Protection Regulation (GDPR), which has set a high standard for the rest of the world, and The California Consumer Privacy Act (CCPA) which recently came into effect. The report goes on to provide several recommendations to help navigate compliance complexity, including identifying acceptable risk levels, building cyber-resilience capabilities and implementing solutions that are secure-by-design into an organization’s goals.

The 2020 Mainframe Modernisation Business Barometer Report suggests disconnect between C-Suite and technical teams as main barrier to modernisation efforts.

More than two-thirds of organisations (67%) have started a legacy system modernisation project but failed to complete it. This is according to new global research from Advanced, which suggests one of the largest obstacles to a successful modernisation project is a disconnect of priorities between technical and leadership teams.

The IT services provider’s 2020 Mainframe Modernisation Business Barometer surveyed business and technology employees working for large enterprises with a minimum annual turnover of $1 billion across the UK1. The report explores trends within the mainframe market, the challenges organisations face, and the case for application modernisation among large enterprises across the globe.

With a lack of success or progress in a large number of legacy system modernisation projects, the report also suggests a disconnect between business and technical teams could be to blame. In fact, the primary motivations behind pursuing modernisation initiatives varies between those with more business-focused versus technical job roles – as does their chances of success when securing funding for these projects.

The research finds that CIOs and Heads of IT are more interested in the technology landscape of their organisation as a whole, whereas Enterprise Architects are more internally focused. 87% of Enterprise Architects cite poor performance and other technical influences as the primary reason to modernise whereas CIOs and Heads of IT cite business competitiveness (65%), security (57%) and integration (48%).

Despite the apparent business benefits of modernising, there is a significant disconnect between the desire for technical teams to pursue these projects and the level of commitment they receive from broader leadership teams:

“Collaboration is absolutely essential to successful modernisation,” said Brandon Edenfield, Managing Director of Application Modernisation at Advanced. “To achieve this, technical teams must ensure that senior leadership see the value and broader business impact of these efforts in terms they can understand. Without full commitment and buy-in from the C-Suite, these projects run the risk of complete failure.”

Looking to the Cloud

Despite differences in their motivations for modernisation, most respondents agreed on the value of the Cloud in modernisation. In fact, 100% of those surveyed reported active plans to move legacy applications to the Cloud in 2020. This push is likely driven by key benefits such as enhanced business agility and flexibility as well as the opportunity to attract new generations entering the workforce who expect advanced technologies. Above all, it offers significant cost savings for IT infrastructures. In fact, organisations could save around £24 million if they modernise the most urgent aspects of their legacy systems.

Brandon continued: “As our world grows increasingly connected, organisations need to get more serious about modernisation. If organisations are to adapt to market changes and remain competitive, organisations need to consider legacy modernisation as the foundation and starting point of their overall digital transformation efforts. Those who fail to prioritise this shift risk falling behind their competition and significant revenue loss in the future.”

Many optimistic ideas emerged in this week’s Managed Services Summit Live as MSPs and service partner channels met online to listen to their customers, other MSPs, analysts and tech experts to review the state of the business. IT Europa's John Garratt reports.

Over two hundred participants heard ex-Gartner analyst and sales evangelist Tiffani Bova outline the processes to re-engage with customers, and how *not* to do it. Her message was an enthusiastic and practical call to address existing customers as a priority in these times, not go chasing too much new business yet. “It is a crisis of prioritization; people are asking ‘what should I be focused on now?’ in the middle of all the things they could be doing. Look at what you are doing – is it going to help you or your customers stabilize, help you get back to work, or help you or your customers grow? You could answer yes to everything - but put on the hard hat and cut what is not needed.”

Also sitting in a hard-hat area was Andy Evers, head of IT at Red Carnation Hotels, whose global business is shut down. In the current uncertainty, he is having to delay project decisions, so asks partners to be patient in decision over strategies. “Last minute decisions and changes are probably going to be part of our programme, looking at the next year or so.”

He emphasized how important the relationship is with MSPs for him as a customer. In the same way that his hotel chain tries to anticipate a guest’s need, he expects MSPs to bring ideas to him with experiences from similar customers, challenges he might not be aware of yet and with facilities which can be dialled up and down when needed.

“This kind of dialogue is the piece that is missing – it separates those on the goodies list from the others.”

Among the MSPs who contributed to the session over two days was Jason Fry of PAV IT Services. Delivering IT services in a more agile way is essential, compared with the previously more planned approach is of benefit. “There are advantages in planning and we have always delivered IT projects in that way, but the position is different now. It is difficult to plan for a future now. Anything which means an MSP can working in a more agile way is a big advantage. Perhaps taking a less risk-averse approach to the delivery of projects. What has amazed me is just how quickly some of our customers have been able to implement change since lockdown. So much time in the past was spent trying to work out every eventuality to minimise risk but causing delays. By the time it is deployed, the technology landscape would have changed. in the last few weeks, customers have changed and evolved by learning and experimenting with some of that technology and actually the outcome has not been too bad.”

Presentations on sales messaging, technologies and educating both themselves and customers from leading experts, gave MSPs plenty of ideas to take away from the online event, which is available to download.

Sponsors of the event included Dell Technolgoies, LogMeIn IT Glue, mimecast, SolarWinds, StorageCraft, Tech Data, Trend Micro, WatchGuard, Webroot, Bitdefender, ConnectWise, liongard, e92, Sophos, Comstor, MAP360 and Skout.

Worldwide IT spending is projected to total $3.4 trillion in 2020, a decline of 8% from 2019, according to the latest forecast by Gartner, Inc. The coronavirus pandemic and effects of the global economic recession are causing CIOs to prioritize spending on technology and services that are deemed “mission-critical” over initiatives aimed at growth or transformation.

“CIOs have moved into emergency cost optimization which means that investments will be minimized and prioritized on operations that keep the business running, which will be the top priority for most organizations through 2020,” said John-David Lovelock, distinguished research vice president at Gartner. “Recovery will not follow previous patterns as the forces behind this recession will create both supply side and demand side shocks as the public health, social and commercial restrictions begin to lessen.”

All segments will experience a decline in 2020, with devices and data center systems experiencing the largest drops in spending (see Table 1.) However, as the COVID-19 pandemic continues to spur remote working, sub segments such as public cloud services (which falls into multiple categories) will be a bright spot in the forecast, growing 19% in 2020. Cloud-based telephony and messaging and cloud-based conferencing will also see high levels of spending growing 8.9% and 24.3%, respectively.

“In 2020, some longer-term cloud-based transformational projects may be put on hiatus, but the overall cloud spending levels Gartner was projecting for 2023 and 2024 will now be showing up as early as 2022,” said Mr. Lovelock.

Table 1. Worldwide IT Spending Forecast (Millions of U.S. Dollars)

| 2019 Spending | 2019 Growth (%) | 2020 Spending | 2020 Growth (%) |

Data Center Systems | 211,633 | 0.7 | 191,122 | -9.7 |

Enterprise Software | 458,133 | 8.8 | 426,255 | -6.9 |

Devices | 698,086 | -2.2 | 589,879 | -15.5 |

IT Services | 1,031,578 | 3.8 | 952,461 | -7.7 |

Communications Services | 1,357,432 | -1.6 | 1,296,627 | -4.5 |

Overall IT | 3,756,862 | 1.0 | 3,456,344 | -8.0 |

Source: Gartner (May 2020)

“IT spending recovery will be slow through 2020, with the hardest hit industries, such as entertainment, air transport and heavy industry, taking over three years to come back to 2019 IT spending levels,” said Mr. Lovelock. “Recovery requires a change in mindset for most organizations. There is no bouncing back. There needs to be a reset focused on moving forward.”

Organizations can differentiate themselves from competitors during the COVID-19 pandemic by leveraging nine ongoing trends, according to Gartner, Inc. These trends are broken into three categories: accelerating trends, new impacts that were not previously part of the future of work discussion, and pendulum swings – temporary shorter-term reactions.

“It is critical for business leaders to understand the large scale shifts that are changing how people work and how business gets done,” said Brian Kropp, chief of research for the Gartner HR practice. “Then, they must apply this knowledge to their specific organization so they can alter their strategy accordingly.”

Gartner recommends HR leaders evaluate the following trends to determine if and how they apply to their business:

Accelerating Trends

Increase in remote work. Gartner analysis shows that 48% of employees will likely work remotely at least part of the time after the COVID-19 pandemic, compared to 30% pre-pandemic. In fact, 74% of CFOs intend to increase remote work at their organization after the outbreak. To succeed in a world of increased remote work, hiring managers should prioritize digital dexterity and digital collaboration skills. HR must consider how the context of remote work shifts performance management, particularly how goals are set and how employees are evaluated.

Expanded data collection. Organizations have increased their passive tracking of employees as their workforce has become remote. According to an April Gartner survey, 16% of organizations are passively tracking employees via methods like virtual clocking in and out, tracking work computer usage and monitoring employee emails or internal communications/chat. In addition, employers are likely to have significantly more access to the health data of their employees. For example, employers will want to know if any of their employees have the COVID 19 antibodies.

“HR leaders should weigh-in on the ethics of using employee data, but also on how to utilize employee monitoring to understand employee engagement across an increasingly dispersed workforce,” said Mr. Kropp.

Employer as social safety net. Employers will expand their involvement in the lives of their employees by increasing mental health support, expanding health care coverage, and providing financial health support during and after the pandemic.

Organizations are also considering the question of maintaining compensation for employees, even for those who are unable to work remotely or have been furloughed/laid off throughout and after the COVID-19 crisis.

Expansion of contingent workers. A recent Gartner survey revealed that 32% of organizations are replacing full time employees with contingent workers as a cost-saving measure. Utilizing more gig workers provides employers with greater workforce management flexibility. However, HR will also need to consider how performance management systems apply to contingent workers as well as questions around whether contingent workers will be eligible for the same benefits as their full-time peers.

New Impacts

Separation of critical skills and critical roles. Leaders are redefining what critical means to include: employees in critical strategic roles, employees with critical skills and employees in critical workflow roles.

“Separating critical skills from critical roles shifts the focus to coaching employees to develop skills that potentially open multiple avenues for them, rather than focusing on preparing for a specific next role,” said Emily Rose McRae, director in the Gartner HR practice. “Organizations should reevaluate their succession plans and may expand the range of roles considered as part of the development path for a given role’s potential future successors.”

Humanization (and dehumanization) of workers. Throughout the COVID-19 pandemic, some employees have formed more connected relationships, while others have moved into roles that are increasingly task oriented. Understanding how to engage task workers in the team culture and creating a culture of inclusiveness is now even more important. To deliver on employee experience, HR will need to facilitate partnerships across the organization while working with managers to help employees navigate the different norms and expectations associated with these shifts.

Emergence of new top-tier employers. As the labor market starts to return to normalcy, candidates will want to know how companies treated their workforce during the COVID-19 outbreak. Organizations must balance the decisions made today to address immediate concerns during the pandemic with the long-term impact on their employment brand that will span the next several years.

Pendulum Swings

Shift from designing for efficiency to designing for resilience. Prior to the COVID-19 crisis, 55% of organizational redesigns were focused on streamlining roles, supply chains, and workflows to increase efficiency. Unfortunately, this path has created fragile systems, prompting organizations to prioritize resilience as equally important as efficiency.

Providing more varied, adaptive and flexible careers helps employees gain the cross-functional knowledge and training necessary for more flexible organizations. Additionally, organizations should shift from trying to “predict” (targeting a specific set of future skills) to “responding” (structuring such that you can quickly course correct with change).

Increase in organizational complexity. Across the next several months there will be an acceleration of M&A, nationalization of companies, and bigger companies becoming even bigger. This rise in complexity will create challenges for leaders as operating models evolve. HR will need to take the lead on shifting to more agile operating models and helping leaders manage greater complexity.

According to the latest release of the Worldwide Digital Transformation Spending Guide published by International Data Corporation (IDC), European ICT investments aimed at digital transformation will increase 12% year on year to reach $305 billion in 2020. This represents a significant contraction from the 18% growth forecast prior to the onset of the COVID-19 pandemic.

In a lockdown situation with most global economies in at least a mild recession, companies realize that, with the right technology in place, employees can continue working remotely, and operations can be maintained while they adjust as quickly as possible to the new normal. While digitalization investments across industries will suffer, some will be more pronounced than others, depending on the extent to which the given industry has been impacted by the crisis and on the ability of organizations to innovate.

The biggest drops in DX spending growth in 2020 compared to the pre-pandemic forecast will be seen in transportation, personal and consumer services, retail, and discrete manufacturing. The most resilient sectors are expected to be government, utilities, education, and telecom. In terms of DX spending, healthcare is expected to be the fastest growing industry in 2020 in Europe.

However, even in the most affected industries, some areas of technology investments (use cases) will keep growing, as businesses are looking to resiliency, flexibility, and efficiency to their operations. In discrete manufacturing, efficiency and productivity have never been more important, yet the skills shortage was costing European manufacturing companies billions of euros annually, even before the COVID-19 outbreak. Although they are considered expensive, autonomous technologies are more appealing to companies than the alternative of downtimes. Technologies like IoT, automation, and robotics also decrease the effect of restrictions related to health concerns (lockdowns) and increase flexibility when reconfiguring production lines. For transportation companies, the intelligent inventory planning and routing use case becomes essential when traditional transportation routes are not available, or destinations and cargo volumes change. In healthcare, use cases like remote patient monitoring are accelerating as more people with chronic diseases can be consulted and treated remotely. In education, uses cases have focused on distance education, which has triggered investments in virtualized labs and integrated planning and advising.

TOP 10 Use Cases for Advancing DX

Investments in the following use cases have accelerated the most compared to the pre-COVID 19 forecast.

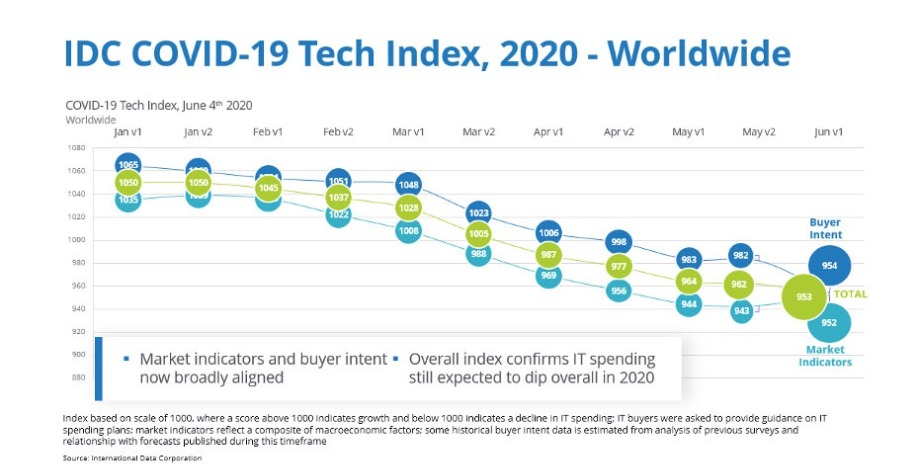

Business confidence in IT spending declines

Business confidence levels declined in the last week of May, according to the latest update to the IDC COVID-19 Tech Index. IT buyers in the US, Western Europe, and some parts of Asia/Pacific indicated that they now expect total IT spending to decline by more than previously anticipated. This is in spite of a general stabilization in other market indicators over the past month, as many countries prepare to tentatively move into a gradual recovery phase.

Confidence levels are still especially weak in the USA, where they have continued to trend down since the crisis began. US firms are a little more confident about the overall economy than two weeks ago, but conversely less confident about their own IT budgets for the year as a whole. Significant spending declines are predicted for traditional technologies including PCs, peripherals, software applications, and project-oriented IT services. Survey results also deteriorated in Europe, especially in France, Italy, and Russia.

"The survey results have diverged with businesses in most countries now expressing less confidence about their own spending than about the broader economy," said Stephen Minton, vice president with IDC's Customer Insights & Analysis group. "This could just reflect the fact that we’re still in the middle of the second quarter when the biggest spending cuts are likely to be concentrated and the scale of the short-term impact has been even worse than some firms expected. In fact, survey results are now closer in line with market indicators in terms of the scale of IT spending decline projected for 2020 as a whole."

The COVID-19 Tech Index uses a scale of 1000 to provide a directional indicator of changes in the outlook for IT spending and is updated every two weeks. The index is based partly on a global survey of enterprise IT buyers, and partly on a composite of market indicators which are calibrated with country-level analyst inputs relating to medical infection rates, social distancing, travel restrictions, public life, and government stimulus. A score above 1000 indicates that IT spending is expected to increase, while a score below 1000 points towards a likely decline.

COVID-19 Tech Index | March | April | May | June |

Buyer Intent | 1023 | 1006 | 983 | 954 |

Market Indicators | 988 | 969 | 944 | 952 |

Total Index | 1005 | 987 | 964 | 953 |

Source: IDC COVID-19 Tech Index, 2020 | ||||

Notes: Index score above 1000 indicates expected increase in IT spending for 2020 overall; score below 1000 indicates a projected decline.

Business confidence had been improving steadily in Asia/Pacific, but the picture is more complex according to the latest poll. IT spending is still projected to increase in China, where the economy has moved more quickly from a containment to recovery mode, but confidence levels plunged in India and even declined in Korea where moves to ease lockdown measures appeared to trigger some instances of infections increasing again.

"The recovery phase in the second half of the year will be unpredictable and there may be volatility in survey results as businesses react to anxiety around a possible second wave of infections," said Minton. "The first phase of this crisis was uniformly bad for everyone, but the next chapter will be very localized and dependent on a delicate balance of medical and economic factors. Not surprisingly, the latest survey results support a sense that IT buyers remain cautious in this type of economic climate and continue to be vigilant in the near term. Moreover, we have now entered a phase where some companies are being forced into bankruptcy or employee reductions, which will have inevitable implications for tech spending in the second half of the year."

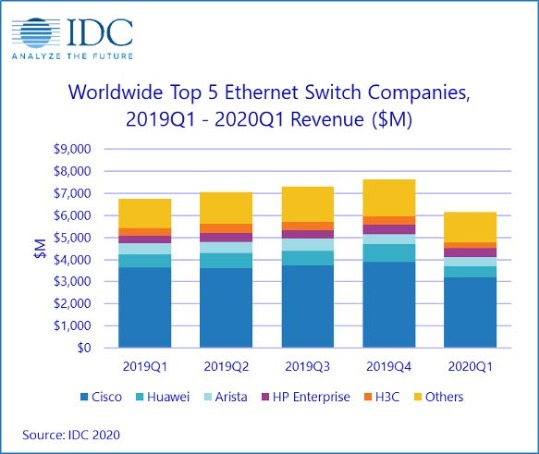

Worldwide ethernet switch and router markets decline

The Worldwide Ethernet switch market recorded $6.16 billion in revenue in the first quarter of 2020 (1Q20), a decrease of 8.9% year over year. Meanwhile, the worldwide total enterprise and service provider (SP) router market revenues fell 16.4% year over year in 1Q20 to $2.99 billion. These market results were published today in the International Data Corporation (IDC) Quarterly Ethernet Switch Tracker and IDC Quarterly Router Tracker.

A variety of factors led to the weakening of these markets across the globe. Despite the Ethernet switch market growing 2.3% for the full year 2019, in the fourth quarter of 2019 the market fell 2.2%, indicating that the market's slow end to 2019 spilled into 1Q20. The first quarter of 2020 was also impacted by the COVID-19 pandemic that swept across the world throughout the quarter, specifically disrupting supply chains while weakening customer demand. IDC expects the negative impact of COVID-19 on both the Ethernet switch and router markets to continue in the second quarter of 2020.

Ethernet Switch Market Highlights

From a geographic perspective, the 1Q20 Ethernet switch market saw mixed results across the globe. The Middle East and Africa (MEA) region declined 2.9%, with Saudi Arabia's market off 12.7% year over year. Across Europe, growth was uneven. The Central and Eastern Europe (CEE) region grew 3.7% compared to a year earlier, with Russia up 23.2% year over year. The Western Europe market fell 12.9% with Germany losing 10.6% year over year and the United Kingdom off 18.4% from a year earlier.

The Asia/Pacific region (excluding Japan and China) (APeJC) declined 7.0% year over year, with India off 11.3% and Australia declining 16.2% year over year. The People's Republic of China was down 14.6% year over year while Japan was relatively flat with a 0.1% increase compared to the first quarter of 2019. The Latin American market dropped 9.7% year over year, while the market in the United States declined 8.7% annually and Canada fell 7.2% year over year.

"Weakness in the Ethernet switch and routing markets at the end of 2019 continued into the first quarter of 2020, which was exacerbated by the onset of the novel coronavirus and subsequent lockdown of economies around the globe as 1Q20 progressed," said Brad Casemore, research vice president, Datacenter and Multicloud Networks. "Meanwhile, diverging trends intensify in the Ethernet switch market as hyperscale and cloud providers invest in greater datacenter scale and higher bandwidths while enterprises continue to refresh campus networks with lower-speed switch ports."

Growth in the Ethernet switch market continues to be driven by the highest speed switching platforms. For example, port shipments for 100Gb switches rose 52.1% year over year to $5.5 million. 100Gb revenues grew 9.9% year over year in 1Q20 to $1.28 billion, making up 20.8% of the market's revenue. 25Gb switches also saw impressive growth, with revenues increasing 58.9% to $482.9 million and port shipments growing 67.7% year over year. Lower-speed campus switches, a more mature part of the market, saw mixed results in port shipments and revenue, as average selling prices (ASPs) in this segment continue to decline. 10Gb port shipments rose 3.9% year over year, but revenue declined 21.4%. 10Gb switches make up 24.8% of the market's total revenue. 1Gb switches declined 3.8% year over year in port shipments and fell 11.9% in revenue.1Gb now accounts for 39.0% of the total Ethernet switch market's revenue.

Router Market Highlights

The worldwide enterprise and service provider router market decreased 16.4% on a year-over-year basis in 1Q20, with the major service provider segment, which accounts for 75.1% of revenues, decreasing 16.8% and the enterprise segment of the market declining 15.3%. From a regional perspective, the combined service provider and enterprise router market fell 29.4% in APeJC. Japan's total market grew 8.4% year over year and the People's Republic of China market was off 10.9%. Revenues in Western Europe declined 23.5% year over year, while the combined enterprise and service provider market in the CEE region declined 17.1%. The MEA region was down 5.2%. In the U.S., the enterprise segment was down 12.2%, while service provider revenues fell 19.6%, giving the combined markets a year-over-year drop of 17.5%. The Latin America market declined 15.9% on an annualized basis.

Vendor Highlights

Cisco finished 1Q20 with a 12.0% year-over-year decline in overall Ethernet switch revenues and market share of 51.9%. In the hotly contested 25Gb/100Gb segment, Cisco is the market leader with 39.8% of the market's revenue. Cisco's combined service provider and enterprise router revenue declined 28.1% year over year, with enterprise router revenue decreasing 18.7% and SP revenues falling 33.8%. Cisco's combined SP and enterprise router market share stands at 36.3%.

Huawei's Ethernet switch revenue declined 14.0% on an annualized basis, giving the company a market share of 8.4%. The company's combined SP and enterprise router revenue declined 2.1% year over year, resulting in a market share of 28.8%.

Arista Networks saw its Ethernet switch revenues decline 18.7% in 1Q20, bringing its share to 6.7% of the total market. 100Gb revenues account for 73.7% of the company's total revenue, reflecting the company's longstanding presence at hyperscalers and other cloud providers.

HPE's Ethernet switch revenue increased 6.7% year over year, giving the company a market share of 6.2%, up from 5.3% market share the same quarter a year earlier.

Juniper's Ethernet switch revenue rose 14.1% year over year in 1Q20, bringing its market share to 3.3%. Juniper saw a 16.0% decline in combined enterprise and SP router sales, bringing its market share in the router market to 10.5%.

"Results in the Ethernet switch and routing markets were fairly consistent across geographies, indicating the widespread impact the Coronavirus has had on both markets across the globe," notes Petr Jirovsky, research director, IDC Networking Trackers. "At the end of 2019, a variety of factors contributed to economic uncertainty, including tensions related to the US-China trade war, Brexit being finalized, and then in the first quarter of 2020, the novel coronavirus. While results from some countries have been mixed in 1Q20, the dynamics across these markets will continue to evolve significantly in the near term. IDC will monitor these trends and their impact across all geographies and segments of these markets."

A new forecast from International Data Corporation (IDC) shows worldwide revenue from the Open Compute Project (OCP) infrastructure market will reach $33.8 billion in 2024. While year-over-year growth will slow slightly in 2020 due to capital preservation strategies during the Covid-19 situation, the market for OCP compute and storage infrastructure is forecast to see a compound annual growth rate (CAGR) of 16.6% over the 2020-2024 forecast period. The forecast assumes a rapid recovery for this market in 2021-22, fueled by a robust economic recovery worldwide. However, a prolonged crisis and economic uncertainty could delay the market's recovery well past 2021, although investments in and by cloud service providers may dominate infrastructure investments when they occur during this period.

The Open Compute Project (OCP) was established in 2011 as an open community focused on designing hardware technology to efficiently support the growing demands on compute infrastructure at midsize to large datacenter operators (hyperscalers). Open Compute standards are now supported by market leaders such as Facebook, Microsoft, LinkedIn, Alibaba, Baidu, Tencent, and Rackspace. The OCP encourages infrastructure suppliers, hyperscalers, cloud service providers, systems integrators, and components vendors to collaborate on new innovations, specifications, and initiatives across several key categories.

"By opening and sharing the innovations and designs within the community, IDC believes that OCP will be one of the most important indicators of datacenter infrastructure innovation and development, especially among hyperscalers and cloud service providers," said Sebastian Lagana, research manager, Infrastructure Systems, Platforms and Technologies.

"IDC projects massive growth in the amount of data generated, transmitted, and stored worldwide. Much of this data will flow in and out of the cloud and get stored in hyperscale cloud data centers, thereby driving demand for infrastructure," said Kuba Stolarski, research director, Infrastructure Systems, Platforms and Technologies at IDC.

OCP Technology by Segment

The compute segment will remain the primary driver of overall OCP infrastructure revenue for the coming five years, accounting for roughly 83% of the total market. Despite being a much larger portion of the market, compute will achieve a CAGR comparable to storage through 2024. The compute and storage segments are defined below:

· Compute: Spend on computing platforms (i.e., servers including accelerators and interconnects) is estimated to grow at a five-year CAGR of 16.2% and reach $28.07 billion. This segment includes externally attached accelerator trays also known as JBOGs (GPUs) and JBOFs (FPGAs).

· Storage: Spend on storage (i.e., server-based platforms and externally attached platforms and systems) is estimated to grow at a five-year CAGR of 18.5% and reach $5.73 billion. Externally attached platforms are also known as JBOFs (Flash) and JBODs (HDDs) and do not contain a controller. Externally attached systems are built using storage controllers.

OCP Technology Segment Data, 2019 and 2024 (Revenues are in US$ billions) | |||||

Market | 2019 Revenue | 2019 Market Share | 2024 Revenue | 2024 Market Share | 2019-2024 CAGR |

Compute | $13.25 | 83.1% | $28.07 | 83.0% | 16.2% |

Storage | $2.45 | 16.9% | $5.73 | 17.0% | 18.5% |

Total | $15.70 | 100.0% | $33.80 | 100.0% | 16.6% |

Source: IDC Worldwide Open Compute Project Compute and Storage Infrastructure Market Forecast, May 2020. | |||||

Buyer Type Highlights

OCP Board Member purchases make up the bulk of the OCP infrastructure market and are poised to grow at a 14.8% CAGR through 2024, when they will account for just under 75% of the total market. Conversely, non-member spending is projected to increase at a five-year CAGR of 23.2% and will expand its share of the OCP infrastructure market by just over 600 basis points during that period.

In terms of end user type, hyperscalers account for the largest portion of the market at just over 78% in 2019 and are projected to expand spending at a 14.2% CAGR through 2024, although this will result in erosion of total share. Conversely non-hyperscaler purchases will expand 23.8% over the same period, increasing this group's market share by approximately 650 basis points from 2019 to 2024.

DW talks with Nebulon, a storage start-up coming out of stealth mode, with the focus very much on the company’s disruptive technology: Cloud-Defined Storage.

Can you tell us a little bit about Nebulon and why you got started?

As with any startup, we recognized a need in the industry.

When our CEO, Siamak Nazari, was at 3PAR and later at HPE, he regularly travelled to see enterprise customers. During this time, he would often get asked by the CIOs why customers have to buy premium-priced external arrays when they have literally thousands of servers sitting in their data centers, each with a couple dozen slots for disk drives.

Siamak’s answer was always the same. In order for server-based storage to have the same enterprise capabilities as external arrays, they would need to have some piece of SW on each server and customers would hate that. That software would need to be different for different OS/hypervisors, would have to be installed and maintained on each server, and their firmware updates and reboots would take storage offline. That was not the answer these enterprises wanted to hear.

But Siamak knew that something needed to be done. After a number of conversations with other IT leaders, and some timely industry developments, our Siamak was able to arrive at a more holistic solution to the CIO challenge shaped in large part by the following themes that came up over and over again:

1.) Simple API-centric, cloud-based management

2.) An alternative to the 3-tier architecture

3.) A modern approach for critical workloads on-prem

So that is why Nebulon was created and what we decided to do. Provide a simple solution for CIO’s to leverage their on-prem, server-based storage to handle mission-critical workloads with the flexibility and ease of cloud-managed storage.

There are storage alternatives on the market. Why can’t this problem be solved with existing architectures?

The long and short of it? Arrays are expensive & Hyperconverged Infrastructure and Software-defined Storage solutions are restrictive.