Following on from my comment in the February issue of DW, I thought it worthwhile to spend a few moments contemplating just how much, or how little, companies (as well as individuals) can learn from the history books. Thankfully, major health scares such as the current coronavirus pandemic are few and far between. Nevertheless, they do occur. And organisations might do well, once we have weathered the storm, to spend a little time thinking about the future in terms of the human aspect of their business. Yes, AI and robots are, we hope, immune to illness, but humans are still a vital part of any organisation, and large-scale illness is something that might just need a bit more planning for in the future, at least as part of a business continuity/disaster recovery plan, where the emphasis tends to be on the machines, not the humans.

Financially, there are always peaks and troughs in economies – both individual ones and the global one. It is difficult to predict these – otherwise economists would nearly always be in agreement, something which they rarely are! So, it’s difficult to plan for them. However, the current situation might just encourage more businesses to keep more money in reserve, ‘just in case’. And, just maybe, shareholders will not be quite so insistent on seemingly endless, ever-increasing dividends if these leave the company coffers bare when recession or unforeseen events threaten to derail the economic landscape.

More generally, students of history can tell anyone who cares to listen that history really does have a habit of repeating itself. Unfortunately, there is much uncertainty as to when these repetitions might occur, but recur they do.

For example, there will always be threats to national, regional and global security. The 20th century was rather volatile on this front. And when the Communist empire finally collapsed and everyone imagined eternal, global peace, along came the terrorist threat from the Middle East. More recently, cyber warfare would appear to be a growing concern.

Add to this environmental stresses – political commentators love to say that many of the wars of the future will be fought over water (access to it/the lack of it in certain locations); other aspects of climate change – denied by the most powerful leader in the world (!); the odd health scare; the ongoing migration crises in parts of the world, and various other events, all of which have been witnessed previously in some shape or form (yes, even climate change has had a major impact over the years), and the future is not so certain.

Of course, planning for a series of ‘might happens’ is not that easy, but maybe, just maybe, the present global crisis will serve as a timely warning for countries, corporations and individual citizens that we can no longer just keep our heads down, stick our fingers firmly in our ears (having washed them well beforehand!) and carry on regardless.

Integration challenges continue to slow down digital transformation initiatives, as many organisations don’t have a company-wide API strategy.

MuleSoft has published the findings of the 2020 Connectivity Benchmark Report on the state of IT and digital transformation. The global survey of 800 IT decision makers (ITDMs) in organisations with at least 1,000 employees revealed that more than half (59%) of organisations were unable to deliver on all their projects last year, creating a backlog for 2020.

“Businesses are under increasing pressure to digitally transform as a failure to do so risks negatively impacting revenues. However, traditional IT operating models are broken, forcing organisations to find new ways of accelerating project delivery and reusing integrations,” said Ian Fairclough, vice-president of services, EMEA at MuleSoft. “For organisations with hundreds of different applications, integration remains a significant challenge towards them being able to deliver the connected experiences customers strive for. This report highlights that while ITDMs recognise the value of APIs, many organisations have yet to fully realise their potential.”

Businesses are failing to capture the full value of APIs without a company-wide strategy: The vast majority of organisations understand the power and potential of APIs – 80% currently use public or private APIs. However, very few have developed a strategic approach to enabling API usage across the business.

API reuse is directly linked to speed of innovation, operational efficiency and revenue

By establishing API strategies that promote self-service and reuse, businesses put themselves in a much better position to innovate at speed, increase productivity and open up new revenue streams. However, only 42% of ITDMs (30% in the UK) are leveraging APIs to increase the efficiency of their application development processes.

“CIOs are uniquely positioned to lead their organization’s digital transformation. IT leaders across all industries must be focused on creating a new operating model that accelerates the speed of delivery, increases organizational agility and delivers innovation at scale,” said Simon Parmett, CEO, MuleSoft. “With an API-led approach, CIOs can change the clock speed of their business and emerge as the steward of a composable enterprise to democratize access to existing assets and new capabilities.”

Study highlights lack of investment in areas most likely to have a positive CX impact.

Organizations must tear down the walls between IT and the business and make more customer-centric investments if they are to improve customer experience (CX), according to new research from Pegasystems Inc. Pega’s 2020 Global Customer Experience Study was conducted by research firm Savanta among decision makers spanning 12 countries and seven different industries.

The study highlighted four key pain points businesses must address if they are to provide a better, more personalized customer experience, successfully differentiate themselves from the competition, and improve customer satisfaction and loyalty:

Infoblox has published new research that exposes the significant threat posed by shadow IoT devices on enterprise networks. The report, titled “What’s Lurking in the Shadows 2020” surveyed 2,650 IT professionals across the US, UK, Germany, Spain, the Netherlands and UAE to understand the state of shadow IoT in modern enterprises.

Shadow IoT devices are defined as IoT devices or sensors in active use within an organisation without IT’s knowledge. Shadow IoT devices can be any number of connected technologies, including laptops, mobile phones, tablets, fitness trackers or smart home gadgets like voice assistants, that are managed outside of the IT department. The survey found that over the past 12 months, a staggering 80% of IT professionals discovered shadow IoT devices connected to their network, and nearly one third (29%) found more than 20.

The report revealed that, in addition to the devices deployed by the IT team, organisations around the world have countless personal devices, such as personal laptops, mobile phones and fitness trackers, connecting to their network. The majority of enterprises (78%) have more than 1,000 devices connected to their corporate networks.

“The amount of shadow IoT devices lurking on networks has reached pandemic proportions, and IT leaders need to act now before the security of their business is seriously compromised,” said Malcolm Murphy, Technical Director, EMEA at Infoblox.

“Personal IoT devices are easily discoverable by cybercriminals, presenting a weak entry point into the network and posing a serious security risk to the organisation,” he added. “Without a full view of the security policies of the devices connected to their network, IT teams are fighting a losing battle to keep the ever-expanding network perimeter safe.”

Nearly nine in ten IT leaders (89%) were particularly concerned about shadow IoT devices connected to remote or branch locations of the business.

“As workforces evolve to include more remote and branch offices and enterprises continue to go through digital transformations, organisations need to focus on protecting their cloud-hosted services the same way in which they do at their main offices,” the report recommends. “If not, enterprise IT teams will be left in the dark and unable to have visibility over what’s lurking on their networks.”

To manage the security threat posed by shadow IoT devices to the network, 89% of organisations have introduced a security policy for personal IoT devices. While most respondents believe these policies to be effective, levels of confidence vary significantly across regions. For example, 58% of IT professionals in the Netherlands feel their security policy for personal IoT devices is very effective, compared to just over a third (34%) of respondents in Spain.

“Whilst it’s great to see many organisations have IoT security policies in place, there’s no point in implementing policies for their own sake if you don’t know what’s really happening on your network,” Murphy said. “Gaining full visibility into connected devices, whether on premises or while roaming, as well as using intelligent systems to detect anomalous and potentially malicious communications to and from the network, can help security teams detect and stop cybercriminals in their tracks.”

Global research highlights how organisations are capitalising on emerging technologies to enhance finance and operations for competitive advantage.

Organisations that are adopting Artificial Intelligence (AI) and other emerging technologies in finance and operations are growing their annual profits 80 percent faster, according to a new study from Enterprise Strategy Group and Oracle. The global study, Emerging Technologies: The competitive edge for finance and operations, surveyed 700 finance and operations leaders across 13 countries and found that emerging technologies – AI, Internet of Things (IoT), blockchain and digital assistants – have passed the adoption tipping point, exceed expectations, and create significant competitive advantage for organisations.

AI and Digital Assistants Improve Accuracy and Efficiency in Finance

Organisations embracing emerging technologies in finance are experiencing benefits far greater than anticipated:

AI, IoT, and Blockchain Drive More Responsive Supply Chains

AI, IoT, blockchain and digital assistants are helping organisations improve accuracy, speed and insight in operations and the supply chain, and respondents expect additional business value as blockchain applications become mainstream.

Emerging Tech Equals Competitive Advantage

The vast majority of organisations have now adopted emerging technologies and early adopters (those using three or more solutions) are seeing the greatest benefit and are more likely to outperform competitors.

A study from Juniper Research has found that total operator-billed revenue from 5G IoT connections will reach $8 billion by 2024, rising from $525 million in 2020. This represents growth of over 1,400% between 2020 and 2024. The report identified the automotive and smart cities sectors as key growth drivers for 5G adoption over the next five years.
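As a quick sanity check on the headline figure, the short Python sketch below recomputes total growth from the two quoted revenue numbers and, as an illustrative extra, the compound annual growth rate it implies. Treating 2020 to 2024 as four yearly compounding periods is our assumption, not Juniper's, and the function names are our own.

```python
# Revenue figures quoted from the Juniper Research forecast, in USD millions.
REVENUE_2020 = 525.0
REVENUE_2024 = 8_000.0


def percent_growth(start: float, end: float) -> float:
    """Total growth from start to end, as a percentage of start."""
    return (end - start) / start * 100.0


def cagr_percent(start: float, end: float, years: int) -> float:
    """Compound annual growth rate over `years` periods, as a percentage."""
    return ((end / start) ** (1.0 / years) - 1.0) * 100.0


total_growth = percent_growth(REVENUE_2020, REVENUE_2024)
# Assumption: 2020 -> 2024 is counted as four yearly compounding periods.
annual_growth = cagr_percent(REVENUE_2020, REVENUE_2024, years=4)

print(f"Total growth 2020-2024: {total_growth:.0f}%")
print(f"Implied annual growth (CAGR): {annual_growth:.1f}%")
```

Total growth works out at roughly 1,424%, consistent with "over 1,400%", and the implied annual rate is just under 100%, i.e. revenue roughly doubling each year under that four-period assumption.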

The new research, 5G Networks in IoT: Sector Analysis & Impact Assessment 2020-2025, anticipates that revenue from these 5G connections will be a highly sought-after new revenue stream for operators. It argues that 5G IoT connections should be considered new connections that will not cannibalise existing operator connectivity revenue from current IoT technologies.


5G Value-Added Services Key for Operators

The research urges operators to develop comprehensive value-added services to enable IoT service users to manage their 5G connections. It forecasts that tools, such as network slicing and multi-access edge computing solutions, will be essential to attract the highest spending IoT service users to use their 5G networks.

The research also forecasts that value-added services will become crucial in the automotive and smart cities sectors, and that these sectors will account for 70% of all 5G IoT connections by 2025, with higher-than-anticipated levels of device support for 5G radios accelerating the uptake of 5G connectivity.

Maximising the New Revenue Stream

The research claimed that the initial high pricing of 5G connectivity in the IoT sector would dissuade all but high-value IoT users. It urged operators to roll out holistic network management tools that complement the enhanced capabilities of 5G networks for IoT.

Research author Andrew Knighton remarked: “Management tools for the newly-enabled services are key for users managing large scale deployments. We believe that only 5% of 5G connections will be attributable to the IoT, but as these are newly enabled connections, operators must view them as essential to securing a return on their 5G investment.”

New data from Extreme Networks reveals that IoT is barreling toward the enterprise, but organisations remain highly vulnerable to IoT-based attacks.

The report, which surveyed 540 IT professionals across industries in North America, Europe, and Asia Pacific, found that 84% of organisations have IoT devices on their corporate networks. Of those organisations, 70% are aware of successful or attempted hacks, yet more than half do not use security measures beyond default passwords. The results underscore the vulnerabilities that emerge from a fast-expanding attack surface and enterprises’ uncertainty in how to best defend themselves against breaches.

Key findings include:

● Organisations lack confidence in their network security: 9 out of 10 IT professionals are not confident that their network is secured against attacks or breaches. Financial services IT professionals are the most concerned about security, with 89% saying they are not confident their networks are secured against breaches. This is followed by the healthcare industry (88% not confident), then professional services (86% not confident). Education and government are the least concerned of any sector about their network being a target for attack.

● Enterprises underestimate insider threats: 55% of IT professionals believe the main risk of breaches comes mostly from outside the organisation and over 70% believe they have complete visibility into the devices on the network. But according to Verizon’s 2019 Data Breach Investigations Report, insider and privilege misuse was the top security incident pattern of 2019, and among the top three causes of breaches.

● Europe’s IoT adoption catches up to North America: 83% of organisations in EMEA are now deploying IoT, compared to 85% in North America, which was an early adopter. Greater IoT adoption across geographies is quickly expanding the attack surface.

● Skills shortage and implementation complexity cause NAC deployments to fail: network access control (NAC) is critical to protect networks from vulnerable IoT devices, yet a third of all NAC deployment projects fail. The top reasons for unsuccessful NAC implementations are a lack of qualified IT personnel (37%), too much maintenance cost/effort (29%), and implementation complexity (19%).

● SaaS-based networking adoption grows: 72% of IT professionals want network access to be controlled from the cloud. This validates 650 Group’s prediction that more than half of enterprise network systems will transition to SaaS-based networking by the end of 2023.

Extreme provides the multi-layered security capabilities that modern enterprises demand, from the wireless and IoT edge to the data centre, including role-based access control, network segmentation and isolation, application telemetry, real-time monitoring of IoT, and compliance automation. As the mass migration of business systems to the cloud continues, cloud security becomes ever more important. Extreme’s security solutions extend in lockstep with the expanding network perimeter to harden enterprises’ environments both on-premises and in the cloud.

Ivanti has released survey results highlighting the challenges faced by IT organisations when it comes to aligning their IT Service Management (ITSM) and IT Asset Management (ITAM) processes.

According to data collected by Ivanti, 43% of IT professionals surveyed reported using spreadsheets as one of their resources to track IT assets. Further, 56% currently do not manage the entire asset lifecycle, which risks leaving redundant assets in place, potentially creates risk, and leads to unnecessary and costly purchases.

Findings from the survey demonstrate the need for greater alignment between ITSM and ITAM processes, especially when looking at the time spent reconciling inventory/assets. Nearly a quarter of respondents reported spending hours per week on this process. Another time-intensive process for IT professionals is dealing with out-of-warranty/out-of-support-policy assets, with 28% of respondents reporting they spend hours per week supporting these assets. And, when asked how often they have spent time fixing devices that were later identified to still be under warranty, 50% of respondents said “sometimes.”

“It’s clear that there is room for improvement when it comes to managing assets,” said Ian Aitchison, senior product director at Ivanti. “While IT teams are starting to better track their assets, collaborating with other teams and understanding the benefits of combining asset and service processes, time and money advantages are being lost as they don't have the data they need to effectively manage and optimise their assets and services.”

When asked about the benefits of combining ITSM and ITAM processes, the survey found that respondents expected to see:

Aitchison added, “When ITSM and ITAM are closely aligned and integrated, many activities and processes become more automated, efficient and responsive, with fewer things ‘falling through the cracks.’ IT teams gain more insight and are better positioned to move from reactive activities to more proactive practices, delivering higher service levels and efficiency at lower costs.”

According to the survey, IT professionals are also somewhat dissatisfied with the available asset information, or data, they have access to within their organisations. When asked if they incorporate and monitor purchase data, contracts and/or warranty data as part of their IT asset management program, 39% of respondents said yes, 42% said partially and 19% said no. This means more than 60% of IT professionals are missing key information in their IT asset management program to effectively manage their IT assets from cradle to grave.

Over 80% of C-Suite, business and IT decision makers believe that digital performance is critical to business growth.

Riverbed has published the results of a survey which found that over 70% of C-Suite decision makers believe business innovation and staff retention are driven by improved visibility into network and application performance. The survey findings unveiled in the ‘Rethink Possible: Visibility and Network Performance – The Pillars of Business Success’ report cites a positive correlation between effective technology and company health, a finding that is supported by the fact that 86% of C-Suite and IT decision makers (ITDMs), and 87% of business decision makers (BDMs), reveal that digital performance is increasingly critical to business growth.

Slow running systems and a lack of visibility

Three-quarters of all groups surveyed have felt frustrated by current network performance, with IT infrastructure being given as the key reason for the poor performance. This problem is exacerbated by a lack of full and consistent visibility, as one in three ITDMs report that they don’t have full visibility over applications, their networks and/or end-users. Furthermore, almost half of the C-Suite (49%) believe that slow running and outdated technology is directly impacting the growth of their businesses. This highlights the importance of implementing new technology to drive productivity, creativity and innovation.

Business priorities and challenges

Business priorities and challenges are evolving, so the technology businesses rely on needs to advance too. Over three quarters (76%) of ITDMs acknowledge that their IT infrastructure will have to change dramatically in the next five years to support new ways of doing business. Meanwhile, 95% of all respondents recognise that innovation and breaking boundaries is crucial to business success, emphasising the need to embrace new technology. This may be why 80% of BDMs and 77% of the C-Suite say they believe that investing in next-generation technology is vital.

Commenting on the research findings, Colette Kitterhing, Senior Director UK&I at Riverbed Technology, said: “All leaders recognise that visibility, optimised network infrastructure, and the ability to accelerate cloud and SaaS performance are the next frontier in business success. Given this, it’s time the C-Suite, business decision leaders, and IT decision makers come together to invest in the right solutions, prioritise measurement, and place visibility and infrastructure at the top of their agenda.”

Kitterhing continued: “At Riverbed, we are helping businesses evolve their capabilities, whether it’s by monitoring networks and the apps that run on them, application performance, or updating the network infrastructure that underpins their digital services. We fundamentally believe this is the key to supporting our customers’ staff and their ambitions, driving innovation, creativity, and helping them Rethink what’s possible.”

Rethink Possible: Evolving the Digital Experience

With over 80% of all leaders (82% C-Suite, 84% BDMs and ITDMs) agreeing that businesses must rethink what’s possible to survive in today’s unpredictable world, technology needs to be an enabler in the process. Riverbed’s portfolio of next-generation solutions is giving customers across the globe the visibility, acceleration, optimization and connectivity that maximizes performance and visibility for networks and applications.

FireMon has released its 2020 State of Hybrid Cloud Security Report, the annual benchmark of the cloud security landscape.

The latest report finds that as enterprises rapidly transition to the public cloud, complexity is increasing while visibility and team sizes are decreasing and security budgets remain flat, together posing a significant obstacle to preventing data breaches. The 2020 State of Hybrid Cloud Security Report features insights from more than 500 respondents, including 14 percent from the executive ranks, detailing cloud security initiatives in the era of digital transformation.

“As companies around the world undergo digital transformations and migrate to the cloud, they need better visibility to reduce network complexity and strengthen security postures,” said Tim Woods, VP of Technology Alliances for FireMon. “It is shocking to see the lack of automation being used across the cloud security landscape, especially in light of the escalating risk around misconfigurations as enterprises cut security resources. The new State of Hybrid Cloud Security Report shows that enterprises are most concerned about these challenges, and we know that adaptive and automated security tools would be a welcomed solution for their needs.”

Cloud Adoption, Complexity and Scale Create Security Challenges

While enterprises increasingly transition to public and hybrid cloud environments, their network complexity continues to grow and create security risks. Meanwhile, they are losing the visibility needed to protect their cloud systems, which was the biggest concern cited by 18 percent of C-suite respondents, who now also require more vendors and enforcement points for effective security.

The 2020 FireMon State of Hybrid Cloud Security Report found that:

Business acceleration outpaces effective security implementations:

Need for Security Outpaces the Need for Data Protection: Nearly 60 percent believed their cloud deployments had surpassed their ability to secure the networks in a timely manner. This number was virtually unchanged from 2019, showing no improvement against a key industry progress indicator.

Cloud Complexity Increases: The number of vendors and enforcement points needed to secure cloud networks is also increasing; 78.2 percent of respondents are using two or more enforcement points. This number increased substantially from the 59 percent using more than two enforcement points last year. Meanwhile, almost half are using two or more public cloud platforms, which further increases complexity and decreases visibility.

Shrinking Budgets and Security Teams Create Gaps in Protection

Despite increasing cyberthreats and ongoing data breaches, respondents also reported a substantial reduction in their security budgets and teams from 2019. These shrinking resources are creating gaps in public cloud and hybrid infrastructure security.

Budget Reductions Increase Risk: There was a 20.7-percentage-point increase from 2019 in the share of enterprises spending less than 25 percent of their security budget on cloud security; 78.2 percent now do so (vs. 57.5 percent in 2019). Meanwhile, 44.8 percent of this group spent less than 10 percent of their total security budget on the cloud.
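The 20.7 figure is simply the gap between two shares (78.2% and 57.5%), so it is a change in percentage points; measured relative to the 2019 base, the increase is closer to 36%. A minimal Python sketch of the distinction, using only the figures quoted above (variable names are illustrative):

```python
# Share of enterprises spending under 25% of their security budget on cloud security.
SHARE_2019 = 57.5  # percent of respondents, 2019 report
SHARE_2020 = 78.2  # percent of respondents, 2020 report

# The difference between two percentages is a change in percentage points.
point_change = SHARE_2020 - SHARE_2019
# The same change expressed relative to the 2019 starting level.
relative_change = point_change / SHARE_2019 * 100.0

print(f"Change: {point_change:.1f} percentage points")
print(f"Relative increase: {relative_change:.1f}%")
```

Both numbers describe the same shift; which one to quote depends on whether the reader cares about the absolute share of respondents or the growth of that group.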

Security Teams are Understaffed and Overworked: While the cyberattack surface and the potential for data breaches continue to expand in the cloud, many organisations trimmed the size of their security teams – 69.5 percent had security teams of fewer than 10 people (compared with 52 percent in 2019). The number of 5-person security teams also nearly doubled, with 45.2 percent having this smaller team size versus 28.5 percent in 2019.

Lack of Automation and Third-Party Integration Fuels Misconfigurations

While cloud misconfigurations due to human-introduced errors remain the top vulnerability for data breaches, an alarming 65.4 percent of respondents are still using manual processes to manage their hybrid cloud environments. Other key automation findings included:

Misconfigurations are Biggest Security Threat: Almost a third of respondents said that misconfigurations and human-introduced errors are the biggest threat to their hybrid cloud environment. However, 73.5 percent of this group are still using manual processes to manage the security of their hybrid environments.

Better Third-Party Security Tools Integration Needed: The lack of automation and integration across disparate tools is also making it harder for resource-strapped security teams to secure hybrid environments. As such, 24.5 percent of respondents said that not having a “centralised or global view of information from their security tools” was their biggest challenge to managing multiple network security tools across their hybrid cloud.

By harnessing automated network security tools, robust API structures and public cloud integrations, enterprises can gain real-time control across all environments to minimise the challenges created by manual processes, increasing complexity and reduced visibility. Automation is also the antidote to shrinking security budgets and teams, enabling organisations to direct resources and personnel to their most strategic uses.

SolarWinds has released the findings of its “2019 Trends in Managed Services” report, showing the health of managed services and the forces shaping the market across North America and Europe.

SolarWinds MSP partners with The 2112 Group to create the annual report, drawing on data from its benchmarking tool, MSP Pulse. The tool provides deep insight into potential growth opportunities for IT providers, where they can excel and where they can improve, and can also help guide how they invest in their business. The comprehensive reports show comparisons on revenue, profit, service selection, sales capacity, customer engagement, and growth potential.

“Our research has revealed that 97% of respondents offer some form of managed services—which is a clear demonstration of the managed services transformation remaking the technology channel. It’s safe to say the state of managed services is strong,” said John Pagliuca, president of SolarWinds MSP. “This latest research also underscored the major growth opportunities we’re already looking to help our MSPs leverage including automation, security, and operations. With our customer success-focused initiatives like MSP Pulse, the MSP Institute, and Empower MSP, we’re supporting partners with much more than technology. We’re working to fuel the success of our MSPs in 2020 and beyond. The future looks bright for those who want to expand their comfort zone, and we’re here to help them do just that.”

The results show that MSPs are comfortable with the security basics such as antivirus, backup, and firewalls:

For solutions in North America, respondents were most comfortable offering and using antivirus (89%), firewalls (83%), data backup and recovery (81%), and endpoint security (75%).

In Europe, respondents were most comfortable offering and using antivirus (93%), data backup and recovery (82%), firewalls (82%), and antispam (80%) as solutions.

However, MSPs have room for growth in some of the more advanced security solutions and offerings, as respondents were less confident in the more complex controls:

European and North American respondents selected the same top three solutions they were least comfortable with: biometrics, cloud access security brokers (CASBs), and digital rights management.

On the services end, European respondents were least comfortable with penetration testing (52%), auditing and compliance management (39%), and risk assessments (36%). North American respondents were least comfortable with auditing and compliance management (53%), penetration testing (47%), and security system architecture (39%).

The results also showed MSPs are starting to increase the use of automation to handle day-to-day tasks such as patch management and backup, but don’t feel comfortable with automating the advanced tasks:

Automation saves North American MSPs an average of 15.6 full-time employee hours per week and in Europe, an average of 23 full-time employee hours per week.

In North America, respondents were least comfortable automating client onboarding (44%) with identity and access management in second place (38%). In Europe, respondents were least comfortable automating SQL query workflows (57%) but shared their discomfort with automating identity and access management with their North American counterparts.

In the 2018 report, MSPs were losing customers almost as fast as they gained them, but 2019 showed an improvement in customer retention. Two of the top three reasons for losing customers stemmed from the customer rather than the service provider:

In North America, respondents pick up an average of four clients every three months while losing one in the same period.

In Europe, respondents pick up an average of three clients every two months while losing more than one on average in the same period.

Top causes of customer loss included the customer going out of business (26% in North America and 16% in Europe) or being fired by the partner (25% in North America and 16% in Europe).

Another key finding showed core business operations are still amongst the biggest growth obstacles for MSPs including lack of resources/time, sales, and marketing:

North American MSPs claimed their biggest obstacles toward growth were sales (43%), lack of resources/time (42%), and marketing (26%).

European MSPs claimed their biggest obstacles toward growth were lack of resources/time (41%), sales (32%), and security threats (32%).

Many providers claim a lack of sales and marketing expertise is a major anchor on their growth; hiring specialized staff or training existing employees could help close the gap. The SolarWinds MSP Institute, a learning portal within the SolarWinds Customer Success Center, gives SolarWinds partners access to a variety of courses, including sales and marketing tracks, to help coach users in the management and growth of their business, and provides go-to-market strategies and advice to help educate on these issues.

“We’re so pleased to be partnering with SolarWinds MSP again on the annual Trends in Managed Services report,” stated Larry Walsh, CEO of The 2112 Group. “It gives MSPs an opportunity to see where the IT channel currently stands, where the opportunities are for growth and where they could improve as it pinpoints the crucial trends shaping the managed services market.”

A strong Q4 ensured that the four largest colocation FLAP markets of Frankfurt, London, Amsterdam and Paris reached a combined 201MW in take-up for 2019 – a new European record, according to the Q4 European Data Centres Report from CBRE, the world's leading data centre real estate advisor.

CBRE analysis shows that Frankfurt was the star performer during 2019, responsible for 45% of the 201MW. This is both the first time Frankfurt has topped the annual rankings and the first time any European market has seen 90MW of take-up in a single year. Furthermore, half of the pre-let capacity in facilities under construction was also in Frankfurt.

CBRE expects that take-up in the core FLAP markets will remain high at 200MW for the next two years as the hyperscale companies continue to utilise wholesale colocation services in these markets.

The developer-operators, confident of prolonged strong demand, are now building at a larger scale than ever before to secure these requirements. To this end, 314MW of new capacity was brought online in the FLAP markets during 2019, equating to 24% market growth. This new capacity would require EUR 2.2bn of capital to deliver.
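The CBRE figures can be cross-checked with simple arithmetic. The sketch below confirms that 45% of 201MW is about 90MW, matching the record quoted for Frankfurt, and derives an average capital cost per MW. That second figure rests on an assumption not stated in the report: that the EUR 2.2bn covers the full 314MW of new capacity.

```python
# Figures quoted in the CBRE report above.
total_take_up_mw = 201      # combined FLAP take-up in 2019
frankfurt_share = 0.45      # Frankfurt's share of that take-up
new_capacity_mw = 314       # new FLAP capacity brought online in 2019
capital_eur_m = 2_200       # EUR 2.2bn expressed in millions

# Frankfurt's take-up in MW: 45% of 201MW.
frankfurt_mw = total_take_up_mw * frankfurt_share

# Assumption: the EUR 2.2bn covers all 314MW, giving an average cost per MW.
eur_m_per_mw = capital_eur_m / new_capacity_mw

print(f"Frankfurt take-up: about {round(frankfurt_mw)} MW")        # about 90 MW
print(f"Average capital: about EUR {eur_m_per_mw:.1f}m per MW")    # about EUR 7.0m
```

On that assumption, each MW of new FLAP capacity cost roughly EUR 7m to deliver.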

Mitul Patel, Head of EMEA Data Centre Research at CBRE, commented: “The data centre market in Europe continues to grow like no other and represents one of the most exciting asset classes anywhere. The 201MW procured in 2019, as well as pre-lets and optioned capacity, is a remarkable achievement. Our expectation is that this level of activity will continue as the hyperscalers continue their accelerated procurement of data centre services.”

“As the hyperscale companies roll out their services more widely across Europe, the rate of hyperscale procurement of data centre capacity in other European cities will increase rapidly. As a result, markets such as Madrid, Milan, Warsaw and Zurich will witness a substantial increase in colocation activity.”

The third annual Verizon Mobile Security Index takes a deep dive into the state of mobile security, looking at different types of threats and offering tips to protect your environment.

The third annual Verizon Mobile Security Index finds that a large number of organizations are still compromising mobile security to get things done, which can leave them at risk. About four out of 10 respondents (43 percent) reported their organization had sacrificed mobile security in the past year. Those that did were twice as likely to suffer a compromise.

In fact, the study found that 39 percent of respondents reported having a mobile-security-related compromise. Sixty-six percent of organizations that suffered a compromise called the impact “major,” and 55 percent said the compromise they experienced had lasting repercussions.

“In today’s world, mobile connectivity is more important than ever. Organizations of all sizes and in all industries rely on mobile devices to run much of the day-to-day operations, so mobile security is a priority,” said Bryan Sartin, executive director, global security services with Verizon. “The types of devices, diverse applications and the further emergence of IoT devices complicate security. Everyone has to be deliberate and diligent about mobile security to protect themselves and their customers.”

Because mobile attacks aren’t industry specific, this year’s Verizon Mobile Security Index 2020 features supplemental vertical reports in key segments, including financial services; healthcare; manufacturing; public sector; retail; and small and medium business. The report also discusses the importance of mobile security in pivotal technologies like cloud and IoT, and how the emergence of 5G will impact security. And with 80 percent of organizations saying that mobile will be their primary means of accessing cloud services within five years, now is the time to home in on mobile security.

The obvious question is: what should organizations do? The report highlights users, apps, devices and networks as the four key mobile attack vectors. The report includes a number of tips on how organizations can safeguard against mobile security threats, including establishing a “security-first” focus, developing and enforcing policies and encrypting data over unsecured networks.

Survey snapshots:

2020 is likely to be a time of uncertainty in both economic and business cycles; customers are more demanding than ever, and channels need to understand the markets and where to apply investment and resources.

With a gently rising global GDP (Goldman Sachs guesses 2020 will see around 3.4% worldwide growth; Europe will be lower, at around 1.1%, with Germany, Italy and the UK shrinking, and issues in France), IT sales across Europe can be expected to grow. They have been rising as enterprises see the need to drive efficiency and keep up with the competition, and as SMBs increasingly turn to technology. There have been minor issues over confidence, particularly in the UK market, but the trend is up, especially towards more targeted solutions. It will, however, place more of a burden on channels, as the requirement is for more sophisticated and integrated solutions, which in turn place more demands on channel resources and on the channel’s ability to sell advanced ideas successfully.

At the same time, customers are asking about the use of technologies such as AI, analytics, blockchain and the many varieties of security. Any supplier or channel player not able to talk convincingly about these technologies may find themselves displaced by an upstart.

This message was powerfully driven home by Microsoft research at the end of 2019. It reported that while customers had previously looked at using tools from Microsoft and others, organisations are “moving beyond adopting the latest applications and are developing their own proprietary digital capabilities to help propel success and gain a competitive advantage”.

According to a Gartner survey, operational excellence, cost, digital and growth are top of mind in CIOs’ plans for 2020, well above other concerns such as cyber security, innovation and recruiting.

Your customers don’t want the same as everyone else; they want to build and develop their own solutions, even their own intellectual property (IP). There's widespread agreement that the applied use of a “creative, entrepreneurial mindset to invent new digital capabilities using advanced technologies such as AI and IoT — will have a significant impact on global communities and organizational culture”.

This is where managed services play their part: they are scalable, come with known fixed costs and make it possible to explore new technology introductions through pilots.

The global managed services market was valued at $166.8bn in 2018 and is expected to reach $319.5bn by 2024, registering a CAGR of 11.5% during the forecast period of 2019-2024, says researcher Mordor Intelligence. The market for managed services will be fuelled by a growing shortage of expertise as businesses become more technology-oriented. Furthermore, rapid digitalization requires companies to continually innovate and upgrade their infrastructure to remain competitive.
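The CAGR quoted by Mordor Intelligence can be sanity-checked with the standard formula, CAGR = (end / start)^(1/years) - 1. The sketch below assumes six yearly compounding periods from the 2018 valuation to 2024; on that assumption it gives about 11.4%, in line with the quoted 11.5%. Treating the same endpoints as only five periods apart would give nearly 14%, so the period assumption matters.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Mordor Intelligence figures, in $bn.
# Assumption: six compounding years from the 2018 base to 2024.
rate = cagr(166.8, 319.5, years=6)
print(f"Implied CAGR: {rate:.1%}")  # Implied CAGR: 11.4%
```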

Industry trade body CompTIA surveyed members in late 2019 and found general agreement that the main growth areas to 2022 would be managed services, IoT, data, security and consulting services. Managed services was out in front, with some 82% agreeing that it was a growth engine. Some 35% expected it to grow significantly, half moderately and 14% to stay the same.

But purchasing such services remains difficult for customers. As Gartner told the Managed Services Summits in London and Amsterdam last year, people are more tech-savvy than ever before, which means it’s not just the IT department that makes technology choices. And the internet now opens up many more sources of information, including access to trusted, independent sources that used to cost a fortune.

But with all that complexity and wealth of information comes a problem: the buyer has to sort through a great deal of it. Worse still, the buyer still can’t find the right information to make a quick buying decision.

And so the buying cycle becomes fluid and sometimes erratic. The net result, according to a Gartner survey last year, is that buying takes 97% longer than planned. What’s more, Gartner surveys show that up to 59% of buyers cancelled at least one buying effort.

And the conversations are becoming more detailed and going to the core of the customer’s business. A lively panel at the Managed Services and Hosting Summit Manchester on October 30, 2019 gave some useful ideas on meeting current customer challenges. Nigel Church from MSP First Solution said: “We’re speaking to more customers about digital transformation and enablement processes, and we have seen a shift, starting with conversations about cloud and then going on to business strategy.”

So the Managed Services Summits in Amsterdam and London this year aim to reflect the new pressures on MSPs to engage successfully with customers and offer new types of service in security, data and newer technologies such as AI.

Concerns that the assumptions underpinning organizational strategy may be outdated or misaligned to current growth objectives topped business leaders’ concerns in Gartner, Inc.’s latest Emerging Risks Monitor Report.

Gartner surveyed 136 senior executives across industries and geographies, and the results showed that “strategic assumptions” had risen to become the top emerging risk in the 4Q19 Emerging Risks Monitor survey, up from third position the previous quarter (see Table 1). Last quarter’s top emerging risk, “digitalization misconceptions,” has now become an established risk after ranking on four previous emerging risk reports.

“This quarter saw a number of external risks converge in executives’ thinking, from increasing concerns about the impact of extreme weather events to trade policy,” said Matt Shinkman, vice president with Gartner’s Risk and Audit Practice. “Currently, however, business leaders are most acutely concerned with the beliefs underpinning their own strategic assumptions and the ramifications of getting them wrong.”

Table 1. Top Five Risks by Overall Risk Score: 1Q19-4Q19

| Rank | 1Q19 | 2Q19 | 3Q19 | 4Q19 |
|---|---|---|---|---|
| 1 | Accelerating Privacy Regulation | Pace of Change | Digitalization Misconceptions | Strategic Assumptions |
| 2 | Pace of Change | Lagging Digitalization | Lagging Digitalization | Cyber-Physical Convergence |
| 3 | Talent Shortage | Talent Shortage | Strategic Assumptions | Extreme Weather Events |
| 4 | Lagging Digitalization | Digitalization Misconceptions | Data Localization | Data Localization |
| 5 | Digitalization Misconceptions | Data Localization | U.S.-China Trade Talks | U.S.-China Trade Talks |

Source: Gartner (February 2020)

Strategic Planning Must Account for Critical Uncertainties

The study defined a strategic assumption as a plan based on a belief that a certain set of events must occur. Gartner research has found that executives believe more than half of the time they spend on strategic planning is wasted, and that the quality of the resulting plans fails to meet expectations. Incorrect strategic assumptions often result in stalled growth that can derail planned results.

“Strategic assumptions are often sound when they are first formed, but in today’s environment are more vulnerable to becoming outdated or obsolete due to a rapid increase in the pace of change,” noted Mr. Shinkman. Senior executives ranked the pace of change as a top emerging risk in the second quarter of 2019.

Organizations with a poorly formed set of strategic assumptions typically produce a high number of projects that are not aligned with their stated objectives. Moreover, time and budget for the execution of key initiatives consistently overrun planned targets.

“Risk teams should play a vital role in mitigating the impact of inaccurate strategic assumptions. A key component of clarifying strategic assumptions is discerning between likely truths and critical uncertainties,” Mr. Shinkman said. “Risk leaders should involve themselves early in the strategic planning process and add value by developing a set of criteria to stress-test assumptions and root out biases and flaws before they become cemented in a strategic plan.”

Cyber-Physical Convergence Presents New Risks

The second most cited risk was a convergence of cyber-physical risks, as previously unconnected physical assets become part of an organization’s cyber network. Nearly 90 percent of organizations with connected operational technology (OT) have already experienced a breach related to cyber-physical architecture. Despite these threats, organizations continue to move forward with integrating Internet of Things devices, smart buildings and other OT, often without dedicated security policies.

“The risks of OT are still not widely appreciated throughout most organizations,” said Mr. Shinkman. “Top risk teams partner with IT to develop dedicated strategies that account for the security deficiencies in such assets and include regular meetings to review issues such as access rights and employee training.”

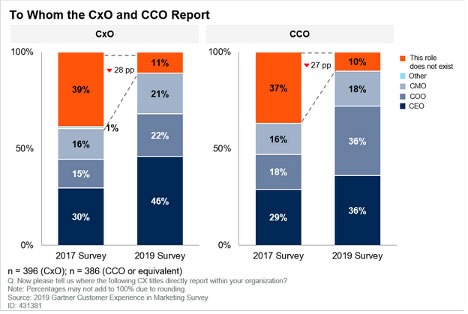

Organizations are taking customer experience (CX) more seriously by committing more resources and talent to the discipline, according to Gartner, Inc. Gartner’s 2019 Customer Experience Management Survey revealed that in 2017, more than 35% of organizations lacked a chief experience officer (CXO) or chief customer officer (CCO) or equivalents, but in 2019, only 11% and 10% lacked one or the other role, respectively (see Figure 1).

“There has been significant growth in the presence of CXOs and CCOs or equivalents in many organizations over the last two years,” said Augie Ray, VP analyst, Gartner for Marketers. “However, these roles rarely report to CMOs despite marketing taking control of more CX initiatives.”

Figure 1: Key CX Leader Roles Are More Common and Rarely Report to the CMO

Source: Gartner (February 2020)

The survey — which covered a variety of departments where CX efforts are run and supported, such as marketing, IT, customer service, operations, sales and stand-alone CX departments — found that responsibility for CX budgets and initiatives has begun to shift into the marketing department.

“As marketing continues to take on a larger role in CX, marketing leadership faces a potential challenge coordinating companywide CX,” said Ray. “CMOs and marketing leaders responsible for aspects of their organization’s CX must ensure that roles are understood, redundancy and conflict are minimized, and collaboration is prioritized.”

To do this, Gartner recommends that CMOs and marketing leaders take the following actions:

Gartner, Inc. predicts that by 2025, at least two of the top 10 global retailers will establish robot resource organizations to manage nonhuman workers.

“The retail industry continues to transform through a period of unprecedented change, with customer experience as the new currency,” said Kelsie Marian, senior research director at Gartner. “The adoption of new digital technologies and the ever-changing expectations of customers continues to challenge traditional retailers, forcing them to investigate new human-machine hybrid operational models, including artificial intelligence (AI), automation and robotics.”

Gartner research shows that 77% of retailers plan to deploy AI by 2021, with the deployment of robotics for warehouse picking as the No. 1 use case. Warehouse picking involves smart robots working independently or alongside humans. In the future, retailers will establish units within the organization for procuring, maintaining, training, taxing, decommissioning and proper disposal of robot resources. In addition, they will create the governance required to ensure that people and robots can effectively collaborate.

Many retail workers want to use AI specifically as an on-demand or predictive assistant, meaning the robot will need to work alongside humans. “This means the robot will have to ‘mesh’ with the human team, essentially meaning that both sides will need to learn how to ‘collaborate’ to operate effectively together,” said Ms. Marian.

An example is an autonomous robotic kitchen assistant that learns an operator’s specific recipes and prepares them according to the wishes of the operator. The robot can work in harmony with the operators who, in turn, are having to adapt to changing consumer tastes.

Choosing the right candidate — human and machine — for the job is critical for success. A combined effort from HR, IT and the line-of-business hiring managers will be required to identify the skills needed to ensure the pair work together effectively. “Retail CIOs must provide ongoing maintenance and monitoring performance for effectiveness. If not, the team may be counterproductive and lead to a bad customer experience,” said Ms. Marian.

The introduction of AI and robotics will likely cause fear and anxiety among the workforce, particularly among part-time workers. It will be vital for retail CIOs to work with HR and business leaders to address and manage employees’ skills and concerns, and to change their mindset around the development of robot resource units.

Gartner, Inc. predicts that by 2023, organizations using blockchain smart contracts will increase overall data quality by 50%, but reduce data availability by 30%.

“When an organization adopts blockchain smart contracts — whether externally imposed or voluntarily adopted — they benefit from the associated increase in data quality, which will increase by 50% by 2023,” said Lydia Clougherty Jones, senior research director at Gartner.

However, governance frameworks for blockchain participation, or the terms and conditions within the smart contract, can dictate the availability of the data generated from the smart contract transaction, from none to limited to unlimited. “This variable could leave participants in a worse position than if they did not participate in the blockchain smart contract process. As such, an organization’s overall data asset availability would decrease by 30% by 2023,” added Ms. Clougherty Jones.

The net impact, however, is positive for data and analytics (D&A) ROI. The impact of blockchain smart contract adoption on analytical decision making is profound. It enhances transparency, speed and granularity of decision making. It also improves the quality of decision making, as its continuous verification makes the data more accurate, reliable and trustworthy.

“Smart contracts are important and D&A leaders should focus on them because they promise a near certainty of trusted exchange. Once deployed, blockchain smart contracts are immutable and irrevocable through nonmodifiable code, which enforces a binding commitment to do or not do something in the future. Moreover, they eliminate third-party intermediaries (e.g., bankers, escrow agents, and lawyers) and their fees, as smart contracts perform the intermediary functions automatically,” said Ms. Clougherty Jones.
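To make the escrow example concrete, here is a minimal plain-Python sketch of the kind of rule an escrow smart contract encodes: funds are released to the seller automatically once the agreed condition is met, with no agent in between. The names `EscrowTerms`, `EscrowContract`, `deposit` and `confirm_delivery` are hypothetical, and the sketch has none of a real blockchain’s properties (no distributed ledger, no consensus, no true immutability); the frozen dataclass only imitates terms that cannot be changed once deployed.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class EscrowTerms:
    """Terms fixed at creation; frozen to imitate non-modifiable contract code."""
    buyer: str
    seller: str
    amount: int  # hypothetical units, e.g. cents


@dataclass
class EscrowContract:
    """Hypothetical toy escrow: funds move only when the coded condition is met."""
    terms: EscrowTerms
    funded: bool = False
    settled: bool = False
    ledger: dict = field(default_factory=dict)  # payee -> amount released

    def deposit(self, payer: str, amount: int) -> None:
        # Only the buyer can fund, only once, and only with the agreed amount.
        if payer != self.terms.buyer or amount != self.terms.amount or self.funded:
            raise ValueError("deposit does not match the agreed terms")
        self.funded = True

    def confirm_delivery(self, confirmer: str) -> None:
        # The buyer's confirmation triggers automatic release to the seller,
        # the step an escrow agent would otherwise perform.
        if confirmer != self.terms.buyer:
            raise PermissionError("only the buyer can confirm delivery")
        if not self.funded or self.settled:
            raise RuntimeError("contract is not in a releasable state")
        seller = self.terms.seller
        self.ledger[seller] = self.ledger.get(seller, 0) + self.terms.amount
        self.settled = True


contract = EscrowContract(EscrowTerms(buyer="alice", seller="bob", amount=10_000))
contract.deposit("alice", 10_000)
contract.confirm_delivery("alice")
print(contract.ledger)  # {'bob': 10000}
```

The point of the design is that every permitted transition is checked in code, so neither party needs to trust a third party to release the funds.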

Gartner analysts recommend D&A leaders pilot blockchain smart contracts now. Organizations should start deploying them to automate a simple business process, such as non-sensitive data distribution or a simple contract formation for contract performance or management purposes. Then organizations should engage with their affiliates and partners to pilot blockchain smart contracts to automate multiparty contracts within a well-defined ecosystem, such as banking and finance, real estate, insurance, utilities, and entertainment.

Privacy Compliance Technology to Rely on AI

Over 40% of privacy compliance technology will rely on artificial intelligence (AI) by 2023, up from 5% today, according to Gartner, Inc.

“Privacy laws, such as General Data Protection Regulation (GDPR), presented a compelling business case for privacy compliance and inspired many other jurisdictions worldwide to follow,” said Bart Willemsen, research vice president at Gartner.

“More than 60 jurisdictions around the world have proposed or are drafting postmodern privacy and data protection laws as a result. Canada, for example, is looking to modernize their Personal Information Protection and Electronic Documents Act (PIPEDA), in part to maintain the adequacy standing with the EU post-GDPR.”

Privacy leaders are under pressure to ensure that all personal data processed is brought in scope and under control, which is difficult and expensive to manage without technology aid. This is where the use of AI-powered applications that reduce administrative burdens and manual workloads comes in.

AI-Powered Privacy Technology Lessens Compliance Headaches

At the forefront of a positive privacy user experience (UX) is the ability of an organization to promptly handle subject rights requests (SRRs). SRRs cover a defined set of rights, where individuals have the power to make requests regarding their data and organizations must respond to them in a defined time frame.

According to the 2019 Gartner Security and Risk Survey, many organizations are not capable of delivering swift and precise answers to the SRRs they receive. Two-thirds of respondents indicated it takes them two or more weeks to respond to a single SRR. These workflows are also often manual, at an average cost of roughly US$1,400, and these costs pile up over time.

“The speed and consistency by which AI-powered tools can help address large volumes of SRRs not only saves an organization excessive spend, but also repairs customer trust,” said Mr. Willemsen. “With the loss of customers serving as privacy leaders’ second highest concern, such tools will ensure that their privacy demands are met.”

Global Privacy Spending on Compliance Tooling Will Rise to $8 Billion Through 2022

Through 2022, privacy-driven spending on compliance tooling will rise to $8 billion worldwide. Gartner expects privacy spending to impact connected stakeholders’ purchasing strategies, including those of CIOs, CDOs and CMOs. “Today’s post-GDPR era demands a wide array of technological capabilities, well beyond the standard Excel sheets of the past,” said Mr. Willemsen.

“The privacy-driven technology market is still emerging,” said Mr. Willemsen. “What is certain is that privacy, as a conscious and deliberate discipline, will play a considerable role in how and why vendors develop their products. As AI turbocharges privacy readiness by assisting organizations in areas like SRR management and data discovery, we’ll start to see more AI capabilities offered by service providers.”

International Data Corporation (IDC) has announced the release of IDC FutureScape: Worldwide Developer and DevOps 2020 Predictions — China Implications (IDC #CHC45894020, January 2020). The new IDC paper puts forward ten developer and DevOps market predictions for 2020 with a specific focus on China, as well as actionable insights for technology buyers in the DevOps market for the next five years.

Nan Wang, Senior Market Analyst, Enterprise System and Software Research, IDC China, said that the study provides a well-rounded analysis and discussion of developer and DevOps market trends and their impact on digital transformation and businesses’ technology departments.

“Senior IT leaders and business executives will come away from this report with guidance for managing the implications of these technologies,” she said. “Furthermore, our market predictions will help inform their IT investment priorities and implementation strategies.”

IDC’s top 10 developer and DevOps market predictions for 2020 are as follows:

1. Enhanced AI optimizations for developers

By 2024, 56% of companies will not confine their artificial intelligence (AI)/machine learning (ML) use to application development. By then, close to 10% of AI/ML-based optimizations will focus on software development, design, quality management, security and deployment. By 2023, 70% of companies will invest in employee retraining and development, including third-party services to meet the needs of new skills and working methods brought about by AI applications.

2. Wide use of container platforms

By 2024, 70% of new applications developed with programming languages will be deployed in containers for improved deployment speed, application consistency and portability.

3. Growth of part-time developers

By 2023, the number of part-time developers (including business analysts, data analysts and data scientists) in China will be double the number in 2019. Specifically, part-time developers will increase from 1.8 million in 2019 to 3.6 million, representing a CAGR of 12.2%. By 2020, 15% of customer experience applications will deliver hyper-personalization through reinforcement learning algorithms continuously trained on a wide range of data and innovations.

4. DevOps as a daily activity

By 2023, the share of organizations releasing code daily for specific applications will increase to 30%, from 3% in 2019. By 2024, at least 90% of new versions of enterprise-grade AI applications will feature embedded AI functions, though those disruptive AI-focused applications will only account for 10% of the total market.

5. Accelerating transformation of traditional applications

By 2022, the accelerating modernization of traditional applications and the development of new applications will increase the percentage of cloud-native production applications to 25%, driven by the utilization of microservices, containers and dynamic orchestration.

6. DevOps focus on business KPIs

By 2023, 40% of DevOps teams will invest in tooling and focus on business KPIs such as cost and revenue as operations will begin to play a more important role in the performance of end-to-end applications and business impact.

7. Use of related analytical tools driven by open source software

The increasing reliance of applications on open source components has driven the rapid growth of software component analysis and related tools. By 2023, software component analysis tools, which are currently only used by a minority of organizations, will be used by 45% of organizations.

8. Companies establishing their own development ecosystems

By 2023, 60% of Chinese G2000 companies will establish their own software ecosystems, and 50% will access important reusable code components from publicly accessible community code libraries.

9. Growth of open source codebases

By 2024, open source software derived from open code libraries as a percentage of all enterprise applications will double to 25%, with the remaining 75% being customized according to organizations’ business models or use scenarios.

10. Recognized applicability of DevOps

By 2024, applications that completely use DevOps will account for less than 35%. Enterprises have recognized that not all applications can benefit from the complex operations spanning development and production related to continuous integration and continuous delivery (CI/CD).
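The growth figures in prediction 3 can be checked with standard CAGR arithmetic. The sketch below (the function names are illustrative) shows that a doubling from 1.8 million part-time developers in 2019 to 3.6 million in 2023 implies roughly 18.9% a year, while growth of 12.2% a year takes about six years to double, so the two quoted figures imply different time spans.

```python
import math


def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


def years_to_double(rate: float) -> float:
    """Years needed to double at a constant annual growth rate."""
    return math.log(2) / math.log(1 + rate)


# IDC figures for China, in millions of part-time developers, 2019 -> 2023.
implied = cagr(1.8, 3.6, years=4)
print(f"Implied CAGR for a doubling over 2019-2023: {implied:.1%}")      # 18.9%
print(f"Years to double at 12.2% a year: {years_to_double(0.122):.1f}")  # 6.0
```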

As shown by the above predictions, emerging business models and frequently changing needs for services have created challenges to enterprises’ IT infrastructure. Going forward, the ability to quickly establish new business systems and continuously respond to system iterations brought about by market changes will emerge as the top priorities for IT departments.

In response to these external pressures, companies have begun adopting modern methods to implement application development, packaging and testing. In this new and challenging environment, machine learning and AI are being widely used. IDC predicts that the future of work will, to a large extent, be influenced by how developers and the DevOps community evolve in the next five years.

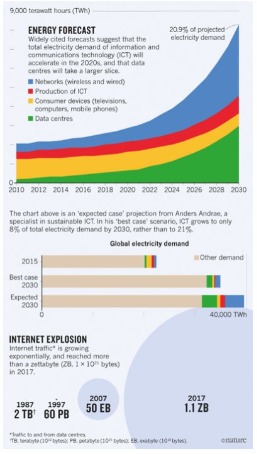

Worldwide ICT spending to reach $4.3 trillion

A new forecast from International Data Corporation (IDC) predicts worldwide spending on information and communications technology (ICT) will be $4.3 trillion in 2020, an increase of 3.6% over 2019. Commercial and public sector spending on information technology (hardware, software and IT services), telecommunications services, and business services will account for nearly $2.7 trillion of the total in 2020 with consumer spending making up the remainder.
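The headline ICT figures can be broken down with simple arithmetic. The sketch below derives the implied 2019 total from the 3.6% growth rate, and the consumer remainder and commercial share from the “nearly $2.7 trillion” commercial figure. Because that figure is approximate, the consumer portion is, if anything, slightly larger than shown.

```python
# IDC forecast figures quoted above, in $tn.
total_2020_tn = 4.3        # worldwide ICT spending forecast for 2020
growth_vs_2019 = 0.036     # 3.6% increase over 2019
commercial_2020_tn = 2.7   # "nearly $2.7 trillion" commercial and public sector

# Back out 2019 from the growth rate: total_2020 = total_2019 * (1 + growth).
implied_2019_tn = total_2020_tn / (1 + growth_vs_2019)

# Consumer spending is the remainder of the total.
consumer_2020_tn = total_2020_tn - commercial_2020_tn
commercial_share = commercial_2020_tn / total_2020_tn

print(f"Implied 2019 total: ${implied_2019_tn:.2f}tn")        # $4.15tn
print(f"Consumer remainder: about ${consumer_2020_tn:.1f}tn")  # about $1.6tn
print(f"Commercial share: about {commercial_share:.0%}")      # about 63%
```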

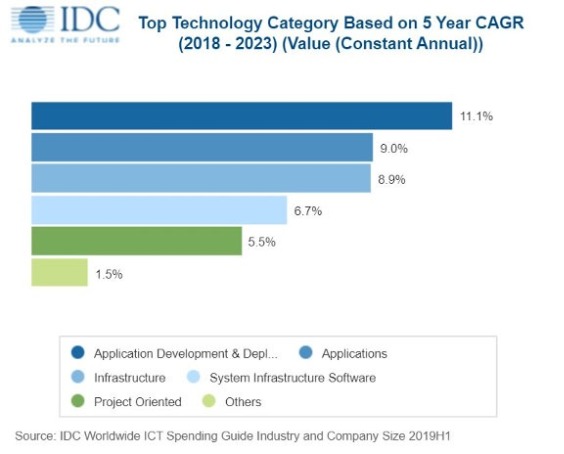

"The slow economy, weak business investment, and uncertain production expectations combined with protectionist policies and geopolitical tensions — including the US-China trade war, threats of US tariffs on EU automobiles and the EU's expected response, and continued uncertainty around the Brexit deal — are still acting as inhibitors to ICT spending across regions," said Serena Da Rold, program manager in IDC's Customer Insights and Analysis group. "On the upside, our surveys indicate a strong focus on customer experience and on creating innovative products and services driving new ICT investments. Companies and organizations across industries are shifting gears in their digital transformation process, investing in cloud, mobility, the Internet of Things, artificial intelligence, robotics, and increasingly in DevOps and edge computing, to transform their business processes."

IT spending will make up more than half of all ICT spending in 2020, led by purchases of devices (mainly mobile phones and PCs) and enterprise applications. However, when combined, the three IT services categories (managed services, project-oriented services, and support services) will deliver more than $750 billion in spending this year as organizations look to accelerate their digital transformation efforts. The application development & deployment category will provide the strongest spending growth over the 2019-2023 forecast period, with a five-year compound annual growth rate (CAGR) of 11.1%.

Telecommunications services will represent more than one third of all ICT spending in 2020. Mobile telecom services will be the largest category at more than $859 billion, followed by fixed telecom services. Both categories will see growth in the low single digits over the forecast period. Business services, including key horizontal business process outsourcing and business consulting, will be about half the size of the IT services market in 2020 with solid growth (8.2% CAGR) expected for business consulting.

Consumer ICT spending will grow at a much slower rate (0.7% CAGR) resulting in a gradual loss of share over the five-year forecast period. Consumer spending will be dominated by purchases of mobile telecom services (data and voice) and devices (such as smartphones, notebooks, and tablets).

Four industries – banking, discrete manufacturing, professional services, and telecommunications – will deliver 40% of all commercial ICT spending in 2020. IT services will represent a significant portion of the spending in all four industries, ranging from 50% in banking to 26% in professional services. From there, investment priorities will vary as banking and discrete manufacturing focus on applications while telecommunications and professional services invest in infrastructure. The industries that will deliver the fastest ICT spending growth over the five-year forecast are professional services (7.2% CAGR) and media (6.6% CAGR).

More than half of all commercial ICT spending in 2020 will come from very large businesses (more than 1,000 employees), while small businesses (10-99 employees) and medium businesses (100-499 employees) will account for nearly 28%. IT services will represent a significant portion of the overall spending for both market segments – 54% for very large businesses and 35% for small and medium businesses. Application and infrastructure spending will be about equal for very large businesses while small and medium businesses will invest more in applications.

"SMBs are increasingly embracing digital transformation to take advantage of both the opportunities it presents, and the disruption it can mitigate," said Shari Lava, research director, Small and Medium Business Markets. "Digitally determined SMBs, defined as those that are making investments in digital transformation related technology, are almost twice as likely to report double-digit revenue growth versus their technology indifferent peers."

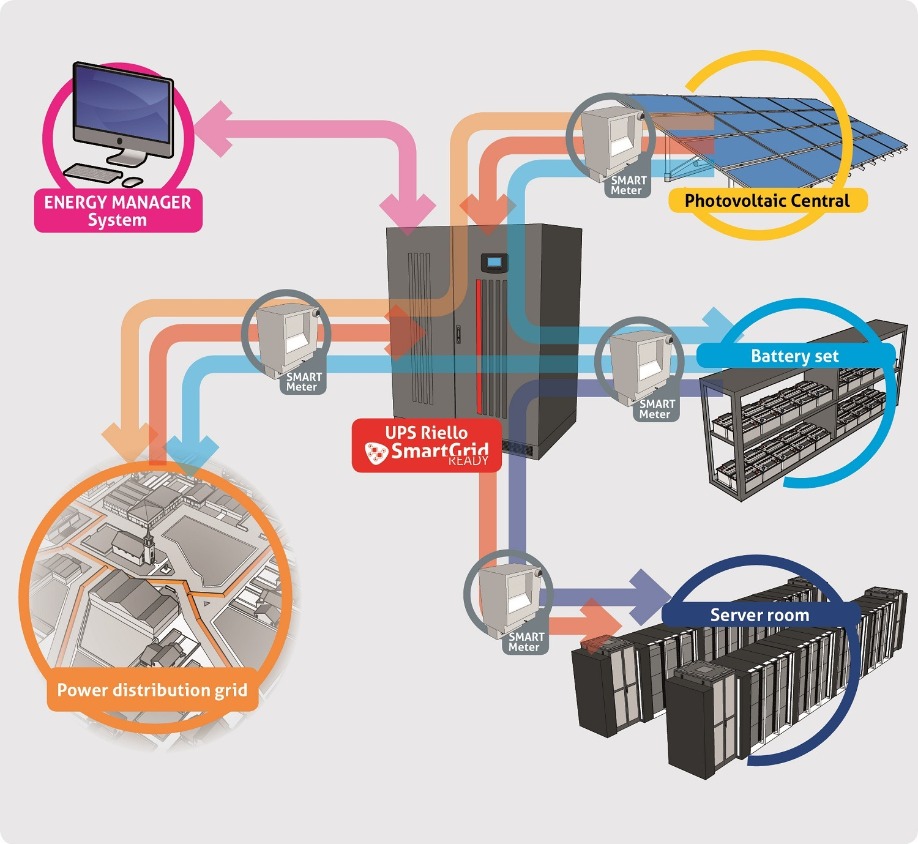

$124 billion to be spent on Smart Cities initiatives in 2020

A new forecast from the International Data Corporation (IDC) Worldwide Smart Cities Spending Guide shows global spending on smart cities initiatives will total nearly $124 billion this year, an increase of 18.9% over 2019. The top 100 cities investing in smart initiatives in 2019 represented around 29% of global spending, and while growth will be sustained among the top spenders in the short term, the market is quite dispersed across midsize and small cities investing in relatively small projects.

"This new release of IDC's Worldwide Smart Cities Spending Guide brings further expansion of our forecasts into smart ecosystems with the addition of smart ports alongside smart stadiums and campus," said Serena Da Rold, program manager in IDC's Customer Insights & Analysis group. "The Spending Guide also provides spending data for more than 200 cities and shows that fewer than 80 cities are investing over $100 million per year. At the same time, around 70% of the opportunity lies within cities that are spending $1 million or less per year. There is a great opportunity for providers of smart city solutions who are able to leverage the experience gained from larger projects to offer affordable smart initiatives for small and medium sized cities."

In 2019, use cases related to resilient energy and infrastructure represented over one third of the opportunity, driven mainly by smart grids. Data-driven public safety and intelligent transportation represented around 18% and 14% of overall spending respectively.

Looking at the largest use cases, smart grids (electricity and gas combined) still attract the largest share of investments, although their relative importance will decrease over time as the market matures and other use cases become mainstream. Fixed visual surveillance, advanced public transportation, intelligent traffic management, and connected back office follow, and these five use cases together currently represent over half of the opportunity. The use cases that will see the fastest spending growth over the five-year forecast are vehicle-to-everything (V2X) connectivity, digital twin, and officer wearables.

Singapore will remain the top investor in smart cities initiatives. Tokyo will be the second largest spender in 2020, driven by investments for the Summer Olympics, followed by New York City and London. These four cities will each see smart city spending of more than $1 billion in 2020.

On a regional basis, the United States, Western Europe, and China will account for more than 70% of global smart cities spending throughout the forecast. Latin America and Japan will experience the fastest growth in smart cities spending in 2020.

"Regional and municipal governments are working hard to keep pace with technology advances and take advantage of new opportunities in the context of risk management, public expectations, and funding needs to scale initiatives," said Ruthbea Yesner, vice president of IDC Government Insights and Smart Cities and Communities. "Many are moving to incorporate Smart City use cases into budgets, or financing efforts through more traditional means. This is helping to grow investments."

Oliver Krebs, Vice President of EMEA at Cherwell Software, discusses how digital transformation can leverage Artificial Intelligence to improve the employee and customer experience.

Was there ever a buzzword as buzzy as “digital transformation”? It’s perfect: it sounds futuristic and exciting, but it’s also vague enough to be used for any number of different products and services. It could be a process, a technology suite, a mindset, or all of the above. But beyond the hype that accompanies every innovation, digital transformation is not just technology for technology’s sake; it can be a real game changer for your business.

Is digital transformation for you?

Digital transformation is, put simply, the use of new and fast-evolving digital technology to solve a business problem. Gartner, in its glossary, describes it as “anything from IT modernization (for example, cloud computing), digital optimization, to the invention of new digital business models.” It sounds obvious that you would want it, doesn’t it?

But if you are still having doubts about whether it is quite right for you, then consider the following four questions. If you can answer ‘yes’ to at least one of these when evaluating the relevance of a new technology for your organisation, then you can be pretty confident that digital transformation is for you.

1. Does this solution make us more efficient?

2. Does this solution enhance the customer experience?

3. Will this solution help attract and retain the right talent?

4. Will this solution carry a measurable return on investment?

It is worth stressing that digital transformation is no longer something reserved for the future; it is the here and now. According to a Smart Insights survey, 65% of businesses already have a transformation programme in place, while only 35% say they currently have no plans to run one. That means businesses that have not yet started their transformation are already behind almost two thirds of their competitors, a worrying thought in today’s competitive world. Clearly, digital transformation is not proceeding at the same pace everywhere. But what can it actually do?

AI

One of the biggest innovations under the digital transformation umbrella is Artificial Intelligence (AI). At its most basic, AI is at work whenever a computer system performs tasks that would normally be performed by humans. AI has moved from theory to reality so quickly that it is fast becoming a ‘must have’ rather than a ‘nice to have’. Human skills such as visual perception, psychological insight, speech recognition, decision-making, and translation between languages are now routinely being replicated by AI algorithms that provide the instructions for computer systems to follow.

According to a 2018 study that Cherwell conducted in partnership with global research firm IDG, a whopping 71 percent of IT organisations have already implemented one or more AI projects. Around one-third of those surveyed say their AI projects are already generating a return on investment, and an overwhelming majority, 90 percent, expect the ROI to be positive in the next five years.

Many businesses have already begun to use AI-enabling technologies as part of their digital transformation, with basic information capture and process automation at the top of their ‘To Do’ lists. All of this is being made possible by integrating processes across departments.

Integration

Integrating processes is an essential building block of digital transformation because it breaks down the silos of information that commonly grow up within organisations. Integration also makes communication and collaboration between staff and customers much easier, promoting self-serve options that eliminate departmental bottlenecks.

Work process integration has also been shown to encourage higher employee engagement, satisfaction and productivity. According to a recent Lawless Research study of cross-functional processes, commissioned by Cherwell Software (‘Work Process Integration: Bad News is Good News – Small Footprint Today Signals Big Opportunities Ahead’), many standard processes aren’t highly integrated, and poorly designed (i.e., non-integrated) processes reduce productivity and negatively affect the employee experience.

At least one-quarter of cross-functional team managers expressed frustration that different team members were using different apps. The issues they found most frustrating in this context were inefficiencies (cited by 43% of managers), repetitive work (40%), miscommunication (37%), errors (27%), and software incompatibility (26%). Respondents also reported that, on average, manual processes that could be automated consumed nearly half of their workday, and 43% said they spent at least half of their workday on manual processes. These processes included onboarding/offboarding an employee (86%), resolving customer issues (also 86%), conducting performance reviews (85%), and participating in cross-functional projects (also 85%).

Whether your ‘customers’ are inside or outside your organisation, their demand for services that use data from across the organisation is only increasing. ‘Inside’ customers, such as employees who use the company’s IT services, and ‘outside’ customers, who buy the company’s products and services, can both benefit equally from AI-enabled activities that draw from a common well of resources.

IT Support

The IT support desk is a good example of where organisations can start gathering information about their end users’ most frequent ‘pain points’, rather than just reacting to problems after they occur. Anticipating these problems can prevent them from happening in the first place, and organisations with the resources and know-how can go on to find solutions and innovate. Predictive analytics can also be used for incident management, demand planning, and workflow improvement.
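As a minimal illustration of that first step, spotting the most frequent pain points, the Python sketch below tallies support tickets by category and flags categories whose volume is rising between two periods. The ticket categories, sample data, threshold and helper names are all hypothetical; a real service desk would pull this data from its service management tool, and genuine predictive analytics would use far richer models than a simple period-on-period comparison.

```python
from collections import Counter


def top_pain_points(categories: list[str], n: int = 3) -> list[tuple[str, int]]:
    """Return the n most frequent ticket categories with their counts."""
    return Counter(categories).most_common(n)


def rising_pain_points(
    previous: list[str], current: list[str], min_increase: int = 2
) -> dict[str, int]:
    """Hypothetical helper: categories whose ticket count grew by at least `min_increase`."""
    before, after = Counter(previous), Counter(current)
    # Counter returns 0 for categories absent from the previous period
    return {
        cat: after[cat] - before[cat]
        for cat in after
        if after[cat] - before[cat] >= min_increase
    }


if __name__ == "__main__":
    # Hypothetical ticket categories from two consecutive weeks
    last_week = ["password reset"] * 4 + ["vpn"] * 2 + ["printer"] * 3
    this_week = ["password reset"] * 5 + ["vpn"] * 6 + ["printer"] * 1
    print(top_pain_points(this_week))
    print(rising_pain_points(last_week, this_week))
```

Here the VPN category would be flagged because its volume jumped, the kind of early signal that lets a support team investigate before a problem escalates.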

Your IT staff may be manually processing a ticket for every request they receive; moving to a self-service IT model would give the IT team more time to focus on transformation initiatives, while also increasing employee efficiency and effectiveness.

AI can also reduce the costs of high-volume, low-value service desk activities by taking over routine and repetitive tasks. Chatbots, knowledge curation, and incident/request routing are three big categories of AI features already in widespread use. AI-assisted knowledge management now includes intelligent search that doesn’t just rely on specific keywords, but takes context and meaning into account, much as a human being would. It should be pointed out that while AI does change the way IT support staff work, it won’t replace them completely; rather, it will add value to what they are already doing.

The use of chatbots, for example, means there is always ‘someone’ available, 24 hours a day, 365 days a year, even if it’s not an actual human being. While many chatbots are used simply to deflect routine and repetitive queries and requests before escalating more complex interactions to a live IT support agent, virtual support assistants (VSAs) can now even carry out actions on the customer’s behalf, such as resetting passwords, deploying software, escalating support requests and restoring IT services.
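The deflect-or-escalate pattern described above can be sketched in a few lines of Python. This is a deliberately simplified illustration under stated assumptions: plain keyword matching stands in for the natural-language understanding a real chatbot or VSA would use, and the intents, replies and function names are hypothetical rather than any vendor’s API.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    handled_by: str  # "bot" if deflected, "human" if escalated
    reply: str


# Hypothetical routine intents the bot may resolve on its own.
# Keyword matching is a stand-in for real natural-language understanding.
ROUTINE_INTENTS = {
    "password": "I've sent a password reset link to your registered email.",
    "install": "I've queued the requested software for deployment to your device.",
}


def handle_request(text: str) -> Outcome:
    """Deflect routine requests; escalate anything unrecognised to a live agent."""
    lowered = text.lower()
    for keyword, reply in ROUTINE_INTENTS.items():
        if keyword in lowered:
            return Outcome("bot", reply)
    return Outcome("human", "Passing you to a support agent now.")


if __name__ == "__main__":
    for request in ["I forgot my Password", "My laptop screen is cracked"]:
        outcome = handle_request(request)
        print(f"{request!r} -> {outcome.handled_by}: {outcome.reply}")
```

The key design point is the fallback: anything the bot does not recognise goes to a human, so automation only absorbs the routine volume.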

Customer Service

And what about customer service? Chatbots are one of a number of engagement options that can be made available to customers. A chatbot can give customers the answers they need, when they want them, without their having to wait on hold for ages until a human employee becomes available. This can drastically reduce the time both parties spend resolving a query.

And how much better would it be if you could put customer communications, history and documents into context and use that knowledge to provide a better, faster service? Human agents draw on a wide range of skills to solve customer problems, but so too can non-human agents if they have the information available. And non-human agents have the advantage that they can read, digest and process huge amounts of data far faster than humans can. It is also worth remembering that chatbots are not the only option for customers – organisations should be able to customise the available interfaces depending on the context of the business and the customers themselves.

The benefits of quick and easy resolutions to problems, and of perfectly tailored offers that suit your specific requirements, make it easy to see why these AI systems are becoming so popular. In the future, we can look forward to the convenience of a fully tailored, always ‘on’ user experience, at work or at home.

Connectivity has become an essential part of life rather than just a luxury. The Internet of Things (IoT) is already commonplace, thanks to all the connected devices and smart technologies we own, which interact with one another to form a fully connected network. With the global number of IoT devices projected to triple by 2025, and 5G about to become an integral part of the UK’s telecoms infrastructure, the UK will soon be more connected than ever.

By David Higgins, EMEA Technical Director at CyberArk.

Constant connectivity provides opportunities for innovation and modernisation. However, it also creates cybersecurity threats that can compromise extremely sensitive information.

With the world moving swiftly into an age of ever-greater connectivity, individuals and organisations need to familiarise themselves with these developing threats and the volatile landscape, and make sure they have a robust way to protect themselves.

Finding a Place for CSPs in a Volatile Landscape

Communications services providers (CSPs) specialising in mobile services, media, or web services live in a world of relentless innovation. The need to stay relevant pushes CSPs to deliver value beyond basic connectivity, which opens up lucrative new markets and opportunities across all industries.