I still chuckle when I come across the (fortunately rare) example of a highly sophisticated company that invests large sums in data centre and IT infrastructure, paying special attention to reliability and resilience, only to discover that one of its key applications is running on a twenty-year-old machine because it’s ‘too dangerous’ to migrate it!

Thankfully, very few companies leave themselves open to such a single point of failure.

However, the ongoing threat of the coronavirus, allied to yet another bout of extreme weather conditions, in the UK at least, has set me wondering whether there is an aspect of resilience that is being ignored right now. Thomas Malthus’s ideas about war, famine and disease acting as natural population checks might not hold true in the 21st century, but disease as a business disruptor could well be a problem both now and in the future. Of course, if it wipes out most of the world’s population, then we’ll have bigger problems to worry about than keeping businesses functioning!

Let us assume that widespread diseases, easily transported around the world thanks to globalisation (of both tourism and business) and made harder to treat in part by growing antibiotic resistance, could well become more common and more disruptive. Right now, it seems as if the closure of large parts of China because of the coronavirus is about to have an unexpected consequence for many businesses across the world. In simple terms, the flow of components and finished goods that China manufactures and supplies across the globe is already slowing significantly. Factories are closed, so production has stopped. And even those goods which are completed cannot find any logistics companies ‘brave enough’ to go and collect them.

Ironically, it is the Far East we have to thank for the concept of Just In Time manufacturing, which then spilled over into the general business world. Why hold vast stocks of anything if you can push them back to the supplier to keep hold of them? The result is massively reduced inventory and a great aid to cash flow, as goods are only paid for when they’ve already been sold on to customers.

Ah, but all of a sudden, thanks to China’s shutdown, this policy doesn’t look so clever, whether you’re a car manufacturer relying on crucial components, or a retail company awaiting clothing shipments.

Okay, so one major health scare doesn’t (just yet, at least) cause a wholesale change in attitude to how, when and where supplies are sourced, but it’s something to bear in mind moving forwards. After all, the once in a lifetime extreme weather events which were so memorable for our parents’ and grandparents’ generations now seem to be occurring every few years. Indeed, in the UK, for example, hardly a winter goes by without pictures of towns and villages under water.

So, maybe no need to panic just yet when it comes to disease, but certainly a situation worth monitoring in the coming years, if the increase in climate volatility is anything to go by. The cost of data centre and IT hardware could well increase in the short term, and while any organisation can sweat older assets for a little longer, if the competition has managed to secure the very latest technology, the gap between the technology haves and have nots could grow that bit wider.

Amidst the changing nature of work, competition, and society, the research suggests ways to reimagine leadership.

A study released by MIT Sloan Management Review (MIT SMR) and Cognizant reveals that most executives around the world are out of touch with what it takes to lead effectively and for their businesses to stay competitive in the digital economy. Reliance on antiquated and ineffective leadership approaches by the current generation of leaders is undermining organizational performance. To remain competitive and lead effectively, executives will need to fully reimagine leadership, the study’s authors have found.

“We are on the precipice of an exciting new world of work, one that gives executives an opportunity to chart a new course for what their leadership should look like, feel like, and be like,” said Doug Ready, senior lecturer in organization effectiveness at the MIT Sloan School of Management and guest editor of the report. “Yet, our study suggests that digitalisation, upstart competitors, the need for breakneck speed and agility, and an increasingly diverse and demanding workforce demand more from leaders than what most can offer. The sobering data underscores the urgent need for a fully reimagined playbook for leaders in the coming digital age.”

The study, reported in “The New Leadership Playbook for the Digital Age: Reimagining What It Takes to Lead”, is based on a survey of 4,394 global executives from over 120 countries, 27 executive interviews, and focus group exchanges with next-gen global emerging leaders. The data reveals:

“A generation of leaders in large companies are out of sync, out of tune, and out of touch with their workforces, markets, and competitive landscapes. What got them to their current exalted status won’t be effective much longer — unless they take swift action,” said Benjamin Pring, report coauthor and director of the Center for the Future of Work for Cognizant. “Allowing unprepared senior executives with outdated skills and attitudes to stick around forces next-generation, high-potential leaders to move on to new pastures, which harms morale and ultimately shifts the organisation further away from where market demand is heading.”

The authors identify three categories of existing leadership behaviors (the 3Es); these include:

“Our experience suggests that the most advanced leadership teams are those committed to developing these 3Es in their organisations,” added Carol Cohen, report coauthor and senior vice president, global head of talent management and leadership at Cognizant. “A key to success is artfully introducing new leadership approaches that particularly appeal to a new generation of employees while at the same time honoring the time-tested behaviors and attributes that inspire trust, build a sense of community, and motivate employees to improve performance.”

The authors caution that the primary leadership challenges in the digital economy are not solved by merely adopting a group of these 3E behaviors but require developing new mindsets that anchor, inform, and advance these behaviors. They identified four distinct mindsets that together constitute what they believe are the new leadership hallmarks in the digital economy and illustrate through data and case studies how they can shape successful leadership. They include:

The report also offers further recommendations for a new leadership playbook and briefs leaders on the need to articulate a powerful leadership narrative, build communities of leaders, demand diversity and inclusion, and align talent, leadership, and business strategies.

An average IT team spends 15% of its total time trying to sort through monitoring alerts as the gap between IT resources, cloud scale and complexity widens.

Software intelligence company Dynatrace has published the findings of an independent global survey of 800 CIOs, which highlights a widening gap between IT resources and the demands of managing the increasing scale and complexity of enterprise cloud ecosystems. IT leaders around the world are concerned about their ability to support the business effectively, as traditional monitoring solutions and custom-built approaches drown their teams in data and alerts that offer more questions than answers. The 2020 global report, “Top challenges for CIOs on the road to the AI-driven autonomous cloud”, is available for download.

CIO responses in the research indicate that, on average, IT and cloud operations teams receive nearly 3,000 alerts from their monitoring and management tools each day. With such a high volume of alerts, the average IT team spends 15% of its total available time trying to identify which alerts need to be focused on and which are irrelevant. This costs organizations an average of $1.5 million in overhead expense each year. As a result, CIOs are increasingly looking to AI and automation as they seek to maintain control and close the gap between constrained IT resources and the rising scale and complexity of the enterprise cloud.

Findings from the global report include:

IT is drowning in data

Traditional monitoring tools were not designed to handle the volume, velocity and variety of data generated by applications running in dynamic, web-scale enterprise clouds. These tools are often siloed and lack the broader context of events taking place across the entire technology stack. As a result, they bombard IT and cloud operations teams with hundreds, if not thousands, of alerts every day. IT is drowning in data as incremental improvements to monitoring tools fail to make a difference.

Existing systems provide more questions than answers

Traditional monitoring tools only provide data on a narrow selection of components from the technology stack. This forces IT teams to manually integrate and correlate alerts, filtering out duplicates and false positives, before manually identifying the underlying root cause of issues. As a result, IT teams’ ability to support the business and customers is greatly reduced, as they’re faced with more questions than answers.
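To make the manual work described above more concrete, here is a minimal Python sketch of two of those steps: collapsing duplicate alerts and grouping what remains by affected host. It assumes a hypothetical `Alert` record (source, host, check, timestamp) and an arbitrary 300-second suppression window. It is not how Dynatrace or any other product correlates alerts, and real root-cause analysis needs far richer topology data than a host name.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    # Hypothetical alert shape; real monitoring tools each use their own schema.
    source: str      # tool that raised the alert
    host: str        # affected component
    check: str       # what failed, e.g. "latency" or "cpu"
    timestamp: int   # seconds since the epoch


def deduplicate(alerts: list[Alert], window: int = 300) -> list[Alert]:
    """Drop an alert if the same host/check pair fired within the previous
    `window` seconds, regardless of which tool raised it."""
    last_seen: dict[tuple[str, str], int] = {}
    kept: list[Alert] = []
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        key = (alert.host, alert.check)
        previous = last_seen.get(key)
        if previous is None or alert.timestamp - previous > window:
            kept.append(alert)
        # Refresh on every occurrence, so a steady stream of repeats stays suppressed.
        last_seen[key] = alert.timestamp
    return kept


def group_by_host(alerts: list[Alert]) -> dict[str, list[Alert]]:
    """Group the surviving alerts per host, a crude stand-in for root-cause grouping."""
    groups: dict[str, list[Alert]] = defaultdict(list)
    for alert in alerts:
        groups[alert.host].append(alert)
    return dict(groups)


if __name__ == "__main__":
    raw = [
        Alert("tool_a", "db1", "latency", 0),
        Alert("tool_b", "db1", "latency", 60),    # same problem seen by a second tool
        Alert("tool_a", "web1", "cpu", 100),
        Alert("tool_a", "db1", "latency", 1000),  # recurs after the window has passed
    ]
    unique = deduplicate(raw)
    print(len(raw), "raw alerts ->", len(unique), "after deduplication")
    for host, items in group_by_host(unique).items():
        print(host, [a.check for a in items])
```

Running it reduces 4 raw alerts to 3: the second tool's report of the same db1 latency problem is suppressed, while the recurrence 940 seconds later is kept. Because `last_seen` is refreshed on every occurrence, an alert that keeps firing more often than once per window stays suppressed after its first appearance; measuring a fixed window from the first alert would be an equally reasonable choice.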

Precise, explainable AI provides relief

Organizations need a radically different approach to keep up with the transformation that’s taken place in their IT environments: an answers-based approach to monitoring, with AI and automation at its core.

“Several years ago, we saw that the scale and complexity of enterprise cloud environments was set to soar beyond the capabilities of today’s IT and cloud operations teams,” said Bernd Greifeneder, CTO and founder, Dynatrace. “We realized traditional monitoring tools and approaches wouldn’t come close to understanding the volume, velocity and variety of alerts that are generated today, which is why we reinvented our platform to be unlike any other. The Dynatrace® Software Intelligence Platform is a single platform with multiple modules, leveraging a common data model with a precise explainable AI engine at its core. Unlike other solutions, which just serve up more data on glass, it’s this combination that enables Dynatrace to deliver the precise answers and contextual causation that organizations need to succeed in taming cloud complexity and, ultimately, achieving AI-driven autonomous cloud operations.”

AVEVA has revealed global survey findings identifying the key investment drivers for digital transformation. The survey was conducted with 1,240 decision makers in ten countries in EMEA, North America and APAC across nine industry verticals.

The research identifies a strong demand across both industries and markets to implement advanced technologies such as Artificial Intelligence (AI) and data visualization to make sense of vast data streams in real time, with 75% of respondents globally prioritizing investment in AI and analytics.

The research also identified three key global investment priorities for organizations when it comes to embarking upon the digital transformation journey:

1. Making sense of data utilizing artificial intelligence and real time data visualization

· The research highlighted a strong demand for technologies that provide predictive outputs from large data flows, with AI and Analytics listed as the most important enabler (75%), closely followed by Real-Time Data Visualization (64%), Augmented, Virtual or Mixed Reality (60%) and Big Data Processing (59%).

· AI was a top three enabler across all industries globally, with the greatest importance assigned in Power and Utilities (81%) and Oil & Gas (particularly upstream 79% and midstream 78%).

· Japan (88%) and China (84%) prioritised AI highest, with the UK (79%) and US (77%) following closely behind.

2. Fostering collaboration through Advanced Process and Engineering Design

· Advanced Process and Engineering Design was the second most important technology (74%) and was in the top three technology priorities across all industries globally, scoring highest among Engineering, Procurement and Construction professionals.

· This was perceived as an essential technology for global production, ranked as the most important enabler for Marine Ship Building (75%), Buildings/Infrastructure (74%) and Packaged Goods (73%), with Oil and Gas and Energy all ranking the technology highly.

· Japan (85%) and Germany (82%) are early adopters, with high importance attributed across all regions.

3. Stepping up cyber security and safety capabilities

· Cyber security was the third most prioritised technology enabler (71%), in particular a focus for Mining (76%), Downstream Oil and Gas (75%), Power & Utilities (70%) and Marine (70%), and the highest priority for Planning & Scheduling specialists.

· Improving Safety and Security through technology investment was a priority across all regions, with the Middle East (68%), Australia (63%) and India (60%) particularly highlighting this issue.

For global corporates, the two most valuable assets are their people and their data. Businesses today have a great responsibility to protect employees and customers, with technology that provides foresight of critical failures before they occur. Lisa Johnston, CMO, AVEVA, commented: “As digital transformation moves to the forefront of the industrial agenda, the power of technology to unify data and break silos is allowing specialists to collaborate and change business models. The world’s most capital-intensive projects, from sustainable energy production and mining to smart factories and connected cities are now being designed, planned and delivered by global multidisciplinary teams all connected seamlessly through technology.”

Other findings from the research included:

· Asset Performance Managers (APM) were found to have the most demanding requirements for technology investment, needing a far-reaching product set and a visionary approach. APM was set apart from all other professional categories in the strength of its demand for technology to create new services or products, use data to drive new revenue streams and enable collaboration, with AI and Analytics its most important enabler. APM also identified technology as offering great potential to improve both safety and security and emergency response times.

· Face-to-face engagement and trust remain key vectors for driving sales success. Face-to-face meetings with a vendor, either at a vendor event or as an introductory meeting, are most influential across all categories, with personal referrals also particularly meaningful. Experience with a vendor is highly prioritized in the UK, China and India, while France, Japan and Australia place considerable weight on case studies from the vendor.

· Remote Operations Centers and Learning Management and Training scored highest for relevance across global industries. China and Japan see a Command Center as most relevant, while the US and Australia cite Supply Chain Optimization as key to their business.

· Delivering cost reduction and enhancing safety are prioritised by high-growth organisations. The countries prioritising cost reduction have scaled fast over the past decades: China (61%), India (58%) and the Middle East (60%) indicated that software solutions could deliver significant margin improvement in these geographies. This demand for greater efficiency was mirrored by a requirement to invest in technology to promote safety as these economies continue to mature (China 51%, UAE 68% and India 60%).

“This research was an opportunity to hear from both our existing as well as potential new customers across the globe and our findings mirror the growing demands for technology solutions in the market globally today. Emerging technologies like AI, Machine Learning and Edge Computing which are transforming the technology landscape with vast data streams delivering operable output as well as true business outcomes for our customers today,” concluded Lisa Johnston.

Infosys Digital Radar 2020 finds that few companies have progressed to the most advanced stages of digital transformation this year.

Businesses globally face a “digital ceiling” when it comes to digital transformation, according to new research from Infosys Knowledge Institute (IKI), the thought leadership and research arm of Infosys (NYSE: INFY), a global leader in next-generation digital services and consulting. The study reveals that businesses must change their mindsets to achieve sophisticated levels of digital maturity.

Infosys Digital Radar 2020 assessed the digital transformation efforts of companies on a Digital Maturity Index and found year-over-year progress in basic areas, such as digital initiatives to improve a company’s efficiency. However, most companies come up against a “digital ceiling” when trying to achieve the most advanced levels of maturity.

The report, which surveyed over 1,000 executives globally, ranked the most digitally advanced companies as “Visionaries”, followed by “Explorers” and then “Watchers.”

Companies know how to achieve moderate transformation success, with an 18 per cent increase in companies progressing this year from the lowest tier of Watchers to the middle Explorer tier. However, Explorers struggled to move into the top Visionary cluster, with the top tier remaining the same, indicating a “digital ceiling” to transformation efforts.

The Visionary cluster remains unchanged despite companies reporting fewer barriers to digital transformation than last year. Human, rather than technological, barriers are now the most persistent, with two of the top hurdles being a risk-averse corporate culture (35 per cent) and a lack of talent or skills (34 per cent).

How to break through the digital ceiling?

The research demonstrates that top performers break through the digital ceiling because they think differently.

Firstly, successful companies focus strongly on people, using digital transformation to make improvements centred on customers and employees.

Most companies (68 per cent) across the spectrum stated operational efficiency and increased productivity as a main transformation objective. But successful companies in the Visionary cluster are particularly motivated to make improvements for their employees. Nearly half of Visionaries describe “empowering employees” as a major business objective for transformation, compared with less than one third of Explorers and less than one fifth of Watchers.

Likewise, Visionaries have an increased focus on customer-centred initiatives, being significantly more likely than other clusters to undertake transformation to improve customer experience and engagement and to respond more quickly to customer needs.

Secondly, successful companies have a different mindset when it comes to transformation processes.

Traditional linear transformations result in long transformation timelines, meaning a company’s improvements are out of date by the time the process is complete. Instead, top performers demonstrate a cyclical mindset, implementing recurring rapid feedback loops to accelerate transformation and keep updates relevant. The Visionary cluster is far ahead of others in digital initiatives tied to quick cycles: 75 per cent operate at scale in Agile and DevOps, compared with an overall average of 34 per cent for the entire survey group.

Businesses overestimate tech barriers and underestimate the importance of a company’s mindset

The importance of culture and a cyclical transformation mindset to breaking through the digital ceiling was underestimated by businesses last year.

In the 2019 Digital Radar report, companies were asked to predict the biggest barriers to their transformation progress for the following year. This year’s Infosys Digital Radar 2020 compares these predictions to the actual challenges businesses faced in 2019.

Businesses reported dramatic declines in the impact that technological barriers have on their transformation progress, including:

However, businesses made much less progress against cultural barriers, including lack of change management capabilities (down 7 per cent) and lack of talent (down 6 per cent).

Progress across industries and geographies

Salil Parekh, CEO and MD at Infosys, commented: “We’ve seen enterprises successfully employ emerging technologies to optimise productivity and efficiency, but struggle at the next stage of digital maturity. Faster, better, and cheaper technology alone will not provide the improvements enterprises need. Our research has shown that companies which can keep pace with digital transformation are those that design digital initiatives to improve customer experiences and empower their employees, differentiating themselves and propelling their business to the most advanced levels of progress.”

Jeff Kavanaugh, VP and Global Head at Infosys Knowledge Institute, commented: “This year’s Digital Radar research revealed significant progress across transformation initiatives – however, traditional programme models are not keeping up with the rapid pace of market change and companies face a distinct barrier in reaching top levels of digital maturity.

“The most successful businesses in our survey have an employee focus and a circular transformation mindset, which enable top performers to kick off a virtuous cycle in the company. The result is a “living enterprise” that is constantly sensing, improving, and attuned to its customers and employees. This living enterprise is suited to serving a larger circle of stakeholders – employees, customers, suppliers, local communities, and larger society – not just shareholders.”

61% of CIOs say time spent on strategic planning increased in last 12 months.

The role of the CIO is evolving with more of a focus on revenue and strategy, according to the 2019 Global CIO Survey from Logicalis, a global provider of IT solutions. The study, which questioned 888 CIOs from around the world, found that 61% of CIOs have spent more time on strategic planning in the last 12 months whilst 43% are now being measured on their contribution to revenue growth.

The survey reveals that although CIOs are becoming more strategic and accountable, they are under pressure from reduced budgets and higher security risks. Almost half of respondents (48%) say that the time they spend on security defenses has increased in the last year, with CIOs spending 25% of their time on information and security compliance. The maintenance of technology remains a key aspect of the CIO’s role, with CIOs spending, on average, one-third (33%) of their time on day-to-day management of technology.

The increased strain is having a negative impact on CIOs’ enjoyment of their job. Almost half of CIOs (49%) believe their job satisfaction has decreased in the last 12 months, whilst 29% say their work/life balance has worsened. The expanded focus on strategy and revenue has had an impact on the amount of time CIOs are able to spend on innovation, with 30% saying it has decreased in the last 12 months.

Mark Rogers, CEO at Logicalis, said: “It is clear from these survey results that the role of the CIO has changed and is continuing to evolve. Digital transformation has impacted almost every industry, which has led to the role of the CIO increasing in importance. CIOs are now expected to be responsible for business performance at a strategic level which has added to the time that they are expected to spend on maintaining IT infrastructure.”

“This increase in strategic responsibility - should be embraced by businesses and CIOs alike - because technology does hold the key to unlocking competitive advantage and operational efficiency. However, these survey results are stark in their findings and show the increased amount of pressure being exerted on CIOs. Organisations must ensure their CIO is fully supported and has the necessary resources to carry out their job effectively. Businesses are pushing their CIOs to understand more about the line of business and input on strategy, whilst CIOs are still under pressure carrying out day-to-day activities. Clearly, this needs to be addressed.”

Nutanix has published the findings of its second global Enterprise Cloud Index survey and research report, which measures enterprise progress in adopting private, hybrid and public clouds. The new report found enterprises plan to aggressively shift investment to hybrid cloud architectures, with respondents reporting steady and substantial hybrid deployment plans over the next five years. The vast majority of 2019 survey respondents (85%) selected hybrid cloud as their ideal IT operating model.

For the second consecutive year, Vanson Bourne conducted research on behalf of Nutanix to learn about the state of global enterprise cloud deployments and adoption plans. The researcher surveyed 2,650 IT decision-makers in 24 countries around the world about where they’re running their business applications today, where they plan to run them in the future, what their cloud challenges are, and how their cloud initiatives stack up against other IT projects and priorities. The 2019 respondent base spanned multiple industries, business sizes, and the following geographies: the Americas; Europe, the Middle East, and Africa (EMEA); and Asia-Pacific and Japan (APJ).

This year’s report illustrated that creating and executing a cloud strategy has become a multidimensional challenge. At one time, a primary value proposition associated with the public cloud was substantial upfront capex savings. Now, enterprises have discovered that there are other considerations when selecting the best cloud for the business, and that no single cloud strategy fits all use cases. For example, while applications with unpredictable usage may be best suited to public clouds offering elastic IT resources, workloads with more predictable characteristics can often run on-premises at a lower cost than in the public cloud. Savings also depend on businesses’ ability to match each application to the appropriate cloud service and pricing tier, and to regularly review service plans and fees, which change frequently.
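The cost trade-off described above can be illustrated with a deliberately simple Python model. It assumes that on-premises capacity is sized for peak demand and paid for every hour, while public cloud capacity is billed only for the server-hours actually used, at a higher unit price. The prices and demand profiles are invented for illustration and are not figures from the Nutanix report; storage, network egress, staffing and discount tiers are all ignored.

```python
def on_prem_cost(hourly_demand: list[int], unit_cost: int) -> int:
    """Fixed capacity sized for the peak hour, paid for in every hour of the period."""
    return max(hourly_demand) * unit_cost * len(hourly_demand)


def public_cloud_cost(hourly_demand: list[int], unit_price: int) -> int:
    """Elastic capacity: pay only for the server-hours actually consumed."""
    return sum(hourly_demand) * unit_price


# Hypothetical prices in arbitrary currency units per server-hour; the cloud is
# assumed to charge a per-unit premium in exchange for its elasticity.
ON_PREM_UNIT_COST = 10
CLOUD_UNIT_PRICE = 16

steady = [10] * 24             # predictable: 10 servers all day
spiky = [2] * 20 + [40] * 4    # unpredictable: a short burst to 40 servers

for name, demand in [("steady", steady), ("spiky", spiky)]:
    onprem = on_prem_cost(demand, ON_PREM_UNIT_COST)
    cloud = public_cloud_cost(demand, CLOUD_UNIT_PRICE)
    cheaper = "on-premises" if onprem < cloud else "public cloud"
    print(f"{name}: on-prem {onprem}, cloud {cloud} -> {cheaper}")
```

With these made-up numbers the steady workload is cheaper on-premises (2400 versus 3840) and the spiky one is cheaper in the public cloud (3200 versus 9600). Changing the unit prices or the shape of demand can flip either result, which is why matching each application to the right service and pricing tier, and revisiting that choice as fees change, matters.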

In this ever-changing environment, flexibility is essential, and a hybrid cloud provides this choice. Other key findings from the report include:

“As organisations continue to grapple with complex digital transformation initiatives, flexibility and security are critical components to enable seamless and reliable cloud adoption,” said Wendy M. Pfeiffer, CIO of Nutanix. “The enterprise has progressed in its understanding and adoption of hybrid cloud, but there is still work to do when it comes to reaping all of its benefits. In the next few years, we’ll see businesses rethinking how to best utilise hybrid cloud, including hiring for hybrid computing skills and reskilling IT teams to keep up with emerging technologies.”

“Cloud computing has become an integral part of business strategy, but it has introduced several challenges along with it," said Ashish Nadkarni, group vice president of infrastructure systems, platforms and technologies at IDC. "These include security and application performance concerns and high cost. As the 2019 Enterprise Cloud Index report demonstrates, hybrid cloud will continue to be the best option for enterprises, enabling them to securely meet modernisation and agility requirements for workloads.”

Continuing unprecedented growth in the datacentre sector may be at risk due to increasing concerns around scarce resources and rising labour costs, according to the latest industry survey from Business Critical Solutions (BCS), the specialist professional services provider to the international digital infrastructure industry.

The Winter Report 2020, now in its 11th year, is undertaken by independent research house IX Consulting, which captures the views of over 300 senior datacentre professionals across Europe, including owners, operators, developers, consultants and end users. It is commissioned by BCS.

Just over two-thirds of respondents believe that the next year will see an increase in demand, up from 55% in the previous summer survey. This is supported by over 90% of developer and investor respondents stating that they expect to see further expansion in their data centre portfolio over the coming year.

However, many Design, Engineering and Construction (DEC) respondents are raising concerns about general shortages of design, construction and operational professionals, with four-fifths expressing resourcing concerns. DEC respondents identified build professionals as subject to the most serious shortages: 82% stated this view, compared with 78% for design professionals and 77% for data centre operational staff.

When asked to rank the impact of these shortages, respondents highlighted the increased workload placed on their existing staff (96%) and rising operating/labour costs (92%), with over 80% indicating that this has led to an increase in the use of outsourcing options over the past 12 months. The increased workload for existing staff had in turn led to problems in resourcing existing work, with just over 70% stating that they had experienced difficulties in meeting deadlines or client objectives.

James Hart, CEO at BCS (Business Critical Solutions) said: “At BCS we are currently doing the round of careers fairs looking for candidates for next year’s graduate and apprenticeship scheme. When we are talking to these young people we often find that they either haven’t even considered our sector and/or they have misconceived ideas about what this career path involves. We can address this by going into universities, colleges and schools telling STEM graduates about the data centre industry and how great it is. Without action, these issues will become more acute, so the rallying cry for 2020 is that the sector is an exciting place to be and we have to get out there and spread the word!”

New study finds that nearly 90% of organisations faced business email compromise (BEC) and spear phishing attacks in 2019.

Proofpoint has released its sixth annual global State of the Phish report, which provides an in-depth look at user phishing awareness, vulnerability, and resilience. Among the key findings, nearly 90 percent of global organisations surveyed were targeted with business email compromise (BEC) and spear phishing attacks, reflecting cybercriminals’ continued focus on compromising individual end users. Seventy-eight percent also reported that security awareness training activities resulted in measurable reductions in phishing susceptibility.

Proofpoint’s annual State of the Phish report examines global data from nearly 50 million simulated phishing attacks sent by Proofpoint customers over a one-year period, along with third-party survey responses from more than 600 information security professionals in the U.S., Australia, France, Germany, Japan, Spain, and the UK. The report also analyses the fundamental cybersecurity knowledge of more than 3,500 working adults who were surveyed across those same seven countries.

“Effective security awareness training must focus on the issues and behaviours that matter most to an organisation’s mission,” said Joe Ferrara, senior vice president and general manager of Security Awareness Training for Proofpoint. “We recommend taking a people-centric approach to cybersecurity by blending organisation-wide awareness training initiatives with targeted, threat-driven education. The goal is to empower users to recognise and report attacks.”

End-user email reporting, a critical metric for gauging positive employee behaviour, is also examined within this year’s report. The volume of reported messages jumped significantly year over year, with end users reporting more than nine million suspicious emails in 2019, an increase of 67 percent over 2018. The increase is a positive sign for infosec teams, as Proofpoint threat intelligence has shown a trend toward more targeted, personalised attacks over bulk campaigns. Users need to be increasingly vigilant in order to identify sophisticated phishing lures, and reporting mechanisms allow employees to alert infosec teams to potentially dangerous messages that evade perimeter defences.

Additional State of the Phish report global findings include the following takeaways. Specifics on North America, EMEA, and APAC are detailed within the report as well.

- More than half (55 percent) of surveyed organisations dealt with at least one successful phishing attack in 2019, and infosec professionals reported a high frequency of social engineering attempts across a range of methods: 88 percent of organisations worldwide reported spear-phishing attacks, 86 percent reported BEC attacks, 86 percent reported social media attacks, 84 percent reported SMS/text phishing (smishing), 83 percent reported voice phishing (vishing), and 81 percent reported malicious USB drops.

- Sixty-five percent of surveyed infosec professionals said their organisation experienced a ransomware infection in 2019; 33 percent opted to pay the ransom while 32 percent did not (together making up the 65 percent). Of those who negotiated with attackers, nine percent were hit with follow-up ransom demands, and 22 percent never got access to their data, even after paying a ransom.

- Organisations are benefitting from consequence models. Globally, 63 percent of organisations take corrective action with users who repeatedly make mistakes related to phishing attacks. Most infosec respondents said that employee awareness improved following the implementation of a consequence model.

- Many working adults fail to follow cybersecurity best practices. Forty-five percent admit to password reuse, more than 50 percent do not password-protect home networks, and 90 percent said they use employer-issued devices for personal activities. In addition, 32 percent of working adults were unfamiliar with virtual private network (VPN) services.

- Recognition of common cybersecurity terms is lacking among many users. In the global survey, working adults were asked to identify the definitions of the following cybersecurity terms: phishing (61 percent correct), ransomware (31 percent correct), smishing (30 percent correct), and vishing (25 percent correct). These findings spotlight a knowledge gap among some users and a potential language barrier for security teams attempting to educate employees about these threats. It’s critical for organisations to communicate effectively with users and empower them to be a strong last line of defence.

- Millennials continue to underperform other age groups in fundamental phishing and ransomware awareness, a reminder that organisations should not assume younger workers have an innate understanding of cybersecurity threats. Millennials had the best recognition of only one term: smishing.

A new report published by CREST looks for solutions to the increasing problems of stress and burnout among many cyber security professionals, often working remotely in high-pressure and under-resourced environments. CREST – the not-for-profit body that represents the technical security industry including vulnerability assessment, penetration testing, incident response, threat intelligence and SOC (Security Operations Centre) – highlights its concerns and says that more needs to be done to identify the early stages of stress and provide more support.

Recent statistics show that 30% of security team members experience tremendous stress, while 27% of CISOs admit stress levels greatly affect the ability to do their jobs and 23% say stress adversely affects relationships out of work.

“While most security professionals are passionate about what they do and thrive well under bouts of pressure, it is important to recognise when this healthy and positive stress becomes unhealthy and detrimental to performance and wellbeing, and where people are working remotely, as many are, it can be really difficult to spot because of a lack of support and communication,” says Ian Glover, president of CREST. “The problem can sometimes be compounded by the rise in complex attacks, long hours spent under a constant ‘state of alert’, the shortage of skills and pressure from senior management and regulators. Reported breaches are a frequent reminder of the business and reputational consequences if mistakes are made or malicious activity is missed.”

The report’s author, David Slade, a psychotherapist, points to the main stress warning signs to look out for, which include anxiety, lack of confidence, erratic decision-making, irritability, reduced concentration, poor time keeping and generally feeling overwhelmed. These factors can lead to bouts of insomnia, a decline in performance, increasing use of drugs or alcohol, over- or under-eating, taking more sick days, withdrawal, a loss of motivation and actual physical and mental exhaustion.

“As in many high-pressure professions, it is very rare for people in cyber security to seek professional help when feeling stressed or overwhelmed,” says David Slade. “We need to instil a culture of better communication and peer-to-peer support as well as encouraging practical measures such as taking regular breaks, exercise and holidays as well as introducing relaxation techniques such as mindfulness and having time set aside to discuss individual worries and concerns.”

The CREST report urges businesses and organisations to accept responsibility for easing staff stress levels by creating an organisational culture of openness at all levels and building a flexible environment in which individuals get encouragement, advice and support. This includes access to sources of advice on mental health issues, training tools and workshops, along with stress and burnout self-help videos. With the increasingly acute skills shortage in cyber security, CREST also believes that more automation can play a part in taking the strain off overworked staff, while the use of DevSecOps can help to move from a reactive approach to cyber security to a ‘security by design’ model.

“Management’s urgent task is to ensure that the organisation flourishes in a way that serves both the people outside and the people inside with a way of assessing how well the psychological needs of both groups are taken into account,” says Slade. “This would ensure that any change of structure or practice does not impinge on these needs.”

The CREST report was born out of research conducted among its members and an open Access to Cyber Day that included stress and burnout workshops. “The level of interest and engagement in putting the report together was a clear demonstration of both the growing concern around stress and burnout in the industry, and the willingness to do something about it,” adds Ian Glover. “If we want to retain the skills and experience we already have while also encouraging the best new talent into the cyber security industry, we need to recognise the problems and face up to the challenges to create exciting and stimulating careers while providing the right environment and support.”

As one of the leading types of cyber-attacks, ransomware is expected to dominate cybercrime in 2020. According to PreciseSecurity.com research, weak passwords were one of the most common cybersecurity vulnerabilities in 2019, causing 30% of ransomware infections that year.

Weak Passwords Are the Third Most Common Ransomware Cause Globally

The recent PreciseSecurity.com research revealed that phishing scams caused more than 67% of ransomware infections globally during the last year. Another 36% of Mail Protection Service users reported ransomware attacks caused by a lack of cybersecurity training. Weak passwords were the third most common cause of ransomware infections globally in 2019.

The 30% share of ransomware infections attributed to weak passwords last year points to a concerning lack of password security awareness. The 2019 Google survey about beliefs and behaviors around online security showed that two in three individuals recycle the same password across multiple accounts. More than 50% admitted using one "favorite" password for the majority of their accounts. Only one-third of respondents knew how to define a password manager.

Only 12% of US Online Users Take Advantage of Password Managers

The 2019 Statista survey reveals that 64% of US respondents consider stolen passwords the most concerning data privacy issue. However, such a high level of concern hasn't changed their habits for keeping track of login information. According to the findings, 43% of respondents said their primary method of keeping track of their most crucial login information was to write it down, and another 45% named memorizing the login data as their primary method. At the same time, only 12% of US online users take advantage of password managers.

23.2 Million Victim Accounts Globally Used 123456 as Password

Using hard-to-guess passwords is the first step in securing sensitive online information. However, according to the UK's National Cyber Security Centre 2019 survey, password re-use and weak passwords still represent a significant risk for companies and individuals all over the world.

The breach analysis indicated that 23.2 million victim accounts from all parts of the world used 123456 as a password. Another 7.8 million data breach victims chose 12345678, and more than 3.5 million people globally picked the word "password" to protect access to their sensitive information.
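One common countermeasure is to reject any password that appears on a list of commonly used or breached values. The sketch below is a minimal illustration under stated assumptions: the deny-list is hypothetical and contains only the three passwords named above, and the eight-character minimum is our own illustrative choice rather than anything taken from the survey. A real deployment would load a far larger list.

```python
# Hypothetical deny-list seeded only with the three passwords cited in the
# breach analysis; a real system would load a much larger breached-password list.
COMMON_PASSWORDS = frozenset({"123456", "12345678", "password"})

# Assumed minimum length for this illustration (not taken from the survey).
MIN_LENGTH = 8


def is_allowed(candidate: str) -> bool:
    """Return True if `candidate` passes both illustrative checks."""
    if len(candidate) < MIN_LENGTH:
        return False
    # Compare case-insensitively so "Password" and "PASSWORD" are rejected too.
    return candidate.lower() not in COMMON_PASSWORDS


for pw in ["123456", "12345678", "Password", "correct horse battery"]:
    verdict = "allowed" if is_allowed(pw) else "rejected"
    print(f"{pw!r}: {verdict}")
```

Checking against a deny-list catches exactly the passwords the breach data shows attackers try first, which a length rule alone would miss (12345678 is eight characters long).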

60% of breaches in 2019 involved vulnerabilities where available patches were not applied.

ServiceNow has released its second sponsored study on cybersecurity vulnerability and patch management, conducted with the Ponemon Institute. The study, “Costs and Consequences of Gaps in Vulnerability Response”, found that despite a 24% average increase in annual spending on prevention, detection and remediation in 2019 compared with 2018, patching is delayed by an average of 12 days due to data silos and poor organisational coordination. For the most critical vulnerabilities, the average time to patch is 16 days.

At the same time, the risk is increasing. According to the findings, there was a 17% increase in cyberattacks over the past year, and 60% of breaches were linked to a vulnerability for which a patch was available but not applied. The study surveyed almost 3,000 security professionals in nine countries to understand how organisations are responding to vulnerabilities. In this report, ServiceNow presents the consolidated findings and comparisons with its 2018 study, Today’s State of Vulnerability Response: Patch Work Requires Attention.

The survey results reinforce a need for organisations to prioritise more effective and efficient security vulnerability management:

The findings also indicate a persistent cybercriminal environment, underscoring the need to act quickly:

The report points to other factors beyond staffing that contribute to delays in vulnerability patching:

According to the findings, automation delivers a significant payoff in terms of being able to respond quickly and effectively to vulnerabilities. Four in five (80%) respondents who employ automation techniques say automation lets them respond to vulnerabilities in a shorter timeframe.

“This study shows the vulnerability gap that has been a growing pain point for CIOs and CISOs,” said Jordi Ferrer, Vice President and General Manager UK&I at ServiceNow. “Companies saw a 30% increase in downtime due to patching of vulnerabilities, which hurts customers, employees and brands. Many organisations have the motivation to address this challenge but struggle to effectively leverage their resources for more impactful vulnerability management. Teams that invest in automation and maturing their IT and security team interactions will strengthen the security posture across their organisations.”

Cloud Industry Forum finds that the Cloud is critical to more than eight in ten Digital Transformation projects.

Over a quarter (28%) of businesses in the UK now have a fully formed digital transformation strategy in place, and over half (54%) are in the process of implementing one, according to the latest research from Cloud Industry Forum (CIF). Critically, 82% of respondents see the Cloud as either very important or vital to their organisation’s digital strategy.

Launched today, the research, which was conducted by Vanson Bourne and surveyed UK-based IT and business decision-makers, sought to understand how they were exploiting cloud and other next generation technologies, and the barriers standing in the way of adoption.

Almost all of the respondents (98%) said that their digital transformation strategy is at least fairly clearly defined, with just over a third (34%) having full clarity. When asked if their organisation is doing enough to become fully digitised, 28% felt they were doing more than enough, and a further 56% said they were doing just enough. There is still work to be done, with a lack of skills (40%) and the perennial lack of budget (38%) cited as key hindrances to further and deeper digital transformation.

Alex Hilton, CEO, Cloud Industry Forum, stated: “Digital transformation is a well and truly established concept, with only a tiny minority of our sample not embracing it in some way. We are beginning to see greater clarity in the way leaders are formulating their strategies, but it does not mean it is time to rest on our laurels.

“There is still much that businesses can do to speed up processes, build efficiency and convince all leaders that digital is the way to go. Cloud’s role in all of this remains vital, given its emphasis on flexibility at a time when these qualities are more important than ever.”

Key findings include:

Alex Hilton, continued: “UK businesses clearly recognise the need for transformation and are gradually leaving legacy technologies behind in favour of next generation technologies as they pursue competitive advantage. Cloud is critical to this shift, thanks not only to the flexibility of the delivery model, but also the ease with which servers can be provisioned, which reduces financial and business risk. Furthermore, cloud’s ability to explore the value of vast unstructured data sets is next to none, which in turn is essential for IoT and AI.

“However, it’s clear that the majority of UK organisations are right at the start of this journey and many are being prevented from exploiting IoT, blockchain and AI due to skills shortages, a lack of vision, and, indeed, a lack of support from vendors. The research found that the lack of human resources, alongside a general skills shortage and a lack of budget, are the biggest challenges hampering further transformation.

“According to the research 56% of the sample cited that they are looking for strong, trusted relationships with suppliers, and a further 51% are looking for deep technical knowhow. The vendor community and the channel have a big role to play here, refining their service and support capabilities, and helping end users comprehend the transformative potential of these next generation technologies,” concluded Alex.

Worldwide IT spending is projected to total $3.9 trillion in 2020, an increase of 3.4% from 2019, according to the latest forecast by Gartner, Inc. Global IT spending is expected to cross into $4 trillion territory next year.

“Although political uncertainties pushed the global economy closer to recession, it did not occur in 2019 and is still not the most likely scenario for 2020 and beyond,” said John-David Lovelock, distinguished research vice president at Gartner. “With the waning of global uncertainties, businesses are redoubling investments in IT as they anticipate revenue growth, but their spending patterns are continually shifting.”

Software will be the fastest-growing major market this year, reaching double-digit growth at 10.5% (see Table 1). “Almost all of the market segments with enterprise software are being driven by the adoption of software as a service (SaaS),” said Mr. Lovelock. “We even expect spending on forms of software that are not cloud to continue to grow, albeit at a slower rate. SaaS is gaining more of the new spending, although licensed-based software will still be purchased and its use expanded through 2023.”

Table 1. Worldwide IT Spending Forecast (Billions of U.S. Dollars)

| Segment | 2019 Spending | 2019 Growth (%) | 2020 Spending | 2020 Growth (%) | 2021 Spending | 2021 Growth (%) |
|---|---|---|---|---|---|---|
| Data Center Systems | 205 | -2.7 | 208 | 1.9 | 212 | 1.5 |
| Enterprise Software | 456 | 8.5 | 503 | 10.5 | 556 | 10.5 |
| Devices | 682 | -4.3 | 688 | 0.8 | 685 | -0.3 |
| IT Services | 1,030 | 3.6 | 1,081 | 5.0 | 1,140 | 5.5 |
| Communications Services | 1,364 | -1.1 | 1,384 | 1.5 | 1,413 | 2.1 |
| Overall IT | 3,737 | 0.5 | 3,865 | 3.4 | 4,007 | 3.7 |

Source: Gartner (January 2020)
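Because the dollar figures in Table 1 are rounded to the nearest billion, recomputing growth rates from them is only a rough cross-check. The sketch below does that and also confirms that the segment rows add up to the Overall IT row to within rounding. Most recomputed rates agree with Gartner's to one decimal place; a few, most noticeably Data Center Systems, do not, presumably because Gartner works from unrounded figures.

```python
# Spending in billions of US dollars (2019, 2020, 2021), copied from Table 1.
SPENDING = {
    "Data Center Systems": (205, 208, 212),
    "Enterprise Software": (456, 503, 556),
    "Devices": (682, 688, 685),
    "IT Services": (1030, 1081, 1140),
    "Communications Services": (1364, 1384, 1413),
}
OVERALL = (3737, 3865, 4007)


def growth_pct(previous: float, current: float) -> float:
    """Year-over-year growth as a percentage."""
    return (current / previous - 1) * 100


for segment, (y2019, y2020, y2021) in SPENDING.items():
    print(f"{segment:<25} 2020: {growth_pct(y2019, y2020):5.1f}%  "
          f"2021: {growth_pct(y2020, y2021):5.1f}%")

# The segment rows should add up to the "Overall IT" row, give or take rounding.
for year_index, year in enumerate((2019, 2020, 2021)):
    total = sum(values[year_index] for values in SPENDING.values())
    print(f"{year}: segments sum to {total}, table reports {OVERALL[year_index]}")

print(f"Overall 2020 growth: {growth_pct(OVERALL[0], OVERALL[1]):.1f}%")
```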

Growth in enterprise IT spending for cloud-based offerings will be faster than growth in traditional (noncloud) IT offerings through 2022. Organizations that dedicate a high percentage of their IT spending to cloud adoption indicate where the next-generation, disruptive business models will emerge.

“Last quarter, we introduced the ‘and’ dilemma where enterprises are challenged with cutting costs and investing for growth simultaneously. Maturing cloud environments is an example of how this dilemma is alleviated: Organizations can expect a greater return on their cloud investments through cost savings, improved agility and innovation, and better security. This spending trend isn’t going away anytime soon.”

The headwind from a strong U.S. dollar has become a deterrent to IT spending on devices and data center equipment in affected countries. “For example, mobile phone spending in Japan will decline this year due to local average selling prices going up as a result of the U.S. dollar increasing. The U.K.’s spending on PCs, printers, servers and even external storage systems is expected to decline by 3%, too,” said Mr. Lovelock.

Although the device market showed the sharpest decline of all segments last quarter, it will return to overall growth in 2020 due to the adoption of new, less-expensive phone options from emerging countries. “The almost $10 billion increase in device spending in Greater China and Emerging Asia/Pacific is more than enough to offset the expected declines in Western Europe and Latin America,” said Mr. Lovelock.

Collaboration on the increase

Over the next two years, 50% of organizations will experience increased collaboration between their business and IT teams, according to Gartner, Inc. The dispute between business and IT teams over the control of technology will lessen as both sides learn that joint participation is critical to the success of innovation in a digital workplace.

“Business units and IT teams can no longer function in silos, as distant teams can cause chaos,” said Keith Mann, senior research director at Gartner. “Traditionally, each business unit has had its own technology personnel, which has made businesses reluctant to follow the directive of central IT teams. Increasingly, however, organizations now understand that a unified objective is essential to ensure the integrity and stability of core business. As a result, individuals stay aligned with a common goal, work more collaboratively and implement new technologies effectively across the business.”

Evolution of the Role of Application Leader

The role of application leader has changed significantly with the replacement of manual tasks by cloud-based applications in digital workplaces. The application leader must ensure that this transition is supported by appropriate skills and talent.

As more and more organizations opt for cloud-based applications, AI techniques such as machine learning, natural language processing (NLP), chatbots and virtual assistants are emerging as digital integrator technologies. “While the choice of integration technologies continues to expand, the ability to use designed applications and data structures in an integrated manner remains a complex and growing challenge for businesses. In such scenarios, application leaders need to deliver the role of integration specialists in order to ensure that projects are completed faster and at lower cost,” said Mr. Mann.

Application leaders will have to replace the command-and-control model with versatility, diversity and team engagement with key stakeholders. Application leaders must become more people-centric and provide critical support to digital transformation initiatives.

Additionally, in a digital workplace, it is the application leader’s responsibility to serve as the organizational “nerve center” by quickly sensing, responding to, and provisioning applications and infrastructures. “Application leaders will bring together business units and central IT teams to form the overall digital business team,” said Mr. Mann.

Much management work to be fully automated by 2024

Artificial intelligence (AI) and emerging technologies such as virtual personal assistants and chatbots are rapidly making headway into the workplace. Gartner, Inc. predicts that by 2024, these technologies will automate almost 69% of the manager’s workload.

“The role of manager will see a complete overhaul in the next four years,” said Helen Poitevin, research vice-president at Gartner. “Currently, managers often need to spend time filling in forms, updating information and approving workflows. By using AI to automate these tasks, they can spend less time managing transactions and can invest more time on learning, performance management and goal setting.”

AI and emerging technologies will undeniably change the role of the manager and will allow employees to extend their degree of responsibility and influence, without taking on management tasks. Application leaders focused on innovation and AI are now accountable for improving worker experience, developing worker skills and building organizational competency in responsible use of AI.

“Application leaders will need to support a gradual transition to increased automation of management tasks as this functionality becomes increasingly available across more enterprise applications,” said Ms. Poitevin.

AI to Foster Workplace Diversity

Nearly 75% of heads of recruiting reported that talent shortages will have a major effect on their organizations. Enterprises have been experiencing critical talent shortages for several years. Organizations need to consider people with disabilities, an untapped pool of critically skilled talent. Today, AI and other emerging technologies are making work more accessible for employees with disabilities.

Gartner estimates that organizations actively employing people with disabilities have 89% higher retention rates, a 72% increase in employee productivity and a 29% increase in profitability.

In addition, Gartner said that by 2023, the number of people with disabilities employed will triple, due to AI and emerging technologies reducing barriers to access.

“Some organizations are successfully using AI to make work accessible for those with special needs,” said Ms. Poitevin. “Restaurants are piloting AI robotics technology that enables paralyzed employees to control robotic waiters remotely. With technologies like braille-readers and virtual reality, organizations are more open to opportunities to employ a diverse workforce.”

By 2022, organizations that do not employ people with disabilities will fall behind their competitors.

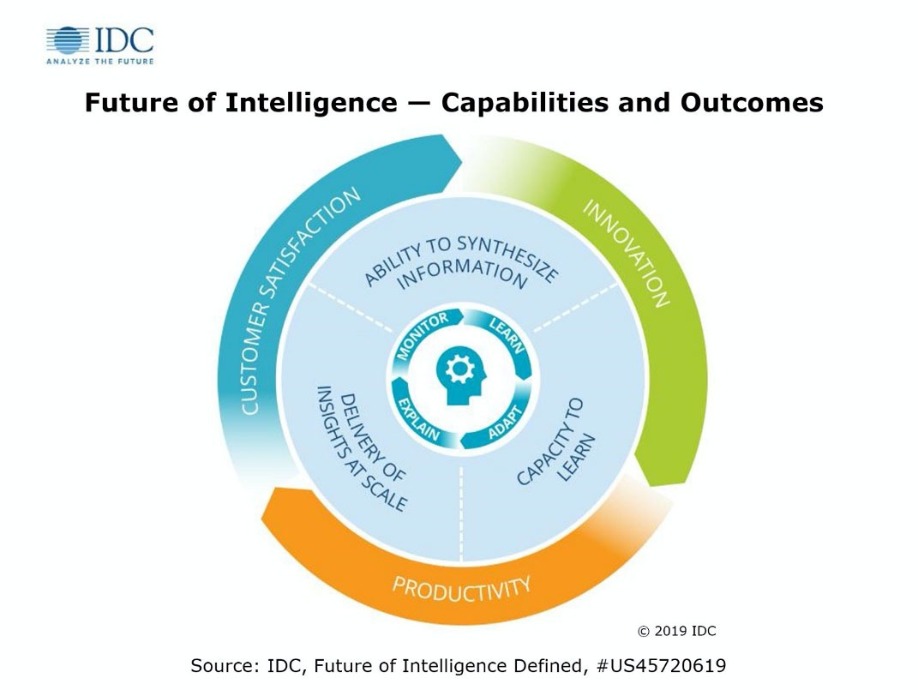

Over the past five years, International Data Corporation (IDC) has been documenting the rise of the digital economy and the digital transformation that enterprises must undergo to compete and succeed. Greater enterprise intelligence has become a top priority for business leaders on this transformation journey. By working with and observing such enterprises, IDC has developed a new Future of Intelligence framework, which provides insight and understanding for business leaders and technology suppliers.

IDC defines the future of intelligence as an organization's capacity to learn, combined with its ability to synthesize the information it needs in order to learn and to apply the resulting insights at scale. The ability to continuously learn at scale, and to apply that learning across the entire organization rather than in silos, faster than the competition, is the crucial differentiator that will separate enterprises with greater enterprise intelligence from their peers.

The capabilities needed in the drive towards the future of intelligence will depend on a platform that enables ongoing explanation, monitoring, learning, and adaptation that will drive economies of intelligence.

IDC predicts that over the next four to five years, enterprises that invest in future of intelligence capabilities effectively will experience a 100% increase in knowledge worker productivity, resulting in shorter reaction times, increased product innovation, and improved customer satisfaction, in turn leading to sustainable market share leadership (or achievement of their mission) in their industry. These enterprises will be able to:

"In 2019, enterprises globally spent $190 billion on data management, analytics, and AI technologies and services — not even including labor costs or purchases of external data. How much of that spending generated intelligence and how much of that investment generated value are questions many executives are unable to answer," said Dan Vesset, group vice president, Analytics and Information Management and IDC's Future of Intelligence research practice lead. "Enterprises that achieve economy of intelligence will have a competitive advantage just as those enterprises in the past that achieved economies of scale and scope had an advantage over their peers."

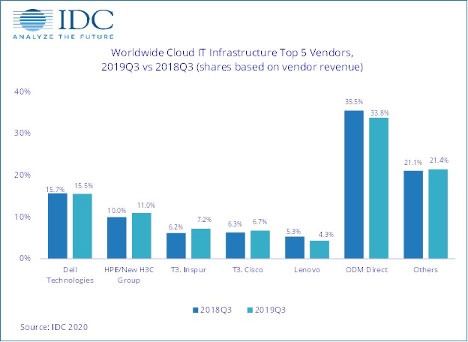

Cloud IT infrastructure revenues decline

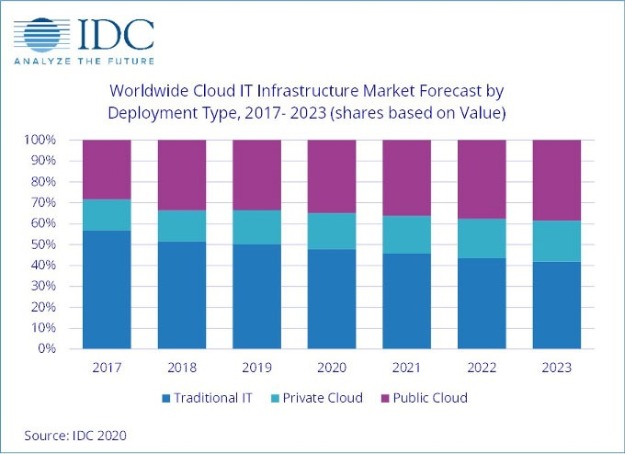

According to the International Data Corporation (IDC) Worldwide Quarterly Cloud IT Infrastructure Tracker, vendor revenue from sales of IT infrastructure products (server, enterprise storage, and Ethernet switch) for cloud environments, including public and private cloud, declined in the third quarter of 2019 (3Q19) as the overall IT infrastructure market continued to experience weakening sales following strong growth in 2018. The 1.8% year-over-year decline was much softer than in 2Q19, with overall spending on IT infrastructure for cloud environments reaching $16.8 billion. IDC slightly increased its forecast for total spending on cloud IT infrastructure in 2019 to $65.4 billion, which represents flat performance compared with 2018.

The decline in cloud IT infrastructure spending was driven by the public cloud segment, which was down 3.7% year over year to $11.9 billion; sequentially, this represents a 24.4% increase over 2Q19. Although the segment is generally trending up, it tends to be volatile from quarter to quarter because a significant part of public cloud IT spending comes from a few hyperscale service providers. This softness is in line with IDC's expectation of a slowdown in the segment in 2019 after a strong performance in 2018. Public cloud IT infrastructure spending is expected to reach $44 billion for the full year 2019, a decline of 3.3% from 2018. Despite this softness, public cloud continues to account for most of the spending on cloud IT environments. However, as demand for private cloud IT infrastructure increases, the share of public cloud IT infrastructure continued to decline in 2019 and will decline slightly throughout the forecast period. Spending on private cloud IT infrastructure has shown more stable growth since IDC started tracking sales of IT infrastructure products in various deployment environments. In 3Q19, vendor revenues from private cloud environments increased 3.2% year over year, reaching nearly $5 billion. IDC expects spending in this segment to grow 7.2% year over year in 2019 to $21.4 billion.
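The quarterly figures above can be cross-checked by undoing the quoted growth rates. The sketch assumes, as the tracker's definition suggests, that the $16.8 billion cloud total is simply public plus private cloud, so private cloud is the remainder. Since all inputs are rounded, the implied values are approximate.

```python
# Figures from the IDC tracker text, in US$ billions.
TOTAL_CLOUD_3Q19 = 16.8
PUBLIC_3Q19 = 11.9

# Assumption: cloud spending is public plus private, so private is the remainder.
private_3q19 = TOTAL_CLOUD_3Q19 - PUBLIC_3Q19

# Undo the quoted growth rates to recover the comparison quarters.
public_3q18 = PUBLIC_3Q19 / (1 - 0.037)   # public cloud was down 3.7% year over year
public_2q19 = PUBLIC_3Q19 / (1 + 0.244)   # and up 24.4% on the previous quarter

public_share_3q19 = PUBLIC_3Q19 / TOTAL_CLOUD_3Q19

print(f"Implied private cloud, 3Q19: ${private_3q19:.1f}B")
print(f"Implied public cloud, 3Q18:  ${public_3q18:.2f}B")
print(f"Implied public cloud, 2Q19:  ${public_2q19:.2f}B")
print(f"Public share of cloud spend, 3Q19: {public_share_3q19:.1%}")
```

The implied private cloud figure of about $4.9 billion is consistent with the "nearly $5 billion" quoted for 3Q19.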

As investments in cloud IT infrastructure continue to increase, with some swings up and down from quarter to quarter, the IT infrastructure industry is approaching the point where spending on cloud IT infrastructure consistently surpasses spending on non-cloud IT infrastructure. Before 3Q19 this had happened only once, in 3Q18; in 3Q19 cloud spending crossed the 50% mark for the second time since IDC started tracking IT infrastructure deployments, with cloud IT environments accounting for 53.4% of vendor revenues. However, for the full year 2019, spending on cloud IT infrastructure is expected to stay just below the 50% mark, at 49.8%. This year (2020) is expected to be the tipping point, with spending on cloud IT infrastructure staying in the 50+% range.

Across the three IT infrastructure domains, Ethernet switches are the only segment expected to deliver visible year-over-year growth in 2019, up 11.2%, while spending on compute platforms will decline 3.1% and spending on storage will grow just 0.8%. Compute will remain the largest category of cloud IT infrastructure spending, at $34.1 billion.

Sales of IT infrastructure products into traditional (non-cloud) IT environments declined 7.7% from a year ago in 3Q19. For the full year 2019, worldwide spending on traditional non-cloud IT infrastructure is expected to decline by 5.3%. By 2023, IDC expects that traditional non-cloud IT infrastructure will only represent 41.9% of total worldwide IT infrastructure spending (down from 51.6% in 2018). This share loss and the growing share of cloud environments in overall spending on IT infrastructure is common across all regions. While the industry overall is moving toward greater use of cloud, there are certain types of workloads and business practices, and sometimes end user inertia, which keep demand for traditional dedicated IT infrastructure afloat.

Geographically, the cloud IT infrastructure segment had a mixed performance in 3Q19. Declines in the U.S., Western Europe, and Latin America were driven by overall market weakness; in these and some other regions, 3Q19 softness in cloud IT infrastructure spending also reflected comparisons with a strong 3Q18. In Asia/Pacific (excluding Japan), the second largest geography after the U.S., spending on cloud IT infrastructure increased 1.2% year over year, which is low for this region but follows strong double-digit growth in 2018. Other growing regions in 3Q19 included Canada (4.9%), Central & Eastern Europe (4.6%), and Middle East & Africa (18.1%).

Top Companies, Worldwide Cloud IT Infrastructure Vendor Revenue, Market Share, and Year-Over-Year Growth, Q3 2019 (revenues in US$ millions)

| Company | 3Q19 Revenue (US$M) | 3Q19 Market Share | 3Q18 Revenue (US$M) | 3Q18 Market Share | 3Q19/3Q18 Revenue Growth |
|---|---|---|---|---|---|
| 1. Dell Technologies | $2,616 | 15.5% | $2,684 | 15.7% | -2.6% |
| 2. HPE/New H3C Group** | $1,846 | 11.0% | $1,708 | 10.0% | 8.0% |
| 3. Inspur/Inspur Power Systems* *** | $1,215 | 7.2% | $1,058 | 6.2% | 14.8% |
| 3. Cisco* | $1,133 | 6.7% | $1,079 | 6.3% | 5.0% |
| 5. Lenovo | $723 | 4.3% | $906 | 5.3% | -20.2% |
| ODM Direct | $5,687 | 33.8% | $6,087 | 35.5% | -6.6% |
| Others | $3,607 | 21.4% | $3,607 | 21.1% | 0.0% |
| Total | $16,827 | 100.0% | $17,129 | 100.0% | -1.8% |

Source: IDC's Quarterly Cloud IT Infrastructure Tracker, Q3 2019

Notes:

* IDC declares a statistical tie in the worldwide cloud IT infrastructure market when there is a difference of one percent or less in the vendor revenue shares among two or more vendors.

** Due to the existing joint venture between HPE and the New H3C Group, IDC reports external market share on a global level for HPE as "HPE/New H3C Group" starting from Q2 2016 and going forward.

*** Due to the existing joint venture between IBM and Inspur, IDC will be reporting external market share on a global level for Inspur and Inspur Power Systems as "Inspur/Inspur Power Systems" starting from 3Q 2018.
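The market shares in the table, and the statistical-tie rule in the first note, can be reproduced from the revenue column alone. In this sketch we read "a difference of one percent or less" as one percentage point of share, which is our interpretation rather than IDC's stated method. Recomputed growth for Dell (-2.5%) and HPE (8.1%) differs slightly from the published figures, presumably because IDC works from unrounded revenue.

```python
# Vendor revenue in US$ millions (3Q19, 3Q18), copied from the IDC table above.
REVENUE = {
    "Dell Technologies": (2616, 2684),
    "HPE/New H3C Group": (1846, 1708),
    "Inspur/Inspur Power Systems": (1215, 1058),
    "Cisco": (1133, 1079),
    "Lenovo": (723, 906),
    "ODM Direct": (5687, 6087),
    "Others": (3607, 3607),
}
# Named vendors in the order IDC ranks them.
RANKED = ["Dell Technologies", "HPE/New H3C Group",
          "Inspur/Inspur Power Systems", "Cisco", "Lenovo"]
# Assumption: "one percent or less" means one percentage point of share.
TIE_THRESHOLD_POINTS = 1.0

total_3q19 = sum(q19 for q19, _ in REVENUE.values())
total_3q18 = sum(q18 for _, q18 in REVENUE.values())


def share_pct(vendor: str) -> float:
    """3Q19 market share of `vendor`, in percent."""
    return REVENUE[vendor][0] / total_3q19 * 100


def growth_pct(current: float, previous: float) -> float:
    """Year-over-year revenue growth, in percent."""
    return (current / previous - 1) * 100


def is_statistical_tie(a: str, b: str) -> bool:
    """True if the two vendors' 3Q19 shares are within the tie threshold."""
    return abs(share_pct(a) - share_pct(b)) <= TIE_THRESHOLD_POINTS


for vendor in RANKED:
    q19, q18 = REVENUE[vendor]
    print(f"{vendor:<28} share {share_pct(vendor):4.1f}%  "
          f"growth {growth_pct(q19, q18):5.1f}%")

# Compare each vendor with the one ranked directly below it.
for higher, lower in zip(RANKED, RANKED[1:]):
    print(f"{higher} vs {lower}: statistical tie = {is_statistical_tie(higher, lower)}")

print(f"Total growth: {growth_pct(total_3q19, total_3q18):.1f}%")
```

Only the Inspur/Cisco pair falls within the threshold, which matches the two vendors sharing third place in the table.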

Long-term, IDC expects spending on cloud IT infrastructure to grow at a five-year compound annual growth rate (CAGR) of 7%, reaching $92 billion in 2023 and accounting for 58.1% of total IT infrastructure spend. Public cloud datacenters will account for 66.3% of this amount, growing at a 6% CAGR. Spending on private cloud infrastructure will grow at a CAGR of 9.2%.
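The five-year CAGR can be sanity-checked with the standard compound growth formula, (end / start)^(1/years) - 1. The sketch below makes one approximation of its own: it uses the $65.4 billion 2019 forecast as the 2018 base, since IDC describes 2019 as flat against 2018. It also splits the 2023 forecast using the quoted shares, treating everything that is not public cloud as private cloud.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1


# Assumption: 2018 cloud IT infrastructure spending is approximated by the
# $65.4 billion 2019 forecast, since IDC describes 2019 as flat against 2018.
base_2018 = 65.4        # US$ billions (approximate)
forecast_2023 = 92.0    # US$ billions

rate = cagr(base_2018, forecast_2023, years=5)
print(f"Implied five-year CAGR: {rate:.1%}")

# Split the 2023 forecast using the quoted shares.
public_2023 = forecast_2023 * 0.663          # public cloud datacenters: 66.3%
private_2023 = forecast_2023 - public_2023   # remainder treated as private cloud
total_infra_2023 = forecast_2023 / 0.581     # cloud is 58.1% of all IT infrastructure
print(f"Public cloud: ${public_2023:.1f}B, private cloud: ${private_2023:.1f}B")
print(f"Implied total IT infrastructure spend in 2023: ${total_infra_2023:.1f}B")
```

The implied rate of roughly 7.1% is consistent with the 7% CAGR IDC quotes.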

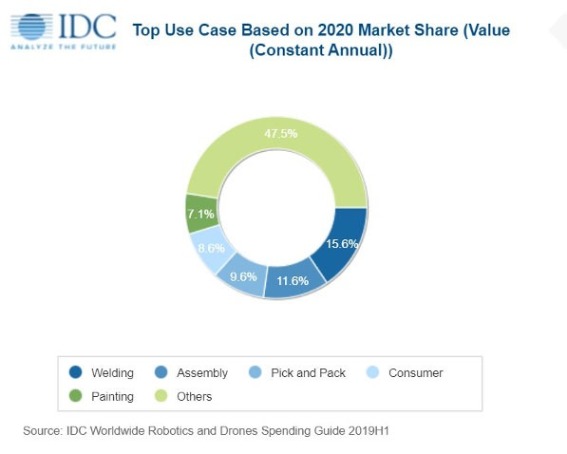

Spending on robotics systems and drones forecast to reach $128.7 billion in 2020

Worldwide spending on robotics systems and drones will be $128.7 billion in 2020, an increase of 17.1% over 2019, according to a new update to the International Data Corporation (IDC) Worldwide Robotics and Drones Spending Guide. By 2023, IDC expects this spending will reach $241.4 billion with a compound annual growth rate (CAGR) of 19.8%.

Robotics systems will be the larger of the two categories throughout the five-year forecast period, with worldwide robotics spending forecast to be $112.4 billion in 2020. Spending on drones will total $16.3 billion in 2020 but is forecast to grow at a faster rate (33.3% CAGR) than robotics systems (17.8% CAGR).

Hardware purchases will dominate the robotics market, with 60% of all spending going toward robotic systems, after-market robotics hardware, and system hardware. Purchases of industrial robots and service robots will total more than $30 billion in 2020. Meanwhile, robotics-related software spending will mostly go toward purchases of command and control applications and robotics-specific applications. Services spending will be spread across several segments, including systems integration, application management, and hardware deployment and support. Services spending is forecast to grow slightly faster (21.3% CAGR) than software spending (21.2% CAGR) and well ahead of hardware spending (15.5% CAGR).

"Software developments are among the most important trends currently shaping the robotics industry. Solution providers are progressively integrating additional software-based, often cloud-based, functionalities into robotics systems. An operational-centric example is an asset management application to monitor the robotic equipment performance in real-time. It aligns solutions with current expectations for modern operational technology (OT) at large and plays in facilitated adoption by operations leaders," said Remy Glaisner, research director, Worldwide Robotics: Commercial Service Robots. "Equally important is the early trend driven by burgeoning 'software-defined' capabilities for robotics and drone solutions. The purpose is to enable systems beyond some of the limitations imposed by hardware and to open up entirely new sets of commercially viable use-cases."

Discrete manufacturing will be responsible for nearly half of all robotics systems spending worldwide in 2020 with purchases totaling $53.8 billion. The next largest industries for robotics systems will be process manufacturing, resource industries, healthcare, and retail. The industries that will see the fastest growth in robotics spending over the 2019-2023 forecast are wholesale (30.5% CAGR), retail (29.3% CAGR), and construction (25.2% CAGR).

"Despite movement toward a trade agreement between the U.S. and China, it appears that tariffs may remain in place on many robotics systems. This will have a negative impact on both the manufacturing and resource industries, where robotics adoption has been strong. The additional duties will likely slow investment in the robotics systems used in manufacturing processes, automated supply chains, and mining operations," said Jessica Goepfert, program vice president, Customer Insights & Analysis.

Spending on drones will also be dominated by hardware purchases with more than 90% of the category total going toward consumer drones, after-market sensors, and service drones in 2020. Drone software spending will primarily go to command and control applications and drone-specific applications while services spending will be led by education and training. Software will see the fastest growth (38.2% CAGR) over the five-year forecast, followed closely by services (37.6% CAGR) and hardware (32.8% CAGR).

Consumer spending on drones will total $6.5 billion in 2020 and will represent nearly 40% of the worldwide total throughout the forecast. Industry spending on drones will be led by utilities ($1.9 billion), construction ($1.4 billion), and the discrete manufacturing and resource industries ($1.2 billion each). IDC expects the resource industry to move ahead of both construction and discrete manufacturing to become the second largest industry for drone spending in 2021. The fastest growth in drone spending over the five-year forecast period will come from the federal/central government (63.4% CAGR), education (55.9% CAGR), and state/local government (49.9% CAGR).

"We expect to see some price increases as drone manufacturers pass on the cost of tariffs imposed on the import/export of drones. The construction and resource industries will particularly feel the effects of these price increases. In contrast, many consumer drone manufacturers have chosen against raising prices and are absorbing the additional costs in order to maintain supply and to satisfy continuing consumer demand for drones. While the pending trade agreement offers some hope, these industries will face continued headwinds as long as tariffs remain in place," said Stacey Soohoo, research manager, Customer Insights & Analysis. "Elsewhere, robotics manufacturers will continue to face the one-two punch of higher costs for both materials and imported components."

On a geographic basis, China will be the largest region for drones and robotics systems, with overall spending of $46.9 billion in 2020. Asia/Pacific (excluding Japan and China) (APeJC) will be the second largest region with $25.1 billion in spending, followed by the United States ($17.5 billion) and Western Europe ($14.4 billion). China will also be the leading region for robotics systems, with $43.4 billion in spending this year. The United States will be the largest region for drones in 2020, with spending of nearly $5.7 billion. The fastest spending growth for robotics systems will be in the Middle East & Africa, which will see a five-year CAGR of 24.9%, followed closely by China with a CAGR of 23.5%. The fastest growth in drone spending will be in APeJC (78.5% five-year CAGR) and Japan (63.0% CAGR).

Blockchain has been capturing the imagination of both businesses and government organisations; however, it can be difficult to distinguish between the hype and the real potential of this technology.

By Srinath Perera, VP of Research, WSO2.

Blockchain promises to redefine trust: it lets us build decentralised systems where we do not need to trust the owners of the system. Likewise, blockchain lets previously untrusted parties establish trust quickly and efficiently. This enables developers to build novel applications that can work in untrusted environments. However, it is not readily apparent where blockchain use cases are feasible and can deliver clear value. Here at WSO2, we recently analysed blockchain’s viability using the Emerging Technology Analysis Canvas (ETAC), which takes a broad view of an emerging technology, probing its impact, feasibility, risks and future timelines.

It’s hard to talk about all the different blockchain use cases collectively and come to sensible conclusions, because they are many and varied, each with its own requirements and goals. Therefore, we began our analysis by grouping blockchain use cases into 10 categories, including digital currency, ledgers and lightweight financial systems, and surveying the feasibility of each. This enabled us to identify a number of common traits.

New versus old systems of trust

What we found was that most of the use cases we looked at have already been solved in some way. So, why do we need to implement blockchain?

The answer is that blockchain can provide a new kind of trust.

Traditional, pre-blockchain systems generally function effectively. However, they implicitly assume two kinds of trust. First, we trust the “super users” of centralised implementations: one person or a few individuals have deep access to the system and are deemed trustworthy. Alternatively, where the system is run by an organisation or government, we can reasonably assume that processes are in place to deter wrongdoing.

The second way we establish trust is through out-of-band means, such as signing a legal contract, obtaining a reference or providing a credit card to gain access. This is why most systems or ecosystems require you to provide credentials, created through some other channel, before you can access them.

Unlike traditional trust systems, blockchain-based systems can operate without either of the assumptions being true. Operating without the first assumption is known as decentralisation and doing so without the second is known as “dynamic trust establishment.” However, our ability to operate without these assumptions and achieve a new level of trust does not always mean that we should. We need to consider the trade-off between the cost of using blockchain and the potential return. This is not a technical decision, but one that looks at values and how much risk we’re willing to take.

Weighing the costs of blockchain

Our analysis identified several challenges. Some were technical and will likely be fixed in the future; others were risks that are inherent to blockchain and unlikely to change. The challenges are limited scalability and latency, limited privacy, storage constraints and unsustainable consensus (current consensus algorithms, for example, are slow and consume significant computing power). The risks include irrevocability, the absence of a regulator, misunderstood side effects, fluctuations in bitcoin prices and unclear regulatory responses.
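To make "unsustainable consensus" concrete, here is a toy proof-of-work, the style of consensus popularised by Bitcoin. The analysis does not name a specific algorithm, so this is a swapped-in illustration rather than anything WSO2 evaluated. A participant must find a nonce whose SHA-256 hash falls below a target; each extra bit of difficulty doubles the expected number of hashes, which is where the computing cost comes from. The function name and payload are hypothetical.

```python
import hashlib


def proof_of_work(data: bytes, difficulty_bits: int) -> tuple[int, int]:
    """Search for a nonce such that SHA-256(data + nonce) has at least
    `difficulty_bits` leading zero bits. Returns (nonce, hashes_tried)."""
    # A 256-bit digest below 2**(256 - d) has its top d bits equal to zero.
    target = 1 << (256 - difficulty_bits)
    nonce = 0
    while True:
        digest = hashlib.sha256(data + nonce.to_bytes(8, "big")).digest()
        if int.from_bytes(digest, "big") < target:
            return nonce, nonce + 1
        nonce += 1


for bits in (8, 12, 16):
    _, tries = proof_of_work(b"block payload", bits)
    # Expected work grows roughly as 2**bits hashes.
    print(f"{bits:2d} difficulty bits -> {tries} hashes")
```

Verifying a solution takes a single hash, while finding one takes on the order of 2^bits; real networks tune the difficulty far higher than this, which is why the energy bill is significant.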

We evaluated all of the use case categories in the context of blockchain’s challenges and risks and arrived at three levels of feasibility.

First, blockchain technology is ready for applications in digital currency, including initial coin offerings (ICOs); provenance, e.g. supply chains and other B2B scenarios; and disintermediation. We expect to see use cases in the next three years.
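Provenance works because each record commits to the one before it, so rewriting history is detectable. The sketch below shows only that hash-chaining property for a supply-chain log; it has no network, replication or consensus, so a single owner could still rebuild the entire chain, which is exactly the gap a real blockchain's decentralisation closes. `ProvenanceLog` and the pallet records are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class ProvenanceLog:
    """Toy append-only log where every entry includes the previous entry's hash."""
    entries: list = field(default_factory=list)

    @staticmethod
    def _hash(record: dict, prev_hash: str) -> str:
        # Canonical JSON so the same record always hashes the same way.
        payload = json.dumps({"record": record, "prev": prev_hash}, sort_keys=True)
        return hashlib.sha256(payload.encode()).hexdigest()

    def append(self, record: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        entry_hash = self._hash(record, prev_hash)
        self.entries.append({"record": record, "prev": prev_hash, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute every link; any edited record breaks the chain."""
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if self._hash(entry["record"], prev_hash) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = ProvenanceLog()
log.append({"item": "pallet-42", "event": "shipped", "by": "Supplier A"})
log.append({"item": "pallet-42", "event": "received", "by": "Distributor B"})
print(log.verify())  # True
log.entries[0]["record"]["by"] = "Supplier X"  # tamper with an earlier record
print(log.verify())  # False
```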

Second, ledgers (of identity, ownership, status and authority), voting and healthcare are feasible only for limited use cases where the technical limitations do not hinder them.

Third, for other use cases, such as lightweight financial systems, smart contracts, new internet apps and autonomous ecosystems, blockchain faces significant challenges, including performance, irrevocability, the need for regulation and a lack of consensus mechanisms. These are hard problems to fix and could take at least five to 10 years to resolve. In most cases, today’s centralised or semi-centralised solutions for establishing trust are faster, have more throughput and are cheaper than decentralised blockchain-based solutions.

So is blockchain worth it?

Neither the decentralisation nor the dynamic trust establishment enabled by blockchain is free. However, while true decentralisation is expensive, once in place, it makes dynamic trust establishment easy to implement.

Decentralisation can be useful in a number of scenarios, such as limiting government censorship and control, avoiding having a single organisation control critical systems, and preventing rogue employees from causing significant damage. It also enables system rules to be applied evenly to everyone and limits damage, because fewer user accounts are compromised in the event of a hack or system breach.

Moreover, the polarised arguments around blockchain’s value suggest there is no shared understanding of the value of decentralisation. Organisations express concern around the arbitrary power of governments as well as large organisations, but do they understand the trade-offs and additional resources required to attain higher trust? Similarly, privacy is a concern, but most of us share data daily in exchange for free access to the internet and social media platforms.

Clearly, decentralisation needs to be part of a policy decision that is taken only after wide discussion. On the one hand, in an increasingly software-controlled world, from banking to healthcare and autonomous cars, the risks associated with centralised systems are increasing. On the other hand, trying to attain full decentralisation could kill blockchain, because the cost would be prohibitive, especially if overly ambitious targets are set.

Fortunately, centralised versus decentralised does not have to be an all-or-nothing decision. Multiple levels of decentralisation are possible. For example, private blockchains are essentially semi-decentralised because any action requires consensus among a few key players. Therefore, it is important to critically examine each blockchain use case.
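As a rough illustration of why a private blockchain sits between the two extremes, the sketch below reduces its decision rule to "an action commits only when at least k of n known members approve it". This is a deliberate simplification: production permissioned ledgers use Byzantine-fault-tolerant or similar consensus protocols, and the `Consortium` class and member names here are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Consortium:
    """Hypothetical permissioned network: a fixed member list plus a quorum."""
    members: frozenset
    quorum: int

    def __post_init__(self) -> None:
        if not 1 <= self.quorum <= len(self.members):
            raise ValueError("quorum must be between 1 and the number of members")

    def commits(self, approvals: set) -> bool:
        # Votes from parties outside the consortium carry no weight.
        valid = approvals & self.members
        return len(valid) >= self.quorum


network = Consortium(members=frozenset({"BankA", "BankB", "BankC", "Regulator"}), quorum=3)
print(network.commits({"BankA", "BankB"}))               # False: only 2 approvals
print(network.commits({"BankA", "BankB", "Regulator"}))  # True: quorum reached
print(network.commits({"BankA", "BankB", "Outsider"}))   # False: outsider ignored
```

No single member can act alone, yet nobody outside the known group has a say: more decentralised than a single owner, far less than a public chain.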

Significant financial investments have been made in blockchain, but if the quest for a fully decentralised solution takes too long, it will put the future of blockchain at risk. This makes a good case for starting with a semi-decentralised approach to minimise risk and then striving for full decentralisation in a second phase.

A full analysis of the trade-offs

Blockchain provides mechanisms for establishing trust that reduce the risks associated with centralised systems and enable agility by automating the verification required to establish trust. However, compared to current decentralised or semi-decentralised blockchain solutions, centralised solutions are faster, have more throughput and cost less to implement. That said, as governments and market demands address the technical challenges blockchain faces, the associated costs and barriers to implementation will be reduced. In summary, deciding which blockchain use case to invest in and when requires deep critical analysis of all the trade-offs.

Blockchain. The hype was massive – businesses everywhere were going to be leveraging the benefits of blockchain technology – not just the gamers and the financial sector. While blockchain activity hasn’t stalled, neither does it seem to have gained the predicted, unstoppable market momentum just yet. So, what’s going on? We asked a range of industry experts for their take on blockchain’s potential in the business world. Part 1.