The January issue of Digitalisation World contains a substantial number of predictions for 2020 and beyond – following on from a similar series of articles in both our November and December 2019 issues. The good news is that…the February issue will conclude the predictions series. So, by then you’ll have a substantial quantity of ideas and opinions which will, hopefully, help you make your own decisions as to what technology developments and what business trends are worth investigating and/or implementing during the coming year.

If I might be allowed to add a prediction of my own? Well, I just do not believe that the hype around 5G will turn into serious deliverables for quite some time. By this I mean that, while 5G may well find deployments within organisations – whether in the office or on the factory floor – the wholesale rollout of 5G infrastructure across cities, regions and, ultimately, whole countries, is some way off, primarily because of the expense of building out the required infrastructure against a fairly unpredictable return.

Of course, it may be that our friends the hyperscalers come up with some killer apps and decide that, rather than wait for the telcos to do the expensive ‘heavy-lifting’, they’ll build out the infrastructure themselves, but it’s not immediately obvious what those apps might be.

Right now, as I may well have mentioned before, when I make the drive home from London to Wiltshire, as soon as I leave the M4 in Berkshire and join the A4 (still a major road) my mobile phone signal varies from an unreliable 4G, through 3G and GPRS to absolutely nothing. If the telcos don’t think it financially worth their while to provide decent mobile phone connectivity right now, why would they go to the expense of building out a 5G network for no obvious return?

And don’t be fooled into thinking that autonomous vehicles will be that killer app. The more I think about it, I just can’t see AVs ever gaining serious traction, unless, and this is a big unless, the insurance industry has a massive overhaul, and the automotive industry is prepared to cannibalise itself – or a new breed of vehicle manufacturers springs up.

So, the multi-billion car industry is going to stand by and watch as pretty much commoditised AVs replace the massive variety of vehicles currently available? They are barely accepting of electric vehicles…

However, even if AVs do gain momentum, there is still the issue of responsibility. The ‘dream’ AV experience is supposed to be one where a central pool of vehicles can be called upon when required. So, a vehicle is ordered for a night out, duly collects and delivers passengers to their required destination, and picks them up at the end of the night and drops them off home. And if a few drinks have been taken, then no worries, because the AV has everything under control.

Right now, AI-assisted vehicles do a great job of helping drivers manage their speed, their parking and various other tasks, but will any AV manufacturer, or, more importantly, an insurer, ever remove any shred of responsibility from the driver? Unlikely.

Oh, and as above, who’s going to build the required, reliable, resilient control network for those stretches of road which are used by a handful of cars a day?

And we haven’t even started to talk about the security aspect.

The vast majority (92%) of debt and equity investors surveyed expect the overall value of investment into Europe’s data centre infrastructure to increase over the next 24 months, according to research commissioned by DLA Piper and published today.

Data centres are used by organisations for the remote storage, processing and distribution of large amounts of data and are currently estimated to use 3-4% of the world’s power. According to DLA Piper’s report, European Data Centre Investment Outlook: Opportunities and Risks in the Months Ahead, investors anticipate an investment increase in data centres of between 10% and 29% over the next two years.

Data from Acuris in the report shows that the first half of 2019 has already seen a notable rise in investment - with EUR1 billion flooding into the data centres market in H1 alone, compared with a total of EUR1.5 billion for the whole of 2018.

Data centre investment levels in Europe have been impacted by Brexit uncertainty. All respondents agreed that it has negatively impacted the data centre infrastructure market since June 2016, with 56% of equity investors going as far as to say that the negative impact has been ‘significant’. On the flip side, the continuing weakness of sterling means UK assets may look like a bargain for Eurozone investors.

In an increasingly interconnected world, with an ever-expanding need for data storage facilities, respondents are expecting rent charges to increase for data centres with superior technology, with over a third expecting the increase to be 10% or more.

The majority of respondents chose Germany as the European country that will see the biggest growth in data centre project investment over the next 24 months. Investors also expect the UK to see some of the biggest investment growth in the industry, followed by the Netherlands and France.

Commenting on the findings, partner and head of the Infrastructure sector, EMEA and Asia Pacific, at DLA Piper, Martin Nelson-Jones, said: “Investment into European data centres has spiked recently, with transaction values reaching a new high. Figures for the first half of 2019 suggest strongly that another record year could be in sight. While not without risks, data centres are attractive to many infrastructure investors.”

Intellectual Property & Technology partner at DLA Piper, Anthony Day, said: “What makes data centres so attractive to many investors? Strong fundamentals help. While data centre investment can involve a higher level of risk as compared to other types of infrastructure assets, demand for big data, cloud computing, artificial intelligence and the Internet of Things is rising significantly. The macro trend is that these technologies drive significantly increased demand for data and digital services and, by extension, the buildings and equipment that make them possible.”

Survey with IDG reveals that empowering business units has led to more complex audits, unchecked costs and security vulnerabilities.

In a new report from IDG Connect and Snow Software, 67% of IT leaders said at least half of their spend is now controlled by individual business units. While most believe this is beneficial for their organisation, it presents new challenges when combined with increased cloud usage – 56% of IT leaders are concerned with hidden cloud costs and nearly 90% worry about the prospect of vendor audits within cloud environments. The survey, conducted to understand how the rise of infrastructure-as-a-service (IaaS) and democratised IT spending is impacting businesses, found that more than half of IT leaders expressed the need to gain better visibility of their IT assets and spending across their organisation.

Business Units Control a Significant Share of Tech Spend – Which is a Mixed Bag

Traditionally, technology purchasing and management were controlled by IT departments. The cloud and as-a-service models shifted this dynamic, enabling employees throughout the organisation to easily buy and use technology without IT’s involvement. IT leaders are embracing this trend, with 78% reporting that the shift in technology spending is a positive for their organisations. But decentralised IT procurement also creates new complexities for organisations as they try to manage their increasingly diverse IT estates.

The IT leaders in the study voiced concern that the shift in spending to business units:

In fact, more than three-quarters (78%) said audit preparation is growing increasingly complex and time-consuming.

Executives are Justified in Worrying About Audits

Results suggest that annual audits are now the rule rather than the exception – 73% of those surveyed said they have been audited by at least one software vendor in the past 12 months.

When asked which vendors they had been audited by within the last year, 60% said Microsoft, 50% indicated IBM and 49% pointed to SAP. Such enterprise software audits can put a tremendous strain on internal resources and result in six, seven and even eight-figure settlement bills.

The vast majority of IT leaders surveyed said they are concerned about the looming possibility of audits, specifically when it comes to IaaS environments. When asked if the thought of software vendor audits for licensed usage on the IaaS front worries them, 60% responded “yes, very much so” and 29% said they are somewhat concerned.

The Roles and Requirements for IT Have Changed

Survey respondents also voiced concern that with the decentralisation of IT spending within their organisations, they will be held responsible for something they currently can’t control. More than half (59%) said that in the next two years they need to gain better visibility of the IT estate. Slightly fewer (52%) said that, in the same timeframe, they would have to obtain an increased understanding of who is spending what on IT within the larger organisation.

“As the research highlights, the shift to cloud services coupled with democratised technology spend is fundamentally changing the way businesses and IT leaders need to operate,” said Sanjay Castelino, Chief Product Officer at Snow. “Empowering business units to get the technology they need is largely a positive development, but it creates challenges when it comes to visibility and control – and that can put organisations at risk of having problematic audits. It is more important than ever for organisations to have complete insight and manageability across all of their technology in the IT ecosystem.”

Over half of European manufacturers are implementing AI use cases in the sector with Germany a frontrunner on 69% AI adoption versus US at 28% and China at 11%.

A new report from the Capgemini Research Institute highlights that the European market is leading in terms of implementing Artificial Intelligence (AI) in manufacturing operations. 51% of top global manufacturers in Europe are implementing at least one AI use case. The research also analyzed 22 AI use cases in operations and found that manufacturers can focus on three use cases to kickstart their AI journey: intelligent maintenance, product quality control, and demand planning.

Capgemini’s report entitled ‘Scaling AI in manufacturing operations: A practitioners’ perspective’ analyzed AI implementation among the top 75 global organizations in each of four manufacturing segments: Industrial Manufacturing, Automotive, Consumer Products and Aerospace & Defense. The study found that AI holds tremendous potential for industries in terms of reduced operating costs, improved productivity, and enhanced quality. Top global manufacturers in Germany (69%), France (47%) and the UK (33%) are the frontrunners in terms of deploying AI in manufacturing operations, according to the research.

Key points from the report include:

AI is being utilized and making a difference across the operation value chain

Leading organizations are using AI across manufacturing operations to significant benefit. Examples include food company Danone[1] which has succeeded in reducing forecast errors by 20% and lost sales by 30% through using machine learning to predict demand variability. Meanwhile, tire manufacturer Bridgestone[2] has introduced a new assembly system based around automated quality control, resulting in over 15% improvement in uniformity of product.

Manufacturers tend to focus on three main use cases to kickstart their AI journey

According to the report, manufacturers start their AI in operations journey with three use cases (out of 22 unique ones identified in the study) as they possess an optimum combination of several characteristics that make them an ideal starting point. These characteristics include: clear business value, relative ease of implementation, availability of data and AI skills, among others. Executives interviewed by Capgemini commented that product quality control, intelligent maintenance, and demand planning are areas where AI can be most easily implemented and deliver the best return-on-investment. For instance, General Motors[3] piloted a system to spot signs of robotic failures before they occur. This helps GM avoid costs of unplanned outages which can be as high as $20,000 per minute of downtime. While there is consensus on which use cases are best to get started with AI in operations, the study also points out the challenge of scaling beyond the first deployments and then systematically harvesting the potential of AI beyond those initial use cases.

“As implementation of AI in manufacturing operations matures, we will see large enterprises transitioning from pilots to broader deployment,” said Pascal Brosset, Chief Technology Officer for Digital Manufacturing at Capgemini. “Quite rightly, organizations are initially focusing their efforts on use-cases that deliver the fastest, most-tangible return on investment: notably in automated quality inspection and intelligent maintenance.

“The executives we interviewed were clear that these are functions which can deliver considerable cost savings, improve the accuracy of manufacturing and eliminate waste. However, the leaders do not solely focus on these use cases but, in parallel with their deployment, prepare for the future by reinvesting part of the savings into building a scalable data/AI infrastructure and developing the supporting skills,” he further added.

The market for extended reality devices shows the typical signs of early volatility, but the long-term outlook for the technology remains positive.

The market for extended reality products, which comprises virtual reality (VR) and augmented reality (AR) devices, will enjoy 21% growth in 2019, to slightly more than 10 million devices, according to the latest forecast by technology analyst firm CCS Insight. Marina Koytcheva, vice president of forecasting, notes, "Although this growth rate might seem disappointing for a market that has had so much hype, it should be assessed pragmatically. Right now, there are still just a handful of successful devices, and a lot rides on every new iteration or new model brought to market by the established players Sony, HTC and Oculus".

CCS Insight believes that Sony is on track to record a solid 2019, and Facebook has also started gaining meaningful revenue from its Oculus devices. HTC, however, despite leading in the premium end of the market, looks set for less growth in 2019. But Koytcheva notes, "It's still early days in the nascent VR market and I have positive expectations for this exciting product category".

The recently published forecast projects that market demand will grow sixfold to 60 million units in 2023. Leo Gebbie, senior analyst at CCS Insight, covering wearables and extended reality devices, explains, "Our optimism is supported by our consumer research of early technology adopters: those who don't yet own a VR device show a strong willingness to buy an extended reality device within the next three years, particularly as more attractive and affordable products with richer content and experiences become available. I believe this is particularly good news for entry-level devices like the standalone Oculus Go headset, which retails at less than $200".

CCS Insight also notes that the variety of VR devices is growing. The company expects new products in 2020 from Oculus, HTC and others, including the highly anticipated Sony PlayStation 5, which is widely expected to be accompanied by an updated PlayStation VR headset.

A further boost to the VR market is coming from the advent of 5G mobile networks. In South Korea, currently the most advanced 5G market in the world in terms of adoption, mobile operators have successfully positioned 5G as a technology that can deliver an attractive VR experience. "Operators in other markets should be encouraged by the success of their South Korean counterparts, and we expect more consumer offerings to be built on the coupling of VR and 5G in the near future", says Koytcheva.

China will also play an important role in the growth of the VR market. The Chinese government's initiative to be a global leader in VR and AR technologies by 2025 will have an impact in the near future. Education is being targeted as one of the major sectors where VR should be adopted. This tallies with CCS Insight's prediction that extended reality will become a standard educational tool in schools in at least two countries by 2025.

"All these trends support our view that the VR market is just heating up. Some quarters will be better than others, but the direction of travel is onward and upward", concludes Koytcheva.

When it comes to AR, adoption by business users is picking up, although the numbers are currently very small. CCS Insight expects about 150,000 AR devices will be sold globally in 2019.

Adoption of AR devices in logistics and remote assistance continues to rise, and other industries are also starting to follow suit, with important vertical markets such as medicine, entertainment and travel beginning to show signs of growth. Importantly, end-to-end AR solutions, consisting of hardware, software and support, are also improving, making adoption much easier than it was a couple of years ago.

The consumer market for smart AR glasses, however, is still a few years away. Gebbie comments, "We've seen some very exciting products starting to emerge, but they're generally prototypes or early iterations of future device designs. It will be some time before mass-market products capable of delivering significant volume hit the market".

CCS Insight also notes that component miniaturization remains a major challenge. Unlike warehouse workers and other enterprise users, consumers are unlikely to be willing to accept anything heavier than, or much different in appearance from, traditional eyeglasses in their everyday lives. However, Gebbie concludes, "We're confident that this technology will improve rapidly, and predict that by 2022 a major consumer electronics brand will enter the consumer smart glasses market, opening the gates for strong growth in years to come".

98% say improved mobile access would benefit business outcomes.

Domo has released new research that finds business executives want greater access to company data on their mobile devices to power digital transformation across the enterprise. In fact, 98% of respondents believe that improved mobile access to decision-making data would benefit business outcomes.

Decision makers increasingly use mobile devices to conduct business but they often struggle to access the most up-to-date information while on the go. According to the study conducted by Dimensional Research, 96% of teams say it would help decision-making if stakeholders at all levels of the organization had access to up-to-the-minute data on their phones. 87% want to instantly share reports with their team and collaborate on their phones.

According to 2018 McKinsey & Company research1, deployment of mobile internet technology has emerged as the most impactful technology deployed in successful digital transformation efforts across organizations of all sizes. McKinsey’s research found that 68% of organizations that deployed mobile internet technologies reported successful digital transformations compared to 53% of companies that did not.

Other key findings about decision-making data on mobile devices from the Dimensional survey include:

“When teams have immediate access to their data, they can make better and more timely decisions,” said Josh James, founder and CEO, Domo. “The global mobile workforce2 is expected to reach 1.87 billion workers by 2022, and Domo is the best and easiest platform for customers to have the same experience with real-time data on a mobile device as they do on their laptop. Business leaders are spending more time away from their desks so that means they need data on their mobile devices to make key decisions on the go.”

The vast majority (94%) of environmental services managers in councils, local authorities, government or infrastructure agencies admit their organisation is yet to digitalise operations. Budget restrictions (47%) and outdated systems architecture (50%) are seen as two of the main barriers to digitalisation.

Nearly three quarters (72%) of those surveyed noted, however, that they expect the pace of digital transformation to accelerate in the next three to five years. Cloud architectures are expected to have the biggest impact in shaping the future of environmental services over that timeframe (42%), while mobile technologies were referenced by more than a third (35%) of respondents.

Artificial intelligence and machine learning were regarded as impactful by a third of the sample (33%), while Internet of Things (IoT) technologies, including sensors were close behind, with 25% of respondents citing them among the technologies likely to have the most impact in shaping the future of environmental services over the next three to five years.

Tim Woolven, product consultant at Yotta, said: “While most environmental services teams are well underway on their journey to digital or even nearing completion, there is still work to do. Outdated technology systems are seen as one of the main barriers but we would expect that to change over the coming years as mobile systems and cloud architectures become ever more pervasive and advanced technologies like AI, sensors and machine learning continue to mature”.

The study discovered that just under half (48%) of those polled expect improved operational efficiency to be one of the main benefits digital transformation would bring to their organisation’s environmental services provision in the future. Further to this, 44% cited ‘better employee morale’, clearly showing the importance of giving a workforce the latest technology to assist them in their roles. In total, 39% highlighted ‘enhanced data quality’ as a main benefit.

Major global data centre markets are seeing soaring construction costs as development in new and emerging hubs continues to heat up, according to research from global professional services company Turner & Townsend.

The Data Centre Cost Index 2019 highlights the intensification of investment in leading locations in the global data centre network as a trigger for escalating costs. Globally, over 40 per cent of markets surveyed are showing 'hot' construction conditions – where competition for supply chain resources is putting pressure on budgets.

The research analyses input costs – including labour and materials – across 32 key markets, alongside industry sentiment and insight from data centre professionals.

The 2019 report points to the rise of new hotspots across the globe as technological investment in emerging economies takes hold. In Nairobi, Kenya, average build costs stand at $6.5 US per watt on the back of investment required to meet the government’s focus on digitisation of the economy and in response to the arrival of tech giants.

Cost pressures are contributing to the growth of secondary markets in key geographies – including in the US and Europe. In California, Silicon Valley has risen to be the third most expensive place to build globally at a rate of $9.4 US per watt – with unprecedented construction market conditions. Inter-state competition to attract hyperscale investment in the US continues and the study indicates that construction costs in both Dallas and Phoenix ($7.4 US per watt and $7.1 US per watt respectively) are favourable over the world’s largest data centre market of Northern Virginia where costs stand on average at $8 US per watt.

European markets are seeing a significant shift, with capital costs of hyperscale development in the dominant markets of Scandinavia - in Stockholm ($8.6 US per watt) and Copenhagen ($8.5 US per watt) - now exceeding those of the established FLAP markets Frankfurt ($7.6 US per watt), London ($8.5 US per watt), Amsterdam ($7.8 US per watt) and Paris ($7.7 US per watt). Zurich remains the most expensive market to feature in the report but is also expected to be one of the hottest markets for Europe in 2020.

In this environment, respondents to Turner & Townsend’s survey view delivering within budget as a critical challenge, with 90 per cent seeing this as more important than innovation.

Global demand for new space looks set to continue into 2020, with just nine per cent of respondents to the research believing that data centre demands have been met in their markets in the last year – down from 12 per cent in 2018. 70 per cent of those surveyed highlighted the impact of data sovereignty and data protection acts – including those being brought in by the EU, Switzerland and Kenya – as a major catalyst for demand.

The most significant limiter on growth over the next five years is seen as availability of power, especially in the context of pressure on the industry to decarbonise. In Turner & Townsend’s survey, the industry is split 50:50 on whether technological advances with solid state batteries alongside green energy sources can render traditional fossil fuel generators obsolete.

Dan Ayley, global head of hi-tech and manufacturing at Turner & Townsend, said: “Data continues to be one of the most valuable commodities. As deals get bigger and more profitable, we are seeing investment in both established hot spots and emerging markets heat up – putting pressure on cost and resources.”

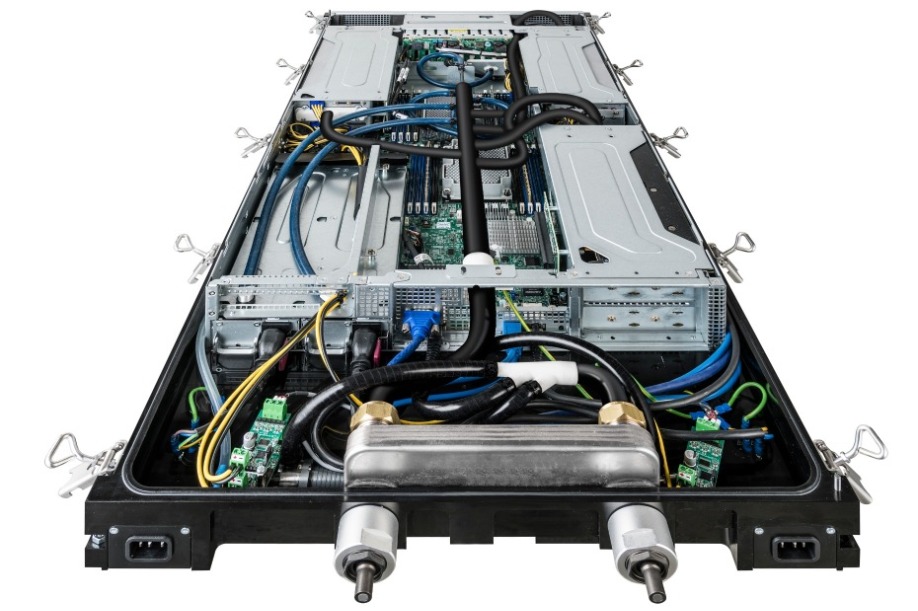

“Although our report points to certainty in delivery as the key issue for the sector across global markets, sustainability is one of the most pressing challenges coming down the track. With power density requirements for data centres increasing by as much as 50 per cent year on year, demonstrating steps towards decarbonisation needs to be a priority for how hubs are conceived, built and operated across their lifecycle.”

Leaseweb USA, a leading hosting and cloud services company, has released the results of its “Developer IaaS Insights Study,” based on a survey conducted at DeveloperWeek Austin. The research revealed that 61.4% of companies see hybrid cloud (31.6%) or private cloud (29.8%) as the infrastructure for the future of their company, and 75.9% of developers prioritized scalability, speed, ease of use and cost as top factors when choosing their IaaS hosting solution.

“As companies evolve, their hosting needs and capabilities also evolve,” said Lex Boost, CEO of Leaseweb USA. “Understanding why companies choose to migrate to an IaaS solutions vendor provides insight to not only the marketplace, but what value vendors can bring to the businesses. This survey is a microcosmic example of the current industry trend. The power, speed, flexibility and functionality of dedicated, hybrid and private cloud infrastructure environments are undeniable. Companies are shifting back to custom solutions designed to fit their exact needs, in this precise moment of their company lifecycle. The mettle of metal cannot be ignored.”

The results are particularly significant when considering that less than 20% of respondents believe they are using the industry standard, are happy with the performance of their infrastructure and have no plans to change. Further, 51.7% plan on migrating in the next two years while 26.7% have not yet made a decision as to whether they will migrate in the same timeframe. Clearly, most companies are reevaluating their DevOps infrastructures, and will either be migrating to solutions or considering solutions that more thoroughly meet their hosting and infrastructure requirements.

Barriers to Migration

To assist developers who are looking for the right fit for their company’s DevOps infrastructure needs, providers need to address the cost of migration and the size of the job, which rose to the top as barriers to migration and remain prohibitive factors. Cost of migration or the size of the job were cited as top barriers to migration by 37.5% of companies, while 24.2% of respondents identified cost alone as the top barrier.

The survey also revealed 15% aren’t sure what to outsource, 8.3% are not finding the right partner and 3.3% are held ransom by public cloud providers.

Global report examines latest trends in business communications and how they affect employee productivity and the bottom line.

Mitel has published the results of its latest global report on workplace productivity and business communications trends. The independent research study - conducted by research firm Vanson Bourne with advisement and analysis from KelCor, Inc - surveyed 2,500 business professionals in five countries across North America, Western Europe and Australia to examine overarching trends in business communications and how existing communications and collaboration practices are impacting both workforce productivity and the bottom line.

Key findings from the report:

The report also looked at which methods of communications and collaboration employees find most efficient and effective, offering helpful insights for IT and business leaders looking to ensure digital transformation initiatives and related technology investments are successful.

Given the significant waste surrounding resource costs and time, it’s no surprise that 74% of respondents felt that more effective use of technology within their organization would improve their personal productivity. However, the report brings to light several interesting findings on what is causing communications inefficiency and what organizations should consider to reduce it:

Businesses have an opportunity to lessen the impact of lost productivity by aligning their tools, processes and culture to achieve better results. When it comes to communications and collaboration, clear leadership, improved planning, effective training and employee education on the goals and benefits of the tools could have a strong positive impact on adoption and ROI.

Training remains a critical form of defence against cyber-attacks.

Organisations are leaving themselves unnecessarily exposed to significant security risks. This is according to data from Databarracks, which reveals that over two-thirds of IT decision-makers believe their employees regularly flout internal IT security policies.

With industry practitioners speculating on how the cyber security landscape will evolve in 2020, Peter Groucutt, managing director of Databarracks, highlights why training is still a critical form of defence against cyber-attacks.

“People are often the weakest link in the information security chain and to prevent your organisation being caught, it’s important you make employees aware of the risks. Our research has revealed two-thirds (67 per cent) of IT decision-makers believe their employees regularly circumvent company security policies.”

Groucutt continues, “Employees flouting security policies are never deliberately threatening the business – either they don’t know the possible consequences of their actions or feel too restricted by the policies in place. In any case, this neglect for security leaves an organisation exposed to threats.

“To reduce the danger, there are practical steps an organisation can take. Firstly, to develop a culture of shared responsibility, where the cyber security burden doesn’t just rest with the IT department. We understand this in the physical working environment – an unknown person would not be allowed to walk in to an office, and start taking belongings unchallenged – so why should digital security be any different?

“Secondly, lines of communication between the IT department and the rest of the business need to improve. For users to feel like they are part of the solution, they need to be aware of the ongoing battle IT face. Often, IT teams handle incidents in the background with only key senior individuals being informed, but if threats aren’t communicated internally to all employees, they won’t know how to change their behaviour in future. The IT department has a responsibility to educate the entire business on why an incident took place, what the implications were and, most importantly, what can be done to prevent this from happening again.”

Groucutt continues, “When security processes hinder an employee’s performance, they will often find a way to get around them to get a job done quicker. To avoid staff taking the easy route, security must be built into an organisation’s overall strategy and communicated down through employees’ objectives. Equally, IT need to be receptive when policies are flagged for being too restrictive. That creates the dialogue and an understanding of a shared goal for IT and users.

“Finally, regular training and education is vital. Awareness training is typically only carried out annually or as part of an initial induction, but this should be increased. Employees need ongoing security refreshers throughout the year, at least twice annually, to address any new threats, and ensure security remains front of mind.”

Almost a quarter of security leaders are experimenting with quantum computing strategies, as more than half worry it will outpace existing technologies.

More than half (54%) of cyber security professionals have expressed concerns that quantum computing will outpace the development of other security technologies, according to new research from the Neustar International Security Council (NISC). Keeping a watchful eye on developments, 74% of organisations admitted to paying close attention to the technology’s evolution, with 21% already experimenting with their own quantum computing strategies.

A further 35% of experts claimed to be in the process of developing a quantum strategy, while just 16% said they were not yet thinking about it. This shift in focus comes as the vast majority (73%) of cyber security professionals expect advances in quantum computing to overcome legacy technologies, such as encryption, within the next five years. Almost all respondents (93%) believe the next-generation computers will overwhelm existing security technology, with just 7% under the impression that true ‘quantum supremacy’ will never happen.

Despite expressing concerns that other technologies will be overshadowed, an overwhelming majority (87%) of CISOs, CSOs, CTOs and security directors are excited about the potential positive impact of quantum computing. The remaining 13% were more cautious and under the impression that the technology would do more harm than good.

“At the moment, we rely on encryption, which is possible to crack in theory, but impossible to crack in practice, precisely because it would take so long to do so, over timescales of trillions or even quadrillions of years,” said Rodney Joffe, Chairman of NISC and Security CTO at Neustar. “Without the protective shield of encryption, a quantum computer in the hands of a malicious actor could launch a cyberattack unlike anything we’ve ever seen.”

“For both today’s major attacks, and also the small-scale, targeted threats that we are seeing more frequently, it is vital that IT professionals begin responding to quantum immediately. The security community has already launched a research effort into quantum-proof cryptography, but information professionals at every organisation holding sensitive data should have quantum on their radar. Quantum computing's ability to solve our great scientific and technological challenges will also be its ability to disrupt everything we know about computer security. Ultimately, IT experts of every stripe will need to work to rebuild the algorithms, strategies, and systems that form our approach to cybersecurity,” added Joffe.

The latest NISC report also highlighted a steep two-year increase on the International Cyber Benchmarks Index. Calculated based on changes in the cybersecurity landscape – including the impact of cyberattacks and changing level of threat – November 2019 saw the highest score yet at 28.2. In November 2017, the benchmark sat at just 10.1, demonstrating an 18-point increase over the last couple of years.

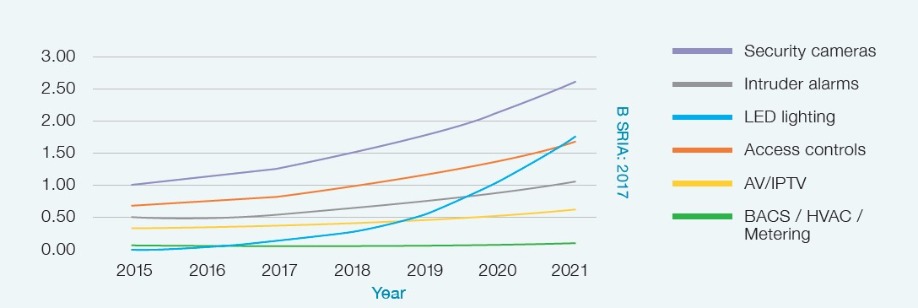

Rethink Technology Research finds that smart building penetration of the total commercial and industrial building stock will reach only 0.49% in the period, with huge room to grow.

The smart building market will grow to $92.5bn globally, by 2025, according to our research, up from around $4.2bn in 2019, with the primary driver being the desire to improve the productivity of the workers that are housed within those buildings’ walls. In most instances, no matter how you slice it, when you look at the costs of occupying a building in terms of square-meters, human capital is almost always the largest single component.

To this end, if you want to use smart building technologies to save costs or increase margins, the main use case you should be targeting is human productivity. While the technologies can certainly help manage operating costs, such as energy bills, or provide improved services such as secure access or usage analytics, on a per-dollar basis, these should not be the priority targets for new installations.

This is something of a surprise for many in the technology markets. We are accustomed to IoT technologies being used for process or resource optimization, such as smart metering providing better purchasing information for energy providers, or predictive maintenance helping to reduce operational costs and unplanned downtime.

This line of thinking is not typically extended to human workers, but when you evaluate how buildings are used, it becomes clear that getting more out of your workforce is a better use of your budget. To this end, the IoT technologies needed to better understand and optimize a building’s internal processes and the patterns of its workers are vital, and will account for a large number of the devices installed in the smart building sector.

A widely used rule of thumb, the ‘3-30-300 Rule’, was popularized by real estate firm JLL. The gist of it is that for every square-foot of space that a company occupies in a building, it will spend $3 annually on utilities, $30 on rent, and $300 on its payroll. Based on this ratio, you can see how smart building efforts should be coordinated. A 100% improvement in energy efficiency, which would halve the utilities bill, would only save $1.50 per square-foot per year, which is the equivalent of a 0.5% change in the payroll costs.
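The arithmetic behind that comparison can be sketched in a few lines of Python. This is a minimal illustration rather than JLL's own model: the function names are hypothetical, and it assumes that a 100% efficiency gain means the same work is done with half the energy.

```python
# Annual cost per square foot under the 3-30-300 rule of thumb (US$).
UTILITIES, RENT, PAYROLL = 3.0, 30.0, 300.0


def utility_saving(efficiency_gain: float) -> float:
    """Annual utilities saving per sq ft for a given efficiency gain.

    Assumption: a gain of 1.0 (100%) means the same output for half the
    energy, so the bill scales by 1 / (1 + gain).
    """
    return UTILITIES * (1 - 1 / (1 + efficiency_gain))


def payroll_equivalent(saving: float) -> float:
    """Express a per-sq-ft saving as a fraction of payroll cost."""
    return saving / PAYROLL


saving = utility_saving(1.0)
print(f"${saving:.2f} per sq ft, or {payroll_equivalent(saving):.1%} of payroll")
```

Even a doubling of energy efficiency moves the needle by only half a percent of payroll, which is why the rule steers attention towards productivity.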

Using the rule, JLL argued that if you were to reduce employee absenteeism by 10%, this would equate to $1.50 per square-foot annually, and a 10% improvement in employee retention would translate to an $11 per square-foot annual saving. If you were to increase employee productivity by 10%, this would translate to $65 per square-foot per year – and JLL points to the World Green Building Council’s (WGBC) claim that an 18-20% improvement is quite easily achieved in the right environment.

The WGBC published a quite influential meta-study back in 2013 that combined the findings of dozens of other pieces of research, to examine the impact of sustainable building design on employee health. JLL was interested in this from the productivity perspective, and the WGBC found that eight primary factors had direct positive impacts on building occupants – in this case, workers.

The factors were natural light, good air and ventilation, temperature controls, views, and green spaces. The WGBC then posited that the following increases in productivity could be achieved by making better use of the factors: better lighting (23% increase), access to green natural spaces (18%), improved ventilation (11%), and individual temperature control (3%). JLL calculated the returns based on an algorithm some of its real estate brokers developed, but of course, those figures aren’t applicable to every building or task.

Payroll, in the commercial sector at least, is usually north of 80% of a company’s operating costs, often much closer to 90%. Industrial output carries far higher materials costs, so JLL’s rule is less applicable there. However, given that the commercial sector accounts for around 63% of global GDP, with Industry on 30% and Agriculture at about 7%, the rule is still quite useful for evaluating the value of smart buildings across the spectrum.

To this end, if a company has a given budget to invest, it seems prudent to spend that cash on trying to make employees more productive, rather than save on energy bills. That’s a message that isn’t going to go down well in this environmentally-charged climate, but thankfully, many of the energy providers and associated systems integrators will install Demand Response (DR) and automation technologies through regular upgrade and replacement cycles, which will help optimize energy usage in these buildings.

Collectively, buildings and construction account for around 30-40% of global energy use and energy-related carbon dioxide emissions. Because of this, the per square-foot energy efficiency of buildings needs to improve by around 30% in order to meet the Paris Agreement environmental targets. By 2060, it is expected that the total buildings sector’s footprint will have doubled – reaching around 230bn square-meters.

This forecast examines the value of smart building technology globally, covering the proportion of the hardware that can be directly attributed to smart buildings, the associated software and management platform services, and installation and management related consulting. It does not try to forecast the total value created by the technology, nor the installation and upkeep revenues. That would be such a large number that it would not be useful.

In terms of market variation, we expect North America and Western Europe to be the strongest initial markets, with parts of APAC (China, Japan, South Korea) making up for the rest of that region’s low adoption. This is a pretty similar story to many of our other IoT forecasts, and there is no real reason to think that this one will be markedly different.

This is a trend that is going to take longer to emerge too, and we expect the years immediately after the forecast period to post some impressive growth. We foresee this market being more gradual than the explosive growth curves seen in other IoT markets, but due to its potential size, this slower penetration is not to be seen in a negative light.

Gartner, Inc. has highlighted the trends that infrastructure and operations (I&O) leaders must start preparing for to support digital infrastructure in 2020.

“This past year, infrastructure trends focused on how technologies like artificial intelligence (AI) or edge computing might support rapidly growing infrastructure and support business needs at the same time,” said Ross Winser, senior research director at Gartner. “While those demands are still present, our 2020 list of trends reflects their ‘cascade effects,’ many of which are not immediately visible today.”

During his presentation, Mr. Winser encouraged I&O leaders to take a step back from “the pressure of keeping the lights on” and prepare for 10 key technologies and trends likely to significantly impact their support of digital infrastructure in 2020 and beyond. They are:

Trend No. 1: Automation Strategy Rethink

In recent years, Gartner has detected a significant range of automation maturity across clients: Most organizations are automating to some level, in many cases attempting to refocus staff on higher-value tasks. However, automation investments are often made without an overall automation strategy in mind.

“As vendors continue to pop up and offer new automation options, enterprises risk ending up with a duplication of tools, processes and hidden costs that culminate to form a situation where they simply cannot scale infrastructure in the way the business expects,” said Mr. Winser. “We think that by 2025, top performing leaders will have employed a dedicated role to steward automation forward and invest to build a proper automation strategy to get away from these ad hoc automation issues.”

Trend No. 2: Hybrid IT Versus Disaster Recovery (DR) Confidence

“Today’s infrastructure is in many places: colocation, on-premises data centers, edge locations, and in cloud services. The reality of this situation is that hybrid IT will seriously disrupt your incumbent disaster recovery (DR) planning if it hasn’t already,” said Mr. Winser.

Often, organizations heavily rely on “as a service (aaS)” offerings, where it is easy to overlook the optional features necessary to establish the correct levels of resilience. For instance, by 2021, the root cause of 90% of cloud-based availability issues will be the failure to fully use cloud service provider native redundancy capabilities.

“Organizations are left potentially exposed when their heritage DR plans designed for traditional systems have not been reviewed with new hybrid infrastructures in mind. Resilience requirements must be evaluated at design stages rather than treated as an afterthought two years after deployment,” said Mr. Winser.

Trend No. 3: Scaling DevOps Agility

For enterprises trying to scale DevOps, action is needed in 2020 to find an efficient approach for success. Although individual product teams typically master DevOps practices, constraints begin to emerge as organizations attempt to scale the number of DevOps teams.

“We believe that the vast majority of organizations that do not adopt a shared self-service platform approach will find that their DevOps initiatives simply do not scale,” said Mr. Winser. “Adopting a shared platform approach enables product teams to draw from an I&O digital toolbox of possibilities, all the while benefiting from high standards of governance and efficiency needed for scale.”

Trend No. 4: Infrastructure Is Everywhere — So Is Your Data

“Last year, we introduced the theme of ‘infrastructure is everywhere’ that the business needs it. As technologies like AI and machine learning (ML) are harnessed as competitive differentiators, planning for how explosive data growth will be managed is vital,” said Mr. Winser. In fact, by 2022, 60% of enterprise IT infrastructures will focus on centers of data, rather than traditional data centers, according to Gartner.

“The attraction of moving selected workloads closer to users for performance and compliance reasons is understandable. Yet we are rapidly heading toward scenarios where these same workloads run across many locations and cause data to be harder to protect. Cascade effects of data movement combined with data growth will hit I&O folks hard if they are not preparing now.”

Trend No. 5: Overwhelming Impact of IoT

Successful IoT projects have many considerations, and no single vendor is likely to provide a complete end-to-end solution. “I&O must get involved in the early planning discussions of the IoT puzzle to understand the proposed service and support model at scale. This will avoid the cascade effect of unforeseen service gaps, which could cause serious headaches in future,” said Mr. Winser.

Trend No. 6: Distributed Cloud

Distributed cloud is defined as the distribution of public cloud services to different physical locations, while operation, governance, updates and the evolution of those services are the responsibility of the originating public cloud provider.

“Emerging options for distributed cloud will enable I&O teams to put public cloud services in the location of their choosing, which could be really attractive for leaders looking to modernize using public cloud,” said Mr. Winser.

However, Mr. Winser points out that the nascent nature of many of these solutions means a wide range of considerations must not be overlooked. “Enthusiasm for new services like AWS Outposts, Microsoft Azure Stack or Google Anthos must be matched early on with diligence in ensuring the delivery model for these solutions is fully understood by I&O teams who will be involved in supporting them.”

Trend No. 7: Immersive Experience

“Customer standards for the experience delivered by I&O capabilities are higher than ever,” said Mr. Winser. “Previous ‘value adds’ like seamless integration, rapid responses and zero downtime are now simply baseline customer expectations.”

Mr. Winser warned leaders that as digital business systems reach deeper into I&O infrastructures, the potential impact of even the smallest of I&O issues expands. “If the customer experience is good, you might grow in mind and market share over time; but if the experience is bad, the impacts are immediate and could potentially impact corporate reputation rather than just customer satisfaction.”

Trend No. 8: Democratization of IT

Low-code is a visual development approach to application development that is becoming increasingly appealing to business units. It enables developers of varied experience levels to create applications for web and mobile with little or no coding experience, largely driving a “self-service” model for business units instead of turning to central IT for a formal project plan.

“As low-code becomes more commonplace, the complexity of the IT portfolio increases. And when low-code approaches are successful, I&O teams will eventually be asked to provide service,” said Mr. Winser. “Starting now, it is in I&O leaders’ best interest to embed their support and exert influence over things that will inevitably affect their teams, as well as the broader organization.”

Trend No. 9: Networking — What’s Next?

In many cases, network teams have excelled in delivering highly available networks, which is often achieved through cautious change management. At the same time, the pace of change is tough for I&O to keep up with, and there are no signs of things slowing down.

Mr. Winser said that the continued pressure to keep the lights shining brightly has created unexpected issues for the network. “Cultural challenges of risk avoidance, technical debt and vendor lock-in all mean that some network teams face a tough road ahead. 2020 needs to be the time for cultural shifts, as investment in new network technologies is only part of the answer.”

Trend No. 10: Hybrid Digital Infrastructure Management (HDIM)

As the realities of hybrid digital infrastructures kick in, the scale and complexity of managing them is becoming a more pressing issue for IT leaders.

Organizations should investigate the concept of HDIM, which looks to address the primary management issues of a hybrid infrastructure. “This is an emerging area, so organizations should be wary of vendors who say they have tools that offer a single solution to all their hybrid management issues today. Over the next few years, though, we expect vendors focused on HDIM to deliver improvements that enable IT leaders to get the answers they need far faster than they can today.”

Infrastructure-led disruption will drive business innovation

By 2025, 60% of infrastructure and operations (I&O) leaders will drive business innovation using disruptive technologies, up from less than 5% who do so today, according to Gartner, Inc. This trend of infrastructure-led disruption will support the growth of the I&O function within the enterprise.

“As businesses face increased pressure to lower operating costs, many I&O leaders have been siloed into a tactical role rather than a strategic one — essentially, becoming custodians of legacy infrastructure,” said Katherine Lord, research vice president at Gartner. “The result is stunted I&O maturity over the past decade. I&O leaders who harness the power of disruptive technologies, such as cloud and artificial intelligence (AI), will discover new opportunities to serve as business innovators.”

Infrastructure-led disruption is the use of I&O technologies, processes, people, skills and capabilities to promote disruption and embrace risk. “I&O leaders who champion infrastructure-led disruption are constantly looking for new ways to use technology to deliver business value, rather than just remaining reactive to stakeholder needs,” said Ms. Lord.

New Technologies Make Way for I&O Maturity

IT infrastructure remains in a period of protracted change, spurred by new technologies that only increase the complexity of modern, distributed infrastructures. Recognizing the need for growth to remain relevant in the digital age, 45% of respondents in the Gartner 2019 I&O Executive Leaders Survey* indicated that improving maturity was among their top three goals for their I&O organizations. Embracing technologies such as automation, edge and quantum computing can help I&O leaders mature their infrastructure for the next wave of digital.

“Infrastructure-led disruption is about more than avoiding obsolescence,” said Ms. Lord. “It presents an opportunity for I&O to purposefully take an entrepreneur-like approach to extend the function beyond existing organization charts, and even reinvent the role itself. Keeping a pulse on emerging technologies will help center I&O as a part of the business innovation and market disruption they support.”

Evolve Talent, Culture and Practices for the Future of I&O

Through 2022, traditional I&O skills will be insufficient for more than half of the operational tasks that I&O leaders will be responsible for. Further, while 66% of I&O leaders believe that behaviors related to culture hinder their agility, 47% have not adapted these behaviors to align with their cultural and organizational transformation.

“I&O leaders are at a critical decision point, in which they can either choose to embrace disruption or sit in a ‘watch and wait’ mode,” said Ms. Lord. “For those who choose to take the proactive approach, new skills, talent and culture will be critical for driving change in the early stages.”

Strategic alignment and purposeful alliances with the C-suite will also be crucial for the future of I&O. As business becomes increasingly digital, I&O leaders have the chance to use their technology expertise to collaborate with other leaders on breakthrough opportunities.

“Digital transformation presents a real opportunity for I&O to align more closely with the CEO and other key business stakeholders, elevating the function to a more strategic role within the organization and helping the enterprise stay ahead of the curve when it comes to embracing disruptive technologies,” said Ms. Lord.

In its first worldwide 5G forecast, International Data Corporation (IDC) projects the number of 5G connections to grow from roughly 10.0 million in 2019 to 1.01 billion in 2023. This represents a compound annual growth rate (CAGR) of 217.2% over the 2019-2023 forecast period. By 2023, IDC expects 5G will represent 8.9% of all mobile device connections.
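For readers who want to check a growth rate like this one, the standard compound annual growth rate formula is easy to sketch. The snippet below is an illustration only: the `cagr` helper is a hypothetical name, and it uses the rounded connection counts quoted above, so it lands at roughly 217%, close to but not exactly on IDC's published figure.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate over `years` compounding periods."""
    return (end_value / start_value) ** (1 / years) - 1


# Rounded figures from the forecast: about 10.0 million 5G connections
# in 2019 and 1.01 billion in 2023, i.e. four compounding periods.
rate = cagr(10.0e6, 1.01e9, 2023 - 2019)
print(f"Implied CAGR: {rate:.1%}")
```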

Several factors will help to drive the adoption of 5G over the next several years:

"While there is a lot to be excited about with 5G, and there are impressive early success stories to fuel that enthusiasm, the road to realizing the full potential of 5G beyond enhanced mobile broadband is a longer-term endeavor, with a great deal of work yet to be done on standards, regulations, and spectrum allocations," said Jason Leigh, research manager for Mobility at IDC. "Despite the fact that many of the more futuristic use cases involving 5G remain three to five years from commercial scale, mobile subscribers will be drawn to 5G for video streaming, mobile gaming, and AR/VR applications in the near term."

In addition to building out the 5G network infrastructure, mobile network operators will have a lot to do to ensure a return on their investment:

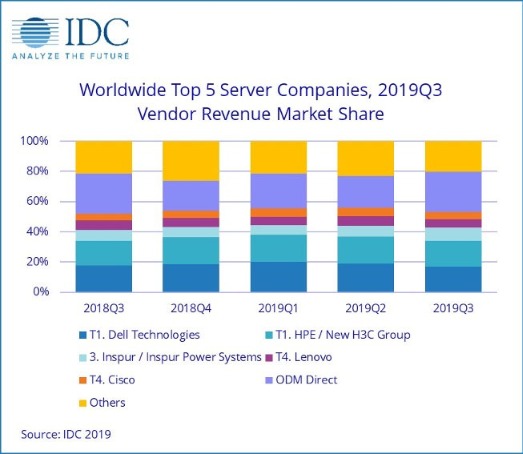

According to the International Data Corporation (IDC) Worldwide Quarterly Server Tracker, vendor revenue in the worldwide server market declined 6.7% year over year to $22.0 billion during the third quarter of 2019 (3Q19). Worldwide server shipments declined 3.0% year over year to just under 3.1 million units in 3Q19.

In terms of server class, volume server revenue was down 4.0% to $17.9 billion, while midrange server revenue declined 14.3% to $3.0 billion and high-end systems contracted by 23.7% to $1.1 billion.
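The year-over-year and market share percentages in this report follow from simple ratios. As a rough sketch, with hypothetical helper names and the total and Dell Technologies revenue figures taken from IDC's vendor revenue table further down, the headline numbers can be recomputed like this:

```python
def yoy_growth(current: float, previous: float) -> float:
    """Year-over-year change as a fraction of the prior-year value."""
    return (current - previous) / previous


def market_share(vendor: float, total: float) -> float:
    """A vendor's revenue as a fraction of total market revenue."""
    return vendor / total


# Revenue in US$ millions for 3Q19 and 3Q18, as reported by IDC.
total_3q19, total_3q18 = 21_994.3, 23_579.1
dell_3q19, dell_3q18 = 3_779.3, 4_236.0

print(f"Market growth: {yoy_growth(total_3q19, total_3q18):.1%}")
print(f"Dell growth:   {yoy_growth(dell_3q19, dell_3q18):.1%}")
print(f"Dell share:    {market_share(dell_3q19, total_3q19):.1%}")
```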

"While the server market did indeed decline last quarter, next generation workloads and advanced server innovation (e.g., accelerated computing, storage class memory, next generation I/O, etc.) keep demand for enterprise compute at near historic highs," said Paul Maguranis, senior research analyst, Infrastructure Platforms and Technologies at IDC. "In fact, 3Q19 represented the second biggest quarter for global server unit shipments in more than 16 years, eclipsed only by 3Q18."

Overall Server Market Standings, by Company

Dell Technologies and the combined HPE/New H3C Group ended 3Q19 in a statistical tie* for the number one position with 17.2% and 16.8% revenue share, respectively. Revenues for Dell Technologies declined 10.8% year over year while HPE/New H3C Group was down 3.2% year over year. The third ranking server company during the quarter was Inspur/Inspur Power Systems, which captured 9.0% market share and grew revenues 15.3% year over year. Lenovo and Cisco ended the quarter tied* for the fourth position with 5.4% and 4.9% revenue share, respectively. Lenovo saw revenue decline by 16.9% year over year and Cisco saw its revenue grow 3.1% year over year.

The ODM Direct group of vendors accounted for 26.4% of total revenue and declined 7.1% year over year to $5.82 billion. Dell Technologies led the worldwide server market in terms of unit shipments, accounting for 16.4% of all units shipped during the quarter.

Top 5 Companies, Worldwide Server Vendor Revenue, Market Share, and Growth, Third Quarter of 2019 (Revenues are in US$ Millions)

| Company | 3Q19 Revenue | 3Q19 Market Share | 3Q18 Revenue | 3Q18 Market Share | 3Q19/3Q18 Revenue Growth |
| --- | --- | --- | --- | --- | --- |
| T1. Dell Technologies* | $3,779.3 | 17.2% | $4,236.0 | 18.0% | -10.8% |
| T1. HPE/New H3C Group (a)* | $3,690.8 | 16.8% | $3,811.3 | 16.2% | -3.2% |
| 3. Inspur/Inspur Power Systems (b) | $1,973.3 | 9.0% | $1,711.8 | 7.3% | 15.3% |
| T4. Lenovo* | $1,188.3 | 5.4% | $1,430.1 | 6.1% | -16.9% |
| T4. Cisco* | $1,073.2 | 4.9% | $1,041.0 | 4.4% | 3.1% |
| ODM Direct | $5,816.0 | 26.4% | $6,257.2 | 26.5% | -7.1% |
| Rest of Market | $4,473.4 | 20.3% | $5,091.7 | 21.6% | -12.1% |
| Total | $21,994.3 | 100% | $23,579.1 | 100% | -6.7% |

Source: IDC Worldwide Quarterly Server Tracker, December 5, 2019.

Notes:

* IDC declares a statistical tie in the worldwide server market when there is a difference of one percent or less in the share of revenues or unit shipments among two or more vendors.

a Due to the existing joint venture between HPE and the New H3C Group, IDC will be reporting external market share on a global level for HPE and New H3C Group as "HPE/New H3C Group" starting from 2Q 2016.

b Due to the existing joint venture between IBM and Inspur, IDC will be reporting external market share on a global level for Inspur and Inspur Power Systems as "Inspur/Inspur Power Systems" starting from 3Q 2018.

Top 5 Companies, Worldwide Server Unit Shipments, Market Share, and Growth, Third Quarter of 2019 (Shipments are in units)

| Company | 3Q19 Unit Shipments | 3Q19 Market Share | 3Q18 Unit Shipments | 3Q18 Market Share | 3Q19/3Q18 Unit Growth |
| --- | --- | --- | --- | --- | --- |
| 1. Dell Technologies | 502,306 | 16.4% | 559,156 | 17.7% | -10.2% |
| 2. HPE/New H3C Group (a) | 452,255 | 14.7% | 456,294 | 14.4% | -0.9% |
| 3. Inspur/Inspur Power Systems (b) | 314,975 | 10.3% | 283,613 | 9.0% | 11.1% |
| 4. Lenovo | 204,040 | 6.6% | 193,121 | 6.1% | 5.7% |
| T5. Huawei* | 156,150 | 5.1% | 187,860 | 5.9% | -16.9% |
| T5. Super Micro* | 140,171 | 4.6% | 169,320 | 5.4% | -17.2% |
| ODM Direct | 896,625 | 29.2% | 871,476 | 27.5% | 2.9% |
| Rest of Market | 403,078 | 13.1% | 443,543 | 14.0% | -9.1% |
| Total | 3,069,601 | 100% | 3,164,383 | 100% | -3.0% |

Source: IDC Worldwide Quarterly Server Tracker, December 5, 2019.

Notes:

* IDC declares a statistical tie in the worldwide server market when there is a difference of one percent or less in the share of revenues or shipments among two or more vendors.

a Due to the existing joint venture between HPE and the New H3C Group, IDC will be reporting external market share on a global level for HPE and New H3C Group as "HPE/New H3C Group" starting from 2Q 2016.

b Due to the existing joint venture between IBM and Inspur, IDC will be reporting external market share on a global level for Inspur and Inspur Power Systems as "Inspur/Inspur Power Systems" starting from 3Q 2018.
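IDC's statistical tie rule, as described in the notes, can be expressed as a simple threshold check. The sketch below is an interpretation rather than IDC's methodology: it assumes "one percent or less" means one percentage point of share, and the function name is hypothetical. It is applied here to the 3Q19 revenue shares of the top five vendors.

```python
from itertools import combinations


def is_statistical_tie(share_a: float, share_b: float, threshold: float = 1.0) -> bool:
    """True when two market shares (in percent) differ by `threshold` points or less.

    Assumption: "one percent or less" is read as one percentage point.
    """
    return abs(share_a - share_b) <= threshold


# 3Q19 revenue share (%) of the top five vendors, from IDC's revenue table.
revenue_share = {
    "Dell Technologies": 17.2,
    "HPE/New H3C Group": 16.8,
    "Inspur/Inspur Power Systems": 9.0,
    "Lenovo": 5.4,
    "Cisco": 4.9,
}

# Compare every pair of vendors and keep those within the tie threshold.
ties = [
    (a, b)
    for (a, share_a), (b, share_b) in combinations(revenue_share.items(), 2)
    if is_statistical_tie(share_a, share_b)
]

for a, b in ties:
    print(f"{a} and {b} are in a statistical tie")
```

With these shares the check flags the same two pairs that IDC marks with an asterisk: Dell Technologies with HPE/New H3C Group, and Lenovo with Cisco.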

Top Server Market Findings

On a geographic basis, Asia/Pacific (excluding Japan) (APeJ) and Japan were the only regions to show growth in 3Q19 with Japan as the fastest at 3.3% year over year and APeJ flat at 0.2% year over year. Europe, the Middle East and Africa (EMEA) declined 9.6% year over year while Canada declined 4.7% and Latin America contracted 14.2%. The United States was down 10.7% year over year. China saw its 3Q19 vendor revenues remain essentially flat with year-over-year growth of 0.7%.

Revenue generated from x86 servers decreased 6.2% in 3Q19 to $20.6 billion. Non-x86 servers declined 13.1% year over year to $1.4 billion.

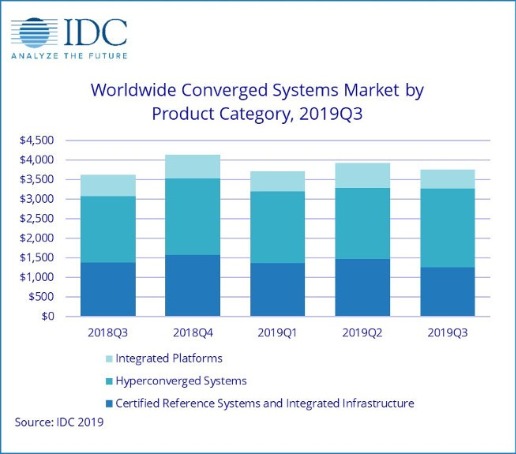

According to the International Data Corporation (IDC) Worldwide Quarterly Converged Systems Tracker, worldwide converged systems market revenue increased 3.5% year over year to $3.75 billion during the third quarter of 2019 (3Q19).

"The converged systems market continues to grow despite a challenging overall datacenter infrastructure environment," said Sebastian Lagana, research manager, Infrastructure Platforms and Technologies at IDC. "In particular, hyperconverged solutions remain in demand as vendors do an excellent job positioning the solutions as an ideal framework for hybrid, multi-cloud environments due to their software-defined nature and ease of integration into premises-agnostic environments."

Converged Systems Segments

IDC's converged systems market view offers three segments: certified reference systems & integrated infrastructure, integrated platforms, and hyperconverged systems. The certified reference systems & integrated infrastructure market generated roughly $1.26 billion in revenue during the third quarter, which represents a contraction of 8.4% year over year and 33.7% of all converged systems revenue. Integrated platforms sales declined 13.9% year over year in 3Q19, generating $475 million worth of sales. This amounted to 12.6% of the total converged systems market revenue. Revenue from hyperconverged systems sales grew 18.7% year over year during the third quarter of 2019, generating nearly $2.02 billion worth of sales. This amounted to 53.7% of the total converged systems market.

IDC offers two ways to rank technology suppliers within the hyperconverged systems market: by the brand of the hyperconverged solution or by the owner of the software providing the core hyperconverged capabilities. Rankings based on a branded view of the market can be found in the first table of this press release and rankings based on the owner of the hyperconverged software can be found in the second table within this press release. Both tables include all the same software and hardware, summing to the same market size.

As it relates to the branded view of the hyperconverged systems market, Dell Technologies was the largest supplier with $708.4 million in revenue and a 35.1% share. Nutanix generated $262.2 million in branded hardware revenue, representing 13.0% of the total HCI market during the quarter. There was a 3-way tie* for third between Cisco, Hewlett Packard Enterprise, and Lenovo, which generated $109.0 million, $91.9 million, and $91.5 million in revenue respectively, representing 5.4%, 4.6%, and 4.5% of the market.

Top 3 Companies, Worldwide Hyperconverged Systems as Branded, Q3 2019 (revenue in $M)

| Company | 3Q19 Revenue | 3Q19 Market Share | 3Q18 Revenue | 3Q18 Market Share | 3Q19/3Q18 Revenue Growth |
| --- | --- | --- | --- | --- | --- |
| 1. Dell Technologies | $708.4 | 35.1% | $496.8 | 29.3% | 42.6% |
| 2. Nutanix | $262.2 | 13.0% | $281.2 | 16.6% | -6.8% |
| T3. Cisco* | $109.0 | 5.4% | $78.1 | 4.6% | 39.5% |
| T3. Hewlett Packard Enterprise* | $91.9 | 4.6% | $80.3 | 4.7% | 14.4% |
| T3. Lenovo* | $91.5 | 4.5% | $58.8 | 3.5% | 55.6% |
| Rest of Market | $753.0 | 37.4% | $703.0 | 41.4% | 7.1% |
| Total | $2,016.1 | 100.0% | $1,698.3 | 100.0% | 18.7% |

Source: IDC Worldwide Quarterly Converged Systems Tracker, December 12, 2019
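For readers who want to check the table's arithmetic, the share and growth columns can be reproduced from the revenue columns. The short Python sketch below is an illustration only: the function and variable names are our own, the figures are copied from the table, and the published totals are used as given because the rounded rows do not sum to them exactly.

```python
# Revenue in $M, copied from the branded-view table: (3Q19, 3Q18).
REVENUE = {
    "Dell Technologies": (708.4, 496.8),
    "Nutanix": (262.2, 281.2),
    "Cisco": (109.0, 78.1),
    "Hewlett Packard Enterprise": (91.9, 80.3),
    "Lenovo": (91.5, 58.8),
}

# Published market totals (the rounded rows differ from these by about 0.1).
TOTAL_3Q19 = 2016.1
TOTAL_3Q18 = 1698.3


def yoy_growth(current: float, previous: float) -> float:
    """Year-over-year growth as a percentage."""
    return (current / previous - 1) * 100


def market_share(revenue: float, total: float) -> float:
    """Share of the total market as a percentage."""
    return revenue / total * 100


if __name__ == "__main__":
    for company, (q3_19, q3_18) in REVENUE.items():
        share = market_share(q3_19, TOTAL_3Q19)
        growth = yoy_growth(q3_19, q3_18)
        print(f"{company}: share {share:.1f}%, growth {growth:.1f}%")
    print(f"Total market growth: {yoy_growth(TOTAL_3Q19, TOTAL_3Q18):.1f}%")
```

Because the inputs are already rounded to one decimal place, a recomputed figure can differ from the published one by a tenth of a percentage point, as it does for the Rest of Market share.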

The EMEA purpose-built backup appliance (PBBA) market rose in value 2.7% year-on-year to reach $325.5 million in the third quarter of 2019, according to International Data Corporation's (IDC) Worldwide Quarterly Purpose-Built Backup Appliance Tracker. This follows the 6.3% YoY decline seen in the second quarter of 2019, bringing the market back to growth.

Total EMEA PBBA open systems shipments were valued at $307.5 million, which represented an increase of 5.7% year-on-year. Conversely, mainframe system sales decreased 31% year-on-year in 3Q19.

Regional Highlights

Western Europe

The PBBA tracker for Western Europe indicates a nearly flat performance of this region in terms of value, with 0.9% year-on-year growth, reaching $262.9 million in the third quarter of 2019.

The DACH market became the largest in Western Europe in 3Q19, accounting for 36.2% of the market's value after growing 33.3% year-on-year.

The United Kingdom ranked second in the Western European PBBA market, having lost 6.3% of market value and suffered a contraction of 19% year-on-year in value.

The French PBBA market ranked third, with 39.3% growth year-on-year in value giving it a 14.2% market share.

"The United Kingdom and Germany are the main drivers in the development of data protection technology in Europe," said Jimena Sisa, senior research analyst, EMEA Storage Systems, IDC. "Organizations are becoming increasingly disposed to update their legacy or third-platform technologies with tools that provide more functionality in terms of automation, better monitoring deployment, data management, analytics and orchestration. This is creating more desire to engage in cloud-based data protection-related projects that would help companies to grow their business in a digital transformation era."

CEMA

The PBBA market in Central and Eastern Europe, Middle East and Africa (CEMA) again recorded growth in value (12.2% YoY) in 3Q19, reaching $58.38 million.

The Middle East and Africa (MEA) subregion was what prevented the EMEA backup appliances market from recording a decline. The major vendors in the open systems space recorded significant growth. The Central and Eastern European (CEE) region had a more subdued performance, but most companies nevertheless closed a successful quarter.

"In countries like Saudi Arabia, Egypt, and Israel, there was a demand for larger-drive systems with increased data reduction, backup, and restore rates," said Marina Kostova, research manager, EMEA storage systems, IDC. "In CEE, the large countries of Poland and Russia saw increased shipments for both incumbents and data protection companies, while smaller countries experienced an overall slowdown in infrastructure spending, affecting PBBA as well."

The importance of proactive performance monitoring and analysis in an increasingly complex IT landscape. Digitalisation World launches new one-day conference.

The IT infrastructure of a typical organisation has become much more critical and much more complex in the digital world. Flexibility, agility, scalability and speed are the watchwords of the digital business. To meet these requirements, it’s highly likely that a company must use a multi-IT environment, leveraging a mixture of on-premise, colocation, managed services and Cloud infrastructure.

However, with this exciting new world of digital possibilities comes a whole new level of complexity, which needs to be properly managed. If an application is underperforming, just how easily can the underlying infrastructure problem be identified and resolved? Is the problem in-house or with one of the third party infrastructure or service providers? Is the problem to do with the storage? Or, maybe, the network? Does the application need to be moved?

Right now, obtaining the answer to these and many other performance-related questions relies on a host of monitoring tools. Many of these can highlight performance issues, but not all of them can isolate the cause(s), and few, if any, of them can provide fast, reliable and consistent application performance problem resolution – let alone predict future problems and/or recommend infrastructure improvements designed to enhance application performance.

Application performance monitoring, network performance monitoring and infrastructure performance monitoring tools all have a role to play when it comes to application performance optimisation. But what if there was a single tool that integrated and enhanced these monitoring solutions and, what’s more, provided an enhanced, AI-driven analytics capability?

Step forward AIOps. A relatively new IT discipline, AIOps provides automated, proactive (application) performance monitoring and analysis to help optimise the increasingly complex IT infrastructure landscape. The four major benefits of AIOps are:

1) Faster time to infrastructure fault resolution – great news for the service desk

2) Connecting performance insights to business outcomes – great news for the business

3) Faster and more accurate decision-making for the IT team – great news for the IT department

4) Helping to break down the IT silos into one integrated, business-enabling technology department – good news for everyone!

AIOps is still in its infancy, but its potential has been recognised by many of the major IT vendors and service and cloud providers and, equally important, by an increasing number of end users who recognise that automation, integration and optimisation are vital pillars of application performance.

Set against this background, Angel Business Communications, the Digitalisation World publisher, is running a one-day event entitled: AIOps – enabling application optimisation. The event will be dedicated to AIOps as an essential foundation for application optimisation, recognising the importance of proactive, predictive performance monitoring and analysis in an increasingly complex IT landscape.

Presentations will focus on:

Companies participating in the event to date include: Bloor Research, Dynatrace, Masergy, SynaTek, Virtana, Zenoss.

To find out more about this new event, whether as a potential sponsor or attendee, visit the AIOPS Solutions website: https://aiopssolutions.com/

Or contact Jackie Cannon, Event Director:

Email: jackie.cannon@angelbc.com

Tel: +44 (0)1923 690 205

When it comes to 5G, we’re just getting started. Entire industries are simultaneously planning for a new era of connectivity, bracing themselves for what is set to be one of the most influential roll-outs in technological history. Enabling higher speeds, lower latencies, and more machine-to-machine connections, the arrival of fifth generation networking is already sparking a revolution amongst data centres. And there’s more to come.

By Eric Law, Vice President Enterprise Sales Europe at CommScope.

In the next year alone, Gartner has forecast worldwide 5G network infrastructure revenue will reach a staggering $4.2 billion. That’s almost double its current value. The analyst house has claimed that, despite still being in the early days, ‘vendors, regulators and standards bodies’ alike all have preparations in place.

Meanwhile, the UK government is pushing back against planning authorities that are attempting to halt the installation of 5G masts, with a spokesperson for the Department for Culture, Media and Sport (DCMS) expressing the government’s commitment to 5G, highlighting the economic benefits of the roll-out.

Even as the first 5G services are starting to go live, however, there are still many questions that remain unanswered. To ask how fifth generation networks will affect life inside the data centre, therefore, is similar to asking how a city would stand up to a natural disaster. It depends on the city and the storm.

Moving to the edge

We now know enough about 5G to know that it will without doubt change how data centres are designed and, in some cases, will even alter the role that they play in the larger network. From a technical standpoint, 5G will have several defining characteristics.

The most obvious of these is the use of the 5G New Radio (NR) air interface. By exploiting new spectrum and delivering latency of just milliseconds, this enhanced performance will drive the deployment of billions of edge-based connected devices. It will also create the need for flexible, user-centric networks, pushing compute and storage resources closer to both users and devices.

The only way to meet these ultra-reliable, low latency requirements will be to deploy edge nodes as mesh networks, with an east-to-west flow and parallel data paths. In some cases, these nodes may be big enough to classify as pod-type data centres or micro data centres in their own right, similar to those being used by both telecom and cable providers.

Preparing for disruption

Cloud-scale data centres – as well as larger enterprise facilities – may see only limited impact from this move. They already use distributed processing and have been designed to deal with increased data flow from the edge. At the other end of the scale, retail multi-tenant data centres (MTDCs) will likely incur the most disruption, as they have traditionally grown in response to rising demand for cloud-scale services.

The biggest changes, however, will be seen among service providers. As they begin to refine the relationship between core data centres and evolving centralised RAN (CRAN) hubs, adaptation will become a matter of sink or swim. Increasing virtualisation of the core networks and the radio-access networks will be key when it comes to handling the anticipated 5G data flow. This will also enable service providers to be more flexible with compute and storage capacity, easily moving it to where it is most needed.

More broadly across service provider networks, increasing virtualisation may have a more direct effect in the core data centre, with wireless and wireline networks becoming more converged. This will generate an even stronger business case for a single physical layer infrastructure; how strong depends on the degree of convergence that occurs between the core network and the RAN. Whether this occurs in a central office or in a data centre, we still don’t know.

The role of new tech

Aside from considering cloud models, we also need to focus on the impact that new technologies such as artificial intelligence (AI) and machine learning (ML) will have on data centres when 5G makes its mark. These technologies will require not only faster servers, but also higher network capacity to support a greater volume of growing edge services. Building these data models means processing vast data pools, a task that in most cases is best suited to the core data centre.

Most of the data that goes on to develop AI models will come from the edge. This nods to a potential shift in how more established, cloud-scale data centres will support the network. One potential use-case involves using the power of the core data centre to assemble data from the edge to develop the AI models. These would then be pushed out to deliver localised, low-latency services. The process would then be repeated, in turn creating a feedback loop that revolutionises the operating model.

A balancing act

As with any other aspect of digital transformation, the level of change required within various data centre environments will depend on the individual application. Some of the data streams generated by the billions of sensors and devices may well produce a steady flow of data, whereas others might be delivered intermittently or in irregular bursts. Either way, it’s very much out of our control. What needs to be optimised is how the data is then collected, processed and analysed, considering factors such as how much data should remain local to the edge device and how much should be processed in the core data centre.

Once these questions are answered, network engineers need to determine the best way to move the data through the network. Different latency and reliability requirements demand the ability to prioritise data traffic at a very granular level. What can be off-loaded onto the internet via local Wi-Fi, and what has to be backhauled to the cloud service provider (CSP) data centre? And remember, edge networks must fit into a financial model that makes them profitable.
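To make the idea of granular prioritisation concrete, here is a minimal Python sketch of an edge node that serves the most latency-sensitive traffic first and then decides between local offload and backhaul. It is an illustration under our own assumptions, not a description of any vendor's scheduler: the class names, flow names, latency figures and the `needs_core` flag are all hypothetical.

```python
import heapq
from dataclasses import dataclass, field
from itertools import count


@dataclass(order=True)
class Flow:
    """A traffic flow, ordered by its latency budget (tightest first)."""
    max_latency_ms: float
    sequence: int  # tie-breaker so equal budgets keep arrival order
    name: str = field(compare=False)
    needs_core: bool = field(compare=False, default=False)


class EdgeScheduler:
    """Hypothetical edge node that serves the tightest latency budget first."""

    def __init__(self) -> None:
        self._queue: list[Flow] = []
        self._arrivals = count()

    def submit(self, name: str, max_latency_ms: float, needs_core: bool = False) -> None:
        flow = Flow(max_latency_ms, next(self._arrivals), name, needs_core)
        heapq.heappush(self._queue, flow)

    def drain(self):
        """Yield (flow name, route) pairs in priority order."""
        while self._queue:
            flow = heapq.heappop(self._queue)
            route = "backhaul to CSP data centre" if flow.needs_core else "local Wi-Fi offload"
            yield flow.name, route


if __name__ == "__main__":
    scheduler = EdgeScheduler()
    # Illustrative flows only; the latency budgets are invented for the example.
    scheduler.submit("video analytics batch", 500, needs_core=True)
    scheduler.submit("vehicle braking alert", 5)
    scheduler.submit("sensor telemetry", 100)
    for name, route in scheduler.drain():
        print(f"{name}: {route}")
```

A real network would prioritise on far more than a single latency figure, with reliability targets, cost and current link load all playing a part, but the same pattern of classifying each flow and then choosing a path per class still applies.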

Infrastructure as an enabler

The widespread roll-out of 5G is still a few years away, but there is no better time to start preparing for what’s to come. At the very core of this evolution, infrastructure must adapt to support higher wireless bandwidth and more universal data usage. Indoors, organisations and building owners are considering more than just Wi-Fi, turning to distributed antenna systems (DAS) to enable robust and dependable in-building mobile wireless. Outdoors, it’s a different story. Service providers are upgrading and expanding their fibre networks to carry wireless data back to the core of the network or, in many cases, to edge data centres.

What we really need to think about are the applications and innovations that these changes will enable in the broader 5G era. Self-driving cars, facial recognition, smart cities and industrial automation will all be made possible, or more advanced, by 5G. The problem remains, however, that each of these applications has a different set of requirements regarding reliability, latency, and the type and volume of data traffic generated. Unless you understand the parameters of the situation, therefore, it’s difficult to pinpoint its exact impact on the data centre.

Something we do know, however, is that the avalanche of new data arriving from the network edge thanks to 5G goes hand in hand with a need for high compute and storage power. Exactly how much power? You guessed it: that depends!