‘Tis the season of predictions and forecasts – reasons aplenty to be jolly as seemingly everyone has a go at crystal ball gazing for 2019 and beyond. In fairness, I did invite vendors of all shapes and sizes to send through their thoughts to me for this issue of Digitalisation World, as my original choice of subject – the edge – was met with a surprising, almost total silence. Everyone seems to be talking about edge but, apparently, no one wants to commit these words to computer screen. As for the predictions and forecasts, well, I’ve received so many that it looks as if we’re going to have a Part 1 (in this issue), a Part 2 in the January issue and even a Part 3 in the February issue, if that’s not too late!

Of course, it would be quite wrong of me to spoil the excitement by revealing what everyone is talking about in terms of likely trends and technology developments for the coming year, but let’s just say that there are no real surprises as to the favourite topics: security, Cloud, AI and plenty of other intelligent automation suggestions dominate. However, it must be said that the sheer breadth and depth of the responses to my request for information is mighty impressive and I’d be surprised if readers don’t learn a thing or two, or at least are given pause for a thought or two.

As for myself, I’m always learning new things about data centres and the wider IT environment. Most recently, I had cause to question my (and everyone else’s?) unswerving belief in the edge as a major ongoing trend. Let me explain.

I do not mean that the edge will not be important, but the definition of the edge, indeed the very word ‘edge’ seems to me a tad misleading. When most people talk about edge they do so in terms of either moving IT infrastructure closer to where data is generated or closer to where data is consumed. Now, this can be in remote, edge locations but, equally, this can be in cities and towns, which have a high concentration of, say, retail businesses, all of whom want to use real-time applications to interact with their customers, and hence need a local data centre and not one some distance away. So, rather than edge data centres, we should be talking about local data centres, but that’s not much use for the marketing folks – then again somebody came up with the Internet of Things – does Local Infrastructure Deployment (LID) sound any worse?!

So, that’s my, not entirely original, thought for you ahead of the festive period – with the not very original slogan: Keep a LID on IT!

Merry Christmas and a Happy New Year to you all.

Worldwide spending on the technologies and services that enable the digital transformation (DX) of business practices, products, and organizations is forecast to reach $1.97 trillion in 2022, according to a new update to the International Data Corporation (IDC) Worldwide Semiannual Digital Transformation Spending Guide. DX spending is expected to steadily expand throughout the 2017-2022 forecast period, achieving a five-year compound annual growth rate of 16.7%.

"IDC predicts that, by 2020, 30% of G2000 companies will have allocated capital budget equal to at least 10% of revenue to fuel their digital strategies," said Shawn Fitzgerald, research director, Worldwide Digital Transformation Strategies. "This shift toward capital funding is an important one as business executives come to recognize digital transformation as a long-term investment. This commitment to funding DX will continue to drive spending well into the next decade."

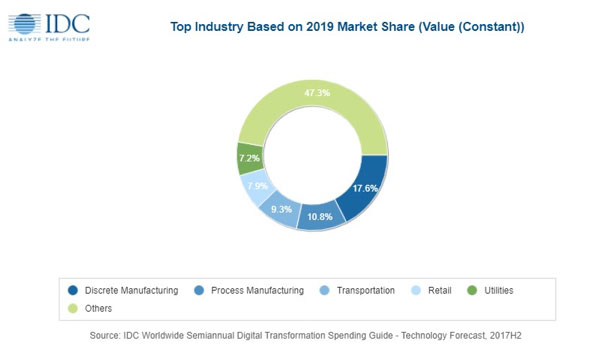

Four industries will be responsible for nearly half of the $1.25 trillion in worldwide DX spending in 2019: discrete manufacturing ($220 billion), process manufacturing ($135 billion), transportation ($116 billion), and retail ($98 billion). For the discrete and process manufacturing industries, the top DX spending priority is smart manufacturing. IDC expects the two industries to invest more than $167 billion in smart manufacturing next year along with significant investments in digital innovation ($46 billion) and digital supply chain optimization ($29 billion). In the transportation industry, the leading strategic priority is digital supply chain optimization, which translates to nearly $65 billion in spending for freight management and intelligent scheduling. Meanwhile, the top priority for the retail industry is omni-channel commerce, which will drive investments of more than $27 billion in omni-channel commerce platforms, augmented virtual experience, in-store contextualized marketing, and next-generation payments.

The DX use cases – discretely funded efforts that support a program objective – that will see the largest investment across all industries in 2019 will be freight management ($60 billion), autonomic operations ($54 billion), robotic manufacturing ($46 billion), and intelligent and predictive grid management for electricity, gas, and water ($45 billion). Other use cases that will see investments in excess of $20 billion in 2019 include root cause, self-healing assets and automated maintenance, and quality and compliance.

"Industry spending on DX technologies is being driven by core innovation accelerator technologies with IoT and cognitive computing leading the race in terms of overall spend," said Eileen Smith, program director with IDC's Customer Insights and Analysis Group. "The introduction of IoT sensors and communications capabilities is rapidly transforming manufacturing processes as well as asset and inventory management across a wide range of industries. Similarly, artificial intelligence and machine learning are dramatically changing the way businesses interact with data and enabling fundamental changes in business processes."

"The unprecedented speed at which technologies are coming to market supporting DX strategies can only be described as frantic," said Craig Simpson, research manager with IDC's Customer Insights and Analysis Group. "Areas regarded as pilot projects just a year ago have already become mature operations in some industries."

From a technology perspective, hardware and services spending will account for more than 75% of all DX spending in 2019. Services spending will be led by IT services ($152 billion) and connectivity services ($147 billion) while business services will experience the fastest growth (29.0% CAGR) over the five-year forecast period. Hardware spending will be spread across a number of categories, including enterprise hardware, personal devices, and IaaS infrastructure. DX-related software spending will total $288 billion in 2019 and will be the fastest growing technology category with a CAGR of 18.8%.

The United States and China will be the two largest geographic markets for DX spending, delivering more than half the worldwide total in 2019. In the U.S., the leading industries will be discrete manufacturing ($63 billion), transportation ($40 billion), and professional services ($37 billion) with DX spending focused on IT services, applications, and connectivity services. In China, the industries spending the most on DX will be discrete manufacturing ($60 billion), process manufacturing ($35 billion), and utilities ($27 billion). Connectivity services and enterprise hardware will be the largest technology categories in China.

Global spending on robotic process automation (RPA) software is estimated to reach $680 million in 2018, an increase of 57 percent year over year, according to the latest research from Gartner, Inc. RPA software spending is on pace to total $2.4 billion in 2022.

“End-user organizations adopt RPA technology as a quick and easy fix to automate manual tasks,” said Cathy Tornbohm, vice president at Gartner. “Some employees will continue to execute mundane tasks that require them to cut, paste and change data manually. But when RPA tools perform those activities, the error-margin shrinks and data quality increases.”

The biggest adopters of RPA include banks, insurance companies, utilities and telecommunications companies. “Typically, these organizations struggle to knit together the different elements of their accounting and HR systems, and are turning to RPA solutions to automate an existing manual task or process, or automate the functionality of legacy systems,” said Ms. Tornbohm.

RPA tools mimic the “manual” path a human worker would take to complete a task, using a combination of user interface interaction describer technologies. The market provides a broad range of solutions with tools either operating on individual desktops or enterprise servers.

Gartner estimates that 60 percent of organizations with a revenue of more than $1 billion will have deployed RPA tools by the end of the year. By the end of 2022, 85 percent of large and very large organizations will have deployed some form of RPA. “The growth in adoption will be driven by average RPA prices decreasing by approximately 10 percent to 15 percent by 2019, but also because organizations expect to achieve better business outcomes with the technology, such as reduced costs, increased accuracy and improved compliance,” added Ms. Tornbohm.

However, RPA is not a one-size-fits-all technology and there are cases where alternative automation solutions achieve better results. RPA solutions perform best when an organization needs structured data to automate existing tasks or processes, add automated functionality to legacy systems and link to external systems that can’t be connected through other IT options.

RPA Is on Its Way to Mainstream

RPA tools currently reside at the Peak of Inflated Expectations in the Gartner Hype Cycle for Artificial Intelligence, 2018, as organizations look for ways to cut costs, link legacy applications and achieve a high ROI. However, the potential to achieve a strong ROI fully depends on whether RPA fits the individual organization’s needs. “In the near-term future, we expect to see an expanding set of RPA vendors as well as a growing interest from software vendors, which include software testing vendors and business process management vendors that are looking to gain revenue from this set of functionality,” said Ms. Tornbohm.

In addition, another market movement is emerging — the integration of artificial intelligence (AI) functionalities into the product suite. This is happening because RPA providers add or integrate machine learning and AI technology to deliver more types of automation.

Evaluate First Before Any RPA Deployment Project

In order to make an RPA project a success, leaders must first evaluate the possible use cases for RPA in their organization and also focus on revenue-generating activities. “Do not just focus on RPA to reduce labor costs,” Ms. Tornbohm said. “Set clear expectations of what the tools can do and how your organization can use them to support digital transformation as part of an automation strategy.”

The next step is to identify quick wins for RPA. These can be tasks that require people to solely move data between systems or involve structured, digitalized data processed by predefined rules. While those are the use cases where RPA delivers a high ROI, it is important to consider alternative existing tools and services, which already provide a significant proportion of the required functionalities at a suitable price point. Those alternatives can be used in parallel with RPA, or as a hybrid solution. When choosing a vendor, also ask for future AI-based options.

IT spending in EMEA is projected to total $973 billion in 2019, an increase of 2 percent from the estimated spending of $954 billion in 2018, according to the latest forecast by Gartner, Inc.

“2018 is not a good year for IT spending in EMEA,” said John Lovelock, research vice president at Gartner. “The 5.8 percent growth witnessed in 2018 includes a 4 percent currency tailwind driven by the Euro’s increase in value against the U.S. Dollar.”

“IT spending in EMEA has been stuck and will remain stuck until the unknowns surrounding Brexit are resolved,” Mr. Lovelock added. Until then, the slow growth of the overall EMEA market is masking the divergent growth rates across the segments in the region (see Table 1).

Table 1. EMEA IT Spending Forecast (Millions of U.S. Dollars)

| Segment | 2018 Spending | 2018 Growth (%) | 2019 Spending | 2019 Growth (%) |
| --- | --- | --- | --- | --- |
| Data Center Systems | 46,330 | 5.4 | 46,545 | 0.5 |
| Enterprise Software | 108,832 | 12.7 | 116,751 | 7.3 |
| Devices | 155,685 | 2.1 | 153,909 | -1.1 |
| IT Services | 286,717 | 8.9 | 299,414 | 4.4 |
| Communications Services | 356,028 | 3.2 | 356,408 | 0.1 |
| Overall IT | 953,592 | 5.8 | 973,023 | 2.0 |

Source: Gartner (November 2018)
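For readers who want to check the arithmetic, the 2019 growth column in Table 1 can be reproduced from the two spending columns. The short Python sketch below is only an illustration: the figures are copied from the table, while the names `spending`, `growth_pct` and `growth_2019` are made up for this example and are not part of any Gartner tooling.

```python
# Spending figures copied from Table 1 (millions of U.S. dollars).
# Each entry is (2018 spending, 2019 spending).
spending = {
    "Data Center Systems": (46_330, 46_545),
    "Enterprise Software": (108_832, 116_751),
    "Devices": (155_685, 153_909),
    "IT Services": (286_717, 299_414),
    "Communications Services": (356_028, 356_408),
    "Overall IT": (953_592, 973_023),
}


def growth_pct(previous: float, current: float) -> float:
    """Year-over-year change expressed as a percentage of the earlier year."""
    return (current - previous) / previous * 100


# Round to one decimal place, matching the precision used in the table.
growth_2019 = {
    segment: round(growth_pct(y2018, y2019), 1)
    for segment, (y2018, y2019) in spending.items()
}

for segment, pct in growth_2019.items():
    print(f"{segment}: {pct:+.1f}%")
```

Run as written, the computed percentages agree with the published 2019 growth column at one decimal place.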

Spending on devices (PCs, tablets and mobile phones) in EMEA is set to decline in 2019. Consumer PC spending declined 9.1 percent in 2018 and demand for business Windows 10 PCs will pass its peak in 2019, with business PCs’ unit growth at 1 percent. Similarly, mobile phone unit growth, especially in Western Europe, will fall from 4.7 percent in 2018 to -1.1 percent in 2019 as replacement cycles peak and then fall in 2019.

After achieving growth in 2018, spending on data center systems is set to be flat or decline in 2019 and beyond. The brief uptick in spending caused by a bump in upgrade spending and early replacements as a precaution against CPU security issues has abated.

The largest single market — communications services — has become commoditized and is set to show flat growth in 2019. The enterprise software market continues to have a positive effect on the overall spending growth in EMEA. This is largely due to the increasing availability and acceptance of cloud software.

In 2019, Gartner expects cloud, security and the move to digital business to bolster growth in EMEA. End-user spending on public cloud services in EMEA will grow 15 percent in 2019 to total $38.5 billion. In terms of security, with the GDPR in place, penalties for data violations could be as high as 4 percent of revenue.

“The enforcement of GDPR has moved security to a board-level priority. Organizations that are not protecting their customers’ privacy are not protecting their brand,” said Mr. Lovelock. “Global spending on IT security will surpass $133 billion in 2019 and in EMEA it will reach $40 billion in 2019, up 7.8 percent from 2018.”

Brexit Is Slowing Down IT Spending Growth in EMEA

With expected growth of 2 percent in 2019, EMEA ranks as the third slowest growing region for IT spending, ahead of Eurasia (+0.5 percent) and Latin America (+1.7 percent). Brexit is having a dampening effect on IT spending across the region. IT spending in the U.K. is set to total $204 billion in 2019, a 1.9 percent increase from 2018. “The U.K. is not expected to exhibit growth above 2 percent until 2020, which is having a downward effect on the EMEA IT spending average throughout the forecast period,” said Mr. Lovelock.

Israel and Saudi Arabia are leading the overall IT spending growth rate in EMEA in 2019, with each country set to achieve a 5.3 and 4.2 percent increase in IT spending, respectively. Both countries are investing in building a robust IT sector and making the journey to digital business. Israel’s growth is fueled by software spending and the increased use of software as a service. Saudi Arabia’s growth is driven by spend on IT services, including cloud computing and storage.

Secureworks has released the findings of its State of Cybercrime Report 2018 to illuminate the cybercrime trends and events that shaped the year.

From July 2017 through June 2018, Secureworks Counter Threat Unit® (CTU®) researchers analysed incident response outcomes and conducted original research to gain insight into threat activity and behaviour across 4,400 companies.

Among their findings was evidence that a small subset of professional criminal actors is responsible for the bulk of cybercrime-related damage, employing tools and techniques as sophisticated, targeted and insidious as most nation-state actors. These sophisticated and capable criminal gangs operate largely outside of the dark web, although they may leverage low-level criminal tools occasionally when it serves their purposes.

At the same time, there has been no lull in the overall volume of threats, and low-level cybercriminal activity remains a robust market economy, often taking place in view of security researchers and law enforcement on the dark web. While relatively simple in their approach, these activities can still deal widespread damage.

“Cybercrime is a lucrative industry, and it’s not surprising it’s become the arm of powerful, organised groups,” says Don Smith, Senior Director, Cyber Intelligence Cell, Secureworks Counter Threat Unit. “To understand the complete picture of the cybercriminal world, we developed insights based on a combination of dark web monitoring and client brand surveillance with automated technical tracking of cybercriminal toolsets.”

Key Findings

Among the CTU researchers’ key findings were the following:

The boundary between nation-state and cybercriminal actors continues to blur.

Ransomware continues to be a serious threat.

Sophisticated criminal gangs are earning millions of dollars of revenue through stolen payment card data.

The dark web is not the darkest depth of the cybercriminal world.

“The observations of CTU researchers over the last 12 months show that the threat from cybercrime is adaptive and constantly evolving,” the report concludes. “To stay ahead of it, it is imperative that organisations develop a holistic understanding of the landscape and how it relates to them, and tailor their security controls to address both opportunistic and more highly targeted cybercriminal threats.”

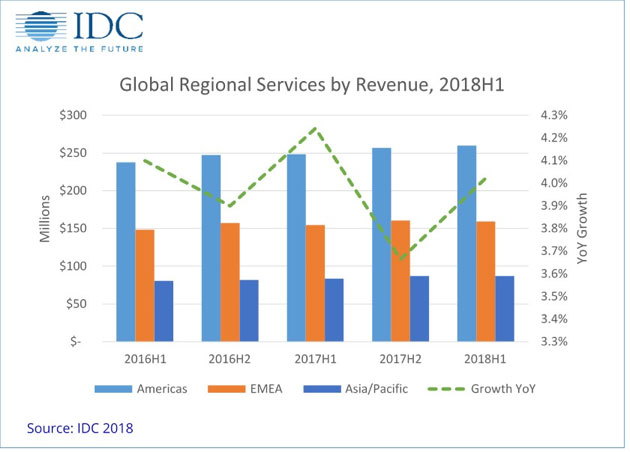

Worldwide revenues for IT Services and Business Services totaled $506 billion in the first half of 2018 (1H18), an increase of 4% year over year (in constant currency), according to the International Data Corporation (IDC) Worldwide Semiannual Services Tracker.

This growth reflects a relatively sanguine economic outlook during the first half of this year with accelerated digital transformation and, in some pockets, new digital services offsetting the cannibalization of traditional services.

During 1H18, it was a mixed picture for tier-one global outsourcers/integrators (companies with full service offerings and more than $10 billion in services revenue) headquartered in developed countries: most remained flat or declined slightly (organic growth in constant currency). But this was partially offset by stronger performances by two large global vendors, who returned to double-digit growth in the teens.

While most Indian services providers still outpace their U.S. and European counterparts, their growth (organic, in constant currency) scaled back slightly from a year ago, continuing their 2H17 deceleration. Growth paths continued to widen between vendors: while most large Indian vendors continued to grow at rates in the low single digits to high teens, it was offset by a few vendors' sharp slowdown. This is partially attributed to restructuring leadership teams and divesting business units to improve margins. It should also be noted that foreign exchange fluctuations in 2018 have complicated the constant currency calculations somewhat.

Looking at the different services markets, project-oriented revenues grew by 5.2% in 1H18 to $191 billion, followed by 3.6% growth for managed services and 2.7% for support services. The above-the-market growth in project-oriented markets was mostly led by business consulting and application development markets with growth rates of 7.5% and 6.5%, respectively. Most major management consulting firms still posted strong earnings in 2018, although growth rates cooled slightly: business consultants still extract more value in digital transformation. But the market movement belies enterprise buyers going from "thinking digital" to "doing digital." For example, the heavy lifting of digital is ultimately reflected in application projects, and the application development market showed faster growth in 2018 than both 1H17 and 2H17. As services vendors are making agile and cloud the central themes in their app businesses, it has helped them to shorten sales cycles and ramp up new app work.

In outsourcing, revenues grew 3.6% to $238 billion in 1H18. Application-related managed services revenues (hosted and on-premise application management) outpaced infrastructure and business process outsourcing. Organizations rely largely on outsourcers to supply new app skills at scale. Large outsourcing contracts also served as the best vehicle to standardize and modernize existing application assets. Therefore, IDC expects application-related managed services markets to continue outgrowing other outsourcing markets in the coming years.

On the infrastructure side, while hosting infrastructure services revenue accelerated to 7.2% growth in 1H18, mostly due to cloud adoption, IT Outsourcing (ITO) – still almost twice as large a market and mostly big buyers and vendors – declined by 1.5%, largely chipped away by cloud cannibalization across all regions.

On a geographic basis, the United States, the largest services market, grew by 4.3%, slightly higher than the market rate, while Western Europe, the second largest market, grew only by 2.6%. In the United States, overall economic conditions and corporate spending remained robust. The effect of the trade war will also not be felt until the end of this year or in 2019; therefore, it had no negative impact on services spending in 1H18. In Western Europe, most major vendors are showing some softness in the region: US/European headquartered multinationals' recovery in Western Europe weakened in 2018. The newcomers' (namely Indian services providers) expansion into Europe also cooled slightly, with vendors posting mixed results in the region depending on their customer industry mix there. IDC expects Western European services revenues to be stable but structurally weaker than North America. IDC forecasts the region to grow below 3% annually in the coming years.

In emerging markets, Latin America, Asia/Pacific (excluding Japan) (APeJ), and Central & Eastern Europe led in growth. In Latin America, most major economies are turning the corner despite problems in Argentina and Venezuela. While big political/policy risks still exist, namely newly elected presidents in Mexico and Brazil, realistically, their effects will not be felt until 2019. Additionally, only a handful of regulatory issues will affect services outsourcers directly (i.e. tax and government procurement). On the other hand, Mexico, Canada, and the U.S. have resolved their NAFTA issues and Brazil has wrapped up its scandals. Therefore, IDC is expecting healthier IT spending in the region, especially with a strong deal pipeline in the public sector.

In APeJ, Australia, the region's second largest IT services market, saw its growth scaled back slightly to 3.8% in 1H18, from 4.3% in 1H17. The largest market, China, trimmed its growth rate to just 7.2%, down from the 8% to 9% during the last two or three years, due to slower GDP growth, curbing debts, and pulling back on infrastructure spending, among other factors. Given its ongoing trade war with the U.S. and currency depreciation, we expect China's market growth will continue to flag, although gradually, in the coming years, which inevitably will have a spillover effect on Australia.

So far in 2018, the weaker growth in China and Australia was partially offset by faster growth from other emerging markets in APeJ (i.e. India, the Philippines, Indonesia, Vietnam, etc.). We expect this trend to continue: governments will continue to fund large digital initiatives and a better investment outlook will also drive IT spending (geopolitical and economic tension between China and the U.S. and its allies may help other emerging markets in the region attract foreign investments).

Global Regional Services 1H18 Revenue and Year-Over-Year Growth (revenues in $US billions)

| Global Region | 1H18 Revenue | 1H18/1H17 Growth |
| --- | --- | --- |
| Americas | $259.7 | 4.6% |
| Asia/Pacific | $87.1 | 4.2% |
| EMEA | $159.3 | 3.1% |
| Total | $506.1 | 4.0% |

Source: IDC Worldwide Semiannual Services Tracker 1H 2018

"Steady growth in the services market is being driven by continued demand for digital solutions across the regions," said Lisa Nagamine, research manager with IDC's Worldwide Semiannual Services Tracker. "But during 2018, as well as most of 2017, it is really the Americas and cloud-related services that are having the largest impact on revenue worldwide."

"For IT services, 2018 has so far been more stabilizing than it seems," said Xiao-Fei Zhang, program director, Global Services Markets and Trends. "Corporate America has been able to shake off geopolitical risks and trade tensions and continue to invest in new tools to reduce cost and add new capabilities."

DigiCert’s 2018 State of IoT survey reveals security as the top concern as IoT takes centre stage with 91 percent of companies saying it will be extremely important in the next two years.

A study from DigiCert reveals that enterprises have begun sustaining significant monetary losses stemming from the lack of good practices as they move forward with incorporating the Internet of Things (IoT) into their business models. In fact, among companies surveyed that are struggling the most with IoT security, 25 percent reported IoT security-related losses of nearly £257,333 in the last two years.

These findings come amid a ramping up of IoT focus within the typical organisation. Seventy-one percent of respondents indicated that IoT is extremely important to them currently, while 91 percent said they anticipate IoT to be extremely important to their respective organisations within two years.

The survey was conducted by ReRez Research in September 2018, with 700 enterprise organisations in the US, UK, Germany, France and Japan from across critical infrastructure industries.

Top concerns

Security and privacy topped the list of concerns for IoT projects, with 82 percent of respondents stating they were somewhat to extremely concerned about security challenges.

“Enterprises today fully grasp the reality that the Internet of Things is upon us and will continue to revolutionise the way we live, work and recreate,” said Mike Nelson, vice president of IoT Security at DigiCert. “Securing IoT devices is still a top priority that many enterprises are struggling to manage; however, integrating security at the beginning, and all the way through IoT implementations, is vital to mitigating rising attacks, which can be expected to continue. Due diligence when it comes to authentication, encryption and integrity of IoT devices and systems can help enterprises reliably and safely embrace IoT.”

Top vs. bottom performers

To give visibility to the specific challenges enterprises are encountering with IoT implementations, respondents were asked a series of questions using a wide variance of terminology. Using standard survey methodology, respondents’ answers were then scored and divided into three tiers:

IoT security missteps

Respondents were asked about IoT-related security incidents their organisations experienced within the past two years. The difference between the top- and bottom-tiers was unmistakable. Companies struggling the most with IoT implementation are much more likely to get hit with IoT-related security incidents. Every single bottom-tier enterprise experienced an IoT-related security incident in that time span, versus just 23 percent of the top-tier. The bottom-tier was also more likely to report problems in these specific areas:

These security incidents were not trivial. Among companies surveyed that are struggling the most with IoT security, 25 percent reported IoT security-related losses of nearly £257,333 in the last two years.

The top five areas for costs incurred within the past two years were:

Meanwhile, although the top-tier enterprises experienced some security missteps, an overwhelming majority reported no costs associated with those missteps. Top-tier enterprises attributed their security successes to these practices:

“When it comes to accelerating implementations of IoT, it’s vital for companies to strike a balance between gaining efficiencies and maintaining security and privacy,” Nelson said. “This study shows that enterprises that are implementing security best practices have less exposure to the risks and resulting damages from attacks on connected devices. Meanwhile, it appears these IoT security best practices, such as authentication and identity, encryption and integrity, are on the rise and companies are beginning to realise what’s at stake.”

Recommendations

The survey points to five best practices to help companies pursuing IoT realise the same success as the top-tier performing enterprises:

1. Review risk: Perform penetration testing to assess the risk of connected devices. Evaluate the risk and build a priority list for addressing primary security concerns, such as authentication and encryption. A strong risk assessment will help ensure you do not leave any gaps in your connected security landscape.

2. Encrypt everything: As you evaluate use cases for your connected devices, make sure that all data is encrypted at rest and in transit. Make end-to-end encryption a product requirement to ensure this key security feature is implemented in all of your IoT projects.

3. Authenticate always: Review all of the connections being made to your device, including devices and users, to ensure authentication schemes only allow trusted connections to your IoT device. Using digital certificates helps to provide seamless authentication with bound identities that are tied to cryptographic protocols.

4. Instill integrity: Account for the basics of device and data integrity, including secure boot every time the device starts up, secure over-the-air updates, and the use of code signing to ensure the integrity of any code being run on the device.

5. Strategise for scale: Make sure that you have a scalable security framework and architecture ready to support your IoT deployments. Plan accordingly and work with third parties that have the scale and focus to help you reach your goals so that you can focus on your company’s core competency.
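To make the integrity recommendation a little more concrete, here is a minimal Python sketch of checking a firmware image against a tag before it is allowed to run. It is an illustration only, not DigiCert's method. Real code signing normally relies on asymmetric signatures and digital certificates, whereas this example swaps in a shared-key HMAC from the Python standard library as a simpler stand-in. The key, the firmware bytes and the function names are all hypothetical.

```python
import hashlib
import hmac

# Hypothetical shared secret, for illustration only. A real deployment would
# use asymmetric code signing with keys held in secure hardware.
SIGNING_KEY = b"example-key-not-for-production"


def sign_firmware(image: bytes, key: bytes = SIGNING_KEY) -> str:
    """Return a hex-encoded HMAC-SHA256 tag for the firmware image."""
    return hmac.new(key, image, hashlib.sha256).hexdigest()


def verify_firmware(image: bytes, tag: str, key: bytes = SIGNING_KEY) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = sign_firmware(image, key)
    return hmac.compare_digest(expected, tag)


# Hypothetical firmware payload and its tag, as produced at build time.
firmware = b"device firmware v1.2 (placeholder bytes)"
tag = sign_firmware(firmware)

# The device would refuse to boot or update if verification fails.
print("untampered image accepted:", verify_firmware(firmware, tag))
print("tampered image accepted:", verify_firmware(firmware + b"!", tag))
```

The point of the sketch is the pattern of verifying before executing. Certificate-based signing adds proof of who produced the code, which a shared key cannot provide.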

A new report from International Data Corporation (IDC) presents IDC's inaugural forecast for the worldwide 5G network infrastructure market for the period 2018–2022. It follows the release of IDC's initial forecasts for Telecom Virtual Network Functions (VNF) and Network Functions Virtualization Infrastructure (NFVI) in September and August 2018, respectively.

With the first instances of 5G services rolling out in the fourth quarter of 2018, 2019 is set to be a seminal year in the mobile industry. 5G handsets will begin to hit the market and end-users will be able to experience 5G technology firsthand.

From an infrastructure standpoint, the mobile industry continues to trial innovative solutions that leverage new spectrum, network virtualization, and machine learning and artificial intelligence (ML/AI) to create new value from existing network services. While these and other enhancements will play a critical role, 5G NR represents a key milestone in the next mobile generation, enabling faster speeds and enhanced capacity at lower cost per bit. Even as select cities begin to experience 5G NR today, the full breadth of 5G's potential will take several years to arrive, which will require additional standards work and trials, particularly related to a 5G NG core.

In addition to 5G NR and 5G NG core, procurement patterns indicate communications service providers (SPs) will need to invest in adjacent domains, including backhaul and NFVI, to support the continued push to cloud-native, software-led architectures.

Combined, IDC expects the total 5G and 5G-related network infrastructure market (5G RAN, 5G NG core, NFVI, routing and optical backhaul) to grow from approximately $528 million in 2018 to $26 billion in 2022 at a compound annual growth rate (CAGR) of 118%. IDC expects 5G RAN to be the largest market sub-segment through the forecast period, in line with prior mobile generations.
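As a rough check on the growth arithmetic, the short sketch below applies the standard compound annual growth rate formula to the two endpoints quoted above. The number of compounding periods is an assumption, since the figures quoted here do not say how IDC counted them; the function and constant names are purely illustrative. Under that assumption, five periods reproduce a rate of about 118%, while four year-on-year steps from 2018 to 2022 would imply a higher rate.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Return the compound annual growth rate as a fraction (1.18 means 118%)."""
    if start_value <= 0 or periods <= 0:
        raise ValueError("start_value and periods must be positive")
    return (end_value / start_value) ** (1.0 / periods) - 1.0


# Endpoints quoted in the text, in millions of US dollars.
START_2018 = 528.0
END_2022 = 26_000.0

# Assumption: the period count is not stated in the text, so both are shown.
four_periods = cagr(START_2018, END_2022, 4)
five_periods = cagr(START_2018, END_2022, 5)

print(f"CAGR over 4 periods: {four_periods:.0%}")
print(f"CAGR over 5 periods: {five_periods:.0%}")
```

Either way, the endpoints describe a market growing roughly 49-fold over the forecast window.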

"Early 5G adopters are laying the groundwork for long-term success by investing in 5G RAN, NFVI, optical underlays, and next-generation routers and switches. Many are also in the process of experimenting with the 5G NG core. The long-term benefit of making these investments now will be when the standards-compliant SA 5G core is combined with a fully virtualized, cloud-ready RAN in the early 2020s. This development will enable many communications SPs to expand their value proposition and offer customized services across a diverse set of enterprise verticals through the use of network slicing," says Patrick Filkins, senior research analyst, IoT and Mobile Network Infrastructure.

Businesses are still a long way from realizing the benefits of much-hyped digital transformation initiatives, according to new research.

Organizations have high expectations for digital transformation, but new research from SnapLogic has uncovered that 40% of enterprises are either behind schedule with their digital transformation projects or haven’t started them yet.

Delayed projects aren’t the only factor keeping IT decision makers (ITDMs) up at night. The new research, conducted by Vanson Bourne, also revealed that 69% have had to reevaluate their digital transformation strategy entirely, and as a result, 59% would do it differently if given another chance. In fact, only 13% of ITDMs are completely confident they are on course to achieve their digital transformation goals.

When asked why they are struggling with digital transformation, 58% admitted that there is confusion amongst the organization around what they’re trying to achieve with digital transformation – an alarming result.

Digital Transformation Snags

Internal politics (34%), a lack of centralized ownership (22%), and a lack of senior management buy-in (17%) were identified as common roadblocks to digital transformation. In addition, 55% of ITDMs noted that a reliance on legacy technologies and/or a lack of the right technologies within their organization was holding them back, while 33% were stalled by a lack of the right skilled talent, and 31% reported that data silos were causing problems.

Additionally, 20% of organizations didn’t test or pilot their digital transformation projects before deploying them company-wide, and shockingly, 21% of ITDMs continued to roll out a company-wide digital transformation despite unsuccessful pilot programs in one part of the business.

Gaurav Dhillon, CEO at SnapLogic, commented on the findings: “Despite all the noise around digital transformation in recent years, it’s clear from our research that there’s still much work to be done to help organizations be successful. While some companies may take solace in knowing they are not the only ones struggling, for those of us in the technology industry this is a stark wake up call that we must do a better job advising, partnering with, and supporting customers in their digital transformation journey if we are to ever see the reality of a digital-first economy.”

Delivering on the Promise

Make no mistake: The promise of digital transformation is huge. For those about to undertake an enterprise-wide digital transformation, ITDMs expect to see the following results upon completion, on average: increased revenue of 13%, increased market share of 13%, reduced operating costs of 14%, increased business speed and agility of 16%, improved customer satisfaction of 18%, and reduced product development time of 15%.

But businesses are never going to achieve these gains unless they’re able to overcome key hurdles and turn the corner. For those who’ve completed a digital transformation, successful or otherwise, ITDMs identified the three most critical steps to success as investing in the right technologies and tools, involving all departments in strategy development, and investing in staff training.

The promise of new technologies in particular is seen as having a significant impact on digital transformation success. Case in point: 68% consider artificial intelligence and machine learning as vital to accelerating their digital transformation projects.

Dhillon concluded: “Digital transformation doesn’t happen overnight, and there’s no silver bullet for success. To succeed with digital transformation, organizations must first take the time to get the right strategy and plans in place, appoint senior-level leadership and ensure the whole of the organization is on-board and understands their respective roles, and embrace smart technology. In particular, enterprises must identify where they can put new AI or machine learning technologies to work; if done right, this will be a powerful accelerant to their digital transformation success.”

Bitglass has released its 2018 BYOD Security Report. The analysis is based on a survey of nearly 400 enterprise IT experts who revealed the state of BYOD and mobile device security in their organisations.

According to the study, 85 percent of organisations are embracing bring your own device (BYOD). Interestingly, many organisations are even allowing contractors, partners, customers, and suppliers to access corporate data on their personal devices. Amidst this BYOD frenzy, over half of the survey’s respondents believe that the volume of threats to mobile devices has increased over the past twelve months.

“While most companies believe mobile devices are being targeted more than ever, our findings indicate that many still lack the basic tools needed to secure data in BYOD environments,” said Rich Campagna, CMO of Bitglass. “Enterprises should feel empowered to take advantage of BYOD’s myriad benefits, but must employ comprehensive, real-time security if they want to do so safely and successfully.”

Key Findings

Gartner, Inc. has highlighted the top strategic Internet of Things (IoT) technology trends that will drive digital business innovation from 2018 through 2023.

“The IoT will continue to deliver new opportunities for digital business innovation for the next decade, many of which will be enabled by new or improved technologies,” said Nick Jones, research vice president at Gartner. “CIOs who master innovative IoT trends have the opportunity to lead digital innovation in their business.”

In addition, CIOs should ensure they have the necessary skills and partners to support key emerging IoT trends and technologies, as, by 2023, the average CIO will be responsible for more than three times as many endpoints as this year.

Gartner has shortlisted the 10 most strategic IoT technologies and trends that will enable new revenue streams and business models, as well as new experiences and relationships:

Trend No. 1: Artificial Intelligence (AI)

Gartner forecasts that 14.2 billion connected things will be in use in 2019, and that the total will reach 25 billion by 2021, producing an immense volume of data. “Data is the fuel that powers the IoT and the organization’s ability to derive meaning from it will define their long term success,” said Mr. Jones. “AI will be applied to a wide range of IoT information, including video, still images, speech, network traffic activity and sensor data.”

The technology landscape for AI is complex and will remain so through 2023, with many IT vendors investing heavily in AI, variants of AI coexisting, and new AI-based tools and services emerging. Despite this complexity, it will be possible to achieve good results with AI in a wide range of IoT situations. As a result, CIOs must build an organization with the tools and skills to exploit AI in their IoT strategy.

Trend No. 2: Social, Legal and Ethical IoT

As the IoT matures and becomes more widely deployed, a wide range of social, legal and ethical issues will grow in importance. These include ownership of data and the deductions made from it; algorithmic bias; privacy; and compliance with regulations such as the General Data Protection Regulation.

“Successful deployment of an IoT solution demands that it’s not just technically effective but also socially acceptable,” said Mr. Jones. “CIOs must, therefore, educate themselves and their staff in this area, and consider forming groups, such as ethics councils, to review corporate strategy. CIOs should also consider having key algorithms and AI systems reviewed by external consultancies to identify potential bias.”

Trend No. 3: Infonomics and Data Broking

Last year’s Gartner survey of IoT projects showed 35 percent of respondents were selling or planning to sell data collected by their products and services. The theory of infonomics takes this monetization of data further by seeing it as a strategic business asset to be recorded in the company accounts. By 2023, the buying and selling of IoT data will become an essential part of many IoT systems. CIOs must educate their organizations on the risks and opportunities related to data broking in order to set the IT policies required in this area and to advise other parts of the organization.

Trend No. 4: The Shift from Intelligent Edge to Intelligent Mesh

The shift from centralized and cloud to edge architectures is well under way in the IoT space. However, this is not the end point because the neat set of layers associated with edge architecture will evolve to a more unstructured architecture comprising a wide range of “things” and services connected in a dynamic mesh. These mesh architectures will enable more flexible, intelligent and responsive IoT systems, although often at the cost of additional complexities. CIOs must prepare for mesh architectures’ impact on IT infrastructure, skills and sourcing.

Trend No. 5: IoT Governance

As the IoT continues to expand, the need for a governance framework that ensures appropriate behavior in the creation, storage, use and deletion of information related to IoT projects will become increasingly important. Governance ranges from simple technical tasks such as device audits and firmware updates to more complex issues such as the control of devices and the usage of the information they generate. CIOs must take on the role of educating their organizations on governance issues and in some cases invest in staff and technologies to tackle governance.

Trend No. 6: Sensor Innovation

The sensor market will evolve continuously through 2023. New sensors will enable a wider range of situations and events to be detected, current sensors will fall in price to become more affordable or will be packaged in new ways to support new applications, and new algorithms will emerge to deduce more information from current sensor technologies. CIOs should ensure their teams are monitoring sensor innovations to identify those that might assist new opportunities and business innovation.

Trend No. 7: Trusted Hardware and Operating System

Gartner surveys invariably show that security is the most significant area of technical concern for organizations deploying IoT systems. This is because organizations often don’t have control over the source and nature of the software and hardware being utilised in IoT initiatives. “However, by 2023, we expect to see the deployment of hardware and software combinations that together create more trustworthy and secure IoT systems,” said Mr. Jones. “We advise CIOs to collaborate with chief information security officers to ensure the right staff are involved in reviewing any decisions that involve purchasing IoT devices and embedded operating systems.”

Trend No. 8: Novel IoT User Experiences

The IoT user experience (UX) covers a wide range of technologies and design techniques. It will be driven by four factors: new sensors, new algorithms, new experience architectures and context, and socially aware experiences. With an increasing number of interactions occurring with things that don’t have screens and keyboards, organizations’ UX designers will be required to use new technologies and adopt new perspectives if they want to create a superior UX that reduces friction, locks in users, and encourages usage and retention.

Trend No. 9: Silicon Chip Innovation

“Currently, most IoT endpoint devices use conventional processor chips, with low-power ARM architectures being particularly popular. However, traditional instruction sets and memory architectures aren’t well-suited to all the tasks that endpoints need to perform,” said Mr. Jones. “For example, the performance of deep neural networks (DNNs) is often limited by memory bandwidth, rather than processing power.”

By 2023, it’s expected that new special-purpose chips will reduce the power consumption required to run a DNN, enabling new edge architectures and embedded DNN functions in low-power IoT endpoints. This will support new capabilities such as data analytics integrated with sensors, and speech recognition included in low cost battery-powered devices. CIOs are advised to take note of this trend as silicon chips enabling functions such as embedded AI will in turn enable organizations to create highly innovative products and services.

Trend No. 10: New Wireless Networking Technologies for IoT

IoT networking involves balancing a set of competing requirements, such as endpoint cost, power consumption, bandwidth, latency, connection density, operating cost, quality of service, and range. No single networking technology optimizes all of these and new IoT networking technologies will provide CIOs with additional choice and flexibility. In particular they should explore 5G, the forthcoming generation of low earth orbit satellites, and backscatter networks.

The human touch and AI

CIOs need to prepare workers for a future in which people do more creative and impactful work because they no longer have to perform many routine and repetitive tasks, according to Gartner, Inc. People and machines are entering a new era of learning in which artificial intelligence (AI) augments ordinary intelligence and helps people realize their full potential.

“Pairing AI with a human creates a new decision-making model in which AI offers new facts and options, but the head remains human, as does the heart,” said Svetlana Sicular, research vice president at Gartner. “Let people identify the most suitable answers among the different ones that AI offers, and use the time freed by AI to create new things.”

Survey Finds AI Eliminates Fewer Jobs Than Expected — and Can Create Them

AI will change the workforce, but its impact will not be detrimental to all workers. According to a Gartner survey conducted in the first quarter of 2018 among 4,019 consumers in the U.K. and U.S., 77 percent of employees whose employers have yet to launch an AI initiative expect AI to eliminate jobs. But the same survey found that only 16 percent of employees whose employers have actually adopted AI technologies saw job reductions, and 26 percent of respondents reported job increases after adopting AI.

People will learn how to do less routine work. They will be trained in new tasks, while old tasks that have become routine will be done by machines. “The human is the strongest element in AI,” explained Ms. Sicular. “Newborns need an adult human to survive. AI is no different. The strongest component of AI is the human touch. AI serves human purposes and is developed by people.”

There are, for example, cases in which consulting an AI system has saved money, time and distress. Not consulting an AI system may actually become unethical in the future — in the field of medicine, for example. “The future lies not in a battle between people and machines, but in a synthesis of their capabilities, of humans and AI working together,” said Helen Poitevin, research senior director at Gartner.

People and Machines: A New Era of Learning

Although AI will give employees the time to do more, organizations will need to train and retrain their employees in anticipation of AI investments.

“We are entering an era of continuous learning,” said Ms. Sicular. “People will have to learn how to live with AI-enabled machines. When machines take away routine tasks, people will have the time to do more new tasks, so they will need to constantly learn.” People will also need to learn how to train AI systems to be useful, clear and trustworthy, in order to work alongside them cooperatively.

“It’s about trust and engagement. People need to trust machines — this is the ultimate condition of AI adoption and success,” said Ms. Sicular.

Choose Your AI Teacher Well

CIOs are likely to be the leader or instigator of AI initiatives in their organization. While machine learning focuses on creating new algorithms and improving the accuracy of “learners”, the machine teaching discipline focuses on the efficacy of “teachers.”

The role of teacher is to transfer knowledge to the learning machine, so that it can generate a useful model that can approximate a concept. Machine learning models and AI applications derive intelligence from the available data in ways that people direct them to do. “These technologies learn from teachers, so it is essential to choose your teachers well,” said Ms. Sicular. “While some may think AI is hard for people, we can also ask ourselves ‘Are people easy for AI?’”

“As a CIO, you will shape the future of work by how you invest in technology and people,” said Ms. Poitevin. “Today, the majority of the CIOs we speak to find themselves amid a proliferation of “bots” (physical robots and software virtual assistants). Adoption of bots in the workplace is rising as workers become increasingly comfortable working with machines and grow more supportive of them.”

CIOs believe bots will be integral to our daily lives, wherever we would ‘rather have a bot do it.’ “By 2020, AI technologies will pervade almost every new software product and service,” said Ms. Poitevin. “Well-designed robots and virtual assistants will be embraced and seen as helping employees focus on their best work by relieving them of a mountain of mundane work.”

IDC predicts direct digital transformation spending of $5.5 trillion over the years 2018 to 2021.

As the top digital transformation (DX) market research firm in the world, International Data Corporation (IDC) today unveiled IDC FutureScape: Worldwide Digital Transformation 2019 Predictions. In this year's DX predictions, IDC has identified two DX groups based on specific trends and attributes. Leaders in transformation (the digitally determined) are those organizations that have aligned the necessary elements of people, process, and technology for success. In contrast, laggards (the digitally distressed) have not developed the enterprise strategy necessary to align the organization effectively for transformation to date. IDC's market leading analytical understanding and insights are the result of extensive market research and survey data from over 3,000 companies worldwide.

IDC analysts Bob Parker and Shawn Fitzgerald recently discussed the ten industry predictions that will impact digital transformation efforts of CIOs and IT professionals over the next one to five years and offered guidance for managing the implications these predictions harbor for their IT investment priorities and implementation strategies.

The predictions from the IDC FutureScape for Worldwide Digital Transformation are:

"With direct digital transformation (DX) investment spending of $5.5 trillion over the years 2018 to 2021, this topic continues to be a central area of business leadership thinking," said Shawn Fitzgerald, research director, Worldwide Digital Transformation Strategies. "IDC's 2019 DX predictions represent our perspective on the major transformation trends we expect to see over the next five years. With almost 800 business use cases spanning 16 industries and eight functional areas, our DX spending guides illustrate where industry is both prioritizing digital investments and where we expect to see the largest growth in 3rd Platform and innovation accelerator technologies."

2018 saw numerous changes being made within the Data Centre Trade Association. The launch of a new website makes it easier for data centre owners and operators to find the information they require, and the new members’ portal has been designed to give a much better look and feel to the dedicated members’ pages.

The DCA - 2019

Having listened to our members and the data centre community over the past 12 months, our plans for 2019 are to continue to build on our identified areas of focus, provide independent advice and guidance to data centre owners, and deliver more opportunities for members to engage, collaborate and gain value from their trade association.

Insight

The workshops planned for 2019 will continue to educate the sector on the latest best practices and to provide updates on new developments. DCA events provide great networking and collaboration opportunities. Our social media channels and monthly publications continue to deliver and expand, providing ‘thought leadership’ and knowledge sharing content opportunities for our members – again all designed to assist data centre owners and operators.

Influence

Providing members with independent representation remains a key deliverable for The DCA in 2019. The DCA will continue to provide members with both a voice and a seat at the table at National, EU and International levels, ensuring key issues are flagged and that the sector’s voice is heard. Keeping one step ahead of policies is vital when it comes to steering the strategic direction for businesses. The DCA will continue to work closely with the EU and lobbying groups such as Policy Connect, via APPGs, to ensure that members’ concerns are heard.

Validation

An increased reliance on digital services in both our business and daily lives brings increased scrutiny. The word ‘regulation’ seems to be cropping up in conversations more and more. I am an advocate of scrutiny; however, I still prefer self-regulation to imposed regulation.

The DCA will continue to encourage its members to lead by example through the endorsement and adoption of best practices, the EU Code of Conduct and independent validation through approved certification bodies.

The DCA will continue to support active members who are involved in Certification and Special Interest Groups (SIGs). These working groups are run for and by DCA members under the Chatham House Rule. Intrinsically linked to many of these SIGs, The DCA will continue to support EN/EU and ISO standards committees to ensure new and existing best practices, codes and standards remain fit for purpose, accessible and affordable. Newly formed SIGs include a Workforce Development Group, Liquid Cooling Alliance, Open Compute Project (OCP) Working Group and Life Cycle Group.

Sustainability

The challenge of how to keep up with demand remains a daunting prospect for many businesses. Jobs need to be filled right across the sector especially when it comes to practical engineers, technicians, installers and operational/maintenance staff. Data Centres themselves may not employ a large workforce but the suppliers who support the sector do and the shortfall in available candidates to fill these roles is growing fast. The DCA recognise that to fill this gap we need to engage and attract a new generation of workforce at a much earlier stage in the educational cycle. In 2019 we will ramp up the work we are doing with STEM Learning to promote the DC Sector as a career of choice. Our aim is to recruit and support STEM Ambassadors from right across our membership to help in this effort.

In summary, there is a great deal to look forward to in 2019, and as the year unfolds the Data Centre Trade Association will continue to be here to support you.

For more information and to contact The DCA (Data Centre Alliance) Trade Association

Website: www.dca-global.org

Email: info@dca-global.org

Tel: +44 (0)845 873 4587

By Luca Rozzoni, Senior Product Manager for Europe, Chatsworth Products, Inc.

Computing at the edge is blurring the boundaries of the traditional data centre. The growth of the Internet of Things (IoT) means more data processing is being done on an ever-increasing range of smart devices in non-traditional locations such as manufacturing floors, warehouses and outdoors. As the network spreads, such critical factors as power management, cooling and physical security are taking on expanded roles – and even greater importance – in network operations. As we look to 2019 and beyond, we forecast a more holistic approach to data centre operations, in which infrastructure, hardware and software are addressed as a unified system.

In Europe, we are seeing fast adoption and rapid growth of IoT across markets. Keeping pace with the increasing demands of edge and digital building initiatives next year will require support and protection of critical equipment, regardless of where it is located. Like the proverbial chain that is only as strong as its weakest link, any limitations in network design or performance will result in unacceptable quality of service. Playing a key role in maintaining network operations will be advanced rack and cable management solutions that can sustain the rigors of today’s technology demands.

Intelligent Power May Get More Traction

While rack densities continue to rise in Europe, they are still low compared with some other markets. An average data centre deploys 2-4 kilowatts (kW) per rack (in some isolated cases we see that being pushed to 10-15 kW). For that reason, a large portion of power deployments are conditioned by basic metered power distribution units (PDUs). There has been a slow adoption of the new generation of intelligent PDUs (monitored/switched), with price being the main stumbling block to greater market entry.

This situation should continue to evolve in 2019, because higher-density computing will require ever-more complex power distribution, monitoring and reporting. And even if rack densities do not increase significantly, compute power is increasing. As chip manufacturers add cores to processors (CPUs), Moore’s law will drive continued increases in computing per watt. Further, the size of the CPU package continues to increase, so the heat flux due to the CPU is decreasing. Basically, servers are more power efficient and support higher utilisation.

So rack densities will probably not climb significantly, but the amount of compute power (utilisation) per rack will. Further into the future, we will have to keep an eye on developments in artificial intelligence (AI). Typically, the PCI cards required to drive AI run at 100-percent power when models are being trained. When AI takes off, we expect extremely large and sustained loads on the data centre that will increase the average workload.

The latest examples of products that can help reduce the complexity of delivering power to equipment include three-phase PDUs equipped with power monitoring that stretches across the enterprise. Also accessible through an IP connection, they enable an IT team to monitor anything from anywhere, all the way down to the device level. For all these reasons, we expect to see a growing interest in intelligent PDUs in 2019 as customers prepare for the next technology upgrades.

Growing Focus on Airflow Management

As equipment densities continue to increase, airflow management will become an even more vital practice for optimising energy efficiency and maintaining enterprise uptime. Partial containment is still widely deployed, but methods exist to maximise thermal efficiencies (free cooling) by following best thermal management practices. Within the rack, for example, good airflow management requires the use of snap-in filler panels to block open rack-mount spaces as well as air dams to block airflow around the sides and top of equipment. Additionally, passive cooling solutions and vertical exhaust ducts (chimney) help isolate hot exhaust air from cooled air, reducing cooling demands at rack and room levels. With the added benefit of lower energy costs for the end user, we can expect to see greater deployment of this approach to providing equipment cooling performance throughout the data centre.

For this to happen, solution vendors need to provide more education to users on thermal management practices in 2019. The No. 1 thermal management question that vendors hear is “Can I manage higher density without added cooling capacity?” This indicates that many do not yet grasp the benefits of passive cooling in reducing energy consumption and lowering construction and operational costs. There is a common perception that, for high-density environments, it is safer to deploy active cooling devices such as in-row cooling. The majority of data centres continue to oversupply cold air in order to overcome inefficiencies.

We know that customers would like to support higher rack densities within acceptable operational temperatures. At these higher rack densities, however, adding more air conditioning to the room isn’t an effective option. Solutions like Passive Cooling® from Chatsworth Products provide ideal airflow to cool each rack even if the room design limits the amount of airflow volume. They meet the needs of these applications within the architectural limitations of the facility, completely segregating hot and cold air, and can be applied at the cabinet or aisle level, providing increased equipment cooling performance in all elements of the data centre mechanical plant.

Protecting Data and Privacy is a Growing Concern

Finally, security will remain a major area of focus in 2019, as data breaches have become a growing concern among data centre managers, CIOs and end users. The main concern appears to be linked to security from a physical and cyber standpoint. We see a considerable number of enquiries related to electronic access control with two layers of authentication (mostly biometric) and monitoring devices to better control and manage all the devices deployed in the data centre.

In terms of market growth in 2019, we anticipate some positive movement in a number of areas, including cloud applications, colocation, enterprise data centres, and more development at the edge of the network. Cloud and colocation data centres should see the fastest growth and expansion, as large corporate users seek ways to reduce costs related to their IT network deployments and management.

Project Overview

EURECA was a three-year project (March 2015 to February 2018) funded by the European Commission’s Horizon 2020 Research and Innovation programme, with partners from the United Kingdom, Ireland, the Netherlands and Germany. It aimed to help address the data centre energy efficiency challenge in the European public sector by supporting public authorities in adopting a modern, innovative procurement approach.

EURECA supported consolidation, new build and retrofit data centre projects in various European countries, with a focus on Public Procurement for Innovation (PPI). Additionally, EURECA supported the development of European standards, best practices and policies related to energy efficiency in data centres and green public procurement by providing scientific evidence and data.

For further information, please visit the EURECA project website: www.dceureca.eu

Key Results

The EURECA team designed various innovative engagement methodologies that used state-of-the-art models and tools. The project supported consolidation, new build and retrofit data centre projects in member states. This resulted in savings of over 131 GWh/year of primary energy (52.5 GWh/year of end-use energy) from immediate pilots supported within the project lifetime in Ireland, the Netherlands and the United Kingdom (plus various ongoing ones in other EU member states). This equated to savings of more than 27,830 tCO2/year, with annual electricity bill savings of €7.159M. These results came from pilots involving 337 data centres.
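To put these headline numbers in context, the sketch below derives the conversion factors they imply: primary energy per unit of end-use energy, carbon intensity, electricity price and average saving per data centre. It uses only the figures quoted above, which are themselves stated as lower bounds ("over", "more than"), so the derived ratios are approximate. The variable names are illustrative, and the ratios are not values published by the project.

```python
# Figures quoted in the text above (annual savings from the pilots).
PRIMARY_ENERGY_GWH = 131.0       # primary energy saved
END_USE_ENERGY_GWH = 52.5        # end-use energy saved
CO2_SAVED_TONNES = 27_830.0      # 27.83 thousand tCO2
BILL_SAVINGS_EUR = 7_159_000.0   # electricity bill savings
DATA_CENTRES = 337               # data centres involved in the pilots

KWH_PER_GWH = 1_000_000
end_use_kwh = END_USE_ENERGY_GWH * KWH_PER_GWH

# Derived ratios (approximations implied by the quoted figures).
primary_energy_factor = PRIMARY_ENERGY_GWH / END_USE_ENERGY_GWH
kg_co2_per_kwh = CO2_SAVED_TONNES * 1000 / end_use_kwh
eur_per_kwh = BILL_SAVINGS_EUR / end_use_kwh
mwh_per_data_centre = END_USE_ENERGY_GWH * 1000 / DATA_CENTRES

print(f"Primary energy factor:   {primary_energy_factor:.2f}")
print(f"Carbon intensity:        {kg_co2_per_kwh:.2f} kg CO2/kWh")
print(f"Implied electricity price: {eur_per_kwh:.3f} EUR/kWh")
print(f"Average end-use saving:  {mwh_per_data_centre:.0f} MWh per data centre")
```

The figures are internally consistent: roughly 2.5 units of primary energy per unit of end-use energy, about half a kilogram of CO2 per kWh, and an electricity price of around 14 euro cents per kWh.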

EURECA influenced various initiatives related to policy, such as:

EURECA contributed to several standards, including the EN50600 series on data centres. EURECA team members also play an active role in developing the EU Code of Conduct for data centre energy efficiency.

Finally, EURECA trained over 815 stakeholders through 10 face-to-face training events held across Europe. To learn more about EURECA training, please read this article[1].

In its overall evaluation of the project, the European Commission stated that:

“The project has delivered exceptional results with significant immediate or potential impact”.

Data Centre Market Directory

As part of the EURECA project, a vendor-neutral open market directory was established for the European data centre market. This directory currently lists over 250 data centre products and services available to the European market. It is hosted by the ECLab[2], the EURECA coordinator.

So, if your business provides data centre related products and/or services to the European market (irrespective of company size), you are welcome to list your offerings here (DCdirectory.eu) for FREE.

Policy Recommendations

Scientific research on hardware refresh rates was carried out under the EURECA project[3]. This work was referenced by the Amsterdam Economic Board, Netherlands, in a June 2018 report that provided policy guidance in the field based on its findings[4].

In September 2018, European member states voted to implement regulation (EU) No 617/2013 under Directive 2009/125/EC, which focussed on the Ecodesign requirements for servers and online data storage products. Computer Weekly interviewed the EURECA coordinator, who was a key player in supporting the legislation and who shared some of the research findings behind the evidence provided to policymakers[5].

EURECA in the news

In February 2018, Computer Weekly published an interview with the project coordinator on the energy consumption of public sector data centres. The article discussed the EURECA project and revealed for the first time some of the project findings, such as the size and running cost of European public sector data centres[6].

In October 2018, the BBC Reality Check team interviewed Dr Rabih Bashroush about the energy consumption of streaming media and the overall trends in data centre energy consumption. Based on this, they published an article titled: “Climate change: Is your Netflix habit bad for the environment?”[7]

Next steps

The rich body of knowledge produced by the EURECA project, along with the impact already created, ensures a lasting legacy well beyond the project lifetime.

The EURECA team plans to:

[1] Umaima Haider. "Building the Capacities and Skills of Stakeholders in the Data Centre Industry". DataCentre Solutions (DCS) Europe Magazine, July 2018. https://tinyurl.com/y83n29ex

[2] Enterprise Computing Research Group, University of East London, UK. https://www.eclab.uel.ac.uk

[3] Rabih Bashroush. "A Comprehensive Reasoning Framework for Hardware Refresh in Data Centres". IEEE Transactions on Sustainable Computing, 2018. https://doi.org/10.1109/TSUSC.2018.2795465

[4] "Circulaire Dataservers" (in Dutch). Amsterdam Economic Board, Netherlands, June 2018. https://www.amsterdameconomicboard.com/app/uploads/2018/06/Circulaire-Dataservers-Rapport-2018.pdf

[5] "EU-backed bid to cap idle energy use by datacentre servers moves closer". Computer Weekly, 19 September 2018. https://www.computerweekly.com/news/252448914/EU-backed-bid-to-cap-idle-energy-use-by-datacentre-servers-moves-closer

[6] "The EURECA moment: Counting the cost of running the UK’s public sector datacentres". Computer Weekly, 20 February 2018. http://www.computerweekly.com/feature/The-EURECA-moment-Counting-the-cost-of-running-the-UKs-public-sector-datacentres

[7] "Climate change: Is your Netflix habit bad for the environment?". BBC, 12 October 2018. https://www.bbc.co.uk/news/technology-45798523

Dr Umaima Haider

Dr Umaima Haider is a Research Fellow at the University of East London within the EC Lab (https://www.eclab.uel.ac.uk). She held a visiting research scholar position at Clemson University, South Carolina, USA. Her main research interest is in the design and implementation of energy efficient systems, especially in a data centre environment.

Tool Kit to help Data Centres assess their environmental impact

By Vasiliki Georgiadou, Project Manager, Green IT Amsterdam

The European CATALYST innovation project has launched a tool kit that helps data centres to self-assess their environmental impact. The tool kit makes use of existing and well-known standards like EN 50600 and the EU Code of Conduct. It helps owners and operators to prepare themselves for a future where data centres are subject to new and ever more stringent rules and regulations. But perhaps most importantly it can help them reduce costs and develop new revenue streams by becoming an integral part of smart energy grids.

The data centre industry is changing rapidly. We obviously still need data centres to host cloud services, enterprise applications or simply our holiday pictures. At the same time we see a trend where mounting societal pressure requires data centres to become greener. In other words: to minimize their usage of natural resources like energy and water. How can a data centre owner or operator lower the environmental impact of their facilities if we do not have a well-defined and structured method in place to help them assess how good (or bad) their environmental performance is?

This is why the European CATALYST innovation project has developed a tool kit that helps a data centre to perform a self-assessment of their environmental impact. The test will give a data centre operator a useful classification of how their facility performs relative to a number of well-known standards and a set of new services that will be introduced by the CATALYST project in the near future.

The tool kit in this first edition looks at four themes: renewable energy, heat reuse, energy efficiency and resource management (energy, water and more). Its aim is by no means to develop a new standard. Its purpose is to help the data centre industry, engineering firms, (local) governments and others assess how a data centre is performing relative to existing standards. At the heart of the tool kit lies the so-called Value Added Plan that - based on an initial assessment - helps a data centre facility to improve upon its environmental impact.

The tool kit consists of a number of building blocks and workflows. The workflows guide the user through a process that helps assess the performance of a facility based on standards like EN 50600. The tool kit also uses metrics that have been developed by The Green Grid and a number of research and innovation projects like All4Green, CoolEmAll, GreenDataNet, RenewIT, GENIC, DOLFIN, DC4CITIES and GEYSER.

The assessment provides a data centre with a simple method to keep a score on their performance through its grades: Bronze, Silver and Gold. The tool kit produces a separate grade per theme. In that way data centre owners or operators are able to set their own priorities and decide for themselves which themes are most relevant to their facilities or business model. The earlier mentioned Value Added Plan makes it possible for a facility to put in place the measures that help them achieve a higher grade for a chosen theme. Although the assessment is not meant to compare data centres - it is not a benchmark - it does give individual facilities a very good insight into their environmental performance and impact.
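To make the per-theme grading concrete, here is a minimal Python sketch of how a facility might record its grades and pick the next target for the themes it prioritises. It assumes only what is described above: four themes, three grades and one grade per theme. The names `Grade`, `THEMES` and `improvement_targets`, as well as the example grades, are hypothetical illustrations and not part of the CATALYST tool kit, which does not publish its scoring logic in this article.

```python
from enum import IntEnum


class Grade(IntEnum):
    """The three grades named in the article, ordered lowest to highest."""
    BRONZE = 1
    SILVER = 2
    GOLD = 3


# The four themes covered by the first edition of the tool kit.
THEMES = (
    "renewable energy",
    "heat reuse",
    "energy efficiency",
    "resource management",
)


def improvement_targets(grades, priority_themes):
    """Return the next grade to aim for in each prioritised theme.

    A theme that is already at Gold maps to None. This is a hypothetical
    helper, not the tool kit's Value Added Plan itself.
    """
    targets = {}
    for theme in priority_themes:
        if theme not in THEMES:
            raise ValueError(f"unknown theme: {theme}")
        current = grades[theme]
        targets[theme] = None if current is Grade.GOLD else Grade(current + 1)
    return targets


# Illustrative grades for an imaginary facility (not real assessment data).
example_grades = {
    "renewable energy": Grade.SILVER,
    "heat reuse": Grade.BRONZE,
    "energy efficiency": Grade.GOLD,
    "resource management": Grade.BRONZE,
}

if __name__ == "__main__":
    chosen = ["heat reuse", "energy efficiency"]
    for theme, target in improvement_targets(example_grades, chosen).items():
        print(theme, "->", target.name if target else "already at Gold")
```

Keeping one grade per theme, rather than a single overall score, mirrors the point made above: operators choose which themes matter to their business model and work on those first.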

Classifying their environmental impact will also help data centres to better understand and communicate the role they would like to play in the energy transition Europe will be going through over the coming years. More and more, we see a trend where data centres might become part of smart grids and heat networks. Many data centres are very well suited to become energy hubs that help store and supply energy and also help stabilize the increasingly complex grids of many European countries, with the financial benefits that might come with such a role. By understanding and improving their environmental impact in terms of energy usage, heat reuse and other variables, they might even be able to develop new revenue streams.

By Leo Craig, General Manager of Riello UPS

Making any prediction opens you up to the possibility of being proved wrong, although it’s safe to say that 2019 will continue to see demand for data centre capacity grow.

According to Equinix, the volume of data created by businesses across Europe increases by 48% every year. And with the number of connected devices in the UK alone set to top 600 million by 2023, our personal and professional lives depend more on the storage and processing powers of our data centres than ever.

Indeed, there are currently more than 60,000 m² of new data centres under construction, which will take total UK capacity to above 900,000 m² once completed.

This expansion doesn’t come without any cost. Even though the industry goes to great lengths to improve efficiency, data centres are power-hungry beasts. They’ll consume a fifth of all the world’s energy by 2025.

Here in the UK, we face the ongoing challenge of balancing rising demand with limited electrical supply. Our energy mix is changing forever. Nearly 23 GW of thermal capacity has gone offline over the last decade, with a further 24 GW of coal and nuclear set to go by 2025.

Renewables are picking up some of that slack, with sources such as solar and wind supplying a record 31.7% of the country’s electricity in Q2 of 2018.

But the only truly sustainable solution is a smart grid that better matches supply with demand in real time. That means a decentralised National Grid, with a diverse range of power sources all interconnected and able to turn off demand or increase generation on a second-by-second basis to deliver secure, stable, and reliable power 365 days a year.

Changing Mindset

Battery storage isn’t a new concept, but its potential is undeniable. If just 5% of peak demand were met by demand side response (DSR), it would be equivalent to the electricity generated by a new nuclear power station.

Data centres have been slow on the uptake though, with the need for 100% uptime a far bigger priority than the potential rewards for taking part. But is 2019 when that tide turns?

Research earlier this year revealed 83% of mission-critical sites would participate in DSR if it didn’t negatively impact on core activity. Reliability will always come first, but what if there’s a way to take advantage of battery storage and improve system resilience at the same time?

Every data centre has uninterruptible power supplies (UPS) installed as an invaluable backup that keeps their IT equipment running whenever there’s a power problem. But in reality, how often is that backup actually required?

While it’s essential to have the safety net a UPS provides, the fact is it’s an expensive and often underutilised asset. Using a UPS’s batteries for energy storage transforms a piece of reactive infrastructure into something that’s working for your data centre 24-7.

There are a couple of caveats to keep in mind. Firstly, using a UPS to feed energy back to the grid is far easier if the system uses lithium-ion (Li-ion) batteries rather than the more traditional sealed lead-acid (SLA). This does result in a more expensive initial investment. However, the cost of Li-ion has fallen by 79% since 2010 and is predicted to drop further to roughly £50 per kWh by 2030, making it an increasingly viable option.

Li-ion cells deliver the same power density in less than half the space and weight, recharge much faster, and have 50 times the cycle life of SLA. In addition, they can operate at temperatures of up to 40°C – significantly reducing air conditioning costs and potentially eliminating the need for any separate battery room.

Lithium-ion batteries also last for 10-15 years, during which time an SLA would need replacing up to three times, meaning the total cost of ownership over the course of a decade could be anything from 10-40% less.

Secondly, energy storage isn’t for everyone. To be commercially viable, an organisation needs to be a substantial energy user with sizeable annual electricity bills.

The biggest UK data centres consume 30 GWh a year, a bill of £3 million. Of course, that’s the highest end of the spectrum, but there are plenty of facilities with electricity costs in the £500,000-£1 million range suitable to take part.
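The arithmetic behind those figures can be sketched in a few lines of Python. The calculation uses only the numbers quoted above. The function names are illustrative, and applying the same unit price to smaller facilities is a simplifying assumption, since real tariffs vary with contract and consumption.

```python
KWH_PER_GWH = 1_000_000


def implied_price_gbp_per_kwh(annual_gwh, annual_bill_gbp):
    """Average unit price implied by an annual consumption and bill."""
    return annual_bill_gbp / (annual_gwh * KWH_PER_GWH)


def implied_consumption_gwh(annual_bill_gbp, price_gbp_per_kwh):
    """Annual consumption implied by a bill at a given unit price."""
    return annual_bill_gbp / price_gbp_per_kwh / KWH_PER_GWH


if __name__ == "__main__":
    # Largest UK facilities, as quoted: 30 GWh a year for GBP 3 million.
    price = implied_price_gbp_per_kwh(30, 3_000_000)
    print(f"Implied price: {price * 100:.0f}p per kWh")

    # Assumption: smaller sites pay the same average unit price.
    for bill in (500_000, 1_000_000):
        gwh = implied_consumption_gwh(bill, price)
        print(f"GBP {bill:,} a year is roughly {gwh:.0f} GWh")
```

In other words, the quoted bill works out at about 10p per kWh, and at that price the £500,000-£1 million range corresponds to facilities using roughly 5-10 GWh a year.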

Exploring The Practicalities

So how can data centres tap into their UPS batteries for energy storage? There are three main incentives the National Grid offers to help balance the network.

There’s the Capacity Market, which is run as an auction in which successful applicants receive long-term revenues to either invest in additional generation or ensure existing power is always available. This is a serious commitment though, as you must be able to deliver the required energy throughout the entire contract.

A more realistic route for data centres could be providing Reserve Services, which basically covers unexpected increases in demand or a lack of generation.

The most common mechanism is the Short Term Operating Reserve (STOR), where you either reduce demand or increase generation with around 10 minutes’ notice. Payments are guaranteed for two years but you must have the capability to deliver at least three times a week.

Other Reserve Services include Demand Turn-Up, where businesses receive a fee to use energy at times where there’s a surplus – mainly overnight, so it’s only an option if you don’t have set requirements for when you need power – and Fast Reserve. This balances out demand surges, such as those massively popular TV programmes where everyone goes to turn the kettle on during the ad break.

Perhaps the most feasible – and currently most rewarding – entry point for data centres is Frequency Response, which ensures a constant grid frequency of 50Hz, with one hertz latitude either side.