If I had a bitcoin for every time I’ve heard vendors and Channel companies express the desire to become a ‘trusted advisor’ to their customers, well, depending on when I’d sold them, I would either be very wealthy or retirement wealthy! It seems that every organisation involved in the data centre and IT supply chain recognises that it can no longer survive as simply a seller/supplier of software, hardware, data centre space and the like. No, customers want someone to hold their hand as they seek to negotiate as pain-free a route as possible through the digital transformation maze.

Unfortunately, long before the digital world reared its exciting, disruptive and slightly scary head, the trusted advisor term had fallen into disrepute, as most of those companies who claimed they wanted to become this to all their customers spectacularly failed to live up to their stated objective.

The temptation to fit square pegs into round holes in return for money was too great, hence many, many end users, still massaging their bruised egos and wallets, are now somewhat sceptical about the spectacular claims being made about anything from colos to Cloud, managed services to software-defined everything and, most recently, IoT and AI.

Anyone running a business has seen how technology-savvy start-ups have made significant in-roads into many industry sectors – leveraging new thinking and new ways of looking at and harnessing data centre and IT infrastructure to their advantage.

So, few, if any, end users are unreceptive to the need for infrastructure change.

However, many feel vulnerable, as their lack of knowledge leaves them prey to unscrupulous suppliers who might pay lip service to the trusted advisor role, but are still rather too heavily driven by the lure of profit, whatever the cost to the customers.

The above might be a slightly harsh summary of the status quo, but there’s little doubt that the majority of end users are desperately seeking one or more suppliers they can really trust to help them obtain the business solutions they need, and not simply sell them a bunch of new servers and a stack of storage.

The Channel perfectly reflects this confusing landscape. Many of their staff are struggling to understand how to sell solutions with monthly payments, as opposed to tin and software with a one-off, up-front capital payment. And many Channel companies aren’t sure whether to try to create their own Clouds and managed services, or to leverage those available from, say, the hyperscalers and then add some value.

As with any historical disruption (and I don’t think it’s going too far to place the current digital revolution somewhere in the history books as a significant, global event), there will be winners and losers. The winners will be the ones who understand what digitalisation is all about and who can use it, or help others to use it, to solve real business problems. End users know they have to adapt, and there really is a great opportunity, not so much for the vendors (no one organisation can supply the complete, end-to-end digital solution) but for the Channel, to become the change facilitator.

Words such as ‘trust’ and ‘honesty’ have taken a bit of a battering across various walks of life in recent times (not least on the cricket field). Let’s hope that all those involved in selling data centre and IT solutions can be honest enough to tell potential customers whether or not they can help them. And let’s hope that switched-on Channel organisations recognise that putting together a portfolio of solutions from different colos, vendors, managed services providers etc. could well put them in a strong position to offer end users the trust, honesty and help they want.

Worldwide spending on public cloud services and infrastructure is forecast to reach $160 billion in 2018, an increase of 23.2% over 2017, according to the latest update to the International Data Corporation (IDC) Worldwide Semiannual Public Cloud Services Spending Guide. Although annual spending growth is expected to slow somewhat over the 2016-2021 forecast period, the market is forecast to achieve a five-year compound annual growth rate (CAGR) of 21.9% with public cloud services spending totaling $277 billion in 2021.

The industries that are forecast to spend the most on public cloud services in 2018 are discrete manufacturing ($19.7 billion), professional services ($18.1 billion), and banking ($16.7 billion). The process manufacturing and retail industries are also expected to spend more than $10 billion each on public cloud services in 2018. These five industries will remain at the top in 2021 due to their continued investment in public cloud solutions. The industries that will see the fastest spending growth over the five-year forecast period are professional services (24.4% CAGR), telecommunications (23.3% CAGR), and banking (23.0% CAGR).

"The industries that are spending the most – discrete manufacturing, professional services, and banking – are the ones that have come to recognize the tremendous benefits that can be gained from public cloud services. Organizations within these industries are leveraging public cloud services to quickly develop and launch 3rd Platform solutions, such as big data and analytics and the Internet of Things (IoT), that will enhance and optimize the customer's journey and lower operational costs," said Eileen Smith, program director, Customer Insights & Analysis.

Software as a Service (SaaS) will be the largest cloud computing category, capturing nearly two thirds of all public cloud spending in 2018. SaaS spending, which comprises applications and system infrastructure software (SIS), will be dominated by applications purchases, which will make up more than half of all public cloud services spending through 2019. Enterprise resource management (ERM) applications and customer relationship management (CRM) applications will see the most spending in 2018, followed by collaborative applications and content applications.

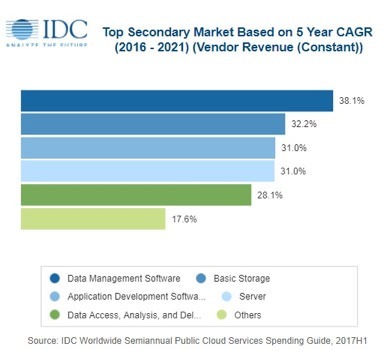

Infrastructure as a Service (IaaS) will be the second largest category of public cloud spending in 2018, followed by Platform as a Service (PaaS). IaaS spending will be fairly balanced throughout the forecast with server spending trending slightly ahead of storage spending. PaaS spending will be led by data management software, which will see the fastest spending growth (38.1% CAGR) over the forecast period. Application platforms, integration and orchestration middleware, and data access, analysis and delivery applications will also see healthy spending levels in 2018 and beyond.

The United States will be the largest country market for public cloud services in 2018 with its $97 billion accounting for more than 60% of worldwide spending. The United Kingdom and Germany will lead public cloud spending in Western Europe at $7.9 billion and $7.4 billion respectively, while Japan and China will round out the top 5 countries in 2018 with spending of $5.8 billion and $5.4 billion, respectively. China will experience the fastest growth in public cloud services spending over the five-year forecast period (43.2% CAGR), enabling it to leap ahead of the UK, Germany, and Japan into the number 2 position in 2021. Argentina (39.4% CAGR), India (38.9% CAGR), and Brazil (37.1% CAGR) will also experience particularly strong spending growth.

The U.S. industries that will spend the most on public cloud services in 2018 are discrete manufacturing, professional services, and banking. Together, these three industries will account for roughly one third of all U.S. public cloud services spending this year. In the UK, the top three industries (banking, retail, and discrete manufacturing) will provide more than 40% of all public cloud spending in 2018, while discrete manufacturing, professional services, and process manufacturing will account for more than 40% of public cloud spending in Germany. In Japan, the professional services, discrete manufacturing, and process manufacturing industries will deliver more than 43% of all public cloud services spending. The professional services, discrete manufacturing, and banking industries will represent more than 40% of China's public cloud services spending in 2018.

"Digital transformation is driving multi-cloud and hybrid environments for enterprises to create a more agile and cost-effective IT environment in Asia/Pacific. Even heavily regulated industries like banking and finance are using SaaS for non-core functionality, platform as a service (PaaS) for app development and testing, and IaaS for workload trial runs and testing for their new service offerings. Drivers of IaaS growth in the region include the increasing demand for more rapid processing infrastructure, as well as better data backup and disaster recovery," said Ashutosh Bisht, research manager, Customer Insights & Analysis.

100% of IT leaders with a high degree of cost transparency are on the company board, compared with 54% of those in enterprises that are not, or only partially, cost transparent.

A survey of senior IT decision-makers in large enterprises, commissioned by Coeus Consulting, found that IT leaders who can clearly demonstrate the cost and value of IT have greater influence over the strategic direction of the company and are best positioned to deliver business agility for digital transformation. Consequently, cost transparency leaders are twice as likely to be represented at board level and thus are better prepared for external challenges such as changing consumer demand, GDPR and Brexit.

The survey of organisations with revenues of between £200m and £30bn revealed the importance of cost transparency within IT when it comes to forward planning and defining business strategy. Based on the responses of senior decision-makers (more than half of whom are C-level), the report identifies a small group of Cost Transparency Leaders who indicated that their departments: work with the rest of the organisation to provide accurate cost information; ensure that services are fully costed; and manage the cost life cycle.

88% of respondents were unable to indicate that they can demonstrate cost transparency to the rest of the organisation.

When compared to their counterparts, Cost Transparency Leaders are:

- Twice as likely to be represented at board level (100% v 54%)
- 1.5x more likely to be involved in setting business strategy (85% v 55%)
- Twice as likely to report that the business values IT’s advice (100% v 52%)
- Twice as likely to demonstrate alignment with the business (90% v 50%)
- More than seven times as likely to link IT performance to genuine business outcomes (38% v 5%)

“This survey clearly reveals that cost transparency is a pre-requisite for IT leaders with aspirations of being a strategic partner to the business. Those that get it right are better able to transform the perception of IT from ‘cost centre’ to ‘value centre’ and support the constant demand for business agility that is typical of the modern, digital organisation. Only those that have achieved cost transparency in their IT operations will be able to deal effectively with external challenges such as Brexit and GDPR,” said James Cockroft, Director at Coeus Consulting.

Digital transformation trends mean that businesses are focusing more heavily on their customers and are using technology to improve their experience. However, IT departments remain bogged down in day-to-day activities and the need to keep the lights on, which is preventing teams from focusing on how they can help drive improvements to the customer experience.

This is according to research commissioned by managed services provider Claranet, with results summarised in its 2018 Report, Beyond Digital Transformation: Reality check for European IT and Digital leaders.

In a survey of 750 IT and Digital decision-makers from organisations across Europe, market research company Vanson Bourne found that the overwhelming majority (79 per cent) feel that the IT department could be more focused on the customer experience, but that staff do not have the time to do so. More generally, almost all respondents (98 per cent) recognise that there would be some kind of benefit if they adopted a more customer-centric approach, whether this be developing products more quickly (44 per cent), greater business agility (43 per cent), or being better prepared for change (43 per cent).

Commenting on the findings, Michel Robert, Managing Director at Claranet UK, said: “As technology develops, IT departments are finding themselves with a long and growing list of responsibilities, all of which need to be carried out alongside the omnipresent challenge of keeping the lights on and making sure everything runs smoothly. Despite a tangible desire amongst respondents to adopt a more customer-centric approach, this can be difficult when IT teams have to spend a significant amount of their time on general management and maintenance tasks.”

Cisco has released the seventh annual Cisco® Global Cloud Index (2016-2021). The updated report focuses on data center virtualization and cloud computing, which have become fundamental elements in transforming how many business and consumer network services are delivered.

According to the study, both consumer and business applications are contributing to the growing dominance of cloud services over the Internet. For consumers, streaming video, social networking, and Internet search are among the most popular cloud applications. For business users, enterprise resource planning (ERP), collaboration, analytics, and other digital enterprise applications represent leading growth areas.

451 Research, a top five global IT analyst firm and sister company to datacenter authority Uptime Institute, has published Multi-tenant Datacenter Market reports on Hong Kong and Singapore, its fifth annual reports covering these key APAC markets.

451 Research predicts that Singapore’s colocation and wholesale datacenter market will see a CAGR of 8% and reach S$1.42bn (US$1bn) in revenue in 2021, up from S$1.06bn (US$739m) in 2017. In comparison, Hong Kong’s market will grow at a CAGR of 4%, with revenue reaching HK$7.01bn (US$900m) in 2021, up from HK$5.8bn (US$744m) in 2017.
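A compound annual growth rate can be checked from a start and end value with CAGR = (end / start)^(1 / years) − 1. The short Python sketch below applies that formula to the 2017 and 2021 revenue figures quoted above, treating them as exactly four years apart; the `cagr` helper is purely illustrative. The Singapore endpoints give about 7.6%, consistent with the rounded 8%, while the Hong Kong endpoints give closer to 5%, so the published 4% may rest on unrounded revenue figures not shown here.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    if start <= 0 or years <= 0:
        raise ValueError("start value and number of years must be positive")
    return (end / start) ** (1.0 / years) - 1.0


# 2017 -> 2021 colocation/wholesale revenue, in local currency (billions).
singapore = cagr(1.06, 1.42, 4)  # S$bn
hong_kong = cagr(5.8, 7.01, 4)   # HK$bn

print(f"Singapore: {singapore:.1%} per year")
print(f"Hong Kong: {hong_kong:.1%} per year")
```

The same helper works on the US dollar figures, since a fixed exchange rate cancels out of the end/start ratio.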

Hong Kong experienced another solid year of growth at nearly 16%, despite the lack of land available for building, the research finds. Several providers still have room for expansion, but other important players are near or at capacity, and only two plots of land are earmarked for datacenter use. Analysts note that the industry will face challenges as it continues to grow, hence the reduced growth rate over the next three years.

“The Hong Kong datacenter market continues to see impressive growth, and in doing so has managed to stay ahead of its closest rival, Singapore, for yet another year,” said Dan Thompson, Senior Analyst at 451 Research and one of the report’s authors. However, with analysts predicting an 8% CAGR for Singapore over the next few years, Singapore’s datacenter revenue is expected to surpass Hong Kong’s by the end of 2019.

451 Research analysts found that, while the number of new builds in Singapore slowed in 2017, the market still saw nearly 12% supply growth overall, compared with 19% the previous year. The report notes that the reduced builds in 2017 follow two years when providers had invested heavily in building new facilities and expanding existing ones.

“Rather than seeing 2017 as a down year for Singapore, we see it as a ‘filling up’ year, where providers worked to maximize their existing datacenter facilities,” said Thompson. “Meanwhile, 2018 is shaping up to be another big year, with providers including DODID, Global Switch and Iron Mountain slated to bring new datacenters online in Singapore.”

Analysts also reveal that demand growth in both Hong Kong and Singapore has shifted from the financial services, securities, and insurance verticals to the large-scale cloud and content providers.

451 Research finds that Singapore’s role as the gateway to Southeast Asia remains the key reason why cloud providers are choosing the area. “Cloud and content providers are choosing to service their regional audiences from Singapore because it is comparatively easy to do business there, in addition to having strong connectivity with countries throughout the region. This all bodes well for the country’s future as the digital hub for this part of APAC,” added Thompson.

451 Research finds that Hong Kong’s position as the gateway into and out of China remains a key reason why cloud providers are choosing the area, as well as the ease of doing business there. This is good news for the city as long as providers find creative solutions to their lack of available land.

451 Research has also compared the roles of the Singapore and Hong Kong datacenter markets in detail. The analysts concluded that multinationals need to deploy datacenters in both Singapore and Hong Kong, since each serves a very specific role in the region: Hong Kong is the digital gateway into and out of China, while Singapore is the digital gateway into and out of the rest of Southeast Asia.

Analysts find that these two markets compete for some deals, but surrounding markets are vying for a position as well. As an example, Singapore sees some competition from Malaysia and Indonesia, while Hong Kong could potentially see more competition from cities in mainland China, such as Guangzhou, Shenzhen and Shanghai. However, the surrounding markets are not without challenges for potential consumers, suggesting that Singapore and Hong Kong will remain the primary destinations for datacenter deployments in the region for the foreseeable future.

Growing adoption of cloud native architecture and multi-cloud services contributes to $2.5 million annual spend per organization on fixing digital performance problems.

Digital performance management company, Dynatrace, has published the findings of an independent global survey of 800 CIOs, which reveals that 76% of organizations think IT complexity could soon make it impossible to manage digital performance efficiently. The study further highlights that IT complexity is growing exponentially; a single web or mobile transaction now crosses an average of 35 different technology systems or components, compared to 22 just five years ago.

This growth has been driven by the rapid adoption of new technologies in recent years. However, the upward trend is set to accelerate, with 53% of CIOs planning to deploy even more technologies in the next 12 months. The research revealed the key technologies that CIOs will have adopted within the next 12 months include multi-cloud (95%), microservices (88%) and containers (86%).

As a result of this mounting complexity, IT teams now spend an average of 29% of their time dealing with digital performance problems, costing their employers $2.5 million annually. As they search for a solution to these challenges, four in five (81%) CIOs said they think Artificial Intelligence (AI) will be critical to IT's ability to master increasing IT complexity, with 83% either already deploying AI or planning to do so in the next 12 months.

“Today’s organizations are under huge pressure to keep up with the always-on, always connected digital economy and its demand for constant innovation,” said Matthias Scharer, VP of Business Operations, Dynatrace. “As a consequence, IT ecosystems are undergoing a constant transformation. The transition to virtualized infrastructure was followed by the migration to the cloud, which has since been supplanted by the trend towards multi-cloud. CIOs have now realized their legacy apps weren’t built for today’s digital ecosystems and are rebuilding them in a cloud-native architecture. These rapid changes have given rise to hyper-scale, hyper-dynamic and hyper-complex IT ecosystems, which make it extremely difficult to monitor performance and to find and fix problems fast.”

The research further identified the challenges that organizations find most difficult to overcome as they transition to multi-cloud ecosystems and cloud native architecture. Key findings include:

- 76% of CIOs say multi-cloud makes it especially difficult and time-consuming to monitor and understand the impact that cloud services have on the user experience
- 72% are frustrated that IT has to spend so much time setting up monitoring for different cloud environments when deploying new services
- 72% say monitoring the performance of microservices in real time is almost impossible
- 84% of CIOs say the dynamic nature of containers makes it difficult to understand their impact on application performance
- Maintaining and configuring performance monitoring (56%) and identifying service dependencies and interactions (54%) are the top challenges CIOs identify with managing microservices and containers

“For cloud to deliver on expected benefits, organizations must have end-to-end visibility across every single transaction,” continued Mr. Scharer. “However, this has become very difficult because organizations are building multi-cloud ecosystems on a variety of services from AWS, Azure, Cloud Foundry and SAP amongst others. Added to that, the shift to cloud native architectures fragments the application transaction path even further.

“Today, one environment can have billions of dependencies, so, while modern ecosystems are critical to fast innovation, the legacy approach to monitoring and managing performance falls short. You can’t rely on humans to synthesize and analyze data anymore, nor on a bag of independent tools. You need to be able to auto-detect and instrument these environments in real time, and most importantly use AI to pinpoint problems with precision and set your environment on a path of auto-remediation to ensure optimal performance and experience from an end user’s perspective.”

Further to the challenges of managing a hyper-complex IT ecosystem, the research also found that IT departments are struggling to keep pace with internal demands from the business. 74% of CIOs said that IT is under too much pressure to keep up with unrealistic demands from the business and end users. 78% also highlighted that it is getting harder to find time and resources to answer the range of questions the business asks and still deliver everything else that is expected of IT. In particular, 80% of CIOs said it is difficult to map the technical metrics of digital performance to the impact they have on the business.

The new Data Centre Trade Association members portal is now live via the following link www.dca-global.org (as people get used to the new domain name, for the time being the original domain name www.datacentrealliance.org will still also get you to the same place).

Amanda and I can’t relax quite yet, as we are still busy populating the new members portal and website. You will see that the new website includes a complete rebranding: if you see a blue rather than a green logo, don’t panic, you have landed in the right place. Updated media packs will be going out to members over the next few weeks, so you have the very latest collateral, together with secure login details enabling you to amend your business and personal profiles, add new users and upload additional content such as news, PDFs, white papers, spec sheets, case studies and video.

(If you wish to have video embedded into your member profile page then simply send the code to the DCA and we can insert it for you).

2018 is again jam-packed with data centre related conferences and events. To help you plan ahead, The DCA has created a printed events calendar listing all the events that the DCA trade association is hosting, sponsoring or promoting throughout the year; there are 35 in total. As global event partners for Data Centre World (DCW), we have just returned from DCW at ExCeL London, which continues to go from strength to strength. We are looking forward to greeting members at Data Centres North on 1–2 May and will have a presence at DCW Hong Kong on 16–17 May, while the DCS Awards take place on 24 May in central London.

Details of these and all the other events for 2018 can be found in the online DCA events calendar. If you would like a free copy of the printed events calendar posted to you, just email events@dca-global.org.

The DCA Journal theme this month focuses on updates from some of our many Collaborative Partnerships. These partnerships play a vital role in keeping end users and members both informed and connected. The Data Centre Trade Association breaks these partnerships down into three main areas: Strategic, Academic and Media Partners.

The DCA has a growing number of Strategic Partnerships with organisations both directly and indirectly connected to the data centre sector: EMA, BCS, CIF, DCD, ECA, UTI, GITA and TechUK, to name a few. Maintaining a trusted and open relationship with fellow trade bodies enables mutual support, combined resources and knowledge, and a unified voice on common issues.

Strategic Alliances can come in many forms, from a simple MOU enabling the exchange of information to more collaborative joint initiatives such as events, workshops and/or research projects at local, EU or international levels.

Academic Partnerships with Universities and Technical Colleges equally play an essential role. The DCA continues to act as a valuable link between the academic and commercial world not just on research and development projects but also on promoting the data centre sector as a career destination for students.

With the continued support of members and Media Partnerships, The DCA publishes over 150 articles every year on a wide range of data centre related topics, all designed to help keep business owners up to speed on the latest innovations, market trends, products and services they need to stay one step ahead of the game. The media partnerships we have in place allow The DCA to disseminate thought leadership content to a combined readership of more than 120,000 global subscribers on a continual basis. Many of our media partners are also event organisers in their own right, and we are proud to be in a position to support them in planning many of these events, with everything from promotion to content and the sourcing of speakers. Speakers are often drawn from the wealth of experienced experts and professionals who make up the data centre trade association.

I would like to thank all the members who have contributed thought leadership articles this month. Dr Jon Summers has written a thought-provoking piece reminding us all that the purpose of a data centre is to support IT; fellow trade body the Cloud Industry Forum (CIF) provides its security predictions; John Booth from Carbon3IT discusses whether there is a place for fuel cells and hydrogen in data centres; and Dr Frank Verhagen of Certios and DCA NL offers some much-needed and timely advice on the thorny subject of GDPR.

Next month’s journal theme (May edition) is security, both physical and cyber (copy deadline is 19 April). The theme for the June edition is energy efficiency. If you would like to contribute to either of these topics, please contact amandam@dca-global.org.

By Dr Jon Summers Scientific Leader in Data Centres, Research Institutes of Sweden, SICS North

In the world of data centres, the term facility is commonly used to indicate the shell that provides the space, power, cooling, physical security and protection to house Information Technology. The data centre sector is made up of several different industries that purposely have a point of intersection that could loosely be defined as the data centre industry. One very important argument is that a data centre exists to house IT, but the facility and IT domains rarely interact, unless the heat removal infrastructure invades the IT space. This refers to the so-called “Liquid Cooling” of IT; normally, the facility-IT divide is cushioned by air.

At RISE SICS North we are on a crusade to approach data centres as integrated systems, and our experiments are geared to include the full infrastructure, where the facility has IT in it. This holistic approach enables the researchers to measure and monitor the full digital stack from the ground to the cloud and from the chip to the chiller, so we have built a management system that makes use of several open-source tools and generates more than 9GB of data from more than 30,000 measuring points within our ICE operating data centre, depicted below. Some of these measuring points are provided by wired temperature sensor strips, designed and deployed in house, that have magnetic rails allowing them to be easily mounted to the front and back of racks. Recently, we have come up with a way to take control of the fans in Open Compute servers in preparation for a new data centre build project, where we will try to match the air requirements of the servers with what can be provided by the direct air handling units.

Data centre module, ICE (Infrastructure and Cloud research and test Environment – ice.sics.se).

Before joining the research group in Sweden, I was a full-time academic in the School of Mechanical Engineering at the University of Leeds. At Leeds, our research focused on thermal and energy management of microelectronic systems, and the experiments made use of real IT, where we were able to integrate the energy required to provide the digital services alongside the energy needed to maintain systems within their thermal envelope. The research involved both air and liquid cooling, and for the latter we were able to work with rear door heat exchangers, on-chip and immersion systems. In determining the Power Usage Effectiveness (PUE) of air versus liquid systems, it is always difficult to show that liquids are more “Effective” than air in removing the heat. However, a metric that assesses the overhead of heat removal should include all the components whose function is to remove heat. So, for centrally pumped coolants in the case of liquid cooling, the overhead of the pump power is correctly assigned to the numerator of the PUE, but this is not the case for fans inside the IT equipment, whose power is counted in the IT load in the denominator.

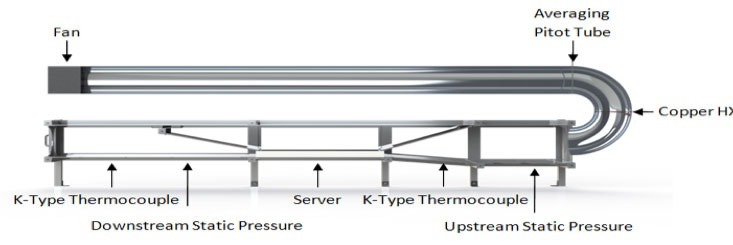

So what percentage of the critical load do the fans consume? Here we can do some simple back-of-the-envelope calculations, but first we need to understand how air movers work. The facility fans are usually large and their electrical power, Pe, can be measured using a power meter. This electrical power is converted into a volumetric flowrate, VF, that overcomes the pressure drop, ∆P, caused by ducts, obstacles, filters, etc. between the facility fan and the entrance to the IT. If you look at a variety of different literature on this subject, such as fan curves and affinity laws, then you may arrive at 1kW of electrical power per cubic metre per second of flow rate. With a fan efficiency, η, of 50%, the flowrate and pressure follow the simple relationship ηPe = ∆P VF. Thus, 1kW of power consumption will overcome 500 Pascals of pressure drop at a flow rate of 1 cubic metre per second. The IT fans then take over this volumetric flowrate of air to overcome the pressure drop across the IT equipment and exhaust the hot air at the rear of the IT equipment. Again, there is literature on the pressure drop across a server, and we determined this at Leeds using the Generic Server Wind Tunnel pictured below. For a 1U server, for example, the pressure drop is around 350 Pascals, but this does depend on the components inside the server.
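The fan relationship ηPe = ∆P VF can be solved either for the pressure drop a fan can overcome, ∆P = ηPe / VF, or for the electrical power it needs, Pe = ∆P VF / η. A minimal Python sketch of both forms, using the facility-fan figures above (1kW, 50% efficiency, 1 cubic metre per second); the function names are illustrative only.

```python
def pressure_drop_pa(power_w: float, efficiency: float, flow_m3_s: float) -> float:
    """Pressure drop (Pa) a fan can overcome: eta * Pe = dP * V_F, solved for dP."""
    return efficiency * power_w / flow_m3_s


def electrical_power_w(dp_pa: float, efficiency: float, flow_m3_s: float) -> float:
    """Electrical power (W) a fan draws to move V_F against dP: Pe = dP * V_F / eta."""
    return dp_pa * flow_m3_s / efficiency


# Facility fan: 1 kW electrical, 50% efficient, moving 1 m^3/s of air.
facility_dp = pressure_drop_pa(1000.0, 0.5, 1.0)
print(f"Facility fan overcomes {facility_dp:.0f} Pa")

# Round trip: the same pressure drop and flow need the original 1 kW.
print(f"Power check: {electrical_power_w(facility_dp, 0.5, 1.0):.0f} W")
```

Keeping VF in cubic metres per second matters: the same 1kW at a flow of 1 cubic metre per minute would imply a pressure drop sixty times larger.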

Schematic of the generic server wind tunnel at the University of Leeds (source: Daniel Burdett)

Fans that sit inside a 1U server are typically at best 25% efficient, and it is well known that smaller fans are less efficient than larger ones. We can now use the simple equation ηPe = ∆P VF again to determine the electrical power that these small, less efficient fans require to overcome the server pressure drop at 1 cubic metre per second, assuming no air has wandered off somewhere else in the data centre. This yields an accumulated fan power of 1.4kW (350Pa × 1m³/s ÷ 0.25). But just how much thermal power can these fans remove? For this answer, we need to employ the steady-state thermodynamic relationship PT = ρ cp VF ∆T, making use of the density of air, ρ (= 1.22kg/m³), its specific heat capacity at constant pressure, cp (= 1006J/kg/K), and the temperature increase (delta-T), ∆T, across the servers. Now we must make a guess at the delta-T. Trying a range of 5, 10 and 15°C with the same flow rate of 1 cubic metre per second, the thermal power injected into the airstream in passing through racks of servers is 6137W, 12273W and 18410W for the three respective delta-T values. It is then easy to see that, under completely ideal conditions with no losses in the airflow, the small server fans consume 18.6%, 10.2% and 7.1% respectively of the total server power (fan power plus the heat picked up by the air).
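The same back-of-the-envelope numbers can be reproduced in a few lines. This Python sketch assumes exactly the values used above (ρ = 1.22kg/m³, cp = 1006J/kg/K, 1 cubic metre per second, 350Pa across a 1U server, 25% fan efficiency) and, like the text, expresses fan power as a share of fan power plus the heat carried away by the air. The constant and function names are illustrative.

```python
RHO_AIR = 1.22   # density of air, kg/m^3
CP_AIR = 1006.0  # specific heat capacity at constant pressure, J/(kg K)
FLOW = 1.0       # volumetric flow rate V_F, m^3/s

# Server fan power from Pe = dP * V_F / eta: 350 Pa across a 1U server, 25% efficient fans.
SERVER_FAN_W = 350.0 * FLOW / 0.25


def thermal_power_w(delta_t: float, flow: float = FLOW) -> float:
    """Heat picked up by the airstream: P_T = rho * cp * V_F * dT."""
    return RHO_AIR * CP_AIR * flow * delta_t


def fan_share(delta_t: float) -> float:
    """Server fan power as a fraction of (P_T + fan power)."""
    heat = thermal_power_w(delta_t)
    return SERVER_FAN_W / (heat + SERVER_FAN_W)


for dt in (5, 10, 15):
    print(f"dT = {dt:2d} K: P_T = {thermal_power_w(dt):7.0f} W, "
          f"server fans = {fan_share(dt):.1%}")
```

Because the fan power is fixed by flow and pressure drop while PT scales linearly with delta-T, doubling or tripling the rack delta-T shrinks the fan share accordingly.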

Given that these simple equations are based on a lot of assumptions that would yield conservative approximations, it is not unreasonable to say that IT fan power can consume more than 7% of the power of a typical 1U server. It is now very tempting to add all of these figures together to show how partial PUE is affected by the rack delta-T. Gains in reducing the end-use energy demand of data centres are clearly best addressed by analysing the full integrated system.

By John Booth, Chair, DCA Energy Efficiency and Sustainability Steering Group

From a recent techUK update (thanks Emma), it transpires that there are 8 individual compliance requirements for data centres on energy ranging from CRC to MEES (contact us directly if you need any help with them!).

Three of them, the EU Emissions Trading System (EU ETS), the Industrial Emissions Directive (IED)/Environmental Permitting Regulations (EPR) and the Medium Combustion Plant Directive (MCPD), relate to generators and specifically to SOx, NOx and particulate matter (PM). It is therefore clear that the sector is facing an increasing compliance burden and, in all probability, higher costs, either through direct taxation or via CCA, carbon offsets, buy-ins, trade-offs or permits etc.

So, faced with additional costs, the as yet unseen implications of Brexit for the UK data centre sector, the threat from countries with mature renewable/carbon-neutral grids (France, the Nordics) and guidance from the EU Code of Conduct for Data Centres (Energy Efficiency), which includes a number of best practices relating to energy generally and backup power specifically, I thought I would take a look at fuel cells and hydrogen generation options for retrofit and new-build data centres. There are some examples of fuel cells in use in the industry, as well as recent press articles on EU projects dealing with this subject, so it is worthy of a review.

The Fuel Cell and Hydrogen show was recently held at the NEC Birmingham and, as it is local to me, I managed to wrangle an invite. My intention was to ascertain whether fuel cells, and potentially hydrogen, are viable options, so I came up with three questions to ask the delegates:

1) Can Fuel Cells provide the necessary power requirements?

2) What is the footprint of the infrastructure?

3) How do the costs/TCO compare with conventional UPS/Generator options?

First, a quick look at Hydrogen and Fuel Cells.

Hydrogen is the lightest element in the periodic table and the most abundant element in the Universe. As I am not a chemist, I refer readers to the Wikipedia entry on hydrogen, which can be found here: https://en.wikipedia.org/wiki/Hydrogen

Fuel cells are electrochemical cells that convert the chemical energy of a fuel into electricity through an electrochemical reaction of hydrogen fuel with oxygen or another oxidising agent. More information on fuel cells can be found here: https://en.wikipedia.org/wiki/Fuel_cell

The energy efficiency of a fuel cell is generally between 40-60%, however, if waste heat is captured in a cogeneration scheme, efficiencies up to 85% can be obtained.
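To put these efficiency figures into numbers, the Python sketch below works out the fuel (chemical) power needed for a given electrical output, and how much heat a cogeneration scheme could recover. The 300kW load and the 50% electrical efficiency are illustrative assumptions picked from within the ranges quoted above; they are not measurements of any product.

```python
def fuel_input_kw(electrical_kw: float, electrical_eff: float) -> float:
    """Fuel power needed to deliver electrical_kw at the given electrical efficiency."""
    return electrical_kw / electrical_eff


def recoverable_heat_kw(electrical_kw: float, electrical_eff: float, total_eff: float) -> float:
    """Useful heat from cogeneration: total useful output minus the electrical share."""
    fuel = fuel_input_kw(electrical_kw, electrical_eff)
    return fuel * total_eff - electrical_kw


if __name__ == "__main__":
    load_kw = 300.0  # illustrative electrical load (assumption)
    for eff in (0.40, 0.50, 0.60):  # the 40-60% range quoted in the text
        print(f"electrical efficiency {eff:.0%}: fuel input {fuel_input_kw(load_kw, eff):.0f} kW")
    # Cogeneration at the 85% overall efficiency quoted in the text
    print(f"recoverable heat (50% electrical, 85% overall): "
          f"{recoverable_heat_kw(load_kw, 0.50, 0.85):.0f} kW")
```

At 50% electrical efficiency, 300kW of electricity needs 600kW of fuel input; at 85% overall efficiency, 510kW of that is useful output, of which about 210kW is heat that could be put to work.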

It would be safe to say that fuel cells are still very much an emerging technology, although the principle has been around for some time; indeed, my research indicates that hydrogen fuel cells (hydrogen being one of the available fuels) were first mentioned in 1838.

Enough of the theory, let’s move onto practical matters and answer the three questions posed above.

1) Can Fuel Cells provide the necessary power requirements?

Yes, they can. Indeed, one systems solution provider offers modular fuel cell systems that can deliver anything from hundreds of kilowatts to many tens of megawatts. The key point here is that a fuel cell powered data centre is likely to require a big footprint, to provide the floorspace needed for the fuel cell equipment and charging apparatus, plus either a connection to a gas main (some cells can operate on natural gas) or a hydrogen producer in the near vicinity.

Mindsets will also have to change. Firstly, fuel cell arrays need to be considered as a primary energy source and not a backup source; they are a replacement for the grid rather than a conventional backup solution such as UPS and generators. Whether the risk-averse data centre sector can accept fuel cells rather depends on cost and reliability.

On reliability, fuel cell arrays can achieve 99.998% availability and can be configured to be extremely robust in the event of failure: if one cell fails, there are many more to carry the load, and at least one manufacturer offers hot-swappable field replacement units. I am therefore sure that a fuel cell array can provide service availability equivalent to any grid-based system, certainly in the UK with its strong and (relatively) stable grid, and it may well score highly in areas that do not have a stable grid (bearing in mind the fuel!).
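To put an availability figure such as 99.998% into more familiar terms, it can be converted into expected downtime per year. The Python sketch below assumes an 8,760-hour (non-leap) year and treats availability as a simple long-run average; it says nothing about how often outages occur or how long each one lasts.

```python
HOURS_PER_YEAR = 8760  # non-leap year (assumption)


def annual_downtime_minutes(availability: float) -> float:
    """Expected minutes of downtime per year for a long-run availability fraction."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    return (1.0 - availability) * HOURS_PER_YEAR * 60


if __name__ == "__main__":
    for a in (0.999, 0.9999, 0.99998):
        print(f"{a:.3%} availability -> {annual_downtime_minutes(a):.1f} minutes/year")
```

On that basis, 99.998% works out at roughly 10.5 minutes of downtime a year.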

As the fuel cells will be under your direct control, you might be minded to remove your conventional backup power systems, saving costly capex and service costs and reducing any exposure to carbon or emissions taxation regimes.

You could always over-provision (for expected growth) and sell surplus energy from your cells back to the grid.

2) What is the footprint of the infrastructure?

This will clearly vary according to your design, but a 300kW system weighs around 15 tons and, depending on the layout, measures about 6m x 3m x 2.5m high. You might want to factor in some additional footprint for gas connection equipment, access requirements, storage space and hot spares, and to surround it all with fences etc.

The key thing is that all fuel cell systems are modular, which meets the EUCOC requirement for modular systems and makes it easy to add modules as the need arises.
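As a rough illustration of how modularity and footprint interact, the Python sketch below treats the 300kW system described above (around 15 tons, 6m x 3m in plan) as a single building block and works out how many blocks, what total weight and what plan area a given load would need. The one-spare (N+1) allowance, the example loads and the decision to ignore clearances, gas connection equipment, storage and fencing are all assumptions for illustration, not design guidance.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class FuelCellModule:
    power_kw: float
    weight_tons: float
    length_m: float
    width_m: float


# Figures for the 300kW system quoted in the text, treated as one building block
MODULE_300KW = FuelCellModule(power_kw=300.0, weight_tons=15.0, length_m=6.0, width_m=3.0)


def size_array(load_kw: float, module: FuelCellModule, spare_modules: int = 1) -> dict:
    """Blocks needed for the load plus spares, with total weight and plan area.

    Plan area covers the blocks only: no clearances, gas equipment, storage or fencing.
    """
    n = math.ceil(load_kw / module.power_kw) + spare_modules
    return {
        "modules": n,
        "weight_tons": n * module.weight_tons,
        "plan_area_m2": n * module.length_m * module.width_m,
    }


if __name__ == "__main__":
    for load in (600.0, 1000.0, 2000.0):  # hypothetical loads in kW
        print(f"{load:.0f} kW ->", size_array(load, MODULE_300KW))
```

For a 1,000kW load, for example, this gives five blocks (four plus one spare), about 75 tons and 90 m² of plan area before any of the additional space mentioned above.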

The nature of a fuel cell solution is that it’s a partnership with the supplier, so remote monitoring and system performance tweaking is included as standard.

3) How do the costs/TCO compare with conventional UPS/Generator options?

Simply, no: they do not compare favourably with conventional solutions, as the cost is around double what you would expect to pay for an equivalent UPS/generator solution. However, as with solar panels, the price will fall as more people specify and procure them for data centre solutions. Add to the mix the reduced carbon, energy and emissions taxation, the elimination of generator tests and possible noise issues, and I personally believe that it's a worthwhile solution to consider, especially for new builds in water-stressed areas (fuel cells can produce water!), areas without grid capacity or with unstable connections, and the edge!

So, in summary, fuel cell technologies are ready and waiting for the data centre community to come and have a look; indeed, some organisations have already done so. The solution is modular and can be expanded at will (provided you have the space), and there are significant benefits for the circular economy and the concept of data centre campuses.

A final note: during my day at the show I made contact with various people working in the industry, and it is highly likely that some of them will be speaking about this very subject at some of the summer data centre events. If you're not able to attend, or need any further information or some contacts in the fuel cell industry, please contact me on my usual email.


By Frank Verhagen, DPO Certified Data Protection Officer, CDCEP® (Certified Data Centre Energy Professional)

A Cloud provider is a Data Processor according to the definition of the General Data Protection Regulation (GDPR).

We have been looking into some interesting questions on behalf of Cloud providers.

Apart from the last sentence, which I would like to challenge (and which is one of the reasons the GDPR came into being in the first place), the questions are very recognisable and will therefore be addressed in this article. Cloud providers now realise that, under the GDPR, they are data processors; they have no idea of, and no details about, the customer's data. This is very typical nowadays.

The first question concerns the need to appoint a Data Protection Officer (DPO). A DPO may be internal or external, full-time or part-time. The role is to have someone (we might think of the DPO as a Data Protection 'Office') who can help solve practical questions concerning compliance with the GDPR when processing data or running projects that involve personal data, all to make sure the organisation remains GDPR compliant. A DPO will also liaise with the Information Commissioner's Office (ICO) when necessary. Public organisations must have a DPO, and private organisations that process personal data on a large scale definitely need one too (and they need to register the DPO with the supervisory authority).

So, the answer to the question is almost always ‘yes’ – we need a DPO.

Cloud providers and data centres have at least two parallel operations in which they should consider the implications of the GDPR. First, their internal organisation and information flows are subject to the GDPR (personnel and payroll records, contracts, CVs, appraisals and recruitment records). For this kind of data, every organisation is the Data Controller (it determines the purposes and means of the processing). This first item, however important, is outside the scope of this article, as it applies to almost every organisation. Second, there is the data that the Cloud provider stores and processes for its customers. Under the GDPR, the Cloud provider should have a data processing agreement with each of its customers (the data controllers). These agreements will contain policies stipulating what the data controller (customer) requires from the data processor (Cloud provider) to help meet the requirements of the GDPR.

Can a Cloud provider bear any responsibility for the data when processing its tenants' data? To what extent does a data processor assume responsibility?

Let’s see what a Cloud provider needs to do (this is not a complete list!).

First of all, carry out a data protection impact assessment (DPIA), in which the risks are evaluated, their severity assessed and the results documented[2]:

· Describe the nature, scope, context and purposes of the processing.

· Assess necessity, proportionality and compliance measures.

· Identify and assess risks to individuals.

· Identify any additional measures to mitigate those risks.
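Since the DPIA has to be documented, it can help to keep it as a structured record rather than free text. The Python sketch below is one hypothetical way of capturing the four steps listed above and flagging high-scoring risks that have no mitigation recorded yet; the class names, the 1 to 3 likelihood and severity scales and the threshold of 6 are illustrative assumptions, not requirements of the GDPR or the ICO.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Risk:
    description: str
    likelihood: int  # 1 (remote) to 3 (probable): illustrative scale only
    severity: int    # 1 (minimal) to 3 (severe): illustrative scale only
    mitigations: List[str] = field(default_factory=list)

    @property
    def score(self) -> int:
        return self.likelihood * self.severity


@dataclass
class DPIA:
    nature_scope_context_purposes: str   # step 1: describe the processing
    necessity_and_proportionality: str   # step 2: necessity, proportionality, compliance
    risks: List[Risk] = field(default_factory=list)  # steps 3 and 4: risks and measures

    def open_high_risks(self, threshold: int = 6) -> List[Risk]:
        """High-scoring risks that have no documented mitigation yet."""
        return [r for r in self.risks if r.score >= threshold and not r.mitigations]


if __name__ == "__main__":
    dpia = DPIA(
        nature_scope_context_purposes="Hosting and backing up customer VMs (hypothetical example)",
        necessity_and_proportionality="Only data needed to deliver the contracted service",
        risks=[
            Risk("Backups stored unencrypted", likelihood=2, severity=3),
            Risk("Broad staff access to storage", 2, 2, ["Role-based access control"]),
            Risk("Loss of a backup tape", 1, 3, ["Encryption at rest"]),
        ],
    )
    for risk in dpia.open_high_risks():
        print("Needs mitigation:", risk.description)
```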

It is important to document any processes you have to protect the data while it is within your environment. For example: is it encrypted, both in transit and at rest? Is access (physical and electronic) restricted?

Second, in order to be GDPR compliant, the processor is expected, among other things, to have taken ‘appropriate measures’ and to avoid the risk of data breaches by implementing:

Recital 78: (…) with due regard to the state of the art, to make sure that controllers and processors are able to fulfil their data protection obligations. (…)

These ‘measures’ will be issued, clarified and further detailed over time by the European Data Protection Board. Based on the DPIA and the risks that have been identified, ‘state of the art’ would mean implementing modern physical and digital security, putting the right secure processes in place and having trained, GDPR-aware staff. Document all of this!

The processor agreement should set out the level of responsibility the Cloud provider has, versus that of its customers. In that relationship the Cloud provider is the processor and the controller (the customer) is responsible for its data; the responsibilities of the processor are different (Chapter IV, Art. 28[3]). A policy that customers agree to should suffice[4].

If you haven’t done this, start doing it now.

This is the core of what you can still do (assuming your organisation hasn’t done it yet); if you don’t know how, give me a call.

I encountered an interesting discussion recently.

In the light of the GDPR, can a Cloud provider have responsibilities for data processing when the data is not touched or even accessible?

It is and will be a continuous debate.

If it can be made absolutely clear that your organisation and staff cannot copy, alter or see the data, even if they wanted to, for example because all data is encrypted and the Cloud provider does not hold any keys to decrypt it, can the Cloud provider still be seen as a data processor?

The answer: in that specific, theoretical case the GDPR doesn’t apply[5].

Frank Verhagen

DPO Certified Data Protection Officer

CDCEP® (Certified Data Centre Energy Professional)

Certios B.V.

M +31 6 319 937 33

Twitter: @Certios_Consult

LinkedIn: https://nl.linkedin.com/in/frankverhagen65

[1] https://ico.org.uk/media/1624219/preparing-for-the-gdpr-12-steps.pdf

[2] https://ico.org.uk/for-organisations/guide-to-the-general-data-protection-regulation-gdpr/accountability-and-governance/data-protection-impact-assessments/

[3] Regulation (EU) 2016/679 of the European Parliament and of the Council (General Data Protection Regulation)

[4] https://ico.org.uk/media/for-organisations/documents/1540/cloud_computing_guidance_for_organisations.pdf

[5] http://ec.europa.eu/justice/data-protection/article-29/documentation/opinion-recommendation/files/2014/wp216_en.pdf

By Bharat Mistry, Principal Security Strategist, Trend Micro

In 2018, digital extortion will be at the core of most cybercriminals’ business models and will propel them into other schemes that will get them potentially hefty payouts. Vulnerabilities in IoT devices will expand the attack surface as devices get further woven into the fabric of smart environments everywhere. Business Email Compromise scams will ensnare more organizations into forking over their money. The age of fake news and cyberpropaganda will persist with old-style cybercriminal techniques. Machine learning and blockchain applications will pose both promises and pitfalls. Companies will face the challenge of keeping up with the directives of the General Data Protection Regulation (GDPR) in time for its enforcement. Not only will enterprises be riddled with vulnerabilities, but loopholes in internal processes will also be abused for production sabotage.

As environments become increasingly interconnected and complex, threats are redefining how we should look at security. Having protection where and when it’s needed will become the backbone of security in this ever-shifting threat landscape.

For 2017, we predicted that cybercriminals would diversify ransomware into other attack methods. True enough, the year unfolded with incidents such as WannaCry and Petya’s rapidly propagated network attacks, Locky and FakeGlobe’s widespread spam run, and Bad Rabbit’s watering hole attacks against Eastern European countries. We do not expect ransomware to go away anytime soon. On the contrary, it can only be expected to make further rounds in 2018, even as other types of digital extortion become more prevalent. Cybercriminals have been resorting to using compelling data as a weapon for coercing victims into paying up. With ransomware-as-a-service (RaaS) still being offered in underground forums, along with bitcoin as a secure method to collect ransom, cybercriminals are being all the more drawn to the business model. Attackers will continue to rely on phishing campaigns in which emails with a ransomware payload are delivered en masse to ensure that at least a percentage of users are affected. They will also go for the bigger buck by targeting a single organization, possibly in an Industrial Internet of Things (IIoT) environment, for a ransomware attack that will disrupt operations and affect the production line. We already saw this in the fallout from the massive WannaCry and Petya outbreaks, and it won’t be long until it becomes the intended impact of the threat.

Users and enterprises can stay resilient against these digital extortion attempts by employing effective web and email gateway solutions as a first line of defense. Solutions with high-fidelity machine learning, behavior monitoring, and vulnerability shielding prevent threats from getting through to the target. These capabilities are especially beneficial in the case of ransomware variants that are seen moving toward fileless delivery, in which there are no malicious payloads or binaries for traditional solutions to detect.

The massive Mirai and Persirai distributed denial-of-service (DDoS) attacks that hijacked IoT devices, such as digital video recorders (DVRs), IP cameras, and routers, have already elevated the conversation of how vulnerable and disruptive these connected devices can be. Recently, the IoT botnet Reaper, which is based on the Mirai code, has been found to catch on as a means to compromise a web of devices, even those from different device makers. We predict that aside from performing DDoS attacks, cybercriminals will turn to IoT devices for creating proxies to obfuscate their location and web traffic, considering that law enforcement usually refers to IP addresses and logs for criminal investigation and post-infection forensics.

A large network of anonymized devices (running on default credentials, no less, and keeping virtually no logs) could serve as jumping-off points from which cybercriminals surreptitiously carry out their activities within the compromised network. We should also anticipate more IoT vulnerabilities in the market, as many, if not most, manufacturers are going to market with devices that are not secure by design. This risk will be compounded by the fact that patching IoT devices may not be as simple as patching PCs. A single insecure device that has not been issued a fix, or has not been updated to the latest version, can become an entry point to the central network. The KRACK attack proved that even the wireless connection itself could add to the security woes: the vulnerability affects most, if not all, devices that use WPA2, which in turn raises questions about the security of 5G technology, which is slated to sweep connected environments.

With hundreds of thousands of drones entering the U.S. airspace alone, the prospect of overseeing the aerial vehicles can be daunting. We expect that reports of drone-related accidents or collisions are only the start of it, as hackers have already been found to access computers, grab sensitive information, and hijack deliveries. Likewise, pervasive home devices such as wireless speakers and voice assistants can enable hackers to determine house locations and attempt break-ins. We also expect cases of biohacking, via wearables and medical devices, to materialize in 2018. Biometric activity trackers such as heart rate monitors and fitness bands can be intercepted to gather information about the users. Even life-sustaining pacemakers have been found with vulnerabilities that can be exploited for potentially fatal attacks. What adopters and regulators should recognize now is that not all IoT devices have built-in security, let alone hardened security. The devices are open to compromise unless manufacturers perform regular risk assessments and security audits. Users are also responsible for setting up their devices for security, which can be as simple as changing default passwords and regularly installing firmware updates.

One of the key promises of machine learning is that computers can be trained rather than deliberately programmed. For a relatively nascent technology, machine learning shows great potential. Already, however, it has become apparent that machine learning may not be the be-all and end-all of data analysis and insight identification. Machine learning lets computers learn by being fed loads of data, which means that it can only be as good and accurate as the context it gets from its sources. Going into the future, machine learning will be a key component of security solutions. While it uncovers a lot of potential for more accurate and targeted decision-making, it poses an important question: can machine learning be outwitted by malware? We’ve found that the CERBER ransomware uses a loader that certain machine learning solutions aren’t able to detect because of how the malware is packaged to not look malicious. This is especially problematic for software that employs pre-execution machine learning (which analyzes files without any execution or emulation), as in the case of the UIWIX ransomware (a WannaCry copycat), where there was no file for pre-execution machine learning to detect and block. Machine learning may be a powerful tool, but it is not foolproof. While researchers are already looking into the possibilities of machine learning for monitoring traffic and identifying possible zero-day exploits, it is not far-fetched to conjecture that cybercriminals will use the same capability to find zero-days first. It is also possible to deceive machine learning engines, as shown by slightly manipulated road signs that autonomous cars recognized as something else. Researchers have already demonstrated how machine learning models have blind spots that adversaries can probe for exploitation.
While machine learning definitely helps improve protection, we believe that it should not completely take over security mechanisms. It should be considered an additional security layer incorporated into an in-depth defense strategy, and not a silver bullet. A multilayered defense with end-to-end protection, from the gateway to the endpoint, will be able to fight both known and unknown security threats.
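To illustrate the kind of blind spot described above, the toy Python sketch below uses a hypothetical linear "detector" with made-up weights and nudges each feature of a flagged sample slightly against the sign of its weight (the fast-gradient-sign idea applied to a linear model). It is not how CERBER, UIWIX or any particular security product works; it only shows that small, targeted changes can flip a model's verdict.

```python
def detector_score(weights, bias, features):
    """Hypothetical linear detector: a score above zero means 'flag as malicious'."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def _sign(v):
    return (v > 0) - (v < 0)


def evade(weights, features, epsilon):
    """Move each feature by epsilon against its weight's sign.

    For a linear score the gradient with respect to the features is just the
    weight vector, so this is the fast-gradient-sign step that lowers the score.
    """
    return [x - epsilon * _sign(w) for w, x in zip(weights, features)]


if __name__ == "__main__":
    weights = [0.8, -0.5, 1.2]  # made-up 'trained' weights
    bias = -1.0
    sample = [0.9, 0.2, 0.4]    # made-up features of a file the detector flags
    tweaked = evade(weights, sample, epsilon=0.2)
    print("original score:", round(detector_score(weights, bias, sample), 3))
    print("tweaked score: ", round(detector_score(weights, bias, tweaked), 3))
```

Each feature moves by only 0.2, yet the score drops by 0.2 × (0.8 + 0.5 + 1.2) = 0.5, taking it from 0.1 (flagged) to -0.4 (passed as clean), which is one reason to treat machine learning as one layer of defence rather than a silver bullet.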

To combat today’s expansive threats and be fortified against those yet to come, organizations should employ security solutions that allow visibility across all networks and that can provide real-time detection and protection against vulnerabilities and attacks. Any potential intrusions and compromise of assets will be avoided with a dynamic security strategy that employs cross-generational techniques appropriate for varying threats.

The DCA would like to thank our Strategic Partner CIF for this article, drawn from the Trend Micro report “Paradigm Shifts – Trend Micro Security Predictions for 2018”. The full report can be downloaded from: https://www.cloudindustryforum.org/content/paradigm-shifts-trend-micro-security-predictions-2018

By Chris Gray, Chief Delivery Officer, Amido

One of the latest buzzwords taking cloud computing by storm is Functions as a Service (FaaS), or serverless computing.

Serverless is a hot topic in the world of software architecture, and it has been gaining attention outside the developer community since AWS pioneered the serverless space with the release of AWS Lambda in 2014. As one of the fastest-growing cloud service delivery models, FaaS has fundamentally changed not only how technology is purchased but also how it is delivered and operated.

The significance of FaaS for businesses could be huge. Businesses will no longer have to pay for servers sitting idle, but only for the computing power an application actually consumes, billed per millisecond, much like the per-second billing approach that containers are moving towards. Instead of hosting an application on a server, the business can run it directly in the cloud, choosing when to use it and paying for it per task, which makes it event driven. According to Gartner, by 2020, event-sourced, real-time situational awareness will be a required characteristic for 80% of digital business solutions, and 80% of new business ecosystems will require support for event processing.
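The billing argument can be made concrete with a simple break-even calculation between an always-on server and pay-per-execution FaaS. Every price in the Python sketch below is invented for illustration, the 730-hour month is an approximation, and real FaaS pricing typically also depends on allocated memory and free tiers, which are ignored here.

```python
HOURS_PER_MONTH = 730.0  # roughly 8,760 hours / 12 (approximation)


def always_on_monthly_cost(hourly_rate: float, hours: float = HOURS_PER_MONTH) -> float:
    """A server billed for every hour of the month, busy or idle."""
    return hourly_rate * hours


def faas_monthly_cost(invocations: int, avg_duration_ms: float,
                      price_per_ms: float, price_per_invocation: float) -> float:
    """Pay only while the function runs, plus a per-request fee."""
    return invocations * (avg_duration_ms * price_per_ms + price_per_invocation)


def break_even_invocations(hourly_rate: float, avg_duration_ms: float,
                           price_per_ms: float, price_per_invocation: float) -> float:
    """Monthly invocations at which both models cost the same."""
    per_call = avg_duration_ms * price_per_ms + price_per_invocation
    return always_on_monthly_cost(hourly_rate) / per_call


if __name__ == "__main__":
    # All prices below are invented for illustration only.
    rate, ms, p_ms, p_call = 0.10, 100.0, 0.000001, 0.0000002
    for calls in (100_000, 1_000_000, 5_000_000):
        print(f"{calls:>9,} calls: FaaS {faas_monthly_cost(calls, ms, p_ms, p_call):8.2f}"
              f" vs always-on {always_on_monthly_cost(rate):.2f}")
    print(f"break-even: {break_even_invocations(rate, ms, p_ms, p_call):,.0f} invocations/month")
```

With these made-up prices, FaaS is cheaper below roughly 729,000 invocations a month and the always-on server is cheaper above that, which illustrates why FaaS will not suit every workload.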

FaaS is a commoditised function of cloud computing and one that takes away wasted compute associated with idle server storage and infrastructure. “Not every business is going to be right for FaaS or serverless, but there is a real appetite in the industry to reduce the cost of adopting the cloud – so this is a great way to help drive these costs down,” adds Richard Slater, Principal Consultant at Amido. “The thing is, if you’re considering this as an option, you are signing up to the ultimate in vendor lock-in, as it’s not easy to move these services from one cloud to another (though there are promising frameworks like Serverless JS which claim to resolve this); each cloud provider approaches FaaS in a different way and at present you can’t take a function and move it between vendors. As the appetite for serverless technologies grows, the nature of DevOps will subsequently change; it will still be relevant, although how we go about doing it will be very different. We could say that we are moving into a world of NoOps where applications run themselves in the cloud with no infrastructure and little human involvement. Indeed, humans will need to be there to help automate those services, but won’t be required to do as much coding or testing as they do now. With the advent of AI, the IoT, and other technologies, business events can be detected more quickly and analysed in greater detail; enterprises should embrace ‘event thinking’ and Lambda Architectures as part of a digital landscape.”
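One common way of limiting the lock-in described above is to keep business logic free of provider-specific code and wrap it in a thin entry point. The Python sketch below shows this for an AWS Lambda-style handler, which receives (event, context); the order-processing function is hypothetical, and the event shape assumes an API Gateway proxy request carrying a JSON body.

```python
import json


def process_order(order: dict) -> dict:
    """Provider-neutral business logic (hypothetical example): totals an order in cents."""
    total = sum(item["qty"] * item["unit_price_cents"] for item in order["items"])
    return {"order_id": order["order_id"], "total_cents": total}


def lambda_handler(event, context):
    """Thin AWS Lambda-style entry point; assumes an API Gateway proxy event with a JSON body."""
    order = json.loads(event["body"])
    return {"statusCode": 200, "body": json.dumps(process_order(order))}


if __name__ == "__main__":
    # Simulate an event locally; no cloud account is needed to exercise the logic.
    fake_event = {"body": json.dumps({"order_id": "A1", "items": [
        {"qty": 2, "unit_price_cents": 999},
        {"qty": 1, "unit_price_cents": 500},
    ]})}
    print(lambda_handler(fake_event, None))
```

Only lambda_handler knows about the event format, so moving to another provider would mean rewriting those few lines rather than the logic, although triggers, permissions and deployment configuration would still be provider-specific.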

With FaaS and serverless gaining momentum, we are seeing fundamental changes to the traditional way in which decisions around technology are made, with roles like the CIO evolving at enterprise level now that there isn’t the same level of vendor negotiations. “Cloud providers are basically the same price across the board, meaning there is little room for negotiation, other than length of contract. However, signing up to long-term single-cloud contracts introduces the risk of having a spending commitment with a cloud that doesn’t offer the features that you need in the future to deliver business value. In this respect, the CIO is still necessary,” adds Richard Slater.

The current industry climate is demanding an increase in specialised IT skills that can cater to serverless digital transformation. If business leaders want to deliver, they need to let go of the ‘command and control’ approach and empower teams to be accountable. Creating the environment, and securing the right skillsets, to develop, own and operate applications from within the same team demands a new breed of IT engineering. Organisations wanting to embrace digital transformation and this new breed of cloud service delivery must start to place trust in the individuals closest to the business who are writing code on the ground. “To a certain extent this trust must be earned, but in many of today’s enterprises there is so much governance around technical delivery that it has the effect of slamming the brakes on any transformation,” concludes Richard Slater, Principal Consultant at Amido.

We’ve seen trends come and go over the years, but with global companies like Expedia and Netflix embracing serverless computing, and cloud heavyweights Amazon, Google and Microsoft offering serverless computing models in their respective public cloud environments, FaaS seems here to stay.

We have been talking to managed services providers about what their customers are saying about GDPR, the compliance requirement which will come in across Europe and affect anyone else holding European data. Other than a few household names, it appears that most smaller businesses are taking a watch-and-monitor stance, perhaps reviewing their marketing emails and waiting to see which household name ends up in the headlines.

A more sophisticated group of managed service providers, generally those with large public sector bodies or major global enterprises among their customer base, are being pushed into closer alignment. They are being told that they have to sign new contracts committing them to being GDPR-compliant, and that in order to continue to do business they have to make changes. And, as most of them have found out, there is no point solution and no magic bullet for becoming compliant; it is rather a process towards an ideal.

MSPs will find themselves caught in this matrix; the danger is that they could end up making a statement of compliance based on what their vendors have told them about specific products. The vendors, of course, have no legal status with the end-user customer, and will not be there behind the MSP should anything untoward emerge in future months.

And ideas on how this will play out are limited; we have spoken with legal experts, and the best advice they can give is that parties show they are aware of the requirements and are able to show some progress towards compliance. MSPs will need to explore in detail how their own provider contracts will work and take suitable advice, not just listen to those selling “solutions”.

The other area of interest emerging in studies on MSPs is *marketing* – while everyone is aware of the level of competition and the need to differentiate, there seems to be little advice on how to build the MSP business pipeline, except by word-of-mouth, which necessarily limits the MSP to one geographic region or one vertical or sub-vertical market. Vendors are increasingly able to supply collateral in the form of web downloads or documents, but the MSP needs to be able to wrap a clear message around such material and establish its own right to deliver solutions based on it.

So there are plenty of discussion points on how to build the best MSP business, and we know that the best are doing very well this year. Which is why the Managed Services and Hosting Summit (MSHS) on May 29 in Amsterdam aims to build on best practice, learning from those who are getting it right, and passing on ideas.

The MSHS event offers multiple ways to get those answers: from plenary-style presentations from experts in the field to demonstrations; from more detailed technical pitches to wide-ranging round-table discussions with questions from the floor. There is no excuse not to come away from this with questions answered, or at least a more refined view on which questions actually matter.

One of the most valuable parts of the day, previous attendees have said, is the ability to discuss issues with others in similar situations, and attendees are all hoping to learn from direct experience, especially in the complex world of sales and sales management, where there is a big jump from traditional reselling into annualised revenue models.

In summary, the European Managed Services & Hosting Summit 2018 is a management-level event designed to help channel organisations identify opportunities arising from the increasing demand for managed and hosted services and to develop and strengthen partnerships. Registration is free-of-charge for qualifying delegates - i.e. director/senior management level representatives of Managed Service Providers, Systems Integrators, Solution VARs and ISVs. More details: http://www.mshsummit.com/amsterdam/register.php

The next Data Centre Transformation events, organised by Angel Business Communications in association with DataCentre Solutions, the Data Centre Alliance, The University of Leeds and RISE SICS North, take place on 3 July 2018 at the University of Manchester and 5 July 2018 at the University of Surrey. Keynote speakers have been booked and the final programme is all but in place. The event website (https://www.dct.events/) is the best place to go to keep up to speed with just what’s happening!

For the 2018 events, we’re taking our title literally, so the focus is on each of the three strands of our title: DATA, CENTRE and TRANSFORMATION.

The DATA strand will feature two Workshops on Digital Business and Digital Skills together with a Keynote on Security. Digital transformation is the driving force in the business world right now, and the impact that this is having on the IT function and, crucially, the data centre infrastructure of organisations is something that is, perhaps, not as yet fully understood. No doubt this is in part due to the lack of digital skills available in the workplace right now – a problem which, unless addressed urgently, will only continue to grow. As for security, hardly a day goes by without news headlines focusing on the latest high-profile data breach at some public or private organisation. Digital business offers many benefits, but it also introduces further potential security issues that need to be addressed. The Digital Business, Digital Skills and Security sessions at DCT will discuss the many issues that need to be addressed and, hopefully, come up with some helpful solutions.

The CENTRE strand features two Workshops on Energy and Hybrid DC with a Keynote on Connectivity. Energy supply and cost remain a major part of data centre management, and this strand will look at the technology innovations affecting the supply and use of energy within the data centre. Fewer and fewer organisations run a purely in-house data centre estate; most now make use of some kind of colo and/or managed services offering. Further, the idea of one or a handful of centralised data centres is now being challenged by the emergence of edge computing. So, in-house and third-party facilities, combined with a mixture of centralised, regional and very local sites, make for a new and challenging data centre landscape. As for connectivity, feeds and speeds remain critical for many business applications, and it’s good to know what’s around the corner in the fast-moving world of networks and telecoms.

The TRANSFORMATION strand features Workshops on Automation and The Connected World together with a Keynote on Automation (AI/IoT). IoT, AI, ML, RPA – automation in all its various guises is becoming an increasingly important part of the digital business world. For the data centre, the challenges are twofold. How can these automation technologies best be used to improve the design, day-to-day running, overall management and maintenance of data centre facilities? And how will data centres need to evolve to cope with the ever larger volumes of applications, data and new-style IT equipment that provide the foundations for this real-time, automated world? Flexibility, agility, security, reliability, resilience, speeds and feeds – they’ve never been so important!

Delegates select two 70-minute workshops, each an interactive discussion led by an Industry Chair and featuring panellists (specialists and protagonists in the subject). The workshops will ensure that delegates not only earn valuable CPD accreditation points but also have an open forum to speak with their peers, academics and leading vendors and suppliers.

There is also a Technical track where our Sponsors will present 15-minute technical sessions on a range of subjects. Keynote presentations in each of the themes, together with plenty of networking time to catch up with old friends and make new contacts, make this a must-do day in the DC event calendar. Visit the website for more information on this dynamic academic and industry collaborative information exchange.

This expanded and innovative conference programme recognises that data centres do not exist in splendid isolation, but are the foundation of today’s dynamic, digital world. Agility, mobility, scalability, reliability and accessibility are the key drivers for the enterprise as it seeks to deliver the ultimate customer experience. Data centres have a vital role to play in ensuring that the applications that support organisations connect to their customers seamlessly, wherever and whenever they are accessed. That’s why our 2018 Data Centre Transformation events in Manchester and Surrey will focus on the constantly changing demands being made on the data centre in this new, digital age, and on how the data centre is evolving to meet these challenges.

The modern information technology landscape is changing at breakneck pace. Business Insider Intelligence estimates that by 2020 over 5.6 billion devices will utilize edge computing. The primary question you may be asking yourself and your IT staff is: what impact will this shift to edge computing have on the broader data center space?

By Chris Brown – Chief Technology Officer, Uptime Institute.

Some experts predict it may push some workloads out of the data center, while other industry authorities hypothesize that edge may ‘blow away cloud.’ In any case, it is important to recognize that edge deployments are highly workload-specific: they will siphon data processing away from traditional data centers only where business requirements call for greater computational power closer to the data source.

Before you can identify which workloads can and should be moved to the edge, you need to carefully evaluate a variety of factors. A close look at the requirements involved with each specific workload type will give you tremendous insight into where edge computing will have the greatest impact.

Classifying your workloads by type

The key rationale for migrating any workload to the edge lies in its data requirements, including speed of access, availability and protection. There are three ways to classify workloads in relation to their potential for data processing at the edge. The first is latency tolerance: you may have an application whose performance depends on constant access. Workloads that require low latency and high speeds are excellent candidates for edge. The second defining factor is the criticality of the site and its specific availability and reliability requirements. Carefully evaluate the levels of availability and reliability the application needs to meet business objectives; the higher the need for available data, the better a candidate it is for its own independent edge site. The third factor is data volume: can you quantify the volume of data originating at the site and traveling to and from it? The higher the volume of data originating at the site, the better a candidate it is for an edge setup.
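To make the three factors concrete, here is a minimal Python sketch that checks a workload against each of them. The thresholds (MAX_LATENCY_MS, MIN_AVAILABILITY, MIN_DAILY_VOLUME_GB), the Workload fields and the example workloads are all hypothetical, chosen only for illustration; in practice they would come from your own business requirements, and compliance, security, manageability and cost still need weighing on top.

```python
from dataclasses import dataclass

# Hypothetical thresholds for illustration only; set them from your own
# business requirements.
MAX_LATENCY_MS = 20.0        # latency budget at or below which edge helps
MIN_AVAILABILITY = 0.9999    # availability target that justifies a local site
MIN_DAILY_VOLUME_GB = 500.0  # data originating on site per day


@dataclass
class Workload:
    name: str
    latency_budget_ms: float    # lower budget = lower latency tolerance
    availability_target: float  # e.g. 0.9999 for "four nines"
    daily_volume_gb: float      # data originating at the site per day


def edge_factors(w: Workload) -> dict:
    """Report which of the three data-driven factors point towards the edge."""
    return {
        "low_latency_tolerance": w.latency_budget_ms <= MAX_LATENCY_MS,
        "high_criticality": w.availability_target >= MIN_AVAILABILITY,
        "high_data_volume": w.daily_volume_gb >= MIN_DAILY_VOLUME_GB,
    }


def edge_score(w: Workload) -> int:
    """Count how many of the three factors (0-3) favour an edge deployment."""
    return sum(edge_factors(w).values())


if __name__ == "__main__":
    # Hypothetical example workloads.
    plant_floor = Workload("machine vision", 10.0, 0.99995, 2000.0)
    payroll = Workload("monthly payroll", 5000.0, 0.999, 1.0)
    for w in (plant_floor, payroll):
        print(w.name, edge_score(w), edge_factors(w))
```

A simple count keeps each factor visible rather than hiding it inside a weighted score; a real assessment might weight the factors differently per workload.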

Aside from data-contingent aspects of your workloads, there are multiple factors you may be weighing when making a decision about edge, including compliance, security, manageability, and costs of any changes to software architecture. Although these are all demands that will be unique to your organization, data considerations should remain the key driver for a switch to the edge.

The top 7 workloads suitable for edge

Below are the top seven types of workloads that are best-suited for an edge site, based on research from our colleagues at 451 Research. If you are managing one of these types of workloads, it may be a good candidate for an edge transition:

1. Industry 4.0 - Named after the fourth industrial revolution, Industry 4.0 is another term for smart manufacturing. As technology evolves, industrial companies are finding innovative uses for smart, internet-connected technologies. In manufacturing, rich data can be captured from a plethora of tools, labor, cameras and sensors across the plant floor, with the potential to revolutionize how business is done. Asset management, machine vision and machine learning are also expected to be disruptors in this industry. Due to the nature of the data generated on manufacturing plant floors, Industry 4.0 workloads involve high volumes of data and have little tolerance for downtime or latency.

2. Carrier Network Functions Virtualization (NFV) - In an ongoing trend, businesses are moving away from fixed-function hardware toward software appliances that run on industry-standard computing and storage equipment. A major, transformational industry trend, NFV will require more IT resources locally and at the edge to support the growing volume. This pattern is expected to generate even more data than Industry 4.0, while also tolerating little latency or downtime.

3. IoT Gateways - IoT device usage is on the rise for consumers and businesses alike. The vast majority of connected machines and sensors do not have the compute power or hardware for adequate data storage, and need to be in close proximity to a data hub to transmit and store their data. Physical or virtual gateways can reside in an edge stack; the volume of data flowing into an edge gateway will be massive, but analytics will limit the amount of on-site processing needed. Even though the volume of data is lower than for the other workloads mentioned here, criticality and latency tolerance are low, making it an excellent candidate for edge.

4. Remote Data Processing - As data entrenches itself further into everyday life, departmental and branch offices, retail locations, factories and remote industrial sites will require local processing to support the data being generated on site, even when networking is down. Security and compliance are key considerations when installing outposts like this. Remote data processing can tolerate a few seconds of downtime, but the risk is still high if downtime lasts for any significant period or if significant latency occurs.

5. Imaging (e.g., medical, scientific) - Convenience is working its way into every industry, including medicine. And as high-resolution imaging is used in various areas like medical screenings and diagnostics, large data sets will be legally required to remain on file for years. Even clinics and small medical offices will need local IT capacity to analyze and retain imaging data sets for the long term. While imaging can withstand several seconds of latency, the volume of data and high demands for availability make this workload a good opportunity for edge.

6. 5G Cell Processing - In the coming years, 5G is expected to claim a much larger share of IP traffic, bringing an exponential increase in wireless bandwidth. This will require an all-IP, standard IT-based architecture that can also act as an accelerator for CDNs. Key functions include data caching and real-time transcoding of content. With little to no tolerance for latency, lower reliability demands and high volumes of data, 5G cell processing is a good opportunity to consider edge deployments.

7. CCTV and Analytics - Closed-circuit television installation and usage are only expected to increase with the arrival of smarter cameras and more sophisticated processing power. High-definition recording and advanced analytics are two contributing trends in this area. Newer CCTV setups could also include camera preprocessing, biometric and object identification, and cross-camera comparison. All of this points to more sophisticated CCTV deployments, and as refinement continues, IT capacity needs and demand for edge will grow with it.

Making the move to edge is a big decision, but it shouldn’t be a hard one. Systematically evaluating your workloads along with any potential compliance, security, and manageability demands will set your business up for edge success in the future.

I wrote an article recently which centred on Gartner’s prediction that the Disaster Recovery as a Service (DRaaS) market would grow from $2.01B in 2017 to $3.7B by 2021.

By Richard Stinton – Enterprise Solutions Architect, iland.

In my opinion, one of the main drivers for this rapid level of growth is the fact that it is ‘as a service’ and not the complex and expensive ‘create your own’ environment that it used to be. As a result, this has made DRaaS much more accessible to the SMB market, as well as enterprise customers. But, as the list of DRaaS solutions grows along with adoption rates, it's important for customers to carefully consider how their choice of cloud provider should be influenced by their existing infrastructure. This will help to avoid technical challenges down the road.

The concept of Disaster Recovery

Before I delve into the key considerations for customers when choosing a DR solution, I should, for the sake of the uninitiated amongst us, explain what DR is. It literally means recovering from a disaster, and so encompasses the time and labour required to be up and running again after a data loss or downtime. How well a business recovers depends on the solution it has chosen to protect against data loss. DR is not simply about the time during which systems and employees cannot work; it is also about the amount of data lost when having to fall back on a previous version of that data. Businesses should always ask themselves: “how much would an hour of downtime cost?” And, moreover, “is it possible to remember and reproduce the work that employees or systems did in the last few hours?”
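The two questions above map onto what the industry usually calls the recovery time objective (RTO, how long systems are down) and the recovery point objective (RPO, how much recent work is lost). As a rough sketch, assuming a single incident and flat hourly costs, both can be priced with simple arithmetic in Python; the outage_cost function and all the figures in it are hypothetical.

```python
def outage_cost(downtime_hours: float,
                cost_per_downtime_hour: float,
                data_loss_hours: float,
                rework_cost_per_hour: float) -> float:
    """Rough cost of a single incident (hypothetical model).

    downtime_hours: time until systems are usable again (the RTO).
    data_loss_hours: age of the last recoverable copy of the data (the RPO),
        i.e. the work that must be remembered and redone.
    """
    if min(downtime_hours, cost_per_downtime_hour,
           data_loss_hours, rework_cost_per_hour) < 0:
        raise ValueError("inputs must be non-negative")
    downtime_cost = downtime_hours * cost_per_downtime_hour
    rework_cost = data_loss_hours * rework_cost_per_hour
    return downtime_cost + rework_cost


if __name__ == "__main__":
    # Hypothetical figures: 10,000 per hour of downtime, 4,000 per hour of lost work.
    nightly_backup = outage_cost(8, 10_000, 24, 4_000)  # worst case: a day of work lost
    replicated = outage_cost(1, 10_000, 0.25, 4_000)    # 15-minute replication lag
    print(f"nightly backup: {nightly_backup:,.0f}")
    print(f"replication:    {replicated:,.0f}")
```

With these made-up figures, a nightly backup that takes eight hours to restore (and, in the worst case, loses a full day of work) costs 176,000 per incident, against 11,000 for a replicated set-up that fails over in an hour and loses fifteen minutes of work.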

When choosing a DR solution, what are the considerations?

In the past, customers would usually have resorted to building out a secondary data centre, complete with a suitably sized stack of infrastructure to support their key production servers in the event of a disaster. They could either build with new infrastructure or eke out a few more years from older servers and networking equipment. Often, they would even buy similar storage technology to support replication.

More recently, software-based replication technologies have enabled more heterogeneous set-ups, but these still require a significant investment in the secondary data centre, not forgetting its power and cooling and the ongoing maintenance of the hardware, all of which add to the overall cost and management burden of the DR strategy.

Even recent announcements such as VMware Cloud on AWS are effectively managed co-location offerings, involving a large financial commitment to physical servers and storage that run 24/7.

So, should customers be looking to develop their own DR solutions, or would it be easier and more cost-effective to buy a service offering?

Enter DRaaS.

Now, customers need pay only for the storage associated with the virtual machines being replicated and protected, and for CPU and RAM only when there is a DR test or a real failover.
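The pay-per-use model described above comes down to simple arithmetic. This is a minimal Python sketch, assuming hypothetical unit prices (DraasPricing) and a hypothetical monthly_draas_cost helper; real providers price differently. It shows storage billed all month and compute billed only for the hours recovery VMs actually run.

```python
from dataclasses import dataclass


@dataclass
class DraasPricing:
    """Hypothetical unit prices; real DRaaS providers price differently."""
    storage_per_gb_month: float
    vcpu_per_hour: float
    ram_gb_per_hour: float


def monthly_draas_cost(p: DraasPricing, replicated_gb: float,
                       vcpus: int, ram_gb: int, active_hours: float) -> float:
    """Storage for replicated VMs is billed all month; CPU and RAM are billed
    only for the hours recovery VMs run (a DR test or a real failover)."""
    storage = replicated_gb * p.storage_per_gb_month
    compute_per_hour = vcpus * p.vcpu_per_hour + ram_gb * p.ram_gb_per_hour
    return storage + active_hours * compute_per_hour


if __name__ == "__main__":
    pricing = DraasPricing(storage_per_gb_month=0.10,
                           vcpu_per_hour=0.05, ram_gb_per_hour=0.01)
    # 2 TB of replicated VMs, recovering onto 16 vCPUs and 64 GB of RAM.
    for label, hours in [("quiet month", 0), ("8-hour DR test", 8),
                         ("always on (~730 h)", 730)]:
        cost = monthly_draas_cost(pricing, 2000, 16, 64, hours)
        print(f"{label:>20}: {cost:,.2f}")
```

Running the same numbers with active_hours=730 (roughly every hour in a month) approximates capacity that runs 24/7, which is why always-on secondary infrastructure costs so much more than paying for compute only during tests and failovers.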

Choosing the right DR provider for you